网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!

node01

node02

node03

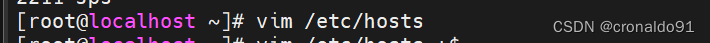

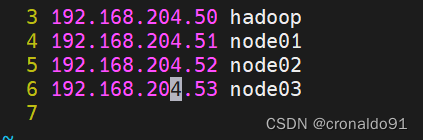

(5)域名主机名

[root@localhost ~]# vim /etc/hosts

……

192.168.205.50 hadoop

192.168.205.51 node01

192.168.205.52 node02

192.168.205.53 node03

(6)修改主机名

[root@localhost ~]# hostnamectl set-hostname 主机名

[root@localhost ~]# bash

(7)hadoop节点创建密钥

[root@hadoop ~]# mkdir /root/.ssh

[root@hadoop ~]# cd /root/.ssh/

[root@hadoop .ssh]# ssh-keygen -t rsa -b 2048 -N ''

(8)添加免密登录

[root@hadoop .ssh]# ssh-copy-id -i id_rsa.pub hadoop

[root@hadoop .ssh]# ssh-copy-id -i id_rsa.pub node01

[root@hadoop .ssh]# ssh-copy-id -i id_rsa.pub node02

[root@hadoop .ssh]# ssh-copy-id -i id_rsa.pub node03

2.Linux 部署 HDFS 分布式集群

(1)官网

https://hadoop.apache.org/

查看版本

https://archive.apache.org/dist/hadoop/common/

(2)下载

wget https://archive.apache.org/dist/hadoop/common/hadoop-2.7.7/hadoop-2.7.7.tar.gz

(3) 解压

tar -zxf hadoop-2.7.7.tar.gz

(3)移动

mv hadoop-2.7.7 /usr/local/hadoop

(4)更改权限

chown -R root.root hadoop

(5)验证版本

(需要修改环境配置文件hadoop-env.sh 申明JAVA安装路径和hadoop配置文件路径)

修改配置文件

[root@hadoop hadoop]# vim hadoop-env.sh

验证

[root@hadoop hadoop]# ./bin/hadoop version

(6)修改节点配置文件

[root@hadoop hadoop]# vim slaves

修改前:

修改后:

node01

node02

node03

(7)查看官方文档

https://hadoop.apache.org/docs/

指定版本

https://hadoop.apache.org/docs/r2.7.7/

查看核心配置文件

https://hadoop.apache.org/docs/r2.7.7/hadoop-project-dist/hadoop-common/core-default.xml

文件系统配置参数:

数据目录配置参数:

(8)修改核心配置文件

[root@hadoop hadoop]# vim core-site.xml

修改前:

修改后:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop:9000</value>

<description>hdfs file system</description>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/var/hadoop</value>

</property>

</configuration>

(9)查看HDFS配置文件

https://hadoop.apache.org/docs/r2.7.7/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml

namenode:

副本数量:

(10)修改HDFS配置文件

[root@hadoop hadoop]# vim hdfs-site.xml

修改前:

修改后:

<configuration>

<property>

<name>dfs.namenode.http-address</name>

<value>hadoop:50070</value>

<name>dfs.namenode.secondary.http-address</name>

<value>hadoop:50090</value>

<name>dfs.replication</name>

<value>2</value>

</property>

</configuration>

(11) 查看同步

[root@hadoop ~]# rpm -q rsync

同步

[root@hadoop ~]# rsync -aXSH --delete /usr/local/hadoop node01:/usr/local/

[root@hadoop ~]# rsync -aXSH --delete /usr/local/hadoop node02:/usr/local/

[root@hadoop ~]# rsync -aXSH --delete /usr/local/hadoop node03:/usr/local/

(12)初始化hdfs

[root@hadoop ~]# mkdir /var/hadoop

(13)查看命令

[root@hadoop hadoop]# ./bin/hdfs

Usage: hdfs [--config confdir] [--loglevel loglevel] COMMAND

where COMMAND is one of:

dfs run a filesystem command on the file systems supported in Hadoop.

classpath prints the classpath

namenode -format format the DFS filesystem

secondarynamenode run the DFS secondary namenode

namenode run the DFS namenode

journalnode run the DFS journalnode

zkfc run the ZK Failover Controller daemon

datanode run a DFS datanode

dfsadmin run a DFS admin client

haadmin run a DFS HA admin client

fsck run a DFS filesystem checking utility

balancer run a cluster balancing utility

jmxget get JMX exported values from NameNode or DataNode.

mover run a utility to move block replicas across

storage types

oiv apply the offline fsimage viewer to an fsimage

oiv_legacy apply the offline fsimage viewer to an legacy fsimage

oev apply the offline edits viewer to an edits file

fetchdt fetch a delegation token from the NameNode

getconf get config values from configuration

groups get the groups which users belong to

snapshotDiff diff two snapshots of a directory or diff the

current directory contents with a snapshot

lsSnapshottableDir list all snapshottable dirs owned by the current user

Use -help to see options

portmap run a portmap service

nfs3 run an NFS version 3 gateway

cacheadmin configure the HDFS cache

crypto configure HDFS encryption zones

storagepolicies list/get/set block storage policies

version print the version

Most commands print help when invoked w/o parameters.

(14)格式化hdfs

[root@hadoop hadoop]# ./bin/hdfs namenode -format

查看目录

[root@hadoop hadoop]# cd /var/hadoop/

[root@hadoop hadoop]# tree .

.

└── dfs

└── name

└── current

├── fsimage_0000000000000000000

├── fsimage_0000000000000000000.md5

├── seen_txid

└── VERSION

3 directories, 4 files

(15) 启动集群

查看目录

[root@hadoop hadoop]# cd ~

[root@hadoop ~]# cd /usr/local/hadoop/

[root@hadoop hadoop]# ls

启动

[root@hadoop hadoop]# ./sbin/start-dfs.sh

查看日志(新生成logs目录)

[root@hadoop hadoop]# cd logs/ ; ll

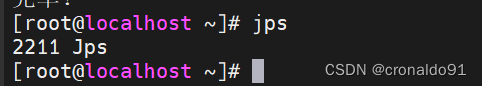

查看jps

[root@hadoop hadoop]# jps

datanode节点查看(node01)

datanode节点查看(node02)

datanode节点查看(node03)

(16)查看命令

[root@hadoop hadoop]# ./bin/hdfs dfsadmin

Usage: hdfs dfsadmin

Note: Administrative commands can only be run as the HDFS superuser.

[-report [-live] [-dead] [-decommissioning]]

[-safemode <enter | leave | get | wait>]

[-saveNamespace]

[-rollEdits]

[-restoreFailedStorage true|false|check]

[-refreshNodes]

[-setQuota <quota> <dirname>...<dirname>]

[-clrQuota <dirname>...<dirname>]

[-setSpaceQuota <quota> [-storageType <storagetype>] <dirname>...<dirname>]

[-clrSpaceQuota [-storageType <storagetype>] <dirname>...<dirname>]

[-finalizeUpgrade]

[-rollingUpgrade [<query|prepare|finalize>]]

[-refreshServiceAcl]

[-refreshUserToGroupsMappings]

[-refreshSuperUserGroupsConfiguration]

[-refreshCallQueue]

[-refresh <host:ipc_port> <key> [arg1..argn]

[-reconfig <datanode|...> <host:ipc_port> <start|status>]

[-printTopology]

[-refreshNamenodes datanode_host:ipc_port]

[-deleteBlockPool datanode_host:ipc_port blockpoolId [force]]

[-setBalancerBandwidth <bandwidth in bytes per second>]

[-fetchImage <local directory>]

[-allowSnapshot <snapshotDir>]

[-disallowSnapshot <snapshotDir>]

[-shutdownDatanode <datanode_host:ipc_port> [upgrade]]

[-getDatanodeInfo <datanode_host:ipc_port>]

[-metasave filename]

[-triggerBlockReport [-incremental] <datanode_host:ipc_port>]

[-help [cmd]]

Generic options supported are

-conf <configuration file> specify an application configuration file

-D <property=value> use value for given property

-fs <local|namenode:port> specify a namenode

-jt <local|resourcemanager:port> specify a ResourceManager

-files <comma separated list of files> specify comma separated files to be copied to the map reduce cluster

-libjars <comma separated list of jars> specify comma separated jar files to include in the classpath.

-archives <comma separated list of archives> specify comma separated archives to be unarchived on the compute machines.

The general command line syntax is

(17)验证集群

查看报告,发现3个节点

[root@hadoop hadoop]# ./bin/hdfs dfsadmin -report

Configured Capacity: 616594919424 (574.25 GB)

Present Capacity: 598915952640 (557.78 GB)

DFS Remaining: 598915915776 (557.78 GB)

DFS Used: 36864 (36 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

-------------------------------------------------

Live datanodes (3):

Name: 192.168.204.53:50010 (node03)

Hostname: node03

Decommission Status : Normal

Configured Capacity: 205531639808 (191.42 GB)

DFS Used: 12288 (12 KB)

Non DFS Used: 5620584448 (5.23 GB)

DFS Remaining: 199911043072 (186.18 GB)

DFS Used%: 0.00%

DFS Remaining%: 97.27%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Mar 14 10:30:18 CST 2024

Name: 192.168.204.51:50010 (node01)

Hostname: node01

Decommission Status : Normal

Configured Capacity: 205531639808 (191.42 GB)

DFS Used: 12288 (12 KB)

Non DFS Used: 6028849152 (5.61 GB)

DFS Remaining: 199502778368 (185.80 GB)

DFS Used%: 0.00%

DFS Remaining%: 97.07%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Mar 14 10:30:18 CST 2024

Name: 192.168.204.52:50010 (node02)

Hostname: node02

Decommission Status : Normal

Configured Capacity: 205531639808 (191.42 GB)

DFS Used: 12288 (12 KB)

Non DFS Used: 6029533184 (5.62 GB)

DFS Remaining: 199502094336 (185.80 GB)

DFS Used%: 0.00%

DFS Remaining%: 97.07%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Thu Mar 14 10:30:18 CST 2024

(18)web页面验证

http://192.168.204.50:50070/

http://192.168.204.50:50090/

http://192.168.204.51:50075/

(19)访问系统

目前为空

3.Linux 使用 HDFS 文件系统

(1)查看命令

[root@hadoop hadoop]# ./bin/hadoop

Usage: hadoop [--config confdir] [COMMAND | CLASSNAME]

CLASSNAME run the class named CLASSNAME

or

where COMMAND is one of:

fs run a generic filesystem user client

version print the version

jar <jar> run a jar file

note: please use "yarn jar" to launch

YARN applications, not this command.

checknative [-a|-h] check native hadoop and compression libraries availability

distcp <srcurl> <desturl> copy file or directories recursively

archive -archiveName NAME -p <parent path> <src>* <dest> create a hadoop archive

classpath prints the class path needed to get the

credential interact with credential providers

Hadoop jar and the required libraries

daemonlog get/set the log level for each daemon

trace view and modify Hadoop tracing settings

Most commands print help when invoked w/o parameters.

[root@hadoop hadoop]# ./bin/hadoop fs

Usage: hadoop fs [generic options]

[-appendToFile <localsrc> ... <dst>]

[-cat [-ignoreCrc] <src> ...]

[-checksum <src> ...]

[-chgrp [-R] GROUP PATH...]

[-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...]

[-chown [-R] [OWNER][:[GROUP]] PATH...]

[-copyFromLocal [-f] [-p] [-l] <localsrc> ... <dst>]

[-copyToLocal [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-count [-q] [-h] <path> ...]

[-cp [-f] [-p | -p[topax]] <src> ... <dst>]

[-createSnapshot <snapshotDir> [<snapshotName>]]

[-deleteSnapshot <snapshotDir> <snapshotName>]

[-df [-h] [<path> ...]]

[-du [-s] [-h] <path> ...]

[-expunge]

[-find <path> ... <expression> ...]

[-get [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-getfacl [-R] <path>]

[-getfattr [-R] {-n name | -d} [-e en] <path>]

[-getmerge [-nl] <src> <localdst>]

[-help [cmd ...]]

[-ls [-d] [-h] [-R] [<path> ...]]

[-mkdir [-p] <path> ...]

[-moveFromLocal <localsrc> ... <dst>]

[-moveToLocal <src> <localdst>]

[-mv <src> ... <dst>]

[-put [-f] [-p] [-l] <localsrc> ... <dst>]

[-renameSnapshot <snapshotDir> <oldName> <newName>]

[-rm [-f] [-r|-R] [-skipTrash] <src> ...]

[-rmdir [--ignore-fail-on-non-empty] <dir> ...]

[-setfacl [-R] [{-b|-k} {-m|-x <acl_spec>} <path>]|[--set <acl_spec> <path>]]

[-setfattr {-n name [-v value] | -x name} <path>]

[-setrep [-R] [-w] <rep> <path> ...]

[-stat [format] <path> ...]

[-tail [-f] <file>]

[-test -[defsz] <path>]

[-text [-ignoreCrc] <src> ...]

[-touchz <path> ...]

[-truncate [-w] <length> <path> ...]

[-usage [cmd ...]]

Generic options supported are

-conf <configuration file> specify an application configuration file

-D <property=value> use value for given property

-fs <local|namenode:port> specify a namenode

-jt <local|resourcemanager:port> specify a ResourceManager

-files <comma separated list of files> specify comma separated files to be copied to the map reduce cluster

-libjars <comma separated list of jars> specify comma separated jar files to include in the classpath.

-archives <comma separated list of archives> specify comma separated archives to be unarchived on the compute machines.

The general command line syntax is

bin/hadoop command [genericOptions] [commandOptions]

(2)查看文件目录

[root@hadoop hadoop]# ./bin/hadoop fs -ls /

(3)创建文件夹

[root@hadoop hadoop]# ./bin/hadoop fs -mkdir /devops

查看

查看web

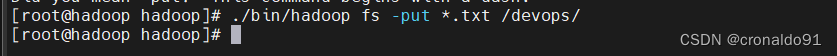

(4)上传文件

[root@hadoop hadoop]# ./bin/hadoop fs -put *.txt /devops/

查看

[root@hadoop hadoop]# ./bin/hadoop fs -ls /devops/

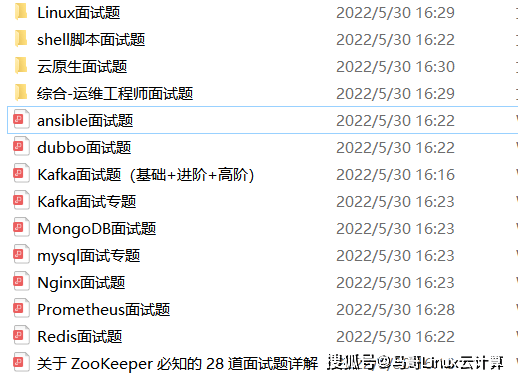

为了做好运维面试路上的助攻手,特整理了上百道 【运维技术栈面试题集锦】 ,让你面试不慌心不跳,高薪offer怀里抱!

这次整理的面试题,小到shell、MySQL,大到K8s等云原生技术栈,不仅适合运维新人入行面试需要,还适用于想提升进阶跳槽加薪的运维朋友。

本份面试集锦涵盖了

- 174 道运维工程师面试题

- 128道k8s面试题

- 108道shell脚本面试题

- 200道Linux面试题

- 51道docker面试题

- 35道Jenkis面试题

- 78道MongoDB面试题

- 17道ansible面试题

- 60道dubbo面试题

- 53道kafka面试

- 18道mysql面试题

- 40道nginx面试题

- 77道redis面试题

- 28道zookeeper

总计 1000+ 道面试题, 内容 又全含金量又高

- 174道运维工程师面试题

1、什么是运维?

2、在工作中,运维人员经常需要跟运营人员打交道,请问运营人员是做什么工作的?

3、现在给你三百台服务器,你怎么对他们进行管理?

4、简述raid0 raid1raid5二种工作模式的工作原理及特点

5、LVS、Nginx、HAproxy有什么区别?工作中你怎么选择?

6、Squid、Varinsh和Nginx有什么区别,工作中你怎么选择?

7、Tomcat和Resin有什么区别,工作中你怎么选择?

8、什么是中间件?什么是jdk?

9、讲述一下Tomcat8005、8009、8080三个端口的含义?

10、什么叫CDN?

11、什么叫网站灰度发布?

12、简述DNS进行域名解析的过程?

13、RabbitMQ是什么东西?

14、讲一下Keepalived的工作原理?

15、讲述一下LVS三种模式的工作过程?

16、mysql的innodb如何定位锁问题,mysql如何减少主从复制延迟?

17、如何重置mysql root密码?

网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!

78道MongoDB面试题

- 17道ansible面试题

- 60道dubbo面试题

- 53道kafka面试

- 18道mysql面试题

- 40道nginx面试题

- 77道redis面试题

- 28道zookeeper

总计 1000+ 道面试题, 内容 又全含金量又高

- 174道运维工程师面试题

1、什么是运维?

2、在工作中,运维人员经常需要跟运营人员打交道,请问运营人员是做什么工作的?

3、现在给你三百台服务器,你怎么对他们进行管理?

4、简述raid0 raid1raid5二种工作模式的工作原理及特点

5、LVS、Nginx、HAproxy有什么区别?工作中你怎么选择?

6、Squid、Varinsh和Nginx有什么区别,工作中你怎么选择?

7、Tomcat和Resin有什么区别,工作中你怎么选择?

8、什么是中间件?什么是jdk?

9、讲述一下Tomcat8005、8009、8080三个端口的含义?

10、什么叫CDN?

11、什么叫网站灰度发布?

12、简述DNS进行域名解析的过程?

13、RabbitMQ是什么东西?

14、讲一下Keepalived的工作原理?

15、讲述一下LVS三种模式的工作过程?

16、mysql的innodb如何定位锁问题,mysql如何减少主从复制延迟?

17、如何重置mysql root密码?

网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!

3459

3459

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?