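```python
        # (continued) end of InceptionV1Module.__init__
        # Branch 4: 3x3 max pool (stride 1 keeps the spatial size) followed by a 1x1 projection
        self.branch4_pool = nn.MaxPool2d(kernel_size=3, stride=1, padding=1)
        self.branch4_conv1 = ConvBNReLU(in_channels=in_channels, out_channels=out_channels4, kernel_size=1)

    def forward(self, x):
        out1 = self.branch1_conv(x)
        out2 = self.branch2_conv2(self.branch2_conv1(x))
        out3 = self.branch3_conv2(self.branch3_conv1(x))
        out4 = self.branch4_conv1(self.branch4_pool(x))
        # Stack the four branch outputs along the channel axis (dim=1)
        out = torch.cat([out1, out2, out3, out4], dim=1)
        return out
```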
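Because the branches are joined with `torch.cat(..., dim=1)`, a module's output width is `out_channels1 + out_channels2 + out_channels3 + out_channels4`; the `*reduce` widths only size the 1x1 bottlenecks inside branches 2 and 3. The pure-Python sketch below copies the module configurations from the `InceptionV1` class and checks that each module's `in_channels` equals the previous module's concatenated output. The stage labels (3a, 4a, ...) follow the GoogLeNet paper's naming, and `concat_channels` and `check_chain` are hypothetical helper names, not part of the original code.

```python
# (in_channels, out1, out2reduce, out2, out3reduce, out3, out4), copied from InceptionV1.
# Max-pool layers between stages change spatial size only, not channel count.
STAGES = {
    "3a": (192, 64, 96, 128, 16, 32, 32),
    "3b": (256, 128, 128, 192, 32, 96, 64),
    "4a": (480, 192, 96, 208, 16, 48, 64),
    "4b": (512, 160, 112, 224, 24, 64, 64),
    "4c": (512, 128, 128, 256, 24, 64, 64),
    "4d": (512, 112, 144, 288, 32, 64, 64),
    "4e": (528, 256, 160, 320, 32, 128, 128),
    "5a": (832, 256, 160, 320, 32, 128, 128),
    "5b": (832, 384, 192, 384, 48, 128, 128),
}


def concat_channels(cfg):
    """Channels produced by torch.cat([out1, out2, out3, out4], dim=1)."""
    _in, out1, _reduce2, out2, _reduce3, out3, out4 = cfg
    return out1 + out2 + out3 + out4


def check_chain(stages):
    """Raise if a stage's in_channels differs from the previous stage's output;
    return the channel count of the last stage."""
    names = list(stages)
    for prev, cur in zip(names, names[1:]):
        produced = concat_channels(stages[prev])
        expected = stages[cur][0]
        if produced != expected:
            raise ValueError(f"{prev} -> {cur}: produces {produced}, expects {expected}")
    return concat_channels(stages[names[-1]])


for name, cfg in STAGES.items():
    print(name, concat_channels(cfg))
print("final channels:", check_chain(STAGES))
```

The final 1024 channels are what `self.linear` (`in_features=1024`) expects after global pooling.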

```python
class InceptionAux(nn.Module):
    """Auxiliary classifier attached to an intermediate Inception output (train stage only)."""

    def __init__(self, in_channels, out_channels):
        super(InceptionAux, self).__init__()
        # 5x5 average pool, stride 3: for a 224x224 network input the 14x14 map becomes 4x4
        self.auxiliary_avgpool = nn.AvgPool2d(kernel_size=5, stride=3)
        self.auxiliary_conv1 = ConvBNReLU(in_channels=in_channels, out_channels=128, kernel_size=1)
        self.auxiliary_linear1 = nn.Linear(in_features=128 * 4 * 4, out_features=1024)
        self.auxiliary_relu = nn.ReLU6(inplace=True)
        self.auxiliary_dropout = nn.Dropout(p=0.7)
        self.auxiliary_linear2 = nn.Linear(in_features=1024, out_features=out_channels)

    def forward(self, x):
        x = self.auxiliary_conv1(self.auxiliary_avgpool(x))
        x = x.view(x.size(0), -1)  # flatten to (N, 128 * 4 * 4)
        x = self.auxiliary_relu(self.auxiliary_linear1(x))
        out = self.auxiliary_linear2(self.auxiliary_dropout(x))
        return out
```
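The hard-coded `128 * 4 * 4` in `auxiliary_linear1` follows from pooling arithmetic. A minimal sketch, assuming a 224x224 network input (so the maps tapped after Inception 4a and 4d are 14x14) and assuming the 1x1 `ConvBNReLU` keeps the spatial size; `pool_out` and `aux_flat_features` are hypothetical helper names:

```python
def pool_out(size, kernel, stride, padding=0):
    """Side length after AvgPool2d/MaxPool2d with ceil_mode=False:
    floor((size + 2*padding - kernel) / stride) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def aux_flat_features(feature_size, conv_channels=128, kernel=5, stride=3):
    """Length of the flattened vector fed to auxiliary_linear1."""
    side = pool_out(feature_size, kernel, stride)  # AvgPool2d(kernel_size=5, stride=3)
    # Assumption: the 1x1 ConvBNReLU leaves the side length unchanged and sets 128 channels
    return conv_channels * side * side


print(pool_out(14, 5, 3))     # 4
print(aux_flat_features(14))  # 2048, i.e. 128 * 4 * 4
```

Any other input resolution changes the tapped map size, and the fixed `in_features` of `auxiliary_linear1` would then no longer match.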

```python
class InceptionV1(nn.Module):
    def __init__(self, num_classes=1000, stage='train'):
        super(InceptionV1, self).__init__()
        self.stage = stage

        # Stem: 7x7/2 conv -> 3x3/2 max pool -> 1x1 conv
        self.block1 = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=64, kernel_size=7, stride=2, padding=3),
            nn.BatchNorm2d(64),
            nn.ReLU6(inplace=True),  # activation added (the listing had none); ReLU6 as in InceptionAux
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1),
            nn.Conv2d(in_channels=64, out_channels=64, kernel_size=1, stride=1),
            nn.BatchNorm2d(64),
            nn.ReLU6(inplace=True),  # activation added
        )
        self.block2 = nn.Sequential(
            nn.Conv2d(in_channels=64, out_channels=192, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(192),
            nn.ReLU6(inplace=True),  # activation added
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1),
        )
        # Inception 3a, 3b
        self.block3 = nn.Sequential(
            InceptionV1Module(in_channels=192, out_channels1=64, out_channels2reduce=96, out_channels2=128,
                              out_channels3reduce=16, out_channels3=32, out_channels4=32),
            InceptionV1Module(in_channels=256, out_channels1=128, out_channels2reduce=128, out_channels2=192,
                              out_channels3reduce=32, out_channels3=96, out_channels4=64),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1),
        )
        # Inception 4a; its output feeds the first auxiliary classifier
        self.block4_1 = InceptionV1Module(in_channels=480, out_channels1=192, out_channels2reduce=96, out_channels2=208,
                                          out_channels3reduce=16, out_channels3=48, out_channels4=64)
        if self.stage == 'train':
            self.aux_logits1 = InceptionAux(in_channels=512, out_channels=num_classes)
        # Inception 4b, 4c, 4d; the 4d output feeds the second auxiliary classifier
        self.block4_2 = nn.Sequential(
            InceptionV1Module(in_channels=512, out_channels1=160, out_channels2reduce=112, out_channels2=224,
                              out_channels3reduce=24, out_channels3=64, out_channels4=64),
            InceptionV1Module(in_channels=512, out_channels1=128, out_channels2reduce=128, out_channels2=256,
                              out_channels3reduce=24, out_channels3=64, out_channels4=64),
            InceptionV1Module(in_channels=512, out_channels1=112, out_channels2reduce=144, out_channels2=288,
                              out_channels3reduce=32, out_channels3=64, out_channels4=64),
        )
        if self.stage == 'train':
            self.aux_logits2 = InceptionAux(in_channels=528, out_channels=num_classes)
        # Inception 4e
        self.block4_3 = nn.Sequential(
            InceptionV1Module(in_channels=528, out_channels1=256, out_channels2reduce=160, out_channels2=320,
                              out_channels3reduce=32, out_channels3=128, out_channels4=128),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1),
        )
        # Inception 5a, 5b
        self.block5 = nn.Sequential(
            InceptionV1Module(in_channels=832, out_channels1=256, out_channels2reduce=160, out_channels2=320,
                              out_channels3reduce=32, out_channels3=128, out_channels4=128),
            InceptionV1Module(in_channels=832, out_channels1=384, out_channels2reduce=192, out_channels2=384,
                              out_channels3reduce=48, out_channels3=128, out_channels4=128),
        )
        self.avgpool = nn.AvgPool2d(kernel_size=7, stride=1)
        self.dropout = nn.Dropout(p=0.4)
        self.linear = nn.Linear(in_features=1024, out_features=num_classes)

    def forward(self, x):
        x = self.block1(x)
        x = self.block2(x)
        x = self.block3(x)
        aux1 = x = self.block4_1(x)
        aux2 = x = self.block4_2(x)
        x = self.block4_3(x)
        out = self.block5(x)
        out = self.avgpool(out)
        out = self.dropout(out)
        out = out.view(out.size(0), -1)
        out = self.linear(out)
        # Return statement reconstructed (missing from the listing): in the train stage,
        # return both auxiliary logits alongside the main logits.
        if self.stage == 'train':
            aux1 = self.aux_logits1(aux1)
            aux2 = self.aux_logits2(aux2)
            return aux1, aux2, out
        return out
```
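`nn.AvgPool2d(kernel_size=7)` and `in_features=1024` line up only for one input resolution. The sketch below traces the side length through every conv and pool layer of `InceptionV1` that sits outside the Inception modules, using the standard output-size formula for `Conv2d`/`MaxPool2d`/`AvgPool2d` (dilation 1, `ceil_mode=False`). It assumes a square input and that each Inception module preserves spatial size; `out_size`, `SIZE_LAYERS` and `trace` are hypothetical names.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Side length after Conv2d/MaxPool2d/AvgPool2d (dilation=1, ceil_mode=False)."""
    return (size + 2 * padding - kernel) // stride + 1


# (label, kernel, stride, padding) for the non-Inception layers, in forward order.
SIZE_LAYERS = [
    ("block1 conv7x7/2", 7, 2, 3),
    ("block1 maxpool3x3/2", 3, 2, 1),
    ("block1 conv1x1", 1, 1, 0),
    ("block2 conv3x3", 3, 1, 1),
    ("block2 maxpool3x3/2", 3, 2, 1),    # block3 (3a, 3b) runs at this size
    ("block3 maxpool3x3/2", 3, 2, 1),    # 4a-4e and both aux heads run at this size
    ("block4_3 maxpool3x3/2", 3, 2, 1),  # block5 (5a, 5b) runs at this size
    ("avgpool7x7", 7, 1, 0),
]


def trace(size=224):
    """Return (label, side length after that layer) for a square input."""
    sizes = []
    for label, kernel, stride, padding in SIZE_LAYERS:
        size = out_size(size, kernel, stride, padding)
        sizes.append((label, size))
    return sizes


for label, side in trace(224):
    print(f"{label:24s} -> {side}x{side}")
```

For a 224x224 input the map is 7x7 before the average pool and 1x1 after it, so the flattened vector has exactly 1024 entries. With 256x256 the trace ends at 2x2, giving 4096 features and a shape error in `self.linear`; replacing the fixed pool with `nn.AdaptiveAvgPool2d(1)` is one common way to decouple the head from the input size.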

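A quick introduction: I graduated from Shanghai Jiao Tong University in 2013, worked at a small company, then at large companies such as Huawei and OPPO, and have been at Alibaba since 2018.

I know that most Python engineers who want to improve either figure things out on their own or sign up for courses, and training institutions often charge several thousand yuan in tuition, which is a real burden. Unstructured self-study is slow and inefficient, and it is easy to hit a ceiling where your skills stop progressing.

So I collected and organized a "2024 Complete Python Development Learning Package". The goal is simple: to help people who want to improve through self-study but don't know where to start, and to lighten their load.

It includes zero-foundation material for beginners as well as advanced courses for people with 3+ years of experience, covering more than 95% of front-end development topics. Truly systematic!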

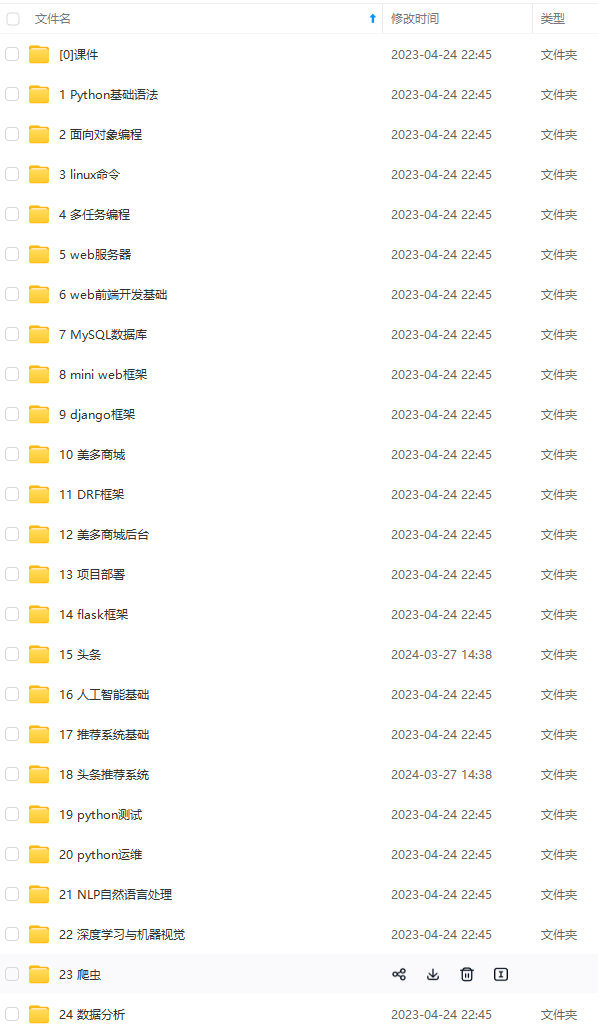

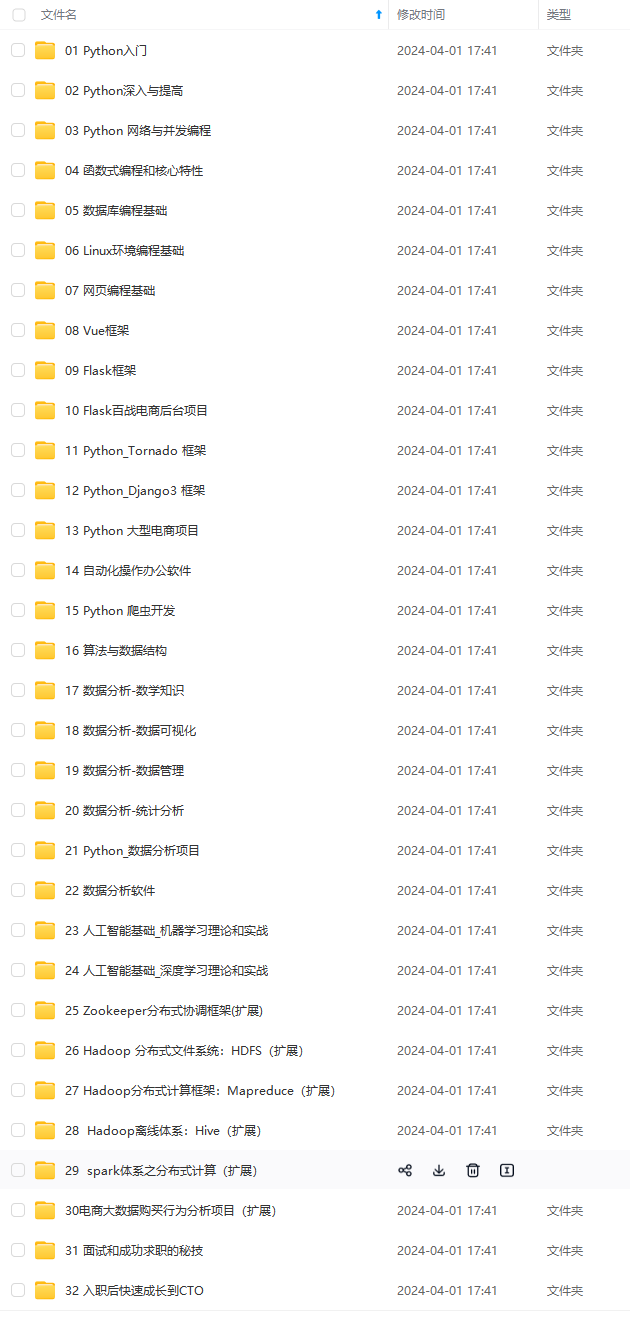

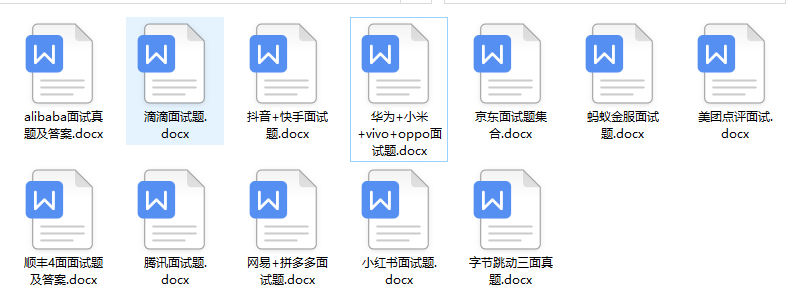

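Because the files are large, only screenshots of part of the outline are shown here. Each section includes interview experiences from big tech companies, study notes, source-code handouts, hands-on projects, and lecture videos, and it will keep being updated.

If you find this helpful, scan the QR code to get it! (Mention "Python" in your note.)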
