activity_img_recognition.xml

<?xml version="1.0" encoding="utf-8"?>
<RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:opencv="http://schemas.android.com/apk/res-auto"
    xmlns:tools="http://schemas.android.com/tools"
    android:id="@+id/activity_img_recognition"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    tools:context="com.sueed.imagerecognition.CameraActivity">

    <org.opencv.android.JavaCameraView
        android:id="@+id/jcv"
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        android:visibility="gone"
        opencv:camera_id="any"
        opencv:show_fps="true" />

</RelativeLayout>

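Main logic code

CameraActivity.java [starts the camera, captures frames, and wraps them as Mat objects]

Because OpenCV's JavaCameraView extends SurfaceView, you can replace it if needed with your own xxxSurfaceView class that extends SurfaceView and implements SurfaceHolder.Callback.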

package com.sueed.imagerecognition;

import android.Manifest;
import android.content.Intent;
import android.content.pm.PackageManager;
import android.os.Bundle;
import android.util.Log;
import android.view.Menu;
import android.view.MenuItem;
import android.view.SurfaceView;
import android.view.View;
import android.view.WindowManager;
import android.widget.ImageView;
import android.widget.RelativeLayout;
import android.widget.Toast;

import androidx.appcompat.app.AppCompatActivity;
import androidx.core.app.ActivityCompat;
import androidx.core.content.ContextCompat;

import com.sueed.imagerecognition.filters.Filter;
import com.sueed.imagerecognition.filters.NoneFilter;
import com.sueed.imagerecognition.filters.ar.ImageDetectionFilter;
import com.sueed.imagerecognition.imagerecognition.R;

import org.opencv.android.CameraBridgeViewBase;
import org.opencv.android.CameraBridgeViewBase.CvCameraViewFrame;
import org.opencv.android.CameraBridgeViewBase.CvCameraViewListener2;
import org.opencv.android.JavaCameraView;
import org.opencv.android.OpenCVLoader;
import org.opencv.core.Mat;

import java.io.IOException;

// Use the deprecated Camera class.
@SuppressWarnings("deprecation")
public final class CameraActivity extends AppCompatActivity implements CvCameraViewListener2 {

    // A tag for log output.
    private static final String TAG = CameraActivity.class.getSimpleName();

    // The filters.
    private Filter[] mImageDetectionFilters;

    // The index of the active filter.
    private int mImageDetectionFilterIndex;

    // The camera view.
    private CameraBridgeViewBase mCameraView;

    @Override
    protected void onCreate(final Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        getWindow().addFlags(WindowManager.LayoutParams.FLAG_KEEP_SCREEN_ON);

        // Initialize the camera view (camera ID 0).
        mCameraView = new JavaCameraView(this, 0);
        // NOTE: `size` is not defined in this excerpt; it stands for the
        // maximum frame width and height to request from the camera.
        mCameraView.setMaxFrameSize(size.MaxWidth, size.MaxHeight);
        mCameraView.setCvCameraViewListener(this);
        setContentView(mCameraView);

        // NOTE: requestPermissions() is a helper that is not shown in this
        // excerpt; presumably it requests the CAMERA permission.
        requestPermissions();
        mCameraView.enableView();
    }

    @Override
    public void onPause() {
        if (mCameraView != null) {
            mCameraView.disableView();
        }
        super.onPause();
    }

    @Override
    public void onResume() {
        super.onResume();
        OpenCVLoader.initDebug();
    }

    @Override
    public void onDestroy() {
        if (mCameraView != null) {
            mCameraView.disableView();
        }
        super.onDestroy();
    }

    @Override
    public boolean onCreateOptionsMenu(final Menu menu) {
        getMenuInflater().inflate(R.menu.activity_camera, menu);
        return true;
    }

    @Override
    public boolean onOptionsItemSelected(final MenuItem item) {
        switch (item.getItemId()) {
            case R.id.menu_next_image_detection_filter:
                // Advance to the next filter, wrapping around at the end.
                mImageDetectionFilterIndex++;
                if (mImageDetectionFilters != null && mImageDetectionFilterIndex == mImageDetectionFilters.length) {
                    mImageDetectionFilterIndex = 0;
                }
                return true;
            default:
                return super.onOptionsItemSelected(item);
        }
    }

    @Override
    public void onCameraViewStarted(final int width, final int height) {
        // Build one detection filter per reference image. A filter stays
        // null if its drawable cannot be loaded.
        Filter enkidu = null;
        try {
            enkidu = new ImageDetectionFilter(CameraActivity.this, R.drawable.enkidu);
        } catch (IOException e) {
            e.printStackTrace();
        }

        Filter akbarHunting = null;
        try {
            akbarHunting = new ImageDetectionFilter(CameraActivity.this, R.drawable.akbar_hunting_with_cheetahs);
        } catch (IOException e) {
            Log.e(TAG, "Failed to load drawable: " + "akbar_hunting_with_cheetahs");
            e.printStackTrace();
        }

        mImageDetectionFilters = new Filter[]{
                new NoneFilter(),
                enkidu,
                akbarHunting
        };
    }

    @Override
    public void onCameraViewStopped() {
    }

    @Override
    public Mat onCameraFrame(final CvCameraViewFrame inputFrame) {
        final Mat rgba = inputFrame.rgba();
        if (mImageDetectionFilters != null) {
            // An entry is null if its reference image failed to load.
            final Filter filter = mImageDetectionFilters[mImageDetectionFilterIndex];
            if (filter != null) {
                filter.apply(rgba, rgba);
            }
        }
        return rgba;
    }

}
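
The menu handler in CameraActivity advances the filter index and wraps it back to 0 at the end of the array, and onCameraFrame applies whichever filter is currently selected. As a rough, library-free illustration of that selection logic, here is a minimal sketch in plain Java. It assumes a stand-in filter type that transforms a String instead of an OpenCV Mat; `FilterCycler`, `next`, and `applyCurrent` are hypothetical names used only for this example and are not part of the project or of OpenCV. Null entries, which would correspond to a reference image that failed to load, leave the frame unchanged.

```java
import java.util.function.UnaryOperator;

public class FilterCycler {
    // Stand-in filters: each one transforms a frame, modelled here as a String.
    private final UnaryOperator<String>[] filters;

    // Index of the active filter.
    private int index = 0;

    @SafeVarargs
    public FilterCycler(UnaryOperator<String>... filters) {
        this.filters = filters;
    }

    // Advance to the next filter, wrapping around to the first one.
    public int next() {
        if (filters.length > 0) {
            index = (index + 1) % filters.length;
        }
        return index;
    }

    // Apply the active filter; a null entry leaves the frame unchanged.
    public String applyCurrent(String frame) {
        if (filters.length == 0 || filters[index] == null) {
            return frame;
        }
        return filters[index].apply(frame);
    }
}
```

Using the modulo operator keeps the index in range even when the wrap check and the increment would otherwise be separate steps.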

ImageDetectionFilter.java [feature matching and comparison, plus drawing the green tracking outline]

package com.sueed.imagerecognition.filters.ar;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.opencv.android.Utils;
import org.opencv.calib3d.Calib3d;
import org.opencv.core.Core;
import org.opencv.core.CvType;
import org.opencv.core.DMatch;
import org.opencv.core.KeyPoint;
import org.opencv.core.Mat;
import org.opencv.core.MatOfDMatch;
import org.opencv.core.MatOfKeyPoint;
import org.opencv.core.MatOfPoint;
import org.opencv.core.MatOfPoint2f;
import org.opencv.core.Point;
import org.opencv.core.Scalar;
import org.opencv.features2d.DescriptorExtractor;
import org.opencv.features2d.DescriptorMatcher;
import org.opencv.features2d.FeatureDetector;
import org.opencv.imgcodecs.Imgcodecs;
import org.opencv.imgproc.Imgproc;

import android.content.Context;

import com.sueed.imagerecognition.filters.Filter;

public final class ImageDetectionFilter implements Filter {

    // The reference image (this detector's target).
    private final Mat mReferenceImage;

    // Features of the reference image.
    private final MatOfKeyPoint mReferenceKeypoints = new MatOfKeyPoint();

    // Descriptors of the reference image's features.
    private final Mat mReferenceDescriptors = new Mat();

    // The corner coordinates of the reference image, in pixels.
    // CvType defines the color depth, number of channels, and
    // channel layout in the image. Here, each point is represented
    // by two 32-bit floats.
    private final Mat mReferenceCorners = new Mat(4, 1, CvType.CV_32FC2);

    // Features of the scene (the current frame).
    private final MatOfKeyPoint mSceneKeypoints = new MatOfKeyPoint();

    // Descriptors of the scene's features.
    private final Mat mSceneDescriptors = new Mat();

    // Tentative corner coordinates detected in the scene, in pixels.
    private final Mat mCandidateSceneCorners = new Mat(4, 1, CvType.CV_32FC2);

    // Good corner coordinates detected in the scene, in pixels.
    private final Mat mSceneCorners = new Mat(0, 0, CvType.CV_32FC2);

    // The good detected corner coordinates, in pixels, as integers.
    private final MatOfPoint mIntSceneCorners = new MatOfPoint();

    // A grayscale version of the scene.
    private final Mat mGraySrc = new Mat();

    // Tentative matches of scene features and reference features.
    private final MatOfDMatch mMatches = new MatOfDMatch();

    // A feature detector, which finds features in images.
    private final FeatureDetector mFeatureDetector = FeatureDetector.create(FeatureDetector.ORB);

    // A descriptor extractor, which creates descriptors of features.
    private final DescriptorExtractor mDescriptorExtractor = DescriptorExtractor.create(DescriptorExtractor.ORB);

    // A descriptor matcher, which matches features based on their
    // descriptors.
    private final DescriptorMatcher mDescriptorMatcher = DescriptorMatcher.create(DescriptorMatcher.BRUTEFORCE_HAMMINGLUT);

    // The color of the outline drawn around the detected image.
    private final Scalar mLineColor = new Scalar(0, 255, 0);

    public ImageDetectionFilter(final Context context, final int referenceImageResourceID) throws IOException {

        // Load the reference image from the app's resources.
        // It is loaded in BGR (blue, green, red) format.
        mReferenceImage = Utils.loadResource(context, referenceImageResourceID, Imgcodecs.CV_LOAD_IMAGE_COLOR);

        // Create grayscale and RGBA versions of the reference image.
        final Mat referenceImageGray = new Mat();
        Imgproc.cvtColor(mReferenceImage, referenceImageGray, Imgproc.COLOR_BGR2GRAY);
        Imgproc.cvtColor(mReferenceImage, mReferenceImage, Imgproc.COLOR_BGR2RGBA);

        // Store the reference image's corner coordinates, in pixels.
        mReferenceCorners.put(0, 0, new double[]{0.0, 0.0});
        mReferenceCorners.put(1, 0, new double[]{referenceImageGray.cols(), 0.0});
        mReferenceCorners.put(2, 0, new double[]{referenceImageGray.cols(), referenceImageGray.rows()});
        mReferenceCorners.put(3, 0, new double[]{0.0, referenceImageGray.rows()});

        // Detect the reference features and compute their descriptors.
        mFeatureDetector.detect(referenceImageGray, mReferenceKeypoints);
        mDescriptorExtractor.compute(referenceImageGray, mReferenceKeypoints, mReferenceDescriptors);
    }

    @Override
    public void apply(final Mat src, final Mat dst) {

        // Convert the scene to grayscale.
        Imgproc.cvtColor(src, mGraySrc, Imgproc.COLOR_RGBA2GRAY);

        // Detect the scene features, compute their descriptors,
        // and match the scene descriptors to reference descriptors.
        mFeatureDetector.detect(mGraySrc, mSceneKeypoints);
        mDescriptorExtractor.compute(mGraySrc, mSceneKeypoints, mSceneDescriptors);
        mDescriptorMatcher.match(mSceneDescriptors, mReferenceDescriptors, mMatches);

        // Attempt to find the target image's corners in the scene.
        findSceneCorners();

        // If the corners have been found, draw an outline around the
        // target image.
        // Else, draw a thumbnail of the target image.
        draw(src, dst);
    }

    private void findSceneCorners() {

        final List<DMatch> matchesList = mMatches.toList();
        if (matchesList.size() < 4) {
            // There are too few matches to find the homography.
            return;
        }

        final List<KeyPoint> referenceKeypointsList = mReferenceKeypoints.toList();
        final List<KeyPoint> sceneKeypointsList = mSceneKeypoints.toList();

        // Calculate the max and min distances between keypoints.
        double maxDist = 0.0;
        double minDist = Double.MAX_VALUE;
        for (final DMatch match : matchesList) {
            final double dist = match.distance;
            if (dist < minDist) {
                minDist = dist;
            }
            if (dist > maxDist) {
                maxDist = dist;
            }
        }

        // The thresholds for minDist are chosen subjectively
        // based on testing. The unit is not related to pixel
        // distances; it is related to the number of failed tests
        // for similarity between the matched descriptors.
        if (minDist > 50.0) {
            // The target is completely lost.
            // Discard any previously found corners.
            mSceneCorners.create(0, 0, mSceneCorners.type());
            return;
        } else if (minDist > 25.0) {
            // The target is lost but maybe it is still close.
            // Keep any previously found corners.
            return;
        }

        // Identify "good" keypoints based on match distance.
        final ArrayList<Point> goodReferencePointsList = new ArrayList<Point>();
        final ArrayList<Point> goodScenePointsList = new ArrayList<Point>();
        final double maxGoodMatchDist = 1.75 * minDist;
        for (final DMatch match : matchesList) {
            if (match.distance < maxGoodMatchDist) {
                goodReferencePointsList.add(referenceKeypointsList.get(match.trainIdx).pt);
                goodScenePointsList.add(sceneKeypointsList.get(match.queryIdx).pt);
            }
        }

        if (goodReferencePointsList.size() < 4 || goodScenePointsList.size() < 4) {
            // There are too few good points to find the homography.
            return;
        }

        // There are enough good points to find the homography.
        // (Otherwise, the method would have already returned.)

        // Convert the matched points to MatOfPoint2f format, as
        // required by the Calib3d.findHomography function.
        final MatOfPoint2f goodReferencePoints = new MatOfPoint2f();
        goodReferencePoints.fromList(goodReferencePointsList);
        final MatOfPoint2f goodScenePoints = new MatOfPoint2f();
        goodScenePoints.fromList(goodScenePointsList);

        // Find the homography.
        final Mat homography = Calib3d.findHomography(goodReferencePoints, goodScenePoints);

        // Use the homography to project the reference corner
        // coordinates into scene coordinates.
        Core.perspectiveTransform(mReferenceCorners, mCandidateSceneCorners, homography);

        // Convert the scene corners to integer format, as required
        // by the Imgproc.isContourConvex function.
        mCandidateSceneCorners.convertTo(mIntSceneCorners, CvType.CV_32S);

        // Check whether the corners form a convex polygon. If not,
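
The listing breaks off at the convexity check. As a library-free illustration of the ideas findSceneCorners relies on, here is a minimal sketch in plain Java. It is not the original continuation and does not use OpenCV; the class and method names (`SceneCornerSketch`, `hammingDistance`, `selectGoodMatches`, `isConvexQuad`) are hypothetical. It assumes that the match distance for binary descriptors such as ORB is a Hamming distance (the number of differing bits), reuses the 1.75 × minDist rule and the 25.0 cutoff from the listing, and tests convexity of four corners by checking that consecutive edges always turn the same way.

```java
import java.util.ArrayList;
import java.util.List;

public class SceneCornerSketch {

    // Hamming distance between two binary descriptors of equal length:
    // the number of bit positions at which they differ.
    public static int hammingDistance(byte[] a, byte[] b) {
        int dist = 0;
        for (int i = 0; i < a.length; i++) {
            dist += Integer.bitCount((a[i] ^ b[i]) & 0xFF);
        }
        return dist;
    }

    // Return the indices of matches whose distance is below 1.75 * minDist.
    // If even the best match is worse than maxMinDist, return an empty list.
    public static List<Integer> selectGoodMatches(double[] distances, double maxMinDist) {
        List<Integer> good = new ArrayList<>();
        double minDist = Double.MAX_VALUE;
        for (double d : distances) {
            minDist = Math.min(minDist, d);
        }
        if (minDist > maxMinDist) {
            return good;
        }
        double maxGoodMatchDist = 1.75 * minDist;
        for (int i = 0; i < distances.length; i++) {
            if (distances[i] < maxGoodMatchDist) {
                good.add(i);
            }
        }
        return good;
    }

    // Four corners {x, y} form a convex quadrilateral if the cross products
    // of consecutive edges never change sign.
    public static boolean isConvexQuad(int[][] p) {
        int sign = 0;
        for (int i = 0; i < 4; i++) {
            int[] a = p[i], b = p[(i + 1) % 4], c = p[(i + 2) % 4];
            long cross = (long) (b[0] - a[0]) * (c[1] - b[1])
                    - (long) (b[1] - a[1]) * (c[0] - b[0]);
            if (cross != 0) {
                int s = cross > 0 ? 1 : -1;
                if (sign != 0 && s != sign) {
                    return false;
                }
                sign = s;
            }
        }
        return true;
    }
}
```

A projected outline that fails the convexity test (for example, a self-intersecting "bowtie") usually indicates a poor homography, which is a plausible reason for the listing to check convexity before accepting the corners.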

最后

自我介绍一下,小编13年上海交大毕业,曾经在小公司待过,也去过华为、OPPO等大厂,18年进入阿里一直到现在。

深知大多数初中级Android工程师,想要提升技能,往往是自己摸索成长,自己不成体系的自学效果低效漫长且无助。

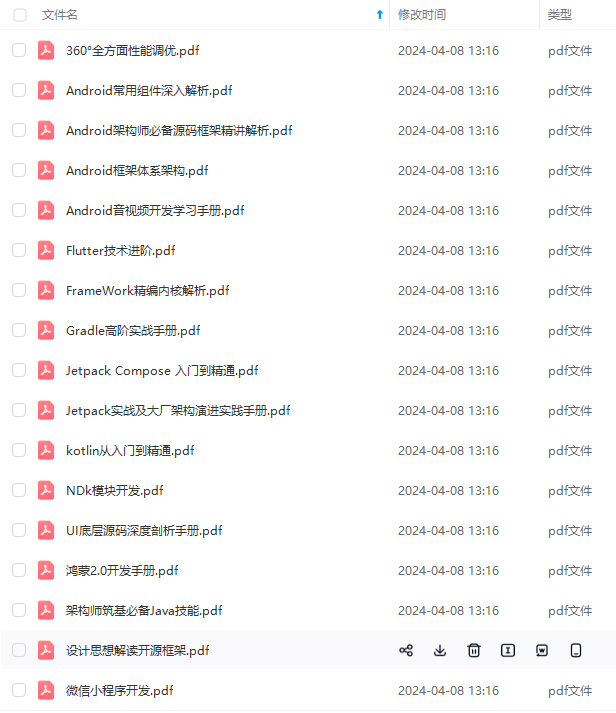

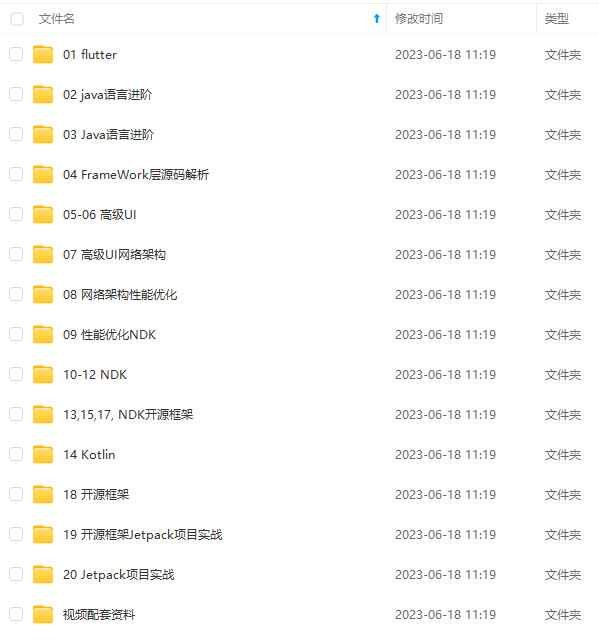

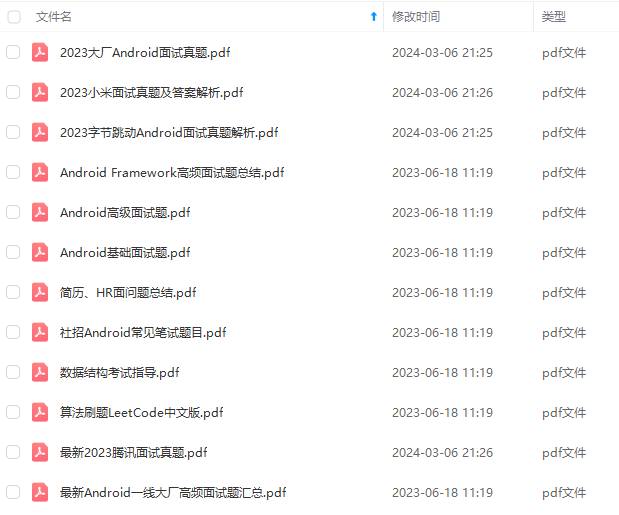

因此我收集整理了一份《2024年Android移动开发全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友,同时减轻大家的负担。

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上Android开发知识点!不论你是刚入门Android开发的新手,还是希望在技术上不断提升的资深开发者,这些资料都将为你打开新的学习之门

如果你觉得这些内容对你有帮助,需要这份全套学习资料的朋友可以戳我获取!!

由于文件比较大,这里只是将部分目录截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且会持续更新!

SceneCorners, CvType.CV_32S);

// Check whether the corners form a convex polygon. If not,

最后

自我介绍一下,小编13年上海交大毕业,曾经在小公司待过,也去过华为、OPPO等大厂,18年进入阿里一直到现在。

深知大多数初中级Android工程师,想要提升技能,往往是自己摸索成长,自己不成体系的自学效果低效漫长且无助。

因此我收集整理了一份《2024年Android移动开发全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友,同时减轻大家的负担。

[外链图片转存中…(img-ikOBJPFq-1715405221759)]

[外链图片转存中…(img-FmLg2S7W-1715405221761)]

[外链图片转存中…(img-QnqHA45b-1715405221762)]

[外链图片转存中…(img-Z5IA3qUw-1715405221763)]

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上Android开发知识点!不论你是刚入门Android开发的新手,还是希望在技术上不断提升的资深开发者,这些资料都将为你打开新的学习之门

如果你觉得这些内容对你有帮助,需要这份全套学习资料的朋友可以戳我获取!!

由于文件比较大,这里只是将部分目录截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且会持续更新!

2461

2461

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?