3. Start the Hive remote services (HiveServer2 and the metastore)

```shell
nohup hive --service hiveserver2 &
nohup hive --service metastore &
```
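Both services are started in the background with `nohup`, so a failed start is easy to miss. Below is a minimal Scala sketch that checks whether anything accepts TCP connections on the expected ports. It assumes the Hive defaults: port 9083 for the metastore (matching the `thrift://lxm147:9083` URI used later) and port 10000 for HiveServer2. The names `HivePortCheck` and `isListening` are hypothetical. The sketch only confirms that a port is open, not that the Thrift service behind it is healthy.

```scala
import java.net.{InetSocketAddress, Socket}

// Hypothetical helper: checks whether something accepts TCP connections on host:port.
object HivePortCheck {
  def isListening(host: String, port: Int, timeoutMs: Int = 2000): Boolean = {
    val socket = new Socket()
    try {
      // Unresolvable hosts, refused connections and timeouts all surface as IOException
      socket.connect(new InetSocketAddress(host, port), timeoutMs)
      true
    } catch {
      case _: java.io.IOException => false
    } finally {
      socket.close()
    }
  }

  def main(args: Array[String]): Unit = {
    // Assumed default ports: 9083 (metastore) and 10000 (HiveServer2)
    val host = if (args.nonEmpty) args(0) else "lxm147"
    for ((name, port) <- Seq("metastore" -> 9083, "hiveserver2" -> 10000)) {
      val status = if (isListening(host, port)) "listening" else "NOT reachable"
      println(s"$name on $host:$port is $status")
    }
  }
}
```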

4. Copy Hive's hive-site.xml into Spark's conf directory and the Hive jars into Spark's jars directory

```shell
[root@lxm147 conf]# pwd
/opt/soft/spark312/conf
[root@lxm147 conf]# cp /opt/soft/hive312/conf/hive-site.xml /opt/soft/spark312/conf/
[root@lxm147 jars]# pwd
/opt/soft/spark312/jars
[root@lxm147 jars]# cp /opt/soft/hive312/lib/hive-beeline-3.1.2.jar ./
[root@lxm147 jars]# cp /opt/soft/hive312/lib/hive-cli-3.1.2.jar ./
[root@lxm147 jars]# cp /opt/soft/hive312/lib/hive-exec-3.1.2.jar ./
[root@lxm147 jars]# cp /opt/soft/hive312/lib/hive-jdbc-3.1.2.jar ./
[root@lxm147 jars]# cp /opt/soft/hive312/lib/hive-metastore-3.1.2.jar ./
[root@lxm147 jars]# cp /opt/soft/hive312/lib/mysql-connector-java-8.0.29.jar ./
```
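If spark-shell later fails with class-not-found errors, one of these jars is probably missing. Below is a minimal sketch that reports which of the jars copied above are absent from a directory. It assumes the exact file names and versions from the commands above; adjust the list if your Hive or MySQL driver versions differ. The names `JarCheck` and `missingJars` are hypothetical.

```scala
import java.nio.file.{Files, Path, Paths}

// Hypothetical helper: reports which expected jar files are missing from a directory.
object JarCheck {
  // Jars copied from /opt/soft/hive312/lib in the step above
  val expectedJars: Seq[String] = Seq(
    "hive-beeline-3.1.2.jar",
    "hive-cli-3.1.2.jar",
    "hive-exec-3.1.2.jar",
    "hive-jdbc-3.1.2.jar",
    "hive-metastore-3.1.2.jar",
    "mysql-connector-java-8.0.29.jar"
  )

  // Names from `expected` that are not regular files inside `dir`
  def missingJars(dir: Path, expected: Seq[String] = expectedJars): Seq[String] =
    expected.filterNot(name => Files.isRegularFile(dir.resolve(name)))

  def main(args: Array[String]): Unit = {
    val dir = Paths.get(if (args.nonEmpty) args(0) else "/opt/soft/spark312/jars")
    val missing = missingJars(dir)
    if (missing.isEmpty) println(s"All expected jars found in $dir")
    else missing.foreach(name => println(s"Missing: $name"))
  }
}
```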

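The full hive-site.xml is shown below. The following property is mandatory. Without it, Spark cannot connect to the remote metastore:

`hive.metastore.uris` = `thrift://lxm147:9083`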

```xml
<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/opt/soft/hive312/warehouse</value>
    <description></description>
  </property>
  <property>
    <name>hive.metastore.db.type</name>
    <value>mysql</value>
    <description>Type of database the metastore connects to</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://192.168.180.141:3306/hive147?createDatabaseIfNotExist=true</value>
    <description>MySQL JDBC URL</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.cj.jdbc.Driver</value>
    <description></description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
    <description></description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>root</value>
    <description></description>
  </property>
  <property>
    <name>hive.metastore.schema.verification</name>
    <value>false</value>
    <description>Disable schema verification</description>
  </property>
  <property>
    <name>hive.cli.print.current.db</name>
    <value>true</value>
    <description>Show the current database name in the prompt</description>
  </property>
  <property>
    <name>hive.cli.print.header</name>
    <value>true</value>
    <description>Print column names together with query results</description>
  </property>
  <property>
    <name>hive.server2.active.passive.ha.enable</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.metastore.local</name>
    <value>false</value>
    <description>controls whether to connect to remote metastore server or open a new metastore server in Hive Client JVM</description>
  </property>
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://lxm147:9083</value>
  </property>
  <property>
    <name>hive.zookeeper.quorum</name>
    <value>192.168.180.147</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>192.168.180.147</value>
  </property>
  <property>
    <name>hive.aux.jars.path</name>
    <value>file:///opt/soft/hive312/lib/hive-hbase-handler-3.1.2.jar,file:///opt/soft/hive312/lib/zookeeper-3.4.6.jar,file:///opt/soft/hive312/lib/hbase-client-2.3.5.jar,file:///opt/soft/hive312/lib/hbase-common-2.3.5-tests.jar,file:///opt/soft/hive312/lib/hbase-server-2.3.5.jar,file:///opt/soft/hive312/lib/hbase-common-2.3.5.jar,file:///opt/soft/hive312/lib/hbase-protocol-2.3.5.jar,file:///opt/soft/hive312/lib/htrace-core-3.2.0-incubating.jar</value>
  </property>
</configuration>
```

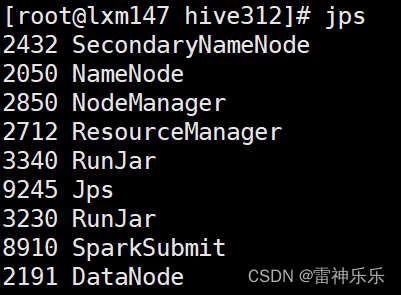
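Because a missing `hive.metastore.uris` stops Spark from connecting to the remote metastore, it can help to verify the copied file before starting spark-shell. Below is a minimal sketch that reads one property from a Hadoop-style `*-site.xml` using only the JDK's built-in DOM parser. The default path matches the copy destination in step 4. The names `HiveSiteReader` and `property` are hypothetical, not part of Hive or Spark.

```scala
import java.io.File
import javax.xml.parsers.DocumentBuilderFactory
import org.w3c.dom.Element

// Hypothetical helper: reads one <property> value from a Hadoop-style *-site.xml file.
object HiveSiteReader {
  def property(file: File, key: String): Option[String] = {
    val doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(file)
    val props = doc.getElementsByTagName("property")
    (0 until props.getLength).iterator
      .map(i => props.item(i).asInstanceOf[Element])
      .collectFirst {
        case p if text(p, "name").contains(key) => text(p, "value").getOrElse("")
      }
  }

  // Trimmed text of the first descendant element with the given tag, if present
  private def text(parent: Element, tag: String): Option[String] = {
    val nodes = parent.getElementsByTagName(tag)
    if (nodes.getLength == 0) None else Some(nodes.item(0).getTextContent.trim)
  }

  def main(args: Array[String]): Unit = {
    val file = new File(if (args.nonEmpty) args(0) else "/opt/soft/spark312/conf/hive-site.xml")
    property(file, "hive.metastore.uris") match {
      case Some(uris) if uris.nonEmpty => println(s"hive.metastore.uris = $uris")
      case _ => println("hive.metastore.uris is not set")
    }
  }
}
```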

5. Restart spark-shell

```shell
[root@lxm147 jars]# spark-shell
```

6. Query a table in a Hive database

```text
scala> spark.table("db_hive2.login_events").show
2023-04-04 13:33:35,138 WARN conf.HiveConf: HiveConf of name hive.metastore.local does not exist
2023-04-04 13:33:35,138 WARN conf.HiveConf: HiveConf of name hive.server2.active.passive.ha.enable does not exist
2023-04-04 13:33:35,139 WARN conf.HiveConf: HiveConf of name hive.metastore.db.type does not exist
+-------+-------------------+
|user_id|     login_datetime|
+-------+-------------------+
|    100|2021-12-01 19:00:00|
|    100|2021-12-01 19:30:00|
|    100|2021-12-02 21:01:00|
|    100|2021-12-03 11:01:00|
|    101|2021-12-01 19:05:00|
|    101|2021-12-01 21:05:00|
|    101|2021-12-03 21:05:00|
|    101|2021-12-05 15:05:00|
|    101|2021-12-06 19:05:00|
|    102|2021-12-01 19:55:00|
|    102|2021-12-01 21:05:00|
|    102|2021-12-02 21:57:00|
|    102|2021-12-03 19:10:00|
|    104|2021-12-04 21:57:00|
|    104|2021-12-02 22:57:00|
|    105|2021-12-01 10:01:00|
+-------+-------------------+
```

The three WARN lines only mean that HiveConf does not recognize the property names `hive.metastore.local`, `hive.server2.active.passive.ha.enable` and `hive.metastore.db.type`. These properties are ignored, and the query still returns the table's data.

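7. Connect to Hive from IDEA

```scala
import org.apache.spark.sql.SparkSession

object SparkGive {
  def main(args: Array[String]): Unit = {
    // Create the session; hive.metastore.uris points at the remote Hive metastore
    val spark: SparkSession = SparkSession.builder()
      .appName("sparkhive")
      .master("local[*]")
      .config("hive.metastore.uris", "thrift://192.168.180.147:9083")
      .enableHiveSupport()
      .getOrCreate()

    // Queries such as those in steps 8 and 9 go here

    // Close the session (equivalent to spark.stop())
    spark.close()
  }
}
```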
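`hive.metastore.uris` accepts a comma-separated list of `thrift://host:port` URIs. A typo in that string, such as a wrong scheme or a missing port, typically only surfaces as a connection failure once the session is first used. Below is a minimal plain-Scala sketch for checking the value before passing it to `.config(...)`. The names `MetastoreUris`, `parse` and `Endpoint` are hypothetical helpers, not part of Spark or Hive.

```scala
import java.net.URI
import scala.util.Try

// Hypothetical helper: validates a comma-separated hive.metastore.uris value.
object MetastoreUris {
  final case class Endpoint(host: String, port: Int)

  // Left(error) for the first bad entry, Right(endpoints) if every entry is thrift://host:port
  def parse(value: String): Either[String, List[Endpoint]] = {
    val entries = value.split(",").map(_.trim).filter(_.nonEmpty).toList
    if (entries.isEmpty) Left("no URIs given")
    else {
      val parsed: List[Either[String, Endpoint]] = entries.map { entry =>
        Try(new URI(entry)).toOption match {
          case Some(u) if u.getScheme == "thrift" && u.getHost != null && u.getPort > 0 =>
            Right(Endpoint(u.getHost, u.getPort))
          case _ =>
            Left(s"not a thrift://host:port URI: $entry")
        }
      }
      parsed.collectFirst { case Left(err) => err } match {
        case Some(err) => Left(err)
        case None      => Right(parsed.collect { case Right(e) => e })
      }
    }
  }
}
```

When `parse` returns `Right`, the original string can be passed unchanged to `.config("hive.metastore.uris", ...)`.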

8. List the Hive databases from Spark

```scala
// List the databases
spark.sql("show databases").show()
/*
+---------+
|namespace|
+---------+
|  atguigu|
|  bigdata|
| db_hive2|
|  default|
|   lalian|
|     mydb|
| shopping|
+---------+
*/
```

9. Work with a specific Hive table from Spark

```scala
// Read a specific table from a specific database
val ecd = spark.table("shopping.ext_customer_details")
ecd.show()
```

```text
+-----------+----------+-----------+--------------------+------+--------------------+-------+---------+--------------------+--------------------+-------------------+
|customer_id|first_name|  last_name|               email|gender|             address|country| language|                 job|         credit_type|          credit_no|
+-----------+----------+-----------+--------------------+------+--------------------+-------+---------+--------------------+--------------------+-------------------+
|customer_id|first_name|  last_name|               email|gender|             address|country| language|                 job|         credit_type|          credit_no|
|          1|   Spencer|  Raffeorty|sraffeorty0@dropb...|  Male|    9274 Lyons Court|  China|    Khmer|Safety Technician...|                 jcb|   3589373385487669|
|          2|    Cherye|     Poynor|      cpoynor1@51.la|Female|1377 Anzinger Avenue|  China|    Czech|      Research Nurse|        instapayment|   6376594861844533|
|          3|   Natasha|  Abendroth|nabendroth2@scrib...|Female| 2913 Evergreen Lane|  China|  Yiddish|Budget/Accounting...|                visa|   4041591905616356|
|          4|   Huntley|     Seally|  hseally3@prlog.org|  Male|    694 Del Sol Lane|  China| Albanian|Environmental Spe...|               laser| 677118310740263477|
|          5|     Druci|       Coad|    dcoad4@weibo.com|Female|         16 Debs Way|  China|   Hebrew|             Teacher|                 jcb|   3537287259845047|
+-----------+----------+-----------+--------------------+------+--------------------+-------+---------+--------------------+--------------------+-------------------+
```

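The first data row repeats the column names, which shows that the header line of the table's source file was loaded as data. Filter it out:

```scala
import org.apache.spark.sql.{Dataset, Row}
import org.apache.spark.sql.functions._
import spark.implicits._ // enables the $"column" syntax

// Drop the header row that was loaded as data
val cuDF: Dataset[Row] = ecd.filter($"customer_id" =!= "customer_id")
```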
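One subtlety of the `=!=` filter on `customer_id`: in Spark SQL, comparing a NULL with a string yields NULL, and `filter` keeps only rows where the predicate is true. Rows whose `customer_id` is NULL are therefore dropped along with the header row. Below is a plain-Scala sketch that models that rule with `Option` standing in for a nullable column. The names `Customer`, `HeaderFilter` and `dropHeaderRows` are hypothetical, and the sketch does not use Spark.

```scala
// Hypothetical model of one row: customerId is None where the Hive column is NULL
final case class Customer(customerId: Option[String], firstName: String)

object HeaderFilter {
  // Mirrors ecd.filter($"customer_id" =!= "customer_id"):
  // a NULL id makes the predicate NULL (not true), so such rows are dropped too
  def dropHeaderRows(rows: Seq[Customer]): Seq[Customer] =
    rows.filter(_.customerId.exists(_ != "customer_id"))
}
```

If rows with a missing `customer_id` must be kept, Spark's null-safe equality operator `<=>` can be used instead, for example `ecd.filter(!($"customer_id" <=> "customer_id"))`.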
