        metadata:
          labels:
            app: sts-nginx # has to match .spec.selector.matchLabels
        spec:
          terminationGracePeriodSeconds: 10
          containers:
            - name: sts-nginx
              image: nginx
              ports:
                - containerPort: 80
                  name: web
              volumeMounts:
                - name: www
                  mountPath: /usr/share/nginx/html
      volumeClaimTemplates:
        - metadata:
            name: www
          spec:
            accessModes: [ "ReadWriteOnce" ]
            storageClassName: "rook-ceph-block"
            resources:
              requests:
                storage: 20Mi

    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: sts-nginx
      namespace: default
    spec:
      selector:
        app: sts-nginx
      type: ClusterIP
      ports:
        - name: sts-nginx
          port: 80
          targetPort: 80
          protocol: TCP

> Test: create the StatefulSet, modify the nginx data, delete the StatefulSet, then recreate it. Is the data lost? Is it shared between the Pods?

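### **3. File Storage (CephFS)**

#### **1. Configuration**

File storage is the commonly used option for RWX (ReadWriteMany) mode, for example when 10 Pods read and write the same location.

**Reference:** [Ceph Docs](https://bbs.csdn.net/topics/618545628)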

    apiVersion: ceph.rook.io/v1
    kind: CephFilesystem
    metadata:
      name: myfs
      namespace: rook-ceph # namespace:cluster
    spec:
      # The metadata pool spec. Must use replication.
      metadataPool:
        replicated:
          size: 3
          requireSafeReplicaSize: true
        parameters:
          # Inline compression mode for the data pool
          # Further reference: https://docs.ceph.com/docs/nautilus/rados/configuration/bluestore-config-ref/#inline-compression
          compression_mode: none
          # gives a hint (%) to Ceph in terms of expected consumption of the total cluster capacity of a given pool
          # for more info: https://docs.ceph.com/docs/master/rados/operations/placement-groups/#specifying-expected-pool-size
          #target_size_ratio: ".5"
      # The list of data pool specs. Can use replication or erasure coding.
      dataPools:
        - failureDomain: host
          replicated:
            size: 3
            # Disallow setting pool with replica 1, this could lead to data loss without recovery.
            # Make sure you're ABSOLUTELY CERTAIN that is what you want
            requireSafeReplicaSize: true
          parameters:
            # Inline compression mode for the data pool
            # Further reference: https://docs.ceph.com/docs/nautilus/rados/configuration/bluestore-config-ref/#inline-compression
            compression_mode: none
            # gives a hint (%) to Ceph in terms of expected consumption of the total cluster capacity of a given pool
            # for more info: https://docs.ceph.com/docs/master/rados/operations/placement-groups/#specifying-expected-pool-size
            #target_size_ratio: ".5"
      # Whether to preserve filesystem after CephFilesystem CRD deletion
      preserveFilesystemOnDelete: true
      # The metadata service (mds) configuration
      metadataServer:
        # The number of active MDS instances
        activeCount: 1
        # Whether each active MDS instance will have an active standby with a warm metadata cache for faster failover.
        # If false, standbys will be available, but will not have a warm cache.
        activeStandby: true
        # The affinity rules to apply to the mds deployment
        placement:
          #  nodeAffinity:
          #    requiredDuringSchedulingIgnoredDuringExecution:
          #      nodeSelectorTerms:
          #      - matchExpressions:
          #        - key: role
          #          operator: In
          #          values:
          #          - mds-node
          #  topologySpreadConstraints:
          #  tolerations:
          #  - key: mds-node
          #    operator: Exists
          #  podAffinity:
          podAntiAffinity:
            requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - rook-ceph-mds
                # topologyKey: kubernetes.io/hostname will place MDS across different hosts
                topologyKey: kubernetes.io/hostname
            preferredDuringSchedulingIgnoredDuringExecution:
              - weight: 100
                podAffinityTerm:
                  labelSelector:
                    matchExpressions:
                      - key: app
                        operator: In
                        values:
                          - rook-ceph-mds
                  # topologyKey: */zone can be used to spread MDS across different AZ
                  # Use <topologyKey: failure-domain.beta.kubernetes.io/zone> in k8s cluster if your cluster is v1.16 or lower
                  # Use <topologyKey: topology.kubernetes.io/zone> in k8s cluster is v1.17 or upper
                  topologyKey: topology.kubernetes.io/zone
        # A key/value list of annotations
        annotations:
        #  key: value
        # A key/value list of labels
        labels:
        #  key: value
        resources:
        # The requests and limits set here, allow the filesystem MDS Pod(s) to use half of one CPU core and 1 gigabyte of memory
        #  limits:
        #    cpu: "500m"
        #    memory: "1024Mi"
        #  requests:
        #    cpu: "500m"
        #    memory: "1024Mi"
        # priorityClassName: my-priority-class
      mirroring:
        enabled: false

    ---
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: rook-cephfs
    # Change "rook-ceph" provisioner prefix to match the operator namespace if needed
    provisioner: rook-ceph.cephfs.csi.ceph.com
    parameters:
      # clusterID is the namespace where operator is deployed.
      clusterID: rook-ceph
      # CephFS filesystem name into which the volume shall be created
      fsName: myfs
      # Ceph pool into which the volume shall be created
      # Required for provisionVolume: "true"
      pool: myfs-data0
      # The secrets contain Ceph admin credentials. These are generated automatically by the operator
      # in the same namespace as the cluster.
      csi.storage.k8s.io/provisioner-secret-name: rook-csi-cephfs-provisioner
      csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
      csi.storage.k8s.io/controller-expand-secret-name: rook-csi-cephfs-provisioner
      csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph
      csi.storage.k8s.io/node-stage-secret-name: rook-csi-cephfs-node
      csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph
    reclaimPolicy: Delete
    allowVolumeExpansion: true

#### **2. Testing**

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deploy
      namespace: default
      labels:
        app: nginx-deploy
    spec:
      selector:
        matchLabels:
          app: nginx-deploy
      replicas: 3
      strategy:
        rollingUpdate:
          maxSurge: 25%
          maxUnavailable: 25%
        type: RollingUpdate
      template:
        metadata:
          labels:
            app: nginx-deploy
        spec:
          containers:
            - name: nginx-deploy
              image: nginx
              volumeMounts:
                - name: localtime
                  mountPath: /etc/localtime
                - name: nginx-html-storage
                  mountPath: /usr/share/nginx/html
          volumes:
            - name: localtime
              hostPath:
                path: /usr/share/zoneinfo/Asia/Shanghai
            - name: nginx-html-storage
              persistentVolumeClaim:
                claimName: nginx-pv-claim

    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nginx-pv-claim
      labels:
        app: nginx-deploy
    spec:
      storageClassName: rook-cephfs
      accessModes:
        - ReadWriteMany # What would happen if this were ReadWriteOnce?
      resources:
        requests:
          storage: 10Mi

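> Test: create the Deployment, modify the page, delete the Deployment, then create a new Deployment. Does the PVC bind successfully, and is the data still there?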
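One possible way to run the check described above is a throwaway Pod that mounts the same claim and lists its contents. This is only a sketch: the Pod name `cephfs-check`, the `busybox` image, and the `/data` mount path are illustrative assumptions, not part of the original setup; only `nginx-pv-claim` comes from the manifests above.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: cephfs-check          # hypothetical name, chosen for this example
  namespace: default
spec:
  restartPolicy: Never        # run once, then stop
  containers:
    - name: check
      image: busybox          # assumption: any small image with a shell works
      # List the files stored on the shared CephFS volume, then exit
      command: ["sh", "-c", "ls -l /data"]
      volumeMounts:
        - name: html
          mountPath: /data    # illustrative mount path
  volumes:
    - name: html
      persistentVolumeClaim:
        claimName: nginx-pv-claim   # the PVC defined above
```

Because the claim uses `ReadWriteMany`, this Pod should be able to mount the volume while the Deployment's replicas are still running; the Pod's logs then show whether the modified page is present.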

### **4. PVC Expansion**

See the CSI (Container Storage Interface) documentation:

**Volume expansion:** [Ceph Docs](https://bbs.csdn.net/topics/618545628)

#### Dynamic Volume Expansion

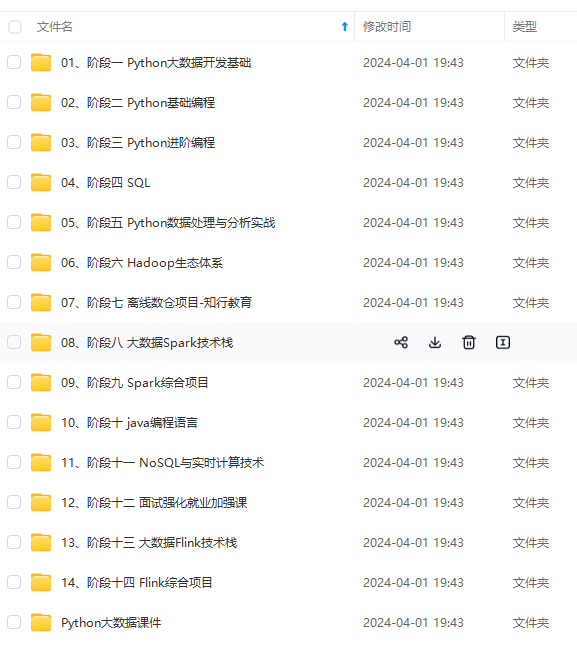

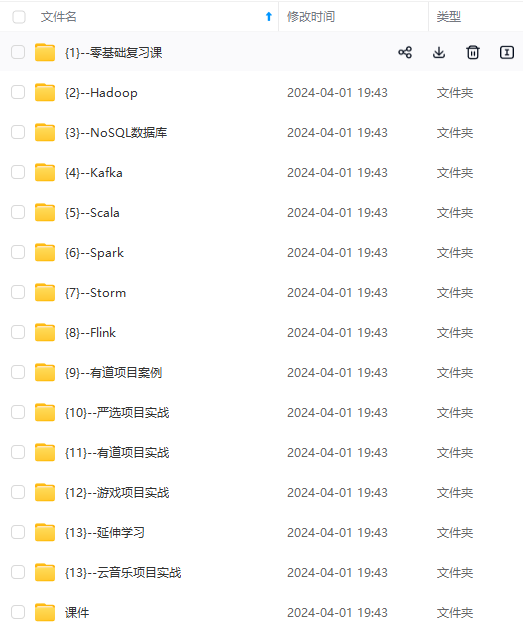
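As a minimal sketch of dynamic expansion, assuming the `nginx-pv-claim` PVC and the `rook-cephfs` StorageClass from the CephFS test (the StorageClass sets `allowVolumeExpansion: true`): raising `spec.resources.requests.storage` and re-applying the manifest requests a larger volume. The `20Mi` target is an arbitrary illustrative value, not taken from the original.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-pv-claim
  labels:
    app: nginx-deploy
spec:
  storageClassName: rook-cephfs   # must have allowVolumeExpansion: true
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 20Mi               # raised from 10Mi; illustrative value (assumption)
```

Kubernetes only allows a PVC's requested size to grow; lowering the value again is rejected.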
