既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

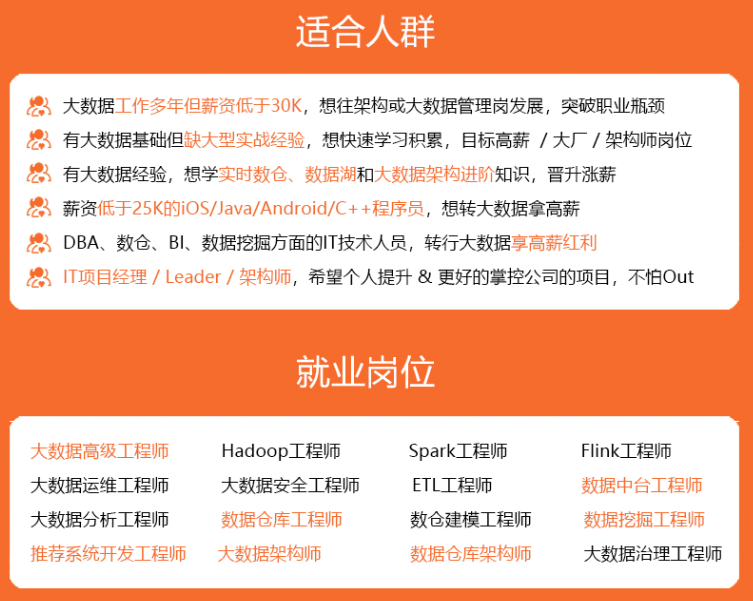

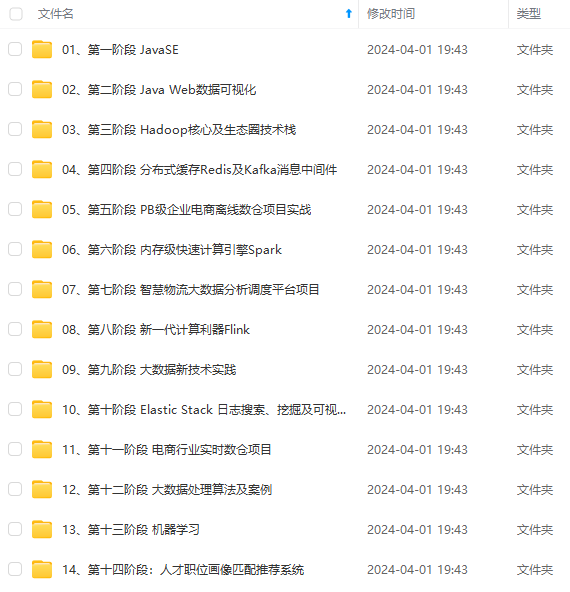

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

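- Region Proposal Networks. The RPN generates region proposals. It uses softmax to decide whether each anchor is positive (foreground) or negative (background), then refines the anchors with bounding-box regression to obtain accurate proposals.

- RoI Pooling. This layer takes the input feature maps and the proposals, combines them to extract a fixed-size proposal feature map for each proposal, and passes these to the subsequent fully connected layers, which determine the object class.

- Classification. Uses the proposal feature maps to compute the class of each proposal and, at the same time, applies bounding-box regression a second time to obtain the final, precise position of each detection box.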
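Both the RPN and the final classification stage refine boxes with bounding-box regression. The sketch below assumes the standard Faster R-CNN parameterization (centre offsets scaled by the anchor size, height and width ratios in log space) and the [y1, x1, y2, x2] box order used throughout this code. `encode_box` and `decode_box` are hypothetical helpers written for illustration; they are not the project's `loc2bbox`.

```python
import math


def encode_box(anchor, box):
    """Offsets (dy, dx, dh, dw) that turn `anchor` into `box`; both are [y1, x1, y2, x2]."""
    ay1, ax1, ay2, ax2 = anchor
    by1, bx1, by2, bx2 = box
    ah, aw = ay2 - ay1, ax2 - ax1
    bh, bw = by2 - by1, bx2 - bx1
    acy, acx = ay1 + 0.5 * ah, ax1 + 0.5 * aw
    bcy, bcx = by1 + 0.5 * bh, bx1 + 0.5 * bw
    # Centre shift relative to anchor size, size change as a log ratio.
    return [(bcy - acy) / ah, (bcx - acx) / aw, math.log(bh / ah), math.log(bw / aw)]


def decode_box(anchor, loc):
    """Apply offsets (dy, dx, dh, dw) to an anchor [y1, x1, y2, x2]."""
    y1, x1, y2, x2 = anchor
    dy, dx, dh, dw = loc
    h, w = y2 - y1, x2 - x1
    cy, cx = y1 + 0.5 * h, x1 + 0.5 * w
    new_cy, new_cx = cy + dy * h, cx + dx * w
    new_h, new_w = h * math.exp(dh), w * math.exp(dw)
    return [new_cy - 0.5 * new_h, new_cx - 0.5 * new_w,
            new_cy + 0.5 * new_h, new_cx + 0.5 * new_w]


anchor = [10.0, 20.0, 50.0, 100.0]
box = [12.0, 18.0, 60.0, 110.0]
print(decode_box(anchor, encode_box(anchor, box)))  # ≈ [12.0, 18.0, 60.0, 110.0] up to rounding
```

Training targets are produced with the encoding direction; at inference the network's predicted offsets go through the decoding direction.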

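For the overall architecture of the algorithm, see: Faster RCNN 实现思路详解 (a detailed walkthrough of how Faster R-CNN is implemented).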
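The shape comments in the code (38×50 feature maps, 17100 anchors) follow from simple arithmetic. Assuming a 600×800 input image, each of the four `MaxPooling2D(2, strides=2, padding='same')` layers in `Extractor` outputs ceil(size / 2), and the RPN places 3 ratios × 3 scales = 9 anchors at every feature-map cell. `feature_map_size` and `anchor_count` are illustrative helpers, not part of the project.

```python
import math


def feature_map_size(height, width, n_pools=4):
    """Spatial size after `n_pools` MaxPooling2D(2, strides=2, padding='same') layers.

    The 3x3 convolutions use padding='same' and stride 1, so only the pools
    shrink the map; each one outputs ceil(size / 2).
    """
    for _ in range(n_pools):
        height = math.ceil(height / 2)
        width = math.ceil(width / 2)
    return height, width


def anchor_count(height, width, n_ratios=3, n_scales=3):
    """Total number of anchors the RPN places on an image of the given size."""
    hh, ww = feature_map_size(height, width)
    return hh * ww * n_ratios * n_scales


print(feature_map_size(600, 800))  # (38, 50)
print(anchor_count(600, 800))      # 17100
```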

Faster R-CNN performance improvements:

Selected parts of the implementation:

FasterRCNN.py:

    import numpy as np
    import tensorflow as tf

    from model.rpn import RegionProposalNetwork, Extractor
    from model.roi import RoIHead
    from utils.anchor import loc2bbox, AnchorTargetCreator, ProposalTargetCreator


    def _smooth_l1_loss(pred_loc, gt_loc, in_weight, sigma):
        """Smooth L1 loss summed over all elements; in_weight zeroes out the rows to ignore."""
        sigma2 = float(sigma) ** 2
        diff = in_weight * (pred_loc - gt_loc)
        abs_diff = tf.math.abs(diff)
        # Quadratic branch where |diff| < 1 / sigma^2, linear branch elsewhere.
        flag = tf.cast(abs_diff < (1.0 / sigma2), dtype=tf.float32)
        y = flag * (sigma2 / 2.0) * tf.square(diff) + (1.0 - flag) * (abs_diff - 0.5 / sigma2)
        return tf.reduce_sum(y)


    def _fast_rcnn_loc_loss(pred_loc, gt_loc, gt_label, sigma):
        """
        :param pred_loc: predicted offsets, shape (N, 4), e.g. (17100, 4) for the RPN
        :param gt_loc: target offsets, shape (N, 4)
        :param gt_label: labels, shape (N,); -1 = ignored, 0 = background, > 0 = object
        """
        pred_loc = tf.cast(pred_loc, tf.float32)
        gt_loc = tf.cast(gt_loc, tf.float32)
        # Only positive samples contribute to the regression loss.
        positive = tf.cast(gt_label > 0, dtype=tf.float32)
        in_weight = tf.tile(positive[:, None], [1, 4])
        loc_loss = _smooth_l1_loss(pred_loc, gt_loc, in_weight, sigma)
        # Normalize by the total number of negative and positive samples
        # (ignore gt_label == -1, which is used by the RPN targets).
        loc_loss /= tf.reduce_sum(tf.cast(gt_label >= 0, dtype=tf.float32))
        return loc_loss

    class FasterRCNN(tf.keras.Model):
        def __init__(self, n_class, pool_size):
            # n_class includes the background class
            super(FasterRCNN, self).__init__()
            self.n_class = n_class
            self.extractor = Extractor()
            self.rpn = RegionProposalNetwork()
            self.head = RoIHead(n_class, pool_size)
            self.score_thresh = 0.7
            self.nms_thresh = 0.3

        def call(self, x, scale=1.0, training=None):
            # scale: resize factor of the input image, forwarded to the RPN's ProposalCreator
            img_size = tuple(x.shape[1:3])
            feature_map = self.extractor(x, training=training)
            rpn_locs, rpn_scores, rois, anchor = self.rpn(feature_map, img_size, scale, training=training)
            roi_cls_locs, roi_scores = self.head(feature_map, rois, img_size, training=training)
            return roi_cls_locs, roi_scores, rois

        def predict(self, imgs, scale=1.0):
            bboxes, labels, scores = [], [], []
            img_size = tuple(imgs.shape[1:3])
            # shapes: (2000, 84), (2000, 21), (2000, 4)
            roi_cls_loc, roi_score, rois = self(imgs, scale, training=False)
            prob = tf.nn.softmax(roi_score, axis=-1).numpy()
            roi_cls_loc = roi_cls_loc.numpy().reshape(-1, self.n_class, 4)  # (2000, 21, 4)
            # Class 0 is the background, so start at 1.
            for label_index in range(1, self.n_class):
                cls_bbox = loc2bbox(rois, roi_cls_loc[:, label_index, :])
                # Clip boxes [y1, x1, y2, x2] to the image.
                cls_bbox[:, 0::2] = np.clip(cls_bbox[:, 0::2], 0, img_size[0])
                cls_bbox[:, 1::2] = np.clip(cls_bbox[:, 1::2], 0, img_size[1])
                cls_prob = prob[:, label_index]
                mask = cls_prob > 0.05
                cls_bbox = cls_bbox[mask].astype(np.float32)
                cls_prob = cls_prob[mask].astype(np.float32)
                # max_output_size must be non-negative; allow every box to survive NMS.
                keep = tf.image.non_max_suppression(cls_bbox, cls_prob, max_output_size=len(cls_bbox),
                                                    iou_threshold=self.nms_thresh).numpy()
                if len(keep) > 0:
                    bboxes.append(cls_bbox[keep])
                    # The labels are in [0, self.n_class - 2].
                    labels.append((label_index - 1) * np.ones((len(keep),)))
                    scores.append(cls_prob[keep])
            if len(bboxes) > 0:
                bboxes = np.concatenate(bboxes, axis=0).astype(np.float32)
                labels = np.concatenate(labels, axis=0).astype(np.float32)
                scores = np.concatenate(scores, axis=0).astype(np.float32)
            return bboxes, labels, scores

    class FasterRCNNTrainer(tf.keras.Model):
        def __init__(self, faster_rcnn):
            super(FasterRCNNTrainer, self).__init__()
            self.faster_rcnn = faster_rcnn
            self.rpn_sigma = 3.0
            self.roi_sigma = 1.0
            # Target creators turn gt_bbox / gt_label into training targets.
            self.anchor_target_creator = AnchorTargetCreator()
            self.proposal_target_creator = ProposalTargetCreator()

        def call(self, imgs, bbox, label, scale, training=None):
            _, H, W, _ = imgs.shape
            img_size = (H, W)
            features = self.faster_rcnn.extractor(imgs, training=training)
            rpn_locs, rpn_scores, roi, anchor = self.faster_rcnn.rpn(features, img_size, scale, training=training)
            # Batch size is 1.
            rpn_score = rpn_scores[0]  # (n_anchor_total, 2)
            rpn_loc = rpn_locs[0]      # (n_anchor_total, 4)
            # Sample RoIs and build their regression / classification targets.
            sample_roi, gt_roi_loc, gt_roi_label = self.proposal_target_creator(roi, bbox.numpy(), label.numpy())
            roi_cls_loc, roi_score = self.faster_rcnn.head(features, sample_roi, img_size, training=training)

            # RPN losses
            gt_rpn_loc, gt_rpn_label = self.anchor_target_creator(bbox.numpy(), anchor, img_size)
            gt_rpn_label = tf.cast(gt_rpn_label, dtype=tf.int32)
            gt_rpn_loc = tf.cast(gt_rpn_loc, dtype=tf.float32)
            rpn_loc_loss = _fast_rcnn_loc_loss(rpn_loc, gt_rpn_loc, gt_rpn_label, self.rpn_sigma)
            # Anchors labelled -1 are ignored; TF tensors need tf.boolean_mask for boolean indexing.
            valid = tf.not_equal(gt_rpn_label, -1)
            rpn_cls_loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)(
                tf.boolean_mask(gt_rpn_label, valid), tf.boolean_mask(rpn_score, valid))

            # ROI losses
            n_sample = roi_cls_loc.shape[0]
            roi_cls_loc = tf.reshape(roi_cls_loc, [n_sample, -1, 4])  # (n_sample, n_class, 4)
            gt_roi_label = tf.cast(gt_roi_label, dtype=tf.int32)
            gt_roi_loc = tf.cast(gt_roi_loc, dtype=tf.float32)
            # For each sampled RoI, pick the offsets predicted for its ground-truth class.
            idx = tf.stack([tf.range(n_sample, dtype=tf.int32), gt_roi_label], axis=1)
            roi_loc = tf.gather_nd(roi_cls_loc, idx)
            roi_loc_loss = _fast_rcnn_loc_loss(roi_loc, gt_roi_loc, gt_roi_label, self.roi_sigma)
            # roi_score holds raw logits (predict() applies softmax to it), and the
            # classification loss covers background RoIs (label 0) as well.
            roi_cls_loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)(gt_roi_label, roi_score)

            return rpn_loc_loss, rpn_cls_loss, roi_loc_loss, roi_cls_loss
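To make `_smooth_l1_loss` and `_fast_rcnn_loc_loss` concrete, here is a plain-Python sketch of the same formula on lists instead of tensors: smooth_L1(x) = 0.5·σ²·x² when |x| < 1/σ², and |x| − 0.5/σ² otherwise, summed over positive samples and divided by the number of samples whose label is not −1. The function names are hypothetical; `sigma` plays the role of `rpn_sigma` (3.0) and `roi_sigma` (1.0) in `FasterRCNNTrainer`.

```python
def smooth_l1(x, sigma=1.0):
    """Smooth L1 with the same sigma convention as _smooth_l1_loss."""
    sigma2 = sigma ** 2
    ax = abs(x)
    if ax < 1.0 / sigma2:
        return 0.5 * sigma2 * x * x  # quadratic near zero
    return ax - 0.5 / sigma2         # linear for large errors


def loc_loss(pred_locs, gt_locs, labels, sigma=1.0):
    """Mirror of _fast_rcnn_loc_loss on plain lists.

    Only rows with label > 0 contribute; the sum is divided by the number of
    rows with label >= 0 (label -1 marks ignored anchors).
    """
    total = 0.0
    for pred, gt, label in zip(pred_locs, gt_locs, labels):
        if label > 0:
            total += sum(smooth_l1(p - g, sigma) for p, g in zip(pred, gt))
    n_valid = sum(1 for label in labels if label >= 0)
    return total / n_valid


print(loc_loss([[0.5, 0, 0, 0], [1, 1, 1, 1], [0, 0, 0, 0]],
               [[0, 0, 0, 0]] * 3,
               [1, 0, -1]))  # 0.0625
```

A larger sigma (3.0 for the RPN) shrinks the quadratic region to |x| < 1/9, so the loss switches to the linear branch sooner.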

RPN network:

    import numpy as np
    import tensorflow as tf

    from utils.anchor import generate_anchor_base, ProposalCreator, _enumerate_shifted_anchor


    class Extractor(tf.keras.Model):
        """VGG16-like backbone: 13 conv layers in 2-2-3-3-3 blocks, with the channel
        widths of the first four blocks halved. Four 2x2 max-pools give an output
        stride of 16, matching the anchor stride used in the RPN."""

        def __init__(self):
            super(Extractor, self).__init__()
            # conv1
            self.conv1_1 = tf.keras.layers.Conv2D(32, 3, activation='relu', padding='same')
            self.conv1_2 = tf.keras.layers.Conv2D(32, 3, activation='relu', padding='same')
            self.pool1 = tf.keras.layers.MaxPooling2D(2, strides=2, padding='same')
            # conv2
            self.conv2_1 = tf.keras.layers.Conv2D(64, 3, activation='relu', padding='same')
            self.conv2_2 = tf.keras.layers.Conv2D(64, 3, activation='relu', padding='same')
            self.pool2 = tf.keras.layers.MaxPooling2D(2, strides=2, padding='same')
            # conv3
            self.conv3_1 = tf.keras.layers.Conv2D(128, 3, activation='relu', padding='same')
            self.conv3_2 = tf.keras.layers.Conv2D(128, 3, activation='relu', padding='same')
            self.conv3_3 = tf.keras.layers.Conv2D(128, 3, activation='relu', padding='same')
            self.pool3 = tf.keras.layers.MaxPooling2D(2, strides=2, padding='same')
            # conv4
            self.conv4_1 = tf.keras.layers.Conv2D(256, 3, activation='relu', padding='same')
            self.conv4_2 = tf.keras.layers.Conv2D(256, 3, activation='relu', padding='same')
            self.conv4_3 = tf.keras.layers.Conv2D(256, 3, activation='relu', padding='same')
            self.pool4 = tf.keras.layers.MaxPooling2D(2, strides=2, padding='same')
            # conv5 (no pooling afterwards)
            self.conv5_1 = tf.keras.layers.Conv2D(512, 3, activation='relu', padding='same')
            self.conv5_2 = tf.keras.layers.Conv2D(512, 3, activation='relu', padding='same')
            self.conv5_3 = tf.keras.layers.Conv2D(512, 3, activation='relu', padding='same')

        def call(self, imgs, training=None):
            h = self.pool1(self.conv1_2(self.conv1_1(imgs)))
            h = self.pool2(self.conv2_2(self.conv2_1(h)))
            h = self.pool3(self.conv3_3(self.conv3_2(self.conv3_1(h))))
            h = self.pool4(self.conv4_3(self.conv4_2(self.conv4_1(h))))
            h = self.conv5_3(self.conv5_2(self.conv5_1(h)))
            return h

    class RegionProposalNetwork(tf.keras.Model):
        def __init__(self, ratios=[0.5, 1, 2], anchor_scales=[8, 16, 32]):
            super(RegionProposalNetwork, self).__init__()
            # 3 ratios x 3 scales = 9 anchors per feature-map location
            n_anchor = len(ratios) * len(anchor_scales)
            # 3x3 conv shared by the two sibling 1x1 heads
            self.region_proposal_conv = tf.keras.layers.Conv2D(512, kernel_size=3, activation=tf.nn.relu, padding='same')
            # Bounding-box regression layer: 4 offsets per anchor (36 channels)
            self.loc = tf.keras.layers.Conv2D(n_anchor * 4, kernel_size=1, padding='same')
            # Score layer: (background, foreground) per anchor (18 channels)
            self.score = tf.keras.layers.Conv2D(n_anchor * 2, kernel_size=1, padding='same')
            self.anchor = generate_anchor_base(anchor_scales=anchor_scales, ratios=ratios)
            self.proposal_layer = ProposalCreator()

        def call(self, x, img_size, scale, training=None):
            n, hh, ww, _ = x.shape
            # Place the base anchors at every feature-map cell (feature stride 16).
            anchor = _enumerate_shifted_anchor(np.array(self.anchor), 16, hh, ww)
            n_anchor = anchor.shape[0] // (hh * ww)
            h = self.region_proposal_conv(x)
            rpn_loc = self.loc(h)                      # [1, 38, 50, 36]
            rpn_loc = tf.reshape(rpn_loc, [n, -1, 4])  # [1, 17100, 4]
            rpn_score = self.score(h)                  # [1, 38, 50, 18]
            # Softmax over (background, foreground) per anchor: [1, 38, 50, 9, 2]
            rpn_softmax_score = tf.nn.softmax(tf.reshape(rpn_score, [n, hh, ww, n_anchor, 2]), axis=-1)
            rpn_fg_score = tf.reshape(rpn_softmax_score[:, :, :, :, 1], [n, -1])  # foreground probability
            rpn_score = tf.reshape(rpn_score, [n, -1, 2])  # raw logits for the RPN classification loss
            # ProposalCreator (utils.anchor, not shown) turns anchors + scores into RoIs; batch size is 1.
            roi = self.proposal_layer(rpn_loc[0].numpy(), rpn_fg_score[0].numpy(), anchor, img_size, scale)
            return rpn_loc, rpn_score, roi, anchor
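`generate_anchor_base` and `_enumerate_shifted_anchor` live in `utils.anchor`, which is not shown. The NumPy sketch below is an assumption about what such helpers typically do: build 9 base anchors of area (16·scale)² with height/width ratios 0.5, 1 and 2 around one 16×16 cell, then replicate them at every feature-map cell with a stride of 16 pixels. Ordering the result cell by cell, 9 anchors per cell, matches how `rpn_loc` is reshaped from [1, 38, 50, 36] to [1, 17100, 4]. `make_anchor_base` and `shift_anchors` are hypothetical names.

```python
import numpy as np


def make_anchor_base(base_size=16, ratios=(0.5, 1, 2), scales=(8, 16, 32)):
    """Base anchors [y1, x1, y2, x2] centred on the first base_size x base_size cell."""
    cy = cx = base_size / 2.0
    anchors = []
    for r in ratios:
        for s in scales:
            # Height/width ratio is r; the area stays (base_size * s) ** 2.
            h = base_size * s * np.sqrt(r)
            w = base_size * s * np.sqrt(1.0 / r)
            anchors.append([cy - h / 2, cx - w / 2, cy + h / 2, cx + w / 2])
    return np.array(anchors, dtype=np.float32)


def shift_anchors(anchor_base, feat_stride, height, width):
    """Replicate the base anchors at every cell of a height x width feature map."""
    shift_y = np.arange(height) * feat_stride
    shift_x = np.arange(width) * feat_stride
    sx, sy = np.meshgrid(shift_x, shift_y)  # both (height, width)
    shifts = np.stack([sy.ravel(), sx.ravel(), sy.ravel(), sx.ravel()], axis=1)
    # (H*W, 1, 4) + (1, A, 4) -> (H*W, A, 4): all A anchors of a cell stay contiguous.
    anchors = shifts[:, None, :] + anchor_base[None, :, :]
    return anchors.reshape(-1, 4).astype(np.float32)


base = make_anchor_base()
anchors = shift_anchors(base, 16, 38, 50)
print(base.shape, anchors.shape)  # (9, 4) (17100, 4)
```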

ROI.py:

    import tensorflow as tf


    def roi_pooling(feature, rois, img_size, pool_size):
        """
        RoIAlign-style pooling implemented with tf.image.crop_and_resize (bilinear sampling).
        :param feature: feature map, [1, hh, ww, c]
        :param rois: RoIs in original-image coordinates, [n_roi, 4] as [y1, x1, y2, x2]
        :param img_size: size of the original image (height, width)
        :param pool_size: output size after alignment, e.g. (7, 7)
        """
        # Index of the batch image each box belongs to; batch size is 1, so all zeros.
        box_ind = tf.zeros(rois.shape[0], dtype=tf.int32)
        # crop_and_resize requires boxes normalized to [0, 1] and ordered [y1, x1, y2, x2],
        # so divide the coordinates by the original image size.
        normalization = tf.cast(tf.stack([img_size[0], img_size[1], img_size[0], img_size[1]], axis=0), dtype=tf.float32)
        boxes = rois / normalization
        # Output: [n_roi, pool_h, pool_w, c], e.g. [2000, 7, 7, 512]
        pool = tf.image.crop_and_resize(feature, boxes, box_ind, crop_size=pool_size)
        return pool


    class RoIPooling2D(tf.keras.Model):
        def __init__(self, pool_size):
            super(RoIPooling2D, self).__init__()
            self.pool_size = pool_size

        def call(self, feature, rois, img_size):
            return roi_pooling(feature, rois, img_size, self.pool_size)

    class RoIHead(tf.keras.Model):
        def __init__(self, n_class, pool_size):
            # n_class includes the background
            super(RoIHead, self).__init__()
            # ReLU keeps the hidden layer non-linear; a purely linear fc would
            # collapse with the two linear heads into a single linear map.
            self.fc = tf.keras.layers.Dense(4096, activation='relu')
            self.cls_loc = tf.keras.layers.Dense(n_class * 4)  # per-class box offsets
            self.score = tf.keras.layers.Dense(n_class)        # class logits
            self.n_class = n_class
            self.roi = RoIPooling2D(pool_size)

        def call(self, feature, rois, img_size, training=None):
            rois = tf.cast(rois, dtype=tf.float32)
            pool = self.roi(feature, rois, img_size)      # [n_roi, 7, 7, c]
            pool = tf.reshape(pool, [rois.shape[0], -1])  # flatten each RoI
            fc = self.fc(pool)
            roi_cls_locs = self.cls_loc(fc)  # [n_roi, n_class * 4]
            roi_scores = self.score(fc)      # [n_roi, n_class]
            return roi_cls_locs, roi_scores
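`roi_pooling` relies on `tf.image.crop_and_resize`, which bilinearly samples a fixed grid inside each normalized box; per its documentation a normalized coordinate v maps to pixel v·(size − 1). The NumPy sketch below mimics that sampling for one box on a single-channel map, to make the normalization step concrete. `crop_and_resize_2d` is a hypothetical helper, and it takes one bilinear sample per output cell rather than averaging several samples per bin as RoIAlign typically does.

```python
import numpy as np


def crop_and_resize_2d(feature, box, crop_size):
    """Bilinearly resample one normalized box [y1, x1, y2, x2] from a 2-D map.

    A normalized coordinate v maps to v * (size - 1) pixels; samples that
    fall outside the map are left at 0 (the default extrapolation value).
    """
    height, width = feature.shape
    y1, x1, y2, x2 = box
    crop_h, crop_w = crop_size

    def grid(lo, hi, n, size):
        if n == 1:  # a single sample sits at the box centre
            return np.array([0.5 * (lo + hi) * (size - 1)])
        return lo * (size - 1) + np.arange(n) * (hi - lo) * (size - 1) / (n - 1)

    out = np.zeros((crop_h, crop_w))
    for i, y in enumerate(grid(y1, y2, crop_h, height)):
        for j, x in enumerate(grid(x1, x2, crop_w, width)):
            if not (0 <= y <= height - 1 and 0 <= x <= width - 1):
                continue
            y0, x0 = int(np.floor(y)), int(np.floor(x))
            y_hi, x_hi = min(y0 + 1, height - 1), min(x0 + 1, width - 1)
            dy, dx = y - y0, x - x0
            top = feature[y0, x0] * (1 - dx) + feature[y0, x_hi] * dx
            bottom = feature[y_hi, x0] * (1 - dx) + feature[y_hi, x_hi] * dx
            out[i, j] = top * (1 - dy) + bottom * dy
    return out


# A 4x4 feature map of a 64x64 image (stride 16); the RoI covers the whole image.
feature = np.arange(16, dtype=np.float64).reshape(4, 4)
roi, img_size = np.array([0.0, 0.0, 64.0, 64.0]), (64, 64)
box = roi / np.array([img_size[0], img_size[1], img_size[0], img_size[1]])
print(crop_and_resize_2d(feature, box, (2, 2)))  # corners: [[0, 3], [12, 15]]
```

Dividing by the image size rather than the feature-map size works because both describe the same region once normalized to [0, 1].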

train.py:

    import datetime

    import tensorflow as tf

    from model.fasterrcnn import FasterRCNNTrainer, FasterRCNN
    from utils.config import Config
    from utils.data import Dataset

    # Allocate GPU memory on demand instead of reserving all of it up front.
    physical_devices = tf.config.experimental.list_physical_devices('GPU')
    assert len(physical_devices) > 0, "Not enough GPU hardware devices available"
    tf.config.experimental.set_memory_growth(physical_devices[0], True)

    config = Config()
    config._parse({})

    print("Loading data....")
    dataset = Dataset(config)

    # 21 classes including background (presumably the 20 PASCAL VOC classes); RoI output 7x7
    frcnn = FasterRCNN(21, (7, 7))
    print('model construct completed')

"""

feature\_map, rpn\_locs, rpn\_scores, rois, roi\_indices, anchor = frcnn.rpn(x, scale)

'''

feature\_map : (1, 38, 50, 256) max= 0.0578503

rpn\_locs : (1, 38, 50, 36) max= 0.058497224

rpn\_scores : (1, 17100, 2) max= 0.047915094

rois : (2000, 4) max= 791.0

roi\_indices :(2000,) max= 0

anchor : (17100, 4) max= 1154.0387

'''

bbox = bboxes

label = labels

rpn\_score = rpn\_scores

rpn\_loc = rpn\_locs

roi = rois

proposal\_target\_creator = ProposalTargetCreator()

sample\_roi, gt\_roi\_loc, gt\_roi\_label, keep\_index = proposal\_target\_creator(roi, bbox, label)

roi\_cls\_loc, roi\_score = frcnn.head(feature\_map, sample\_roi, img\_size)

'''

roi\_cls\_loc : (128, 84) max= 0.062198948

roi\_score : (128, 21) max= 0.045144305

'''

anchor\_target\_creator = AnchorTargetCreator()

gt\_rpn\_loc, gt\_rpn\_label = anchor\_target\_creator(bbox, anchor, img\_size)

rpn\_loc\_loss = \_fast\_rcnn\_loc\_loss(rpn\_loc, gt\_rpn\_loc, gt\_rpn\_label, 3)

idx\_ = gt\_rpn\_label != -1

rpn\_cls\_loss = tf.keras.losses.SparseCategoricalCrossentropy()(gt\_rpn\_label[idx\_], rpn\_score[0][idx\_])

# ROI losses

n\_sample = roi\_cls\_loc.shape[0]

roi\_cls\_loc = tf.reshape(roi\_cls\_loc, [n\_sample, -1, 4])

idx\_ = [[i,j] for i,j in zip(range(n\_sample), gt\_roi\_label)]

roi\_loc = tf.gather\_nd(roi\_cls\_loc, idx\_)

roi\_loc\_loss = \_fast\_rcnn\_loc\_loss(roi\_loc, gt\_roi\_loc, gt\_roi\_label, 1)

roi\_cls\_loss = tf.keras.losses.SparseCategoricalCrossentropy()(gt\_roi\_label, roi\_score)

"""

    model = FasterRCNNTrainer(frcnn)
    optimizer = tf.keras.optimizers.SGD(learning_rate=1e-3, momentum=0.9)

    # TensorBoard logs are written to logs/<timestamp>
    current_time = datetime.datetime.now().strftime("%Y%m%d-%H%M%S")
    log_dir = 'logs/' + current_time
    summary_writer = tf.summary.create_file_writer(log_dir)

    epochs = 12
    loss = []
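The training loop itself is not shown. For reference, the configured optimizer `tf.keras.optimizers.SGD(learning_rate=1e-3, momentum=0.9)` applies, according to the Keras documentation for `nesterov=False`, the update `velocity = momentum * velocity - learning_rate * g` followed by `w = w + velocity`. The scalar sketch below (a hypothetical `sgd_momentum_steps` on the toy loss w²/2, whose gradient is w) shows that rule in isolation; it is not the author's training code.

```python
def sgd_momentum_steps(grad_fn, w, learning_rate=1e-3, momentum=0.9, steps=3):
    """Return the parameter value after each SGD-with-momentum step (nesterov=False)."""
    velocity = 0.0
    history = []
    for _ in range(steps):
        g = grad_fn(w)
        # Velocity accumulates past gradients, decayed by `momentum`.
        velocity = momentum * velocity - learning_rate * g
        w = w + velocity
        history.append(w)
    return history


# Toy loss w**2 / 2 (gradient w); a larger learning rate makes the steps visible.
print(sgd_momentum_steps(lambda w: w, 1.0, learning_rate=0.1))  # ≈ [0.9, 0.72, 0.486]
```

The growing step size from one update to the next is the momentum term at work.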
