网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!

+ [三、数据结构](#_17)

+ - * [Customer表](#Customer_22)

* [Transaction表](#Transaction_24)

* [Store表](#Store_26)

* [Review表](#Review_28)

+ [四、项目实战](#_30)

+ - * [通过UDF自定义 MD5加密函数](#UDF_MD5_118)

* [对表内容进行检查,为数据清洗做准备](#_139)

* [开启动态分区,通过窗口函数对数据进行清洗](#_175)

* [数据分析](#_214)

* [Customer分析](#Customer_216)

* [Transaction分析](#Transaction_250)

* [Store分析](#Store_467)

* [Review分析](#Review_590)

一、前言

Hive 学习过程中的一个练习项目,如果不妥的地方或者更好的建议,欢迎指出! 😁

我们主要进行一下一些练习:

- 数据结构

- 数据清洗

- 基于Hive的数据分析

二、项目需求

首先和大家讲一下这个项目的需求:

对某零售企业最近1年门店收集的数据进行数据分析

- 潜在客户画像

- 用户消费统计

- 门店的资源利用率

- 消费的特征人群定位

- 数据的可视化展现

三、数据结构

本次练习一共用到四张表,如下:

Customer表

Transaction表

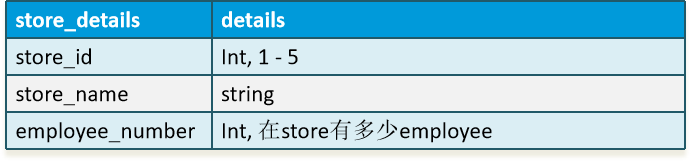

Store表

Review表

四、项目实战

Create HDFS Folder

hdfs dfs -mkdir -p /tmp/shopping/data/customer

hdfs dfs -mkdir -p /tmp/shopping/data/transaction

hdfs dfs -mkdir -p /tmp/shopping/data/store

hdfs dfs -mkdir -p /tmp/shopping/data/review

Upload the file to HDFS

hdfs dfs -put /opt/soft/data/customer_details.csv /tmp/shopping/data/customer/

hdfs dfs -put /opt/soft/data/transaction_details.csv /tmp/shopping/data/transaction/

hdfs dfs -put /opt/soft/data/store_details.csv /tmp/shopping/data/store/

hdfs dfs -put /opt/soft/data/store_review.csv /tmp/shopping/data/review/

Create database

drop database if exists shopping cascade

create database shopping

Use database

use shopping

Create external table

创建四张对应的外部表,也就是本次项目中的近源表。

create external table if not exists ext_customer_details(

customer_id string,

first_name string,

last_name string,

email string,

gender string,

address string,

country string,

language string,

job string,

credit_type string,

credit_no string

)

row format delimited fields terminated by ','

location '/tmp/shopping/data/customer/'

tblproperties('skip.header.line.count'='1')

create external table if not exists ext_transaction_details(

transaction_id string,

customer_id string,

store_id string,

price double,

product string,

buydate string,

buytime string

)

row format delimited fields terminated by ','

location '/tmp/shopping/data/transaction'

tblproperties('skip.header.line.count'='1')

create external table if not exists ext_store_details(

store_id string,

store_name string,

employee_number int

)

row format delimited fields terminated by ','

location '/tmp/shopping/data/store/'

tblproperties('skip.header.line.count'='1')

create external table if not exists ext_store_review(

transaction_id string,

store_id string,

review_score int

)

row format delimited fields terminated by ','

location '/tmp/shopping/data/review'

tblproperties('skip.header.line.count'='1')

通过UDF自定义 MD5加密函数

Create MD5 encryption function

这里通过UDF自定义 MD5加密函数 ,对地址、邮箱等信息进行加密。

-- md5 udf自定义加密函数

--add jar /opt/soft/data/md5.jar

--create temporary function md5 as 'com.shopping.services.Encryption'

--select md5('abc')

--drop temporary function encrymd5

Clean and Mask customer_details 创建明细表

create table if not exists customer_details

as select customer_id,first_name,last_name,md5(email) email,gender,md5(address) address,country,job,credit_type,md5(credit_no)

from ext_customer_details

对表内容进行检查,为数据清洗做准备

Check ext_transaction_details data

对transaction表的transaction_id进行检查,查看重复的、错误的、以及空值的数量。

这里从表中我们可以看到transaction_id存在100个重复的值。

with

t1 as (select 'countrow' as status,count(transaction_id) as val from ext_transaction_details),

t2 as (select 'distinct' as status,(count(transaction_id)-count(distinct transaction_id)) as val from ext_transaction_details),

t3 as (select 'nullrow' as status,count(transaction_id) as val from ext_transaction_details where transaction_id is null),

t4 as (select 'errorexp' as status,count(regexp_extract(transaction_id,'^([0-9]{1,4})$',0)) as val from ext_transaction_details)

select \* from t1 union all select \* from t2 union all select \* from t3 union all select \* from t4

Clean transaction_details into partition table

create table if not exists transaction_details(

transaction_id string,

customer_id string,

store_id string,

price double,

product string,

buydate string,

buytime string

)

partitioned by (partday string)

row format delimited fields terminated by ','

stored as rcfile

开启动态分区

set hive.exec.dynamic.partition=true

set hive.exec.dynamic.partition.mode=nonstrict

开启动态分区,通过窗口函数对数据进行清洗

Clear data and import data into transaction_details

-- partday 分区 transaction\_id 重复

select if(t.ct=1,transaction_id,concat(t.transaction_id,'\_',t.ct-1))

transaction_id,customer_id,store_id,price,product,buydate,buytime,date_format(buydate,'yyyy-MM')

as partday

from (select \*,row_number() over(partition by transaction_id) as ct

from ext_transaction_details) t

insert into transaction_details partition(partday)

select if(t.ct=1,transaction_id,concat(t.transaction_id,'\_',t.ct-1)) transaction_id,customer_id,store_id,price,product,buydate,buytime,date_format(regexp_replace(buydate,'/','-'),'yyyy-MM')

as partday from (select \*,row_number() over(partition by transaction_id) as ct

from ext_transaction_details) t

- row_number() over(partition by transaction_id) 窗口函数 :从1开始,按照顺序,生成分组内记录的序列,row_number()的值不会存在重复,当排序的值相同时,按照表中记录的顺序进行排列 这里我们对分组的transaction_id

if(t.ct=1,transaction_id,concat(t.transaction_id,'_',t.ct-1))如果满足ct=1,就是transaction_id,否则进行字符串拼接生成新的id

Clean store_review table

create table store_review

as select transaction_id,store_id,nvl(review_score,ceil(rand()\*5))

as review_score from ext_store_review

NVL(E1, E2)的功能为:如果E1为NULL,则函数返回E2,否则返回E1本身。

我们可以看到表中的数据存在空值,通过NVL函数对数据进行填充。

show tables

通过清洗后的近源表和明细表如上。

数据分析

Customer分析

- 找出顾客最常用的信用卡

select credit_type,count(credit_type) as peoplenum from customer_details

group by credit_type order by peoplenum desc limit 1

- 找出客户资料中排名前五的职位名称

select job,count(job) as jobnum from customer_details

group by job

order by jobnum desc

limit 5

- 在美国女性最常用的信用卡

select credit_type,count(credit_type) as femalenum from customer_details

where gender='Female'

group by credit_type

order by femalenum desc

limit 1

- 按性别和国家进行客户统计

select count(\*) as customernum,country,gender from customer_details

group by country,gender

Transaction分析

- 计算每月总收入

select partday,sum(price) as countMoney from transaction_details group by partday

- 计算每个季度的总收入

Create Quarter Macro 定义季度宏,将时间按季度进行划分

create temporary macro

calQuarter(dt string)

concat(year(regexp_replace(dt,'/','-')),'年第',ceil(month(regexp_replace(dt,'/','-'))/3),'季度')

select calQuarter(buydate) as quarter,sum(price) as sale

from transaction_details group by calQuarter(buydate)

- 按年计算总收入

create temporary macro calYear(dt string) year(regexp_replace(dt,'/','-'))

select calYear(buydate) as year,sum(price) as sale from transaction_details group by calYear(buydate)

- 按工作日计算总收入

create temporary macro calWeek(dt string) concat('星期',dayofweek(regexp_replace(dt,'/','-'))-1)

select concat('星期',dayofweek(regexp_replace(buydate,'/','-'))-1) as week,sum(price) as sale

from transaction_details group by dayofweek(regexp_replace(buydate,'/','-'))

- 按时间段计算总收入(需要清理数据)

select concat(regexp_extract(buytime,'[0-9]{1,2}',0),'时') as time,sum(price) as sale from transaction_details group by regexp_extract(buytime,'[0-9]{1,2}',0)

- 按时间段计算平均消费

Time macro

create temporary macro calTime(time string) if(split(time,' ')[1]='PM',regexp_extract(time,'[0-9]{1,2}',0)+12,

if(split(time,' ')[1]='AM',regexp_extract(time,'[0-9]{1,2}',0),split(time,':')[0]))

select calTime(buytime) as time,sum(price) as sale from transaction_details group by calTime(buytime)

--define time bucket

--early morning: (5:00, 8:00]

--morning: (8:00, 11:00]

--noon: (11:00, 13:00]

--afternoon: (13:00, 18:00]

--evening: (18:00, 22:00]

--night: (22:00, 5:00] --make it as else, since it is not liner increasing

--We also format the time. 1st format time to 19:23 like, then compare, then convert minites to hours

with

t1 as

(select calTime(buytime) as time,sum(price) as sale from transaction_details group by calTime(buytime) order by time),

t2 as

(select if(time>5 and time<=8,'early morning',if(time >8 and time<=11,'moring',if(time>11 and time <13,'noon',

if(time>13 and time <=18,'afternoon',if(time >18 and time <=22,'evening','night'))))) as sumtime,sale

from t1)

select sumtime,sum(sale) from t2

group by sumtime

- 按工作日计算平均消费

select concat('星期',dayofweek(regexp_replace(buydate,'/','-'))-1)

as week,avg(price) as sale from transaction_details

where dayofweek(regexp_replace(buydate,'/','-'))-1 !=0 and dayofweek(regexp_replace(buydate,'/','-'))-1 !=6

group by dayofweek(regexp_replace(buydate,'/','-'))

- 计算年、月、日的交易总数

select buydate as month,count(\*) as salenum from transaction_details group by buydate

- 找出交易量最大的10个客户

select c.customer_id,c.first_name,c.last_name,count(c.customer_id) as custnum from customer_details c

inner join transaction_details t

on c.customer_id=t.customer_id

group by c.customer_id,c.first_name,c.last_name

order by custnum desc

limit 10

- 找出消费最多的前10位顾客

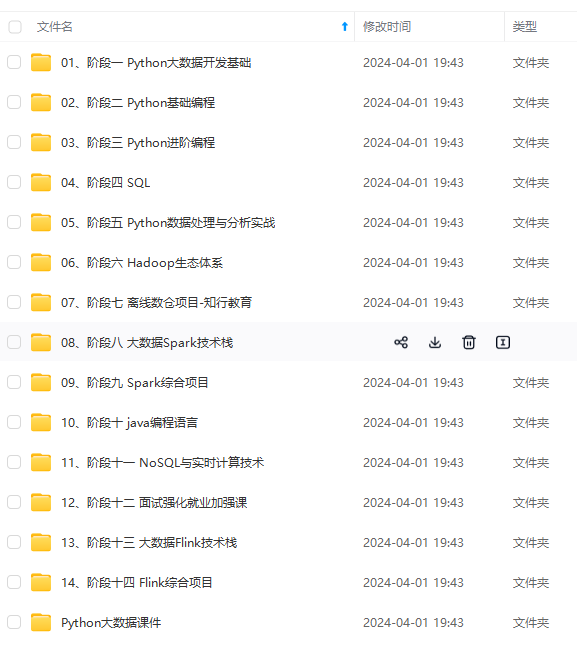

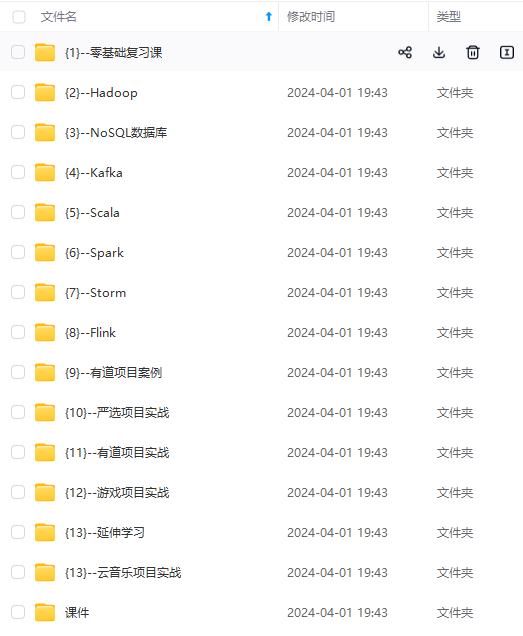

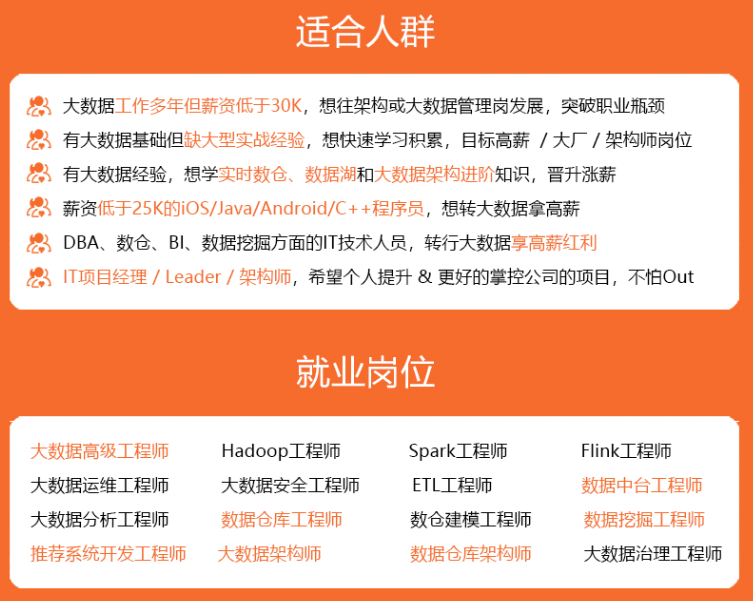

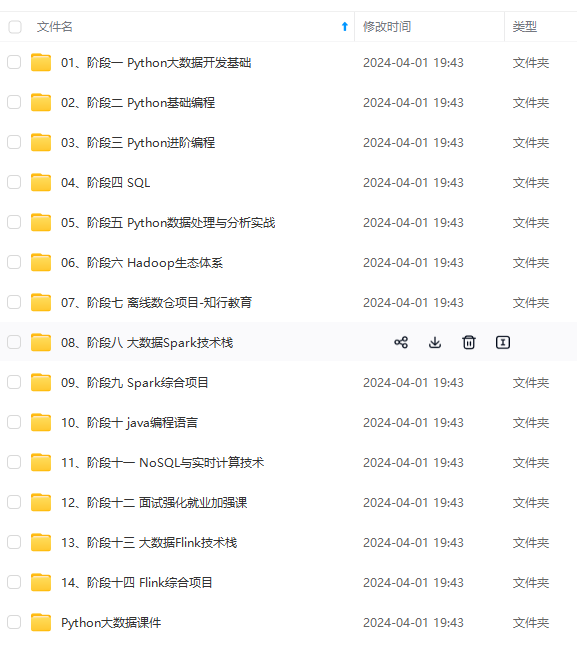

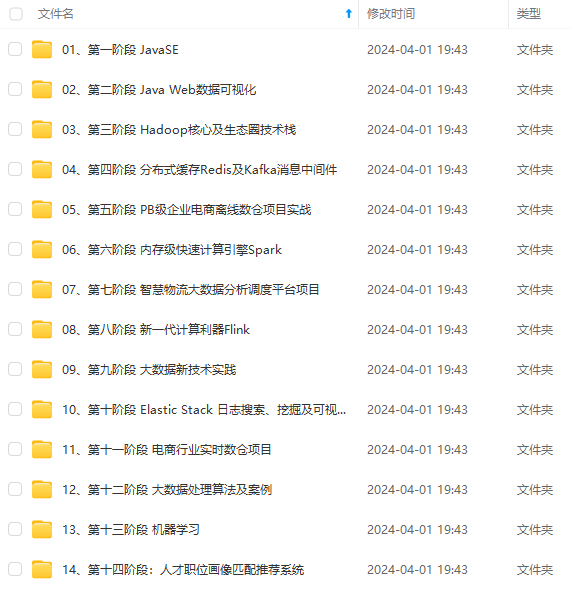

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

t(c.customer_id) as custnum from customer_details c

inner join transaction_details t

on c.customer_id=t.customer_id

group by c.customer_id,c.first_name,c.last_name

order by custnum desc

limit 10

* 找出消费最多的前10位顾客

[外链图片转存中...(img-pKUto4JA-1715698826389)]

[外链图片转存中...(img-hHwiSdAc-1715698826389)]

[外链图片转存中...(img-CvHdPF34-1715698826390)]

**既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!**

**由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新**

**[需要这份系统化资料的朋友,可以戳这里获取](https://bbs.csdn.net/topics/618545628)**

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?