
    kind: ServiceAccount
    metadata:
      name: prometheus
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    # Note: rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22;
    # use rbac.authorization.k8s.io/v1 on newer clusters.
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRole
    metadata:
      name: prometheus
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    rules:
      - apiGroups:
          - ""
        resources:
          - nodes
          - nodes/metrics
          - services
          - endpoints
          - pods
        verbs:
          - get
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - configmaps
        verbs:
          - get
      - nonResourceURLs:
          - "/metrics"
        verbs:
          - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: prometheus
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus
    subjects:
      - kind: ServiceAccount
        name: prometheus
        namespace: kube-system

Create the resources:

    [root@k8s-master prometheus-k8s]# kubectl apply -f prometheus-rbac.yaml

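### 3.4 Configuration management

Use a ConfigMap to store configuration that does not need to be encrypted.

Change the IP addresses under the kubernetes-nodes targets to match your own nodes; nothing else needs to be changed.

    [root@k8s-master prometheus-k8s]# vim prometheus-configmap.yaml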

    # Prometheus configuration format https://prometheus.io/docs/prometheus/latest/configuration/configuration/
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: prometheus-config
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: EnsureExists
    data:
      prometheus.yml: |
        rule_files:
          - /etc/config/rules/*.rules

        scrape_configs:
          - job_name: prometheus
            static_configs:
              - targets:
                  - localhost:9090

          - job_name: kubernetes-nodes
            scrape_interval: 30s
            static_configs:
              - targets:
                  - 192.168.73.135:9100  # change the IP addresses to match your own nodes
                  - 192.168.73.138:9100
                  - 192.168.73.139:9100
                  - 192.168.73.140:9100

          - job_name: kubernetes-apiservers
            kubernetes_sd_configs:
              - role: endpoints
            relabel_configs:
              - action: keep
                regex: default;kubernetes;https
                source_labels:
                  - __meta_kubernetes_namespace
                  - __meta_kubernetes_service_name
                  - __meta_kubernetes_endpoint_port_name
            scheme: https
            tls_config:
              ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
              insecure_skip_verify: true
            bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

          - job_name: kubernetes-nodes-kubelet
            kubernetes_sd_configs:
              - role: node
            relabel_configs:
              - action: labelmap
                regex: __meta_kubernetes_node_label_(.+)
            scheme: https
            tls_config:
              ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
              insecure_skip_verify: true
            bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

          - job_name: kubernetes-nodes-cadvisor
            kubernetes_sd_configs:
              - role: node
            relabel_configs:
              - action: labelmap
                regex: __meta_kubernetes_node_label_(.+)
              - target_label: __metrics_path__
                replacement: /metrics/cadvisor
            scheme: https
            tls_config:
              ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
              insecure_skip_verify: true
            bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

          - job_name: kubernetes-service-endpoints
            kubernetes_sd_configs:
              - role: endpoints
            relabel_configs:
              - action: keep
                regex: true
                source_labels:
                  - __meta_kubernetes_service_annotation_prometheus_io_scrape
              - action: replace
                regex: (https?)
                source_labels:
                  - __meta_kubernetes_service_annotation_prometheus_io_scheme
                target_label: __scheme__
              - action: replace
                regex: (.+)
                source_labels:
                  - __meta_kubernetes_service_annotation_prometheus_io_path
                target_label: __metrics_path__
              - action: replace
                regex: ([^:]+)(?::\d+)?;(\d+)
                replacement: $1:$2
                source_labels:
                  - __address__
                  - __meta_kubernetes_service_annotation_prometheus_io_port
                target_label: __address__
              - action: labelmap
                regex: __meta_kubernetes_service_label_(.+)
              - action: replace
                source_labels:
                  - __meta_kubernetes_namespace
                target_label: kubernetes_namespace
              - action: replace
                source_labels:
                  - __meta_kubernetes_service_name
                target_label: kubernetes_name

          - job_name: kubernetes-services
            kubernetes_sd_configs:
              - role: service
            metrics_path: /probe
            params:
              module:
                - http_2xx
            relabel_configs:
              - action: keep
                regex: true
                source_labels:
                  - __meta_kubernetes_service_annotation_prometheus_io_probe
              - source_labels:
                  - __address__
                target_label: __param_target
              - replacement: blackbox
                target_label: __address__
              - source_labels:
                  - __param_target
                target_label: instance
              - action: labelmap
                regex: __meta_kubernetes_service_label_(.+)
              - source_labels:
                  - __meta_kubernetes_namespace
                target_label: kubernetes_namespace
              - source_labels:
                  - __meta_kubernetes_service_name
                target_label: kubernetes_name

          - job_name: kubernetes-pods
            kubernetes_sd_configs:
              - role: pod
            relabel_configs:
              - action: keep
                regex: true
                source_labels:
                  - __meta_kubernetes_pod_annotation_prometheus_io_scrape
              - action: replace
                regex: (.+)
                source_labels:
                  - __meta_kubernetes_pod_annotation_prometheus_io_path
                target_label: __metrics_path__
              - action: replace
                regex: ([^:]+)(?::\d+)?;(\d+)
                replacement: $1:$2
                source_labels:
                  - __address__
                  - __meta_kubernetes_pod_annotation_prometheus_io_port
                target_label: __address__
              - action: labelmap
                regex: __meta_kubernetes_pod_label_(.+)
              - action: replace
                source_labels:
                  - __meta_kubernetes_namespace
                target_label: kubernetes_namespace
              - action: replace
                source_labels:
                  - __meta_kubernetes_pod_name
                target_label: kubernetes_pod_name

        alerting:
          alertmanagers:
            - static_configs:
                - targets: ["alertmanager:80"]

Create the ConfigMap:

    [root@k8s-master prometheus-k8s]# kubectl apply -f prometheus-configmap.yaml
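The relabel rules in this ConfigMap are easier to follow with a concrete input. The sketch below imitates two of them in Python: the `keep` rule on the `prometheus.io/scrape` annotation and the `__address__` rewrite that swaps in the port from the `prometheus.io/port` annotation. It is an illustration, not Prometheus code. The function names and the sample address are hypothetical, and it assumes that Prometheus joins `source_labels` with `;` and anchors the regex at both ends.

```python
import re

# Prometheus anchors relabel regexes at both ends, so re.fullmatch is the
# closest standard-library equivalent.
ADDRESS_RE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def keep_target(scrape_annotation):
    """Imitate `action: keep` with `regex: true` on the scrape annotation."""
    return re.fullmatch(r"true", scrape_annotation) is not None


def rewrite_address(address, port_annotation):
    """Imitate the `__address__` rule: join the labels with ';', apply $1:$2."""
    joined = f"{address};{port_annotation}"
    match = ADDRESS_RE.fullmatch(joined)
    if match is None:
        # When the regex does not match, `replace` leaves the label unchanged.
        return address
    return f"{match.group(1)}:{match.group(2)}"


if __name__ == "__main__":
    # Hypothetical pod address; the annotation overrides the port.
    print(rewrite_address("10.244.1.7:8080", "9102"))
    # A missing annotation is an empty string, so the address stays as it is.
    print(rewrite_address("10.244.1.7:8080", ""))
```

In other words, a Pod or Service is scraped only when it carries `prometheus.io/scrape: "true"`, and the scrape goes to the annotated port when one is set.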

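### 3.5 Deploying Prometheus as a stateful workload

A StorageClass is used here for dynamic provisioning so that the Prometheus data is persisted. For how to set that up, see the earlier article 《k8s中的NFS动态存储供给》 (NFS dynamic storage provisioning in k8s). Alternatively, prometheus-statefulset-static-pv.yaml persists the data with static provisioning.

    [root@k8s-master prometheus-k8s]# vim prometheus-statefulset.yaml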

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: prometheus
      namespace: kube-system
      labels:
        k8s-app: prometheus
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        version: v2.2.1
    spec:
      serviceName: "prometheus"
      replicas: 1
      podManagementPolicy: "Parallel"
      updateStrategy:
        type: "RollingUpdate"
      selector:
        matchLabels:
          k8s-app: prometheus
      template:
        metadata:
          labels:
            k8s-app: prometheus
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          priorityClassName: system-cluster-critical
          serviceAccountName: prometheus
          initContainers:
            - name: "init-chown-data"
              image: "busybox:latest"
              imagePullPolicy: "IfNotPresent"
              command: ["chown", "-R", "65534:65534", "/data"]
              volumeMounts:
                - name: prometheus-data
                  mountPath: /data
                  subPath: ""
          containers:
            - name: prometheus-server-configmap-reload
              image: "jimmidyson/configmap-reload:v0.1"
              imagePullPolicy: "IfNotPresent"
              args:
                - --volume-dir=/etc/config
                - --webhook-url=http://localhost:9090/-/reload
              volumeMounts:
                - name: config-volume
                  mountPath: /etc/config
                  readOnly: true
              resources:
                limits:
                  cpu: 10m
                  memory: 10Mi
                requests:
                  cpu: 10m
                  memory: 10Mi

            - name: prometheus-server
              image: "prom/prometheus:v2.2.1"
              imagePullPolicy: "IfNotPresent"
              args:
                - --config.file=/etc/config/prometheus.yml
                - --storage.tsdb.path=/data
                - --web.console.libraries=/etc/prometheus/console_libraries
                - --web.console.templates=/etc/prometheus/consoles
                - --web.enable-lifecycle
              ports:
                - containerPort: 9090
              readinessProbe:
                httpGet:
                  path: /-/ready
                  port: 9090
                initialDelaySeconds: 30
                timeoutSeconds: 30
              livenessProbe:
                httpGet:
                  path: /-/healthy
                  port: 9090
                initialDelaySeconds: 30
                timeoutSeconds: 30
              # based on 10 running nodes with 30 pods each
              resources:
                limits:
                  cpu: 200m
                  memory: 1000Mi
                requests:
                  cpu: 200m
                  memory: 1000Mi
              volumeMounts:
                - name: config-volume
                  mountPath: /etc/config
                - name: prometheus-data
                  mountPath: /data
                  subPath: ""
                - name: prometheus-rules
                  mountPath: /etc/config/rules
          terminationGracePeriodSeconds: 300
          volumes:
            - name: config-volume
              configMap:
                name: prometheus-config
            - name: prometheus-rules
              configMap:
                name: prometheus-rules
      volumeClaimTemplates:
        - metadata:
            name: prometheus-data
          spec:
            storageClassName: managed-nfs-storage  # change to the name of your own StorageClass
            accessModes:
              - ReadWriteOnce
            resources:
              requests:
                storage: "16Gi"

Create the StatefulSet:

    [root@k8s-master prometheus-k8s]# kubectl apply -f prometheus-statefulset.yaml

Check the status:

    [root@k8s-master prometheus-k8s]# kubectl get pod -n kube-system
    NAME                                    READY   STATUS    RESTARTS   AGE
    coredns-5bd5f9dbd9-wv45t                1/1     Running   1          8d
    kubernetes-dashboard-7d77666777-d5ng4   1/1     Running   5          14d
    prometheus-0                            2/2     Running   6          14d

The output includes a pod named prometheus-0, which is the stateful deployment just created through the StatefulSet controller. It is healthy when both of its containers are Running. If they are not, run kubectl describe pod prometheus-0 -n kube-system to see the error details.
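The same check can be automated. The following is a minimal sketch that reads the text printed by `kubectl get pod` and lists any pod that is not Running or whose READY count is incomplete. The function name `unready_pods` is hypothetical, the sample text is the output shown above, and the parsing assumes the default column layout (NAME, READY, STATUS first).

```python
def unready_pods(kubectl_output):
    """Return names of pods that are not Running with all containers ready."""
    problems = []
    lines = [line for line in kubectl_output.splitlines() if line.strip()]
    for line in lines[1:]:  # skip the header row
        name, ready, status = line.split()[:3]
        ready_count, total = ready.split("/")
        if status != "Running" or ready_count != total:
            problems.append(name)
    return problems


SAMPLE = """\
NAME                                    READY   STATUS    RESTARTS   AGE
coredns-5bd5f9dbd9-wv45t                1/1     Running   1          8d
kubernetes-dashboard-7d77666777-d5ng4   1/1     Running   5          14d
prometheus-0                            2/2     Running   6          14d
"""

if __name__ == "__main__":
    print(unready_pods(SAMPLE))
```

For the output above the list is empty; a pod showing `1/2` in the READY column would be reported by name.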

### 3.6 Creating a Service to expose the access port

A NodePort with a fixed port is used here instead of a random port, which makes access easier.

    [root@k8s-master prometheus-k8s]# vim prometheus-service.yaml

    kind: Service
    apiVersion: v1
    metadata:
      name: prometheus
      namespace: kube-system
      labels:
        kubernetes.io/name: "Prometheus"
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      type: NodePort
      ports:
        - name: http
          port: 9090
          protocol: TCP
          targetPort: 9090
          nodePort: 30090  # fixed externally accessible port
      selector:
        k8s-app: prometheus

Create the Service:

    [root@k8s-master prometheus-k8s]# kubectl apply -f prometheus-service.yaml

Check:

    [root@k8s-master prometheus-k8s]# kubectl get pod,svc -n kube-system
    NAME                                        READY   STATUS    RESTARTS   AGE
    pod/coredns-5bd5f9dbd9-wv45t                1/1     Running   1          8d
    pod/kubernetes-dashboard-7d77666777-d5ng4   1/1     Running   5          14d
    pod/prometheus-0                            2/2     Running   6          14d

    NAME                           TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)          AGE
    service/kube-dns               ClusterIP   10.0.0.2     <none>        53/UDP,53/TCP    13d
    service/kubernetes-dashboard   NodePort    10.0.0.127   <none>        443:30001/TCP    16d
    service/prometheus             NodePort    10.0.0.33    <none>        9090:30090/TCP   13d

### 3.7 Web access

Access Prometheus through any NodeIP plus the port, at http://NodeIP:Port. In this example that is `http://192.168.73.139:30090`

A successful visit shows the following page:
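Besides the browser, the same NodePort also serves the Prometheus HTTP API, which is a quick way to confirm that targets are being scraped. The sketch below builds an instant-query URL for `/api/v1/query` and extracts the value per `instance` label from the JSON response. The helper names are hypothetical, the address is the example NodeIP and port used above, and the `query` function needs network access to that address.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PROMETHEUS = "http://192.168.73.139:30090"  # NodeIP:nodePort from this example


def query_url(base, promql):
    """Build the URL for an instant query against /api/v1/query."""
    return f"{base}/api/v1/query?{urlencode({'query': promql})}"


def instant_values(payload):
    """Map each series' `instance` label to its sample value."""
    if payload.get("status") != "success":
        raise ValueError(f"query failed: {payload}")
    return {
        series["metric"].get("instance", ""): float(series["value"][1])
        for series in payload["data"]["result"]
    }


def query(base, promql):
    """Run the query over HTTP; requires the Prometheus Service to be reachable."""
    with urlopen(query_url(base, promql), timeout=10) as response:
        return instant_values(json.load(response))


if __name__ == "__main__":
    # `up` is 1 for every target whose last scrape succeeded.
    print(query_url(PROMETHEUS, "up"))
```

Calling `query(PROMETHEUS, "up")` against a running deployment would return one entry per scrape target, with 1.0 meaning the last scrape succeeded.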

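### 4 Deploying Grafana on the K8S platform

As the web access above shows, the UI built into Prometheus offers few features and only limited visualization, which is not enough for day-to-day monitoring. Prometheus is therefore usually combined with Grafana to visualize the data.

Official sites:

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/prometheus

https://grafana.com/grafana/download

The project downloaded earlier already contains a YAML file for Grafana; adjust it to suit your environment.

### 4.1 Deploying Grafana with a StatefulSet

    [root@k8s-master prometheus-k8s]# vim grafana.yaml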

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: grafana
      namespace: kube-system
    spec:
      serviceName: "grafana"
      replicas: 1
      selector:
        matchLabels:
          app: grafana
      template:
        metadata:
          labels:
            app: grafana
        spec:
          containers:
            - name: grafana
              image: grafana/grafana
              ports:
                - containerPort: 3000
                  protocol: TCP
              resources:
                limits:
                  cpu: 100m
                  memory: 256Mi
                requests:
                  cpu: 100m
                  memory: 256Mi
              volumeMounts:
                - name: grafana-data
                  mountPath: /var/lib/grafana
                  subPath: grafana
          securityContext:
            fsGroup: 472
            runAsUser: 472
      volumeClaimTemplates:
        - metadata:
            name: grafana-data
          spec:
            storageClassName: managed-nfs-storage  # same StorageClass as Prometheus
            accessModes:
              - ReadWriteOnce
            resources:
              requests:
                storage: "1Gi"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: grafana
      namespace: kube-system
    spec:
      type: NodePort
      ports:
        - port: 80
          targetPort: 3000
          nodePort: 30091
      selector:
        app: grafana

### 4.2 Grafana的web访问

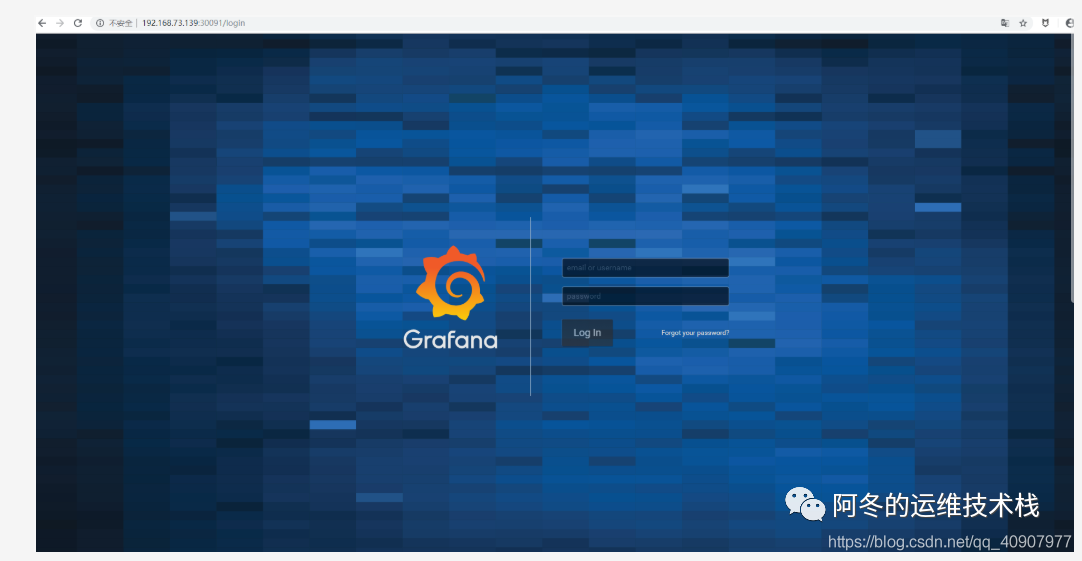

使用任意一个NodeIP加端口进行访问,访问地址:http://NodeIP:Port ,此例就是:http://192.168.73.139:30091

成功访问界面如下,会需要进行账号密码登陆,默认账号密码都为admin,登陆之后会让修改密码

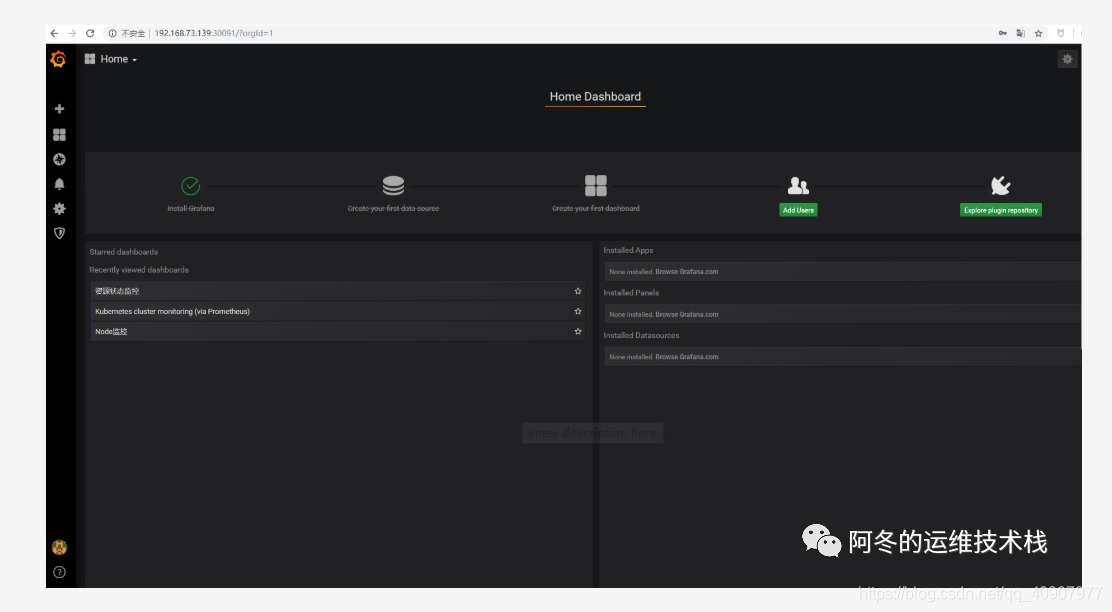

登陆之后的界面如下

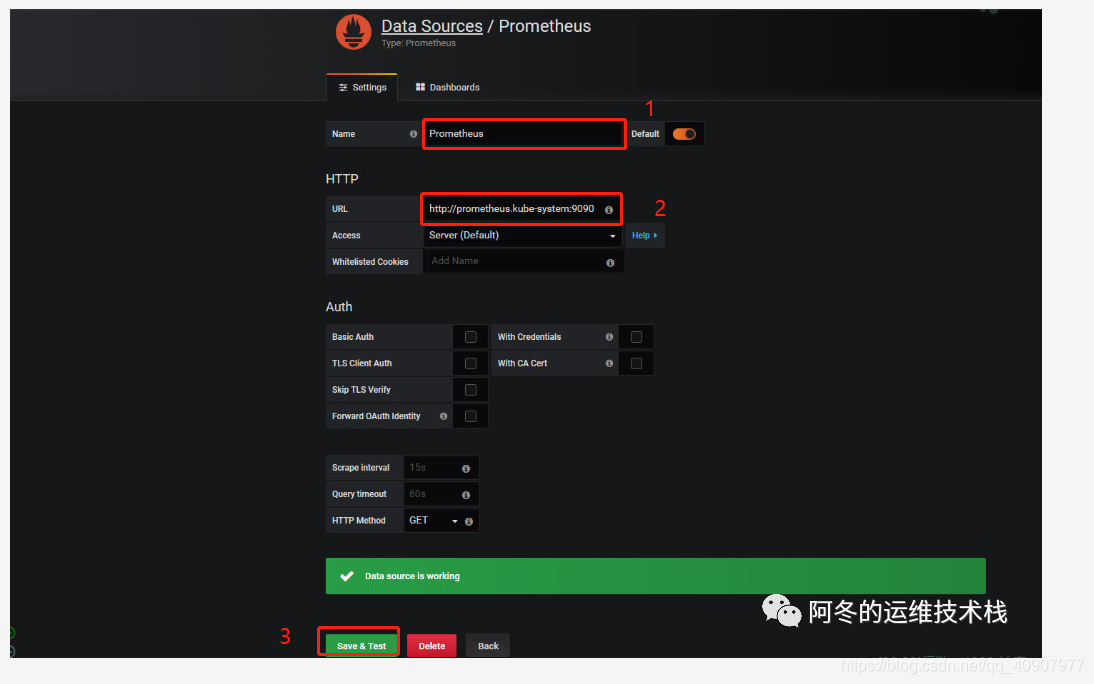

第一步需要进行数据源添加,点击create your first data source数据库图标,根据下图所示进行添加即可

第二步,添加完了之后点击底部的绿色的Save&Test,会成功提示Data sourse is working,则表示数据源添加成功
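The data source can also be registered without the UI, through Grafana's HTTP API (`POST /api/datasources`). The sketch below only builds the request and does not send it. Everything specific in it is an assumption rather than something taken from the screenshots: the data source name, the in-cluster URL `http://prometheus.kube-system:9090` (derived from the Service name, namespace, and port defined earlier), and the default admin/admin credentials, which should be replaced once the password has been changed.

```python
import base64
import json
from urllib.request import Request


def datasource_request(grafana_url, prometheus_url, user, password):
    """Build (but do not send) a request that registers Prometheus in Grafana."""
    body = {
        "name": "Prometheus",      # hypothetical display name
        "type": "prometheus",
        "url": prometheus_url,
        "access": "proxy",         # Grafana's backend performs the queries
        "isDefault": True,
    }
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return Request(
        f"{grafana_url}/api/datasources",
        data=json.dumps(body).encode(),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = datasource_request(
        "http://192.168.73.139:30091",          # Grafana NodePort from this example
        "http://prometheus.kube-system:9090",   # assumed in-cluster Service address
        "admin",
        "admin",
    )
    print(request.get_method(), request.full_url)
```

Passing the returned object to `urllib.request.urlopen` would submit it. Doing the step through the UI as described above achieves the same result.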

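### 4.3 Monitoring Pods, Nodes, and resource objects in a K8S cluster

1) Pod

The kubelet on each node uses the metrics endpoint provided by cAdvisor to obtain performance metrics for all Pods and containers on that node. It is enabled by default once kubelet is installed.

Exposed endpoints (10255 is kubelet's read-only port and serves plain HTTP; 10250 is the authenticated HTTPS port):

http://NodeIP:10255/metrics/cadvisor

https://NodeIP:10250/metrics/cadvisor

2) Node

Use the `node_exporter` collector to gather node resource utilization.

https://github.com/prometheus/node_exporter

Documentation: https://prometheus.io/docs/guides/node-exporter/

Use the node_exporter.sh script to deploy the `node_exporter` collector on every server. The script can be run as-is, without modification.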

    [root@k8s-master prometheus-k8s]# cat node_exporter.sh
    #!/bin/bash

    wget https://github.com/prometheus/node_exporter/releases/download/v0.17.0/node_exporter-0.17.0.linux-amd64.tar.gz

    tar zxf node_exporter-0.17.0.linux-amd64.tar.gz
    mv node_exporter-0.17.0.linux-amd64 /usr/local/node_exporter

    cat <<EOF >/usr/lib/systemd/system/node_exporter.service
    [Unit]
    Description=https://prometheus.io

    [Service]
    Restart=on-failure
    ExecStart=/usr/local/node_exporter/node_exporter --collector.systemd --collector.systemd.unit-whitelist=(docker|kubelet|kube-proxy|flanneld).service

    [Install]
    WantedBy=multi-user.target
    EOF

    systemctl daemon-reload
    systemctl enable node_exporter
    systemctl restart node_exporter

    [root@k8s-master prometheus-k8s]# ./node_exporter.sh

Check that the `node_exporter` process is running:

    [root@k8s-master prometheus-k8s]# ps -ef|grep node_exporter
    root       6227      1  0 Oct08 ?        00:06:43 /usr/local/node_exporter/node_exporter --collector.systemd --collector.systemd.unit-whitelist=(docker|kubelet|kube-proxy|flanneld).service
    root     118269 117584  0 23:27 pts/0    00:00:00 grep --color=auto node_exporter
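Once the process is up, node_exporter serves its metrics as plain text on port 9100, the port the kubernetes-nodes scrape job targets. To make that format less opaque, here is a minimal sketch of a reader for it. The sample lines are illustrative rather than captured from a real node, the function name is hypothetical, and the parser deliberately ignores optional timestamps and escaped characters in label values.

```python
def parse_metrics(text):
    """Parse Prometheus text exposition lines into {series: value}."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        series, value = line.rsplit(" ", 1)
        samples[series] = float(value)
    return samples


# Illustrative sample, not real output from the nodes in this article.
SAMPLE = """\
# HELP node_load1 1m load average.
# TYPE node_load1 gauge
node_load1 0.21
# HELP node_cpu_seconds_total Seconds the cpus spent in each mode.
# TYPE node_cpu_seconds_total counter
node_cpu_seconds_total{cpu="0",mode="idle"} 12345.6
"""

if __name__ == "__main__":
    for series, value in parse_metrics(SAMPLE).items():
        print(series, value)
```

Fetching `http://NodeIP:9100/metrics` from any of the servers and feeding the body to `parse_metrics` is a simple way to confirm the collector is answering before looking for the target in Prometheus.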

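3) Resource objects

kube-state-metrics collects state information about the various resource objects in k8s. It only needs to be deployed from the master node.

https://github.com/kubernetes/kube-state-metrics

Create the RBAC YAML that grants kube-state-metrics the permissions it needs:

    [root@k8s-master prometheus-k8s]# vim kube-state-metrics-rbac.yaml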

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: kube-state-metrics
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: kube-state-metrics
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    rules:
      - apiGroups: [""]
        resources:
          - configmaps
          - secrets
          - nodes
          - pods
          - services
          - resourcequotas
          - replicationcontrollers
          - limitranges
          - persistentvolumeclaims
          - persistentvolumes
          - namespaces
          - endpoints
        verbs: ["list", "watch"]
      - apiGroups: ["extensions"]
        resources:
          - daemonsets
          - deployments
          - replicasets
        verbs: ["list", "watch"]
      - apiGroups: ["apps"]
        resources:
          - statefulsets
        verbs: ["list", "watch"]
      - apiGroups: ["batch"]
        resources:
          - cronjobs
          - jobs
        verbs: ["list", "watch"]
      - apiGroups: ["autoscaling"]
        resources:
          - horizontalpodautoscalers
        verbs: ["list", "watch"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: kube-state-metrics-resizer
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    rules:
      - apiGroups: [""]
        resources:
          - pods
        verbs: ["get"]
      - apiGroups: ["extensions"]
        resources:
          - deployments
        resourceNames: ["kube-state-metrics"]
        verbs: ["get", "update"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: kube-state-metrics
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: kube-state-metrics
    subjects:
      - kind: ServiceAccount
        name: kube-state-metrics
        namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: kube-state-metrics
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: kube-state-metrics-resizer
    subjects:
      - kind: ServiceAccount
        name: kube-state-metrics
        namespace: kube-system

    [root@k8s-master prometheus-k8s]# kubectl apply -f kube-state-metrics-rbac.yaml

Write the Deployment and ConfigMap YAML to deploy the metrics pod; no changes are needed:

    [root@k8s-master prometheus-k8s]# cat kube-state-metrics-deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: kube-state-metrics
      namespace: kube-system
      labels:
        k8s-app: kube-state-metrics
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        version: v1.3.0
    spec:
      selector:
        matchLabels:
          k8s-app: kube-state-metrics
          version: v1.3.0
      replicas: 1
      template:
        metadata:
          labels:
            k8s-app: kube-state-metrics
            version: v1.3.0
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          priorityClassName: system-cluster-critical
          serviceAccountName: kube-state-metrics
          containers:
            - name: kube-state-metrics
              image: lizhenliang/kube-state-metrics:v1.3.0
              ports:
                - name: http-metrics
                  containerPort: 8080
                - name: telemetry
                  containerPort: 8081
              readinessProbe:
                httpGet:
                  path: /healthz
                  port: 8080
                initialDelaySeconds: 5
                timeoutSeconds: 5
            - name: addon-resizer
              image: lizhenliang/addon-resizer:1.8.3
              resources:
                limits:
                  cpu: 100m
                  memory: 30Mi
                requests:
                  cpu: 100m
                  memory: 30Mi
              env:
                - name: MY_POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: MY_POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
              volumeMounts:
                - name: config-volume
                  mountPath: /etc/config
              command:
                - /pod_nanny
                - --config-dir=/etc/config
                - --container=kube-state-metrics
                - --cpu=100m
                - --extra-cpu=1m
                - --memory=100Mi
                - --extra-memory=2Mi
                - --threshold=5
                - --deployment=kube-state-metrics
          volumes:
            - name: config-volume
              configMap:
                name: kube-state-metrics-config
    ---
    # Config map for resource configuration.
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: kube-state-metrics-config
      namespace: kube-system
      labels:
        k8s-app: kube-state-metrics
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    data:
      NannyConfiguration: |-
        apiVersion: nannyconfig/v1alpha1
        kind: NannyConfiguration

    [root@k8s-master prometheus-k8s]# kubectl apply -f kube-state-metrics-deployment.yaml

2. Write the Service YAML to expose the metrics ports
