
```yaml
    - name: kafka-broker-2
      host: "local-168-182-112"
      path: "/opt/bigdata/servers/kraft/kafka-broker/data1"

service:
  type: NodePort
  nodePorts:
    # The default NodePort range is 30000-32767
    client: "32181"
    tls: "32182"

## Enable Prometheus to access the Kafka metrics endpoints
metrics:
  enabled: true

kraft:
  enabled: true
```
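Kubernetes rejects a NodePort Service whose `nodePort` lies outside the API server's configured range (30000-32767 unless it is overridden with the kube-apiserver flag `--service-node-port-range`), and the same node port cannot be allocated twice. Below is a minimal Python sketch that checks the `service.nodePorts` values before installing. `check_node_ports` is a hypothetical helper, and it assumes the default range.

```python
# Default kube-apiserver NodePort range (inclusive). Assumption: clusters
# can change it with the --service-node-port-range flag.
DEFAULT_NODEPORT_RANGE = (30000, 32767)


def check_node_ports(node_ports, port_range=DEFAULT_NODEPORT_RANGE):
    """Return a list of problems in a {name: port} mapping.

    Ports may be strings (as quoted in values.yaml) or ints.
    An empty list means every port is in range and unique.
    """
    low, high = port_range
    problems = []
    seen = {}  # port -> first name that used it
    for name, raw in node_ports.items():
        port = int(raw)
        if not low <= port <= high:
            problems.append(f"{name}: {port} is outside {low}-{high}")
        if port in seen:
            problems.append(f"{name}: {port} is already used by {seen[port]}")
        else:
            seen[port] = name
    return problems


if __name__ == "__main__":
    # The values from the service.nodePorts section above.
    print(check_node_ports({"client": "32181", "tls": "32182"}))  # prints []
```

Keep in mind that uniqueness applies cluster-wide, so other Services that already hold 32181 or 32182 would also cause a conflict.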

Add the following files:

* **kafka/templates/broker/pv.yaml**

```yaml
{{- range .Values.broker.persistence.local }}
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: {{ .name }}
  labels:
    name: {{ .name }}
spec:
  storageClassName: {{ $.Values.broker.persistence.storageClass }}
  capacity:
    storage: {{ $.Values.broker.persistence.size }}
  accessModes:
    - ReadWriteOnce
  local:
    path: {{ .path }}
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - {{ .host }}
{{- end }}
```

* **kafka/templates/broker/storage-class.yaml**

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: {{ .Values.broker.persistence.storageClass }}
provisioner: kubernetes.io/no-provisioner
```

* **kafka/templates/controller-eligible/pv.yaml**

```yaml
{{- range .Values.controller.persistence.local }}
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: {{ .name }}
  labels:
    name: {{ .name }}
spec:
  storageClassName: {{ $.Values.controller.persistence.storageClass }}
  capacity:
    storage: {{ $.Values.controller.persistence.size }}
  accessModes:
    - ReadWriteOnce
  local:
    path: {{ .path }}
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - {{ .host }}
{{- end }}
```

* **kafka/templates/controller-eligible/storage-class.yaml**

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: {{ .Values.controller.persistence.storageClass }}
provisioner: kubernetes.io/no-provisioner
```
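Each `{{- range }}` iteration in the two `pv.yaml` templates emits one statically provisioned local PersistentVolume, pinned to a single node through `nodeAffinity`. That is why the StorageClasses use `kubernetes.io/no-provisioner`: nothing creates volumes on demand, so one PV must already exist for every replica's claim, and the directory at `path` must already exist on that node. The Python sketch below mirrors the loop to show what gets rendered. `render_local_pvs` is a hypothetical helper, and the `storageClass` and `size` values in the example are placeholders, because this section does not show them.

```python
import json


def render_local_pvs(persistence):
    """Return one PersistentVolume manifest (as a dict) per entry in
    persistence["local"], mirroring the {{- range }} loop in pv.yaml."""
    pvs = []
    for vol in persistence["local"]:
        pvs.append({
            "apiVersion": "v1",
            "kind": "PersistentVolume",
            "metadata": {"name": vol["name"], "labels": {"name": vol["name"]}},
            "spec": {
                "storageClassName": persistence["storageClass"],
                "capacity": {"storage": persistence["size"]},
                "accessModes": ["ReadWriteOnce"],
                "local": {"path": vol["path"]},
                # Pin the volume to the node whose hostname label matches.
                "nodeAffinity": {"required": {"nodeSelectorTerms": [{
                    "matchExpressions": [{
                        "key": "kubernetes.io/hostname",
                        "operator": "In",
                        "values": [vol["host"]],
                    }],
                }]}},
            },
        })
    # PV names are cluster-scoped, so duplicates would collide on install.
    names = [pv["metadata"]["name"] for pv in pvs]
    if len(names) != len(set(names)):
        raise ValueError(f"duplicate PV names: {names}")
    return pvs


if __name__ == "__main__":
    broker_persistence = {
        "storageClass": "kafka-broker-local-storage",  # hypothetical placeholder
        "size": "10Gi",                                # hypothetical placeholder
        "local": [
            # Entry taken from the values.yaml fragment above.
            {"name": "kafka-broker-2",
             "host": "local-168-182-112",
             "path": "/opt/bigdata/servers/kraft/kafka-broker/data1"},
        ],
    }
    print(json.dumps(render_local_pvs(broker_persistence), indent=2))
```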

#### 4) Deploy the Kafka cluster with Helm

First, prepare the image. Pull it, retag it for your own registry, and push it there:

```bash
docker pull docker.io/bitnami/kafka:3.6.0-debian-11-r0
docker tag docker.io/bitnami/kafka:3.6.0-debian-11-r0 registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/kafka:3.6.0-debian-11-r0
docker push registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/kafka:3.6.0-debian-11-r0
```
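The three commands above mirror the Bitnami image into a private registry. They keep the image name and tag and replace only the registry/namespace prefix. Here is a small Python sketch of that mapping, assuming a plain `name:tag` reference with no `@sha256:` digest. `mirror_image_ref` and `retag_commands` are hypothetical helpers. Each returned argument list could be passed to `subprocess.run(cmd, check=True)` on a machine where Docker is logged in to the target registry.

```python
def mirror_image_ref(source, target_repo):
    """Replace everything before the last path component of `source`
    with `target_repo`, keeping the image name and tag.

    Assumes a name:tag reference without an @sha256 digest.
    """
    name_and_tag = source.rsplit("/", 1)[-1]
    return f"{target_repo.rstrip('/')}/{name_and_tag}"


def retag_commands(source, target_repo):
    """Return the docker pull/tag/push argument lists for one image."""
    target = mirror_image_ref(source, target_repo)
    return [
        ["docker", "pull", source],
        ["docker", "tag", source, target],
        ["docker", "push", target],
    ]


if __name__ == "__main__":
    # Prints the same three commands used in this step.
    for cmd in retag_commands(
        "docker.io/bitnami/kafka:3.6.0-debian-11-r0",
        "registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative",
    ):
        print(" ".join(cmd))
```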

Then install the chart:

```bash
helm install kraft ./kafka -n kraft --create-namespace
```

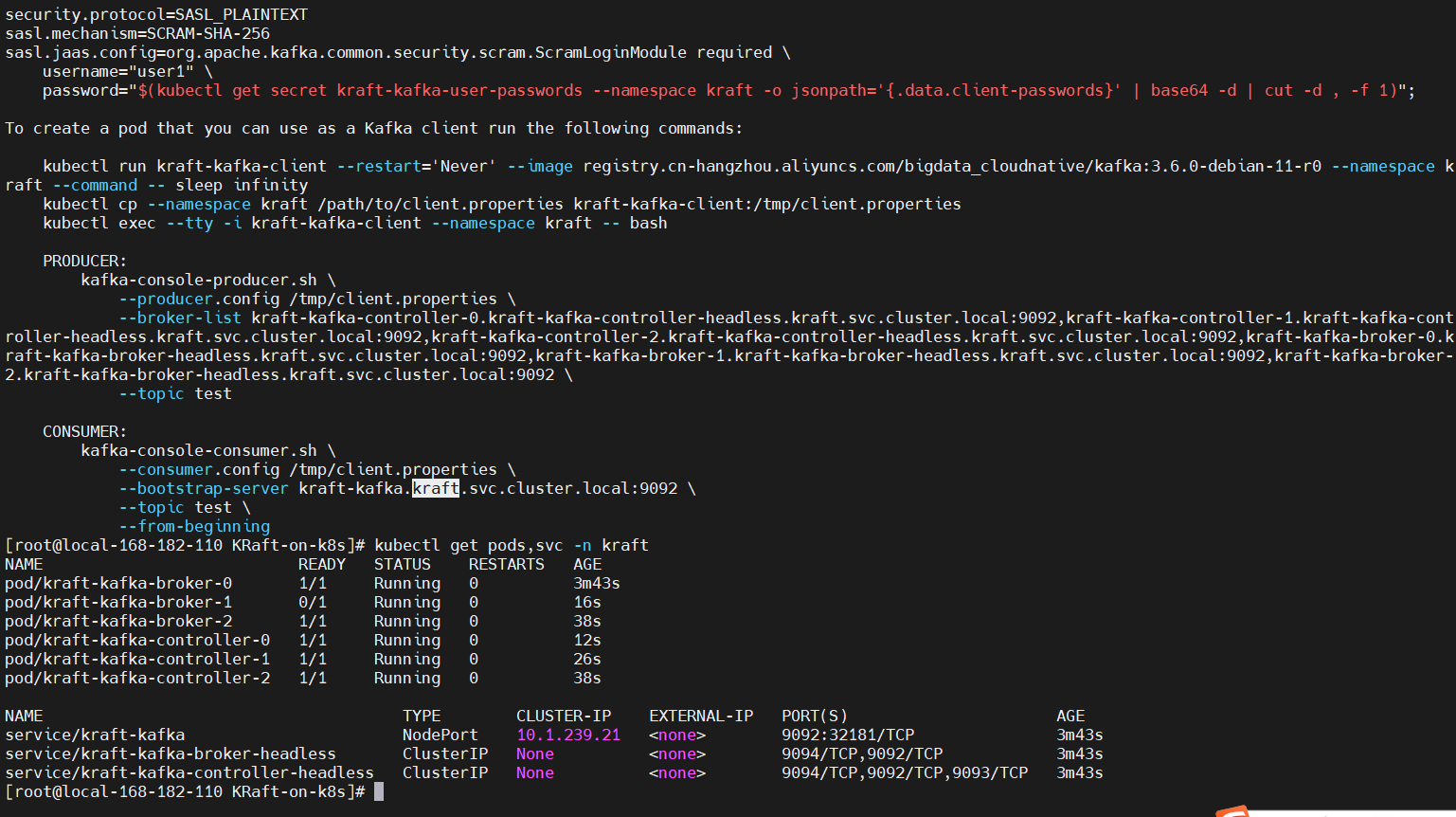

The NOTES printed by Helm are shown below. This capture comes from a later `helm upgrade` of the same release, which is why it shows `REVISION: 3`:

```
[root@local-168-182-110 KRaft-on-k8s]# helm upgrade kraft
Release "kraft" has been upgraded. Happy Helming!
NAME: kraft
LAST DEPLOYED: Sun Mar 24 20:05:04 2024
NAMESPACE: kraft
STATUS: deployed
REVISION: 3
TEST SUITE: None
NOTES:
CHART NAME: kafka
CHART VERSION: 26.0.0
APP VERSION: 3.6.0

** Please be patient while the chart is being deployed **

Kafka can be accessed by consumers via port 9092 on the following DNS name from within your cluster:

    kraft-kafka.kraft.svc.cluster.local

Each Kafka broker can be accessed by producers via port 9092 on the following DNS name(s) from within your cluster:

    kraft-kafka-controller-0.kraft-kafka-controller-headless.kraft.svc.cluster.local:9092
    kraft-kafka-controller-1.kraft-kafka-controller-headless.kraft.svc.cluster.local:9092
    kraft-kafka-controller-2.kraft-kafka-controller-headless.kraft.svc.cluster.local:9092
    kraft-kafka-broker-0.kraft-kafka-broker-headless.kraft.svc.cluster.local:9092
    kraft-kafka-broker-1.kraft-kafka-broker-headless.kraft.svc.cluster.local:9092
    kraft-kafka-broker-2.kraft-kafka-broker-headless.kraft.svc.cluster.local:9092

To create a pod that you can use as a Kafka client run the following commands:

    kubectl run kraft-kafka-client --restart='Never' --image registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/kafka:3.6.0-debian-11-r0 --namespace kraft --command -- sleep infinity
    kubectl exec --tty -i kraft-kafka-client --namespace kraft -- bash

    PRODUCER:
        kafka-console-producer.sh \
            --broker-list kraft-kafka-controller-0.kraft-kafka-controller-headless.kraft.svc.cluster.local:9092,kraft-kafka-controller-1.kraft-kafka-controller-headless.kraft.svc.cluster.local:9092,kraft-kafka-controller-2.kraft-kafka-controller-headless.kraft.svc.cluster.local:9092,kraft-kafka-broker-0.kraft-kafka-broker-headless.kraft.svc.cluster.local:9092,kraft-kafka-broker-1.kraft-kafka-broker-headless.kraft.svc.cluster.local:9092,kraft-kafka-broker-2.kraft-kafka-broker-headless.kraft.svc.cluster.local:9092 \
            --topic test

    CONSUMER:
        kafka-console-consumer.sh \
            --bootstrap-server kraft-kafka.kraft.svc.cluster.local:9092 \
            --topic test \
            --from-beginning
```
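The per-broker addresses in these NOTES follow the standard DNS pattern for StatefulSet pods behind a headless Service: `<pod>.<headless-service>.<namespace>.svc.<cluster-domain>`, where the pod name is `<statefulset>-<ordinal>`. The Python sketch below rebuilds the list from that pattern. The `<release>-kafka-<role>` and `-headless` names are inferred from the NOTES output above rather than from the chart source. `cluster.local` is assumed as the cluster domain, and `pod_addresses` is a hypothetical helper.

```python
def pod_addresses(release, role, replicas, namespace,
                  port=9092, cluster_domain="cluster.local"):
    """Return host:port strings for each pod of one StatefulSet.

    Naming (<release>-kafka-<role>, <...>-headless) is inferred from the
    chart NOTES; adjust it if your release renders different names.
    """
    statefulset = f"{release}-kafka-{role}"
    headless = f"{statefulset}-headless"
    return [
        f"{statefulset}-{i}.{headless}.{namespace}.svc.{cluster_domain}:{port}"
        for i in range(replicas)
    ]


if __name__ == "__main__":
    addrs = (pod_addresses("kraft", "controller", 3, "kraft")
             + pod_addresses("kraft", "broker", 3, "kraft"))
    # Comma-separated form accepted by --broker-list / --bootstrap-server.
    print(",".join(addrs))
```

For bootstrapping, a client only needs one reachable address, such as the `kraft-kafka.kraft.svc.cluster.local:9092` Service that the consumer uses. The full list just adds fallbacks.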

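#### 5) Test and verify

Create a client pod, then open a shell in it. The `kafka-*.sh` commands below are run inside this pod:

```bash
kubectl run kraft-kafka-client --restart='Never' --image registry.cn-hangzhou.aliyuncs.com/bigdata_cloudnative/kafka:3.6.0-debian-11-r0 --namespace kraft --command -- sleep infinity
kubectl exec --tty -i kraft-kafka-client --namespace kraft -- bash
```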

Create a topic:

```bash
kafka-topics.sh --create --topic test --bootstrap-server kraft-kafka-controller-0.kraft-kafka-controller-headless.kraft.svc.cluster.local:9092 --partitions 3 --replication-factor 2
```

Describe the topic:

```bash
kafka-topics.sh --describe --bootstrap-server kraft-kafka-controller-0.kraft-kafka-controller-headless.kraft.svc.cluster.local:9092 --topic test
```

Delete the topic:

```bash
kafka-topics.sh --delete --topic test --bootstrap-server kraft-kafka-controller-0.kraft-kafka-controller-headless.kraft.svc.cluster.local:9092
```
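The create, describe, and delete commands differ only in the action flag and the extra options for `--create`. The Python sketch below builds these argument lists. It also rejects a replication factor larger than the number of brokers, which Kafka itself refuses when the topic is created. `kafka_topics_argv` and its `broker_count` parameter are hypothetical, and the actual broker count depends on how the chart is configured.

```python
BOOTSTRAP = ("kraft-kafka-controller-0.kraft-kafka-controller-headless"
             ".kraft.svc.cluster.local:9092")


def kafka_topics_argv(action, topic, bootstrap=BOOTSTRAP, partitions=None,
                      replication_factor=None, broker_count=None):
    """Build a kafka-topics.sh argument list for create/describe/delete."""
    if action not in ("create", "describe", "delete"):
        raise ValueError(f"unsupported action: {action}")
    argv = ["kafka-topics.sh", f"--{action}", "--topic", topic,
            "--bootstrap-server", bootstrap]
    if action == "create":
        if partitions is None or replication_factor is None:
            raise ValueError("create needs partitions and replication_factor")
        if partitions < 1 or replication_factor < 1:
            raise ValueError("partitions and replication_factor must be >= 1")
        # Kafka refuses a replication factor larger than the broker count.
        if broker_count is not None and replication_factor > broker_count:
            raise ValueError(
                f"replication factor {replication_factor} exceeds "
                f"{broker_count} brokers")
        argv += ["--partitions", str(partitions),
                 "--replication-factor", str(replication_factor)]
    return argv


if __name__ == "__main__":
    # Same topic settings as the create command above.
    print(" ".join(kafka_topics_argv("create", "test", partitions=3,
                                     replication_factor=2)))
```

`kafka-topics.sh` does not depend on option order, so the argument order here differs from the commands above without changing their meaning.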

Producer and consumer

Producer:

```bash
kafka-console-producer.sh \
  --broker-list kraft-kafka-controller-0.kraft-kafka-controller-headless.kraft.svc.cluster.local:9092,kraft-kafka-controller-1.kraft-kafka-controller-headless.kraft.svc.cluster.local:9092,kraft-kafka-controller-2.kraft-kafka-controller-headless.kraft.svc.cluster.local:9092,kraft-kafka-broker-0.kraft-kafka-broker-headless.kraft.svc.cluster.local:9092,kraft-kafka-broker-1.kraft-kafka-broker-headless.kraft.svc.cluster.local:9092,kraft-kafka-broker-2.kraft-kafka-broker-headless.kraft.svc.cluster.local:9092 \
  --topic test
```

Consumer:

```bash
kafka-console-consumer.sh \
  --bootstrap-server kraft-kafka.kraft.svc.cluster.local:9092 \
  --topic test \
  --from-beginning
```

#### 6) Update the cluster
