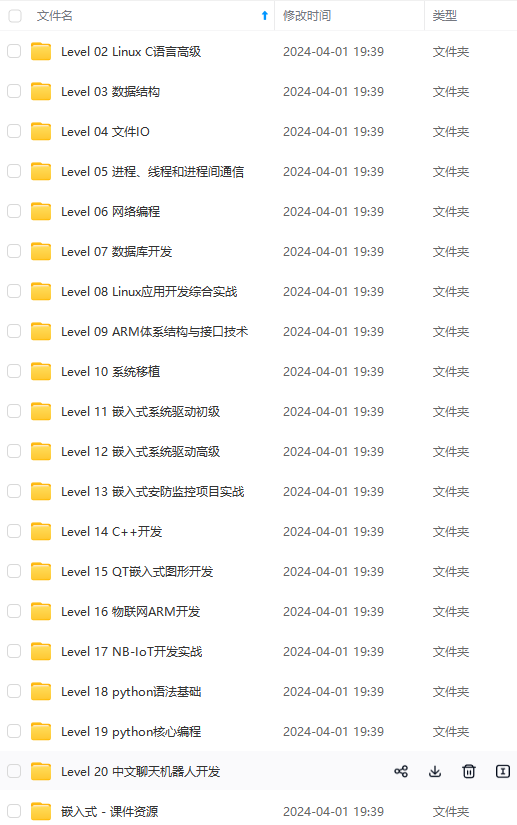

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上物联网嵌入式知识点,真正体系化!

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、电子书籍、讲解视频,并且后续会持续更新

需要这些体系化资料的朋友,可以加我V获取:vip1024c (备注嵌入式)

```python
import os
from time import time

from tqdm import tqdm

# Allow duplicate OpenMP runtimes (works around "OMP: Error #15" on some setups);
# set before importing torch
os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"

from IPython import display
import numpy as np
import pandas as pd
import seaborn as sns
import matplotlib.pyplot as plt

# Use the SimHei font so Chinese characters render correctly in plots
plt.rcParams['font.sans-serif'] = ['SimHei']

from sklearn.metrics import confusion_matrix

import torch
import torchvision
import torch.utils.data.dataloader as loader
import torch.utils.data as Dataset
import torchvision.transforms as transforms
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torch.utils import data
from torchvision import models
```

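2. Downloading the dataset and data augmentation

Apply data augmentation to the training set, then use a `ToTensor` instance to convert each image from a PIL image to a tensor.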

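```python
# Training-set transform: random augmentation, then PIL -> tensor, then normalization
transform = transforms.Compose([
    transforms.RandomHorizontalFlip(),      # flip left-right with probability 0.5
    transforms.RandomGrayscale(),           # has no effect on single-channel Fashion-MNIST images
    transforms.ToTensor(),                  # PIL image -> float tensor in [0, 1], shape (1, 28, 28)
    transforms.Normalize((0.5,), (0.5,))])  # (x - 0.5) / 0.5 maps [0, 1] to [-1, 1]

# Test-set transform: no augmentation
transform1 = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.5,), (0.5,))])

mnist_train = torchvision.datasets.FashionMNIST(
    root="data", train=True, transform=transform, download=True)
mnist_test = torchvision.datasets.FashionMNIST(
    root="data", train=False, transform=transform1, download=True)

BATCH_SIZE = 100
trainloader = loader.DataLoader(mnist_train, batch_size=BATCH_SIZE, shuffle=True)
testloader = loader.DataLoader(mnist_test, batch_size=BATCH_SIZE, shuffle=False)
```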
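Both the flip and the normalization are simple per-pixel operations. The sketch below is a pure-Python illustration on a nested list, not torchvision's implementation. The helpers `hflip` and `normalize` are hypothetical names.

```python
import random

def hflip(img, p=0.5, rng=random):
    """Mirror every row left-to-right with probability p (like RandomHorizontalFlip)."""
    if rng.random() < p:
        return [row[::-1] for row in img]
    return [row[:] for row in img]

def normalize(img, mean=0.5, std=0.5):
    """Per-pixel (x - mean) / std, as Normalize((mean,), (std,)) does for one channel."""
    return [[(x - mean) / std for x in row] for row in img]

# A 2x3 "image" whose pixel values are already scaled to [0, 1] (what ToTensor produces)
img = [[0.0, 0.25, 1.0],
       [0.5, 0.75, 0.0]]

print(hflip(img, p=1.0))  # [[1.0, 0.25, 0.0], [0.0, 0.75, 0.5]]
print(normalize(img))     # [[-1.0, -0.5, 1.0], [0.0, 0.5, -1.0]]
```

With mean 0.5 and std 0.5, the [0, 1] range produced by `ToTensor` becomes [-1, 1].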

II. Viewing sample images from the dataset

```python
# Text labels of the 10 Fashion-MNIST classes, indexed by class id
labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
          'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']

def show_images(imgs, num_rows, num_cols, targets, labels=None, scale=1.5):
    """Plot a grid of images, titled '<class id>-<label>' when labels are given."""
    figsize = (num_cols * scale, num_rows * scale)
    _, axes = plt.subplots(num_rows, num_cols, figsize=figsize)
    axes = axes.flatten()
    for ax, img, target in zip(axes, imgs, targets):
        if torch.is_tensor(img):
            # Image tensor
            ax.imshow(img.numpy())
        else:
            # PIL image
            ax.imshow(img)
        # Hide both axes
        ax.axes.get_xaxis().set_visible(False)
        ax.axes.get_yaxis().set_visible(False)
        if labels:
            target = int(target)  # targets may be 0-d tensors
            ax.set_title('{}-{}'.format(target, labels[target]))
    plt.subplots_adjust(hspace=0.35)
    return axes
```

A `DataLoader` has to be turned into an iterator with `iter` before `next` can be called on it:

```python
X, y = next(iter(data.DataLoader(mnist_train, batch_size=24, shuffle=True)))
show_images(X.reshape(24, 28, 28), 3, 8, labels=labels, targets=y)
```
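This is needed because `DataLoader` is an *iterable* (it defines `__iter__`) rather than an *iterator*, so every `iter(...)` call starts a fresh pass over the data. The toy class below is a hypothetical pure-Python stand-in, not PyTorch's implementation, that reproduces this behavior.

```python
import random

class ToyLoader:
    """Hypothetical stand-in for a DataLoader: an iterable that yields batches."""

    def __init__(self, samples, batch_size, shuffle=False, seed=0):
        self.samples = list(samples)
        self.batch_size = batch_size
        self.shuffle = shuffle
        self.rng = random.Random(seed)

    def __iter__(self):
        # Each call to iter() starts a new pass (epoch) over the data
        order = list(range(len(self.samples)))
        if self.shuffle:
            self.rng.shuffle(order)
        for start in range(0, len(order), self.batch_size):
            yield [self.samples[i] for i in order[start:start + self.batch_size]]

loader = ToyLoader(range(10), batch_size=4)

try:
    next(loader)                  # fails: an iterable is not an iterator
except TypeError as exc:
    print("TypeError:", exc)

first_batch = next(iter(loader))  # call iter() first, then next()
print(first_batch)                # [0, 1, 2, 3]
print([len(b) for b in loader])   # [4, 4, 2]
```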

III. Building the model

1. Model 1: three convolutional layers plus two fully connected layers, with dropout

```python
class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv = nn.Sequential(
            nn.Conv2d(1, 40, 2),               # 28x28 -> 27x27
            nn.ReLU(),
            nn.MaxPool2d(2, 1),                # kernel 2, stride 1: 27 -> 26
            nn.Conv2d(40, 80, 2),              # 26 -> 25
            nn.ReLU(),
            nn.MaxPool2d(2, 1),                # 25 -> 24
            nn.Conv2d(80, 160, 3, padding=1),  # 24 -> 24
            nn.ReLU(),
            nn.Dropout(p=0.5),
            nn.MaxPool2d(3, 3),)               # 24 -> 8
        # Two fully connected layers: 160*8*8 -> 200 -> 10
        self.classifier = nn.Sequential(
            nn.Linear(160 * 8 * 8, 200),
            nn.ReLU(),
            nn.Dropout(p=0.5),
            nn.Linear(200, 10))

    def forward(self, x):
        x = self.conv(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, 160*8*8)
        x = self.classifier(x)
        return x
```
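The `160*8*8` input size of the first linear layer follows from the usual output-size formula for convolution and pooling, out = floor((in + 2·padding - kernel) / stride) + 1. The plain-Python helper `conv_out` below is a hypothetical name, and it assumes dilation 1 and PyTorch's default floor rounding. It traces a 28×28 input through Model 1's spatial layers:

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a square Conv2d/MaxPool2d (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1

# (layer, kernel, stride, padding) for each spatial layer of Model 1.
# MaxPool2d(k, s) takes kernel_size first and stride second.
layers = [
    ("Conv2d(1, 40, 2)", 2, 1, 0),
    ("MaxPool2d(2, 1)", 2, 1, 0),
    ("Conv2d(40, 80, 2)", 2, 1, 0),
    ("MaxPool2d(2, 1)", 2, 1, 0),
    ("Conv2d(80, 160, 3, padding=1)", 3, 1, 1),
    ("MaxPool2d(3, 3)", 3, 3, 0),
]

size = 28  # Fashion-MNIST images are 28x28
for name, k, s, p in layers:
    size = conv_out(size, k, s, p)
    print(f"{name:<32} -> {size}x{size}")

print("flattened features:", 160 * size * size)  # 10240, i.e. 160*8*8
```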

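2. Model 2: modeled on VGG, with two VGG blocks, two fully connected layers, and batch normalization

After 30 epochs of training, this model reached 93.8% accuracy on the test set.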
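Model 2 places `nn.BatchNorm2d` after each pooling layer. In training mode, batch normalization normalizes each channel with the mean and the biased variance computed over the whole batch and all spatial positions. It then applies a learnable scale γ and shift β. In evaluation mode it uses running estimates instead, which this sketch leaves out. The hypothetical helper `batch_norm_1ch` reproduces the training-mode arithmetic for a single channel in plain Python:

```python
import math

def batch_norm_1ch(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode batch norm for the activations of ONE channel.

    For BatchNorm2d, `values` would be every activation of that channel
    across the batch and both spatial dimensions (N * H * W numbers).
    """
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n  # biased variance
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]

out = batch_norm_1ch([1.0, 2.0, 3.0, 4.0])
print([round(v, 4) for v in out])  # [-1.3416, -0.4472, 0.4472, 1.3416]
```

The output has zero mean and (almost exactly) unit variance. γ and β let the network undo the normalization if that helps training.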

```python
# Note: this redefines Net and replaces Model 1; keep whichever model you want to train.
class Net(nn.Module):
    def __init__(self):
        super().__init__()
        # VGG-style block 1: 28x28 -> 30x30 (1x1 conv with padding=1) -> 15x15 after pooling
        self.conv1 = nn.Conv2d(1, 128, 1, padding=1)
        self.conv2 = nn.Conv2d(128, 128, 3, padding=1)
        self.pool1 = nn.MaxPool2d(2, 2)
        self.bn1 = nn.BatchNorm2d(128)
        self.relu1 = nn.ReLU()
        # VGG-style block 2: 15x15 -> 8x8 (pooling with padding=1)
        self.conv3 = nn.Conv2d(128, 256, 3, padding=1)
        self.conv4 = nn.Conv2d(256, 256, 3, padding=1)
        self.pool2 = nn.MaxPool2d(2, 2, padding=1)
        self.bn2 = nn.BatchNorm2d(256)
        self.relu2 = nn.ReLU()
        # Classifier
        self.fc5 = nn.Linear(256 * 8 * 8, 512)
        # nn.Dropout rather than nn.Dropout2d: the input here is a 2-D (batch, features) tensor
        self.drop1 = nn.Dropout(p=0.5)
        self.fc6 = nn.Linear(512, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.pool1(x)
        x = self.bn1(x)
        x = self.relu1(x)
        x = self.conv3(x)
        x = self.conv4(x)
        x = self.pool2(x)
        x = self.bn2(x)
        x = self.relu2(x)
        # print("x shape", x.size())
        x = x.view(-1, 256 * 8 * 8)
        x = F.relu(self.fc5(x))
        x = self.drop1(x)
        x = self.fc6(x)
        return x
```
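The same formula explains Model 2's `256*8*8`, which hinges on two details that are easy to miss. First, the 1×1 `conv1` with `padding=1` enlarges the image from 28 to 30. Second, `pool2` pads by 1, so 15 becomes 8 rather than 7. A sketch with a hypothetical `conv_out` helper (dilation 1, floor rounding assumed):

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a square Conv2d/MaxPool2d (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1

size = 28
size = conv_out(size, 1, padding=1)            # conv1: 28 -> 30
size = conv_out(size, 3, padding=1)            # conv2: 30 -> 30
size = conv_out(size, 2, stride=2)             # pool1: 30 -> 15
size = conv_out(size, 3, padding=1)            # conv3: 15 -> 15
size = conv_out(size, 3, padding=1)            # conv4: 15 -> 15
size = conv_out(size, 2, stride=2, padding=1)  # pool2: 15 -> 8
print(size, 256 * size * size)                 # 8 16384
```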

IV. Preparation before training

1. Initializing the model, loss function, and optimizer

```python
net = Net()                                         # instantiate the model
loss = nn.CrossEntropyLoss()                        # cross-entropy loss
optimizer = optim.Adam(net.parameters(), lr=0.001)  # Adam optimizer
```
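`nn.CrossEntropyLoss` takes raw, unnormalized scores (logits) and applies log-softmax followed by negative log-likelihood internally. That is why neither model ends in a softmax layer. The pure-Python sketch below uses hypothetical helper names and is for illustration only. It computes the same quantity, using the log-sum-exp trick for numerical stability:

```python
import math

def cross_entropy(logits, target):
    """-log softmax(logits)[target], computed with the log-sum-exp trick."""
    m = max(logits)  # shift by the max so exp() cannot overflow
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

def mean_cross_entropy(batch_logits, targets):
    """Average over the batch, like CrossEntropyLoss's default reduction='mean'."""
    losses = [cross_entropy(z, t) for z, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)

# All-zero logits for 10 classes = a uniform guess, so the loss is log(10) ~= 2.3026
print(cross_entropy([0.0] * 10, target=3))
print(mean_cross_entropy([[2.0, 0.0, 0.0], [0.0, 0.0, 2.0]], [0, 1]))
```

This gives a handy sanity check at the start of training. With 10 classes, an untrained model that outputs near-uniform scores should report a loss close to log(10) ≈ 2.30.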

Xavier initialization:

```python
def init_xavier(model):
    for m in model.modules():
        if isinstance(m, (nn.Conv2d, nn.Linear)):
            nn.init.xavier_uniform_(m.weight, gain=nn.init.calculate_gain('relu'))
```
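For reference, `nn.init.xavier_uniform_` draws weights from U(-a, a) with a = gain × sqrt(6 / (fan_in + fan_out)), and `nn.init.calculate_gain('relu')` returns √2. For a `Conv2d` weight of shape (out_channels, in_channels, kh, kw), fan_in = in_channels·kh·kw and fan_out = out_channels·kh·kw. Below is a pure-Python sketch of the bound, with hypothetical helper names:

```python
import math

def fans(shape):
    """(fan_in, fan_out) for a Linear (out, in) or Conv2d (out, in, kh, kw) weight shape."""
    receptive_field = 1
    for d in shape[2:]:
        receptive_field *= d
    return shape[1] * receptive_field, shape[0] * receptive_field

def xavier_uniform_bound(shape, gain=math.sqrt(2.0)):  # sqrt(2) is the ReLU gain
    fan_in, fan_out = fans(shape)
    return gain * math.sqrt(6.0 / (fan_in + fan_out))

# Weight of Model 1's nn.Conv2d(40, 80, 2) has shape (80, 40, 2, 2)
print(fans((80, 40, 2, 2)))                  # (160, 320)
print(xavier_uniform_bound((80, 40, 2, 2)))  # weights are drawn from U(-a, a) with this a
```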

Kaiming (He) initialization, which is the one applied to `net` here:

```python
def init_kaiming(model):
    for m in model.modules():
        if isinstance(m, (nn.Conv2d, nn.Linear)):
            nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

init_kaiming(net)
```
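`nn.init.kaiming_normal_` draws from N(0, std²) with std = gain / sqrt(fan). Here fan is fan_out when `mode='fan_out'` (as in `init_kaiming`) and fan_in when `mode='fan_in'`. According to the PyTorch documentation, `fan_in` preserves the variance of activations in the forward pass, while `fan_out` preserves it in the backward pass. Below is a hand calculation with a hypothetical helper:

```python
import math

def kaiming_normal_std(shape, mode="fan_out", gain=math.sqrt(2.0)):
    """Std used by kaiming_normal_ for an (out, in, *kernel) weight; sqrt(2) is the ReLU gain."""
    receptive_field = 1
    for d in shape[2:]:
        receptive_field *= d
    fan_in, fan_out = shape[1] * receptive_field, shape[0] * receptive_field
    fan = fan_out if mode == "fan_out" else fan_in
    return gain / math.sqrt(fan)

# Model 1's nn.Conv2d(40, 80, 2) weight, shape (80, 40, 2, 2): fan_out = 80 * 2 * 2 = 320
print(kaiming_normal_std((80, 40, 2, 2)))                 # sqrt(2/320) ~= 0.0791
print(kaiming_normal_std((80, 40, 2, 2), mode="fan_in"))  # sqrt(2/160) ~= 0.1118
```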

2. Using the GPU (falls back to the CPU automatically if no GPU is available)

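```python
def use_gpu(net):
    """Use the GPU if one is available; returns the device the model was moved to."""
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    net.to(device)
    gpu_nums = torch.cuda.device_count()
    if gpu_nums > 1:
        print("Let's use", gpu_nums, "GPUs")
        # Caution: this rebinds only the local name, so the DataParallel wrapper
        # is discarded on return; return it as well if multi-GPU training is needed.
        net = nn.DataParallel(net)
    elif gpu_nums == 1:
        print("Let's use GPU")
    else:
        print("Let's use CPU")
    return device
```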

3. Helper functions for the training program

(1) An animation class for visualizing training progress

```python
from matplotlib_inline import backend_inline

class Animator:  #@save
    """Plot data incrementally in an animation."""
    def __init__(self, xlabel=None, ylabel=None, legend=None, xlim=None,
                 ylim=None, xscale='linear', yscale='linear',
                 fmts=('-', 'm--', 'g-.', 'r:'), nrows=1, ncols=1,
                 figsize=(5, 3.5)):
        # Draw multiple lines incrementally
        if legend is None:
            legend = []
        # Render as SVG vector graphics (IPython.display.set_matplotlib_formats is deprecated)
        backend_inline.set_matplotlib_formats('svg')
        self.fig, self.axes = plt.subplots(nrows, ncols, figsize=figsize)
        if nrows * ncols == 1:
            self.axes = [self.axes, ]
        # Capture the axis settings in a lambda so they can be reapplied after every redraw
        self.config_axes = lambda: self.set_axes(
            self.axes[0], xlabel, ylabel, xlim, ylim, xscale, yscale, legend)
        self.X, self.Y, self.fmts = None, None, fmts

    def add(self, x, *y):
        # Add one data point to each curve
        n = len(y)
        x = [x] * n
        if not self.X:
            self.X = [[] for _ in range(n)]
        if not self.Y:
            self.Y = [[] for _ in range(n)]
        for i, (a, b) in enumerate(zip(x, y)):
            if a is not None and b is not None:
                self.X[i].append(a)
                self.Y[i].append(b)
        self.axes[0].cla()  # clear the current axes
        for x, y, fmt in zip(self.X, self.Y, self.fmts):
            self.axes[0].plot(x, y, fmt, linewidth=2)
        self.axes[0].set_yticks(ticks=np.linspace(0, 1, 11))
        self.config_axes()
        display.display(self.fig)
        # Clear the previous output so the redrawn figure replaces it in place (animation effect)
        display.clear_output(wait=True)

    def set_axes(self, axes, xlabel, ylabel, xlim, ylim, xscale, yscale, legend):
        # Configure the matplotlib axes
        axes.grid(True)
        axes.set_title("gaojianwen")
        axes.set_xlabel(xlabel)
        axes.set_ylabel(ylabel)
        axes.set_xscale(xscale)
        axes.set_yscale(yscale)
        axes.set_xlim(xlim)
        axes.set_ylim(ylim)
        if legend:
            axes.legend(legend)
```

(2) An accumulator (stores running totals of intermediate values, such as the counts used to compute accuracy)

```python
class Accumulator:
    """Accumulate running sums over n variables."""
    def __init__(self, n):
        self.data = [0.0] * n

    def add(self, *args):
        # Add each argument to the corresponding running sum
        self.data = [a + float(b) for a, b in zip(self.data, args)]

    def reset(self):
        # Reset all sums to zero
        self.data = [0.0] * len(self.data)

    def __getitem__(self, index):
        return self.data[index]
```
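A typical use is to accumulate two sums during evaluation, the number of correct predictions and the number of samples, and to divide only at the end. This is a sketch, not code from this article. The batches and the `argmax` helper below are made up for illustration, and the class repeats the `Accumulator` above so the example runs on its own:

```python
class Accumulator:
    """Running sums over n variables (same as the class above)."""
    def __init__(self, n):
        self.data = [0.0] * n

    def add(self, *args):
        self.data = [a + float(b) for a, b in zip(self.data, args)]

    def __getitem__(self, index):
        return self.data[index]

def argmax(row):
    """Index of the largest score, i.e. the predicted class."""
    return max(range(len(row)), key=row.__getitem__)

# Hypothetical model outputs (logits) for two batches, with their true labels
batches = [
    ([[2.0, 0.1, 0.3], [0.2, 1.5, 0.1]], [0, 2]),  # 1 of 2 correct
    ([[0.1, 0.2, 3.0]], [2]),                      # 1 of 1 correct
]

metric = Accumulator(2)  # [number correct, number of samples]
for logits, labels in batches:
    correct = sum(argmax(z) == y for z, y in zip(logits, labels))
    metric.add(correct, len(labels))

print(metric[0] / metric[1])  # 2 correct out of 3 samples
```

Averaging the two per-batch accuracies (0.5 and 1.0) would give 0.75 instead of 2/3, because the last batch is smaller. Accumulating raw counts avoids that bias.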
