- action: labelmap

regex: __meta_kubernetes_pod_label_(.+)

- action: replace

source_labels:

- __meta_kubernetes_namespace

target_label: kubernetes_namespace

- action: replace

source_labels:

- __meta_kubernetes_pod_name

target_label: kubernetes_pod_name

alerting:

alertmanagers:

- static_configs:

- targets: ["alertmanager:80"]

创建

[root@k8s-master prometheus-k8s]# kubectl apply -f prometheus-configmap.yaml

### 3.5 有状态部署prometheus

这里使用storageclass进行动态供给,给prometheus的数据进行持久化,具体实现办法,可以查看之前的文章《k8s中的NFS动态存储供给》,除此之外可以使用静态供给的prometheus-statefulset-static-pv.yaml进行持久化

[root@k8s-master prometheus-k8s]# vim prometheus-statefulset.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: prometheus

namespace: kube-system

labels:

k8s-app: prometheus

kubernetes.io/cluster-service: “true”

addonmanager.kubernetes.io/mode: Reconcile

version: v2.2.1

spec:

serviceName: “prometheus”

replicas: 1

podManagementPolicy: “Parallel”

updateStrategy:

type: “RollingUpdate”

selector:

matchLabels:

k8s-app: prometheus

template:

metadata:

labels:

k8s-app: prometheus

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ‘’

spec:

priorityClassName: system-cluster-critical

serviceAccountName: prometheus

initContainers:

- name: “init-chown-data”

image: “busybox:latest”

imagePullPolicy: “IfNotPresent”

command: [“chown”, “-R”, “65534:65534”, “/data”]

volumeMounts:

- name: prometheus-data

mountPath: /data

subPath: “”

containers:

- name: prometheus-server-configmap-reload

image: “jimmidyson/configmap-reload:v0.1”

imagePullPolicy: “IfNotPresent”

args:

- --volume-dir=/etc/config

- --webhook-url=http://localhost:9090/-/reload

volumeMounts:

- name: config-volume

mountPath: /etc/config

readOnly: true

resources:

limits:

cpu: 10m

memory: 10Mi

requests:

cpu: 10m

memory: 10Mi

- name: prometheus-server

image: "prom/prometheus:v2.2.1"

imagePullPolicy: "IfNotPresent"

args:

- --config.file=/etc/config/prometheus.yml

- --storage.tsdb.path=/data

- --web.console.libraries=/etc/prometheus/console_libraries

- --web.console.templates=/etc/prometheus/consoles

- --web.enable-lifecycle

ports:

- containerPort: 9090

readinessProbe:

httpGet:

path: /-/ready

port: 9090

initialDelaySeconds: 30

timeoutSeconds: 30

livenessProbe:

httpGet:

path: /-/healthy

port: 9090

initialDelaySeconds: 30

timeoutSeconds: 30

# based on 10 running nodes with 30 pods each

resources:

limits:

cpu: 200m

memory: 1000Mi

requests:

cpu: 200m

memory: 1000Mi

volumeMounts:

- name: config-volume

mountPath: /etc/config

- name: prometheus-data

mountPath: /data

subPath: ""

- name: prometheus-rules

mountPath: /etc/config/rules

terminationGracePeriodSeconds: 300

volumes:

- name: config-volume

configMap:

name: prometheus-config

- name: prometheus-rules

configMap:

name: prometheus-rules

volumeClaimTemplates:

- metadata:

name: prometheus-data

spec:

storageClassName: managed-nfs-storage #存储类根据自己的存储类名字修改

accessModes:

- ReadWriteOnce

resources:

requests:

storage: “16Gi”

创建

[root@k8s-master prometheus-k8s]# kubectl apply -f prometheus-statefulset.yaml

检查状态

[root@k8s-master prometheus-k8s]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-5bd5f9dbd9-wv45t 1/1 Running 1 8d

kubernetes-dashboard-7d77666777-d5ng4 1/1 Running 5 14d

prometheus-0 2/2 Running 6 14d

可以看到一个prometheus-0的pod,这就刚才使用statefulset控制器进行的有状态部署,两个容器的状态为Runing则是正常,如果不为Runing可以使用kubectl describe pod prometheus-0 -n kube-system查看报错详情

### 3.6 创建service暴露访问端口

此处使用nodePort固定一个访问端口,不适用随机端口,便于访问

[root@k8s-master prometheus-k8s]# vim prometheus-service.yaml

kind: Service

apiVersion: v1

metadata:

name: prometheus

namespace: kube-system

labels:

kubernetes.io/name: “Prometheus”

kubernetes.io/cluster-service: “true”

addonmanager.kubernetes.io/mode: Reconcile

spec:

type: NodePort

ports:

- name: http

port: 9090

protocol: TCP

targetPort: 9090

nodePort: 30090 #固定的对外访问的端口

selector:

k8s-app: prometheus

创建

[root@k8s-master prometheus-k8s]# kubectl apply -f prometheus-service.yaml

检查

[root@k8s-master prometheus-k8s]# kubectl get pod,svc -n kube-system

NAME READY STATUS RESTARTS AGE

pod/coredns-5bd5f9dbd9-wv45t 1/1 Running 1 8dpod/kubernetes-dashboard-7d77666777-d5ng4 1/1 Running 5 14dpod/prometheus-0 2/2 Running 6 14dNAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-dns ClusterIP 10.0.0.2 53/UDP,53/TCP 13dservice/kubernetes-dashboard NodePort 10.0.0.127 443:30001/TCP 16dservice/prometheus NodePort 10.0.0.33 9090:30090/TCP 13d

### 3.7 web访问

使用任意一个NodeIP加端口进行访问,访问地址:http://NodeIP:Port ,此例就是:`http://192.168.73.139:30090`

访问成功的界面如图所示:

### 4 在K8S平台部署Grafana

通过上面的web访问,可以看出prometheus自带的UI界面是没有多少功能的,可视化展示的功能不完善,不能满足日常的监控所需,因此常常我们需要再结合Prometheus+Grafana的方式来进行可视化的数据展示

官网地址:

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/prometheus

https://grafana.com/grafana/download

刚才下载的项目中已经写好了Grafana的yaml,根据自己的环境进行修改

### 4.1 使用StatefulSet部署grafana

[root@k8s-master prometheus-k8s]# vim grafana.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: grafana

namespace: kube-system

spec:

serviceName: “grafana”

replicas: 1

selector:

matchLabels:

app: grafana

template:

metadata:

labels:

app: grafana

spec:

containers:

- name: grafana

image: grafana/grafana

ports:

- containerPort: 3000

protocol: TCP

resources:

limits:

cpu: 100m

memory: 256Mi

requests:

cpu: 100m

memory: 256Mi

volumeMounts:

- name: grafana-data

mountPath: /var/lib/grafana

subPath: grafana

securityContext:

fsGroup: 472

runAsUser: 472

volumeClaimTemplates:

- metadata:

name: grafana-data

spec:

storageClassName: managed-nfs-storage #和prometheus使用同一个存储类

accessModes:

- ReadWriteOnce

resources:

requests:

storage: “1Gi”

apiVersion: v1

kind: Service

metadata:

name: grafana

namespace: kube-system

spec:

type: NodePort

ports:

- port : 80

targetPort: 3000

nodePort: 30091

selector:

app: grafana

### 4.2 Grafana的web访问

使用任意一个NodeIP加端口进行访问,访问地址:http://NodeIP:Port ,此例就是:http://192.168.73.139:30091

成功访问界面如下,会需要进行账号密码登陆,默认账号密码都为admin,登陆之后会让修改密码

登陆之后的界面如下

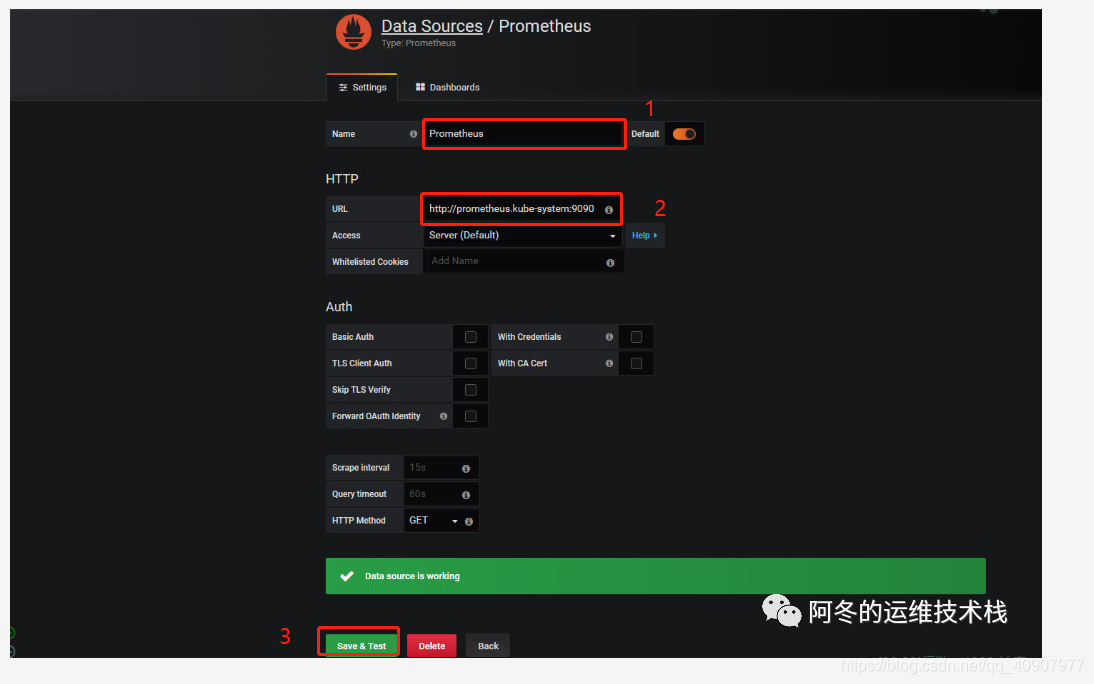

第一步需要进行数据源添加,点击create your first data source数据库图标,根据下图所示进行添加即可

第二步,添加完了之后点击底部的绿色的Save&Test,会成功提示Data sourse is working,则表示数据源添加成功

### 4.3 监控K8S集群中Pod、Node、资源对象数据的方法

1)Pod

kubelet的节点使用cAdvisor提供的metrics接口获取该节点所有Pod和容器相关的性能指标数据,安装kubelet默认就开启了

暴露接口地址:

https://NodeIP:10255/metrics/cadvisor

https://NodeIP:10250/metrics/cadvisor

2)Node

需要使用node\_exporter收集器采集节点资源利用率。

https://github.com/prometheus/node\_exporter

使用文档:https://prometheus.io/docs/guides/node-exporter/

使用node\_exporter.sh脚本分别在所有服务器上部署node\_exporter收集器,不需要修改可直接运行脚本

[root@k8s-master prometheus-k8s]# cat node_exporter.sh #!/bin/bashwget https://github.com/prometheus/node_exporter/releases/download/v0.17.0/node_exporter-0.17.0.linux-amd64.tar.gz

tar zxf node_exporter-0.17.0.linux-amd64.tar.gz

mv node_exporter-0.17.0.linux-amd64 /usr/local/node_exporter

cat </usr/lib/systemd/system/node_exporter.service

[Unit]

Description=https://prometheus.io

[Service]

Restart=on-failure

ExecStart=/usr/local/node_exporter/node_exporter --collector.systemd --collector.systemd.unit-whitelist=(docker|kubelet|kube-proxy|flanneld).service

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable node_exporter

systemctl restart node_exporter

[root@k8s-master prometheus-k8s]# ./node_exporter.sh

检测node\_exporter的进程,是否生效

[root@k8s-master prometheus-k8s]# ps -ef|grep node_exporter

root 6227 1 0 Oct08 ? 00:06:43 /usr/local/node_exporter/node_exporter --collector.systemd --collector.systemd.unit-whitelist=(docker|kubelet|kube-proxy|flanneld).service

root 118269 117584 0 23:27 pts/0 00:00:00 grep --color=auto node_exporter

3)资源对象

kube-state-metrics采集了k8s中各种资源对象的状态信息,只需要在master节点部署就行

https://github.com/kubernetes/kube-state-metrics

创建rbac的yaml对metrics进行授权

[root@k8s-master prometheus-k8s]# vim kube-state-metrics-rbac.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: kube-state-metrics

namespace: kube-system

labels:

kubernetes.io/cluster-service: “true”

addonmanager.kubernetes.io/mode: Reconcile

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: kube-state-metrics

labels:

kubernetes.io/cluster-service: “true”

addonmanager.kubernetes.io/mode: Reconcile

rules:

- apiGroups: [“”]

resources:- configmaps

- secrets

- nodes

- pods

- services

- resourcequotas

- replicationcontrollers

- limitranges

- persistentvolumeclaims

- persistentvolumes

- namespaces

- endpoints

verbs: [“list”, “watch”]

- apiGroups: [“extensions”]

resources:- daemonsets

- deployments

- replicasets

verbs: [“list”, “watch”]

- apiGroups: [“apps”]

resources:- statefulsets

verbs: [“list”, “watch”]

- statefulsets

- apiGroups: [“batch”]

resources:- cronjobs

- jobs

verbs: [“list”, “watch”]

- apiGroups: [“autoscaling”]

resources:- horizontalpodautoscalers

verbs: [“list”, “watch”]

- horizontalpodautoscalers

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

name: kube-state-metrics-resizer

namespace: kube-system

labels:

kubernetes.io/cluster-service: “true”

addonmanager.kubernetes.io/mode: Reconcile

rules:

- apiGroups: [“”]

resources:- pods

verbs: [“get”]

- pods

- apiGroups: [“extensions”]

resources:- deployments

resourceNames: [“kube-state-metrics”]

verbs: [“get”, “update”]

- deployments

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kube-state-metrics

labels:

kubernetes.io/cluster-service: “true”

addonmanager.kubernetes.io/mode: Reconcile

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kube-state-metrics

subjects:

- kind: ServiceAccount

name: kube-state-metrics

namespace: kube-system

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: kube-state-metrics

namespace: kube-system

labels:

kubernetes.io/cluster-service: “true”

addonmanager.kubernetes.io/mode: Reconcile

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kube-state-metrics-resizer

subjects:

- kind: ServiceAccount

name: kube-state-metrics

namespace: kube-system

[root@k8s-master prometheus-k8s]# kubectl apply -f kube-state-metrics-rbac.yaml

编写Deployment和ConfigMap的yaml进行metrics pod部署,不需要进行修改

[root@k8s-master prometheus-k8s]# cat kube-state-metrics-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: kube-state-metrics

namespace: kube-system

labels:

k8s-app: kube-state-metrics

kubernetes.io/cluster-service: “true”

addonmanager.kubernetes.io/mode: Reconcile

version: v1.3.0

spec:

selector:

matchLabels:

k8s-app: kube-state-metrics

version: v1.3.0

replicas: 1

template:

metadata:

labels:

k8s-app: kube-state-metrics

version: v1.3.0

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ‘’

spec:

priorityClassName: system-cluster-critical

serviceAccountName: kube-state-metrics

containers:

- name: kube-state-metrics

image: lizhenliang/kube-state-metrics:v1.3.0

ports:

- name: http-metrics

containerPort: 8080

- name: telemetry

containerPort: 8081

readinessProbe:

httpGet:

path: /healthz

port: 8080

initialDelaySeconds: 5

timeoutSeconds: 5

- name: addon-resizer

image: lizhenliang/addon-resizer:1.8.3

resources:

limits:

cpu: 100m

memory: 30Mi

requests:

cpu: 100m

memory: 30Mi

env:

- name: MY_POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: MY_POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: config-volume

mountPath: /etc/config

command:

- /pod_nanny

- --config-dir=/etc/config

- --container=kube-state-metrics

- --cpu=100m

- --extra-cpu=1m

- --memory=100Mi

- --extra-memory=2Mi

- --threshold=5

- --deployment=kube-state-metrics

volumes:

- name: config-volume

configMap:

name: kube-state-metrics-config

Config map for resource configuration.

apiVersion: v1

kind: ConfigMap

metadata:

name: kube-state-metrics-config

namespace: kube-system

labels:

k8s-app: kube-state-metrics

kubernetes.io/cluster-service: “true”

addonmanager.kubernetes.io/mode: Reconcile

data:

NannyConfiguration: |-

apiVersion: nannyconfig/v1alpha1

kind: NannyConfiguration

[root@k8s-master prometheus-k8s]# kubectl apply -f kube-state-metrics-deployment.yaml

2.编写Service的yaml对metrics进行端口暴露

[root@k8s-master prometheus-k8s]# cat kube-state-metrics-service.yaml

apiVersion: v1

kind: Service

metadata:

name: kube-state-metrics

namespace: kube-system

labels:

kubernetes.io/cluster-service: “true”

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/name: “kube-state-metrics”

annotations:

prometheus.io/scrape: ‘true’

spec:

ports:

- name: http-metrics

port: 8080

targetPort: http-metrics

protocol: TCP - name: telemetry

port: 8081

targetPort: telemetry

protocol: TCP

selector:

k8s-app: kube-state-metrics

[root@k8s-master prometheus-k8s]# kubectl apply -f kube-state-metrics-service.yaml

3.检查pod和svc的状态,可以看到正常运行了pod/kube-state-metrics-7c76bdbf68-kqqgd 和对外暴露了8080和8081端口

[root@k8s-master prometheus-k8s]# kubectl get pod,svc -n kube-system

NAME READY STATUS RESTARTS AGE

pod/alertmanager-5d75d5688f-fmlq6 2/2 Running 0 9dpod/coredns-5bd5f9dbd9-wv45t 1/1 Running 1 9dpod/grafana-0 1/1 Running 2 15dpod/kube-state-metrics-7c76bdbf68-kqqgd 2/2 Running 6 14dpod/kubernetes-dashboard-7d77666777-d5ng4 1/1 Running 5 16dpod/prometheus-0 2/2 Running 6 15dNAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/alertmanager ClusterIP 10.0.0.207 80/TCP 13dservice/grafana NodePort 10.0.0.74 80:30091/TCP 15dservice/kube-dns ClusterIP 10.0.0.2 53/UDP,53/TCP 14dservice/kube-state-metrics ClusterIP 10.0.0.194 8080/TCP,8081/TCP 14dservice/kubernetes-dashboard NodePort 10.0.0.127 443:30001/TCP 17dservice/prometheus NodePort 10.0.0.33 9090:30090/TCP 14d[root@k8s-master prometheus-k8s]#

### 5 使用Grafana可视化展示Prometheus监控数据

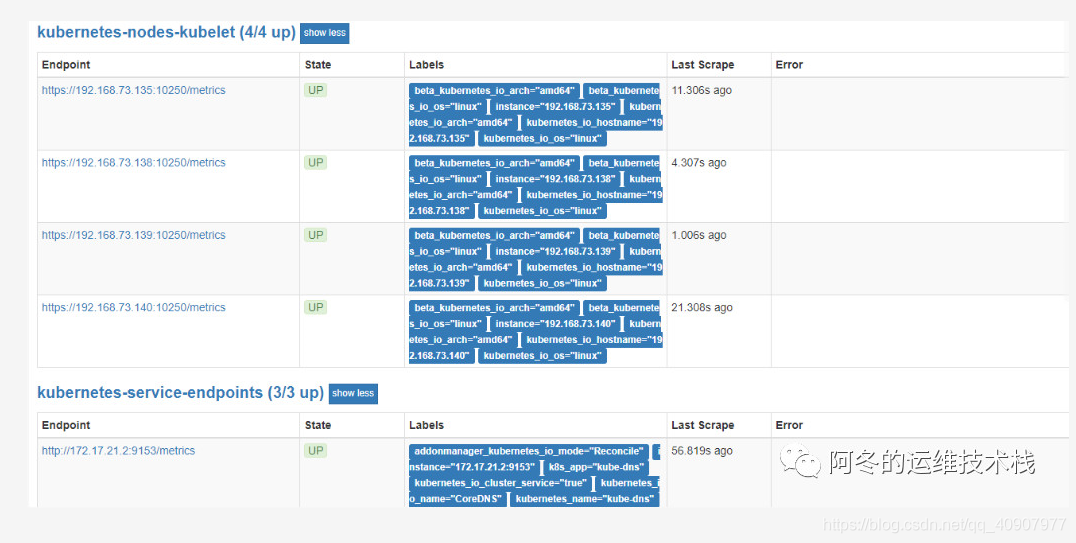

通常在使用Prometheus采集数据的时候我们需要监控K8S集群中Pod、Node、资源对象,因此我们需要安装对应的插件和资源采集器来提供api进行数据获取,在4.3中我们已经配置好,我们也可以使用Prometheus的UI界面中的Staus菜单下的Target中的各个采集器的状态情况,如图所示:

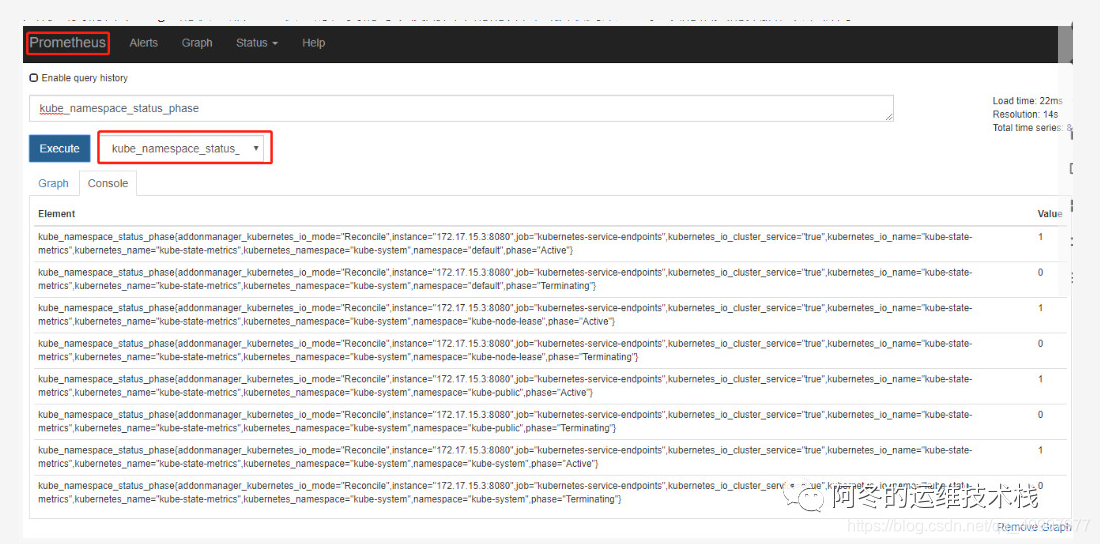

只有当我们各个Target的状态都是UP状态时,我们可以使用自带的的界面去获取到某一监控项的相关的数据,如图所示:

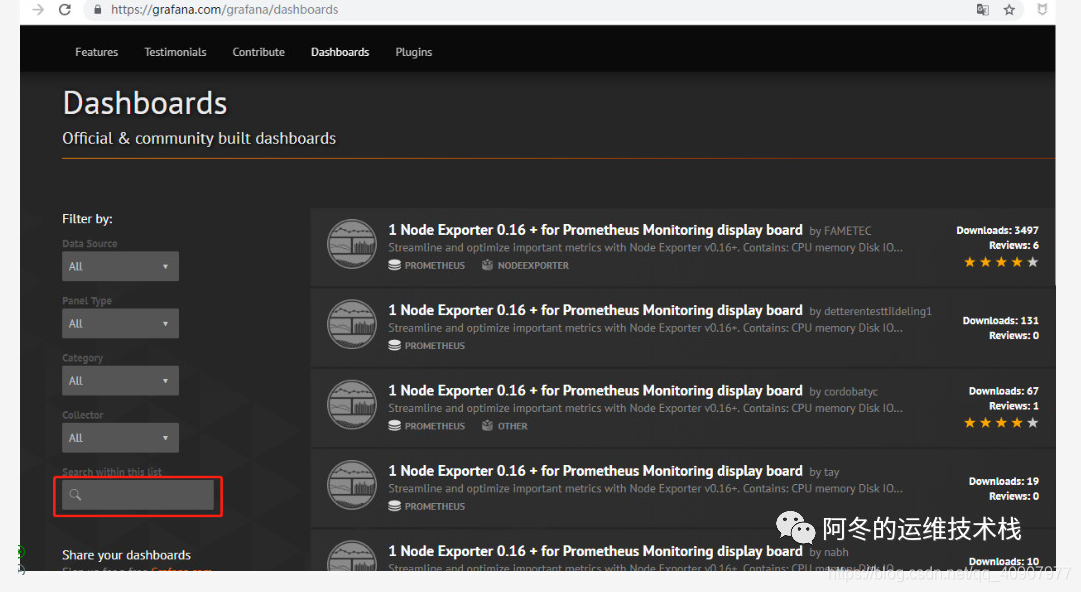

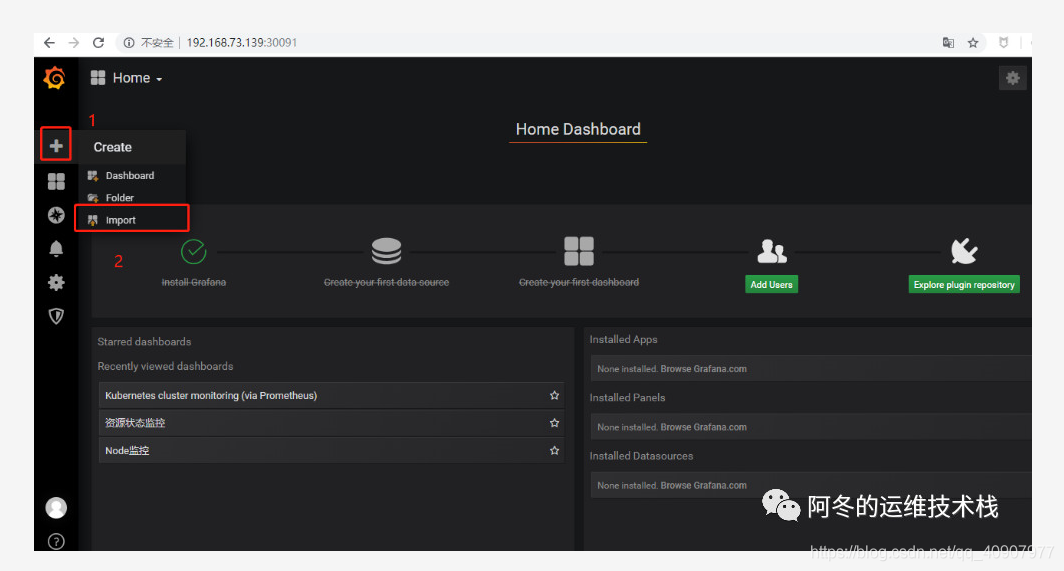

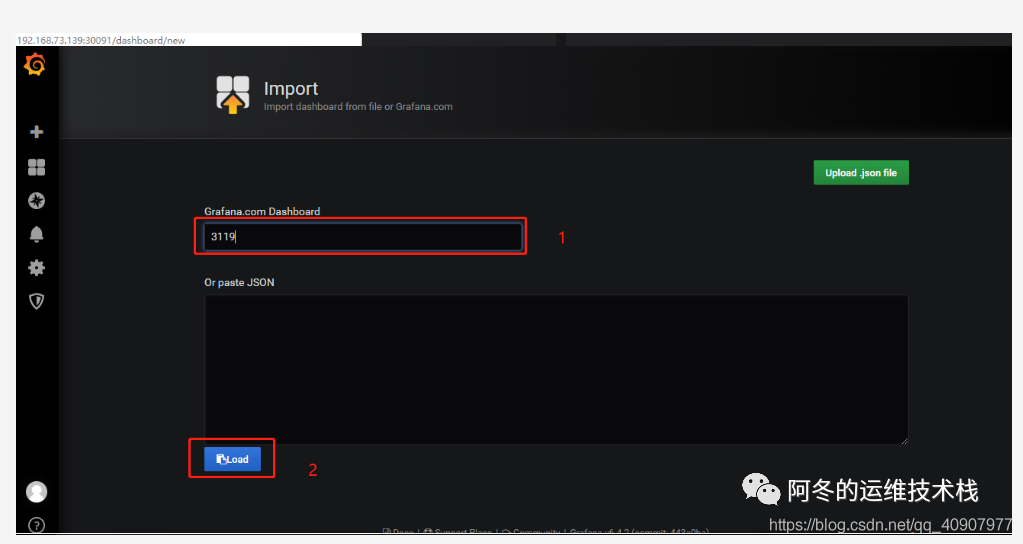

从上面的图中可以看出Prometheus的界面可视化展示的功能较单一,不能满足需求,因此我们需要结合Grafana来进行可视化展示Prometheus监控数据,在上一章节,已经成功部署了Granfana,因此需要在使用的时候添加dashboard和Panel来设计展示相关的监控项,但是实际上在Granfana社区里面有很多成熟的模板,我们可以直接使用,然后根据自己的环境修改Panel中的查询语句来获取数据

https://grafana.com/grafana/dashboards

推荐模板:

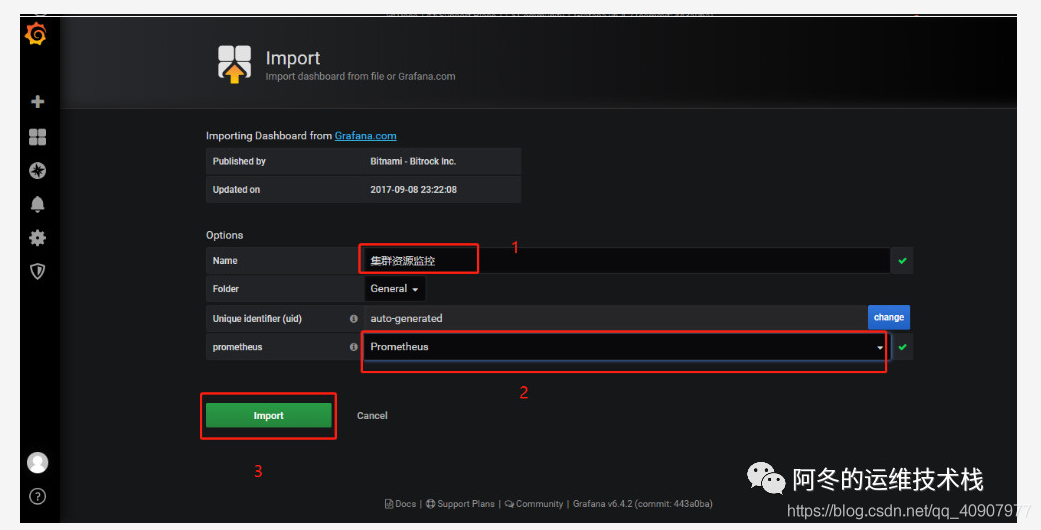

集群资源监控的模板号:3119,如图所示进行添加

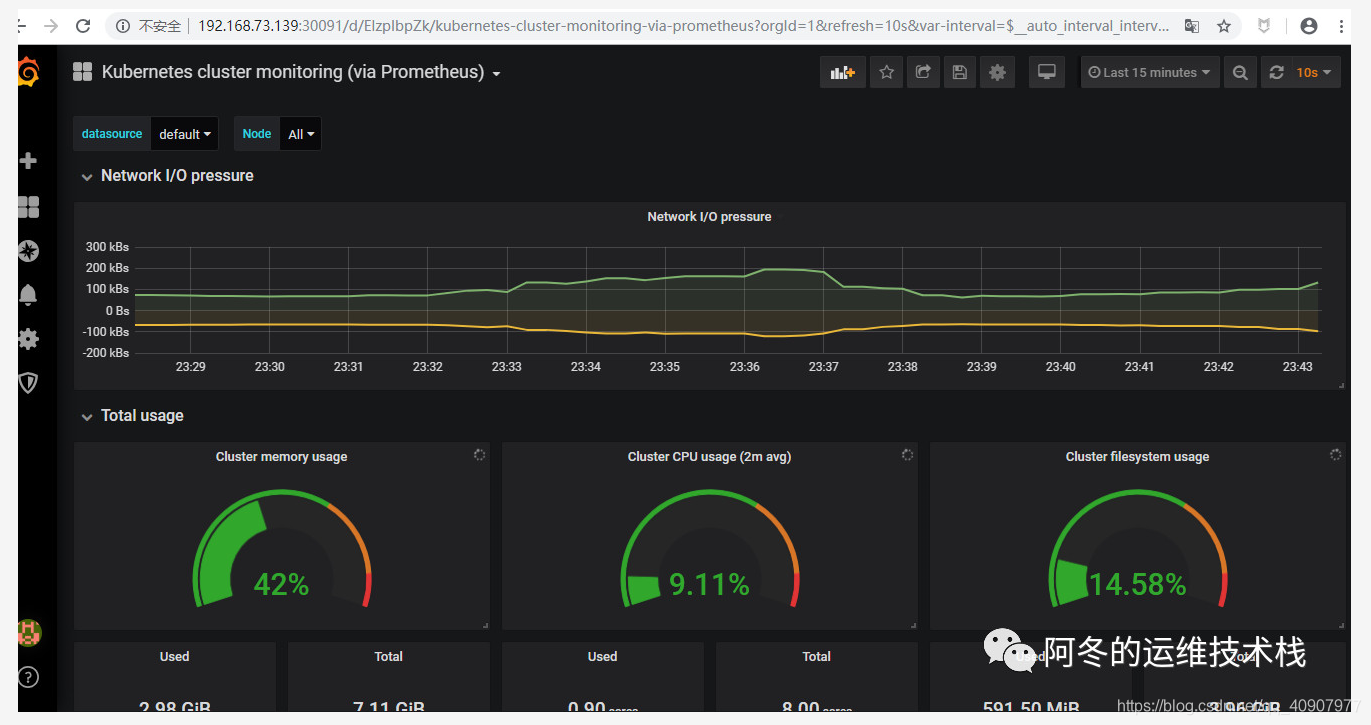

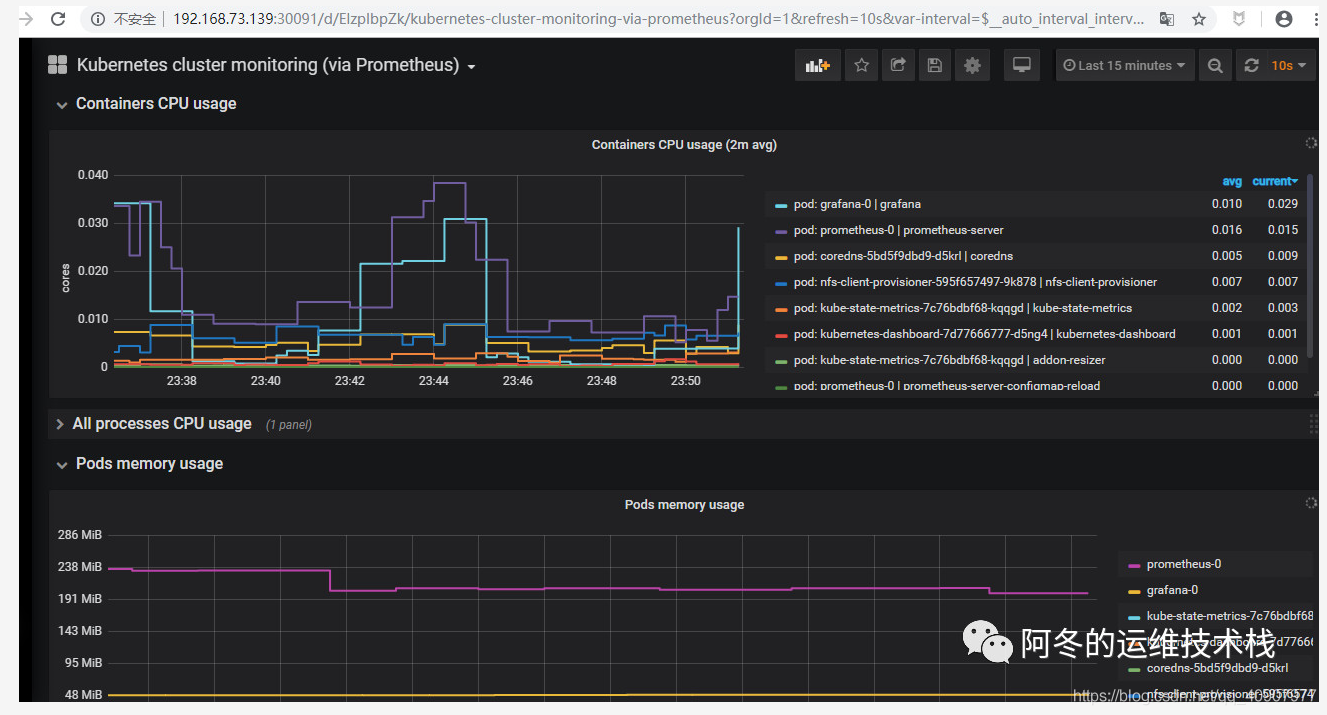

当模板添加之后如果某一个Panel不显示数据,可以点击Panel上的编辑,查询PromQL语句,然后去Prometheus自己的界面上进行调试PromQL语句是否可以获取到值,最后调整之后的监控界面如图所示

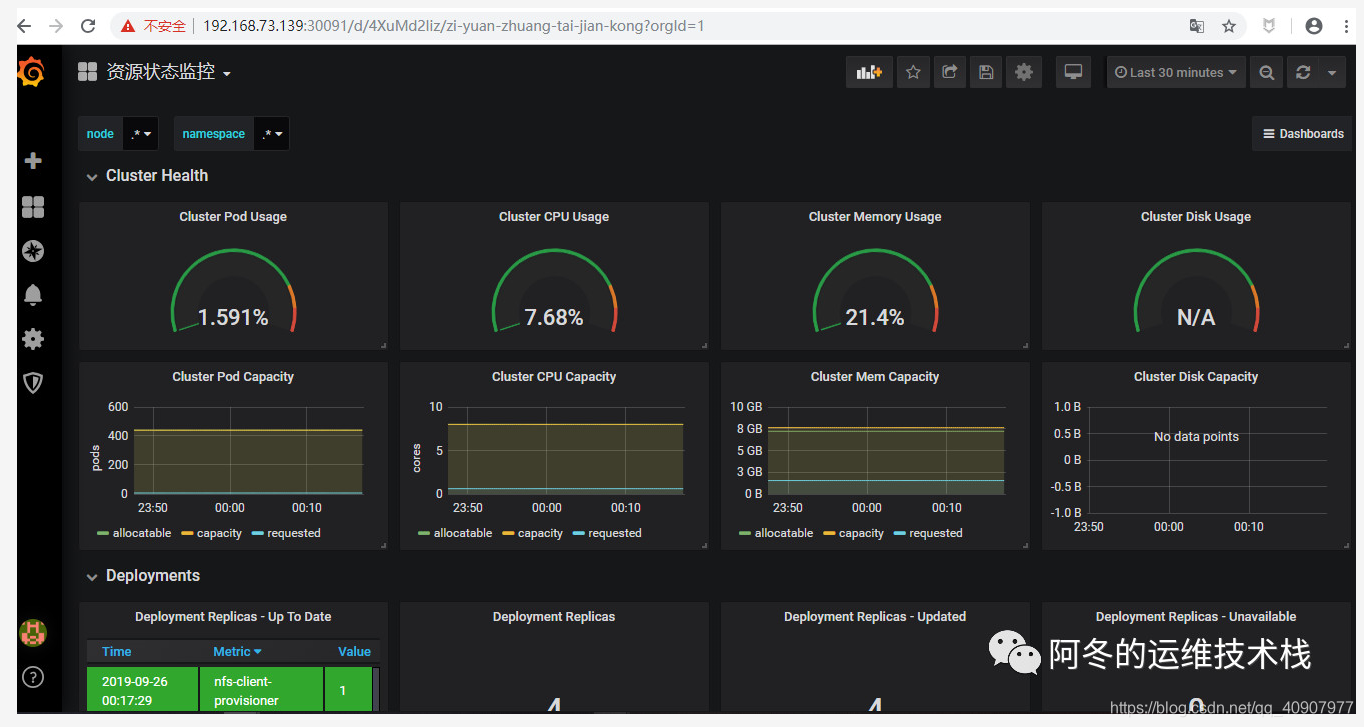

资源状态监控:6417

同理,添加资源状态的监控模板,然后经过调整之后的监控界面如图所示,可以获取到k8s中各种资源状态的监控展示

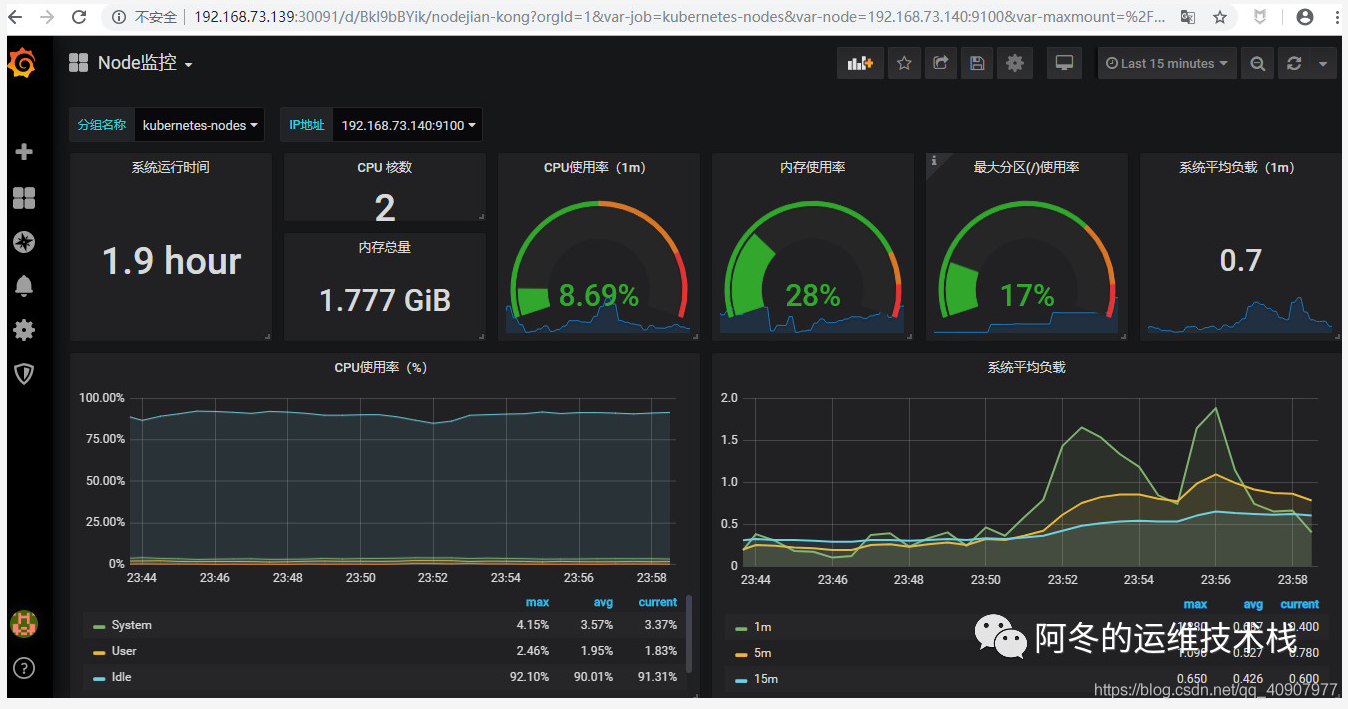

Node监控:9276

同理,添加资源状态的监控模板,然后经过调整之后的监控界面如图所示,可以获取到各个node上的基本情况

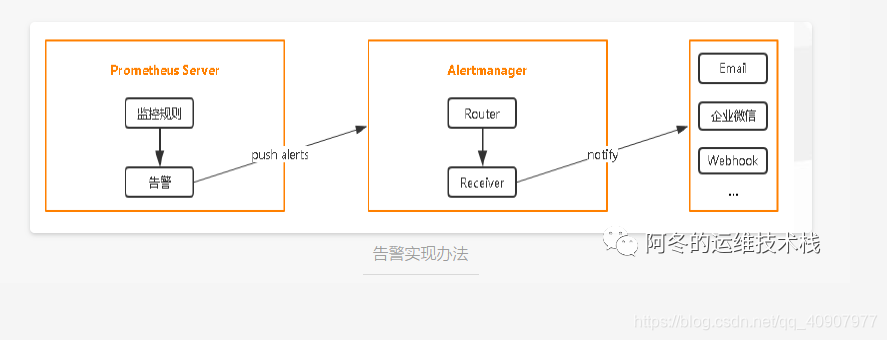

### 6 在K8S中部署Alertmanager

### 6.1 部署Alertmanager的实现步骤

### 6.2 部署告警

我们以Email来进行实现告警信息的发送

首先需要准备一个发件邮箱,开启stmp发送功能

使用configmap存储告警规则,编写报警规则的yaml文件,可根据自己的实际情况进行修改和添加报警的规则,prometheus比zabbix就麻烦在这里,所有的告警规则需要自己去定义

[root@k8s-master prometheus-k8s]# vim prometheus-rules.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: prometheus-rules

namespace: kube-system

data:

general.rules: |

groups:

- name: general.rules

rules:

- alert: InstanceDown

expr: up == 0

for: 1m

labels:

severity: error

annotations:

summary: “Instance {{ $labels.instance }} 停止工作”

description: “{{ $labels.instance }} job {{ $labels.job }} 已经停止5分钟以上.”

node.rules: |

groups:

- name: node.rules

rules:

- alert: NodeFilesystemUsage

expr: 100 - (node_filesystem_free_bytes{fstype=~“ext4|xfs”} / node_filesystem_size_bytes{fstype=~“ext4|xfs”} * 100) > 80

for: 1m

labels:

severity: warning

annotations:

summary: “Instance {{ $labels.instance }} : {{ $labels.mountpoint }} 分区使用率过高”

description: “{{ $labels.instance }}: {{ $labels.mountpoint }} 分区使用大于80% (当前值: {{ $value }})”

- alert: NodeMemoryUsage

expr: 100 - (node_memory_MemFree_bytes+node_memory_Cached_bytes+node_memory_Buffers_bytes) / node_memory_MemTotal_bytes * 10

0 > 80

for: 1m

labels:

severity: warning

annotations:

summary: “Instance {{ $labels.instance }} 内存使用率过高”

description: “{{ $labels.instance }}内存使用大于80% (当前值: {{ $value }})”

- alert: NodeCPUUsage

expr: 100 - (avg(irate(node_cpu_seconds_total{mode="idle"}[5m])) by (instance) * 100) > 60

for: 1m

labels:

severity: warning

annotations:

summary: "Instance {{ $labels.instance }} CPU使用率过高"

description: "{{ $labels.instance }}CPU使用大于60% (当前值: {{ $value }})"

[root@k8s-master prometheus-k8s]# kubectl apply -f prometheus-rules.yaml

3.编写告警configmap的yaml文件部署,增加alertmanager告警配置,进行配置邮箱发送地址

[root@k8s-master prometheus-k8s]# vim alertmanager-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: alertmanager-config

namespace: kube-system

labels:

kubernetes.io/cluster-service: “true”

addonmanager.kubernetes.io/mode: EnsureExists

data:

alertmanager.yml: |

global:

resolve_timeout: 5m

smtp_smarthost: ‘xxx.com.cn:25’ #登陆邮件进行查看

smtp_from: ‘xxx@126.com.cn’ #根据自己申请的发件邮箱进行配置

smtp_auth_username: ‘xxx@qq.com.cn’

smtp_auth_password: ‘xxxxx’

receivers:

- name: default-receiver

email_configs:

- to: "zhangdongdong27459@goldwind.com.cn"

route:

group_interval: 1m

group_wait: 10s

receiver: default-receiver

repeat_interval: 1m

[root@k8s-master prometheus-k8s]# kubectl apply -f alertmanager-configmap.yaml

4.创建PVC进行数据持久化,我这个yaml文件使用的跟Prometheus安装时用的存储类来进行自动供给,需要根据自己的实际情况修改

[root@k8s-master prometheus-k8s]# vim alertmanager-pvc.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: alertmanager

namespace: kube-system

labels:

kubernetes.io/cluster-service: “true”

addonmanager.kubernetes.io/mode: EnsureExists

spec:

storageClassName: managed-nfs-storage

accessModes:

- ReadWriteOnce

resources:

requests:

storage: “2Gi”

[root@k8s-master prometheus-k8s]# kubectl apply -f alertmanager-pvc.yaml

5.编写deployment的yaml来部署alertmanager的pod

[root@k8s-master prometheus-k8s]# vim alertmanager-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: alertmanager

namespace: kube-system

labels:

网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

https://bbs.csdn.net/forums/4304bb5a486d4c3ab8389e65ecb71ac0

eClaim

metadata:

name: alertmanager

namespace: kube-system

labels:

kubernetes.io/cluster-service: “true”

addonmanager.kubernetes.io/mode: EnsureExists

spec:

storageClassName: managed-nfs-storage

accessModes:

- ReadWriteOnce

resources:

requests:

storage: “2Gi”

[root@k8s-master prometheus-k8s]# kubectl apply -f alertmanager-pvc.yaml

5.编写deployment的yaml来部署alertmanager的pod

[root@k8s-master prometheus-k8s]# vim alertmanager-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: alertmanager

namespace: kube-system

labels:

[外链图片转存中…(img-fyBRvUxw-1725770473495)]

[外链图片转存中…(img-BBdBEesT-1725770473496)]

网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

https://bbs.csdn.net/forums/4304bb5a486d4c3ab8389e65ecb71ac0

377

377

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?