Guide

Note

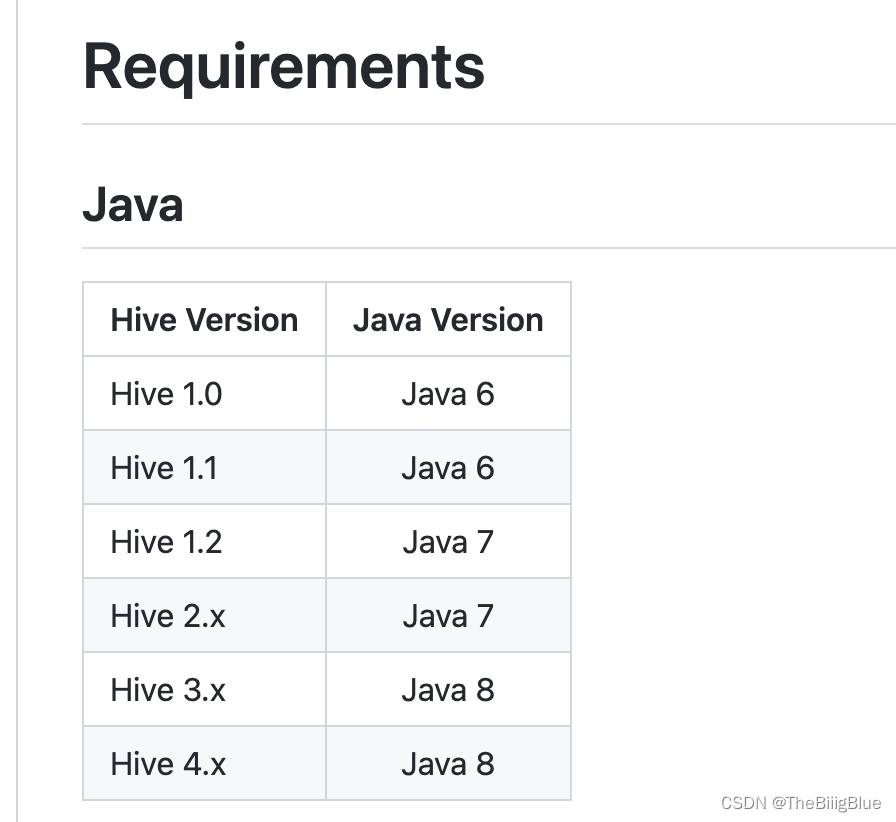

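Hive 3.1.3 runs only on JDK 8. JAVA_HOME takes effect only when it is set in Hadoop's hadoop-env.sh, because Hive is launched through Hadoop's scripts.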
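You can check the JDK 8 requirement against the JAVA_HOME that Hadoop actually uses. The POSIX shell sketch below sources hadoop-env.sh and parses the first line of `java -version`. `java_major_version` and `check_java8` are hypothetical helper names. The parsing assumes the usual `... version "X"` line that OpenJDK and Oracle JDK print.

```shell
#!/bin/sh
# Hypothetical helper: print the Java major version from the first line of
# `java -version` output, e.g. "1.8.0_292" -> 8 (legacy 1.x scheme), "11.0.21" -> 11.
java_major_version() (
  # Take the text between the double quotes after "version".
  ver=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p')
  case "$ver" in
    1.*) ver=${ver#1.}; echo "${ver%%.*}" ;;   # 1.8.0_292 -> 8
    *)   echo "${ver%%[.+-]*}" ;;              # 11.0.21 -> 11, 17 -> 17
  esac
)

# Hypothetical helper: source hadoop-env.sh ($1) in a subshell and check
# that the JAVA_HOME it sets points to a JDK 8.
check_java8() (
  . "$1" || exit 1
  first=$("$JAVA_HOME/bin/java" -version 2>&1 | head -n 1)
  major=$(java_major_version "$first")
  if [ "$major" != "8" ]; then
    echo "expected JDK 8 under JAVA_HOME=$JAVA_HOME, got: $first" >&2
    exit 1
  fi
  echo "OK: $first"
)
```

For example, run `check_java8 /data/services/hadoop-3.2.2/etc/hadoop/hadoop-env.sh`. Sourcing the file checks the same JAVA_HOME that Hadoop's launch scripts will use, not the JAVA_HOME of your login shell.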

Installation

1. Upload the tarball and extract it

tar -zxvf apache-hive-3.1.3-bin.tar.gz

2. Install Hadoop

3. Install MySQL

MySQL installation (YUM)

MySQL installation (tar.gz package)

MySQL installation (RPM package)

4. Configure hive-site.xml

mv apache-hive-3.1.3-bin /data/services/hive-3.1.3

cd /data/services/hive-3.1.3/conf

vi hive-site.xml

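Add the following configuration. Change the MySQL connection settings (URL, user name, password) to match your environment: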

<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://172.16.75.137:3390/hive_meta?createDatabaseIfNotExist=true</value>
    <description>JDBC connect string for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.cj.jdbc.Driver</value>
    <description>Driver class name for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>admin</value>
    <description>username to use against metastore database</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>admin@123</value>
    <description>password to use against metastore database</description>
  </property>
  <!-- Hive's default warehouse directory on HDFS -->
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/data/hive/warehouse</value>
  </property>
  <property>
    <name>hive.querylog.location</name>
    <value>/data/services/hive-3.1.3/logs</value>
  </property>
  <!-- Port that HiveServer2 listens on for client connections -->
  <property>
    <name>hive.server2.thrift.port</name>
    <value>10000</value>
  </property>
  <!-- Host/IP that HiveServer2 binds to -->
  <property>
    <name>hive.server2.thrift.bind.host</name>
    <value>172.16.75.101</value>
  </property>
  <property>
    <name>hive.server2.webui.host</name>
    <value>172.16.75.101</value>
  </property>
  <!-- Port of the HiveServer2 web UI -->
  <property>
    <name>hive.server2.webui.port</name>
    <value>10002</value>
  </property>
  <!-- Address of the metastore service that clients connect to -->
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://172.16.75.101:9083</value>
  </property>
  <!-- Metastore notification API authorization (disabled) -->
  <property>
    <name>hive.metastore.event.db.notification.api.auth</name>
    <value>false</value>
  </property>
  <!-- Metastore schema version verification (disabled) -->
  <property>
    <name>hive.metastore.schema.verification</name>
    <value>false</value>
  </property>
  <!-- HiveServer2 active/passive HA setting; enabling it can speed up HiveServer2 startup -->
  <property>
    <name>hive.server2.active.passive.ha.enable</name>
    <value>true</value>
  </property>
  <!-- Hive CLI: show the current database in the prompt and print column headers -->
  <property>
    <name>hive.cli.print.header</name>
    <value>true</value>
    <description>Whether to print the names of the columns in query output.</description>
  </property>
  <property>
    <name>hive.cli.print.current.db</name>
    <value>true</value>
    <description>Whether to include the current database in the Hive prompt.</description>
  </property>
  <!--
  <property>
    <name>hive.execution.engine</name>
    <value>spark</value>
  </property>
  <property>
    <name>spark.home</name>
    <value>/opt/hadoop/spark-2.3.0-bin-hadoop2.7</value>
  </property>
  <property>
    <name>spark.master</name>
    <value>yarn-cluster</value>
  </property>
  -->
</configuration>
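Before you start any services, you can read back the values Hive will actually see. For example, check that hive.metastore.warehouse.dir matches the HDFS directory you create for the warehouse. The hypothetical `hive_prop` helper below does this with awk text matching. It is not a real XML parser: it assumes each `<name>` is followed by its `<value>` inside the same `<property>`. It strips `<!-- ... -->` comments first, so the commented-out Spark settings are ignored.

```shell
#!/bin/sh
# Hypothetical helper: print the <value> of a property in hive-site.xml.
# $1 = path to hive-site.xml, $2 = property name. Exits 1 if not found.
hive_prop() {
  awk -v want="$2" '
    { doc = doc $0 "\n" }             # slurp the whole file
    END {
      # Drop every <!-- ... --> comment, including multi-line ones.
      while ((s = index(doc, "<!--")) > 0) {
        e = index(substr(doc, s), "-->")
        if (e == 0) break
        doc = substr(doc, 1, s - 1) substr(doc, s + e + 2)
      }
      key = "<name>" want "</name>"
      p = index(doc, key)
      if (p == 0) exit 1
      # The value is the first <value>...</value> after the matching name.
      rest = substr(doc, p + length(key))
      vs = index(rest, "<value>")
      ve = index(rest, "</value>")
      if (vs == 0 || ve < vs) exit 1
      print substr(rest, vs + 7, ve - vs - 7)
    }' "$1"
}
```

For example, `hive_prop /data/services/hive-3.1.3/conf/hive-site.xml hive.metastore.warehouse.dir` should print `/data/hive/warehouse` for the configuration above. Where `xmllint` is installed, an XPath query is a more robust alternative.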

5. Point Hive at Hadoop (hive-env.sh)

cd /data/services/hive-3.1.3

cp conf/hive-env.sh.template conf/hive-env.sh

vi conf/hive-env.sh

# Set HADOOP_HOME to point to a specific hadoop install directory

export HADOOP_HOME=/data/services/hadoop-3.2.2

# Hive Configuration Directory can be controlled by:

export HIVE_CONF_DIR=/data/services/hive-3.1.3/conf/

# Folder containing extra libraries required for hive compilation/execution can be controlled by:

export HIVE_AUX_JARS_PATH=/data/services/hive-3.1.3
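A mistyped path in hive-env.sh often surfaces later as a confusing startup error. The hypothetical `check_hive_env` function below runs in a subshell that has sourced the file. It checks that HADOOP_HOME contains an executable bin/hadoop and that HIVE_CONF_DIR contains hive-site.xml.

```shell
#!/bin/sh
# Hypothetical helper: after sourcing conf/hive-env.sh, check that the
# paths it exports exist. Exits non-zero if any check fails.
check_hive_env() (
  rc=0
  if [ ! -x "${HADOOP_HOME:-}/bin/hadoop" ]; then
    echo "HADOOP_HOME has no executable bin/hadoop: ${HADOOP_HOME:-<unset>}" >&2
    rc=1
  fi
  if [ ! -f "${HIVE_CONF_DIR:-}/hive-site.xml" ]; then
    echo "HIVE_CONF_DIR has no hive-site.xml: ${HIVE_CONF_DIR:-<unset>}" >&2
    rc=1
  fi
  exit "$rc"
)
```

From the Hive home directory: `( . conf/hive-env.sh && check_hive_env ) && echo "hive-env.sh looks OK"`.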

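6. Configure logging

# Create the log configuration file from its template (run from the Hive home directory)

cp conf/hive-exec-log4j2.properties.template conf/hive-exec-log4j2.properties

# Change this setting in it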

property.hive.log.dir = /data/services/hive-3.1.3/logs
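You can also script this change instead of making it in vi. The hypothetical `set_log4j_prop` helper below replaces an existing `key = value` line, or appends one if the key is missing. It assumes GNU sed (for `sed -i`). It also assumes the value contains no `|` or `&`, which the sed replacement would misinterpret.

```shell
#!/bin/sh
# Hypothetical helper: set "key = value" in a log4j2 .properties file ($1),
# replacing an existing assignment or appending one if the key is absent.
set_log4j_prop() (
  file=$1 key=$2 value=$3
  # Escape the dots in the key so they match literally in the regex.
  re=$(printf '%s' "$key" | sed 's/\./\\./g')
  if grep -q "^${re}[[:space:]]*=" "$file"; then
    sed -i "s|^${re}[[:space:]]*=.*|${key} = ${value}|" "$file"   # GNU sed in-place edit
  else
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
)
```

Usage, from the Hive home directory: `set_log4j_prop conf/hive-exec-log4j2.properties property.hive.log.dir /data/services/hive-3.1.3/logs`.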

7. Copy the MySQL JDBC driver into Hive's lib directory

mv mysql-connector-java-8.0.27.jar lib

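8. Resolve the Guava conflict between Hive 3.1.3 and Hadoop 3.2.2

# Disable the older Guava bundled with Hive by renaming it (keeps a backup)

mv lib/guava-19.0.jar lib/guava-19.0.jar.bak

# Copy Hadoop's newer Guava into Hive's lib directory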

cp ../hadoop-3.2.2/share/hadoop/common/lib/guava-27.0-jre.jar ./lib/
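After swapping the jars, confirm that Hive's lib no longer contains an older Guava than Hadoop's. If the old 19.0 jar is still present, Hive 3.1.x on Hadoop 3.2.x commonly fails at startup with a `NoSuchMethodError` on `com.google.common.base.Preconditions.checkArgument`. The hypothetical `check_guava` helper below compares major versions parsed from the jar file names. This assumes the standard `guava-<version>[-jre].jar` naming.

```shell
#!/bin/sh
# Hypothetical helper: major version from a Guava jar name,
# e.g. guava-19.0.jar -> 19, guava-27.0-jre.jar -> 27.
guava_major() (
  base=${1##*/}
  v=${base#guava-}
  echo "${v%%.*}"
)

# Hypothetical helper: compare the alphabetically first guava-*.jar in Hive's
# lib ($1) with the one in Hadoop's common lib ($2). Succeeds only when Hive's
# Guava is at least as new. Renamed *.jar.bak files do not match the glob.
check_guava() (
  hive_jar=$(ls "$1"/guava-*.jar 2>/dev/null | head -n 1)
  hadoop_jar=$(ls "$2"/guava-*.jar 2>/dev/null | head -n 1)
  if [ -z "$hive_jar" ] || [ -z "$hadoop_jar" ]; then
    echo "no guava-*.jar found in $1 or $2" >&2
    exit 2
  fi
  echo "hive: ${hive_jar##*/}  hadoop: ${hadoop_jar##*/}"
  [ "$(guava_major "$hive_jar")" -ge "$(guava_major "$hadoop_jar")" ]
)
```

From the Hive home directory: `check_guava lib ../hadoop-3.2.2/share/hadoop/common/lib`. A leftover guava-19.0.jar sorts first and makes the check fail.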

9. Start HDFS and YARN

start-dfs.sh

start-yarn.sh

10. Create the HDFS directories and make them group-writable

hdfs dfs -mkdir /tmp

hdfs dfs -mkdir -p /data/hive/warehouse

hdfs dfs -chmod g+w /tmp

hdfs dfs -chmod g+w /data/hive/warehouse
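You can also make the directory setup safe to re-run. In the hypothetical `prepare_hive_dirs` function below, `hdfs dfs -mkdir -p` does not fail if a directory already exists, which is often the case for /tmp on a cluster that has already run jobs. The warehouse path is a parameter, so you can keep it in sync with hive.metastore.warehouse.dir.

```shell
#!/bin/sh
# Hypothetical helper: idempotent version of the four commands above.
# $1 = warehouse directory (defaults to the value used in hive-site.xml above)
prepare_hive_dirs() (
  warehouse=${1:-/data/hive/warehouse}
  for dir in /tmp "$warehouse"; do
    hdfs dfs -mkdir -p "$dir" || exit 1     # no error if it already exists
    hdfs dfs -chmod g+w "$dir" || exit 1    # group write, as in the original step
  done
)
```

Usage: `prepare_hive_dirs /data/hive/warehouse`, run as a user allowed to create directories in HDFS.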

11. Initialize the metastore schema

bin/schematool -initSchema -dbType mysql

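If TiDB is used as the metastore database, first run the following in TiDB so that it does not reject transaction isolation levels it does not support: set global tidb_skip_isolation_level_check = 1;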
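Running `-initSchema` a second time against an already initialized database fails, because the metastore tables already exist. The hypothetical `init_metastore_once` wrapper below first asks schematool for the current schema with `-info` and initializes only if that fails. This assumes `-info` exits non-zero when no schema is present; confirm that on your Hive version.

```shell
#!/bin/sh
# Hypothetical helper: initialize the metastore schema only once.
# $1 = Hive home directory, e.g. /data/services/hive-3.1.3
# Assumes "schematool -info" exits non-zero when no schema exists yet.
init_metastore_once() (
  tool="$1/bin/schematool"
  if "$tool" -dbType mysql -info >/dev/null 2>&1; then
    echo "metastore schema already initialized"
  else
    "$tool" -dbType mysql -initSchema
  fi
)
```

Usage: `init_metastore_once /data/services/hive-3.1.3`.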

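12. Start the Hive metastore and HiveServer2, then the Hive client

# Note: from Hive 2.x on, start both the metastore and hiveserver2 services, metastore first. Because hive.metastore.uris points clients at a standalone metastore, they fail with the following error if it is not running:

# Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient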

nohup bin/hive --service metastore > metastore.log 2>&1 &

nohup bin/hive --service hiveserver2 > hiveserver2.log 2>&1 &

# Start the Hive CLI

bin/hive

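## Or connect with beeline: -u sets the JDBC URL; -n sets the user name, which avoids permission errors from connecting as an anonymous user.

## HiveServer2 is bound to 172.16.75.101 (hive.server2.thrift.bind.host), so connect to that address rather than localhost.

bin/beeline -u 'jdbc:hive2://172.16.75.101:10000' -n bigdata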
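Both services start in the background with nohup, so the next command can run before they are listening. HiveServer2 in particular can take a while to open its port. The hypothetical `wait_for_port` helper below polls the ports from hive-site.xml using bash's `/dev/tcp` redirection, so it assumes bash rather than a plain POSIX sh.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll until host:port accepts TCP connections.
# $1 = host, $2 = port, $3 = timeout in seconds (default 120). Returns 1 on timeout.
wait_for_port() {
  local host=$1 port=$2 timeout=${3:-120} waited=0
  # Opening /dev/tcp/HOST/PORT in a subshell attempts a TCP connect (bash only).
  until (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out after ${timeout}s waiting for $host:$port" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "$host:$port is up"
}
```

For example, after the two nohup commands: `wait_for_port 172.16.75.101 9083 && wait_for_port 172.16.75.101 10000 && bin/beeline -u 'jdbc:hive2://172.16.75.101:10000' -n bigdata`.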

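This document covers installing and configuring Hadoop and MySQL on JDK 8 and then integrating Hive 3.1.3. It walks through configuring hive-site.xml, resolving the Guava version conflict, starting HDFS and YARN, initializing the metastore database, and starting the Hive Metastore and HiveServer2 services.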
