For setting up the Kafka, Elasticsearch, Logstash, and Kibana environment, see here.

For how Logstash consumes JSON from Kafka and outputs it to Elasticsearch, see here.

Maven dependencies

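<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-aop</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
</dependency>
<!-- Gson is used by LogAspect; Spring Boot's dependency management supplies the version -->
<dependency>
    <groupId>com.google.code.gson</groupId>
    <artifactId>gson</artifactId>
</dependency>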

application.properties

spring.kafka.bootstrap-servers=192.168.184.134:9092
spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.StringSerializer
spring.kafka.producer.value-serializer=org.apache.kafka.common.serialization.StringSerializer
# Default topic name; use the same topic that the Logstash input reads from.
# Keep this comment on its own line: a trailing "#..." would become part of the value.
spring.kafka.template.default-topic=kafka-test
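In the .properties format, `#` starts a comment only at the beginning of a line. The following is a minimal sketch using the JDK's `java.util.Properties` to show what happens to a trailing `#...` after a value. The class name `InlineCommentDemo` is made up for illustration, and it is an assumption here that Spring Boot's own property loader follows the same rule.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

public class InlineCommentDemo {

    // Parses a .properties snippet and returns the value stored under the given key.
    static String valueOf(String snippet, String key) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(snippet));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props.getProperty(key);
    }

    public static void main(String[] args) {
        String key = "spring.kafka.template.default-topic";
        // Comment written on the same line as the value
        String inline = key + "=kafka-test #default topic\n";
        // Comment written on its own line
        String separate = "# default topic\n" + key + "=kafka-test\n";

        System.out.println("[" + valueOf(inline, key) + "]");   // prints [kafka-test #default topic]
        System.out.println("[" + valueOf(separate, key) + "]"); // prints [kafka-test]
    }
}
```

With the inline form, the producer would try to publish to a topic whose name includes the comment text, so the comment is safer on a separate line.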

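LogBean.java (log record object)

import java.util.Date;

public class LogBean {
    // These fields match the fields defined in the Elasticsearch template
    private String ip;      // request IP
    private int code;       // response code
    private String url;     // request URL
    private String args;    // request arguments
    private String msg;     // response message
    private Date startTime; // start time of the request
    private long runTime;   // elapsed time in milliseconds

    // Getter ... Setter ...
}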
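The Java fields are camelCase, but the JSON sent to Kafka uses keys such as `start_time` and `run_time`, because LogAspect configures Gson with `FieldNamingPolicy.LOWER_CASE_WITH_UNDERSCORES`. The sketch below is a hand-written approximation of that mapping using only the JDK, not Gson's actual implementation; `SnakeCaseDemo` and `toSnakeCase` are hypothetical names.

```java
public class SnakeCaseDemo {

    // Inserts '_' before every upper-case letter (except at the start)
    // and lower-cases the result, e.g. startTime becomes start_time.
    static String toSnakeCase(String fieldName) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < fieldName.length(); i++) {
            char c = fieldName.charAt(i);
            if (Character.isUpperCase(c) && sb.length() > 0) {
                sb.append('_');
            }
            sb.append(Character.toLowerCase(c));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        String[] fields = {"ip", "code", "url", "args", "msg", "startTime", "runTime"};
        for (String field : fields) {
            System.out.println(field + " -> " + toSnakeCase(field));
        }
    }
}
```

These snake_case names are the ones the Elasticsearch template has to define, since they are what actually arrives in the index.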

ApiResult.java (object returned by controllers)

import com.fasterxml.jackson.annotation.JsonInclude;

public class ApiResult {
    private static final int SUCCESS = 200;
    private static final int FAIL = 504;

    private final int code;
    private final String msg;

    // Omit "data" from the response JSON when it is null
    @JsonInclude(JsonInclude.Include.NON_NULL)
    private final Object data;

    public ApiResult(int code, String msg, Object data) {
        this.code = code;
        this.msg = msg;
        this.data = data;
    }

    public static ApiResult success() {
        return new ApiResult(SUCCESS, "操作成功", null); // "operation succeeded"
    }

    public static ApiResult fail() {
        return new ApiResult(FAIL, "操作失败", null); // "operation failed"
    }

    public static ApiResult success(Object o) {
        return new ApiResult(SUCCESS, "操作成功", o);
    }

    public static ApiResult fail(String msg) {
        return new ApiResult(FAIL, msg, null);
    }

    // Getter ...
}

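KafkaProducer.java (sends messages to Kafka)

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.scheduling.annotation.Async;
import org.springframework.stereotype.Component;

@Component
public class KafkaProducer {
    private KafkaTemplate<String, String> kafkaTemplate;

    @Autowired
    public void setKafkaTemplate(KafkaTemplate<String, String> kafkaTemplate) {
        this.kafkaTemplate = kafkaTemplate;
    }

    @Async // send the message asynchronously
    public void send(String msg) {
        kafkaTemplate.sendDefault(msg); // send to the default topic
    }
}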
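`@Async` asks Spring to run `send` on a task executor thread instead of the calling request thread; it takes effect only when `@EnableAsync` is present and the method is called through the Spring-managed bean. As a rough JDK-only illustration of that hand-off (not Spring's implementation), the sketch below queues each message on an `ExecutorService`. `AsyncSendDemo` and its in-memory `sent` list are hypothetical stand-ins for the producer and the Kafka topic.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class AsyncSendDemo {

    private final ExecutorService executor = Executors.newSingleThreadExecutor();

    // Stand-in for the Kafka topic: collects whatever was "sent".
    final List<String> sent = new CopyOnWriteArrayList<>();

    // Returns immediately; the actual work runs on the executor thread.
    void send(String msg) {
        executor.execute(() -> sent.add(msg));
    }

    // Stops accepting work and waits for queued sends; returns false on timeout.
    boolean shutdownAndWait() {
        executor.shutdown();
        try {
            return executor.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        AsyncSendDemo producer = new AsyncSendDemo();
        producer.send("{\"code\":200}");
        System.out.println("drained: " + producer.shutdownAndWait());
        System.out.println("sent: " + producer.sent);
    }
}
```

The point of the hand-off is that a slow or unreachable broker delays only the background thread, not the HTTP response being logged.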

LogAspect.java (logging aspect)

import com.google.gson.FieldNamingPolicy;
import com.google.gson.Gson;
import com.google.gson.GsonBuilder;
import org.aspectj.lang.ProceedingJoinPoint;
import org.aspectj.lang.annotation.Around;
import org.aspectj.lang.annotation.Aspect;
import org.aspectj.lang.annotation.Pointcut;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Component;
import org.springframework.web.context.request.RequestContextHolder;
import org.springframework.web.context.request.ServletRequestAttributes;

import javax.servlet.http.HttpServletRequest; // jakarta.servlet.http on Spring Boot 3+
import java.time.Duration;
import java.time.Instant;
import java.util.Date;

@Aspect
@Component
public class LogAspect {
    private Gson gson;
    private KafkaProducer kafkaProducer;

    @Autowired
    public void setProducer(KafkaProducer kafkaProducer) {
        this.kafkaProducer = kafkaProducer;
    }

    public LogAspect() {
        elasticsearch(); // build a Gson instance whose output suits Elasticsearch
    }

    /**
     * Dates must be written to Elasticsearch with an explicit UTC offset, e.g.
     * "yyyy-MM-dd'T'HH:mm:ss+08:00"; otherwise the time shown in Kibana is 8 hours ahead.
     */
    public void elasticsearch() {
        // "XXX" emits the default time zone's offset as +HH:mm or -HH:mm ("Z" for UTC),
        // so negative offsets and daylight saving time are handled as well.
        gson = new GsonBuilder().setDateFormat("yyyy-MM-dd'T'HH:mm:ssXXX")
                .setFieldNamingPolicy(FieldNamingPolicy.LOWER_CASE_WITH_UNDERSCORES)
                .create();
    }

    @Pointcut("execution(public * com.example.kafkademo.controller.*.*(..))")
    public void log() {
    }

    @Around("log()")
    public Object around(ProceedingJoinPoint pjp) {
        Instant startTime = Instant.now();
        ServletRequestAttributes attributes = (ServletRequestAttributes) RequestContextHolder.getRequestAttributes();
        assert attributes != null;
        HttpServletRequest request = attributes.getRequest();

        LogBean logBean = new LogBean();
        logBean.setStartTime(Date.from(startTime));
        logBean.setUrl(request.getRequestURL().toString());
        logBean.setIp(request.getRemoteAddr());
        Object[] args = pjp.getArgs();
        // Only the first argument is logged; guard against methods without parameters
        logBean.setArgs(args.length > 0 ? gson.toJson(args[0]) : "");

        try {
            ApiResult apiResult = (ApiResult) pjp.proceed();
            logBean.setCode(apiResult.getCode());
            logBean.setMsg(apiResult.getMsg());
            logBean.setRunTime(Duration.between(startTime, Instant.now()).toMillis());
            kafkaProducer.send(gson.toJson(logBean));
            return apiResult;
        } catch (Throwable throwable) {
            // Exceptions thrown by the controller itself also end up here
            throwable.printStackTrace();
            return ApiResult.fail("日志切面出错!"); // "logging aspect error"
        }
    }
}
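`GsonBuilder.setDateFormat(String)` takes a `SimpleDateFormat` pattern, and the pattern letters `XXX` produce the `+08:00` style offset that the Javadoc comment in LogAspect asks for. The sketch below, assuming nothing beyond the JDK, shows that pattern with a positive offset and with a negative half-hour offset. The class name `OffsetFormatDemo` is made up, and the sample instant is taken from the `start_time` in the sample JSON of the results section.

```java
import java.text.SimpleDateFormat;
import java.time.Instant;
import java.util.Date;
import java.util.Locale;
import java.util.TimeZone;

public class OffsetFormatDemo {

    // Formats a date as ISO-8601 with a numeric UTC offset in the given zone.
    static String format(Date date, TimeZone zone) {
        SimpleDateFormat fmt = new SimpleDateFormat("yyyy-MM-dd'T'HH:mm:ssXXX", Locale.ROOT);
        fmt.setTimeZone(zone);
        return fmt.format(date);
    }

    public static void main(String[] args) {
        Date date = Date.from(Instant.parse("2021-01-23T12:01:37Z"));

        // prints 2021-01-23T20:01:37+08:00
        System.out.println(format(date, TimeZone.getTimeZone("GMT+08:00")));

        // prints 2021-01-23T08:31:37-03:30
        System.out.println(format(date, TimeZone.getTimeZone("GMT-03:30")));
    }
}
```

Letting the formatter derive the offset avoids assembling the sign, hours, and minutes by hand, which is easy to get wrong for zones west of UTC.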

TestForm.java (request parameter object)

public class TestForm {
    private String fileName;
    private String filePath;

    // getter ... setter ...
}

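TestApi.java

// Must live in this package to match the pointcut in LogAspect
package com.example.kafkademo.controller;

import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("/test")
public class TestApi {

    @RequestMapping("/test")
    public ApiResult test(@RequestBody TestForm testForm) {
        return ApiResult.success(testForm);
    }
}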
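To exercise this endpoint, a client sends a JSON body to `/test/test`. The following sketch builds such a request with the JDK 11+ `java.net.http` API without sending it. The base URL `http://localhost:8080` and the JSON keys `fileName` and `filePath` are assumptions (the keys follow the TestForm field names, which is what Spring MVC's default JSON binding would be expected to use), and `TestRequestDemo` is a hypothetical class name.

```java
import java.net.URI;
import java.net.http.HttpRequest;

public class TestRequestDemo {

    // Builds (but does not send) a POST request carrying a TestForm as JSON.
    static HttpRequest build(String baseUrl, String fileName, String filePath) {
        String json = "{\"fileName\":\"" + fileName + "\",\"filePath\":\"" + filePath + "\"}";
        return HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + "/test/test"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(json))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = build("http://localhost:8080", "testFile", "/usr/bin");
        System.out.println(request.method() + " " + request.uri());
        // To actually send it against a running application:
        // HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString());
    }
}
```

Each such call passes through the aspect, so one request should yield one JSON log record on the Kafka topic.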

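KafkademoApplication.java (application entry point)

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.scheduling.annotation.EnableAsync;

@SpringBootApplication
@EnableAsync // required for @Async on KafkaProducer.send to take effect
public class KafkademoApplication {

    public static void main(String[] args) {
        SpringApplication.run(KafkademoApplication.class, args);
    }
}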

运行结果

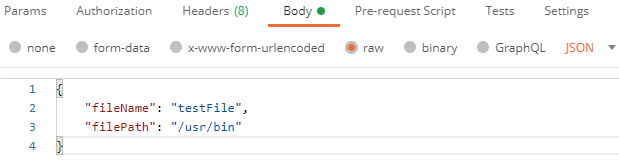

请求参数↓↓↓↓

控制台打印发送给Kafka的JSON↓↓↓↓

{"ip":"172.16.10.10","code":200,"url":"http://172.16.10.10:8080/test/test","args":"{\"file_name\":\"testFile\",\"file_path\":\"/usr/bin\"}","msg":"操作成功","start_time":"2021-01-23T20:01:37+08:00","run_time":0}

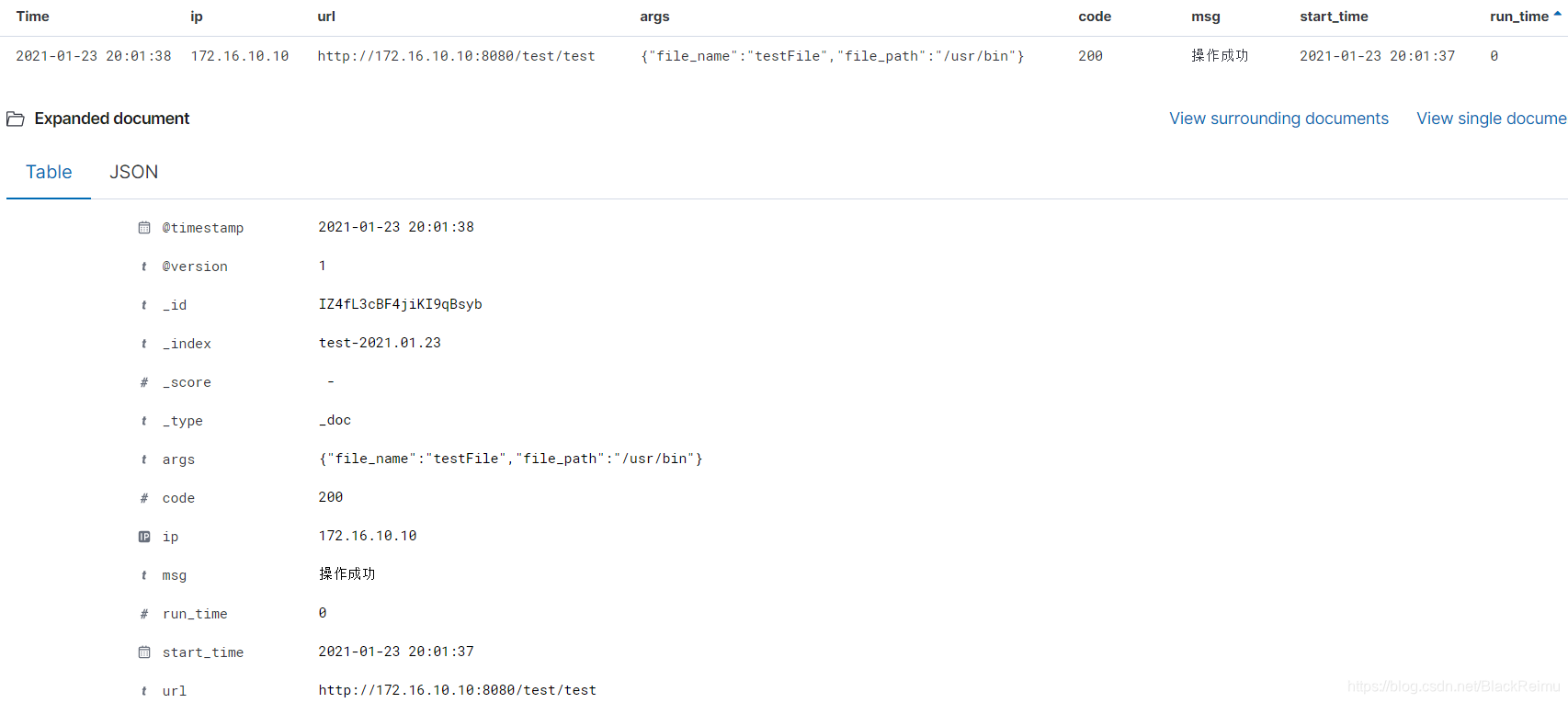

Kibana查询结果↓↓↓↓
