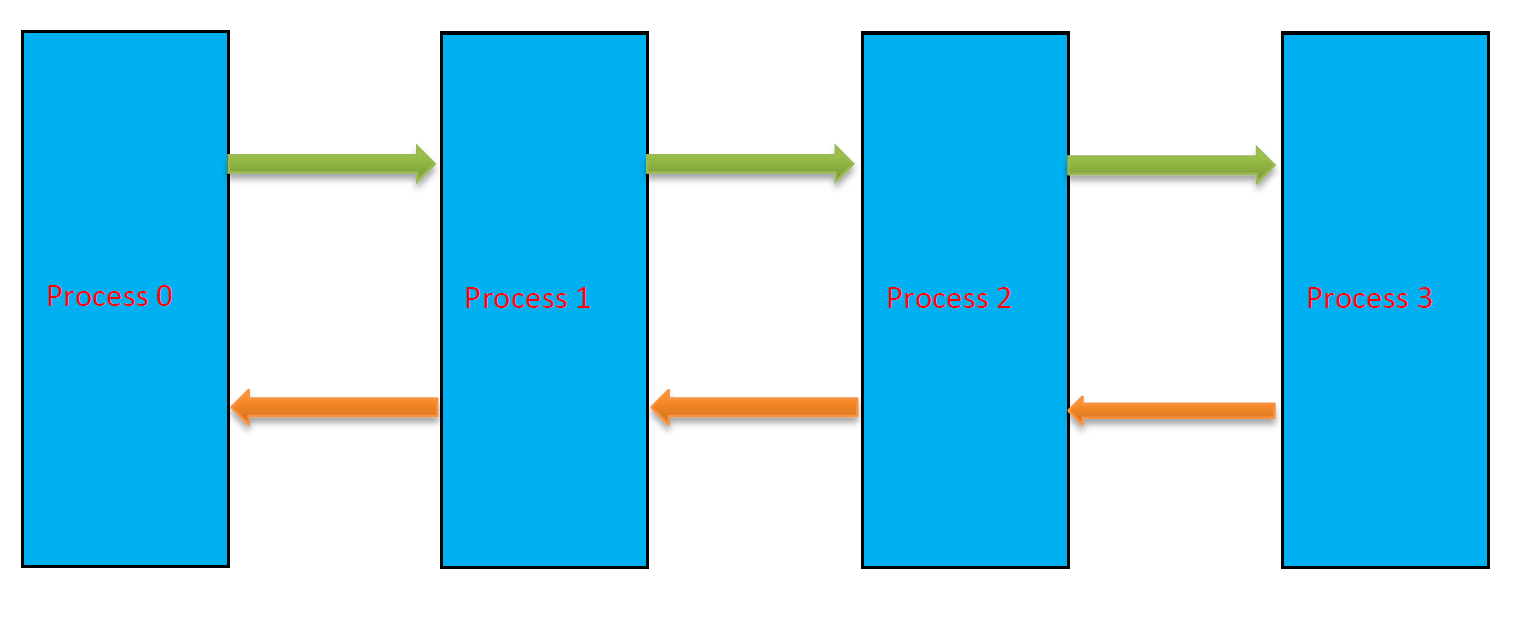

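This example extends Example 1 slightly: after the root process scatters the data, the processes exchange data with one another. Specifically, process i sends a certain number of values to process i+1 and then to process i-1. The processes form a ring, so the right neighbor of the last process is process 0 and the left neighbor of process 0 is the last process. Note that every process must update its local data after each exchange. The exchange is illustrated in the figure below: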
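The wrap-around neighbor rule can be sketched on its own, without MPI. This is a minimal illustration that mirrors the `rightrank` and `leftrank` expressions in the program; the names `Neighbors` and `ringNeighbors` are hypothetical and do not appear in the original code.

```cpp
#include <cassert>

// Left and right neighbor of one rank in a ring of nProcs processes.
struct Neighbors {
    int left;
    int right;
};

// Hypothetical helper: same modulo arithmetic as leftrank/rightrank in the program.
Neighbors ringNeighbors(int rank, int nProcs) {
    assert(nProcs > 0 && rank >= 0 && rank < nProcs);
    Neighbors n;
    n.left = (rank + nProcs - 1) % nProcs;  // adding nProcs keeps the value non-negative
    n.right = (rank + 1) % nProcs;          // the last rank wraps around to rank 0
    return n;
}
```

With 4 processes, `ringNeighbors(3, 4)` yields left neighbor 2 and right neighbor 0, which is why process 3 sends to process 0 in the walkthrough after the program.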

The full program is as follows:

#include "mpi.h"

#include <cstdio>

#include <math.h>

#include <iostream>

#include <vector>

#include <stdlib.h>

#define np 203 //number of elements to be scattered from the root

const int MASTER = 0; // rank of the master process

int main(int argc, char* argv[])

{

std::vector<double> PosX(np);

std::vector<double> RecPosX;

int nProcs , Rank ;//number of processes and the rank (id) of this process

int i,j;

int reminder; //remainder when np is not divisible by nProcs

double StartTime,EndTime; //timing

MPI_Status status;

MPI_Init ( & argc , & argv );

MPI_Comm_size ( MPI_COMM_WORLD , & nProcs );

MPI_Comm_rank ( MPI_COMM_WORLD , & Rank );

StartTime = MPI_Wtime(); //start timing

//declare the count and displacement arrays and the exchange buffers

int* sendcounts = NULL ;

int* displs = NULL ;

int* TotLocalNum;

int* numsendright;

int* numsendleft;

double *sendbufright,*recvbufright;

double *sendbufleft,*recvbufleft;

int rightrank,leftrank;

//Initialize the data

if ( Rank == MASTER )

{

for(i=0;i<np;i++)

{

//PosX.push_back(i);

//PosX.resize(np,-1);

PosX[i] = i * 1.0;

}

}

sendcounts = new int [ nProcs ]; //allocate memory for array storing number of elements

numsendright = new int[ nProcs ];

numsendleft = new int[ nProcs ];

TotLocalNum = new int[ nProcs ]; //store the total number in each process

reminder = np%nProcs; //remainder left over when np is not divisible by nProcs

//calculate the number of elements to be scattered to each process

for(i=0;i<nProcs;i++)

{

sendcounts [i] = np / nProcs; //integer division

}

//the last process also takes the remainder

sendcounts [nProcs-1] = sendcounts [nProcs-1] + reminder;

//calculate corresponding displacement

//std::cout<<sendcounts[Rank]<<std::endl;

displs = new int [ nProcs ];

for(i=0;i<nProcs;i++)

{

displs[i] = 0;

for(j=0;j<i;j++)

{

displs[i] = displs[i] + sendcounts[j];

}

}

//allocate the receive buffer once; MPI_Scatterv overwrites the -100 placeholders

RecPosX.resize(sendcounts[Rank],-100);

MPI_Scatterv(&PosX[0], sendcounts, displs, MPI_DOUBLE,

&RecPosX[0], sendcounts[Rank], MPI_DOUBLE, 0, MPI_COMM_WORLD);

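//print the data received from MPI_Scatterv

std::cout<<"My Rank = "<<Rank<<" And the number of elements I received is: "<<sendcounts[Rank]<<std::endl;

for(i=0;i<sendcounts[Rank];i++)

{

//tag every value with Rank * 10000 so its original owner stays visible after the exchange

RecPosX[i] = RecPosX[i] + Rank * 10000;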

std::cout<<RecPosX[i]<<" ";

if((i+1) %10 == 0)std::cout<<std::endl;

}

std::cout<<std::endl;

std::cout<<"Now Sending Data to the RIGHT neighboring process......"<<std::endl;

//specify how many elements will be sent or received in each process

for(i=0;i<nProcs;i++)

{

numsendright[i] = i + 5;

numsendleft[i] = i + 4;

}

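//Allocate the buffers; each receive buffer must be sized by the sending neighbor's count, not by this rank's own count

sendbufright = (double *)malloc(numsendright[Rank] * sizeof(double));

recvbufright = (double *)malloc(numsendright[(Rank + nProcs - 1) % nProcs] * sizeof(double)); //filled by the left neighbor

sendbufleft = (double *)malloc(numsendleft[Rank] * sizeof(double));

recvbufleft = (double *)malloc(numsendleft[(Rank + 1) % nProcs] * sizeof(double)); //filled by the right neighbor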

//randomly pick the data to send to the right neighbor and remove it from the local array

int id;

for(i=0;i<numsendright[Rank];i++)

{

id = rand() % sendcounts[Rank];

sendbufright[i] = RecPosX[id];

RecPosX.erase(RecPosX.begin()+id);

sendcounts[Rank]=sendcounts[Rank]-1;

}

TotLocalNum[Rank] = sendcounts[Rank];

rightrank = (Rank + 1) % nProcs;

leftrank = (Rank + nProcs-1)%nProcs;

//Each process sends data to its right neighbor and receives data from its left neighbor.

//MPI_Sendrecv pairs the two operations; a blocking MPI_Send issued by every rank before any MPI_Recv may deadlock for large messages.

MPI_Sendrecv(sendbufright,numsendright[Rank],MPI_DOUBLE,rightrank,99,

recvbufright,numsendright[leftrank],MPI_DOUBLE,leftrank,99,MPI_COMM_WORLD,&status);

std::cout<<"Rank= "<<Rank<<" SEND "<<numsendright[Rank]<<" Data To Rank= "<<rightrank<<std::endl;

for(i=0;i<numsendright[Rank];i++)

{

std::cout<<i<<'\t'<<sendbufright[i]<<std::endl;

}

sendcounts[Rank] = sendcounts[Rank] + numsendright[leftrank];

TotLocalNum[Rank] = TotLocalNum[Rank] + numsendright[leftrank];

std::cout<<std::endl;

std::cout<<"Rank= "<<Rank<<" RECEIVE "<<numsendright[leftrank]<<" Data From Rank= "<<leftrank<<std::endl;

for(i=0;i<numsendright[leftrank];i++)

{

std::cout<<i<<'\t'<<recvbufright[i]<<std::endl;

}

//insert the received data here

for(i=0;i<numsendright[leftrank];i++)

{

RecPosX.insert(RecPosX.end(),recvbufright[i]);

}

//MPI_Barrier(MPI_COMM_WORLD);

std::cout<<std::endl;

std::cout<<"Total number in Rank= "<<Rank<<" is "<<TotLocalNum[Rank]<<std::endl;

for(i=0;i<TotLocalNum[Rank];i++)

{

std::cout<<RecPosX[i]<<" ";

if((i+1) %10 == 0)std::cout<<std::endl;

}

//Start sending data to the left neighbor

//Step 1: prepare the data to be sent to the left neighboring process

//by randomly picking elements and removing them from the local array

std::cout<<std::endl;

std::cout<<"Now Sending Data to the LEFT neighboring process......"<<std::endl;

for(i=0;i<numsendleft[Rank];i++)

{

id = rand() % sendcounts[Rank];

sendbufleft[i] = RecPosX[id];

RecPosX.erase(RecPosX.begin()+id);

sendcounts[Rank]=sendcounts[Rank]-1;

}

TotLocalNum[Rank] = sendcounts[Rank]; //Updating local number of data

//Step 2: each process sends data to its left neighbor and receives data from its right neighbor.

//MPI_Sendrecv pairs the two operations; a blocking MPI_Send issued by every rank before any MPI_Recv may deadlock for large messages.

MPI_Sendrecv(sendbufleft,numsendleft[Rank],MPI_DOUBLE,leftrank,199,

recvbufleft,numsendleft[rightrank],MPI_DOUBLE,rightrank,199,MPI_COMM_WORLD,&status);

std::cout<<"Rank= "<<Rank<<" SEND "<<numsendleft[Rank]<<" Data To Rank= "<<leftrank<<std::endl;

for(i=0;i<numsendleft[Rank];i++)

{

std::cout<<i<<'\t'<<sendbufleft[i]<<std::endl;

}

sendcounts[Rank] = sendcounts[Rank] + numsendleft[rightrank];

TotLocalNum[Rank] = TotLocalNum[Rank] + numsendleft[rightrank];

std::cout<<std::endl;

std::cout<<"Rank= "<<Rank<<" RECEIVE "<<numsendleft[rightrank]<<" Data From Rank= "<<rightrank<<std::endl;

for(i=0;i<numsendleft[rightrank];i++)

{

std::cout<<i<<'\t'<<recvbufleft[i]<<std::endl;

}

//insert the received data here

for(i=0;i<numsendleft[rightrank];i++)

{

RecPosX.insert(RecPosX.end(),recvbufleft[i]);

}

std::cout<<std::endl;

std::cout<<"Total number in Rank= "<<Rank<<" is "<<TotLocalNum[Rank]<<std::endl;

for(i=0;i<TotLocalNum[Rank];i++)

{

std::cout<<RecPosX[i]<<" ";

if((i+1)%10 == 0)std::cout<<std::endl;

}

EndTime = MPI_Wtime();

std::cout<<std::endl;

if(Rank==MASTER)

{

std::cout<<std::endl;

std::cout<<"Total Time Spending For: "<<nProcs<<" Is "<<EndTime-StartTime<<" :Second"<<std::endl;

}

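//release the exchange buffers and the bookkeeping arrays

free(sendbufright);

free(recvbufright);

free(sendbufleft);

free(recvbufleft);

delete [] sendcounts;

delete [] displs;

delete [] numsendright;

delete [] numsendleft;

delete [] TotLocalNum;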

MPI_Finalize();

return 0;

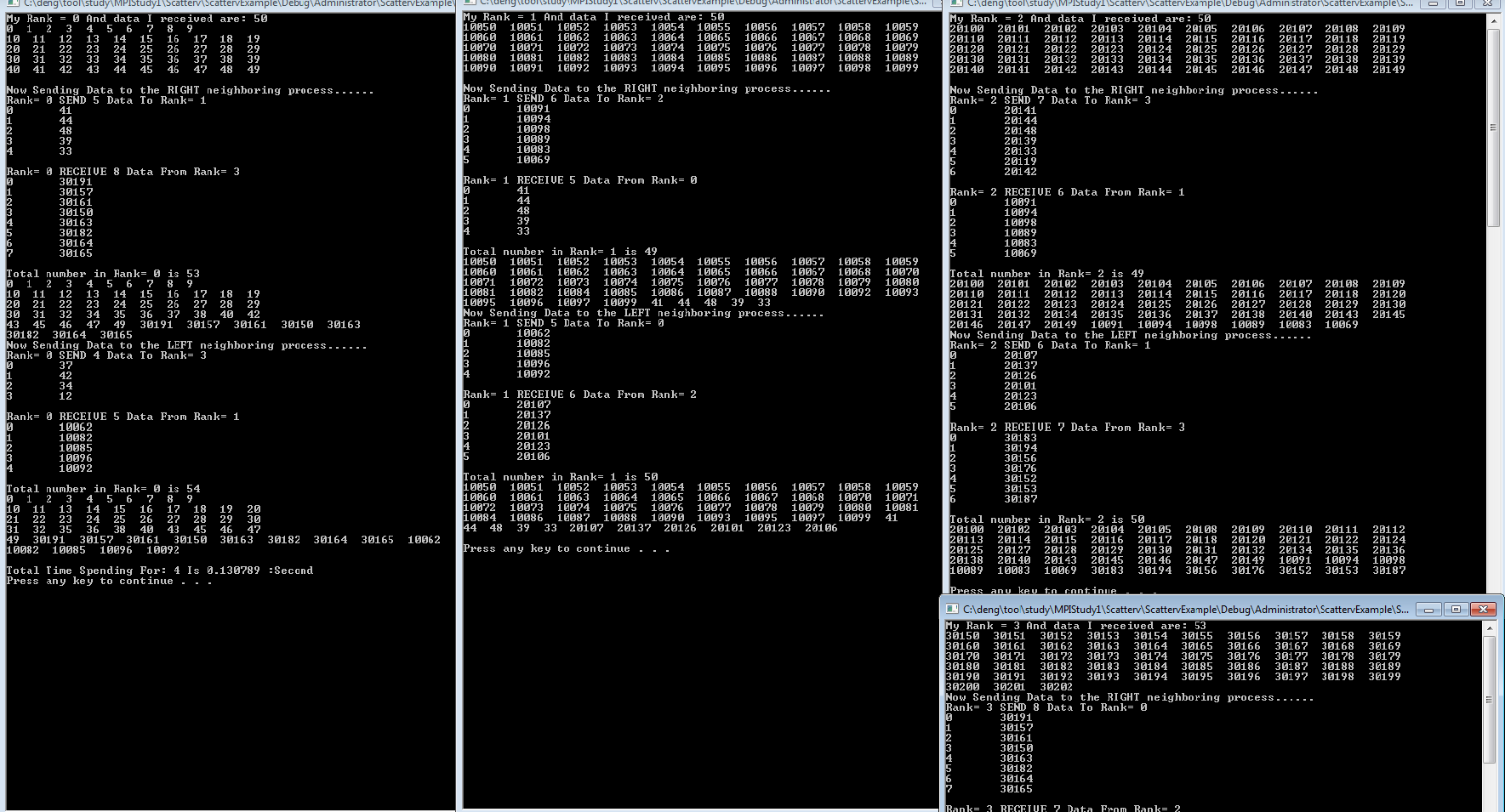

}设初始有203个数据,总共4个进程,以进程3为例,开始进程3得到53个数据:

30150 ... 30159

30160 ... 30169

30170 ... 30179

30180 ... 30189

30190 ... 30199

30200 30201 30202
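The 53 values on process 3 follow from the block partition the program builds before calling `MPI_Scatterv`. Below is a minimal sketch of that calculation without MPI; the names `Partition` and `makePartition` are hypothetical, and the displacements are computed with a running sum instead of the nested loop in the program.

```cpp
#include <cassert>
#include <vector>

// Per-rank element counts and starting offsets for a block partition.
struct Partition {
    std::vector<int> counts;  // number of elements sent to each rank
    std::vector<int> displs;  // offset of each rank's block in the root array
};

// Hypothetical helper: every rank gets total / nProcs elements and
// the last rank also takes the remainder, as in the program.
Partition makePartition(int total, int nProcs) {
    assert(total >= 0 && nProcs > 0);
    Partition p;
    p.counts.assign(nProcs, total / nProcs);
    p.counts[nProcs - 1] += total % nProcs;
    p.displs.assign(nProcs, 0);
    for (int r = 1; r < nProcs; ++r) {
        p.displs[r] = p.displs[r - 1] + p.counts[r - 1];  // running prefix sum
    }
    return p;
}
```

For `makePartition(203, 4)` the counts are 50, 50, 50, 53 and the displacements are 0, 50, 100, 150. Process 3 therefore receives the original values 150 to 202, which become 30150 to 30202 once the program adds `Rank * 10000`.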

Process 3 then sends 8 values to process 0. The 8 randomly drawn values are:

Value 0: 30191

Value 1: 30157

Value 2: 30161

Value 3: 30150

Value 4: 30163

Value 5: 30182

Value 6: 30164

Value 7: 30165
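The random draw removes each picked value from the local array so that it cannot be sent twice. Here is a minimal sketch of that step in isolation. The function name `drawWithoutReplacement` is hypothetical, and `std::mt19937` is swapped in for the program's `rand()` only to make the sketch reproducible with a fixed seed.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical helper: remove k randomly chosen elements from `local`
// and return them in the order they were drawn.
std::vector<double> drawWithoutReplacement(std::vector<double>& local,
                                           std::size_t k,
                                           std::mt19937& rng) {
    assert(k <= local.size());
    std::vector<double> picked;
    picked.reserve(k);
    for (std::size_t n = 0; n < k; ++n) {
        // The valid index range shrinks by one after every erase.
        std::uniform_int_distribution<std::size_t> dist(0, local.size() - 1);
        std::size_t id = dist(rng);
        picked.push_back(local[id]);
        // erase keeps the remaining elements in order, as in the program.
        local.erase(local.begin() + static_cast<std::ptrdiff_t>(id));
    }
    return picked;
}
```

Drawing 8 values from the 53 held by process 3 leaves 45 local values, and none of the drawn values remains in the local array.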

At the same time, process 3 receives 7 values from process 2:

Value 0: 20141

Value 1: 20144

Value 2: 20148

Value 3: 20139

Value 4: 20133

Value 5: 20119

Value 6: 20142

After this, process 3 holds 52 values (53 - 8 + 7):

30151 ... 30162

30166 ... 30175

30176 ... 30186

30187 ... 30197

30198 ... 20133

20119 20142

Next, every process sends data to process i-1. Process 3 sends 7 values to process 2:

Value 0: 30183

Value 1: 30194

...

Value 6: 30187

Process 3 then receives 4 values from process 0:

Value 0: 37

Value 1: 42

Value 2: 34

Value 3: 12

In the end, process 3 holds 49 values (52 - 7 + 4):

30151 ... 30168

30169 ... 30179

30180 ... 30193

30195 ... 20144

20148 20139 ... 37 42 34 12

Execution is complete.
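The counts quoted in this walkthrough can be checked without running MPI. The sketch below assumes the rules used in the program: rank r sends r + 5 values to its right neighbor and r + 4 values to its left neighbor. The function name `countsAfterExchange` is hypothetical.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper: predict how many elements every rank holds
// after the right shift and the left shift around the ring.
std::vector<int> countsAfterExchange(int total, int nProcs) {
    assert(total >= 0 && nProcs > 0);
    // Initial block partition: the last rank also takes the remainder.
    std::vector<int> counts(nProcs, total / nProcs);
    counts[nProcs - 1] += total % nProcs;

    std::vector<int> after(nProcs);
    for (int r = 0; r < nProcs; ++r) {
        int right = (r + 1) % nProcs;
        int left = (r + nProcs - 1) % nProcs;
        int c = counts[r];
        c += (left + 5) - (r + 5);   // right shift: receive from left, send to right
        c += (right + 4) - (r + 4);  // left shift: receive from right, send to left
        after[r] = c;
    }
    return after;
}
```

For 203 values on 4 processes this gives 54, 50, 50 and 49 values on ranks 0 to 3. The total is still 203, and rank 3 ends with the 49 values listed above.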

The output is shown in the figure:
