Required libraries:

cv2, numpy, onnxruntime
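
Before running the scripts, it can help to confirm that the three modules are importable. The snippet below is a minimal sketch that uses only the standard library; the helper name `check_requirements` is made up for this example, and the pip package names in the last comment are the usual PyPI names rather than something stated in this post.

```python
from importlib.util import find_spec

# Module names imported by the scripts in this post.
REQUIRED = ("cv2", "numpy", "onnxruntime")


def check_requirements(modules=REQUIRED):
    """Return {module_name: bool}, True when the module can be located."""
    return {name: find_spec(name) is not None for name in modules}


if __name__ == "__main__":
    for name, ok in check_requirements().items():
        print(f"{name}: {'ok' if ok else 'missing'}")
    # Commonly installed with: pip install opencv-python numpy onnxruntime
```

The check only looks the modules up, so it does not load OpenCV or ONNX Runtime.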

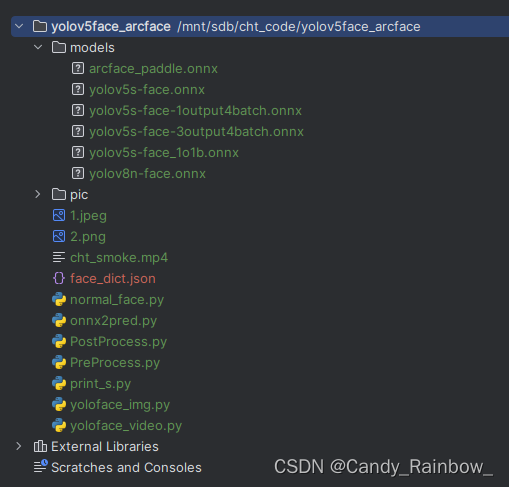

File directory

Image detection, yoloface_img.py:

    import cv2
    import numpy as np

    from PreProcess import PreProcess
    from onnx2pred import V5onnx2pred
    from PostProcess import PostProcess

    im = cv2.imread('1.jpeg')
    if im is None:
        raise SystemExit('Could not read 1.jpeg')

    # img is the network input, shape (1, 3, 640, 640);
    # r is the resize ratio, dw and dh are the letterbox paddings per side
    img, r, dw, dh = PreProcess().process(im)
    pred = np.squeeze(V5onnx2pred(p='models/yolov5s-face_1o1b.onnx').predict(img))
    pro = PostProcess(conf_thresh=0.3, iou_thresh=0.5)
    keep, boxes, pred_m = pro.final(pred)

    for i in keep:
        # Map the box from the letterboxed image back to the original image
        x1, y1, x2, y2 = boxes[i][:4]
        x1 = pro.after2before(x1, dw, r)
        x2 = pro.after2before(x2, dw, r)
        y1 = pro.after2before(y1, dh, r)
        y2 = pro.after2before(y2, dh, r)

        # eye_left
        elx, ely = pred_m[i][5], pred_m[i][6]
        elx = pro.after2before(elx, dw, r)
        ely = pro.after2before(ely, dh, r)

        # eye_right
        erx, ery = pred_m[i][7], pred_m[i][8]
        erx = pro.after2before(erx, dw, r)
        ery = pro.after2before(ery, dh, r)

        # nose
        nx, ny = pred_m[i][9], pred_m[i][10]
        nx = pro.after2before(nx, dw, r)
        ny = pro.after2before(ny, dh, r)

        # mouth_left
        mlx, mly = pred_m[i][11], pred_m[i][12]
        mlx = pro.after2before(mlx, dw, r)
        mly = pro.after2before(mly, dh, r)

        # mouth_right
        mrx, mry = pred_m[i][13], pred_m[i][14]
        mrx = pro.after2before(mrx, dw, r)
        mry = pro.after2before(mry, dh, r)

        # Draw the face box and the five landmarks
        cv2.rectangle(im, (x1, y1), (x2, y2), (255, 192, 203), 2)
        cv2.circle(im, (elx, ely), 3, (255, 255, 0), -1)
        cv2.circle(im, (erx, ery), 3, (255, 0, 0), -1)
        cv2.circle(im, (nx, ny), 3, (0, 255, 0), -1)
        cv2.circle(im, (mlx, mly), 3, (0, 255, 255), -1)
        cv2.circle(im, (mrx, mry), 3, (0, 0, 255), -1)

    cv2.imshow('res', im)
    cv2.waitKey(0)
    cv2.destroyAllWindows()
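
Every coordinate in the script goes through `after2before`, which undoes the letterbox step: subtract the padding, then divide by the resize ratio. Here is a small worked example of that arithmetic, assuming a 1280x720 (width x height) input image; the image size and the sample point are invented for illustration.

```python
def after2before(x, d, r):
    """Undo letterboxing for one coordinate: remove padding d, divide by ratio r."""
    return int((x - d) / r)


# Assumed example: a 1280x720 image letterboxed into 640x640.
r = 640 / 1280               # 0.5, the width is the limiting side
dw = (640 - 1280 * r) / 2    # 0.0, no horizontal padding
dh = (640 - 720 * r) / 2     # 140.0, padding above and below

# The centre of the letterboxed image maps back to the centre of the original.
x, y = 320, 320
orig = (after2before(x, dw, r), after2before(y, dh, r))
print(orig)  # (640, 360)
```

Note that x coordinates use `dw` and y coordinates use `dh`, exactly as in the script above.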

Video detection, yoloface_video.py:

    import cv2
    import numpy as np

    from PreProcess import PreProcess
    from onnx2pred import V5onnx2pred
    from PostProcess import PostProcess

    # Open the video file
    cap = cv2.VideoCapture('cht_smoke.mp4')
    if not cap.isOpened():
        raise SystemExit('Could not read the video file')

    pre = PreProcess()
    v5 = V5onnx2pred(p='models/yolov5s-face_1o1b.onnx')
    post = PostProcess(conf_thresh=0.3, iou_thresh=0.5)

    # Process the video frame by frame
    while True:
        # Read the next frame
        ret, frame = cap.read()
        if not ret:
            break  # end of video, or the frame could not be read

        # Preprocess the frame and run inference
        img, r, dw, dh = pre.process(frame)
        pred = np.squeeze(v5.predict(img))

        # Post-process the predictions
        keep, boxes, pred_m = post.final(pred)

        # Draw the results on the frame
        for i in keep:
            x1, y1, x2, y2 = boxes[i][:4]
            x1 = post.after2before(x1, dw, r)
            x2 = post.after2before(x2, dw, r)
            y1 = post.after2before(y1, dh, r)
            y2 = post.after2before(y2, dh, r)

            # eye_left
            elx, ely = pred_m[i][5], pred_m[i][6]
            elx = post.after2before(elx, dw, r)
            ely = post.after2before(ely, dh, r)

            # eye_right
            erx, ery = pred_m[i][7], pred_m[i][8]
            erx = post.after2before(erx, dw, r)
            ery = post.after2before(ery, dh, r)

            # nose
            nx, ny = pred_m[i][9], pred_m[i][10]
            nx = post.after2before(nx, dw, r)
            ny = post.after2before(ny, dh, r)

            # mouth_left
            mlx, mly = pred_m[i][11], pred_m[i][12]
            mlx = post.after2before(mlx, dw, r)
            mly = post.after2before(mly, dh, r)

            # mouth_right
            mrx, mry = pred_m[i][13], pred_m[i][14]
            mrx = post.after2before(mrx, dw, r)
            mry = post.after2before(mry, dh, r)

            # Draw the face box and the five landmarks
            cv2.rectangle(frame, (x1, y1), (x2, y2), (255, 192, 203), 2)
            cv2.circle(frame, (elx, ely), 3, (255, 255, 0), -1)
            cv2.circle(frame, (erx, ery), 3, (255, 0, 0), -1)
            cv2.circle(frame, (nx, ny), 3, (0, 255, 0), -1)
            cv2.circle(frame, (mlx, mly), 3, (0, 255, 255), -1)
            cv2.circle(frame, (mrx, mry), 3, (0, 0, 255), -1)

        # Show the frame
        cv2.imshow('Video Frame', frame)

        # Press 'q' to quit
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    # Release the video and close the window
    cap.release()
    cv2.destroyAllWindows()

Preprocessing file PreProcess.py

    # w = width, h = height, p = path, s = scale
    # Output shape: (1, 3, 640, 640)
    import cv2
    import numpy as np


    def letterbox(img, new_shape=(640, 640), color=(114, 114, 114), auto=True, scaleFill=False):
        shape = img.shape[:2]  # current shape (height, width)
        if isinstance(new_shape, int):
            new_shape = (new_shape, new_shape)
        r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])
        ratio = r, r  # (width ratio, height ratio)
        new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))  # (width, height)
        dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1]
        if auto:
            # Minimal padding: keep only the remainder modulo 64
            dw, dh = np.mod(dw, 64), np.mod(dh, 64)
        elif scaleFill:
            # Stretch to the target size, no padding
            dw, dh = 0.0, 0.0
            new_unpad = (new_shape[1], new_shape[0])
            ratio = new_shape[1] / shape[1], new_shape[0] / shape[0]
        dw /= 2  # split the padding between the two sides
        dh /= 2
        if shape[::-1] != new_unpad:
            img = cv2.resize(img, new_unpad, interpolation=cv2.INTER_LINEAR)
        top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
        left, right = int(round(dw - 0.1)), int(round(dw + 0.1))
        img = cv2.copyMakeBorder(img, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color)
        return img, ratio, (dw, dh)


    class PreProcess:
        def __init__(self, w=640, h=640, b=1):  # width, height, batch
            self.w = w
            self.h = h
            self.b = b

        def letterbox(self, img):
            im = np.copy(img)
            shape = im.shape[:2]  # current shape (height, width)
            new_shape = (self.h, self.w)  # target shape (height, width)
            r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])
            new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))  # (width, height)
            dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1]  # wh padding
            dw /= 2  # split the padding between the two sides
            dh /= 2
            if shape[::-1] != new_unpad:
                im = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)
            top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
            left, right = int(round(dw - 0.1)), int(round(dw + 0.1))
            im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=(128, 128, 128))
            return im, r, dw, dh

        def img2input(self, img):
            # HWC -> CHW; the channel order stays as loaded by OpenCV (BGR)
            img = np.transpose(img, (2, 0, 1))
            img = img / 255  # scale pixel values to [0, 1]
            return np.expand_dims(img, axis=0).astype(np.float32)  # add the batch dimension

        def process(self, im):
            # im is an image array such as the one returned by cv2.imread
            img, r, dw, dh = self.letterbox(im)
            img = self.img2input(img)
            return img, r, dw, dh
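
To make the numbers inside `PreProcess.letterbox` easier to follow, the sketch below repeats only its arithmetic in plain Python, without touching any pixels. The function name `letterbox_params` and the two example image sizes are assumptions chosen for illustration.

```python
def letterbox_params(height, width, target_h=640, target_w=640):
    """Return the ratio, resized size, per-side padding and integer borders."""
    r = min(target_h / height, target_w / width)
    new_w, new_h = int(round(width * r)), int(round(height * r))
    dw = (target_w - new_w) / 2
    dh = (target_h - new_h) / 2
    # The -0.1 / +0.1 nudges split an odd total padding as n and n + 1.
    top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
    left, right = int(round(dw - 0.1)), int(round(dw + 0.1))
    return r, (new_w, new_h), (dw, dh), (top, bottom, left, right)


# 1280x720 image: shrink by 0.5, then pad 140 rows above and below.
print(letterbox_params(720, 1280))
# (0.5, (640, 360), (0.0, 140.0), (140, 140, 0, 0))

# 640x481 image: no resize, odd padding of 159 rows split as 79 + 80.
print(letterbox_params(481, 640))
# (1.0, (640, 481), (0.0, 79.5), (79, 80, 0, 0))
```

The second case shows why `dh` can be fractional while the borders passed to `cv2.copyMakeBorder` stay integers that still add up to the full padding.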

Inference file onnx2pred.py

    import onnxruntime


    class V5onnx2pred:
        def __init__(self, p):
            self.p = p  # path to the ONNX model
            # Create the session once so that repeated predict() calls reuse it
            self.session = onnxruntime.InferenceSession(self.p)
            self.input_name = self.session.get_inputs()[0].name
            self.label_name = self.session.get_outputs()[0].name

        def predict(self, img):
            pred = self.session.run([self.label_name], {self.input_name: img})[0]
            return pred


    class V8onnx2pred:
        def __init__(self, p):
            self.p = p  # path to the ONNX model
            self.session = onnxruntime.InferenceSession(self.p)
            self.input_name = self.session.get_inputs()[0].name
            self.label_name0 = self.session.get_outputs()[0].name

        def predict(self, img):
            pred0 = self.session.run([self.label_name0], {self.input_name: img})[0]
            return pred0

The v8 class is not finished yet; it will be updated later.
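
The detection scripts read each prediction row by position: columns 0 to 3 are the box as centre x, centre y, width, height; column 4 is the confidence score; columns 5 to 14 are the five landmark (x, y) pairs. The sketch below only names those columns. The helper `split_row` and the sample values are invented for this example, and the remark about a 16th column is an assumption about this model's output, not something the scripts rely on.

```python
LANDMARK_NAMES = ("eye_left", "eye_right", "nose", "mouth_left", "mouth_right")


def split_row(row):
    """Name the columns of one prediction row, as the scripts index them."""
    cx, cy, w, h = row[0:4]
    landmarks = {
        name: (row[5 + 2 * k], row[6 + 2 * k])
        for k, name in enumerate(LANDMARK_NAMES)
    }
    return {"box_xywh": (cx, cy, w, h), "score": row[4], "landmarks": landmarks}


# Made-up row; the last value stands in for an assumed class-score column.
row = [320, 300, 100, 120, 0.9,
       300, 280, 340, 280, 320, 300, 305, 330, 335, 330,
       0.99]
parts = split_row(row)
print(parts["landmarks"]["nose"])  # (320, 300)
```

All of these values are still in letterboxed 640x640 coordinates and need `after2before` before drawing.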

Post-processing file PostProcess.py

    import numpy as np


    class PostProcess:
        def __init__(self, conf_thresh=0.3, iou_thresh=0.5):
            self.c = conf_thresh
            self.i = iou_thresh

        def xywh2xyxy(self, boxes):
            # Convert (centre x, centre y, w, h) to top-left and bottom-right (x1, y1, x2, y2)
            xyxy = boxes[:, :4].copy()
            xyxy[:, 0] = boxes[:, 0] - boxes[:, 2] / 2
            xyxy[:, 1] = boxes[:, 1] - boxes[:, 3] / 2
            xyxy[:, 2] = boxes[:, 0] + boxes[:, 2] / 2
            xyxy[:, 3] = boxes[:, 1] + boxes[:, 3] / 2
            return xyxy

        def box_area(self, boxes):
            return (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])

        def box_iou(self, box1, box2):
            area1 = self.box_area(box1)  # [N]
            area2 = self.box_area(box2)  # [M]
            # Broadcasting: shapes are compared from the last dimension backwards;
            # each pair of sizes must be equal, or one of them must be 1
            lt = np.maximum(box1[:, np.newaxis, :2], box2[:, :2])
            rb = np.minimum(box1[:, np.newaxis, 2:], box2[:, 2:])
            wh = rb - lt
            wh = np.maximum(0, wh)  # [N, M, 2]
            inter = wh[:, :, 0] * wh[:, :, 1]
            iou = inter / (area1[:, np.newaxis] + area2 - inter)
            return iou

        def normalpred(self, pred):
            pred = np.squeeze(pred)
            scores = pred[:, 4]
            mask = scores > self.c  # confidence filter
            pred_m = pred[mask]
            boxes = self.xywh2xyxy(pred_m)
            scores = scores[mask]
            return boxes, scores, pred_m

        def numpy_nms(self, boxes, scores):
            idxs = scores.argsort()  # indices in ascending score order, best last [N]
            keep = []
            while idxs.size > 0:  # while candidates remain
                max_score_index = idxs[-1]
                max_score_box = boxes[max_score_index][None, :]
                keep.append(max_score_index)
                if idxs.size == 1:
                    break
                # Drop the best box from the candidates, then compare it with the rest
                idxs = idxs[:-1]
                other_boxes = boxes[idxs]  # [?, 4]
                ious = self.box_iou(max_score_box, other_boxes)  # one box against the rest, 1 x M
                idxs = idxs[ious[0] <= self.i]  # keep only boxes that overlap little
            return keep

        def final(self, pred):
            boxes, scores, pred_m = self.normalpred(pred)
            keep = self.numpy_nms(boxes, scores)
            return keep, boxes, pred_m

        def after2before(self, x, d, r):
            # Map a letterboxed coordinate back to the original image
            return int((x - d) / r)
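
The NMS loop in `numpy_nms` can be checked by hand on a few boxes. The following is a plain-Python sketch of the same idea (keep the best box, drop every remaining box whose IoU with it exceeds the threshold, repeat); it is a simplified stand-in for the NumPy version above, and the three boxes and scores are made up.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def nms(boxes, scores, iou_thresh=0.5):
    """Return the indices of the kept boxes, best score first."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thresh]
    return keep


boxes = [(100, 100, 200, 200), (110, 110, 210, 210), (300, 300, 400, 400)]
scores = [0.9, 0.8, 0.7]
# Boxes 0 and 1 overlap with IoU 8100 / 11900, about 0.68, so box 1 is dropped.
print(nms(boxes, scores))  # [0, 2]
```

Raising `iou_thresh` above 0.68 keeps all three boxes, which is a quick way to see how the threshold passed to `PostProcess` affects duplicate detections.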
