Introduction

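KubeKey v2.1.0 introduced two concepts, the manifest and the artifact, which give users a way to deploy Kubernetes clusters offline.

A manifest is a text file that describes the current Kubernetes cluster and defines what the artifact should contain. With KubeKey, you define in the manifest everything the offline cluster environment needs. You then export an artifact file from that manifest, and the preparation is done.

For the offline deployment itself, KubeKey and the artifact are all you need to deploy an image registry and a Kubernetes cluster quickly and easily.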

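Previous articles:

01. Pre-configuring production-ready Kubernetes and KubeSphere clusters on Linux with KubeKey

02. Installing and configuring persistent storage (NFS) for Kubernetes and KubeSphere clusters and dynamically provisioning PVs for pods through a StatefulSet

03. Installing Harbor with KubeSphere and configuring Docker for it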

Versions

| Name | Version |
|---|---|
| openEuler | 23.03 |
| CPU architecture | arm64 |
| Kubernetes | 1.23.15 |
| KubeSphere | 3.4.0 |
| Harbor | 2.5.3 |

Host allocation

| Hostname | IP | Roles | Container runtime | Runtime version |
|---|---|---|---|---|
| master01 | 192.168.8.155 | control plane, etcd, worker | docker | 20.10.8 |
| master02 | 192.168.8.156 | control plane, etcd, worker | docker | 20.10.8 |
| node01 | 192.168.8.157 | etcd, worker | docker | 20.10.8 |
| Internet-connected host | 192.168.0.8 | Downloads the offline resources | docker | 20.10.8 |

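1. Preparing the offline resources

1.1 Base dependencies

1.1.1 Dependencies required by Kubernetes

Node requirements

All nodes must be reachable over SSH.

All nodes must have synchronized clocks.

sudo, curl, openssl and tar must be available on all nodes.

nfs-utils and ipvsadm must be installed on all nodes.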

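| Dependency | Kubernetes ≥ 1.18 | Kubernetes < 1.18 |
|---|---|---|
| socat | Required | Optional but recommended |
| conntrack | Required | Optional but recommended |
| ebtables | Optional but recommended | Optional but recommended |
| ipset | Optional but recommended | Optional but recommended |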
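Before going offline, it helps to know exactly which of these tools a node still lacks. The function below is a minimal sketch: `missing_deps` and `REQUIRED_CMDS` are hypothetical names. It only checks whether each command is on the PATH. It also assumes that nfs-utils can be detected through its `mount.nfs` helper and that the check runs as root, so that `/usr/sbin` is on the PATH.

```shell
# Print every command from the argument list that is not found on PATH.
# Returns 0 when nothing is missing, 1 otherwise.
missing_deps() {
    local cmd missing=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "$cmd"
            missing=1
        fi
    done
    return "$missing"
}

# Commands corresponding to the requirements above
# (mount.nfs is assumed to come from nfs-utils).
REQUIRED_CMDS="sudo curl openssl tar socat conntrack ebtables ipset ipvsadm mount.nfs"
```

Usage: `missing_deps $REQUIRED_CMDS || echo "install the packages listed above first"`. The variable is left unquoted on purpose so that it splits into separate arguments.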

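1.1.2 Downloading the dependencies for offline use

openEuler 23.03 already ships with most of these dependencies, so only the missing ones were downloaded here:

nfs-utils / ipvsadm / socat / conntrack

For other operating systems, check the KubeKey GitHub releases for an officially packaged ISO of dependencies.

If there is none, download the dependencies yourself as described below.

GitHub: https://github.com/kubesphere/kubekey/releases/tag/v3.0.10

(Screenshot: part of the ISO list on the release page)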

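Log in to the internet-connected host.

Download a package and its dependencies with:

```shell
yum install --downloadonly --downloaddir=/tmp <package-name>
```

The example below downloads conntrack. The output was captured on a CentOS 7 x86_64 host, which is why the packages are `el7.x86_64` builds.

For this cluster, run the download on a host with the same OS release and CPU architecture as the target nodes (openEuler 23.03, aarch64). Packages built for another release or architecture will not install on those nodes.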

```text
[root@localhost ks]# yum install --downloadonly --downloaddir=/home/ks/conntrack conntrack
Loaded plugins: fastestmirror
Loading mirror speeds from cached hostfile
 * base: mirrors.aliyun.com
 * extras: mirrors.aliyun.com
 * updates: mirrors.ustc.edu.cn
Resolving Dependencies
--> Running transaction check
---> Package conntrack-tools.x86_64 0:1.4.4-7.el7 will be installed
--> Processing Dependency: libnetfilter_cttimeout.so.1(LIBNETFILTER_CTTIMEOUT_1.1)(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64
--> Processing Dependency: libnetfilter_cttimeout.so.1(LIBNETFILTER_CTTIMEOUT_1.0)(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64
--> Processing Dependency: libnetfilter_cthelper.so.0(LIBNETFILTER_CTHELPER_1.0)(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64
--> Processing Dependency: libnetfilter_queue.so.1()(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64
--> Processing Dependency: libnetfilter_cttimeout.so.1()(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64
--> Processing Dependency: libnetfilter_cthelper.so.0()(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64
--> Running transaction check
---> Package libnetfilter_cthelper.x86_64 0:1.0.0-11.el7 will be installed
---> Package libnetfilter_cttimeout.x86_64 0:1.0.0-7.el7 will be installed
---> Package libnetfilter_queue.x86_64 0:1.0.2-2.el7_2 will be installed
--> Finished Dependency Resolution

Dependencies Resolved

==================================================================================================================================
 Package                     Arch        Version              Repository      Size
==================================================================================================================================
Installing:
 conntrack-tools             x86_64      1.4.4-7.el7          base           187 k
Installing for dependencies:
 libnetfilter_cthelper       x86_64      1.0.0-11.el7         base            18 k
 libnetfilter_cttimeout      x86_64      1.0.0-7.el7          base            18 k
 libnetfilter_queue          x86_64      1.0.2-2.el7_2        base            23 k

Transaction Summary
==================================================================================================================================
Install  1 Package (+3 Dependent packages)

Total download size: 245 k
Installed size: 668 k
Background downloading packages, then exiting:
exiting because "Download Only" specified
[root@localhost ks]# ls
conntrack
[root@localhost ks]# cd conntrack
[root@localhost conntrack]# ls
conntrack-tools-1.4.4-7.el7.x86_64.rpm         libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm
libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm  libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm
[root@localhost conntrack]#
```

As shown, the lib packages that conntrack depends on were downloaded along with it.

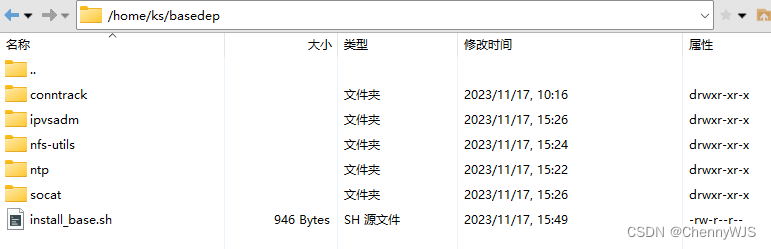

All downloaded dependencies:

```text
[root@localhost ks]# ls
conntrack  ipvsadm  nfs-utils  socat
[root@localhost ks]#
```
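The four folders above can also be produced in one pass with a loop. This is a minimal sketch that assumes yum is available on the download host and that the package names used in this article resolve there. `download_deps` and the `DRY_RUN` switch are hypothetical.

Note that `--downloadonly` only fetches packages that are not already installed on the download host. A minimal host of the same release and architecture as the target nodes therefore yields the most complete set.

```shell
# Download each package (plus any dependencies missing on this host) into
# its own folder under the destination directory.
# Set DRY_RUN=1 to only print the yum commands.
download_deps() {
    local dest="${1:-/home/ks}" pkg
    for pkg in conntrack ipvsadm socat nfs-utils; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "yum install -y --downloadonly --downloaddir=${dest}/${pkg} ${pkg}"
        else
            mkdir -p "${dest}/${pkg}"
            yum install -y --downloadonly --downloaddir="${dest}/${pkg}" "${pkg}"
        fi
    done
}
```

Usage: `DRY_RUN=1 download_deps /home/ks` to preview, then `download_deps /home/ks` to download.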

1.1.3 Dependency installation script

Create the installation script install_base.sh with the following content:

```shell
#!/bin/bash
# install_base.sh: install the offline base dependencies
# (conntrack, ipvsadm, socat, nfs-utils) from the package folders next to this script.

echo -e "\033[34m ***** Checking that the current user is root ***** \033[0m"
if [ "$(whoami)" = "root" ]; then
    echo -e "\033[32m OK \033[0m"                                         # green text
else
    echo -e "\033[31m Please run this script as root! Exiting. \033[0m"   # red text
    exit 1
fi

# Directory containing this script, so it works from any working directory
work_path="$(cd "$(dirname "$0")" && pwd)"
echo -e "\033[34m ***** Working directory: ${work_path} ***** \033[0m"

for pkg_dir in conntrack ipvsadm socat nfs-utils; do
    # Abort instead of running rpm in the wrong directory if a folder is missing
    cd "${work_path}/${pkg_dir}" || { echo "Missing directory: ${work_path}/${pkg_dir}"; exit 1; }
    # --nodeps --force: skip dependency checks and reinstall packages that are already present
    rpm -ivh ./*.rpm --nodeps --force
done

exit 0
```

Then create a folder named basedep and move the downloaded package folders and the script into it.
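Moving everything into basedep and packing it as one file simplifies the copy to the offline nodes. This is a sketch under stated assumptions: the hypothetical `pack_basedep` helper expects the four package folders and install_base.sh to sit in one source directory. The tarball name `basedep.tar.gz` is an illustrative choice.

```shell
# Move the package folders and install_base.sh into SRC/basedep and pack
# them into SRC/basedep.tar.gz for copying to the offline nodes.
pack_basedep() {
    local src="${1:-.}" item
    # Check everything first so a missing folder does not leave a half-moved tree
    for item in conntrack ipvsadm socat nfs-utils install_base.sh; do
        [ -e "${src}/${item}" ] || { echo "missing ${src}/${item}" >&2; return 1; }
    done
    mkdir -p "${src}/basedep"
    for item in conntrack ipvsadm socat nfs-utils install_base.sh; do
        mv "${src}/${item}" "${src}/basedep/"
    done
    chmod +x "${src}/basedep/install_base.sh"
    tar -czf "${src}/basedep.tar.gz" -C "${src}" basedep
}
```

On each offline node, unpack and install with `tar -xzf basedep.tar.gz && cd basedep && ./install_base.sh`.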

1.2 Offline Kubernetes cluster resources

1.2.1 Download KubeKey

Run the following commands:

```text
[root@node03 ks]# export KKZONE=cn
[root@node03 ks]# curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -
Downloading kubekey v3.0.13 from https://kubernetes.pek3b.qingstor.com/kubekey/releases/download/v3.0.13/kubekey-v3.0.13-linux-arm64.tar.gz ...
Kubekey v3.0.13 Download Complete!
[root@node03 ks]#
```

Alternatively, download it from https://github.com/kubesphere/kubekey/releases.
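For a manual download, the release file name has to match the host architecture. This sketch builds the URL, assuming the GitHub release assets use the same file name as the mirror in the download log above (`kubekey-v3.0.13-linux-arm64.tar.gz`). The helper names `kk_arch` and `kk_release_url` are hypothetical.

```shell
# Map `uname -m` output (or an explicit value) to the architecture label
# used in KubeKey release file names.
kk_arch() {
    local machine="${1:-$(uname -m)}"
    case "$machine" in
        x86_64|amd64)  echo amd64 ;;
        aarch64|arm64) echo arm64 ;;
        *) echo "unsupported architecture: $machine" >&2; return 1 ;;
    esac
}

# Print the assumed GitHub download URL for a KubeKey version and architecture.
kk_release_url() {
    local version="$1" arch
    arch="$(kk_arch "$2")" || return 1
    echo "https://github.com/kubesphere/kubekey/releases/download/${version}/kubekey-${version}-linux-${arch}.tar.gz"
}
```

Usage: `curl -fLO "$(kk_release_url v3.0.13)"`, then extract the tarball to obtain the `kk` binary.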

1.2.2 Writing the manifest

Create the manifest file. The export step in 1.2.3 refers to it as manifest-sample.yaml.

```shell
vim manifest-sample.yaml
```

```yaml
apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Manifest
metadata:
  name: sample
spec:
  # CPU architecture. Some image names in the images list below carry an
  # arm64 or amd64 marker; remember to change them to match.
  arches:
  - arm64
  operatingSystems:
  - arch: arm64
    type: linux
    id: openEuler
    version: "23.03"
    repository:
      iso:
        # Local path of the official dependency ISO, e.g.:
        # localPath: /home/ks3.4.0k8s2.13.15/ubuntu-20.04-debs-amd64.iso
        # or its online download URL, e.g.:
        # url: https://github.com/kubesphere/kubekey/releases/download/v3.0.10/ubuntu-20.04-debs-amd64.iso
        # Configure only one of the two. If the base dependencies are prepared
        # manually as in this article, leave both empty.
        localPath:
        url:
  kubernetesDistributions:
  - type: kubernetes
    version: v1.23.15
  components:
    helm:
      version: v3.9.0
    cni:
      version: v1.2.0
    etcd:
      version: v3.4.13
    calicoctl:
      version: v3.23.2
    ## For now, if your cluster container runtime is containerd, KubeKey will add a docker 20.10.8 container runtime in the below list.
    ## The reason is KubeKey creates a cluster with containerd by installing a docker first and making kubelet connect the socket file of containerd which docker contained.
    containerRuntimes:
    - type: docker
      version: 20.10.8
    - type: containerd
      version: 1.6.4
    crictl:
      version: v1.24.0
    docker-registry:
      version: "2"
    harbor:
      version: v2.5.3
    docker-compose:
      version: v2.2.2
  images:
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.23.15
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.23.15
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.23.15
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.23.15
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.6
  - registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.6
  - registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/typha:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/flannel:v0.12.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/provisioner-localpv:3.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/linux-utils:3.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/haproxy:2.3
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nfs-subdir-external-provisioner:v4.0.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-installer:v3.4.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-apiserver:v3.4.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-console:v3.4.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-controller-manager:v3.4.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kubectl:v1.22.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kubectl:v1.21.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kubectl:v1.20.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kubefed:v0.8.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/tower:v0.2.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/minio:RELEASE.2019-08-07T01-59-21Z
  - registry.cn-beijing.aliyuncs.com/kubesphereio/mc:RELEASE.2019-08-07T23-14-43Z
  - registry.cn-beijing.aliyuncs.com/kubesphereio/snapshot-controller:v4.0.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nginx-ingress-controller:v1.1.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/defaultbackend-amd64:1.4
  - registry.cn-beijing.aliyuncs.com/kubesphereio/metrics-server:v0.4.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/redis:5.0.14-alpine
  - registry.cn-beijing.aliyuncs.com/kubesphereio/haproxy:2.0.25-alpine
  - registry.cn-beijing.aliyuncs.com/kubesphereio/alpine:3.14
  - registry.cn-beijing.aliyuncs.com/kubesphereio/openldap:1.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/netshoot:v1.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/cloudcore:v1.13.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/iptables-manager:v1.13.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/edgeservice:v0.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/gatekeeper:v3.5.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/openpitrix-jobs:v3.3.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/devops-apiserver:ks-v3.4.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/devops-controller:ks-v3.4.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/devops-tools:ks-v3.4.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-jenkins:v3.4.0-2.319.3-1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/inbound-agent:4.10-2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-base:v3.2.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-nodejs:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-maven:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-maven:v3.2.1-jdk11
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-python:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.16
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.17
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.18
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-base:v3.2.2-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-nodejs:v3.2.0-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-maven:v3.2.0-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-maven:v3.2.1-jdk11-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-python:v3.2.0-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.0-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.16-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.17-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.18-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/s2ioperator:v3.2.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/s2irun:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/s2i-binary:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/tomcat85-java11-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/tomcat85-java11-runtime:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/tomcat85-java8-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/tomcat85-java8-runtime:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/java-11-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/java-8-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/java-8-runtime:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/java-11-runtime:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nodejs-8-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nodejs-6-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nodejs-4-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/python-36-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/python-35-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/python-34-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/python-27-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/argocd:v2.3.3
  - registry.cn-beijing.aliyuncs.com/kubesphereio/argocd-applicationset:v0.4.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/dex:v2.30.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/redis:6.2.6-alpine
  - registry.cn-beijing.aliyuncs.com/kubesphereio/configmap-reload:v0.7.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus:v2.39.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus-config-reloader:v0.55.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus-operator:v0.55.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy:v0.11.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-state-metrics:v2.6.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/node-exporter:v1.3.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/alertmanager:v0.23.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/thanos:v0.31.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/grafana:8.3.3
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy:v0.11.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/notification-manager-operator:v2.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/notification-manager:v2.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/notification-tenant-sidecar:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/elasticsearch-curator:v5.7.6
  - registry.cn-beijing.aliyuncs.com/kubesphereio/elasticsearch-oss:6.8.22
  - registry.cn-beijing.aliyuncs.com/kubesphereio/opensearch:2.6.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/opensearch-dashboards:2.6.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/opensearch-curator:v0.0.5
  - registry.cn-beijing.aliyuncs.com/kubesphereio/fluentbit-operator:v0.14.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/docker:19.03
  - registry.cn-beijing.aliyuncs.com/kubesphereio/fluent-bit:v1.9.4
  - registry.cn-beijing.aliyuncs.com/kubesphereio/log-sidecar-injector:v1.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/filebeat:6.7.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-events-operator:v0.6.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-events-exporter:v0.6.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-events-ruler:v0.6.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-auditing-operator:v0.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-auditing-webhook:v0.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pilot:1.14.6
  - registry.cn-beijing.aliyuncs.com/kubesphereio/proxyv2:1.14.6
  - registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-operator:1.29
  - registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-agent:1.29
  - registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-collector:1.29
  - registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-query:1.29
  - registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-es-index-cleaner:1.29
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kiali-operator:v1.50.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kiali:v1.50
  - registry.cn-beijing.aliyuncs.com/kubesphereio/busybox:1.31.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nginx:1.14-alpine
  - registry.cn-beijing.aliyuncs.com/kubesphereio/wget:1.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/hello:plain-text
  - registry.cn-beijing.aliyuncs.com/kubesphereio/wordpress:4.8-apache
  - registry.cn-beijing.aliyuncs.com/kubesphereio/hpa-example:latest
  - registry.cn-beijing.aliyuncs.com/kubesphereio/fluentd:v1.4.2-2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/perl:latest
  - registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-productpage-v1:1.16.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-reviews-v1:1.16.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-reviews-v2:1.16.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-details-v1:1.16.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-ratings-v1:1.16.3
  - registry.cn-beijing.aliyuncs.com/kubesphereio/scope:1.13.0
  registry:
    auths: {}
```

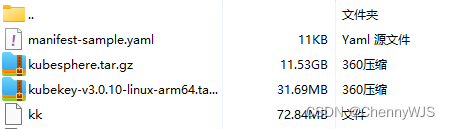
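The manifest comment on `arches` warns that some image names carry an arm64 or amd64 marker; `defaultbackend-amd64` in the list above is one example. This sketch lists such entries so none are overlooked. It is a plain text match, not a YAML parser, and `find_arch_mismatch` is a hypothetical helper. Check that a counterpart for the target architecture actually exists in the registry before renaming an entry.

```shell
# Print (with line numbers) every image entry in a manifest whose name contains
# the architecture marker that does NOT match the target architecture.
# Returns 0 if at least one such entry was found, 1 if none, 2 on an unknown target.
find_arch_mismatch() {
    local manifest="$1" target="$2" other
    case "$target" in
        arm64) other=amd64 ;;
        amd64) other=arm64 ;;
        *) echo "target must be arm64 or amd64" >&2; return 2 ;;
    esac
    # Keep only list items that look like image references (contain a "/"),
    # then keep those mentioning the other architecture.
    grep -nE '^[[:space:]]*-[[:space:]]+[^[:space:]]+/[^[:space:]]+' "$manifest" | grep -- "$other"
}
```

Usage: `find_arch_mismatch manifest-sample.yaml arm64`.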

1.2.3 Exporting the artifact

On the internet-connected host, run:

```text
[root@node03 ks]# chmod +777 kk
[root@node03 ks]# ./kk artifact export -m manifest-sample.yaml -o kubesphere.tar.gz
09:21:03 CST [CheckFileExist] Check output file if existed
09:21:03 CST success: [LocalHost]
09:21:03 CST [CopyImagesToLocalModule] Copy images to a local OCI path from registries
09:21:03 CST Source: docker://registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.23.15
09:21:03 CST Destination: oci:/home/ks/kubekey/artifact/images:kubesphereio:kube-apiserver:v1.23.15-arm64
Getting image source signatures
Copying blob d806e31c7174 done
Copying blob 9880fb5de7c7 done
Copying blob 63c3e2b46e6d done
Copying config cbb46e7054 done
Writing manifest to image destination
Storing signatures
09:21:25 CST Source: docker://registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.23.15
09:21:25 CST Destination: oci:/home/ks/kubekey/artifact/images:kubesphereio:kube-controller-manager:v1.23.15-arm64
Getting image source signatures
Copying blob 82616e8852c3 done
Copying blob 9880fb5de7c7 skipped: already exists
Copying blob 63c3e2b46e6d skipped: already exists
Copying config 5bd44b41fa done
Writing manifest to image destination
Storing signatures
09:21:44 CST Source: docker://registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.23.15
09:21:44 CST Destination: oci:/home/ks/kubekey/artifact/images:kubesphereio:kube-proxy:v1.23.15-arm64
Getting image source signatures
Copying blob 59c2026c78b8 [======================>---------------] 6.2MiB / 10.5MiB
Copying blob fa2a28e08351 [=============================>--------] 19.8MiB / 25.1MiB
Copying blob beab33aa815c done
```

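The download takes a long time. The screenshot shows the packed resource directory.

In the meantime, you can set up the base environment on the offline hosts as described below.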
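The artifact is large and has to be copied to the offline side, so recording a checksum right after the export catches a broken copy early. The installer log in 2.3.2 does include a `Check the KubeKey artifact md5 value` step, but that only runs at install time. This is a minimal sketch using `md5sum`. The helper names and the `.md5` side file are hypothetical.

```shell
# Write FILE.md5 next to FILE (run on the internet-connected host).
write_checksum() {
    ( cd "$(dirname "$1")" && md5sum "$(basename "$1")" > "$(basename "$1").md5" )
}

# Verify FILE against FILE.md5 (run on the offline host after copying both).
verify_checksum() {
    ( cd "$(dirname "$1")" && md5sum -c "$(basename "$1").md5" )
}
```

Usage: `write_checksum kubesphere.tar.gz` before copying, then `verify_checksum kubesphere.tar.gz` on the offline host.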

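2. Installing the offline cluster

2.1 Base environment setup

Run the following steps on every node of the cluster.

2.1.1 Set the hostname

```shell
# On cloud hosts this can also be done from the cloud console.
# The example is for master01; set the hostname on the other hosts as well.
hostnamectl set-hostname master01
```

2.1.2 Install the dependencies

Upload the offline package prepared in step 1.1 to every offline cluster server.

Run the installation script from inside the basedep folder:

```shell
cd basedep
chmod +x install_base.sh
./install_base.sh
```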

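2.1.3 Time synchronization

If the node clocks are not synchronized, the Kubernetes installation fails with errors reporting expired or invalid certificates.

Pick one of the three servers as the time server and run the commands below on it. chrony only treats whole lines starting with `#` as comments, so the comments are kept on their own lines.

```shell
cat > /etc/chrony.conf << EOF
driftfile /var/lib/chrony/drift
makestep 1.0 3
rtcsync
# Subnet of the cluster servers
allow 192.168.8.0/24
local stratum 10
keyfile /etc/chrony.keys
leapsectz right/UTC
logdir /var/log/chrony
EOF

systemctl restart chronyd ; systemctl enable chronyd
```

The remaining servers act as clients. Run the following on each of them:

```shell
cat > /etc/chrony.conf << EOF
# IP address of the time server
pool 192.168.8.155 iburst
driftfile /var/lib/chrony/drift
makestep 1.0 3
rtcsync
keyfile /etc/chrony.keys
leapsectz right/UTC
logdir /var/log/chrony
EOF

systemctl restart chronyd ; systemctl enable chronyd

# Verify on a client
chronyc sources -v
```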
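Since the server and client files differ in only two places, both can be generated from one function. This is a sketch that writes exactly the directives used above, one per line, to a path you choose. `write_chrony_conf` is a hypothetical helper.

```shell
# Write a chrony.conf for the time server or a client to the given path.
#   write_chrony_conf server <allowed-subnet> <output-file>
#   write_chrony_conf client <server-ip>      <output-file>
write_chrony_conf() {
    local role="$1" value="$2" out="$3"
    case "$role" in
        server|client) ;;
        *) echo "role must be server or client" >&2; return 1 ;;
    esac
    {
        # Client: sync from the internal time server
        if [ "$role" = "client" ]; then
            echo "pool ${value} iburst"
        fi
        echo "driftfile /var/lib/chrony/drift"
        echo "makestep 1.0 3"
        echo "rtcsync"
        # Server: answer clients in the subnet and serve local time at stratum 10
        if [ "$role" = "server" ]; then
            echo "allow ${value}"
            echo "local stratum 10"
        fi
        echo "keyfile /etc/chrony.keys"
        echo "leapsectz right/UTC"
        echo "logdir /var/log/chrony"
    } > "$out"
}
```

Usage on a client: `write_chrony_conf client 192.168.8.155 /etc/chrony.conf && systemctl restart chronyd`.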

2.1.4 Configure local name resolution in /etc/hosts

The Harbor address is also added here.

To install Harbor, see: 03. Installing Harbor with KubeSphere and configuring Docker for it

```shell
vim /etc/hosts
```

```text
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.8.155 master01
192.168.8.156 master02
192.168.8.157 node01
192.168.8.158 node02
192.168.0.69  dockerhub.kubekey.local   # private Docker image registry (Harbor)
```
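When this step is repeated on every node, appending blindly can create duplicate entries. This sketch adds an "IP hostname" pair only if the hostname is not already in the file. It uses a simple text match, and `add_hosts_entries` is a hypothetical helper.

```shell
# Append "IP hostname" pairs to a hosts file, skipping hostnames already present.
add_hosts_entries() {
    local file="$1" ip name pattern
    shift
    while [ "$#" -ge 2 ]; do
        ip="$1"; name="$2"; shift 2
        # Escape dots so names like dockerhub.kubekey.local match literally
        pattern="$(printf '%s' "$name" | sed 's/\./\\./g')"
        if grep -qE "[[:space:]]${pattern}([[:space:]]|\$)" "$file"; then
            echo "skip ${name}: already in ${file}"
        else
            printf '%s %s\n' "$ip" "$name" >> "$file"
        fi
    done
}
```

Usage: `add_hosts_entries /etc/hosts 192.168.8.155 master01 192.168.8.156 master02 192.168.8.157 node01 192.168.0.69 dockerhub.kubekey.local`.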

2.1.5 Check DNS

Make sure /etc/resolv.conf exists and that the DNS servers listed in it are reachable. Otherwise DNS inside the cluster may break, with errors similar to:

```text
kubelet.go:2466] "Error getting node" err="node \"master01\" not found
```
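A quick structural check helps here. This sketch only confirms that the file exists and lists at least one `nameserver`. Whether that server actually answers still needs a real query from the node. `check_resolv_conf` is a hypothetical helper.

```shell
# Check that a resolv.conf exists and lists at least one nameserver,
# then print the nameserver addresses found.
check_resolv_conf() {
    local file="${1:-/etc/resolv.conf}"
    if [ ! -s "$file" ]; then
        echo "${file} is missing or empty" >&2
        return 1
    fi
    if ! grep -qE '^[[:space:]]*nameserver[[:space:]]+[^[:space:]]+' "$file"; then
        echo "no nameserver entry in ${file}" >&2
        return 1
    fi
    awk '$1 == "nameserver" { print $2 }' "$file"
}
```

Usage: `check_resolv_conf` (defaults to /etc/resolv.conf).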

2.2 Create the Harbor projects

To install Harbor, see: Installing the Harbor image registry with Docker Compose

Harbor's initial credentials:

Account: admin

Password: Harbor12345

2.2.1 Write the script

Adjust the script to your environment and save it as create_project_harbor.sh:

```shell
#!/usr/bin/env bash

# Harbor address
url="http://dockerhub.kubekey.local:30002"
# Harbor user
user="admin"
# Password of that user (Harbor12345 is the initial admin password; use yours if you changed it)
passwd="Harbor12345"

# Projects to create. Normally only kubesphereio is needed (config-sample.yaml sets
# namespaceOverride: kubesphereio); the others are kept so the list is easy to extend.
harbor_projects=(library
    kubesphereio
    kubesphere
    argoproj
    calico
    coredns
    openebs
    csiplugin
    minio
    mirrorgooglecontainers
    osixia
    prom
    thanosio
    jimmidyson
    grafana
    elastic
    istio
    jaegertracing
    jenkins
    weaveworks
    openpitrix
    joosthofman
    nginxdemos
    fluent
    kubeedge
    openpolicyagent
)

for project in "${harbor_projects[@]}"; do
    echo "creating $project"
    curl -u "${user}:${passwd}" -X POST -H "Content-Type: application/json" "${url}/api/v2.0/projects" -d "{\"project_name\": \"${project}\", \"metadata\": {\"public\": \"true\"}, \"storage_limit\": -1}"
done
```

2.2.2 Run the script

```text
[root@localhost ks]# ./create_project_harbor.sh
creating library
creating kubesphereio
creating kubesphere
creating argoproj
creating calico
creating coredns
creating openebs
```
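The script above does not check whether each API call succeeded. This sketch reports the outcome from the HTTP status code instead. It relies on Harbor returning 201 when a project is created and 409 when the name already exists, which makes reruns harmless. The helper names are hypothetical.

```shell
# Translate the HTTP status of a project-creation call into a short message.
harbor_status_msg() {
    case "$1" in
        201) echo "created" ;;
        409) echo "already exists" ;;
        401) echo "authentication failed, check user/passwd" ;;
        *)   echo "unexpected HTTP status $1" ;;
    esac
}

# Create one project; succeed if it was created or already existed.
create_harbor_project() {
    local url="$1" user="$2" passwd="$3" project="$4" code
    code="$(curl -s -o /dev/null -w '%{http_code}' -u "${user}:${passwd}" \
        -X POST -H "Content-Type: application/json" "${url}/api/v2.0/projects" \
        -d "{\"project_name\": \"${project}\", \"metadata\": {\"public\": \"true\"}, \"storage_limit\": -1}")"
    echo "${project}: $(harbor_status_msg "$code")"
    [ "$code" = "201" ] || [ "$code" = "409" ]
}
```

Usage inside the loop: `create_harbor_project "$url" "$user" "$passwd" "$project"`.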

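2.3 Install Kubernetes and KubeSphere

2.3.1 Write the config

Create a YAML file named config-sample.yaml with the following content. The component versions in it must match those in the manifest from 1.2.2.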

```yaml
apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: master01, address: 192.168.8.155, internalAddress: 192.168.8.155, user: root, password: "Passw0rd@155", arch: arm64}
  - {name: master02, address: 192.168.8.156, internalAddress: 192.168.8.156, user: root, password: "Passw0rd@156", arch: arm64}
  - {name: node01, address: 192.168.8.157, internalAddress: 192.168.8.157, user: root, password: "Passw0rd@157", arch: arm64}
  roleGroups:
    etcd:
    - node01
    - master01
    - master02
    control-plane:
    - master01
    - master02
    worker:
    - node01
    - master01
    - master02
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    internalLoadbalancer: haproxy
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.23.15
    clusterName: cluster.local
    autoRenewCerts: true
    containerManager: docker
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  registry:
    type: harbor
    auths:
      "dockerhub.kubekey.local:30002":
        username: admin
        password: Harbor12345
        skipTLSVerify: true
        plainHTTP: true   # do not use HTTPS
    privateRegistry: "dockerhub.kubekey.local:30002"
    namespaceOverride: "kubesphereio"
    registryMirrors: []
    insecureRegistries: []
  addons: []

---
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    version: v3.4.0
spec:
  persistence:
    storageClass: ""
  authentication:
    jwtSecret: ""
  zone: ""
  local_registry: ""
  namespace_override: ""
  # dev_tag: ""
  etcd:
    monitoring: false
    endpointIps: localhost
    port: 2379
    tlsEnable: true
  common:
    core:
      console:
        enableMultiLogin: true
        port: 30880
        type: NodePort
    # apiserver:
    #   resources: {}
    # controllerManager:
    #   resources: {}
    redis:
      enabled: false
      volumeSize: 2Gi
    openldap:
      enabled: false
      volumeSize: 2Gi
    minio:
      volumeSize: 20Gi
    monitoring:
      # type: external
      endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
      GPUMonitoring:
        enabled: false
    gpu:
      kinds:
      - resourceName: "nvidia.com/gpu"
        resourceType: "GPU"
        default: true
    es:
      # master:
      #   volumeSize: 4Gi
      #   replicas: 1
      #   resources: {}
      # data:
      #   volumeSize: 20Gi
      #   replicas: 1
      #   resources: {}
      logMaxAge: 7
      elkPrefix: logstash
      basicAuth:
        enabled: false
        username: ""
        password: ""
      externalElasticsearchHost: ""
      externalElasticsearchPort: ""
  alerting:
    enabled: false
    # thanosruler:
    #   replicas: 1
    #   resources: {}
  auditing:
    enabled: false
    # operator:
    #   resources: {}
    # webhook:
    #   resources: {}
  devops:
    enabled: false
    # resources: {}
    jenkinsMemoryLim: 8Gi
    jenkinsMemoryReq: 4Gi
    jenkinsVolumeSize: 8Gi
  events:
    enabled: false
    # operator:
    #   resources: {}
    # exporter:
    #   resources: {}
    # ruler:
    #   enabled: true
    #   replicas: 2
    #   resources: {}
  logging:
    enabled: false
    logsidecar:
      enabled: true
      replicas: 2
      # resources: {}
  metrics_server:
    enabled: false
  monitoring:
    storageClass: ""
    node_exporter:
      port: 9100
      # resources: {}
    # kube_rbac_proxy:
    #   resources: {}
    # kube_state_metrics:
    #   resources: {}
    # prometheus:
    #   replicas: 1
    #   volumeSize: 20Gi
    #   resources: {}
    #   operator:
    #     resources: {}
    # alertmanager:
    #   replicas: 1
    #   resources: {}
    # notification_manager:
    #   resources: {}
    #   operator:
    #     resources: {}
    #   proxy:
    #     resources: {}
    gpu:
      nvidia_dcgm_exporter:
        enabled: false
        # resources: {}
  multicluster:
    clusterRole: none
  network:
    networkpolicy:
      enabled: false
    ippool:
      type: none
    topology:
      type: none
  openpitrix:
    store:
      enabled: false
  servicemesh:
    enabled: false
    istio:
      components:
        ingressGateways:
        - name: istio-ingressgateway
          enabled: false
        cni:
          enabled: false
  edgeruntime:
    enabled: false
    kubeedge:
      enabled: false
      cloudCore:
        cloudHub:
          advertiseAddress:
            - ""
        service:
          cloudhubNodePort: "30000"
          cloudhubQuicNodePort: "30001"
          cloudhubHttpsNodePort: "30002"
          cloudstreamNodePort: "30003"
          tunnelNodePort: "30004"
        # resources: {}
        # hostNetWork: false
      iptables-manager:
        enabled: true
        mode: "external"
        # resources: {}
      # edgeService:
      #   resources: {}
  terminal:
    timeout: 600
```
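The Kubernetes version in config-sample.yaml has to match the manifest, and a typo here only surfaces during installation. This is a rough sketch that compares just that one value. It is a line-based awk heuristic, not a YAML parser, and assumes both files are laid out like the examples in this article. `k8s_version` and `check_versions` are hypothetical helpers.

```shell
# Print the first "version:" value that follows either a "kubernetes:" key
# (cluster config) or a "type: kubernetes" entry (manifest).
k8s_version() {
    awk '
        /^[ \t]*kubernetes:[ \t]*$/ || /type:[ \t]*kubernetes[ \t]*$/ { want = 1; next }
        want && /version:/ {
            sub(/.*version:[ \t]*/, "")
            gsub(/"/, "")
            print
            exit
        }
    ' "$1"
}

# Compare the Kubernetes version in a manifest and a cluster config.
check_versions() {
    local m c
    m="$(k8s_version "$1")"
    c="$(k8s_version "$2")"
    if [ -n "$m" ] && [ "$m" = "$c" ]; then
        echo "OK: Kubernetes ${m} in both files"
    else
        echo "MISMATCH: manifest=${m:-?} config=${c:-?}" >&2
        return 1
    fi
}
```

Usage: `check_versions manifest-sample.yaml config-sample.yaml`.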

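2.3.2 Run the installation

If you use the ISO base dependencies, append `--with-packages`:

```shell
./kk create cluster -f config-sample.yaml -a kubesphere.tar.gz --with-packages
```

If the base dependencies were installed manually as in this article, omit the flag.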

The following error appears during the run:

```text
login registry failed, cmd: docker login --username 'admin' --password 'Harbor12345' dockerhub.kubekey.local:30002, err:Failed to exec command: sudo -E /bin/bash -c "docker login --username 'admin' --password 'Harbor12345' dockerhub.kubekey.local:30002"
WARNING! Using --password via the CLI is insecure. Use --password-stdin.
Error response from daemon: Get "https://dockerhub.kubekey.local:30002/v2/": http: server gave HTTP response to HTTPS client: Process exited with status 1: Failed to exec command: sudo -E /bin/bash -c "docker login --username 'admin' --password 'Harbor12345' dockerhub.kubekey.local:30002"
WARNING! Using --password via the CLI is insecure. Use --password-stdin.
```

The cause is that, once Docker was installed, it was not configured for the private Harbor address. Harbor is served over plain HTTP here, while `docker login` uses HTTPS for any registry not listed as insecure ("server gave HTTP response to HTTPS client").

After configuring it as described in 2.3.3, run `./kk create cluster -f config-sample.yaml -a kubesphere.tar.gz` again:

```text
[root@localhost ks3.4.0k8s2.13.15]# ./kk create cluster -f config-sample.yaml -a kubesphere.tar.gz
15:11:55 CST [GreetingsModule] Greetings
15:11:57 CST message: [node01]
Greetings, KubeKey!
15:11:57 CST message: [master01]
Greetings, KubeKey!
15:11:58 CST message: [master02]
Greetings, KubeKey!
15:11:58 CST success: [node01]
15:11:58 CST success: [master01]
15:11:58 CST success: [master02]
15:11:58 CST [NodePreCheckModule] A pre-check on nodes
15:12:07 CST success: [node01]
15:12:07 CST success: [master01]
15:12:07 CST success: [master02]
15:12:07 CST [ConfirmModule] Display confirmation form
+----------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+
| name     | sudo | curl | openssl | ebtables | socat | ipset | ipvsadm | conntrack | chrony | docker | containerd | nfs client | ceph client | glusterfs client | time         |
+----------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+
| master01 | y    | y    | y       | y        | y     | y     | y       | y         | y      |        | y          | y          |             |                  | CST 15:12:07 |
| master02 | y    | y    | y       | y        | y     | y     | y       | y         | y      |        | y          | y          |             |                  | CST 15:12:07 |
| node01   | y    | y    | y       | y        | y     | y     | y       | y         | y      |        | y          | y          |             |                  | CST 15:12:07 |
+----------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

This is a simple check of your environment.
Before installation, ensure that your machines meet all requirements specified at
https://github.com/kubesphere/kubekey#requirements-and-recommendations

Continue this installation? [yes/no]: yes
15:12:10 CST success: [LocalHost]
15:12:10 CST [UnArchiveArtifactModule] Check the KubeKey artifact md5 value
```

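2.3.3 Add the private registry address

Edit the Docker configuration file on every host and add the Harbor address. If /etc/docker/daemon.json already contains other settings, add the `insecure-registries` key to the existing JSON object. Replacing the whole file would discard those settings.

```shell
vim /etc/docker/daemon.json
```

```json
{
  "insecure-registries": ["dockerhub.kubekey.local:30002"]
}
```

Restart Docker on every host in the cluster to load the configuration:

```shell
systemctl daemon-reload
systemctl restart docker
```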
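Editing daemon.json by hand works. To merge the registry into an existing file without touching its other keys, the sketch below can be used instead. It assumes `python3` is present on the node, which is normally the case on openEuler because dnf is written in Python. `add_insecure_registry` is a hypothetical helper.

```shell
# Add a registry to "insecure-registries" in a Docker daemon.json, keeping all
# other keys. Creates the file if it does not exist; adding twice is a no-op.
add_insecure_registry() {
    local file="$1" registry="$2"
    python3 - "$file" "$registry" <<'PY'
import json
import os
import sys

path, registry = sys.argv[1], sys.argv[2]
config = {}
if os.path.exists(path) and os.path.getsize(path) > 0:
    with open(path) as f:
        config = json.load(f)
registries = config.setdefault("insecure-registries", [])
if registry not in registries:
    registries.append(registry)
with open(path, "w") as f:
    json.dump(config, f, indent=2)
    f.write("\n")
PY
}
```

Usage: `add_insecure_registry /etc/docker/daemon.json dockerhub.kubekey.local:30002 && systemctl restart docker`.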

2.3.4 Check the cluster status

In a separate terminal, follow the KubeSphere installer log:

```shell
kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f
```

When the installation finishes, output like the following appears:

```text
15:46:51 CST skipped: [master02]
15:46:51 CST success: [master01]
#####################################################
### Welcome to KubeSphere! ###
#####################################################

Console: http://192.168.8.155:30880
Account: admin
Password: P@88w0rd
NOTES:
1. After you log into the console, please check the
monitoring status of service components in
"Cluster Management". If any service is not
ready, please wait patiently until all components
are up and running.
2. Please change the default password after login.

#####################################################
https://kubesphere.io 2023-11-22 16:02:33
#####################################################
16:02:37 CST skipped: [master02]
16:02:37 CST success: [master01]
16:02:37 CST Pipeline[CreateClusterPipeline] execute successfully
Installation is complete.

Please check the result using the command:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

[root@localhost ks3.4.0k8s2.13.15]#
```
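The notes above ask you to wait until all components are up. A small filter can list the pods that are not ready yet. This sketch assumes the default column layout of `kubectl get pods -A --no-headers` (NAMESPACE, NAME, READY, STATUS, ...). It treats `Completed` pods as finished and flags `Running` pods whose READY count is incomplete. `not_ready_pods` is a hypothetical helper.

```shell
# Read `kubectl get pods -A --no-headers` output on stdin and print
# namespace/name, READY and STATUS for every pod that is not ready.
# Exits 1 if any such pod is found, 0 otherwise.
not_ready_pods() {
    awk '
        {
            split($3, ready, "/")                 # READY column, e.g. "1/2"
            if ($4 == "Completed") next           # finished Job pods are fine
            if ($4 != "Running" || ready[1] != ready[2]) {
                print $1 "/" $2 " " $3 " " $4
                bad = 1
            }
        }
        END { exit bad }
    '
}
```

Usage: `kubectl get pods -A --no-headers | not_ready_pods && echo "all pods are ready"`.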
