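Flink 1.13 Source Code Analysis: The Job Submission Process (Part 1)

Flink 1.13 Source Code Analysis: The Job Submission Process (Part 2)

Flink 1.13 Source Code Analysis: Graph Transformation and StreamGraph Construction

Flink 1.13 Source Code Analysis: Graph Transformation and JobGraph Construction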


Preface

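In the previous two chapters we analyzed how Flink builds the StreamGraph and how the StreamGraph is converted into a JobGraph. In this chapter we look at the core of the next step: converting the JobGraph into an ExecutionGraph.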


1. ExecutionGraph Overview

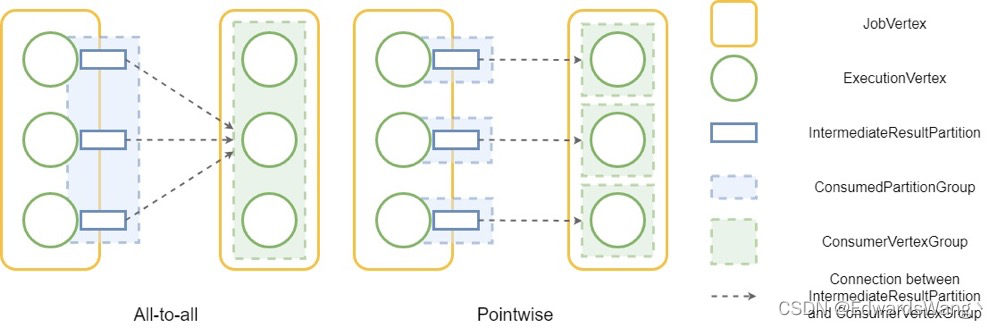

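The main work in converting a JobGraph into an ExecutionGraph is splitting each JobVertex according to its operator's parallelism. Each JobVertex in the JobGraph corresponds to one ExecutionJobVertex in the ExecutionGraph, and each JobVertex is split into as many ExecutionVertex instances as its parallelism. The output of each ExecutionVertex is received by an IntermediateResultPartition (one per produced result). The IntermediateResultPartitions produced by all ExecutionVertices of the same ExecutionJobVertex together form one IntermediateResult. Starting with Flink 1.13, however, the ExecutionGraph no longer has the concept of an ExecutionEdge. Its role is taken over by ConsumedPartitionGroup and ConsumedVertexGroup.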
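The expansion described above can be pictured with a small model. The sketch below is a hypothetical simplification whose class and field names are invented and are not Flink's API. One ExecutionJobVertex holds `parallelism` subtasks, and every produced result gets exactly one partition per subtask. This is why an IntermediateResult always has as many partitions as its producer's parallelism. The subtask naming format `%s (%d/%d)` is taken from the ExecutionVertex constructor shown later in this article.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, heavily simplified model of the JobVertex -> ExecutionJobVertex expansion.
// None of these classes exist in Flink; they only mirror the shape of the real objects.
class SketchExecutionJobVertex {
    final List<String> executionVertices = new ArrayList<>();
    // One entry per produced IntermediateResult; each inner list holds that result's partitions.
    final List<List<String>> producedResults = new ArrayList<>();

    SketchExecutionJobVertex(String jobVertexName, int parallelism, int numProducedDataSets) {
        for (int r = 0; r < numProducedDataSets; r++) {
            producedResults.add(new ArrayList<>());
        }
        for (int i = 0; i < parallelism; i++) {
            // Same naming scheme as ExecutionVertex's taskNameWithSubtask: 1-based index / parallelism.
            String subtask = String.format("%s (%d/%d)", jobVertexName, i + 1, parallelism);
            executionVertices.add(subtask);
            // Subtask i produces partition i of every IntermediateResult of this vertex.
            for (int r = 0; r < numProducedDataSets; r++) {
                producedResults.get(r).add("result-" + r + "/partition-" + i);
            }
        }
    }
}
```

For example, `new SketchExecutionJobVertex("Map", 3, 1)` yields the subtasks `Map (1/3)`, `Map (2/3)` and `Map (3/3)`, plus one result with three partitions.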

The ExecutionGraph in Flink 1.12:

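The ExecutionGraph has two distribution patterns: pointwise (one-to-one) and all-to-all. When upstream and downstream vertices are connected all-to-all, traversing all edges takes O(n²) time, so the cost grows quickly as the job scales.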

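In Flink 1.12, the ExecutionEdge class stores the connection information between tasks. For the all-to-all distribution pattern this means O(n²) ExecutionEdges, which consumes a lot of memory in large-scale jobs. Consider two JobVertices connected by one all-to-all edge, each with a parallelism of 10K. They need 100M ExecutionEdges, which take more than 4 GiB of memory. Production jobs may contain several all-to-all connections between vertices, so the memory requirement grows rapidly.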
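The figures above are simple arithmetic. The sketch below is plain Java with hypothetical helper names. It counts edges for the two distribution patterns and derives the per-edge cost implied by the "100M edges, more than 4 GiB" estimate. The result, roughly 43 bytes per edge, is only what that estimate implies, not a measured object size. Flink's own enum for the patterns is `DistributionPattern` (`POINTWISE` / `ALL_TO_ALL`).

```java
// Back-of-the-envelope edge counting for the two distribution patterns (hypothetical helpers).
class EdgeMath {
    // All-to-all: every upstream subtask connects to every downstream subtask.
    static long allToAllEdges(long upstreamParallelism, long downstreamParallelism) {
        return upstreamParallelism * downstreamParallelism;
    }

    // Pointwise with equal parallelism on both sides: subtask i connects only to subtask i.
    static long pointwiseEdges(long parallelism) {
        return parallelism;
    }

    // Bytes per edge implied by a total memory figure, rounded to the nearest byte.
    static long impliedBytesPerEdge(long totalBytes, long edges) {
        return Math.round((double) totalBytes / edges);
    }

    public static void main(String[] args) {
        long edges = allToAllEdges(10_000, 10_000);   // 100,000,000 edges
        long fourGiB = 4L * 1024 * 1024 * 1024;       // 4,294,967,296 bytes
        System.out.println(edges + " edges, ~" + impliedBytesPerEdge(fourGiB, edges) + " bytes each");
        System.out.println(pointwiseEdges(10_000) + " edges for a pointwise connection");
    }
}
```

Running `main` prints `100000000 edges, ~43 bytes each` followed by `10000 edges for a pointwise connection`. The gap between n² and n is what motivates the grouping described next.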

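All ExecutionVertices in the same ExecutionJobVertex are derived from the same JobVertex by splitting on parallelism. As a result, the IntermediateResultPartitions that receive their outputs all have the same structure. Likewise, all ExecutionVertices of the downstream ExecutionJobVertex that those IntermediateResultPartitions connect to are isomorphic. Flink uses this property to group ExecutionVertices and IntermediateResultPartitions. The ExecutionVertices of the same ExecutionJobVertex that consume the same partitions form a ConsumedVertexGroup. The IntermediateResultPartitions that feed this ExecutionJobVertex form a ConsumedPartitionGroup, as shown in the figure below: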

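When scheduling tasks, Flink has to iterate over all connections between IntermediateResultPartitions and ExecutionVertices. Previously there were O(n²) edges in total, so this iteration cost O(n²) overall. Since Flink 1.13, ExecutionEdge has been replaced by ConsumedPartitionGroup and ConsumedVertexGroup. All isomorphic result partitions connect to the same downstream ConsumedVertexGroup, so the scheduler only needs to traverse each group once when iterating over the connections. This reduces the complexity from O(n²) to O(n). The result is the ExecutionGraph of Flink 1.13: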
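To make the complexity argument concrete, here is a hypothetical cost model for a single all-to-all connection. It only counts visited objects and does not reproduce Flink's scheduler logic. The edge-based version visits every (partition, consumer) pair. In the group-based version every partition holds a reference to one shared consumer group, and the group's members are walked only once. The cost therefore drops from n·m to n + m, which is O(n) when both sides have parallelism n.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.IdentityHashMap;
import java.util.List;
import java.util.Set;

// Simplified cost model for one all-to-all connection (hypothetical, not Flink code).
class TraversalCost {
    // Flink 1.12 style: one edge object per (partition, consumer) pair, and all of them are visited.
    static long edgeBasedVisits(int partitions, int consumers) {
        long visits = 0;
        for (int p = 0; p < partitions; p++) {
            for (int c = 0; c < consumers; c++) {
                visits++; // one ExecutionEdge
            }
        }
        return visits;
    }

    // Flink 1.13 style: every partition references the same shared consumer group,
    // so the group's members are traversed once instead of once per partition.
    static long groupBasedVisits(int partitions, int consumers) {
        List<Integer> consumedVertexGroup = new ArrayList<>();
        for (int c = 0; c < consumers; c++) {
            consumedVertexGroup.add(c);
        }
        List<List<Integer>> groupOfPartition = new ArrayList<>();
        for (int p = 0; p < partitions; p++) {
            groupOfPartition.add(consumedVertexGroup); // a shared reference, not a copy
        }
        // Identity-based set: what matters is "the same group object", not equal contents.
        Set<List<Integer>> visitedGroups = Collections.newSetFromMap(new IdentityHashMap<>());
        long visits = 0;
        for (List<Integer> group : groupOfPartition) {
            visits++; // look at the partition itself
            if (visitedGroups.add(group)) {
                visits += group.size(); // walk the group's vertices only the first time
            }
        }
        return visits;
    }
}
```

With 1,000 partitions and 1,000 consumers, the edge-based model makes 1,000,000 visits while the group-based model makes 2,000.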

The ExecutionGraph in Flink 1.13:

2. Building the ExecutionGraph

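In the earlier chapter, Flink 1.13 Source Code Analysis: The Job Submission Process (Part 2), we discussed the initialization work done after the JobMaster's leader election completes. That work includes the following code: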

```java
@Override
public CompletableFuture<JobMasterService> createJobMasterService(
        UUID leaderSessionId, OnCompletionActions onCompletionActions) {

    return CompletableFuture.supplyAsync(
            FunctionUtils.uncheckedSupplier(
                    // TODO build and start the JobMaster internally
                    () -> internalCreateJobMasterService(leaderSessionId, onCompletionActions)),
            executor);
}
```
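createJobMasterService runs the JobMaster construction, which may be slow and may throw, on an executor and returns a future. `java.util.function.Supplier` cannot throw checked exceptions, so the call is wrapped with `FunctionUtils.uncheckedSupplier`. The sketch below reproduces that pattern with a hypothetical `unchecked` helper. It wraps checked failures in `CompletionException`, which may differ from how Flink's own helper rethrows them.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionException;
import java.util.concurrent.Executor;
import java.util.function.Supplier;

// Hypothetical stand-in for the createJobMasterService pattern; not Flink's FunctionUtils.
class AsyncCreationSketch {
    // A supplier that is allowed to throw checked exceptions.
    @FunctionalInterface
    interface CheckedSupplier<T> {
        T get() throws Exception;
    }

    // Adapts a CheckedSupplier to java.util.function.Supplier by wrapping checked exceptions.
    static <T> Supplier<T> unchecked(CheckedSupplier<T> supplier) {
        return () -> {
            try {
                return supplier.get();
            } catch (RuntimeException e) {
                throw e;
            } catch (Exception e) {
                throw new CompletionException(e);
            }
        };
    }

    // Runs the (possibly failing) construction on the given executor and returns a future.
    static CompletableFuture<String> createServiceAsync(boolean failDuringInit, Executor executor) {
        Supplier<String> task = unchecked(() -> {
            if (failDuringInit) {
                throw new Exception("init failed");
            }
            return "JobMasterService";
        });
        return CompletableFuture.supplyAsync(task, executor);
    }
}
```

If construction fails, the failure surfaces when the caller joins the future: `join()` throws a `CompletionException` whose cause is the original exception.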

Flink performs the JobMaster initialization inside internalCreateJobMasterService. Let's step into that method:

```java
private JobMasterService internalCreateJobMasterService(
        UUID leaderSessionId, OnCompletionActions onCompletionActions) throws Exception {

    final JobMaster jobMaster =
            new JobMaster(
                    rpcService,
                    JobMasterId.fromUuidOrNull(leaderSessionId),
                    jobMasterConfiguration,
                    ResourceID.generate(),
                    jobGraph,
                    haServices,
                    slotPoolServiceSchedulerFactory,
                    jobManagerSharedServices,
                    heartbeatServices,
                    jobManagerJobMetricGroupFactory,
                    onCompletionActions,
                    fatalErrorHandler,
                    userCodeClassloader,
                    shuffleMaster,
                    lookup ->
                            new JobMasterPartitionTrackerImpl(
                                    jobGraph.getJobID(), shuffleMaster, lookup),
                    new DefaultExecutionDeploymentTracker(),
                    DefaultExecutionDeploymentReconciler::new,
                    initializationTimestamp);

    // TODO JobMaster extends RpcEndpoint, so its onStart method is called back once it is started
    jobMaster.start();

    return jobMaster;
}
```

Here the JobMaster constructor is called to initialize the JobMaster. After construction, jobMaster.start() triggers the JobMaster's onStart callback. Let's step into the JobMaster constructor:

```java
/* TODO
 * Work to be done:
 * 1. Register with the ResourceManager and maintain the heartbeat
 * 2. Parse the JobGraph to obtain the ExecutionGraph; the ExecutionGraph is essentially
 *    the parallelized version of the JobGraph
 * 3. Request slots from the ResourceManager to run the StreamTasks
 * 4. Dispatch the tasks for execution and monitor their status
 * 5. Maintain the heartbeat between the JobMaster and the StreamTasks
 * 6. Perform ZooKeeper-related operations
 */
public JobMaster(
        RpcService rpcService,
        JobMasterId jobMasterId,
        JobMasterConfiguration jobMasterConfiguration,
        ResourceID resourceId,
        JobGraph jobGraph,
        HighAvailabilityServices highAvailabilityService,
        SlotPoolServiceSchedulerFactory slotPoolServiceSchedulerFactory,
        JobManagerSharedServices jobManagerSharedServices,
        HeartbeatServices heartbeatServices,
        JobManagerJobMetricGroupFactory jobMetricGroupFactory,
        OnCompletionActions jobCompletionActions,
        FatalErrorHandler fatalErrorHandler,
        ClassLoader userCodeLoader,
        ShuffleMaster<?> shuffleMaster,
        PartitionTrackerFactory partitionTrackerFactory,
        ExecutionDeploymentTracker executionDeploymentTracker,
        ExecutionDeploymentReconciler.Factory executionDeploymentReconcilerFactory,
        long initializationTimestamp)
        throws Exception {

    // TODO start the RPC service
    super(rpcService, AkkaRpcServiceUtils.createRandomName(JOB_MANAGER_NAME), jobMasterId);

    final ExecutionDeploymentReconciliationHandler executionStateReconciliationHandler =
            new ExecutionDeploymentReconciliationHandler() {

                @Override
                public void onMissingDeploymentsOf(
                        Collection<ExecutionAttemptID> executionAttemptIds, ResourceID host) {
                    log.debug(
                            "Failing deployments {} due to no longer being deployed.",
                            executionAttemptIds);
                    for (ExecutionAttemptID executionAttemptId : executionAttemptIds) {
                        schedulerNG.updateTaskExecutionState(
                                new TaskExecutionState(
                                        executionAttemptId,
                                        ExecutionState.FAILED,
                                        new FlinkException(
                                                String.format(
                                                        "Execution %s is unexpectedly no longer running on task executor %s.",
                                                        executionAttemptId, host))));
                    }
                }

                @Override
                public void onUnknownDeploymentsOf(
                        Collection<ExecutionAttemptID> executionAttemptIds, ResourceID host) {
                    log.debug(
                            "Canceling left-over deployments {} on task executor {}.",
                            executionAttemptIds,
                            host);
                    for (ExecutionAttemptID executionAttemptId : executionAttemptIds) {
                        Tuple2<TaskManagerLocation, TaskExecutorGateway> taskManagerInfo =
                                registeredTaskManagers.get(host);
                        if (taskManagerInfo != null) {
                            taskManagerInfo.f1.cancelTask(executionAttemptId, rpcTimeout);
                        }
                    }
                }
            };

    this.executionDeploymentTracker = executionDeploymentTracker;
    this.executionDeploymentReconciler =
            executionDeploymentReconcilerFactory.create(executionStateReconciliationHandler);

    this.jobMasterConfiguration = checkNotNull(jobMasterConfiguration);
    this.resourceId = checkNotNull(resourceId);
    // TODO store the JobGraph in the JobMaster
    this.jobGraph = checkNotNull(jobGraph);
    this.rpcTimeout = jobMasterConfiguration.getRpcTimeout();
    this.highAvailabilityServices = checkNotNull(highAvailabilityService);
    this.blobWriter = jobManagerSharedServices.getBlobWriter();
    this.scheduledExecutorService = jobManagerSharedServices.getScheduledExecutorService();
    this.jobCompletionActions = checkNotNull(jobCompletionActions);
    this.fatalErrorHandler = checkNotNull(fatalErrorHandler);
    this.userCodeLoader = checkNotNull(userCodeLoader);
    this.initializationTimestamp = initializationTimestamp;
    this.retrieveTaskManagerHostName =
            jobMasterConfiguration
                    .getConfiguration()
                    .getBoolean(JobManagerOptions.RETRIEVE_TASK_MANAGER_HOSTNAME);

    final String jobName = jobGraph.getName();
    final JobID jid = jobGraph.getJobID();

    log.info("Initializing job {} ({}).", jobName, jid);

    // TODO retriever for the ResourceManager leader address
    resourceManagerLeaderRetriever =
            highAvailabilityServices.getResourceManagerLeaderRetriever();

    // TODO create the SlotPoolService, which manages this job's slots (requesting, releasing, etc.)
    this.slotPoolService =
            checkNotNull(slotPoolServiceSchedulerFactory).createSlotPoolService(jid);

    this.registeredTaskManagers = new HashMap<>(4);
    this.partitionTracker =
            checkNotNull(partitionTrackerFactory)
                    .create(
                            resourceID -> {
                                Tuple2<TaskManagerLocation, TaskExecutorGateway>
                                        taskManagerInfo =
                                                registeredTaskManagers.get(resourceID);
                                if (taskManagerInfo == null) {
                                    return Optional.empty();
                                }

                                return Optional.of(taskManagerInfo.f1);
                            });

    this.shuffleMaster = checkNotNull(shuffleMaster);

    this.jobManagerJobMetricGroup = jobMetricGroupFactory.create(jobGraph);
    this.jobStatusListener = new JobManagerJobStatusListener();
    // TODO internally converts the JobGraph into an ExecutionGraph
    this.schedulerNG =
            createScheduler(
                    slotPoolServiceSchedulerFactory,
                    executionDeploymentTracker,
                    jobManagerJobMetricGroup,
                    jobStatusListener);

    this.heartbeatServices = checkNotNull(heartbeatServices);
    this.taskManagerHeartbeatManager = NoOpHeartbeatManager.getInstance();
    this.resourceManagerHeartbeatManager = NoOpHeartbeatManager.getInstance();

    this.resourceManagerConnection = null;
    this.establishedResourceManagerConnection = null;

    this.accumulators = new HashMap<>();
}
```
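The constructor above only wires up fields and builds the scheduler. As the TODO in internalCreateJobMasterService notes, the actual startup work runs later in onStart, triggered by jobMaster.start(). The sketch below shows that template-method shape using hypothetical classes, not Flink's RpcEndpoint. In Flink, start() goes through the RPC service and onStart is invoked asynchronously in the endpoint's main thread. For simplicity, this sketch calls it synchronously.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical base class mimicking the "start() calls back onStart()" pattern.
abstract class SketchEndpoint {
    private boolean started;
    final List<String> events = new ArrayList<>();

    // Entry point used by the owner; invokes the subclass hook exactly once.
    final void start() {
        if (started) {
            throw new IllegalStateException("already started");
        }
        started = true;
        onStart();
    }

    protected abstract void onStart();
}

class SketchJobMaster extends SketchEndpoint {
    SketchJobMaster() {
        // Construction only wires state (as JobMaster does when it builds its scheduler).
        events.add("constructed");
    }

    @Override
    protected void onStart() {
        // Startup work (connecting to the ResourceManager, starting scheduling, ...) would go here.
        events.add("onStart");
    }
}
```

This separation is why the JobGraph-to-ExecutionGraph conversion already happens during construction, while the job itself only begins to run after start().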

The code is long, and most of it concerns JobMaster startup, which we will analyze in the next chapter. This chapter focuses on how the ExecutionGraph is built, and that happens here:

```java
// TODO internally converts the JobGraph into an ExecutionGraph
this.schedulerNG =
        createScheduler(
                slotPoolServiceSchedulerFactory,
                executionDeploymentTracker,
                jobManagerJobMetricGroup,
                jobStatusListener);
```

Let's step into the createScheduler method:

```java
private SchedulerNG createScheduler(
        SlotPoolServiceSchedulerFactory slotPoolServiceSchedulerFactory,
        ExecutionDeploymentTracker executionDeploymentTracker,
        JobManagerJobMetricGroup jobManagerJobMetricGroup,
        JobStatusListener jobStatusListener)
        throws Exception {

    final SchedulerNG scheduler =
            slotPoolServiceSchedulerFactory.createScheduler(
                    log,
                    jobGraph,
                    scheduledExecutorService,
                    jobMasterConfiguration.getConfiguration(),
                    slotPoolService,
                    scheduledExecutorService,
                    userCodeLoader,
                    highAvailabilityServices.getCheckpointRecoveryFactory(),
                    rpcTimeout,
                    blobWriter,
                    jobManagerJobMetricGroup,
                    jobMasterConfiguration.getSlotRequestTimeout(),
                    shuffleMaster,
                    partitionTracker,
                    executionDeploymentTracker,
                    initializationTimestamp,
                    getMainThreadExecutor(),
                    fatalErrorHandler,
                    jobStatusListener);

    return scheduler;
}
```

Next, step into slotPoolServiceSchedulerFactory.createScheduler:

```java
public SchedulerNG createScheduler(
        Logger log,
        JobGraph jobGraph,
        ScheduledExecutorService scheduledExecutorService,
        Configuration configuration,
        SlotPoolService slotPoolService,
        ScheduledExecutorService executorService,
        ClassLoader userCodeLoader,
        CheckpointRecoveryFactory checkpointRecoveryFactory,
        Time rpcTimeout,
        BlobWriter blobWriter,
        JobManagerJobMetricGroup jobManagerJobMetricGroup,
        Time slotRequestTimeout,
        ShuffleMaster<?> shuffleMaster,
        JobMasterPartitionTracker partitionTracker,
        ExecutionDeploymentTracker executionDeploymentTracker,
        long initializationTimestamp,
        ComponentMainThreadExecutor mainThreadExecutor,
        FatalErrorHandler fatalErrorHandler,
        JobStatusListener jobStatusListener)
        throws Exception {

    return schedulerNGFactory.createInstance(
            log,
            jobGraph,
            scheduledExecutorService,
            configuration,
            slotPoolService,
            executorService,
            userCodeLoader,
            checkpointRecoveryFactory,
            rpcTimeout,
            blobWriter,
            jobManagerJobMetricGroup,
            slotRequestTimeout,
            shuffleMaster,
            partitionTracker,
            executionDeploymentTracker,
            initializationTimestamp,
            mainThreadExecutor,
            fatalErrorHandler,
            jobStatusListener);
}
```
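Neither createScheduler method adds logic of its own. Each forwards the same argument list one level down until a concrete SchedulerNGFactory builds the scheduler. The hedged sketch below shows that delegation shape. All names are hypothetical, and the string-based selection is an assumption that stands in for however the configured factory is actually chosen. Flink 1.13 ships an adaptive scheduler in addition to the default one.

```java
// Hypothetical interfaces mirroring the delegation chain; not Flink's real signatures.
interface SketchScheduler {
    String describe();
}

interface SketchSchedulerFactory {
    SketchScheduler createInstance(String jobName);
}

class SketchDefaultSchedulerFactory implements SketchSchedulerFactory {
    @Override
    public SketchScheduler createInstance(String jobName) {
        return () -> "default scheduler for " + jobName;
    }
}

class SketchAdaptiveSchedulerFactory implements SketchSchedulerFactory {
    @Override
    public SketchScheduler createInstance(String jobName) {
        return () -> "adaptive scheduler for " + jobName;
    }
}

// Outer factory: holds the chosen inner factory and only forwards calls to it.
class SketchSlotPoolServiceSchedulerFactory {
    private final SketchSchedulerFactory schedulerNGFactory;

    SketchSlotPoolServiceSchedulerFactory(SketchSchedulerFactory schedulerNGFactory) {
        this.schedulerNGFactory = schedulerNGFactory;
    }

    // Assumed selection rule, for illustration only.
    static SketchSlotPoolServiceSchedulerFactory fromSchedulerType(String schedulerType) {
        SketchSchedulerFactory inner =
                "adaptive".equalsIgnoreCase(schedulerType)
                        ? new SketchAdaptiveSchedulerFactory()
                        : new SketchDefaultSchedulerFactory();
        return new SketchSlotPoolServiceSchedulerFactory(inner);
    }

    SketchScheduler createScheduler(String jobName) {
        return schedulerNGFactory.createInstance(jobName);
    }
}
```

Keeping the choice of scheduler inside the outer factory lets JobMaster stay unaware of which scheduler implementation it is talking to.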

Then step into schedulerNGFactory.createInstance, choose the DefaultSchedulerFactory implementation, and look at the method's return statement:

```java
// TODO return a DefaultScheduler; it keeps the JobGraph internally and also builds the ExecutionGraph
return new DefaultScheduler(
        log,
        jobGraph,
        ioExecutor,
        jobMasterConfiguration,
        schedulerComponents.getStartUpAction(),
        new ScheduledExecutorServiceAdapter(futureExecutor),
        userCodeLoader,
        checkpointRecoveryFactory,
        jobManagerJobMetricGroup,
        schedulerComponents.getSchedulingStrategyFactory(),
        FailoverStrategyFactoryLoader.loadFailoverStrategyFactory(jobMasterConfiguration),
        restartBackoffTimeStrategy,
        new DefaultExecutionVertexOperations(),
        new ExecutionVertexVersioner(),
        schedulerComponents.getAllocatorFactory(),
        initializationTimestamp,
        mainThreadExecutor,
        jobStatusListener,
        executionGraphFactory);
```

Next, look at the DefaultScheduler constructor and follow it into the constructor of its parent class, SchedulerBase:

```java
public SchedulerBase(
        final Logger log,
        final JobGraph jobGraph,
        final Executor ioExecutor,
        final Configuration jobMasterConfiguration,
        final ClassLoader userCodeLoader,
        final CheckpointRecoveryFactory checkpointRecoveryFactory,
        final JobManagerJobMetricGroup jobManagerJobMetricGroup,
        final ExecutionVertexVersioner executionVertexVersioner,
        long initializationTimestamp,
        final ComponentMainThreadExecutor mainThreadExecutor,
        final JobStatusListener jobStatusListener,
        final ExecutionGraphFactory executionGraphFactory)
        throws Exception {

    this.log = checkNotNull(log);
    this.jobGraph = checkNotNull(jobGraph);
    this.executionGraphFactory = executionGraphFactory;

    this.jobManagerJobMetricGroup = checkNotNull(jobManagerJobMetricGroup);
    this.executionVertexVersioner = checkNotNull(executionVertexVersioner);
    this.mainThreadExecutor = mainThreadExecutor;

    this.checkpointsCleaner = new CheckpointsCleaner();
    this.completedCheckpointStore =
            SchedulerUtils.createCompletedCheckpointStoreIfCheckpointingIsEnabled(
                    jobGraph,
                    jobMasterConfiguration,
                    userCodeLoader,
                    checkNotNull(checkpointRecoveryFactory),
                    log);
    this.checkpointIdCounter =
            SchedulerUtils.createCheckpointIDCounterIfCheckpointingIsEnabled(
                    jobGraph, checkNotNull(checkpointRecoveryFactory));

    // TODO convert the JobGraph into an ExecutionGraph
    // TODO the JobGraph is not passed as an argument because it is already a member field of this instance
    this.executionGraph =
            createAndRestoreExecutionGraph(
                    completedCheckpointStore,
                    checkpointsCleaner,
                    checkpointIdCounter,
                    initializationTimestamp,
                    mainThreadExecutor,
                    jobStatusListener);

    registerShutDownCheckpointServicesOnExecutionGraphTermination(executionGraph);

    this.schedulingTopology = executionGraph.getSchedulingTopology();

    stateLocationRetriever =
            executionVertexId ->
                    getExecutionVertex(executionVertexId).getPreferredLocationBasedOnState();
    inputsLocationsRetriever =
            new ExecutionGraphToInputsLocationsRetrieverAdapter(executionGraph);

    this.kvStateHandler = new KvStateHandler(executionGraph);
    this.executionGraphHandler =
            new ExecutionGraphHandler(executionGraph, log, ioExecutor, this.mainThreadExecutor);

    this.operatorCoordinatorHandler =
            new DefaultOperatorCoordinatorHandler(executionGraph, this::handleGlobalFailure);
    operatorCoordinatorHandler.initializeOperatorCoordinators(this.mainThreadExecutor);

    this.exceptionHistory =
            new BoundedFIFOQueue<>(
                    jobMasterConfiguration.getInteger(WebOptions.MAX_EXCEPTION_HISTORY_SIZE));
}
```

Let's step into the following call:

```java
// TODO convert the JobGraph into an ExecutionGraph
// TODO the JobGraph is not passed as an argument because it is already a member field of this instance
this.executionGraph =
        createAndRestoreExecutionGraph(
                completedCheckpointStore,
                checkpointsCleaner,
                checkpointIdCounter,
                initializationTimestamp,
                mainThreadExecutor,
                jobStatusListener);
```

This leads to the following method:

```java
private ExecutionGraph createAndRestoreExecutionGraph(
        CompletedCheckpointStore completedCheckpointStore,
        CheckpointsCleaner checkpointsCleaner,
        CheckpointIDCounter checkpointIdCounter,
        long initializationTimestamp,
        ComponentMainThreadExecutor mainThreadExecutor,
        JobStatusListener jobStatusListener)
        throws Exception {

    // TODO create or restore the ExecutionGraph
    final ExecutionGraph newExecutionGraph =
            executionGraphFactory.createAndRestoreExecutionGraph(
                    jobGraph,
                    completedCheckpointStore,
                    checkpointsCleaner,
                    checkpointIdCounter,
                    TaskDeploymentDescriptorFactory.PartitionLocationConstraint.fromJobType(
                            jobGraph.getJobType()),
                    initializationTimestamp,
                    new DefaultVertexAttemptNumberStore(),
                    computeVertexParallelismStore(jobGraph),
                    log);

    newExecutionGraph.setInternalTaskFailuresListener(
            new UpdateSchedulerNgOnInternalFailuresListener(this));
    newExecutionGraph.registerJobStatusListener(jobStatusListener);
    newExecutionGraph.start(mainThreadExecutor);

    return newExecutionGraph;
}
```

Then step into executionGraphFactory.createAndRestoreExecutionGraph:

```java
public ExecutionGraph createAndRestoreExecutionGraph(
        JobGraph jobGraph,
        CompletedCheckpointStore completedCheckpointStore,
        CheckpointsCleaner checkpointsCleaner,
        CheckpointIDCounter checkpointIdCounter,
        TaskDeploymentDescriptorFactory.PartitionLocationConstraint partitionLocationConstraint,
        long initializationTimestamp,
        VertexAttemptNumberStore vertexAttemptNumberStore,
        VertexParallelismStore vertexParallelismStore,
        Logger log)
        throws Exception {

    ExecutionDeploymentListener executionDeploymentListener =
            new ExecutionDeploymentTrackerDeploymentListenerAdapter(executionDeploymentTracker);
    ExecutionStateUpdateListener executionStateUpdateListener =
            (execution, newState) -> {
                if (newState.isTerminal()) {
                    executionDeploymentTracker.stopTrackingDeploymentOf(execution);
                }
            };

    // TODO convert the JobGraph into an ExecutionGraph
    final ExecutionGraph newExecutionGraph =
            DefaultExecutionGraphBuilder.buildGraph(
                    jobGraph,
                    configuration,
                    futureExecutor,
                    ioExecutor,
                    userCodeClassLoader,
                    completedCheckpointStore,
                    checkpointsCleaner,
                    checkpointIdCounter,
                    rpcTimeout,
                    jobManagerJobMetricGroup,
                    blobWriter,
                    log,
                    shuffleMaster,
                    jobMasterPartitionTracker,
                    partitionLocationConstraint,
                    executionDeploymentListener,
                    executionStateUpdateListener,
                    initializationTimestamp,
                    vertexAttemptNumberStore,
                    vertexParallelismStore);

    final CheckpointCoordinator checkpointCoordinator =
            newExecutionGraph.getCheckpointCoordinator();

    // TODO restore the ExecutionGraph (from the latest checkpoint, otherwise from a savepoint)
    if (checkpointCoordinator != null) {
        // check whether we find a valid checkpoint
        if (!checkpointCoordinator.restoreInitialCheckpointIfPresent(
                new HashSet<>(newExecutionGraph.getAllVertices().values()))) {

            // check whether we can restore from a savepoint
            tryRestoreExecutionGraphFromSavepoint(
                    newExecutionGraph, jobGraph.getSavepointRestoreSettings());
        }
    }

    return newExecutionGraph;
}
```
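The restore branch at the end of this method follows a fixed order. Restoring is attempted only if checkpointing is enabled, that is, only if a CheckpointCoordinator exists. The latest completed checkpoint is tried first. The savepoint from the job's SavepointRestoreSettings is used only when no checkpoint is found. The hypothetical sketch below models just that decision. The enum and method names are invented.

```java
import java.util.Optional;

// Hypothetical model of the restore order at the end of createAndRestoreExecutionGraph.
class RestoreOrderSketch {
    enum Source { LATEST_CHECKPOINT, SAVEPOINT, NONE }

    static Source chooseRestoreSource(
            boolean hasCheckpointCoordinator,
            Optional<Long> latestCompletedCheckpointId,
            Optional<String> savepointPath) {
        if (!hasCheckpointCoordinator) {
            // Checkpointing disabled: nothing is restored at all.
            return Source.NONE;
        }
        if (latestCompletedCheckpointId.isPresent()) {
            // Corresponds to restoreInitialCheckpointIfPresent(...) returning true.
            return Source.LATEST_CHECKPOINT;
        }
        // Otherwise fall back to the savepoint from the restore settings, if one is configured.
        return savepointPath.isPresent() ? Source.SAVEPOINT : Source.NONE;
    }
}
```

One consequence of this order is that a checkpoint taken after a job was started from a savepoint wins over that savepoint on a later restart.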

We have finally reached the code we are after.

2.1 Initializing the ExecutionGraph Shell

Now step into DefaultExecutionGraphBuilder.buildGraph:

```java
public static DefaultExecutionGraph buildGraph(
        JobGraph jobGraph,
        Configuration jobManagerConfig,
        ScheduledExecutorService futureExecutor,
        Executor ioExecutor,
        ClassLoader classLoader,
        CompletedCheckpointStore completedCheckpointStore,
        CheckpointsCleaner checkpointsCleaner,
        CheckpointIDCounter checkpointIdCounter,
        Time rpcTimeout,
        MetricGroup metrics,
        BlobWriter blobWriter,
        Logger log,
        ShuffleMaster<?> shuffleMaster,
        JobMasterPartitionTracker partitionTracker,
        TaskDeploymentDescriptorFactory.PartitionLocationConstraint partitionLocationConstraint,
        ExecutionDeploymentListener executionDeploymentListener,
        ExecutionStateUpdateListener executionStateUpdateListener,
        long initializationTimestamp,
        VertexAttemptNumberStore vertexAttemptNumberStore,
        VertexParallelismStore vertexParallelismStore)
        throws JobExecutionException, JobException {

    checkNotNull(jobGraph, "job graph cannot be null");

    final String jobName = jobGraph.getName();
    final JobID jobId = jobGraph.getJobID();

    final JobInformation jobInformation =
            new JobInformation(
                    jobId,
                    jobName,
                    jobGraph.getSerializedExecutionConfig(),
                    jobGraph.getJobConfiguration(),
                    jobGraph.getUserJarBlobKeys(),
                    jobGraph.getClasspaths());

    final int maxPriorAttemptsHistoryLength =
            jobManagerConfig.getInteger(JobManagerOptions.MAX_ATTEMPTS_HISTORY_SIZE);

    final PartitionReleaseStrategy.Factory partitionReleaseStrategyFactory =
            PartitionReleaseStrategyFactoryLoader.loadPartitionReleaseStrategyFactory(
                    jobManagerConfig);

    // create a new execution graph, if none exists so far
    final DefaultExecutionGraph executionGraph;
    try {
        // TODO build the empty ExecutionGraph shell
        executionGraph =
                new DefaultExecutionGraph(
                        jobInformation,
                        futureExecutor,
                        ioExecutor,
                        rpcTimeout,
                        maxPriorAttemptsHistoryLength,
                        classLoader,
                        blobWriter,
                        partitionReleaseStrategyFactory,
                        shuffleMaster,
                        partitionTracker,
                        partitionLocationConstraint,
                        executionDeploymentListener,
                        executionStateUpdateListener,
                        initializationTimestamp,
                        vertexAttemptNumberStore,
                        vertexParallelismStore);
    } catch (IOException e) {
        throw new JobException("Could not create the ExecutionGraph.", e);
    }

    // set the basic properties

    try {
        // TODO convert the JobGraph into a JSON plan
        executionGraph.setJsonPlan(JsonPlanGenerator.generatePlan(jobGraph));
    } catch (Throwable t) {
        log.warn("Cannot create JSON plan for job", t);
        // give the graph an empty plan
        executionGraph.setJsonPlan("{}");
    }

    // initialize the vertices that have a master initialization hook
    // file output formats create directories here, input formats create splits

    final long initMasterStart = System.nanoTime();
    log.info("Running initialization on master for job {} ({}).", jobName, jobId);

    // TODO iterate over all vertices of the JobGraph and check that each has an invokable class
    for (JobVertex vertex : jobGraph.getVertices()) {
        String executableClass = vertex.getInvokableClassName();
        if (executableClass == null || executableClass.isEmpty()) {
            // TODO throw an exception if it does not
            throw new JobSubmissionException(
                    jobId,
                    "The vertex "
                            + vertex.getID()
                            + " ("
                            + vertex.getName()
                            + ") has no invokable class.");
        }

        try {
            vertex.initializeOnMaster(classLoader);
        } catch (Throwable t) {
            throw new JobExecutionException(
                    jobId,
                    "Cannot initialize task '" + vertex.getName() + "': " + t.getMessage(),
                    t);
        }
    }

    log.info(
            "Successfully ran initialization on master in {} ms.",
            (System.nanoTime() - initMasterStart) / 1_000_000);

    // topologically sort the job vertices and attach the graph to the existing one
    // TODO collect the JobGraph's vertices into a list in topological order, sources first
    List<JobVertex> sortedTopology = jobGraph.getVerticesSortedTopologicallyFromSources();
    if (log.isDebugEnabled()) {
        log.debug(
                "Adding {} vertices from job graph {} ({}).",
                sortedTopology.size(),
                jobName,
                jobId);
    }
    // TODO the most important step: create the ExecutionJobVertices and parallelize them,
    //  generating one ExecutionVertex per parallel subtask
    executionGraph.attachJobGraph(sortedTopology);

    if (log.isDebugEnabled()) {
        log.debug(
                "Successfully created execution graph from job graph {} ({}).", jobName, jobId);
    }

    // TODO parse the checkpoint settings and build the checkpoint-related components
    // configure the state checkpointing
    if (isCheckpointingEnabled(jobGraph)) {
        JobCheckpointingSettings snapshotSettings = jobGraph.getCheckpointingSettings();

        // Maximum number of remembered checkpoints
        int historySize = jobManagerConfig.getInteger(WebOptions.CHECKPOINTS_HISTORY_SIZE);

        CheckpointStatsTracker checkpointStatsTracker =
                new CheckpointStatsTracker(
                        historySize,
                        snapshotSettings.getCheckpointCoordinatorConfiguration(),
                        metrics);

        // load the state backend from the application settings
        final StateBackend applicationConfiguredBackend;
        final SerializedValue<StateBackend> serializedAppConfigured =
                snapshotSettings.getDefaultStateBackend();

        if (serializedAppConfigured == null) {
            applicationConfiguredBackend = null;
        } else {
            try {
                applicationConfiguredBackend =
                        serializedAppConfigured.deserializeValue(classLoader);
            } catch (IOException | ClassNotFoundException e) {
                throw new JobExecutionException(
                        jobId, "Could not deserialize application-defined state backend.", e);
            }
        }

        final StateBackend rootBackend;
        try {
            rootBackend =
                    StateBackendLoader.fromApplicationOrConfigOrDefault(
                            applicationConfiguredBackend, jobManagerConfig, classLoader, log);
        } catch (IllegalConfigurationException | IOException | DynamicCodeLoadingException e) {
            throw new JobExecutionException(
                    jobId, "Could not instantiate configured state backend", e);
        }

        // load the checkpoint storage from the application settings
        final CheckpointStorage applicationConfiguredStorage;
        final SerializedValue<CheckpointStorage> serializedAppConfiguredStorage =
                snapshotSettings.getDefaultCheckpointStorage();

        if (serializedAppConfiguredStorage == null) {
            applicationConfiguredStorage = null;
        } else {
            try {
                applicationConfiguredStorage =
                        serializedAppConfiguredStorage.deserializeValue(classLoader);
            } catch (IOException | ClassNotFoundException e) {
                throw new JobExecutionException(
                        jobId,
                        "Could not deserialize application-defined checkpoint storage.",
                        e);
            }
        }

        final CheckpointStorage rootStorage;
        try {
            rootStorage =
                    CheckpointStorageLoader.load(
                            applicationConfiguredStorage,
                            null,
                            rootBackend,
                            jobManagerConfig,
                            classLoader,
                            log);
        } catch (IllegalConfigurationException | DynamicCodeLoadingException e) {
            throw new JobExecutionException(
                    jobId, "Could not instantiate configured checkpoint storage", e);
        }

        // instantiate the user-defined checkpoint hooks
        final SerializedValue<MasterTriggerRestoreHook.Factory[]> serializedHooks =
                snapshotSettings.getMasterHooks();
        final List<MasterTriggerRestoreHook<?>> hooks;

        if (serializedHooks == null) {
            hooks = Collections.emptyList();
        } else {
            final MasterTriggerRestoreHook.Factory[] hookFactories;
            try {
                hookFactories = serializedHooks.deserializeValue(classLoader);
            } catch (IOException | ClassNotFoundException e) {
                throw new JobExecutionException(
                        jobId, "Could not instantiate user-defined checkpoint hooks", e);
            }

            final Thread thread = Thread.currentThread();
            final ClassLoader originalClassLoader = thread.getContextClassLoader();
            thread.setContextClassLoader(classLoader);

            try {
                hooks = new ArrayList<>(hookFactories.length);
                for (MasterTriggerRestoreHook.Factory factory : hookFactories) {
                    hooks.add(MasterHooks.wrapHook(factory.create(), classLoader));
                }
            } finally {
                thread.setContextClassLoader(originalClassLoader);
            }
        }

        final CheckpointCoordinatorConfiguration chkConfig =
                snapshotSettings.getCheckpointCoordinatorConfiguration();

        executionGraph.enableCheckpointing(
                chkConfig,
                hooks,
                checkpointIdCounter,
                completedCheckpointStore,
                rootBackend,
                rootStorage,
                checkpointStatsTracker,
                checkpointsCleaner);
    }

    // create all the metrics for the Execution Graph

    metrics.gauge(RestartTimeGauge.METRIC_NAME, new RestartTimeGauge(executionGraph));
    metrics.gauge(DownTimeGauge.METRIC_NAME, new DownTimeGauge(executionGraph));
    metrics.gauge(UpTimeGauge.METRIC_NAME, new UpTimeGauge(executionGraph));

    return executionGraph;
}
```

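The code is long. It mainly does the following:

1. Obtains job-related information from the JobGraph

2. Initializes an empty ExecutionGraph shell

3. Converts the JobGraph into a JSON plan

4. Checks that every vertex in the JobGraph has an invokable class and runs its master-side initialization (initializeOnMaster)

5. Collects the JobGraph's vertices into a list in topological order

6. Generates ExecutionVertices from each JobVertex according to its parallelism

7. Parses the checkpoint settings and builds the checkpoint-related components

8. Registers monitoring metrics for the ExecutionGraph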
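Step 5 relies on jobGraph.getVerticesSortedTopologicallyFromSources(). This guarantees that a vertex appears only after every vertex it consumes from. attachJobGraph depends on that ordering, because each ExecutionJobVertex looks up its inputs among the IntermediateResults registered so far. The sketch below uses Kahn's algorithm over hypothetical string vertex IDs to show what such an ordering is. It is a standard technique chosen for illustration, not a copy of Flink's implementation. This sketch rejects cyclic graphs by throwing.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Deque;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Kahn's algorithm: repeatedly emit vertices whose inputs have all been emitted already.
class TopoSort {
    static List<String> sortFromSources(List<String> vertices, Map<String, List<String>> downstream) {
        // In-degree = number of upstream vertices not yet emitted.
        Map<String, Integer> inDegree = new LinkedHashMap<>();
        for (String v : vertices) {
            inDegree.put(v, 0);
        }
        for (List<String> targets : downstream.values()) {
            for (String t : targets) {
                inDegree.merge(t, 1, Integer::sum);
            }
        }
        // Sources (in-degree 0) are ready first.
        Deque<String> ready = new ArrayDeque<>();
        for (Map.Entry<String, Integer> e : inDegree.entrySet()) {
            if (e.getValue() == 0) {
                ready.add(e.getKey());
            }
        }
        List<String> sorted = new ArrayList<>();
        while (!ready.isEmpty()) {
            String v = ready.poll();
            sorted.add(v);
            for (String t : downstream.getOrDefault(v, Collections.emptyList())) {
                // A consumer becomes ready once its last producer has been emitted.
                if (inDegree.merge(t, -1, Integer::sum) == 0) {
                    ready.add(t);
                }
            }
        }
        if (sorted.size() != inDegree.size()) {
            throw new IllegalArgumentException("graph is cyclic");
        }
        return sorted;
    }
}
```

For a diamond A → {B, C} → D, the result is `[A, B, C, D]`: D is emitted only after both B and C.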

Of these steps, the core one is converting the JobGraph's JobVertices into ExecutionVertices. Let's step into the following code:

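```java
// TODO the most important step: create the ExecutionJobVertices and parallelize them,
//  generating one ExecutionVertex per parallel subtask
executionGraph.attachJobGraph(sortedTopology);
```

Inside it we find: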

```java
public void attachJobGraph(List<JobVertex> topologiallySorted) throws JobException {

    assertRunningInJobMasterMainThread();

    LOG.debug(
            "Attaching {} topologically sorted vertices to existing job graph with {} "
                    + "vertices and {} intermediate results.",
            topologiallySorted.size(),
            tasks.size(),
            intermediateResults.size());

    final ArrayList<ExecutionJobVertex> newExecJobVertices =
            new ArrayList<>(topologiallySorted.size());
    final long createTimestamp = System.currentTimeMillis();

    // TODO iterate over the JobGraph's vertices
    for (JobVertex jobVertex : topologiallySorted) {

        if (jobVertex.isInputVertex() && !jobVertex.isStoppable()) {
            this.isStoppable = false;
        }

        VertexParallelismInformation parallelismInfo =
                parallelismStore.getParallelismInfo(jobVertex.getID());

        // TODO create one ExecutionJobVertex for each JobVertex
        // create the execution job vertex and attach it to the graph
        ExecutionJobVertex ejv =
                new ExecutionJobVertex(
                        this,
                        jobVertex,
                        maxPriorAttemptsHistoryLength,
                        rpcTimeout,
                        createTimestamp,
                        parallelismInfo,
                        initialAttemptCounts.getAttemptCounts(jobVertex.getID()));

        // TODO In Flink 1.13 the ExecutionEdge concept is optimized away and replaced by
        //  ConsumedPartitionGroup and ConsumedVertexGroup; this call builds them.
        //  For details see https://flink.apache.org/2022/01/04/scheduler-performance-part-two.html
        ejv.connectToPredecessors(this.intermediateResults);

        ExecutionJobVertex previousTask = this.tasks.putIfAbsent(jobVertex.getID(), ejv);
        if (previousTask != null) {
            throw new JobException(
                    String.format(
                            "Encountered two job vertices with ID %s : previous=[%s] / new=[%s]",
                            jobVertex.getID(), ejv, previousTask));
        }

        for (IntermediateResult res : ejv.getProducedDataSets()) {
            IntermediateResult previousDataSet =
                    this.intermediateResults.putIfAbsent(res.getId(), res);
            if (previousDataSet != null) {
                throw new JobException(
                        String.format(
                                "Encountered two intermediate data set with ID %s : previous=[%s] / new=[%s]",
                                res.getId(), res, previousDataSet));
            }
        }

        this.verticesInCreationOrder.add(ejv);
        this.numVerticesTotal += ejv.getParallelism();
        newExecJobVertices.add(ejv);
    }

    registerExecutionVerticesAndResultPartitions(this.verticesInCreationOrder);

    // the topology assigning should happen before notifying new vertices to failoverStrategy
    executionTopology = DefaultExecutionTopology.fromExecutionGraph(this);

    partitionReleaseStrategy =
            partitionReleaseStrategyFactory.createInstance(getSchedulingTopology());
}
```

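This code does the following:

1. Iterates over all JobVertices of the JobGraph in topological order

2. Creates one ExecutionJobVertex for each JobVertex

3. Connects each ExecutionJobVertex to its predecessors, building the ConsumedPartitionGroups and ConsumedVertexGroups, and registers the IntermediateResults it produces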
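The bookkeeping in this loop can be modeled in isolation. The hypothetical sketch below keeps two insertion-ordered maps, one of vertices and one of produced results. It uses `putIfAbsent` the same way attachJobGraph does: a non-null return value means a duplicate ID. It also mimics the requirement behind connectToPredecessors that every consumed result is already registered, which only holds when vertices arrive in topological order. The names are invented, and the exception types differ from Flink's JobException.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified model of the registration loop in attachJobGraph (not Flink code).
class AttachJobGraphSketch {
    static final class SketchJobVertex {
        final String id;
        final int parallelism;
        final List<String> consumedResultIds;
        final List<String> producedResultIds;

        SketchJobVertex(String id, int parallelism, List<String> consumed, List<String> produced) {
            this.id = id;
            this.parallelism = parallelism;
            this.consumedResultIds = consumed;
            this.producedResultIds = produced;
        }
    }

    final Map<String, SketchJobVertex> tasks = new LinkedHashMap<>();
    // result id -> id of the vertex that produces it
    final Map<String, String> intermediateResults = new LinkedHashMap<>();
    int numVerticesTotal;

    void attach(List<SketchJobVertex> topologicallySorted) {
        for (SketchJobVertex v : topologicallySorted) {
            // Like connectToPredecessors: inputs must already be registered.
            for (String input : v.consumedResultIds) {
                if (!intermediateResults.containsKey(input)) {
                    throw new IllegalStateException("No intermediate result " + input + " for " + v.id);
                }
            }
            // putIfAbsent returns the previous mapping, so non-null means a duplicate ID.
            if (tasks.putIfAbsent(v.id, v) != null) {
                throw new IllegalStateException("Encountered two job vertices with ID " + v.id);
            }
            for (String result : v.producedResultIds) {
                if (intermediateResults.putIfAbsent(result, v.id) != null) {
                    throw new IllegalStateException("Encountered two intermediate data sets with ID " + result);
                }
            }
            // Total number of ExecutionVertices = sum of all parallelisms.
            numVerticesTotal += v.parallelism;
        }
    }
}
```

For a source with parallelism 2 feeding a map with parallelism 3, `numVerticesTotal` ends up as 5. Passing the map before the source fails, because its input result is not registered yet.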

2.2 Building the ExecutionVertex

Let's first look at how the ExecutionVertices are built, starting from the ExecutionJobVertex constructor:

```java
@VisibleForTesting
public ExecutionJobVertex(
        InternalExecutionGraphAccessor graph,
        JobVertex jobVertex,
        int maxPriorAttemptsHistoryLength,
        Time timeout,
        long createTimestamp,
        VertexParallelismInformation parallelismInfo,
        SubtaskAttemptNumberStore initialAttemptCounts)
        throws JobException {

    if (graph == null || jobVertex == null) {
        throw new NullPointerException();
    }

    this.graph = graph;
    this.jobVertex = jobVertex;

    // TODO get the parallelism information
    this.parallelismInfo = parallelismInfo;

    // verify that our parallelism is not higher than the maximum parallelism
    if (this.parallelismInfo.getParallelism() > this.parallelismInfo.getMaxParallelism()) {
        throw new JobException(
                String.format(
                        "Vertex %s's parallelism (%s) is higher than the max parallelism (%s). Please lower the parallelism or increase the max parallelism.",
                        jobVertex.getName(),
                        this.parallelismInfo.getParallelism(),
                        this.parallelismInfo.getMaxParallelism()));
    }

    this.resourceProfile =
            ResourceProfile.fromResourceSpec(jobVertex.getMinResources(), MemorySize.ZERO);

    this.taskVertices = new ExecutionVertex[this.parallelismInfo.getParallelism()];

    this.inputs = new ArrayList<>(jobVertex.getInputs().size());

    // take the sharing group
    this.slotSharingGroup = checkNotNull(jobVertex.getSlotSharingGroup());
    this.coLocationGroup = jobVertex.getCoLocationGroup();

    // TODO initialize an empty IntermediateResult array, sized by the number of
    //  IntermediateDataSets this JobVertex produces
    // create the intermediate results
    this.producedDataSets =
            new IntermediateResult[jobVertex.getNumberOfProducedIntermediateDataSets()];

    // TODO build one IntermediateResult for each IntermediateDataSet
    for (int i = 0; i < jobVertex.getProducedDataSets().size(); i++) {
        final IntermediateDataSet result = jobVertex.getProducedDataSets().get(i);

        // TODO build the IntermediateResult
        this.producedDataSets[i] =
                new IntermediateResult(
                        result.getId(),
                        this,
                        this.parallelismInfo.getParallelism(),
                        result.getResultType());
    }

    // create all task vertices
    // TODO create as many ExecutionVertices as the parallelism
    for (int i = 0; i < this.parallelismInfo.getParallelism(); i++) {
        ExecutionVertex vertex =
                new ExecutionVertex(
                        this,
                        i,
                        producedDataSets,
                        timeout,
                        createTimestamp,
                        maxPriorAttemptsHistoryLength,
                        initialAttemptCounts.getAttemptCount(i));

        this.taskVertices[i] = vertex;
    }

    // sanity check for the double referencing between intermediate result partitions and
    // execution vertices
    for (IntermediateResult ir : this.producedDataSets) {
        if (ir.getNumberOfAssignedPartitions() != this.parallelismInfo.getParallelism()) {
            throw new RuntimeException(
                    "The intermediate result's partitions were not correctly assigned.");
        }
    }

    final List<SerializedValue<OperatorCoordinator.Provider>> coordinatorProviders =
            getJobVertex().getOperatorCoordinators();
    if (coordinatorProviders.isEmpty()) {
        this.operatorCoordinators = Collections.emptyList();
    } else {
        final ArrayList<OperatorCoordinatorHolder> coordinators =
                new ArrayList<>(coordinatorProviders.size());
        try {
            for (final SerializedValue<OperatorCoordinator.Provider> provider :
                    coordinatorProviders) {
                coordinators.add(
                        OperatorCoordinatorHolder.create(
                                provider, this, graph.getUserClassLoader()));
            }
        } catch (Exception | LinkageError e) {
            IOUtils.closeAllQuietly(coordinators);
            throw new JobException(
                    "Cannot instantiate the coordinator for operator " + getName(), e);
        }
        this.operatorCoordinators = Collections.unmodifiableList(coordinators);
    }

    // set up the input splits, if the vertex has any
    try {
        @SuppressWarnings("unchecked")
        InputSplitSource<InputSplit> splitSource =
                (InputSplitSource<InputSplit>) jobVertex.getInputSplitSource();

        if (splitSource != null) {
            Thread currentThread = Thread.currentThread();
            ClassLoader oldContextClassLoader = currentThread.getContextClassLoader();
            currentThread.setContextClassLoader(graph.getUserClassLoader());
            try {
                inputSplits =
                        splitSource.createInputSplits(this.parallelismInfo.getParallelism());

                if (inputSplits != null) {
                    splitAssigner = splitSource.getInputSplitAssigner(inputSplits);
                }
            } finally {
                currentThread.setContextClassLoader(oldContextClassLoader);
            }
        } else {
            inputSplits = null;
        }
    } catch (Throwable t) {
        throw new JobException(
                "Creating the input splits caused an error: " + t.getMessage(), t);
    }
}
```

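This code does the following:

1. Reads the parallelism information.
2. Initializes the IntermediateResult array, sized by the number of IntermediateDataSets the JobVertex produces.
3. Iterates over the JobVertex's IntermediateDataSets and builds one IntermediateResult for each of them.
4. Creates one ExecutionVertex per parallel subtask, then checks that every IntermediateResult has exactly one partition assigned per subtask.
5. Instantiates the OperatorCoordinators, if the JobVertex has any.
6. If the JobVertex has an InputSplitSource, creates the input splits according to the parallelism (with the user class loader temporarily set as the thread's context class loader), together with the split assigner.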
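To make the bookkeeping in steps 2 to 4 concrete, here is a minimal, self-contained Java model. It is a sketch under our own assumptions, not Flink code: `Result`, `Partition`, `Vertex` and `expand` are hypothetical stand-ins for IntermediateResult, IntermediateResultPartition and ExecutionVertex. It shows how a vertex with parallelism p that produces k data sets turns into p subtasks plus k results of p partitions each, and what the "correctly assigned" sanity check is counting.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified model of ExecutionJobVertex expansion; these are not Flink's classes.
public class ExpansionSketch {

    // One partition of a result, produced by exactly one subtask.
    record Partition(String resultId, int subtaskIndex) {}

    // Stand-in for IntermediateResult: one partition slot per parallel subtask.
    static final class Result {
        final String id;
        final Partition[] partitions;
        int assigned = 0;

        Result(String id, int parallelism) {
            this.id = id;
            this.partitions = new Partition[parallelism];
        }

        void setPartition(int index, Partition partition) {
            if (partitions[index] != null) {
                throw new IllegalStateException("Partition " + index + " of " + id + " already set");
            }
            partitions[index] = partition;
            assigned++;
        }
    }

    // Stand-in for ExecutionVertex: a named subtask owning one partition per produced result.
    record Vertex(String name, int subtaskIndex, List<Partition> produced) {}

    static List<Vertex> expand(String vertexName, int parallelism, List<String> dataSetIds) {
        // One Result per produced data set, each with `parallelism` partition slots.
        List<Result> results = new ArrayList<>();
        for (String id : dataSetIds) {
            results.add(new Result(id, parallelism));
        }
        // One Vertex per subtask; subtask i fills slot i of every Result.
        List<Vertex> vertices = new ArrayList<>();
        for (int i = 0; i < parallelism; i++) {
            List<Partition> produced = new ArrayList<>();
            for (Result result : results) {
                Partition partition = new Partition(result.id, i);
                result.setPartition(i, partition);
                produced.add(partition);
            }
            String name = String.format("%s (%d/%d)", vertexName, i + 1, parallelism);
            vertices.add(new Vertex(name, i, produced));
        }
        // Sanity check: every Result must have exactly one partition per subtask.
        for (Result result : results) {
            if (result.assigned != parallelism) {
                throw new IllegalStateException(
                        "Partitions of " + result.id + " were not correctly assigned");
            }
        }
        return vertices;
    }

    public static void main(String[] args) {
        for (Vertex v : expand("Map", 3, List.of("ds-1", "ds-2"))) {
            System.out.println(v.name() + " produces " + v.produced().size() + " partitions");
        }
    }
}
```

With these assumptions, `expand("Map", 3, List.of("ds-1", "ds-2"))` yields three subtasks named `Map (1/3)` to `Map (3/3)`, each producing two partitions, i.e. two results with three partitions each.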

Let's focus on the ExecutionVertex constructor, starting from this loop:

```java
// TODO Create one ExecutionVertex per unit of parallelism
for (int i = 0; i < this.parallelismInfo.getParallelism(); i++) {
    ExecutionVertex vertex =
            new ExecutionVertex(
                    this,
                    i,
                    producedDataSets,
                    timeout,
                    createTimestamp,
                    maxPriorAttemptsHistoryLength,
                    initialAttemptCounts.getAttemptCount(i));

    this.taskVertices[i] = vertex;
}
```

Stepping into the ExecutionVertex constructor:

```java
@VisibleForTesting
public ExecutionVertex(
        ExecutionJobVertex jobVertex,
        int subTaskIndex,
        IntermediateResult[] producedDataSets,
        Time timeout,
        long createTimestamp,
        int maxPriorExecutionHistoryLength,
        int initialAttemptCount) {
    this.jobVertex = jobVertex;
    this.subTaskIndex = subTaskIndex;
    this.executionVertexId = new ExecutionVertexID(jobVertex.getJobVertexId(), subTaskIndex);
    this.taskNameWithSubtask =
            String.format(
                    "%s (%d/%d)",
                    jobVertex.getJobVertex().getName(),
                    subTaskIndex + 1,
                    jobVertex.getParallelism());

    this.resultPartitions = new LinkedHashMap<>(producedDataSets.length, 1);

    for (IntermediateResult result : producedDataSets) {
        IntermediateResultPartition irp =
                new IntermediateResultPartition(
                        result,
                        this,
                        subTaskIndex,
                        getExecutionGraphAccessor().getEdgeManager());
        result.setPartition(subTaskIndex, irp);

        resultPartitions.put(irp.getPartitionId(), irp);
    }

    this.priorExecutions = new EvictingBoundedList<>(maxPriorExecutionHistoryLength);

    // TODO ExecutionVertex and Execution
    // Deployment has two sides:
    // 1. The JobMaster packs all the information the Task needs into one object and sends it to the TaskExecutor
    // 2. On receiving that object, the TaskExecutor wraps it again into a Task object
    // Deployment:
    // Once the JobMaster has obtained a slot on some node, the information needed to deploy the Task is held by an Execution,
    // and the Execution's deploy() method performs the deployment.
    // Inside that method an RPC request sends the necessary information to the TaskExecutor.
    // The TaskExecutor wraps the received information into a Task object,
    // and starting that Task completes the deployment.
    this.currentExecution =
            new Execution(
                    getExecutionGraphAccessor().getFutureExecutor(),
                    this,
                    initialAttemptCount,
                    createTimestamp,
                    timeout);

    getExecutionGraphAccessor().registerExecution(currentExecution);

    this.timeout = timeout;
    this.inputSplits = new ArrayList<>();
}
```

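The ExecutionVertex constructor does two main things:

1. For every IntermediateResult this vertex produces, it creates the IntermediateResultPartition for its own subtask index and registers it with that result via result.setPartition(subTaskIndex, irp). Since every parallel subtask does the same, each IntermediateResult ends up split into one partition per subtask.
2. It creates an Execution, which wraps the information the task needs, and registers it with the ExecutionGraph.

An Execution represents one attempt to run the subtask. Once the JobMaster has obtained a slot on some node for this task, it calls the Execution's deploy() method. Inside that method an RPC request sends the necessary information to the TaskExecutor, which wraps it into a Task object; starting that Task completes the deployment.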
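The deploy hand-off described above can be modeled as a two-sided call. The sketch below is hypothetical: `FakeExecution`, `FakeTaskExecutor`, `DeploymentInfo` and `Task` are illustrative types, and a direct method call returning a `CompletableFuture` stands in for the RPC. In Flink the information actually shipped is a TaskDeploymentDescriptor, which is far richer than the three assumed fields used here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;

// Hypothetical model of the deploy hand-off: JobMaster side -> "RPC" -> TaskExecutor side.
// None of these types are Flink classes.
public class DeploySketch {

    // The information the JobMaster packs up for one subtask attempt (assumed fields).
    record DeploymentInfo(String taskNameWithSubtask, int attemptNumber, String slotId) {}

    // TaskExecutor-side object built from the received deployment info.
    record Task(DeploymentInfo info) {
        String name() {
            return info.taskNameWithSubtask() + " #" + info.attemptNumber();
        }
    }

    // TaskExecutor side: wraps what it receives into a Task and records it as running.
    static final class FakeTaskExecutor {
        final List<Task> running = new ArrayList<>();

        CompletableFuture<String> submitTask(DeploymentInfo info) {
            Task task = new Task(info);
            running.add(task); // a real TaskExecutor would start the task's own thread here
            return CompletableFuture.completedFuture("ACK " + task.name());
        }
    }

    // JobMaster side: one execution attempt of an ExecutionVertex.
    static final class FakeExecution {
        private final String taskNameWithSubtask;
        private final int attemptNumber;

        FakeExecution(String taskNameWithSubtask, int attemptNumber) {
            this.taskNameWithSubtask = taskNameWithSubtask;
            this.attemptNumber = attemptNumber;
        }

        // Called once a slot is available; the direct call stands in for the RPC request.
        CompletableFuture<String> deploy(String slotId, FakeTaskExecutor target) {
            DeploymentInfo info = new DeploymentInfo(taskNameWithSubtask, attemptNumber, slotId);
            return target.submitTask(info);
        }
    }

    public static void main(String[] args) {
        FakeTaskExecutor taskExecutor = new FakeTaskExecutor();
        FakeExecution execution = new FakeExecution("Map (1/3)", 0);
        System.out.println(execution.deploy("slot-0", taskExecutor).join());
        System.out.println("running tasks: " + taskExecutor.running.size());
    }
}
```

Returning a future rather than blocking mirrors the asynchronous nature of an RPC call: the JobMaster side can react to the acknowledgement (or a failure) without waiting on the TaskExecutor.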

2.3 Building ConsumedPartitionGroup and ConsumerVertexGroup

With the initialization of ExecutionVertex covered, let's look at how ConsumedPartitionGroup and ConsumerVertexGroup are built. Back in the executionGraph.attachJobGraph method, step into ejv.connectToPredecessors(this.intermediateResults):

```java
public void connectToPredecessors(
        Map<IntermediateDataSetID, IntermediateResult> intermediateDataSets)
        throws JobException {

    // TODO Get the inputs of the JobVertex
    List<JobEdge> inputs = jobVertex.getInputs();

    if (LOG.isDebugEnabled()) {
        LOG.debug(
                String.format(
                        "Connecting ExecutionJobVertex %s (%s) to %d predecessors.",
                        jobVertex.getID(), jobVertex.getName(), inputs.size()));
    }

    for (int num = 0; num < inputs.size(); num++) {
        JobEdge edge = inputs.get(num);

        if (LOG.isDebugEnabled()) {
            if (edge.getSource() == null) {
                LOG.debug(
                        String.format(
                                "Connecting input %d of vertex %s (%s) to intermediate result referenced via ID %s.",
                                num,
                                jobVertex.getID(),
                                jobVertex.getName(),
                                edge.getSourceId()));
            } else {
                LOG.debug(
                        String.format(
                                "Connecting input %d of vertex %s (%s) to intermediate result referenced via predecessor %s (%s).",
                                num,
                                jobVertex.getID(),
                                jobVertex.getName(),
                                edge.getSource().getProducer().getID(),
                                edge.getSource().getProducer().getName()));
            }
        }

        // fetch the intermediate result via ID. if it does not exist, then it either has not
        // been created, or the order in which this method is called for the job vertices is
        // not a topological order
        IntermediateResult ires = intermediateDataSets.get(edge.getSourceId());
        if (ires == null) {
            throw new JobException(
                    "Cannot connect this job graph to the previous graph. No previous intermediate result found for ID "
                            + edge.getSourceId());
        }

        this.inputs.add(ires);

        // TODO Build the ConsumedPartitionGroup and ConsumerVertexGroup
        EdgeManagerBuildUtil.connectVertexToResult(this, ires, edge.getDistributionPattern());
    }
}
```

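This method does the following:

1. Gets the inputs of the JobVertex, i.e. its list of input JobEdges.
2. Iterates over these input JobEdges.
3. For each edge, looks up the corresponding IntermediateResult in intermediateDataSets by the edge's source ID and adds it to this vertex's inputs. If no result is found, the producer has not been created yet (the vertices are not being processed in topological order) and a JobException is thrown.
4. Builds the ConsumedPartitionGroup and ConsumerVertexGroup from that IntermediateResult via EdgeManagerBuildUtil.connectVertexToResult.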
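The lookup in step 3 only works if every producer is handled before its consumers. The following minimal sketch illustrates that requirement under stated assumptions: vertices are visited in topological order, each resolves its input edges by data set ID, and only afterwards registers the data sets it produces. `Edge`, `VertexStub`, `connectAll`, `chain` and `rejectsReversedOrder` are hypothetical names, not Flink APIs.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch of the ID lookup done while connecting vertices; not Flink's classes.
public class ConnectSketch {

    // An input edge, identified only by the ID of the data set it consumes.
    record Edge(String sourceDataSetId) {}

    record VertexStub(String name, List<Edge> inputs, List<String> producedDataSetIds) {}

    // Returns the resolved input data set IDs per vertex; throws if an input is unknown.
    static Map<String, List<String>> connectAll(List<VertexStub> sortedVertices) {
        Set<String> knownResults = new HashSet<>();
        Map<String, List<String>> inputsByVertex = new HashMap<>();
        for (VertexStub v : sortedVertices) {
            List<String> resolved = new ArrayList<>();
            for (Edge e : v.inputs()) {
                if (!knownResults.contains(e.sourceDataSetId())) {
                    // Same failure mode as the JobException: producer not created yet.
                    throw new IllegalStateException(
                            "No previous intermediate result found for ID " + e.sourceDataSetId());
                }
                resolved.add(e.sourceDataSetId());
            }
            inputsByVertex.put(v.name(), resolved);
            // Register outputs only after connecting, so downstream vertices can find them.
            knownResults.addAll(v.producedDataSetIds());
        }
        return inputsByVertex;
    }

    // source -> map -> sink, already in topological order.
    static List<VertexStub> chain() {
        return List.of(
                new VertexStub("source", List.of(), List.of("ds-1")),
                new VertexStub("map", List.of(new Edge("ds-1")), List.of("ds-2")),
                new VertexStub("sink", List.of(new Edge("ds-2")), List.of()));
    }

    // Visiting the same chain in reverse order must fail at the first consumer.
    static boolean rejectsReversedOrder() {
        List<VertexStub> reversed = new ArrayList<>(chain());
        Collections.reverse(reversed);
        try {
            connectAll(reversed);
            return false;
        } catch (IllegalStateException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(connectAll(chain()));
        System.out.println("reversed order rejected: " + rejectsReversedOrder());
    }
}
```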

Next, step into EdgeManagerBuildUtil.connectVertexToResult:

```java
static void connectVertexToResult(
        ExecutionJobVertex vertex,
        IntermediateResult intermediateResult,
        DistributionPattern distributionPattern) {
    switch (distributionPattern) {
        case POINTWISE:
            connectPointwise(vertex.getTaskVertices(), intermediateResult);
            break;
        case ALL_TO_ALL:
            connectAllToAll(vertex.getTaskVertices(), intermediateResult);
            break;
        default:
            throw new IllegalArgumentException("Unrecognized distribution pattern.");
    }
}
```
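POINTWISE is not walked through in this article. As a rough illustration of what "pointwise" can mean, the sketch below connects S upstream partitions to T downstream subtasks by splitting index ranges with integer arithmetic, so each partition reaches only a few subtasks instead of all of them. The range formula and the names `PointwiseSketch` and `assign` are assumptions chosen for illustration; the article does not show Flink's own connectPointwise logic.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical range-based pointwise mapping; an assumption, not code taken from Flink.
public class PointwiseSketch {

    // Returns, for each downstream subtask, the indices of the upstream partitions it reads.
    static List<List<Integer>> assign(int sourceCount, int targetCount) {
        List<List<Integer>> consumed = new ArrayList<>();
        for (int t = 0; t < targetCount; t++) {
            consumed.add(new ArrayList<>());
        }
        if (sourceCount >= targetCount) {
            // Subtask t reads the contiguous range [t*S/T, (t+1)*S/T); each partition is read once.
            for (int t = 0; t < targetCount; t++) {
                int start = t * sourceCount / targetCount;
                int end = (t + 1) * sourceCount / targetCount;
                for (int s = start; s < end; s++) {
                    consumed.get(t).add(s);
                }
            }
        } else {
            // Partition s feeds subtasks [ceil(s*T/S), ceil((s+1)*T/S)); each subtask reads one partition.
            for (int s = 0; s < sourceCount; s++) {
                int start = (s * targetCount + sourceCount - 1) / sourceCount;
                int end = ((s + 1) * targetCount + sourceCount - 1) / sourceCount;
                for (int t = start; t < end; t++) {
                    consumed.get(t).add(s);
                }
            }
        }
        return consumed;
    }

    public static void main(String[] args) {
        System.out.println(assign(4, 2)); // [[0, 1], [2, 3]]
        System.out.println(assign(2, 4)); // [[0], [0], [1], [1]]
    }
}
```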

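The method dispatches on the edge's DistributionPattern, of which there are two: POINTWISE and ALL_TO_ALL (any other value throws an IllegalArgumentException). We take ALL_TO_ALL as the example and step into connectAllToAll: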

```java
private static void connectAllToAll(
        ExecutionVertex[] taskVertices, IntermediateResult intermediateResult) {

    // TODO Iterate over the IntermediateResultPartitions to build the ConsumedPartitionGroup
    ConsumedPartitionGroup consumedPartitions =
            ConsumedPartitionGroup.fromMultiplePartitions(
                    Arrays.stream(intermediateResult.getPartitions())
                            .map(IntermediateResultPartition::getPartitionId)
                            .collect(Collectors.toList()));

    // TODO Attach the ConsumedPartitionGroup to every downstream ExecutionVertex
    for (ExecutionVertex ev : taskVertices) {
        ev.addConsumedPartitionGroup(consumedPartitions);
    }

    // TODO Build a ConsumerVertexGroup from the downstream ExecutionVertices
    ConsumerVertexGroup vertices =
            ConsumerVertexGroup.fromMultipleVertices(
                    Arrays.stream(taskVertices)
                            .map(ExecutionVertex::getID)
                            .collect(Collectors.toList()));

    // TODO Connect every IntermediateResultPartition to the downstream ConsumerVertexGroup
    for (IntermediateResultPartition partition : intermediateResult.getPartitions()) {
        partition.addConsumers(vertices);
    }
}
```

This method:

1. Collects the IDs of all IntermediateResultPartitions of the IntermediateResult into a single ConsumedPartitionGroup.
2. Adds that ConsumedPartitionGroup to every downstream ExecutionVertex that consumes this IntermediateResult.
3. Collects the IDs of those downstream ExecutionVertices into a single ConsumerVertexGroup.
4. Adds that ConsumerVertexGroup as the consumers of every IntermediateResultPartition of the IntermediateResult.
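Because a single group object is shared by all downstream vertices (and another by all upstream partitions), the stored connection data grows with n + m instead of n × m. The sketch below counts both under simplifying assumptions: `PartitionGroup`, `VertexGroup`, `Vertex`, `Partition` and the counting helpers are hypothetical types, and "stored references" means one group reference per vertex or partition plus the IDs held once inside each distinct group. For a 10K × 10K all-to-all edge it reports 100,000,000 pairwise edges versus 40,000 stored references, consistent with the O(n²) versus O(n) argument earlier in the article.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.IdentityHashMap;
import java.util.List;
import java.util.Set;

// Hypothetical model of the shared-group idea in connectAllToAll; not Flink's classes.
public class AllToAllSketch {

    record PartitionGroup(List<String> partitionIds) {}

    record VertexGroup(List<String> vertexIds) {}

    // Downstream subtask: references the partition groups it consumes.
    static final class Vertex {
        final String id;
        final List<PartitionGroup> consumed = new ArrayList<>();
        Vertex(String id) { this.id = id; }
    }

    // Upstream partition: references the vertex groups that consume it.
    static final class Partition {
        final String id;
        final List<VertexGroup> consumers = new ArrayList<>();
        Partition(String id) { this.id = id; }
    }

    static void connectAllToAll(List<Partition> partitions, List<Vertex> vertices) {
        // One group holding every partition ID, shared by reference by all vertices.
        PartitionGroup partitionGroup =
                new PartitionGroup(partitions.stream().map(p -> p.id).toList());
        for (Vertex v : vertices) {
            v.consumed.add(partitionGroup);
        }
        // One group holding every vertex ID, shared by reference by all partitions.
        VertexGroup vertexGroup = new VertexGroup(vertices.stream().map(v -> v.id).toList());
        for (Partition p : partitions) {
            p.consumers.add(vertexGroup);
        }
    }

    // Per-object group references plus the IDs stored once in each distinct group object.
    static long storedReferences(List<Partition> partitions, List<Vertex> vertices) {
        Set<Object> groups = Collections.newSetFromMap(new IdentityHashMap<>());
        long refs = 0;
        for (Vertex v : vertices) {
            refs += v.consumed.size();
            groups.addAll(v.consumed);
        }
        for (Partition p : partitions) {
            refs += p.consumers.size();
            groups.addAll(p.consumers);
        }
        for (Object g : groups) {
            refs += (g instanceof PartitionGroup pg)
                    ? pg.partitionIds().size()
                    : ((VertexGroup) g).vertexIds().size();
        }
        return refs;
    }

    static long buildAndCount(int upstream, int downstream) {
        List<Partition> partitions = new ArrayList<>();
        for (int i = 0; i < upstream; i++) partitions.add(new Partition("p" + i));
        List<Vertex> vertices = new ArrayList<>();
        for (int i = 0; i < downstream; i++) vertices.add(new Vertex("v" + i));
        connectAllToAll(partitions, vertices);
        return storedReferences(partitions, vertices);
    }

    // A 1.12-style graph stores one ExecutionEdge per (partition, vertex) pair.
    static long edgeObjects(int upstream, int downstream) {
        return (long) upstream * downstream;
    }

    public static void main(String[] args) {
        System.out.println("ExecutionEdges: " + edgeObjects(10_000, 10_000));
        System.out.println("grouped references: " + buildAndCount(10_000, 10_000));
    }
}
```

Counting groups by identity rather than by value matters here: the point of the design is that one object is shared, not that many equal copies exist.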

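Summary

At this point the main work is done and the ExecutionGraph has been built.

This stage can be summarized as follows:

1. Convert each JobVertex of the JobGraph into an ExecutionJobVertex and, according to its parallelism, split it into ExecutionVertex instances.
2. Convert each IntermediateDataSet of the JobGraph into an IntermediateResult and, according to the parallelism, split it into IntermediateResultPartitions.
3. Based on the upstream/downstream dependencies, connect ExecutionVertex and IntermediateResultPartition by building ConsumedPartitionGroup and ConsumerVertexGroup.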
