1. HDFS Java API

package com.itheima.demo01_hdfs;

import org.apache.commons.io.IOUtils;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FSDataOutputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.LocatedFileStatus;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.fs.RemoteIterator;

import org.junit.Test;

import java.io.File;

import java.net.URI;

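/*

Example: working with the HDFS file system from Java.

Note:

To run this on Windows, you first need to set up a Hadoop runtime environment on the machine:

1. Copy hadoop-2.7.5 to a path that contains no Chinese characters, spaces, or other special characters.

2. Configure the Path environment variable.

3. Copy hadoop-2.7.5/bin/hadoop.dll to c:/windows/system32.

4. Restart Windows.

*/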

public class Demo01 {

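/*

Goal: show how to obtain a FileSystem object.

Working with HDFS from Java comes down to connecting to the HDFS file system and then operating on it. The ways to obtain the connection are shown below.

*/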

@Test

public void show1() throws Exception {

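//Way 1: get the FileSystem object with the default configuration, which yields the local file system (here, Windows).

//1. Create the configuration object.

Configuration conf = new Configuration();

//2. Get the file system object that corresponds to this configuration.

FileSystem fs1 = FileSystem.get(conf);

//3. Print the result.

System.out.println("fs1: " + fs1);

System.out.println("---------------------------");

//Way 2: get the FileSystem object with an explicit configuration that points at HDFS. The user name defaults to the current OS user.

//1. Create the configuration object.

Configuration conf2 = new Configuration();

//Set the configuration so that it connects to HDFS.

conf2.set("fs.defaultFS", "hdfs://node1:8020");

//2. Get the file system object that corresponds to this configuration.

FileSystem fs2 = FileSystem.get(conf2);

//3. Print the result.

System.out.println("fs2: " + fs2);

System.out.println("---------------------------");

//Way 3: get the FileSystem object for HDFS with an explicit user name (known as user impersonation).

//With its default simple authentication, HDFS is trusting: it takes whatever user name you give it at face value. Its permission model keeps honest users from making mistakes; it does not stop malicious users.

//1. Create the configuration object and the HDFS URI.

Configuration conf3 = new Configuration();

URI uri = new URI("hdfs://node1:8020");

//2. Get the file system object for this URI, configuration, and user.

FileSystem fs3 = FileSystem.get(uri, conf3, "root");

//3. Print the result.

System.out.println("fs3: " + fs3);

System.out.println("---------------------------");

//Way 4: the form used in practice, i.e. way 3 condensed into a single statement.

FileSystem fs4 = FileSystem.get(new URI("hdfs://node1:8020"), new Configuration(), "root");

System.out.println("fs4: " + fs4);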

}

//List all files in HDFS from Java

@Test

public void show2() throws Exception {

//1. Get the HDFS file system object.

FileSystem fs = FileSystem.get(new URI("hdfs://node1:8020"), new Configuration(), "root");

//2. Get the status objects of all files under the given directory. There may be many, so they come back as an iterator.

//Argument 1: the path. Argument 2: whether to recurse. Note that listFiles returns files only, not directories.

RemoteIterator<LocatedFileStatus> listFiles = fs.listFiles(new Path("/"), true);

//3. Walk the iterator to get each file status object.

while (listFiles.hasNext()) {

//fileStatus: the status object of one file.

LocatedFileStatus fileStatus = listFiles.next();

//4. Print the path and the name of the file.

System.out.println("path: " + fileStatus.getPath());

System.out.println("name: " + fileStatus.getPath().getName());

System.out.println("------------------------");

}

//5. Release resources.

fs.close();

}

//Create a directory in HDFS from Java. HDFS command: mkdir

@Test

public void show3() throws Exception {

//1. Get the HDFS file system object.

FileSystem fs = FileSystem.newInstance(new URI("hdfs://node1:8020"), new Configuration(), "root");

//2. Create the directory.

boolean flag = fs.mkdirs(new Path("/hangge"));

System.out.println(flag ? "created" : "creation failed");

//3. Release resources.

fs.close();

}

//Download a file from HDFS in Java. HDFS commands: get, getmerge, copyToLocal, moveToLocal

@Test

public void show4() throws Exception {

//1. Get the HDFS file system object.

FileSystem fs = FileSystem.newInstance(new URI("hdfs://node1:8020"), new Configuration(), "root");

//2. Download the file.

//For local paths, prefixing them with file:/// is recommended.

//Copy-style download: the file stays in HDFS.

//fs.copyToLocalFile(new Path("/1.txt"), new Path("D:\\abc\\ceshi"));

//Move-style download: the file is removed from HDFS once it has been copied.

fs.moveToLocalFile(new Path("/1.txt"), new Path("D:\\abc\\ceshi"));

//3. Release resources.

fs.close();

}

//Upload a file to HDFS from Java. HDFS commands: put, copyFromLocal, moveFromLocal

@Test

public void show5() throws Exception {

//1. Get the HDFS file system object.

FileSystem fs = FileSystem.newInstance(new URI("hdfs://node1:8020"), new Configuration(), "root");

//2. Upload the file.

//For local paths, prefixing them with file:/// is recommended.

//Copy-style upload: the local file stays in place.

//fs.copyFromLocalFile(new Path("file:///D:\\abc\\ceshi\\绕口令.txt"), new Path("/hangge"));

//Move-style upload: the local file is removed once it has been uploaded.

fs.moveFromLocalFile(new Path("file:///D:\\abc\\ceshi\\绕口令.txt"), new Path("/hangge"));

//3. Release resources.

fs.close();

}

//Merge upload to HDFS from Java

@Test

public void show6() throws Exception {

//1. Get the local file system object, i.e. Windows.

FileSystem localFS = FileSystem.get(new Configuration());

//2. Get the HDFS file system object.

FileSystem hdfsFS = FileSystem.get(new URI("hdfs://node1:8020"), new Configuration(), "root");

//3. Open an output stream to the HDFS file that the data will be written to.

FSDataOutputStream fos = hdfsFS.create(new Path("/hangge/abc.txt"));

//4. Use the local file system object to list the files in the given directory, as an iterator of file status objects.

RemoteIterator<LocatedFileStatus> listFiles = localFS.listFiles(new Path("D:\\abc\\a123"), false);

//5. Walk the iterator to get each file status object.

while (listFiles.hasNext()) {

//fileStatus: the status object of one file, e.g. core-site.xml, hdfs-site.xml, yarn-site.xml

LocatedFileStatus fileStatus = listFiles.next();

//6. Open an input stream on that file through the local file system object so that its content can be read.

FSDataInputStream fis = localFS.open(fileStatus.getPath());

//7. Copy the file content into the HDFS output stream.

IOUtils.copy(fis, fos);

//8. Close this input stream.

IOUtils.closeQuietly(fis);

}

//9. Close the output stream so that everything written is flushed to HDFS, then release the file system objects.

fos.close();

hdfsFS.close();

localFS.close();

}

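//Permissions when working with HDFS from Java

@Test

public void show7() throws Exception {

//1. Get the HDFS file system object. The commented-out line connects as a different user, zhangsan, so the same operation can be tried under that user's permissions.

//FileSystem fs = FileSystem.newInstance(new URI("hdfs://node1:8020"), new Configuration(), "zhangsan");

FileSystem fs = FileSystem.newInstance(new URI("hdfs://node1:8020"), new Configuration(), "root");

//2. Download the file.

//For local paths, prefixing them with file:/// is recommended.

//Copy-style download: the file stays in HDFS.

fs.copyToLocalFile(new Path("/hangge/abc.txt"), new Path("D:\\abc\\ceshi"));

//Move-style download: the file is removed from HDFS once it has been copied.

//fs.moveToLocalFile(new Path("/1.txt"), new Path("D:\\abc\\ceshi"));

//3. Release resources.

fs.close();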

}

//Delete a file in HDFS from Java

@Test

public void show8() throws Exception {

//1. Get the HDFS file system object.

//FileSystem fs = FileSystem.newInstance(new URI("hdfs://node1:8020"), new Configuration(), "zhangsan");

FileSystem fs = FileSystem.newInstance(new URI("hdfs://node1:8020"), new Configuration(), "root");

//2. Delete the file. The second argument is the recursive flag; false is enough for a single file.

fs.delete(new Path("/hangge/绕口令.txt"), false);

//3. Release resources.

fs.close();

}

}
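The merge upload in show6 boils down to copying several input streams, one after another, into a single output stream. Here is a minimal sketch of that pattern using only the JDK. In-memory streams stand in for the local files and for the HDFS output stream, and the names MergeSketch, mergeInto, and mergeStrings are made up for this illustration; they are not part of Hadoop.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: in-memory streams replace the local files and the HDFS stream.
class MergeSketch {

    // Copies every source into the single target, in order, closing each source when done.
    static void mergeInto(List<InputStream> sources, OutputStream target) throws IOException {
        byte[] buffer = new byte[4096];
        for (InputStream source : sources) {
            try (InputStream in = source) {
                int read;
                while ((read = in.read(buffer)) != -1) {
                    target.write(buffer, 0, read);
                }
            }
        }
    }

    // Convenience wrapper so that the merged result can be inspected as text.
    static String mergeStrings(List<String> parts) {
        List<InputStream> sources = new ArrayList<>();
        for (String part : parts) {
            sources.add(new ByteArrayInputStream(part.getBytes(StandardCharsets.UTF_8)));
        }
        try (ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            mergeInto(sources, out);
            return new String(out.toByteArray(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Three small "files" end up as one merged text.
        System.out.print(mergeStrings(Arrays.asList("core\n", "hdfs\n", "yarn\n")));
    }
}
```

The target stream is opened once and closed once, outside the loop, while each source is closed as soon as it has been copied. show6 follows the same shape with fos and fis.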

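2. Writing MapReduce Programs in Java

2.1 The wordcount program

A minimal MR program consists of three parts: the Map class, the Reduce class, and the main entry point. Below is a minimal wordcount program.

First, the MapTask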

package cn.itcast.word_count;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.io.Text;

import java.io.IOException;

public class WordCountMapTask extends Mapper<LongWritable, Text, Text, IntWritable> {

@Override

protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, Text, IntWritable>.Context context) throws IOException, InterruptedException {

System.out.println("line offset: " + key.get());

String line = value.toString();

System.out.println(line);

if (line!=null && !"".equals(line)){

String[] arr = line.split(" ");

for (String word : arr){

context.write(new Text(word), new IntWritable(1));

}

}

}

}

Next, the ReduceTask

package cn.itcast.word_count;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

//Sums the 1s that WordCountMapTask emits for each word. The class name WordCountReduceTask is assumed by analogy with WordCountMapTask.

public class WordCountReduceTask extends Reducer<Text, IntWritable, Text, IntWritable> {

@Override

protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {

int count = 0;

for (IntWritable value : values) {

count += value.get();

}

context.write(key, new IntWritable(count));

}

}

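Finally, the entry point of the program

package cn.itcast.word_count;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

//Driver class. The name WordCountMain03 and the two path arguments come from the hadoop jar command used to submit the job. The job name and the reducer name WordCountReduceTask are assumed.

public class WordCountMain03 {

public static void main(String[] args) throws Exception {

Job job = Job.getInstance(new Configuration(), "wordCountMR");

job.setJarByClass(WordCountMain03.class);

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.addInputPath(job, new Path(args[0]));

job.setMapperClass(WordCountMapTask.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

job.setReducerClass(WordCountReduceTask.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job, new Path(args[1]));

boolean flag = job.waitForCompletion(true);

System.exit(flag ? 0 : 1);

}

}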
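To see what the map, shuffle, and reduce steps of wordcount do to the data without a cluster, the flow can be imitated in plain Java. This is only a sketch under simplifying assumptions: everything runs in one process, a TreeMap stands in for the framework's sorting and grouping, and the names WordCountSketch, map, shuffle, reduce, and wordCount are made up for the illustration.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory imitation of the wordcount data flow; no Hadoop classes involved.
class WordCountSketch {

    // Map step: one line in, one (word, 1) pair out per space-separated word.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        if (line != null && !line.isEmpty()) {
            for (String word : line.split(" ")) {
                pairs.add(new AbstractMap.SimpleEntry<>(word, 1));
            }
        }
        return pairs;
    }

    // Shuffle step: group the values by key; the TreeMap keeps the keys sorted.
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // Reduce step: add up all values that belong to one key.
    static int reduce(List<Integer> values) {
        int count = 0;
        for (int value : values) {
            count += value;
        }
        return count;
    }

    // Runs the three steps in sequence over all input lines.
    static Map<String, Integer> wordCount(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : shuffle(pairs).entrySet()) {
            result.put(group.getKey(), reduce(group.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        // prints {hadoop=1, hello=2, world=1}
        System.out.println(wordCount(Arrays.asList("hello world", "hello hadoop")));
    }
}
```

In the real job the map and reduce steps run in separate tasks, possibly on different machines, and the framework performs the shuffle between them.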

Package the program with Maven

mvn package

Submit the job

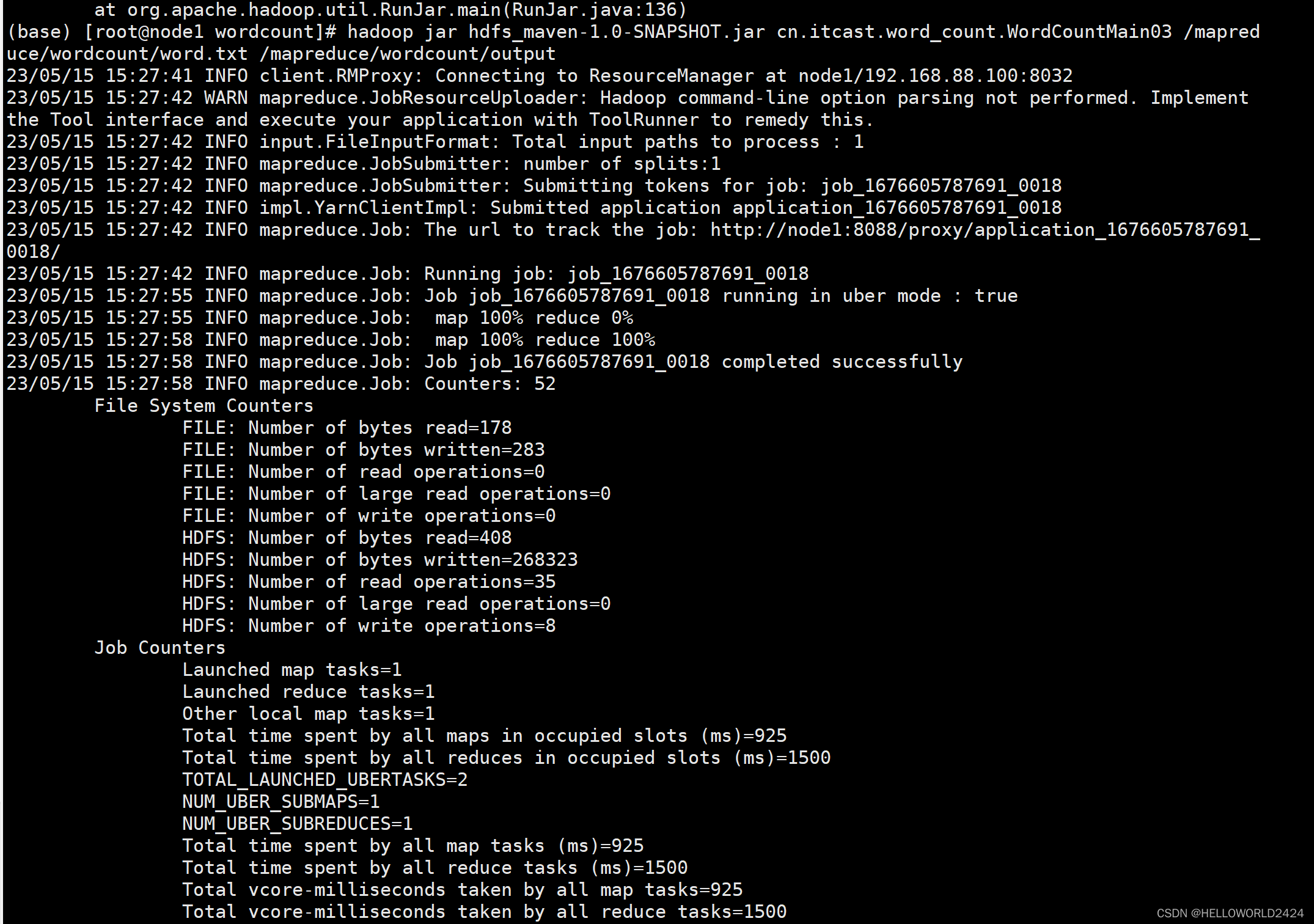

hadoop jar hdfs_maven-1.0-SNAPSHOT.jar cn.itcast.word_count.WordCountMain03 /mapreduce/wordcount/word.txt /mapreduce/wordcount/output

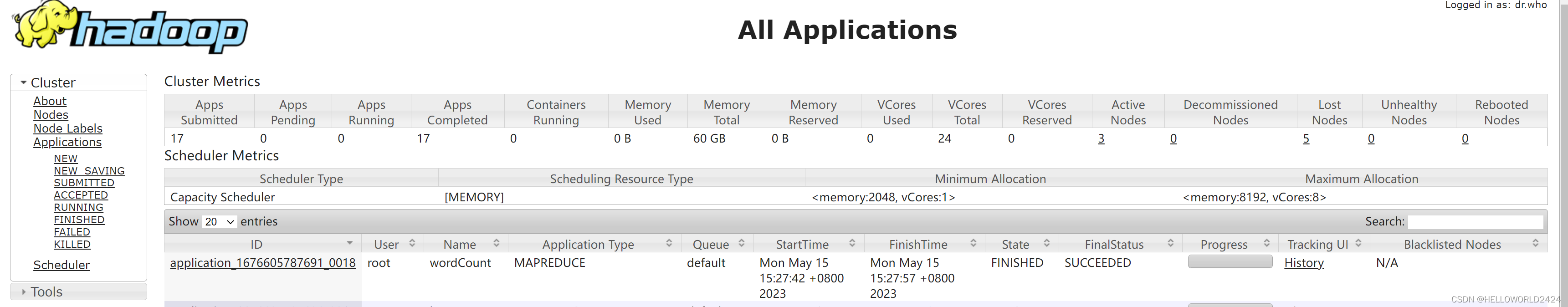

Monitoring the job in the Hadoop web UI

2.2 Partitioning

Troubleshooting: if the job fails because a class cannot be found, add the following line to the main method.

job.setJarByClass(LotteryMain.class);

mapper

package org.example.demo01_lottery;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/*

Custom Map phase, responsible for splitting.

k1: line offset, LongWritable

v1: the whole line, Text

k2: winning number, IntWritable

v2: the whole line, Text

*/

public class LotteryMapper extends Mapper<LongWritable, Text, IntWritable, Text> {

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

System.out.println("line offset: " + key.get());

//1. Get v1, i.e. the whole line.

String line = value.toString();

//2. Skip empty lines.

if (line != null && !"".equals(line)) {

//3. Get the winning number from the sixth tab-separated field.

int num = Integer.parseInt(line.split("\t")[5]);

System.out.println(num);

//4. Write out k2 and v2.

context.write(new IntWritable(num), value);

}

}

}

reducer

package org.example.demo01_lottery;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/*

Custom Reduce phase, responsible for merging.

k2: winning number, IntWritable

v2: the whole line, Text

k3: the whole line, Text

v3: empty

*/

public class LotteryReducer extends Reducer<IntWritable, Text, Text, NullWritable> {

/**

* Custom Reduce phase, responsible for merging.

*

* @param key k2, i.e. the winning number

* @param values the collection of v2 values, i.e. the whole lines

* @param context used to write out k3 and v3

* @throws IOException

* @throws InterruptedException

*/

@Override

protected void reduce(IntWritable key, Iterable<Text> values, Context context) throws IOException, InterruptedException {

//values holds the whole lines, which have already been partitioned, sorted, combined, and grouped, so they can be written out as they are.

for (Text value : values) {

context.write(value, NullWritable.get());

}

}

}

partitioner

package org.example.demo01_lottery;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Partitioner;

//Custom partitioner: partitions by winning number (k2). Numbers below 15 go to one partition, 15 and above to the other.

public class MyPartitioner extends Partitioner<IntWritable, Text> {

/**

* The custom partitioning rule.

*

* @param intWritable k2, i.e. the winning number

* @param text v2, i.e. the whole line

* @param numReduceTask the number of reduce tasks

* @return the partition number that the record is tagged with

*/

@Override

public int getPartition(IntWritable intWritable, Text text, int numReduceTask) {

//1. Get the winning number.

int num = intWritable.get();

//2. Apply the custom partitioning rule.

if (num < 15)

return 0;

else

return 1;

}

}
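The effect of the partitioning rule can be checked without running a job. The sketch below applies the same threshold as MyPartitioner to a few sample numbers and collects them into one bucket per partition, much as each reduce task ends up with its own output file. The class PartitionSketch, its methods, and the sample numbers are invented for the illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-alone check of the partitioning rule; no Hadoop classes involved.
class PartitionSketch {

    // Same rule as MyPartitioner.getPartition: below 15 -> partition 0, otherwise partition 1.
    static int partitionFor(int winningNumber) {
        return winningNumber < 15 ? 0 : 1;
    }

    // Routes every number into the bucket of its partition, keeping the input order.
    static List<List<Integer>> route(List<Integer> numbers, int numPartitions) {
        List<List<Integer>> buckets = new ArrayList<>();
        for (int i = 0; i < numPartitions; i++) {
            buckets.add(new ArrayList<>());
        }
        for (int number : numbers) {
            buckets.get(partitionFor(number)).add(number);
        }
        return buckets;
    }

    public static void main(String[] args) {
        // prints [[3, 14], [15, 27]]
        System.out.println(route(Arrays.asList(3, 15, 14, 27), 2));
    }
}
```

The sketch needs at least two buckets because the rule can return 1. That is the counterpart of calling job.setNumReduceTasks(2) in the driver.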

main

package org.example.demo01_lottery;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

//Driver class.

public class LotteryMain {

public static void main(String[] args) throws Exception {

//1. Create the Job.

Job job = Job.getInstance(new Configuration(), "lotteryMR");

job.setJarByClass(LotteryMain.class);

//2. Configure the eight core steps of the MR program.

//2.1 Input component: read the source data.

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.addInputPath(job, new Path(args[0]));

//2.2 Map task: specify the types of k2 and v2.

job.setMapperClass(LotteryMapper.class);

job.setMapOutputKeyClass(IntWritable.class);

job.setMapOutputValueClass(Text.class);

//2.3 Partitioning: use the custom partitioner.

job.setPartitionerClass(MyPartitioner.class);

//2.4 Sorting: use the default.

//2.5 Combining: use the default.

//2.6 Grouping: use the default.

//2.7 Reduce task: specify the types of k3 and v3.

job.setReducerClass(LotteryReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(NullWritable.class);

//2.8 Output component: write the data to the destination.

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job, new Path(args[1]));

//3. Set the number of reduce tasks, one per partition.

job.setNumReduceTasks(2);

//4. Submit the job and wait for the result.

boolean flag = job.waitForCompletion(true);

//5. Exit the program.

System.exit(flag ? 0 : 1);

}

}

Run command

hadoop jar MapReduce-1.0-SNAPSHOT.jar org.example.demo01_lottery.LotteryMain /mapreduce/lottery/partition.csv /mapreduce/lottery/output

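2.3 A sorting program

Data (in the input file the two columns are separated by a tab, which is what SortMapper splits on)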

a 1

a 9

b 3

a 7

b 8

a 19

b 10

a 5

a 9

b 10

Define the SortBean class

package org.example.demo02_sort;

import org.apache.hadoop.io.WritableComparable;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

public class SortBean implements WritableComparable<SortBean> {

private String first;

private int second;

public SortBean(){}

public SortBean(String first, int second){

this.first = first;

this.second = second;

}

public String getFirst() {

return first;

}

public void setFirst(String first){

this.first = first;

}

public int getSecond() {

return second;

}

public void setSecond(int second) {

this.second = second;

}

@Override

public String toString() {

return first + "\t" + second;

}

@Override

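public int compareTo(SortBean sb) {

//Order by first ascending; when first is equal, order by second descending.

int num = this.first.compareTo(sb.first);

//Integer.compare avoids the overflow that plain subtraction can produce.

return num == 0 ? Integer.compare(sb.second, this.second) : num;

}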

@Override

public void write(DataOutput dataOutput) throws IOException {

dataOutput.writeUTF(first);

dataOutput.writeInt(second);

}

@Override

public void readFields(DataInput dataInput) throws IOException {

first = dataInput.readUTF();

second = dataInput.readInt();

}

}
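The ordering that SortBean.compareTo defines (first ascending, then second descending) can be tried out on a few of the sample rows in plain Java. The sketch uses a small stand-in class rather than the Hadoop type; SortOrderSketch, Pair, and sorted are names made up for the illustration, and the input lines are assumed to be tab-separated as SortMapper expects.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical stand-alone check of the sort order; no Hadoop classes involved.
class SortOrderSketch {

    // Plain-Java stand-in for SortBean: same two fields and the same ordering rule.
    static final class Pair implements Comparable<Pair> {
        final String first;
        final int second;

        Pair(String first, int second) {
            this.first = first;
            this.second = second;
        }

        @Override
        public int compareTo(Pair other) {
            int byFirst = first.compareTo(other.first);
            // Equal first fields: the larger second field comes earlier.
            return byFirst != 0 ? byFirst : Integer.compare(other.second, second);
        }

        @Override
        public String toString() {
            return first + "\t" + second;
        }
    }

    // Parses tab-separated lines, sorts them with Pair.compareTo, and formats them again.
    static List<String> sorted(List<String> lines) {
        List<Pair> pairs = new ArrayList<>();
        for (String line : lines) {
            String[] arr = line.split("\t");
            pairs.add(new Pair(arr[0], Integer.parseInt(arr[1])));
        }
        Collections.sort(pairs);
        List<String> result = new ArrayList<>();
        for (Pair pair : pairs) {
            result.add(pair.toString());
        }
        return result;
    }

    public static void main(String[] args) {
        // Rows come out as: a 9, a 7, a 1, b 8, b 3 (tab-separated).
        for (String row : sorted(Arrays.asList("a\t1", "a\t9", "b\t3", "a\t7", "b\t8"))) {
            System.out.println(row);
        }
    }
}
```

In the MapReduce job no explicit sort call is needed: because SortBean is the map output key, the framework sorts the keys with compareTo during the shuffle.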

Define the mapper class

package org.example.demo02_sort;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class SortMapper extends Mapper<LongWritable, Text, SortBean, NullWritable> {

@Override

protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, SortBean, NullWritable>.Context context) throws IOException, InterruptedException {

String line = value.toString();

if (line!=null && !"".equals(line)){

String[] arr = line.split("\t");

SortBean sb = new SortBean(arr[0], Integer.parseInt(arr[1]));

context.write(sb, NullWritable.get());

}

}

}

Define the reducer class

package org.example.demo02_sort;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class SortReducer extends Reducer<SortBean, NullWritable, Text, NullWritable> {

@Override

protected void reduce(SortBean key, Iterable<NullWritable> values, Reducer<SortBean, NullWritable, Text, NullWritable>.Context context) throws IOException, InterruptedException {

for (NullWritable value : values) {

context.write(new Text(key.toString()), NullWritable.get());

}

}

}

The main class

package org.example.demo02_sort;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public class SortMain {

public static void main(String[] args) throws Exception{

Job job = Job.getInstance(new Configuration(), "sortMR");

job.setJarByClass(SortMain.class);

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.addInputPath(job, new Path("file:///D:\\sort\\sort.txt"));

job.setMapperClass(SortMapper.class);

job.setMapOutputKeyClass(SortBean.class);

job.setMapOutputValueClass(NullWritable.class);

job.setReducerClass(SortReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(NullWritable.class);

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job, new Path("file:///D:\\sort\\output"));

//Submit the job and wait for the result.

boolean flag = job.waitForCompletion(true);

System.exit(flag? 0:1);

}

}

2.4 Implementing a combiner

Dataset

<<java入门宝典>>

<<Python入门宝典>>

<<spark入门宝典>>

<<UI入门宝典>>

<<乾坤大挪移>>

<<hive入门宝典>>

<<天龙八部>>

<<凌波微步>>

<<葵花点穴手>>

<<史记>>

<<PHP入门宝典>>

<<铁砂掌>>

<<葵花宝典>>

<<hadoop入门宝典>>

<<论清王朝的腐败>>

mapper

package org.example.demo03_combiner;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class BookMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

@Override

protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, Text, IntWritable>.Context context) throws IOException, InterruptedException {

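String line = value.toString();

if (line!=null && !"".equals(line)){

//The category keys stay in Chinese because they are matched against the Chinese book titles in the dataset.

if (line.contains("入门")) {

//Titles containing "入门" (introduction) are counted as computer books.

context.write(new Text("计算机类图书"), new IntWritable(1));

} else if (line.contains("史记") || line.contains("论清王朝的腐败")) {

//These two titles are counted as history books.

context.write(new Text("史书"), new IntWritable(1));

} else {

//Everything else is counted as martial arts manuals.

context.write(new Text("武林秘籍"), new IntWritable(1));

}

}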

}

}

reducer

package org.example.demo03_combiner;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

//Adds up the counts of each book category. The class name BookReducer and the output types come from BookMain; the body is the usual summing reducer.

public class BookReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

@Override

protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {

int count = 0;

for (IntWritable value : values) {

count += value.get();

}

context.write(key, new IntWritable(count));

}

}

combiner

package org.example.demo03_combiner;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.io.Text;

import java.io.IOException;

public class MyCombiner extends Reducer<Text, IntWritable, Text, IntWritable> {

@Override

protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {

int count = 0;

for (IntWritable value:values){

count += value.get();

}

context.write(key, new IntWritable(count));

}

}
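What a combiner buys can be seen by counting records: it sums on the map side, so each map task sends one record per key to the reducers instead of one record per input line. The sketch below imitates this in plain Java with two pretend map tasks. The class CombinerSketch, its methods, and the English category names are invented for the illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory imitation of combine-then-reduce; no Hadoop classes involved.
class CombinerSketch {

    // Combiner step: collapses the (key, 1) pairs of one map task into one partial count per key.
    static Map<String, Integer> combine(List<String> keysFromOneMapTask) {
        Map<String, Integer> partial = new TreeMap<>();
        for (String key : keysFromOneMapTask) {
            partial.merge(key, 1, Integer::sum);
        }
        return partial;
    }

    // Reduce step: adds up the partial counts that arrive from all map tasks.
    static Map<String, Integer> reduce(List<Map<String, Integer>> partials) {
        Map<String, Integer> total = new TreeMap<>();
        for (Map<String, Integer> partial : partials) {
            for (Map.Entry<String, Integer> entry : partial.entrySet()) {
                total.merge(entry.getKey(), entry.getValue(), Integer::sum);
            }
        }
        return total;
    }

    public static void main(String[] args) {
        Map<String, Integer> task1 = combine(Arrays.asList("computer", "computer", "history"));
        Map<String, Integer> task2 = combine(Arrays.asList("computer", "martial-arts"));
        // Task 1 now ships 2 records instead of 3; prints {computer=2, history=1}
        System.out.println(task1);
        // prints {computer=3, history=1, martial-arts=1}
        System.out.println(reduce(Arrays.asList(task1, task2)));
    }
}
```

This only works because addition gives the same total whether it is applied once or in stages. An operation without that property, such as taking an average directly, cannot reuse the reducer logic as a combiner.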

main

package org.example.demo03_combiner;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public class BookMain {

public static void main(String[] args) throws Exception{

Job job = Job.getInstance(new Configuration(), "bookMR");

job.setJarByClass(BookMain.class);

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.addInputPath(job, new Path("file:///D:\\book\\combiner.txt"));

job.setMapperClass(BookMapper.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

job.setCombinerClass(MyCombiner.class);

job.setReducerClass(BookReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job, new Path("file:///D:\\book\\output"));

boolean flag = job.waitForCompletion(true);

//Exit the program with the job status.

System.exit(flag ? 0 : 1);

}

}

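2.5 Common-friends example

Find the common friends of every pair of users.

Data (each line has the form user:friend,friend,...)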

A:B,C,D,F,E,O

B:A,C,E,K

C:F,A,D,I

D:A,E,F,L

E:B,C,D,M,L

F:A,B,C,D,E,O,M

G:A,C,D,E,F

H:A,C,D,E,O

I:A,O

J:B,O

K:A,C,D

L:D,E,F

M:E,F,G

O:A,H,I,J

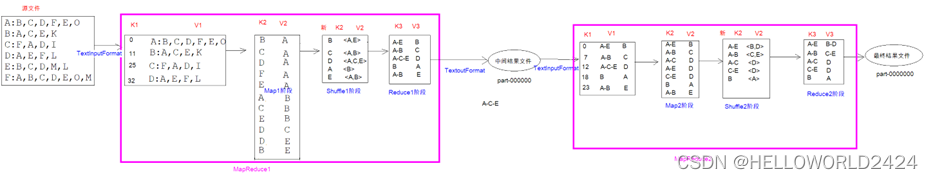

The approach is illustrated in the figure.
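The two-job approach can be tried in plain Java before it is spread over mappers and reducers. The first step inverts the data from "user lists friends" to "friend is listed by users". The second step emits every pair of users who share a friend and collects that friend for the pair. This is a single-process sketch on a three-line sample; the class CommonFriendsSketch, its methods, and the sample input are made up for the illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory version of the two-step common-friends approach; no Hadoop classes involved.
class CommonFriendsSketch {

    // Step 1: turn "user:friend,friend" lines into friend -> users who list that friend.
    static Map<String, List<String>> invert(List<String> lines) {
        Map<String, List<String>> usersByFriend = new TreeMap<>();
        for (String line : lines) {
            String[] arr = line.split(":");
            for (String friend : arr[1].split(",")) {
                usersByFriend.computeIfAbsent(friend, k -> new ArrayList<>()).add(arr[0]);
            }
        }
        return usersByFriend;
    }

    // Step 2: every pair of users who list the same friend gets that friend as a common friend.
    static Map<String, List<String>> commonFriends(List<String> lines) {
        Map<String, List<String>> friendsByPair = new TreeMap<>();
        for (Map.Entry<String, List<String>> entry : invert(lines).entrySet()) {
            List<String> users = new ArrayList<>(entry.getValue());
            // Sorting makes sure a pair is always written as A-B and never as B-A.
            Collections.sort(users);
            for (int i = 0; i < users.size() - 1; i++) {
                for (int j = i + 1; j < users.size(); j++) {
                    String pair = users.get(i) + "-" + users.get(j);
                    friendsByPair.computeIfAbsent(pair, k -> new ArrayList<>()).add(entry.getKey());
                }
            }
        }
        return friendsByPair;
    }

    public static void main(String[] args) {
        // prints {A-B=[C], A-D=[C], B-D=[A, C]}
        System.out.println(commonFriends(Arrays.asList("A:B,C,D", "B:A,C", "D:A,C")));
    }
}
```

Without the sort in step 2, the same two users could appear under both A-B and B-A, and their common friends would be split across two keys.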

map1

package com.itheima.demo05_friend;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

//Map phase of the first MR job. k2: friend, v2: user

public class FriendMapper1 extends Mapper<LongWritable, Text, Text, Text> {

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

//1. Get the whole line.

String line = value.toString(); //"A:B,C,D"

//2. Skip empty lines.

if (line != null && !"".equals(line)) {

//3. Split the line into the user and that user's friend list.

String[] arr = line.split(":"); //["A", "B,C,D"]

//4. Walk the friend list to get each friend of the user.

for (String friend : arr[1].split(",")) { //"B", "C", "D"

//5. Write out k2 and v2.

context.write(new Text(friend), new Text(arr[0])); //B->A, C->A, D->A

}

}

}

}

reduce1

package com.itheima.demo05_friend;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/*

Custom Reduce phase, responsible for merging.

k2: friend

v2: user

k3: friend

v3: all users who have that friend

Resulting data format:

friend, then all users who have that friend

{{C,C,C} : {B,A,D}} -> C and B-A-D- (written as key, tab, value)

*/

public class FriendReduce1 extends Reducer<Text, Text, Text, Text> {

@Override

protected void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {

//1. Build the user list.

String users = "";

//2. Walk the values to get each user.

for (Text value : values) {

users += (value.toString() + "-"); //B-A-D-

}

//3. Write out k3 and v3.

context.write(key, new Text(users));

}

}

main1

package com.itheima.demo05_friend;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

//Driver of the first MR job: it works out who has A as a friend, rather than who A's friends are.

public class FriendMain1 {

public static void main(String[] args) throws Exception {

//1. Create the Job.

Job job = Job.getInstance(new Configuration(), "friendMR1");

//2. Configure the eight core steps of the MR program.

//2.1 Input component: read the source data.

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.addInputPath(job, new Path("file:///D:\\abc\\共同好友\\input\\friends.txt"));

//2.2 Map task: specify the types of k2 and v2.

job.setMapperClass(FriendMapper1.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(Text.class);

//2.3 Partitioning: use the default.

//2.4 Sorting: use the default.

//2.5 Combining: use the default.

//2.6 Grouping: use the default.

//2.7 Reduce task: specify the types of k3 and v3.

job.setReducerClass(FriendReduce1.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

//2.8 Output component: write the data to the destination.

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job, new Path("file:///D:\\abc\\共同好友\\output"));

//3. Submit the job and wait for the result.

boolean flag = job.waitForCompletion(true);

//4. Exit the program.

System.exit(flag ? 0 : 1);

}

}

mapper2

package com.itheima.demo05_friend;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

import java.util.Arrays;

//Custom Map phase of the second job: finds the common friends.

public class FriendMapper2 extends Mapper<LongWritable, Text, Text, Text> {

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

//Columns: friend, then the users who have that friend (tab-separated).

//Current data format: C B-A-D-

//1. Get the line.

String line = value.toString();

//2. Skip empty lines.

if (line != null && !"".equals(line)) {

String[] arr = line.split("\t");

//3. Get all users who have this friend.

String[] allFriends = arr[1].split("-"); //["B", "A", "D"]

//4. Sort the users in ascending order so that each pair is always written the same way round.

Arrays.sort(allFriends); //["A", "B", "D"]

//5. Write out k2 (the two users who share the friend) and v2 (the shared friend).

for (int i = 0; i < allFriends.length - 1; i++) { //"A", "B"

String firstUser = allFriends[i];

//Get the second user of the pair.

for (int j = i + 1; j < allFriends.length; j++) {

String secondUser = allFriends[j];

//Write out k2 (the two users who share the friend) and v2 (the shared friend).

//A-B: C, A-D: C, B-D: C

context.write(new Text(firstUser +"-"+ secondUser), new Text(arr[0]));

}

}

}

}

}

reduce2

package com.itheima.demo05_friend;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

//Columns: the two users who share friends, then their common friends.

//Current data format: {{A-B,A-B} : {C, D}} => A-B: {C, D}

public class FriendReducer2 extends Reducer<Text, Text, Text, NullWritable> {

@Override

protected void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {

//1. Build the list of common friends.

String friends = "";

//2. Walk the values to get each common friend.

for (Text value : values) {

friends += (value.toString() + ", "); //"C, D, "

}

//3. Build k3 in its final format, A-B: {C, D}, dropping the trailing ", ".

String k3 = key.toString() + ": {" + friends.substring(0, friends.length() - 2) + "}";

//4. Write out k3 and v3.

context.write(new Text(k3), NullWritable.get());

}

}

main2

package com.itheima.demo05_friend;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

//Driver of the second MR job: it reads the output of the first job and produces the common friends of every pair of users.

public class FriendMain2 {

public static void main(String[] args) throws Exception {

//1. Create the Job.

Job job = Job.getInstance(new Configuration(), "friendMR2");

//2. Configure the eight core steps of the MR program.

//2.1 Input component: read the output of the first job.

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.addInputPath(job, new Path("file:///D:\\abc\\共同好友\\output\\part-r-00000"));

//2.2 Map task: specify the types of k2 and v2.

job.setMapperClass(FriendMapper2.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(Text.class);

//2.3 Partitioning: use the default.

//2.4 Sorting: use the default.

//2.5 Combining: use the default.

//2.6 Grouping: use the default.

//2.7 Reduce task: specify the types of k3 and v3.

job.setReducerClass(FriendReducer2.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(NullWritable.class);

//2.8 Output component: write the data to the destination.

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job, new Path("file:///D:\\abc\\共同好友\\output2"));

//3. Submit the job and wait for the result.

boolean flag = job.waitForCompletion(true);

//4. Exit the program.

System.exit(flag ? 0 : 1);

}

}
