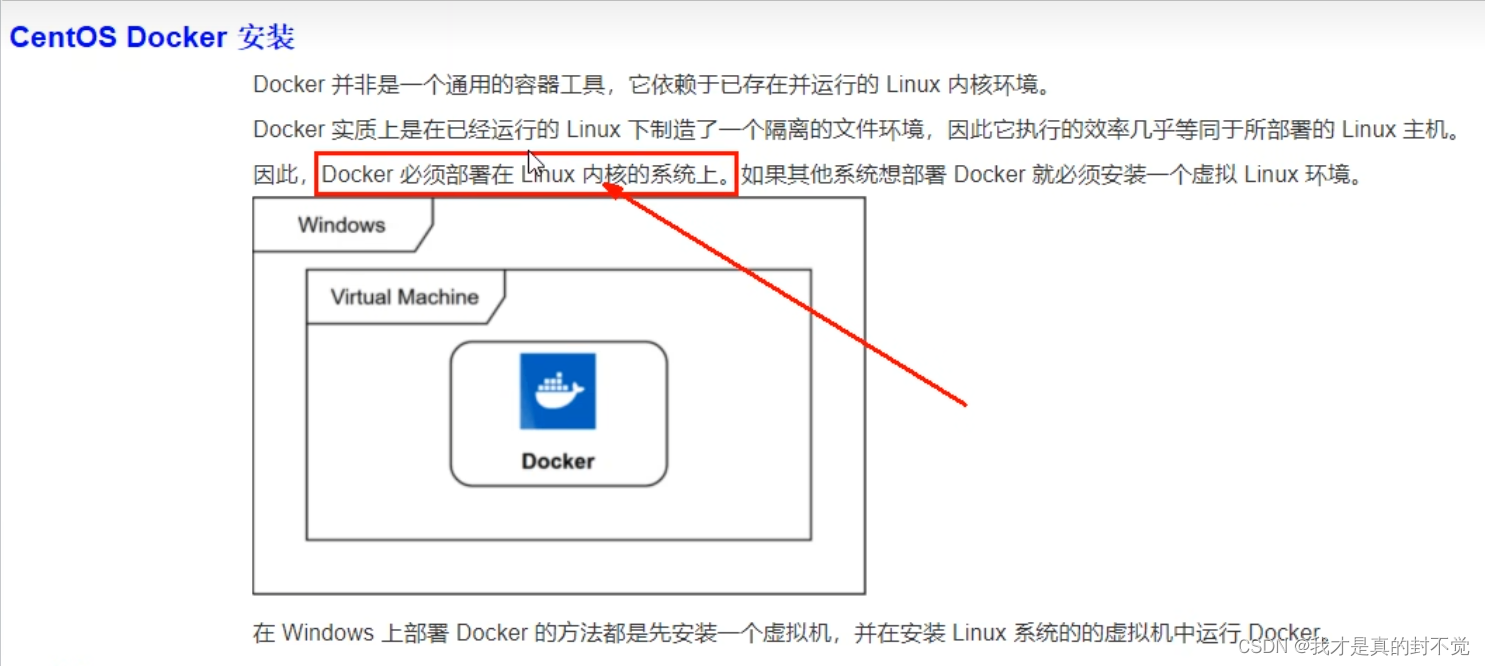

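1. Introduction

Docker runs on top of an already running Linux system. A container is a small, isolated runtime environment for a program. It shares the host's kernel and some of its resources, so it runs at close to the speed of the host itself, which makes operations and deployment much easier.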

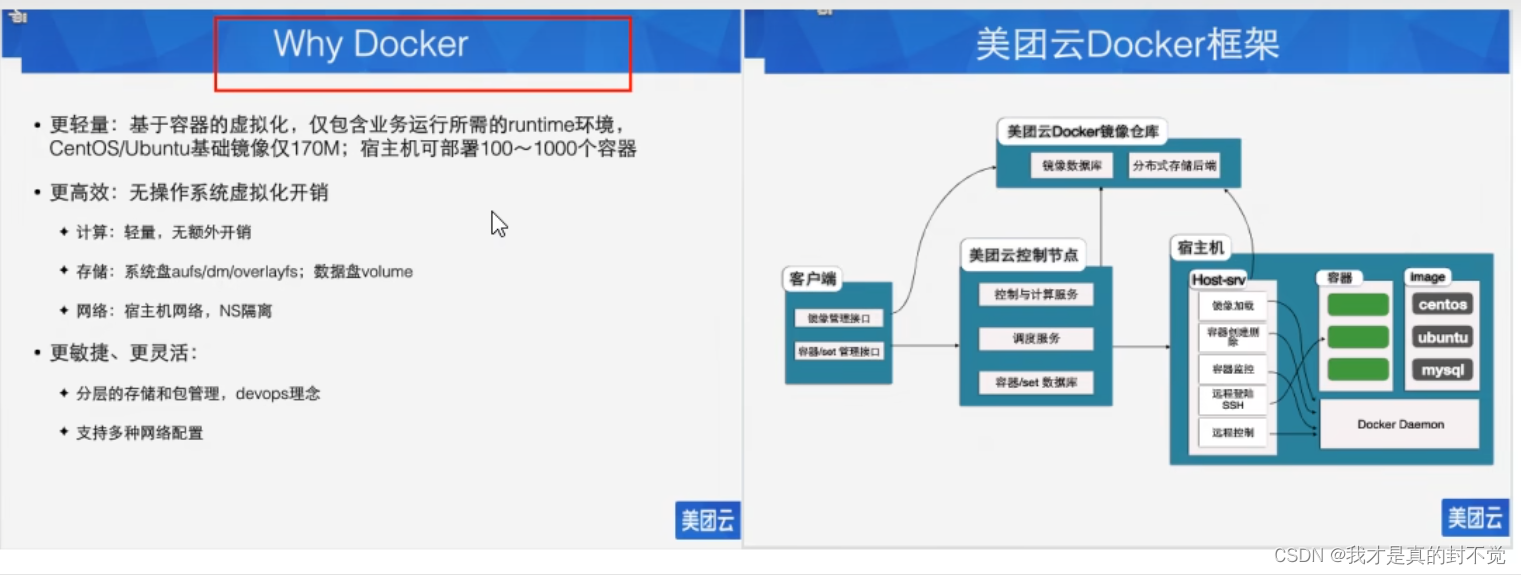

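2. Advantages: why choose it

In short: images are small and use little memory because they contain only what the program needs to run, they are portable, and performance is high. Once developers have prepared the Docker configuration, deployment is just a matter of pulling the image to create a uniform, isolated runtime environment: the container.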

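3. Installing, starting, and uninstalling

Installing Docker on CentOS 7

1. Official installation guide: https://docs.docker-cn.com/engine/installation/linux/docker-ce/centos/

2. Confirm that you are on CentOS 7 or later: cat /etc/redhat-release

3. Install gcc with yum: yum -y install gcc and yum -y install gcc-c++

4. Remove old versions: sudo yum remove docker docker-common docker-selinux docker-engine

5. Install the required packages: sudo yum install -y yum-utils device-mapper-persistent-data lvm2

6. Set up the stable repository: sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

7. Update the yum package index: sudo yum makecache fast

8. Install Docker CE: sudo yum install docker-ce

9. Start Docker: systemctl start docker

10. Test: docker version and docker run hello-world

When hello-world runs, Docker first checks whether the image exists locally. If it does, a container is created from it and run; if not, the image is pulled from the registry first.

Uninstalling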

systemctl stop docker

yum -y remove docker-ce

rm -rf /var/lib/docker

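4. Image commands

4.1 Listing images

docker images lists the images currently on this host.

-a lists all images, including intermediate layers.

-q prints only the image IDs.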

Last login: Wed Aug 24 19:39:55 2022 from 192.168.56.1

[root@localhost ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

mongo latest dfda7a2cf273 8 months ago 693MB

mysql 5.7 938b57d64674 10 months ago 448MB

redis latest 7faaec683238 10 months ago 113MB

hello-world latest feb5d9fea6a5 11 months ago 13.3kB

flink 1.10.0-scala_2.12 b001a5f6955e 2 years ago 586MB

kibana 7.4.2 230d3ded1abc 2 years ago 1.1GB

elasticsearch 7.4.2 b1179d41a7b4 2 years ago 855MB

nginx 1.10 0346349a1a64 5 years ago 182MB

[root@localhost ~]# docker images -a

REPOSITORY TAG IMAGE ID CREATED SIZE

mongo latest dfda7a2cf273 8 months ago 693MB

mysql 5.7 938b57d64674 10 months ago 448MB

redis latest 7faaec683238 10 months ago 113MB

hello-world latest feb5d9fea6a5 11 months ago 13.3kB

flink 1.10.0-scala_2.12 b001a5f6955e 2 years ago 586MB

kibana 7.4.2 230d3ded1abc 2 years ago 1.1GB

elasticsearch 7.4.2 b1179d41a7b4 2 years ago 855MB

nginx 1.10 0346349a1a64 5 years ago 182MB

[root@localhost ~]# docker images -q

dfda7a2cf273

938b57d64674

7faaec683238

feb5d9fea6a5

b001a5f6955e

230d3ded1abc

b1179d41a7b4

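0346349a1a64

Meaning of each field: REPOSITORY is the repository the image comes from, TAG is its version tag, IMAGE ID is the image ID, CREATED is when the image was built, and SIZE is the image size.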

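4.2 Searching the registry for images

docker search redis searches for all images related to redis.

docker search --help shows that --limit num can be added to cap the output at num results; in the output below these are the entries with the most stars.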

[root@localhost ~]# docker search redis --limit 5

NAME DESCRIPTION STARS OFFICIAL AUTOMATED

redis Redis is an open source key-value store that… 11282 [OK]

bitnami/redis Bitnami Redis Docker Image 229 [OK]

circleci/redis CircleCI images for Redis 14 [OK]

redis/redis-stack redis-stack installs a Redis server with add… 11

redis/redis-stack-server redis-stack-server installs a Redis server w… 9

4.3 Pulling images from the registry

docker pull redis pulls the latest redis image; it is equivalent to docker pull redis:latest.

docker pull redis:6.9 pulls the redis image tagged 6.9.
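The default-tag rule above can be sketched in a few lines of Python. This is only an illustration of the rule, not Docker's own parsing code, and the function name with_default_tag is made up for this example.

```python
def with_default_tag(reference: str) -> str:
    """Return the image reference with ':latest' appended when no tag is given."""
    # A digest reference (name@sha256:...) already pins an exact image.
    if "@" in reference:
        return reference
    # Only the part after the last '/' can carry a tag; a ':' earlier in the
    # string belongs to a registry host:port such as localhost:5000.
    last_component = reference.rsplit("/", 1)[-1]
    if ":" in last_component:
        return reference
    return reference + ":latest"


if __name__ == "__main__":
    for ref in ("redis", "redis:6.9", "ubuntu/withvim"):
        print(ref, "->", with_default_tag(ref))
```

The same rule explains why running an image that was committed only with tag 1.1 fails when the tag is left off: Docker then looks for the latest tag, which does not exist locally.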

4.4 Checking how much disk space Docker uses

The output below lists the space used by images, containers, local volumes, and the build cache.

[root@localhost ~]# docker system df

TYPE TOTAL ACTIVE SIZE RECLAIMABLE

Images 8 7 3.772GB 787.5MB (20%)

Containers 7 5 859.7MB 0B (0%)

Local Volumes 6 2 470.5MB 134.2MB (28%)

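Build Cache 0 0 0B 0B

4.5 Deleting an image

Below I delete the hello-world image. An image can be removed by repository name or by image ID. If Docker refuses to delete it, add -f to force the removal.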

[root@localhost ~]# docker rmi feb5d9fea6a5

Error response from daemon: conflict: unable to delete feb5d9fea6a5 (must be forced) - image is being used by stopped container 0ada6f5e1d1a

[root@localhost ~]# docker rmi -f feb5d9fea6a5

Untagged: hello-world:latest

Untagged: hello-world@sha256:2498fce14358aa50ead0cc6c19990fc6ff866ce72aeb5546e1d59caac3d0d60f

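Deleted: sha256:feb5d9fea6a5e9606aa995e879d862b825965ba48de054caab5ef356dc6b3412

Several images can also be deleted in one go. Use this with great care, especially in production.

4.6 Dangling images

Good to know about; no need to go deeper.

4.7 commit and push

5. Container commands

5.1 Creating, starting, and exiting containers

-i: interactive

-t: terminal (allocates a pseudo-TTY)

/bin/bash: the shell to run in the container

The following command creates a small Ubuntu container in Docker.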

[root@localhost ~]# docker run -it ubuntu /bin/bash

root@bcc40acd606c:/# ls

bin boot dev etc home lib lib32 lib64 libx32 media mnt opt proc root run sbin srv sys tmp usr var

The next command uses --name to create a second Ubuntu container from the same image.

[root@localhost ~]# docker run -it --name secondunbuntucontainer ubuntu bash

root@d0de5c5476a9:/# exit

5.2 Listing containers

docker ps -a shows that we have created two Ubuntu containers.

[root@localhost ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d0de5c5476a9 ubuntu "bash" About a minute ago Exited (0) About a minute ago secondunbuntucontainer

bcc40acd606c ubuntu "/bin/bash" 8 minutes ago Exited (127) 3 minutes ago quizzical_mclaren

0ada6f5e1d1a feb5d9fea6a5 "/hello" 17 hours ago Exited (0) 17 hours ago compassionate_grothendieck

efdc8c9c68ef mongo "docker-entrypoint.s…" 8 months ago Exited (255) 7 months ago 0.0.0.0:27017->27017/tcp, :::27017->27017/tcp mongo

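5bc853753904 nginx:1.10 "nginx -g 'daemon of…" 9 months ago Up 3 days 0.0.0.0:80->80/tcp, :::80->80/tcp, 443/tcp nginx

Further options can be added to filter the list.

5.3 Starting, restarting, removing, force-removing, and bulk-removing containers

5.4 Container logs

docker logs CONTAINER, where CONTAINER is the container ID or name

5.5 Viewing the processes running in a container and their resource usage

docker top CONTAINER, where CONTAINER is the container ID or name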

[root@localhost ~]# docker top redis

UID PID PPID C STIME TTY TIME CMD

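polkitd 1331 1297 0 Aug21 ? 00:04:40 redis-server *:6379

5.6 Inspecting container details

docker inspect CONTAINER (container ID or name) shows the internals of this lightweight environment, such as the host configuration and network settings.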

[root@localhost ~]# docker inspect redis

[

{

"Id": "2f800e62d4f1093bed27cd29f4bffb2bd35918f8bac110348f5e98ce77eaa2cb",

"Created": "2021-10-28T11:07:56.786345376Z",

"Path": "docker-entrypoint.sh",

"Args": [

"redis-server",

"/etc/redis/redis.conf"

],

"State": {

"Status": "running",

"Running": true,

"Paused": false,

"Restarting": false,

"OOMKilled": false,

"Dead": false,

"Pid": 1331,

"ExitCode": 0,

"Error": "",

"StartedAt": "2022-08-21T14:37:18.184401839Z",

"FinishedAt": "2022-08-21T14:37:16.540735396Z"

},

"Image": "sha256:7faaec68323851b2265bddb239bd9476c7d4e4335e9fd88cbfcc1df374dded2f",

"ResolvConfPath": "/var/lib/docker/containers/2f800e62d4f1093bed27cd29f4bffb2bd35918f8bac110348f5e98ce77eaa2cb/resolv.conf",

"HostnamePath": "/var/lib/docker/containers/2f800e62d4f1093bed27cd29f4bffb2bd35918f8bac110348f5e98ce77eaa2cb/hostname",

"HostsPath": "/var/lib/docker/containers/2f800e62d4f1093bed27cd29f4bffb2bd35918f8bac110348f5e98ce77eaa2cb/hosts",

"LogPath": "/var/lib/docker/containers/2f800e62d4f1093bed27cd29f4bffb2bd35918f8bac110348f5e98ce77eaa2cb/2f800e62d4f1093bed27cd29f4bffb2bd35918f8bac110348f5e98ce77eaa2cb-json.log",

"Name": "/redis",

"RestartCount": 0,

"Driver": "overlay2",

"Platform": "linux",

"MountLabel": "",

"ProcessLabel": "",

"AppArmorProfile": "",

"ExecIDs": null,

"HostConfig": {

"Binds": [

"/mydata/redis/data:/data",

"/mydata/redis/conf/redis.conf:/etc/redis/redis.conf"

],

"ContainerIDFile": "",

"LogConfig": {

"Type": "json-file",

"Config": {}

},

"NetworkMode": "default",

"PortBindings": {

"6379/tcp": [

{

"HostIp": "",

"HostPort": "6379"

}

]

},

"RestartPolicy": {

"Name": "always",

"MaximumRetryCount": 0

},

"AutoRemove": false,

"VolumeDriver": "",

"VolumesFrom": null,

"CapAdd": null,

"CapDrop": null,

"CgroupnsMode": "host",

"Dns": [],

"DnsOptions": [],

"DnsSearch": [],

"ExtraHosts": null,

"GroupAdd": null,

"IpcMode": "private",

"Cgroup": "",

"Links": null,

"OomScoreAdj": 0,

"PidMode": "",

"Privileged": false,

"PublishAllPorts": false,

"ReadonlyRootfs": false,

"SecurityOpt": null,

"UTSMode": "",

"UsernsMode": "",

"ShmSize": 67108864,

"Runtime": "runc",

"ConsoleSize": [

0,

0

],

"Isolation": "",

"CpuShares": 0,

"Memory": 0,

"NanoCpus": 0,

"CgroupParent": "",

"BlkioWeight": 0,

"BlkioWeightDevice": [],

"BlkioDeviceReadBps": null,

"BlkioDeviceWriteBps": null,

"BlkioDeviceReadIOps": null,

"BlkioDeviceWriteIOps": null,

"CpuPeriod": 0,

"CpuQuota": 0,

"CpuRealtimePeriod": 0,

"CpuRealtimeRuntime": 0,

"CpusetCpus": "",

"CpusetMems": "",

"Devices": [],

"DeviceCgroupRules": null,

"DeviceRequests": null,

"KernelMemory": 0,

"KernelMemoryTCP": 0,

"MemoryReservation": 0,

"MemorySwap": 0,

"MemorySwappiness": null,

"OomKillDisable": false,

"PidsLimit": null,

"Ulimits": null,

"CpuCount": 0,

"CpuPercent": 0,

"IOMaximumIOps": 0,

"IOMaximumBandwidth": 0,

"MaskedPaths": [

"/proc/asound",

"/proc/acpi",

"/proc/kcore",

"/proc/keys",

"/proc/latency_stats",

"/proc/timer_list",

"/proc/timer_stats",

"/proc/sched_debug",

"/proc/scsi",

"/sys/firmware"

],

"ReadonlyPaths": [

"/proc/bus",

"/proc/fs",

"/proc/irq",

"/proc/sys",

"/proc/sysrq-trigger"

]

},

"GraphDriver": {

"Data": {

"LowerDir": "/var/lib/docker/overlay2/69fe4cd37eae73c5dd5001b6a7badaafd75ede48678e78ae3c253161ca4bd66b-init/diff:/var/lib/docker/overlay2/ac8c700336d3794dc8bb41c994746052e3f0295b0701bd72a0daf357a4a11678/diff:/var/lib/docker/overlay2/26dfee7b59392554e5ccc5d0d8298813bbbd33884fa6bf5db8f0c872a8cf0f1c/diff:/var/lib/docker/overlay2/9276527a127f83323c1ca6b6793807f93f569cade50a621c0cfb0ab67e018f8d/diff:/var/lib/docker/overlay2/e323e764522262340f38eaa00de0defe37a421cebc6eb722d39814b66d284154/diff:/var/lib/docker/overlay2/149dde4495b55cfe69206f0f70fcb62fa4c87015248b9ecebcb0ea190626a0f1/diff:/var/lib/docker/overlay2/652d9c4eea6f6a7a71bfd0c9f75811e1a59f37f39751dcee3fece37c753b314d/diff",

"MergedDir": "/var/lib/docker/overlay2/69fe4cd37eae73c5dd5001b6a7badaafd75ede48678e78ae3c253161ca4bd66b/merged",

"UpperDir": "/var/lib/docker/overlay2/69fe4cd37eae73c5dd5001b6a7badaafd75ede48678e78ae3c253161ca4bd66b/diff",

"WorkDir": "/var/lib/docker/overlay2/69fe4cd37eae73c5dd5001b6a7badaafd75ede48678e78ae3c253161ca4bd66b/work"

},

"Name": "overlay2"

},

"Mounts": [

{

"Type": "bind",

"Source": "/mydata/redis/data",

"Destination": "/data",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

},

{

"Type": "bind",

"Source": "/mydata/redis/conf/redis.conf",

"Destination": "/etc/redis/redis.conf",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

}

],

"Config": {

"Hostname": "2f800e62d4f1",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"ExposedPorts": {

"6379/tcp": {}

},

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

"GOSU_VERSION=1.12",

"REDIS_VERSION=6.2.6",

"REDIS_DOWNLOAD_URL=http://download.redis.io/releases/redis-6.2.6.tar.gz",

"REDIS_DOWNLOAD_SHA=5b2b8b7a50111ef395bf1c1d5be11e6e167ac018125055daa8b5c2317ae131ab"

],

"Cmd": [

"redis-server",

"/etc/redis/redis.conf"

],

"Image": "redis",

"Volumes": {

"/data": {}

},

"WorkingDir": "/data",

"Entrypoint": [

"docker-entrypoint.sh"

],

"OnBuild": null,

"Labels": {}

},

"NetworkSettings": {

"Bridge": "",

"SandboxID": "d194b583802266655aa106ff2e061e50b68279d48c7adf7bb3038195d006daba",

"HairpinMode": false,

"LinkLocalIPv6Address": "",

"LinkLocalIPv6PrefixLen": 0,

"Ports": {

"6379/tcp": [

{

"HostIp": "0.0.0.0",

"HostPort": "6379"

},

{

"HostIp": "::",

"HostPort": "6379"

}

]

},

"SandboxKey": "/var/run/docker/netns/d194b5838022",

"SecondaryIPAddresses": null,

"SecondaryIPv6Addresses": null,

"EndpointID": "98937676f9c2ba71ff29db308b399a01fd36a1c95c95aea6ecb3bba9bc33a349",

"Gateway": "172.17.0.1",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"MacAddress": "02:42:ac:11:00:02",

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "7720b280fd25d2f1d1b9f5c41829b59736cb43d4159a22e816916ae17d7f07e0",

"EndpointID": "98937676f9c2ba71ff29db308b399a01fd36a1c95c95aea6ecb3bba9bc33a349",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:11:00:02",

"DriverOpts": null

}

}

}

}

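]

5.7 Re-entering a container

Earlier we used docker run -it IMAGE bash to start a container and enter it.

From inside the container, Ctrl+P followed by Ctrl+Q detaches without stopping it.

As the help output below shows, the usual way to re-enter a running container is docker exec -it CONTAINER bash, where CONTAINER is the container ID or name.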

[root@localhost ~]# docker exec --help

Usage: docker exec [OPTIONS] CONTAINER COMMAND [ARG...]

Run a command in a running container

Options:

-d, --detach Detached mode: run command in the background

--detach-keys string Override the key sequence for detaching a container

-e, --env list Set environment variables

--env-file list Read in a file of environment variables

-i, --interactive Keep STDIN open even if not attached

--privileged Give extended privileges to the command

-t, --tty Allocate a pseudo-TTY

-u, --user string Username or UID (format: <name|uid>[:<group|gid>])

-w, --workdir string Working directory inside the container

In the session below we re-enter the container with exec and leave with exit; the container keeps running.

[root@localhost ~]# docker exec -it redis bash

root@2f800e62d4f1:/data# ^C

root@2f800e62d4f1:/data# redis-cli

127.0.0.1:6379> ping

PONG

127.0.0.1:6379> set key hello

OK

127.0.0.1:6379> get

(error) ERR wrong number of arguments for 'get' command

127.0.0.1:6379> get key

"hello"

127.0.0.1:6379> exit

root@2f800e62d4f1:/data# exit

exit

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7381f2a045bc redis "docker-entrypoint.s…" 4 minutes ago Up 4 minutes 6379/tcp blissful_bouman

d0de5c5476a9 ubuntu "bash" About an hour ago Up 42 minutes secondunbuntucontainer

5bc853753904 nginx:1.10 "nginx -g 'daemon of…" 9 months ago Up 4 days 0.0.0.0:80->80/tcp, :::80->80/tcp, 443/tcp nginx

a93fde9471cf elasticsearch:7.4.2 "/usr/local/bin/dock…" 9 months ago Up 4 days 0.0.0.0:9200->9200/tcp, :::9200->9200/tcp, 0.0.0.0:9300->9300/tcp, :::9300->9300/tcp elasticsearch

f9dae621180b kibana:7.4.2 "/usr/local/bin/dumb…" 9 months ago Up 4 days 0.0.0.0:5601->5601/tcp, :::5601->5601/tcp kibana

2f800e62d4f1 redis "docker-entrypoint.s…" 10 months ago Up About a minute 0.0.0.0:6379->6379/tcp, :::6379->6379/tcp redis

8c3138489f1b mysql:5.7 "docker-entrypoint.s…" 10 months ago Up 4 days 0.0.0.0:3306->3306/tcp, :::3306->3306/tcp, 33060/tcp mysql

5.8 Backing up files from a container, exporting a container, and importing it

Backing up files from a container

[root@localhost ~]# docker exec -it redis bash

root@2f800e62d4f1:/data# touch tt.txt

root@2f800e62d4f1:/data# ls

appendonly.aof dump.rdb tt.txt

root@2f800e62d4f1:/data# exit

exit

[root@localhost ~]# docker cp ^C

[root@localhost ~]# docker cp 2f800e62d4f1:tt.txt /tmp/tt.txt

Error: No such container:path: 2f800e62d4f1:tt.txt

[root@localhost ~]# docker cp 2f800e62d4f1:/tt.txt /tmp/tt.txt

Error: No such container:path: 2f800e62d4f1:/tt.txt

[root@localhost ~]# docker exec -it redis bash

root@2f800e62d4f1:/data# pwd

/data

root@2f800e62d4f1:/data# exit

exit

[root@localhost ~]# docker cp 2f800e62d4f1:/data/tt.txt /tmp/tt.txt

[root@localhost ~]# ll /tmp

total 0

drwx------. 3 root root 17 Aug 21 14:37 systemd-private-cbe823f7b580402784680c1ec50531d7-chronyd.service-wesC3y

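-rw-r--r--. 1 root root 0 Aug 25 14:26 tt.txt

Exporting a container as an image

This works as a backup and also as a way to copy a container. For example, to clone a container we have set up, export it as an image

and then create new containers from that image.

5.9 Building your own image on top of a base image and committing it

The ubuntu image pulled from Docker Hub is a minimal build; it does not include the vim command.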

[root@localhost ~]# docker run -it ubuntu bash

root@15c0323b5378:/# ls

bin boot dev etc home lib lib32 lib64 libx32 media mnt opt proc root run sbin srv sys tmp usr var

root@15c0323b5378:/# vim tmp/demo.txt

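bash: vim: command not found

So how do we add vim and then commit the current container as an image?

1. Install vim

When installing vim, apt-get prints the notice

After this operation, 70.3 MB of additional disk space will be used.

meaning that the install downloads packages and adds 70.3 MB of files to disk. (On a freshly pulled ubuntu image, run apt-get update first so that the package lists are available.)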

root@15c0323b5378:/# apt-get install vim

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following additional packages will be installed:

alsa-topology-conf alsa-ucm-conf file libasound2 libasound2-data libcanberra0 libexpat1 libgpm2 libltdl7 libmagic-mgc libmagic1 libmpdec2 libogg0 libpython3.8 libpython3.8-minimal

libpython3.8-stdlib libreadline8 libsqlite3-0 libssl1.1 libtdb1 libvorbis0a libvorbisfile3 mime-support readline-common sound-theme-freedesktop vim-common vim-runtime xxd xz-utils

Suggested packages:

libasound2-plugins alsa-utils libcanberra-gtk0 libcanberra-pulse gpm readline-doc ctags vim-doc vim-scripts

The following NEW packages will be installed:

alsa-topology-conf alsa-ucm-conf file libasound2 libasound2-data libcanberra0 libexpat1 libgpm2 libltdl7 libmagic-mgc libmagic1 libmpdec2 libogg0 libpython3.8 libpython3.8-minimal

libpython3.8-stdlib libreadline8 libsqlite3-0 libssl1.1 libtdb1 libvorbis0a libvorbisfile3 mime-support readline-common sound-theme-freedesktop vim vim-common vim-runtime xxd xz-utils

0 upgraded, 30 newly installed, 0 to remove and 0 not upgraded.

Need to get 14.9 MB of archives.

After this operation, 70.3 MB of additional disk space will be used.

Do you want to continue? [Y/n] y

Get:1 http://archive.ubuntu.com/ubuntu focal/main amd64 libmagic-mgc amd64 1:5.38-4 [218 kB]

Get:2 http://archive.ubuntu.com/ubuntu focal/main amd64 libmagic1 amd64 1:5.38-4 [75.9 kB]

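Get:3 http://archive.ubuntu.com/ubuntu focal/main amd64 file amd64 1:5.38-4 [23.3 kB]

2. Commit the current container as our new Ubuntu image

After committing, we can find the new image in the list, start a container from it, and use vim inside that container. Note that the repository name must be lowercase; otherwise the commit fails with

invalid reference format: repository name must be lowercase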

[root@localhost ~]# docker commit -m "add vim command" -a "robert Ren" 15c0323b5378 ubuntu/withvim:1.1

sha256:c638056f700071004520f1b88ab2bd73f68aa094c70cd1bfe315930bdbb365ba

[root@localhost ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

ubuntu/withvim 1.1 c638056f7000 2 minutes ago 162MB

redis/rediscontainer 0.5 3be94ff46e45 2 days ago 109MB

mongo latest dfda7a2cf273 8 months ago 693MB

mysql 5.7 938b57d64674 10 months ago 448MB

ubuntu latest ba6acccedd29 10 months ago 72.8MB

redis latest 7faaec683238 10 months ago 113MB

redis 6.0.8 16ecd2772934 22 months ago 104MB

flink 1.10.0-scala_2.12 b001a5f6955e 2 years ago 586MB

kibana 7.4.2 230d3ded1abc 2 years ago 1.1GB

elasticsearch 7.4.2 b1179d41a7b4 2 years ago 855MB

nginx 1.10 0346349a1a64 5 years ago 182MB

[root@localhost ~]# docker run -it ubuntu/withvim bash

Unable to find image 'ubuntu/withvim:latest' locally

^[[A^[[A^C

[root@localhost ~]# docker run -it ubuntu/withvim:1.1 bash

root@c8fd302c542f:/# vim tmp/demo.txt

root@c8fd302c542f:/# cat tmp/demo.txt

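heihei

5.10 Pushing a local image to the Alibaba Cloud registry and pulling it back

Alibaba Cloud image management

Follow the instructions shown in the console to push the image to Alibaba Cloud.

5.11 Private registry

Host the image registry on a server inside the company so that team members can share images.

I will fill this in when I actually need it.

5.12 Starting a container with a volume

Put simply, a volume is what you use to keep a copy of important container data on the host. With the default mapping, the directory in the container and the directory on the host stay in sync:

a change made on either side shows up on the other, whether or not the container is running.

The command below runs the image with a volume mapping.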

[root@localhost ~]# docker run -it --privileged=true -v /tmp/datainhost:/tmp/dataindockercontainer --name=ubun ubuntu/withvim:1.1

root@ddbe43e18775:/# ls

bin boot dev etc home lib lib32 lib64 libx32 media mnt opt proc root run sbin srv sys tmp usr var

root@ddbe43e18775:/# cd tmp/dataindockercontainer/

root@ddbe43e18775:/tmp/dataindockercontainer# ls

root@ddbe43e18775:/tmp/dataindockercontainer# touch demo.txt

root@ddbe43e18775:/tmp/dataindockercontainer# ls

demo.txt

After running the commands above, the mapped directory on the Linux host also contains demo.txt.

[root@localhost ~]# cd /

[root@localhost /]# ls

bin boot dev etc home jdk lib lib64 media mnt mydata opt proc root run sbin srv swapfile sys tmp usr vagrant var

[root@localhost /]# cd tmp/datainhost/

[root@localhost datainhost]# ls

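demo.txt

To prevent changes from inside the container, append :ro to the container path to make the mount read-only:

docker run -it --privileged=true -v /tmp/datainhost:/tmp/dataindockercontainer:ro --name=ubun ubuntu/withvim:1.1

Inheriting volume mappings

Start the new container with --volumes-from followed by the name of the parent container.

This lets several containers share the same data.

For example, when container 1 modifies a file, the file on the Linux host changes too, and container 2 then sees the same change.

This is simple enough that I will skip the commands; my takeout has arrived.

7. Overview of common commands

8. Installing and running common software

8.1 tomcat

The usual routine: pull the image, then run it.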

[root@localhost ~]# docker pull tomcat

Using default tag: latest

latest: Pulling from library/tomcat

0e29546d541c: Pull complete

9b829c73b52b: Pull complete

cb5b7ae36172: Pull complete

6494e4811622: Pull complete

668f6fcc5fa5: Pull complete

dc120c3e0290: Pull complete

8f7c0eebb7b1: Pull complete

77b694f83996: Pull complete

0f611256ec3a: Pull complete

4f25def12f23: Pull complete

Digest: sha256:9dee185c3b161cdfede1f5e35e8b56ebc9de88ed3a79526939701f3537a52324

Status: Downloaded newer image for tomcat:latest

docker.io/library/tomcat:latest

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7381f2a045bc redis "docker-entrypoint.s…" 3 days ago Up 3 days 6379/tcp blissful_bouman

d0de5c5476a9 ubuntu "bash" 3 days ago Up 3 days secondunbuntucontainer

5bc853753904 nginx:1.10 "nginx -g 'daemon of…" 9 months ago Up 7 days 0.0.0.0:80->80/tcp, :::80->80/tcp, 443/tcp nginx

a93fde9471cf elasticsearch:7.4.2 "/usr/local/bin/dock…" 9 months ago Up 7 days 0.0.0.0:9200->9200/tcp, :::9200->9200/tcp, 0.0.0.0:9300->9300/tcp, :::9300->9300/tcp elasticsearch

f9dae621180b kibana:7.4.2 "/usr/local/bin/dumb…" 9 months ago Up 7 days 0.0.0.0:5601->5601/tcp, :::5601->5601/tcp kibana

8c3138489f1b mysql:5.7 "docker-entrypoint.s…" 10 months ago Up 7 days 0.0.0.0:3306->3306/tcp, :::3306->3306/tcp, 33060/tcp mysql

[root@localhost ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

ubuntu/withvim 1.1 c638056f7000 12 hours ago 162MB

redis/rediscontainer 0.5 3be94ff46e45 3 days ago 109MB

tomcat latest fb5657adc892 8 months ago 680MB

mongo latest dfda7a2cf273 8 months ago 693MB

registry latest b8604a3fe854 9 months ago 26.2MB

mysql 5.7 938b57d64674 10 months ago 448MB

ubuntu latest ba6acccedd29 10 months ago 72.8MB

redis latest 7faaec683238 10 months ago 113MB

redis 6.0.8 16ecd2772934 22 months ago 104MB

flink 1.10.0-scala_2.12 b001a5f6955e 2 years ago 586MB

kibana 7.4.2 230d3ded1abc 2 years ago 1.1GB

elasticsearch 7.4.2 b1179d41a7b4 2 years ago 855MB

nginx 1.10 0346349a1a64 5 years ago 182MB

[root@localhost ~]# ^C

[root@localhost ~]# docker run -it -p 8080:8080 tomcat bash

root@925599a8a318:/usr/local/tomcat# ls

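BUILDING.txt CONTRIBUTING.md LICENSE NOTICE README.md RELEASE-NOTES RUNNING.txt bin conf lib logs native-jni-lib temp webapps webapps.dist work

Newer Tomcat images do not serve the home page on port 8080 out of the box, because the default web apps were moved from webapps to webapps.dist. Delete the webapps directory and run mv webapps.dist webapps to restore the home page.

8.2 mysql

First run docker pull mysql:5.7, then start it with the following command.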

[root@localhost ~]# docker run --name mysqlzz -e MYSQL_ROOT_PASSWORD=root -d mysql:5.7

docker: Error response from daemon: Conflict. The container name "/mysqlzz" is already in use by container "8b1c8232a644e052d2636b85f865bdafe04c709ac8e5ff40e4e05f9aac840aee". You have to remove (or rename) that container to be able to reuse that name.

See 'docker run --help'.

[root@localhost ~]# docker exec -it mysqlaa bash

root@31e36f35d688:/# mysql -uroot -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 2

Server version: 5.7.36 MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

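mysql>

As for backing up the data

I will not repeat the commands here; as covered earlier, mount the data directory as a volume.

As for garbled Chinese characters on insert

Make the following change under the conf directory.

8.3 redis

Not much to add; installing and running is much the same.

The main thing to watch is the volume mapping, so that the data is not lost if the container is removed.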

docker run -p 6379:6379 --name redis -v /mydata/redis/data:/data -v /mydata/redis/conf/redis.conf:/etc/redis/redis.conf -d redis redis-server /etc/redis/redis.conf

Without the following setting, data can be lost when redis exits, so enable append-only persistence to disk:

vi /mydata/redis/conf/redis.conf

Add the line appendonly yes

9. MySQL master-slave replication

9.1 Creating the master node

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5bc853753904 nginx:1.10 "nginx -g 'daemon of…" 9 months ago Up 17 minutes 0.0.0.0:80->80/tcp, :::80->80/tcp, 443/tcp nginx

a93fde9471cf elasticsearch:7.4.2 "/usr/local/bin/dock…" 10 months ago Up 17 minutes 0.0.0.0:9200->9200/tcp, :::9200->9200/tcp, 0.0.0.0:9300->9300/tcp, :::9300->9300/tcp elasticsearch

f9dae621180b kibana:7.4.2 "/usr/local/bin/dumb…" 10 months ago Up 17 minutes 0.0.0.0:5601->5601/tcp, :::5601->5601/tcp kibana

8c3138489f1b 938b57d64674 "docker-entrypoint.s…" 10 months ago Up 17 minutes 0.0.0.0:3306->3306/tcp, :::3306->3306/tcp, 33060/tcp mysql

[root@localhost ~]# docker run -p 3307:3306 --name mysql-master \

> -v /mydata/mysql-master/log:/var/log/mysql \

> -v /mydata/mysql-master/data:/var/lib/mysql \

> -v /mydata/mysql-master/conf:/etc/mysql \

> -e MYSQL_ROOT_PASSWORD=root \

> -d mysql:5.7

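d047e686648b8829a1ded5b8f8d1ab9aecd95f7ee7e655559470b142ee4baa58

9.2 Adding the configuration file

[root@localhost ~]# vi /mydata/mysql-master/conf/my.cnf

The file contents are as follows:

[mysqld]

## Set server_id; it must be unique within the same LAN

server_id=101

## Name of the database that should not be replicated

binlog-ignore-db=mysql

## Enable the binary log

log-bin=mall-mysql-bin

## Memory used for the binary log cache (per transaction)

binlog_cache_size=1M

## Binary log format (mixed, statement, row)

binlog_format=mixed

## Number of days after which binary logs are purged. The default 0 means no automatic purge.

expire_logs_days=7

## Skip all errors, or errors of the listed types, during replication so that the slave does not stop replicating.

## For example, error 1062 is a duplicate primary key and error 1032 is a master/slave data mismatch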

slave_skip_errors=1062

9.3 Restarting the master

docker restart mysql-master

9.4 Entering the master

docker exec -it mysql-master /bin/bash

mysql -uroot -proot

9.5 Creating the replication user inside the master container

CREATE USER 'slave'@'%' IDENTIFIED BY '123456';

GRANT REPLICATION SLAVE, REPLICATION CLIENT ON *.* TO 'slave'@'%';

9.6 Creating the slave container on port 3308

docker run -p 3308:3306 --name mysql-slave \

-v /mydata/mysql-slave/log:/var/log/mysql \

-v /mydata/mysql-slave/data:/var/lib/mysql \

-v /mydata/mysql-slave/conf:/etc/mysql \

-e MYSQL_ROOT_PASSWORD=root \

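-d mysql:5.7

9.7 Creating my.cnf under /mydata/mysql-slave/conf

Remember to change server_id.

vi my.cnf

[mysqld]

## Set server_id; it must be unique within the same LAN

server_id=102

## Name of the database that should not be replicated

binlog-ignore-db=mysql

## Enable the binary log, in case this slave later acts as master for other instances

log-bin=mall-mysql-slave1-bin

## Memory used for the binary log cache (per transaction)

binlog_cache_size=1M

## Binary log format (mixed, statement, row)

binlog_format=mixed

## Number of days after which binary logs are purged. The default 0 means no automatic purge.

expire_logs_days=7

## Skip all errors, or errors of the listed types, during replication so that the slave does not stop replicating.

## For example, error 1062 is a duplicate primary key and error 1032 is a master/slave data mismatch

slave_skip_errors=1062

## relay_log sets the relay log name

relay_log=mall-mysql-relay-bin

## log_slave_updates makes the slave write replicated events to its own binary log

log_slave_updates=1

## Make the slave read-only (except for users with the SUPER privilege)

read_only=1

9.8 Restarting the slave after changing the configuration

docker restart mysql-slave

9.9 Checking replication status on the master

show master status;

9.10 Remaining steps

The remaining steps are basically the same as before.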

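Replication command: change master to master_host='172.17.0.6', master_user='slave', master_password='123456', master_port=3307, master_log_file='mall-mysql-bin.000001', master_log_pos=154, master_connect_retry=30;

- Replication command parameters

master_host: IP address of the master database;

master_port: port the master database is reached on (3307 is the port published on the host in 9.1; if master_host is the master container's own bridge IP, MySQL listens on 3306 there);

master_user: the account created on the master for replication;

master_password: the password of that account (123456 for the slave user created in 9.5);

master_log_file: the binary log file the slave should replicate from; take it from the File column of show master status;

master_log_pos: the position to start replicating from; take it from the Position column of show master status;

master_connect_retry: interval between connection retries, in seconds.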

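10. Distributed storage algorithms

Here we are talking about redis.

Data is stored by key, because values are looked up by key. Given three redis machines and ten key-value pairs, how should the pairs be distributed?

10.1 Hash modulo algorithm

As the name says: compute the hash of the key, then take it modulo the number of machines.

For example, if key1 hashes to 12, then 12 mod 3 = 0, so this key-value pair goes to machine 0.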
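A minimal Python sketch of the hash modulo scheme follows. It uses zlib.crc32 as the hash function, which is an assumption made for illustration (any stable hash would do), and the helper names node_for and moved_keys are made up for this example.

```python
import zlib


def node_for(key: str, node_count: int) -> int:
    """Pick a machine index by hashing the key modulo the machine count."""
    # zlib.crc32 is used only because it is stable across runs;
    # Python's built-in hash() is randomized per process for strings.
    return zlib.crc32(key.encode("utf-8")) % node_count


def moved_keys(keys, old_count: int, new_count: int):
    """Return the keys whose machine changes when the machine count changes."""
    return [k for k in keys if node_for(k, old_count) != node_for(k, new_count)]


if __name__ == "__main__":
    keys = [f"key{i}" for i in range(1, 11)]  # ten keys, as in the example above
    for k in keys:
        print(k, "-> machine", node_for(k, 3))
    # Going from 3 machines to 4 changes the divisor, so keys get remapped.
    print("moved after adding a machine:", moved_keys(keys, 3, 4))
```

The moved_keys helper makes the weakness of this scheme visible: changing the number of machines changes the divisor, so keys that were placed correctly before can land on a different machine afterwards.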

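10.2 Consistent hashing

Background:

Consistent hashing was proposed at MIT in 1997. It was designed to address data movement and remapping in distributed caches:

when a machine goes down the divisor changes, and placement by modulo no longer holds.

Goal:

When the number of servers changes, disturb the mapping from clients to servers as little as possible.

Steps:

The consistent hash ring

Consistent hashing needs a hash function. The set of all values that function can produce forms the hash space [0, 2^32-1]. This is a linear space, but the algorithm logically joins its two ends (0 = 2^32) so that it forms a ring.

Step 1: Build the ring. This is still a modulo operation, but whereas the node-modulo method described earlier takes the hash modulo the number of nodes (servers), consistent hashing takes it modulo 2^32. Put simply, the whole hash space is organized as a virtual ring. Suppose a hash function H has the value space 0 to 2^32-1 (that is, the hash is a 32-bit unsigned integer). The space is laid out clockwise: the point at the top of the ring is 0, the first point to its right is 1, then 2, 3, 4, and so on up to 2^32-1, which is the first point to the left of 0. 0 and 2^32 coincide at the top. We call this ring of 2^32 points the hash ring.

Step 2: Place each storage node on the ring by hashing it.

Step 3: Hash each key onto the ring and walk clockwise; the first node reached stores the key.

Advantages:

Fault tolerance

Suppose Node C goes down. Objects A, B, and D are unaffected; only object C is relocated to Node D. In general, if a server becomes unavailable, the only data affected is the data between that server and the previous server on the ring (the first server met when walking counterclockwise); everything else stays put. In short, if C fails, only the data between B and C is affected, and it moves to D.

Disadvantages:

Summary:

The aim is to migrate as little data as possible when the number of nodes changes.

All storage nodes are placed on a hash ring whose ends are joined; each key is hashed and then stored on the nearest node found clockwise.

When a node joins or leaves, only the next node clockwise on the ring is affected.

Advantages

Adding or removing a node affects only the adjacent node clockwise on the ring; the other nodes are untouched.

Disadvantages

Data distribution depends on where the nodes sit. Because the nodes are not evenly spaced on the ring, the data is not stored evenly either.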
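The ring described above can be sketched in Python as follows. This is a minimal illustration, not a production implementation: the choice of MD5 to place items on the ring, the node names, and the class name HashRing are all assumptions made for this example, and it does not use virtual nodes.

```python
import bisect
import hashlib

RING_SIZE = 2 ** 32  # hash space [0, 2^32 - 1], joined end to end


def ring_hash(value: str) -> int:
    """Map a string to a point on the ring (MD5 is an illustrative choice)."""
    digest = hashlib.md5(value.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % RING_SIZE


class HashRing:
    def __init__(self, nodes=()):
        self._points = []  # sorted ring positions of the nodes
        self._owner = {}   # ring position -> node name
        for node in nodes:
            self.add(node)

    def add(self, node: str) -> None:
        point = ring_hash(node)
        if point in self._owner:
            raise ValueError(f"ring position collision for {node!r}")
        bisect.insort(self._points, point)
        self._owner[point] = node

    def remove(self, node: str) -> None:
        point = ring_hash(node)
        self._points.remove(point)
        del self._owner[point]

    def node_for(self, key: str) -> str:
        """Walk clockwise from the key's position to the first node."""
        if not self._points:
            raise LookupError("ring has no nodes")
        index = bisect.bisect_left(self._points, ring_hash(key))
        # Past the last node, wrap around to the first one (0 = 2^32).
        return self._owner[self._points[index % len(self._points)]]


if __name__ == "__main__":
    keys = [f"key{i}" for i in range(1, 11)]
    ring = HashRing(["node-a", "node-b", "node-c", "node-d"])
    before = {k: ring.node_for(k) for k in keys}
    ring.remove("node-c")
    after = {k: ring.node_for(k) for k in keys}
    print("keys that moved:", [k for k in keys if before[k] != after[k]])
```

Removing a node only relocates the keys that node owned; every other key still finds the same first node clockwise. With so few nodes the ring positions are uneven, which is the skew problem named under the disadvantages.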

10.3 Hash slot algorithm
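As a minimal sketch of the slot computation: Redis Cluster maps a key to one of 16384 slots by taking the CRC16 of the key modulo 16384. The Python below reproduces that with binascii.crc_hqx, ignoring hash tags (the part of a key inside braces). The slot ranges and ports are copied from the cluster output in section 11.2 of these notes, and the names key_slot and master_for are made up for this example.

```python
import binascii

SLOT_COUNT = 16384


def key_slot(key: str) -> int:
    """CRC16 (XMODEM variant) of the key, modulo the number of slots."""
    # binascii.crc_hqx implements CRC-CCITT with polynomial 0x1021;
    # starting from 0 gives the XMODEM variant.
    return binascii.crc_hqx(key.encode("utf-8"), 0) % SLOT_COUNT


# Slot ranges copied from the redis-cli --cluster create output in section 11.2.
SLOT_RANGES = {
    6381: range(0, 5461),       # slots 0 - 5460
    6382: range(5461, 10923),   # slots 5461 - 10922
    6383: range(10923, 16384),  # slots 10923 - 16383
}


def master_for(key: str) -> int:
    """Return the port of the master that owns the key's slot."""
    slot = key_slot(key)
    for port, slots in SLOT_RANGES.items():
        if slot in slots:
            return port
    raise LookupError(f"slot {slot} is not covered")


if __name__ == "__main__":
    print("key1 -> slot", key_slot("key1"), "on master", master_for("key1"))
```

For key1 this gives the same slot the cluster reports in section 11.2 (9189, owned by the master on port 6382). Unlike the modulo scheme, the divisor stays fixed at 16384; when masters are added or removed, slot ranges are reassigned between masters instead of rehashing every key.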

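11. Redis cluster in Docker: master-slave setup and scaling out and in

11.1 Creating six redis containers

To build a cluster with three masters and three slaves, first create six redis containers.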

docker run -d --name redis-node-1 --net host --privileged=true -v /data/redis/share/redis-node-1:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6381

docker run -d --name redis-node-2 --net host --privileged=true -v /data/redis/share/redis-node-2:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6382

docker run -d --name redis-node-3 --net host --privileged=true -v /data/redis/share/redis-node-3:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6383

docker run -d --name redis-node-4 --net host --privileged=true -v /data/redis/share/redis-node-4:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6384

docker run -d --name redis-node-5 --net host --privileged=true -v /data/redis/share/redis-node-5:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6385

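docker run -d --name redis-node-6 --net host --privileged=true -v /data/redis/share/redis-node-6:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6386

Explanation of the command:

- docker run: create and run a Docker container instance

- --name redis-node-6: the container name

- --net host: use the host's network stack (its IP and ports) directly instead of the default bridge network

- --privileged=true: give the container extended, root-level privileges on the host

- -v /data/redis/share/redis-node-6:/data: volume mapping, host path:container path

- redis:6.0.8: the redis image and version tag

- --cluster-enabled yes: enable redis cluster mode

- --appendonly yes: enable AOF persistence

- --port 6386: the redis port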
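Since the six commands differ only in the node number and port, they can be generated rather than typed. The Python below is a small sketch that only builds the command strings; it does not run Docker. The function name redis_node_command and its parameters are made up for this example, with defaults taken from the commands above.

```python
def redis_node_command(index: int, base_port: int = 6380,
                       image: str = "redis:6.0.8",
                       share_dir: str = "/data/redis/share") -> str:
    """Build the docker run command for cluster node number `index`."""
    name = f"redis-node-{index}"
    return (
        f"docker run -d --name {name} --net host --privileged=true "
        f"-v {share_dir}/{name}:/data {image} "
        f"--cluster-enabled yes --appendonly yes --port {base_port + index}"
    )


if __name__ == "__main__":
    # Nodes 1 to 6 listen on ports 6381 to 6386.
    for i in range(1, 7):
        print(redis_node_command(i))
```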

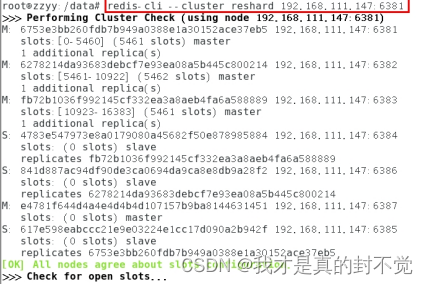

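11.2 Creating the cluster and setting the replica count

Run the following command, which is the first line of the session further below:

redis-cli --cluster create 192.168.56.10:6381 192.168.56.10:6382 192.168.56.10:6383 192.168.56.10:6384 192.168.56.10:6385 192.168.56.10:6386 --cluster-replicas 1

--cluster-replicas 1 creates one slave node for each master.

The output that follows shows that the cluster was created successfully. It also shows that the hash slot algorithm is used, that the three masters split all the slots into three ranges, and which replica belongs to which master. The Adding replica lines are only the initial plan; after the anti-affinity optimization, the replicates lines give the final pairing:

master:6381 slave:6386

master:6382 slave:6384

master:6383 slave:6385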

root@localhost:/data# redis-cli --cluster create 192.168.56.10:6381 192.168.56.10:6382 192.168.56.10:6383 192.168.56.10:6384 192.168.56.10:6385 192.168.56.10:6386 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 192.168.56.10:6385 to 192.168.56.10:6381

Adding replica 192.168.56.10:6386 to 192.168.56.10:6382

Adding replica 192.168.56.10:6384 to 192.168.56.10:6383

>>> Trying to optimize slaves allocation for anti-affinity

[WARNING] Some slaves are in the same host as their master

M: 9c62b58df19039614fc32dc6fa32faa9c90845a6 192.168.56.10:6381

slots:[0-5460] (5461 slots) master

M: 50acd693ad90ecb9957f7923e3fff1731b04fe41 192.168.56.10:6382

slots:[5461-10922] (5462 slots) master

M: ef14ef41e4740cff53699403c15ca4ec0015c008 192.168.56.10:6383

slots:[10923-16383] (5461 slots) master

S: 5564c11b92952c35fbe4002ec5ef4eb9f8b10bb8 192.168.56.10:6384

replicates 50acd693ad90ecb9957f7923e3fff1731b04fe41

S: 40a46efd6c3fbc91d0c551acc2f99a2e31450dd2 192.168.56.10:6385

replicates ef14ef41e4740cff53699403c15ca4ec0015c008

S: 79d85f999d062de627263079baf474f6973fb080 192.168.56.10:6386

replicates 9c62b58df19039614fc32dc6fa32faa9c90845a6

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

>>> Performing Cluster Check (using node 192.168.56.10:6381)

M: 9c62b58df19039614fc32dc6fa32faa9c90845a6 192.168.56.10:6381

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: ef14ef41e4740cff53699403c15ca4ec0015c008 192.168.56.10:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 79d85f999d062de627263079baf474f6973fb080 192.168.56.10:6386

slots: (0 slots) slave

replicates 9c62b58df19039614fc32dc6fa32faa9c90845a6

S: 40a46efd6c3fbc91d0c551acc2f99a2e31450dd2 192.168.56.10:6385

slots: (0 slots) slave

replicates ef14ef41e4740cff53699403c15ca4ec0015c008

M: 50acd693ad90ecb9957f7923e3fff1731b04fe41 192.168.56.10:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 5564c11b92952c35fbe4002ec5ef4eb9f8b10bb8 192.168.56.10:6384

slots: (0 slots) slave

replicates 50acd693ad90ecb9957f7923e3fff1731b04fe41

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

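[OK] All 16384 slots covered.

The cluster is configured successfully, but inserting the pair key1 value1 on node 6381 fails with an error telling us to move to node 6382. Why? Because the hash of key1 modulo 16384 falls in the slot range owned by 6382.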

root@localhost:/data# redis-cli -p 6381

127.0.0.1:6381> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:352

cluster_stats_messages_pong_sent:369

cluster_stats_messages_sent:721

cluster_stats_messages_ping_received:364

cluster_stats_messages_pong_received:352

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:721

127.0.0.1:6381> set key1 value1

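(error) MOVED 9189 192.168.56.10:6382

So we start the Redis client with the -c option (cluster mode), which makes it follow the redirect automatically and store the data on the right node.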

root@localhost:/data# redis-cli -p 6381 -c

127.0.0.1:6381> set key1 value1

-> Redirected to slot [9189] located at 192.168.56.10:6382

OK

Checking the cluster information again shows that master node 6382 now holds one key.

root@localhost:/data# redis-cli --cluster check 192.168.56.10:6381

192.168.56.10:6381 (9c62b58d...) -> 0 keys | 5461 slots | 1 slaves.

192.168.56.10:6383 (ef14ef41...) -> 0 keys | 5461 slots | 1 slaves.

192.168.56.10:6382 (50acd693...) -> 1 keys | 5462 slots | 1 slaves.

[OK] 1 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 192.168.56.10:6381)

M: 9c62b58df19039614fc32dc6fa32faa9c90845a6 192.168.56.10:6381

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: ef14ef41e4740cff53699403c15ca4ec0015c008 192.168.56.10:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 79d85f999d062de627263079baf474f6973fb080 192.168.56.10:6386

slots: (0 slots) slave

replicates 9c62b58df19039614fc32dc6fa32faa9c90845a6

S: 40a46efd6c3fbc91d0c551acc2f99a2e31450dd2 192.168.56.10:6385

slots: (0 slots) slave

replicates ef14ef41e4740cff53699403c15ca4ec0015c008

M: 50acd693ad90ecb9957f7923e3fff1731b04fe41 192.168.56.10:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 5564c11b92952c35fbe4002ec5ef4eb9f8b10bb8 192.168.56.10:6384

slots: (0 slots) slave

replicates 50acd693ad90ecb9957f7923e3fff1731b04fe41

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

Failover and migration

To switch master 6382 over to its replica, first stop master 6382. We open a new terminal window and stop it there:

[root@localhost ~]# docker stop redis-node-2

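redis-node-2

With master 6382 stopped, its replica is promoted to master. Which node serves as the replica of master 2 (6382) depends on your actual allocation, so use whatever your cluster assigned (6384 in this run).

Wait a moment, roughly two seconds in this setup, for the cluster to respond again.

Checking the cluster information again shows that the replica of master 2 has become a master, and the data is still there.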

root@localhost:/data# redis-cli --cluster check 192.168.56.10:6381

Could not connect to Redis at 192.168.56.10:6382: Connection refused

192.168.56.10:6381 (9c62b58d...) -> 0 keys | 5461 slots | 1 slaves.

192.168.56.10:6383 (ef14ef41...) -> 0 keys | 5461 slots | 1 slaves.

192.168.56.10:6384 (5564c11b...) -> 1 keys | 5462 slots | 0 slaves.

[OK] 1 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 192.168.56.10:6381)

M: 9c62b58df19039614fc32dc6fa32faa9c90845a6 192.168.56.10:6381

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: ef14ef41e4740cff53699403c15ca4ec0015c008 192.168.56.10:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 79d85f999d062de627263079baf474f6973fb080 192.168.56.10:6386

slots: (0 slots) slave

replicates 9c62b58df19039614fc32dc6fa32faa9c90845a6

S: 40a46efd6c3fbc91d0c551acc2f99a2e31450dd2 192.168.56.10:6385

slots: (0 slots) slave

replicates ef14ef41e4740cff53699403c15ca4ec0015c008

M: 5564c11b92952c35fbe4002ec5ef4eb9f8b10bb8 192.168.56.10:6384

slots:[5461-10922] (5462 slots) master

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

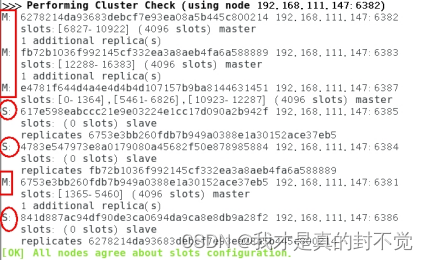

[OK] All 16384 slots covered.

Scaling out and scaling in:

- Scale-out example (adding a master and a replica)

- Create two new nodes, 6387 and 6388, start them, and check that there are now 8 nodes

docker run -d --name redis-node-7 --net host --privileged=true -v /data/redis/share/redis-node-7:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6387

docker run -d --name redis-node-8 --net host --privileged=true -v /data/redis/share/redis-node-8:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6388

docker ps

- Enter the 6387 container

- docker exec -it redis-node-7 /bin/bash

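- Add the new node 6387 (which has no slots yet) to the existing cluster as a master

redis-cli --cluster add-node your-actual-IP:6387 your-actual-IP:6381

6387 is the new node to be added as a master.

6381 is an existing cluster node that acts as the guide: 6387 contacts 6381 to discover the cluster and join it.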

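- Check the cluster, first time

redis-cli --cluster check your-actual-IP:6381

- Reassign slots

Command: redis-cli --cluster reshard IP:port

redis-cli --cluster reshard 192.168.111.147:6381

- Check the cluster, second time

redis-cli --cluster check your-actual-IP:6381

- Notes on slot assignment

Why does 6387 get three separate new ranges while the old nodes' ranges stay contiguous?

Redistributing all slots from scratch would be too expensive, so each of the three existing masters (6381/6382/6383) gives up a share of its slots, about 1365 each and 4096 in total, to the new node 6387.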
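The arithmetic behind those three shares can be sketched as below. This is only an illustration of a proportional split, assuming the largest source rounds up and the others round down; it is not redis-cli's actual code, and the function name `slots_to_move` is hypothetical. The per-master slot counts come from the cluster check output earlier in this walkthrough.

```python
import math

TOTAL_SLOTS = 16384


def slots_to_move(owned: dict, wanted: int) -> dict:
    """Split `wanted` slots across source masters in proportion to what they own.

    Assumption: the largest source rounds up, every other source rounds down.
    """
    total = sum(owned.values())
    ordered = sorted(owned.items(), key=lambda item: item[1], reverse=True)
    plan = {}
    for index, (port, count) in enumerate(ordered):
        share = wanted * count / total
        plan[port] = math.ceil(share) if index == 0 else math.floor(share)
    return plan


owned = {6381: 5461, 6382: 5462, 6383: 5461}
wanted = TOTAL_SLOTS // 4  # four masters once 6387 has joined
plan = slots_to_move(owned, wanted)
print(wanted, plan, sum(plan.values()))
```

Because each old master only gives up a slice from the front of its own range, the new node ends up with three disjoint ranges while the old ranges stay contiguous.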

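- Assign replica 6388 to master 6387

Command: redis-cli --cluster add-node ip:new-replica-port ip:new-master-port --cluster-slave --cluster-master-id new-master-node-ID

redis-cli --cluster add-node 192.168.111.147:6388 192.168.111.147:6387 --cluster-slave --cluster-master-id e4781f644d4a4e4d4b4d107157b9ba8144631451

(e4781f644d4a4e4d4b4d107157b9ba8144631451 is the node ID of 6387 in this run; use the ID from your own cluster.)

- Check the cluster, third time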

redis-cli --cluster check 192.168.111.147:6382

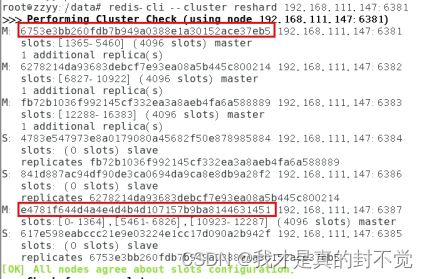

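- Scale-in example (removing a master and its replica)

- Goal: take 6387 and 6388 offline

- Check the cluster, first time, to get the node ID of 6388

redis-cli --cluster check 192.168.111.147:6382

- Remove replica 6388 (the replica of master 4) from the cluster

Command: redis-cli --cluster del-node ip:replica-port node-ID-of-6388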

redis-cli --cluster del-node 192.168.111.147:6388 5d149074b7e57b802287d1797a874ed7a1a284a8

redis-cli --cluster check 192.168.111.147:6382

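The check shows that 6388 has been removed and only 7 nodes remain.

- Empty the slots of 6387 and reassign them; in this example all freed slots go to 6381

redis-cli --cluster reshard 192.168.111.147:6381

- Check the cluster, second time

redis-cli --cluster check 192.168.111.147:6381

All 4096 slots are assigned to 6381, which now holds 8192 slots. Giving everything to 6381 takes a single reshard; spreading the slots evenly over the three masters would mean entering the reshard three times.

- Remove 6387

Command: redis-cli --cluster del-node ip:port node-ID-of-6387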

redis-cli --cluster del-node 192.168.111.147:6387 e4781f644d4a4e4d4b4d107157b9ba8144631451

- Check the cluster, third time

redis-cli --cluster check 192.168.111.147:6381

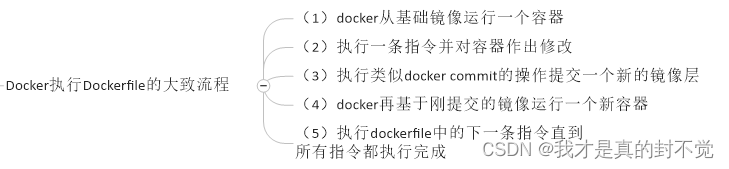

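12. Dockerfile

12.1 Basics

12.2 Overall workflow

12.3 How Docker executes a Dockerfile

12.4 Common Dockerfile reserved-word instructions

The Dockerfile used to build the tomcat image serves as the example here: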

tomcat/Dockerfile at master · docker-library/tomcat · GitHub

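Besides the ones above, there are a few more reserved words; refer to the figure below when you need them.

12.5 Custom CentOS image with vim and ifconfig

12.5.1 Download the JDK archive and put it in the myfile directory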

[root@localhost myfile]# ls

Dockerfile jdk-8u171-linux-x64.tar.gz

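12.5.2 Create and edit the Dockerfile

Capitalize the first letter of the file name (by convention) and put the following content in it.

I ran into two errors: one was resolved by switching the base image to centos7, the other (a fastestmirror error) by changing the fastestmirror configuration.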

FROM centos:centos7

MAINTAINER zzyy<zzyybs@126.com>

ENV MYPATH /usr/local

WORKDIR $MYPATH

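# Install the vim editor

RUN yum -y install vim

# Install net-tools, which provides ifconfig for checking the network IP

RUN yum -y install net-tools

# Install the library dependencies for Java 8

RUN yum -y install glibc.i686

RUN mkdir /usr/local/java

# ADD takes a path relative to the build context; it copies jdk-8u171-linux-x64.tar.gz into the image and unpacks it, so the archive must sit in the same directory as the Dockerfile

ADD jdk-8u171-linux-x64.tar.gz /usr/local/java/

# Configure the Java environment variables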

ENV JAVA_HOME /usr/local/java/jdk1.8.0_171

ENV JRE_HOME $JAVA_HOME/jre

ENV CLASSPATH $JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib:$CLASSPATH

ENV PATH $JAVA_HOME/bin:$PATH

EXPOSE 80

CMD echo $MYPATH

CMD echo "success--------------ok"

CMD /bin/bash
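One detail of the Dockerfile above is easy to miss: it contains three CMD lines, and Docker only honours the last CMD in a Dockerfile. The sketch below shows that rule on the tail of this file. It is a simplified illustration that ignores line continuations and the JSON array form, and the function name `effective_cmd` is hypothetical.

```python
DOCKERFILE_TAIL = """\
EXPOSE 80
CMD echo $MYPATH
CMD echo "success--------------ok"
CMD /bin/bash
"""


def effective_cmd(dockerfile_text: str):
    """Return the argument of the last CMD instruction, or None if there is none."""
    last = None
    for line in dockerfile_text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # skip blank lines and comments
        instruction, _, argument = stripped.partition(" ")
        if instruction.upper() == "CMD":
            last = argument.strip()  # a later CMD replaces an earlier one
    return last


print(effective_cmd(DOCKERFILE_TAIL))
```

So a container started from this image drops into /bin/bash, and the two echo commands never run.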

12.5.3 Build the image

docker build -t centos7withjdk:1.1 .

12.5.4 Create and enter the container

Running the following commands without errors shows that the image built from the Dockerfile works:

[root@d48b39ecd71a local]# pwd

/usr/local

[root@d48b39ecd71a local]# vim jj.txt

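12.5.5 Which lifecycle stage each instruction belongs to

13. Dangling images

13.1 Definition

The name sounds complicated, but it simply means an image that was built by mistake and serves no purpose.

Definition: an image whose repository name and tag are both <none>, commonly called a dangling image.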
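The definition can be expressed as a small filter over `docker images` output. This is a sketch only: the sample rows are copied from the listing in the hands-on part of this section, and the function name `dangling_image_ids` is hypothetical. In practice `docker image ls -f dangling=true` does this for you.

```python
IMAGES_OUTPUT = """\
REPOSITORY TAG IMAGE ID CREATED SIZE
<none> <none> 741b4a129fba 7 seconds ago 204MB
centos7withjdk 1.1 a6fe45f231f9 38 minutes ago 1.23GB
<none> <none> 6792826dbf60 About an hour ago 231MB
tomcat latest fb5657adc892 8 months ago 680MB
"""


def dangling_image_ids(images_output: str) -> list:
    """Collect the IDs of rows whose repository and tag are both <none>."""
    ids = []
    for line in images_output.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 3:
            continue
        repository, tag, image_id = fields[:3]
        if repository == "<none>" and tag == "<none>":
            ids.append(image_id)
    return ids


print(dangling_image_ids(IMAGES_OUTPUT))
```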

13.2 Steps to build a dangling image

13.3 Command to list them

docker image ls -f dangling=true

13.4 Command to delete them

docker image prune

Hands-on

[root@localhost myfile]# mkdir mystery

[root@localhost myfile]# ls

Dockerfile jdk-8u171-linux-x64.tar.gz mystery

[root@localhost myfile]# cd mystery/

[root@localhost mystery]# touch Dockerfile

[root@localhost mystery]# vi Dockerfile

from centos:centos7

CMD echo 'action is success'

After the build, we can see that a dangling image has been created:

[root@localhost mystery]# docker build .

Sending build context to Docker daemon 2.048kB

Step 1/2 : from centos:centos7

---> eeb6ee3f44bd

Step 2/2 : CMD echo 'action is success'

---> Running in 52c47c594b76

Removing intermediate container 52c47c594b76

---> 741b4a129fba

Successfully built 741b4a129fba

[root@localhost mystery]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

<none> <none> 741b4a129fba 7 seconds ago 204MB

centos7withjdk 1.1 a6fe45f231f9 38 minutes ago 1.23GB

<none> <none> 6792826dbf60 About an hour ago 231MB

ubuntu/withvim 1.1 c638056f7000 3 weeks ago 162MB

redis/rediscontainer 0.5 3be94ff46e45 3 weeks ago 109MB

tomcat latest fb5657adc892 8 months ago 680MB

14. Deploying and running a microservice project with Docker

14.1 Create the microservice project

14.2 Add the dependencies

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.5.6</version>

<relativePath/>

</parent>

<groupId>com.atguigu.docker</groupId>

<artifactId>docker_boot</artifactId>

<version>0.0.1-SNAPSHOT</version>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

<junit.version>4.12</junit.version>

<log4j.version>1.2.17</log4j.version>

<lombok.version>1.16.18</lombok.version>

<mysql.version>5.1.47</mysql.version>

<druid.version>1.1.16</druid.version>

<mapper.version>4.1.5</mapper.version>

<mybatis.spring.boot.version>1.3.0</mybatis.spring.boot.version>

</properties>

<dependencies>

<!-- Spring Boot common dependency modules -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

</dependency>

<!--test-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-resources-plugin</artifactId>

<version>3.1.0</version>

</plugin>

</plugins>

</build>

</project>

14.3 Add an endpoint for testing

package com.robert.docker_boot.controller;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

import java.util.UUID;

@RestController

public class TestController {

@Value("${server.port}")

private String port;

@RequestMapping("/test/helloworld")

public String helloWorld(){

return "i will be deploy in docker port:"+port+" hello world user"+ UUID.randomUUID();

}

}

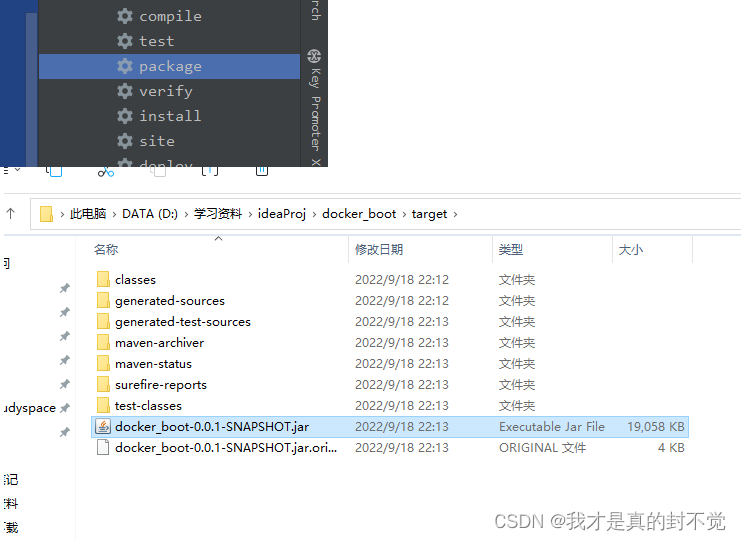

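14.4 Package

After packaging, copy the jar into the directory that contains the Dockerfile.

14.5 Write the Dockerfile

Its content is shown below. Reading it with the reserved-word meanings and lifecycle stages covered earlier:

It uses the java:8 image as the parent image, with Robert as the maintainer.

It adds the microservice jar to the image and renames it to firsrBootDockerDemo.jar.

The ENTRYPOINT line gives the command executed when the container runs, which starts the microservice jar, and the EXPOSE line declares port 6001.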

FROM java:8

MAINTAINER Robert

VOLUME /tmp

ADD docker_boot-0.0.1-SNAPSHOT.jar firsrBootDockerDemo.jar

ENTRYPOINT ["java","-jar","/firsrBootDockerDemo.jar"]

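EXPOSE 6001

14.6 Run the image to create a container

The service is published on host port 6002, mapped to container port 6001:

docker run -it -p 6002:6001 f691c81ecc2a bash

14.7 Test

The test succeeds: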

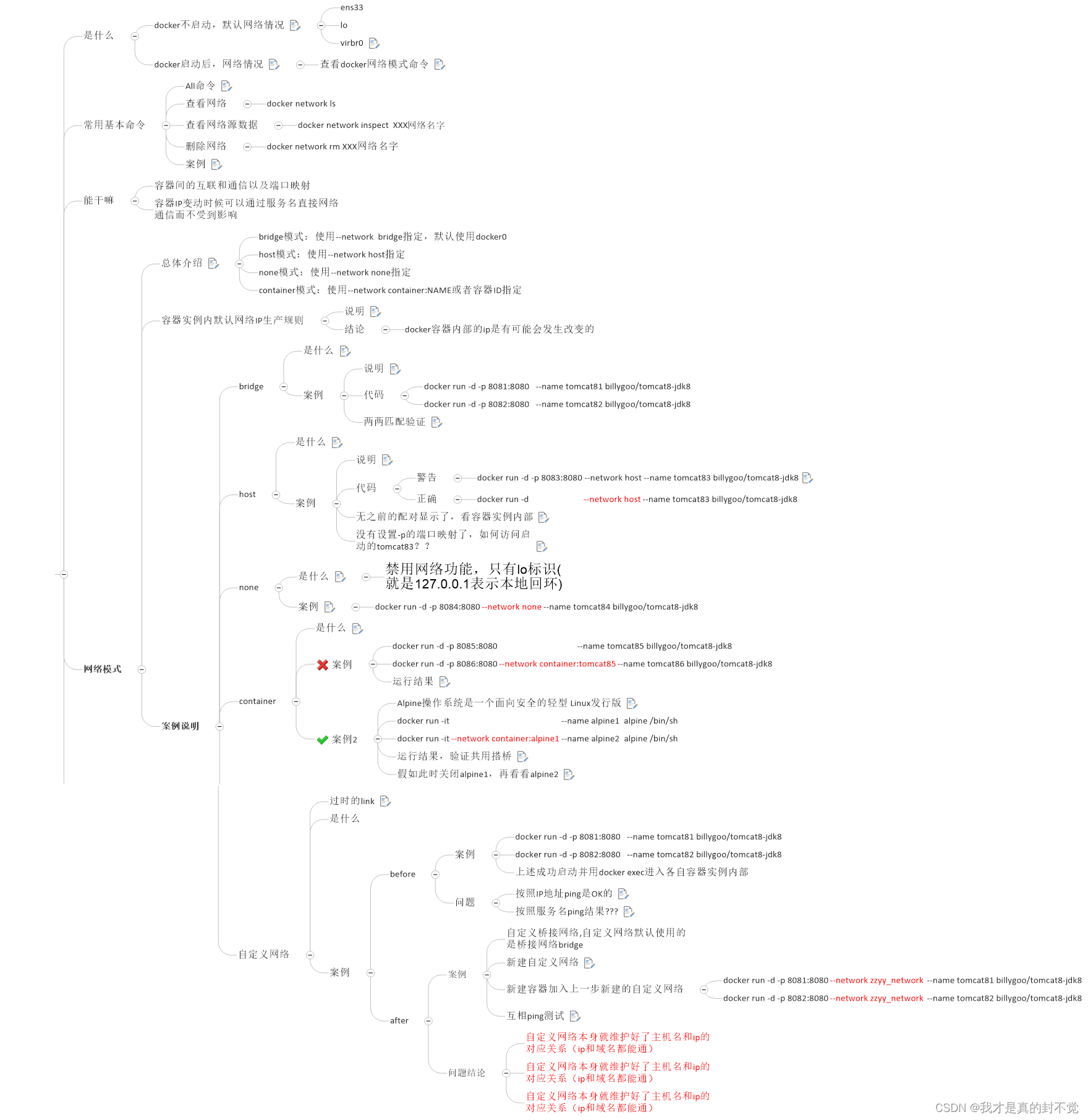
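The same check can be scripted instead of done in a browser. The sketch below assumes the `-p 6002:6001` mapping and the `/test/helloworld` path from this section. The default host `localhost` is an assumption, so substitute the address of your Docker host; the helper names are hypothetical.

```python
from urllib.request import urlopen


def parse_port_mapping(mapping: str) -> tuple:
    """Split a `-p host:container` value into (host_port, container_port)."""
    host_port, container_port = mapping.split(":")
    return int(host_port), int(container_port)


def endpoint_url(mapping: str, host: str = "localhost") -> str:
    """Build the URL a client outside the container must use: the host port."""
    host_port, _ = parse_port_mapping(mapping)
    return f"http://{host}:{host_port}/test/helloworld"


def call_endpoint(mapping: str, host: str = "localhost") -> str:
    """Fetch the endpoint; this only works while the container is running."""
    with urlopen(endpoint_url(mapping, host), timeout=5) as response:
        return response.read().decode()


print(endpoint_url("6002:6001"))
```

The point of the mapping is that clients use the left-hand port (6002), while the application inside the container keeps listening on the right-hand one (6001).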

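15. Docker network

When running an image to create a container, add --network followed by the network mode to specify the network.

Docker really has just four modes:

bridge: the default for networks that Docker creates. Each new container is given the next free IP address on the bridge, along with its own set of network settings.

host: follows the host machine; the container uses the host's IP and network settings.

none: no IP and no network setup; network interfaces and the like have to be created manually. Rarely needed.

container: shares the IP and network settings of another, specified container. When that container goes down, this container's network settings stop working too.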
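How bridge mode hands out addresses can be pictured with Python's `ipaddress` module. This is an illustration only, assuming the commonly seen default bridge subnet 172.17.0.0/16 with the first host address used as the gateway; the real addresses depend on your Docker configuration and on which addresses are currently free. The function name `bridge_addresses` is hypothetical.

```python
import ipaddress
from itertools import islice


def bridge_addresses(subnet: str = "172.17.0.0/16", containers: int = 3):
    """Return (gateway, [container IPs]) taken in ascending order from the subnet."""
    network = ipaddress.ip_network(subnet)
    hosts = iter(network.hosts())
    gateway = next(hosts)  # first usable address acts as the bridge gateway
    assigned = [str(ip) for ip in islice(hosts, containers)]
    return str(gateway), assigned


gateway, container_ips = bridge_addresses()
print(gateway, container_ips)
```

In host mode there is no such allocation at all, since the container simply reuses the host's network stack.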
