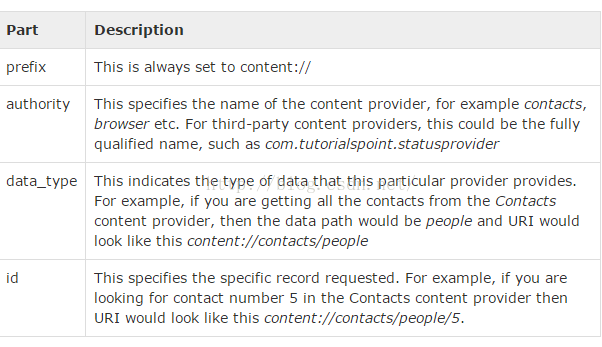

<prefix>://<authority>/<data_type>/<id>

Create Content Provider

This involves number of simple steps to create your own content provider.

-

First of all you need to create a Content Provider class that extends theContentProviderbaseclass.

-

Second, you need to define your content provider URI address which will be used to access the content.

-

Next you will need to create your own database to keep the content. Usually, Android uses SQLite database and framework needs to overrideonCreate() method which will use SQLite Open Helper method to create or open the provider's database. When your application is launched, theonCreate() handler of each of its Content Providers is called on the main application thread.

-

Next you will have to implement Content Provider queries to perform different database specific operations.

-

Finally register your Content Provider in your activity file using <provider> tag.

1232

1232

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?