This continues the theory part I posted at noon.

```matlab
clc;
clear;

% Hypothesis: h(x) = theta0*x0 + theta1*x1 + ..., with x0 = 1
% (sita(1) holds theta0, sita(2) holds theta1)
x = [1, 1.15;
     1, 1.9;
     1, 3.06;
     1, 4.66;
     1, 6.84;
     1, 7.95];
y = [1.37, 2.4, 3.02, 3.06, 4.22, 5.42];

subplot(1,2,1);
plot(x(:,2), y);
hold on;

% maximum number of iterations
loop_max = 10000;
% convergence tolerance
epsilon = 0.0001;
% step size (learning rate)
alpha = 0.001;

lastsita0 = 0;
lastsita1 = 0;
m = size(x,1);
sita = [0, 0];
count = 0;
finish = 0;

% set up the cost plot once instead of on every iteration
subplot(1,2,2);
hold on;
xlabel('Number of iterations');
ylabel('LMS cost');

n = input('choose the method of gradient descent:1.incremental gradient descent 2.batch gradient descent\n');

switch n
    case 1
        % incremental gradient descent: update the parameters after every sample
        while count < loop_max
            count = count + 1;
            for i = 1:m
                err = sita(1)*x(i,1) + sita(2)*x(i,2) - y(i);
                sita(1) = sita(1) - alpha*err*x(i,1);
                sita(2) = sita(2) - alpha*err*x(i,2);
            end
            % check whether we need to keep iterating
            if abs(sita(1) - lastsita0) < epsilon && abs(sita(2) - lastsita1) < epsilon
                finish = 1;
            else
                lastsita0 = sita(1);
                lastsita1 = sita(2);
                % LMS cost over all samples: J = 1/2 * sum of squared residuals
                J = 0.5*sum((x*sita' - y').^2);
                line(count, J, 'Marker', '.');
            end
            if finish == 1
                break;
            end
        end % end of while

    case 2
        % batch gradient descent: sum the gradient over all samples first,
        % then update both parameters simultaneously from the same old values
        while count < loop_max
            count = count + 1;
            grad0 = 0;
            grad1 = 0;
            for i = 1:m
                err = sita(1)*x(i,1) + sita(2)*x(i,2) - y(i);
                grad0 = grad0 + err*x(i,1);
                grad1 = grad1 + err*x(i,2);
            end
            sita(1) = sita(1) - alpha*grad0;
            sita(2) = sita(2) - alpha*grad1;
            % check whether we need to keep iterating
            if abs(sita(1) - lastsita0) < epsilon && abs(sita(2) - lastsita1) < epsilon
                finish = 1;
            else
                lastsita0 = sita(1);
                lastsita1 = sita(2);
                % LMS cost over all samples: J = 1/2 * sum of squared residuals
                J = 0.5*sum((x*sita' - y').^2);
                line(count, J, 'Marker', '.');
            end
            if finish == 1
                break;
            end
        end % end of while

    otherwise
        error('Please enter 1 or 2.');
end % end of switch

fprintf('%d iterations, sita = [%.4f, %.4f]\n', count, sita(1), sita(2));

% draw the fitted line over the data
subplot(1,2,1);
plot(x(:,2), sita(1) + sita(2)*x(:,2), 'r');
```

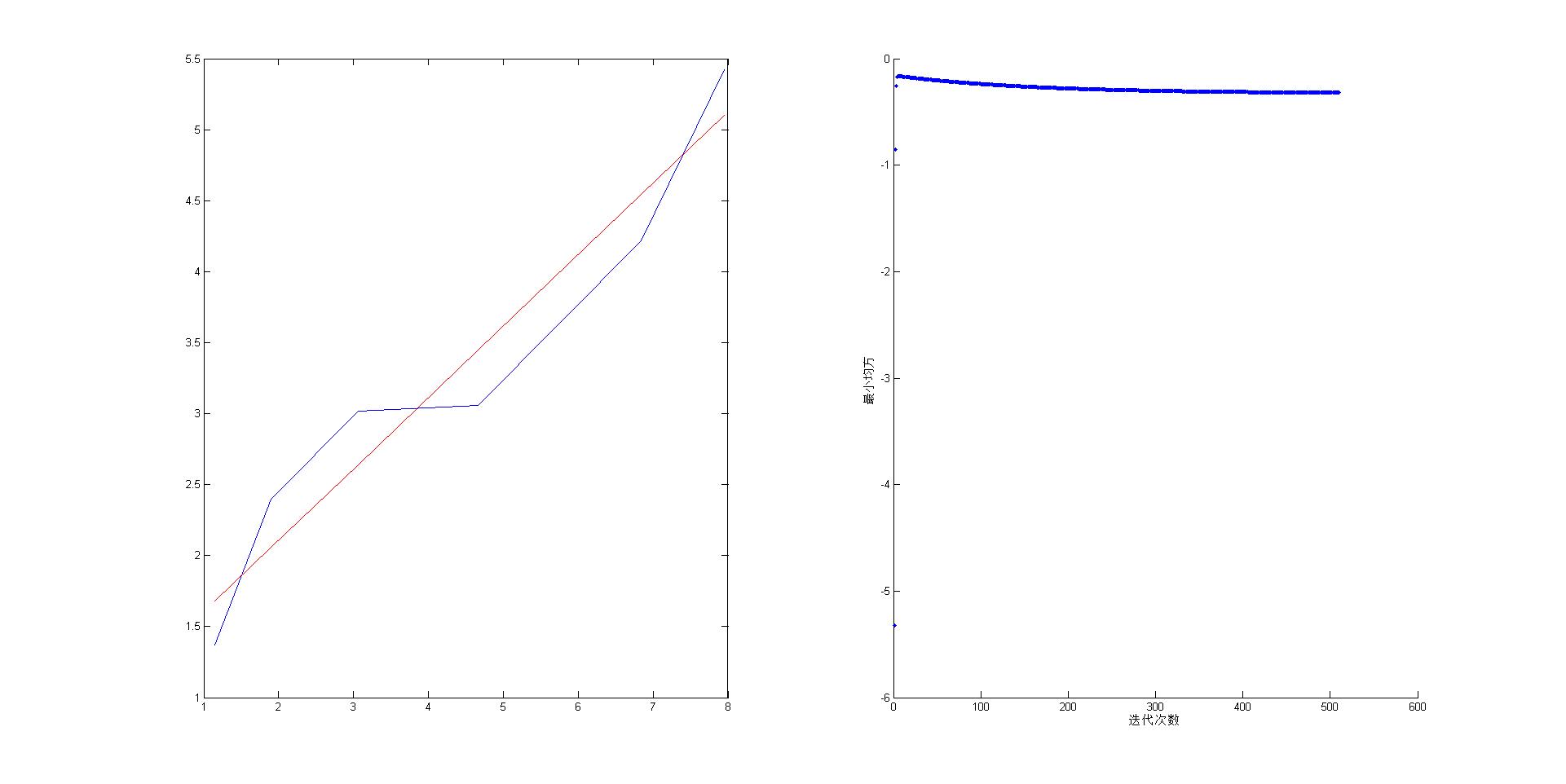

The plan is to use linear regression to fit y = 1 + 0.5x.

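I used both incremental gradient descent and batch gradient descent to find the minimum of the LMS cost. Ideally, the result would of course be: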

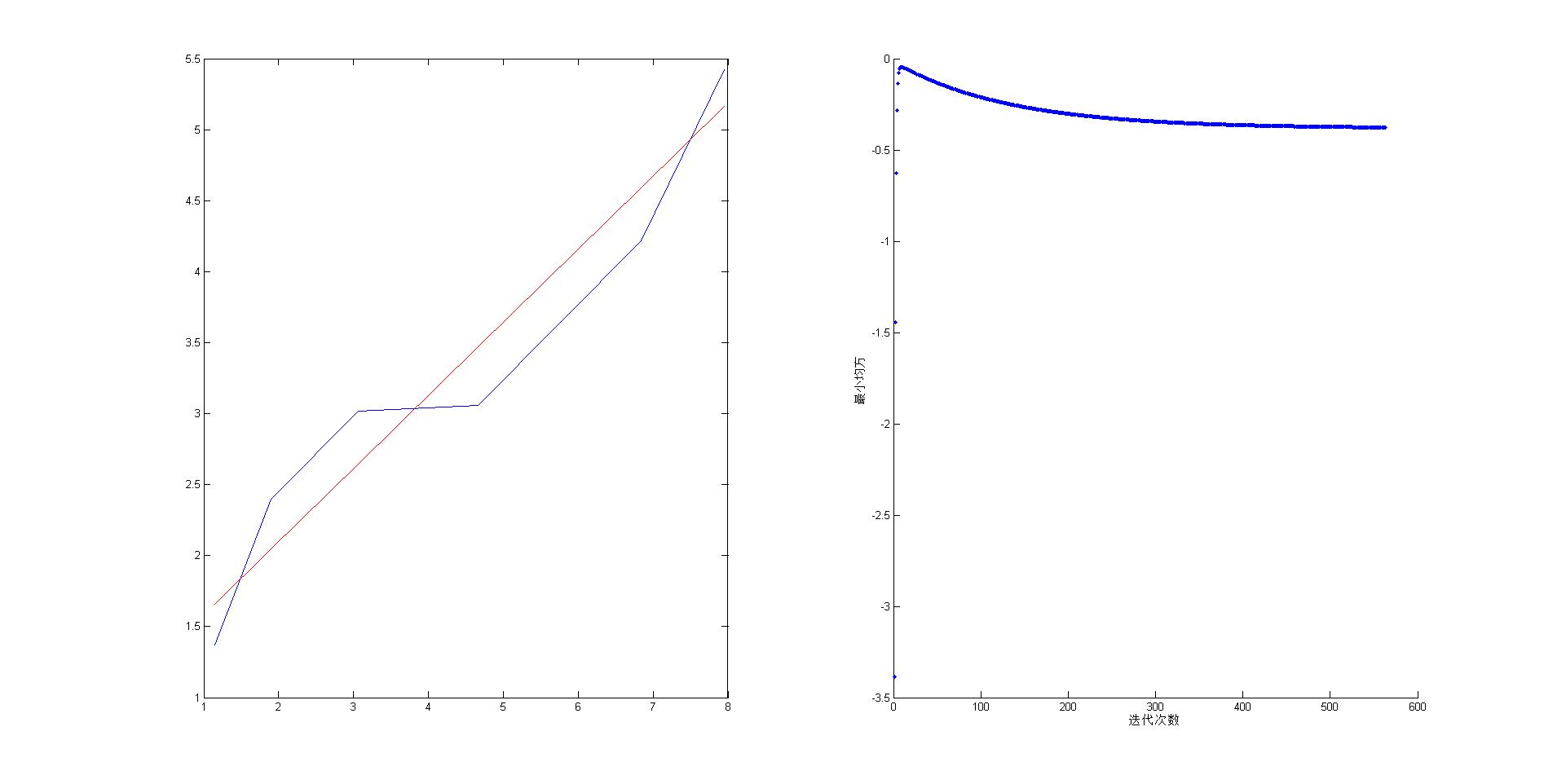
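For reference, the exact minimizer of the LMS cost on these six points does not need iteration at all: it solves the normal equations $X^T X\theta = X^T y$. The sketch below assumes the same data as the script, with `y` reshaped into a column, and uses MATLAB's backslash operator, which solves the least-squares problem without forming `inv(X'*X)`. Because the samples are noisy, this minimizer is not exactly (1, 0.5).

```matlab
% Exact minimizer of the LMS cost via the normal equations (X'X) theta = X'y.
% Same data as the script above; the first column is x0 = 1 (intercept).
X = [1 1.15; 1 1.9; 1 3.06; 1 4.66; 1 6.84; 1 7.95];
y = [1.37; 2.4; 3.02; 3.06; 4.22; 5.42];   % column vector here

% Backslash solves the least-squares problem directly.
theta_ls = X \ y;

% LMS cost J(theta) = 1/2 * sum of squared residuals, at the minimum.
J_ls = 0.5 * sum((X*theta_ls - y).^2);

fprintf('theta0 = %.4f, theta1 = %.4f, J = %.4f\n', theta_ls(1), theta_ls(2), J_ls);
```

This gives roughly θ0 ≈ 1.12 and θ1 ≈ 0.50, a useful target for judging the gradient-descent runs below.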

Step size 0.005, incremental gradient descent:

564 iterations, result θ0 = 1.0664, θ1 = 0.5154

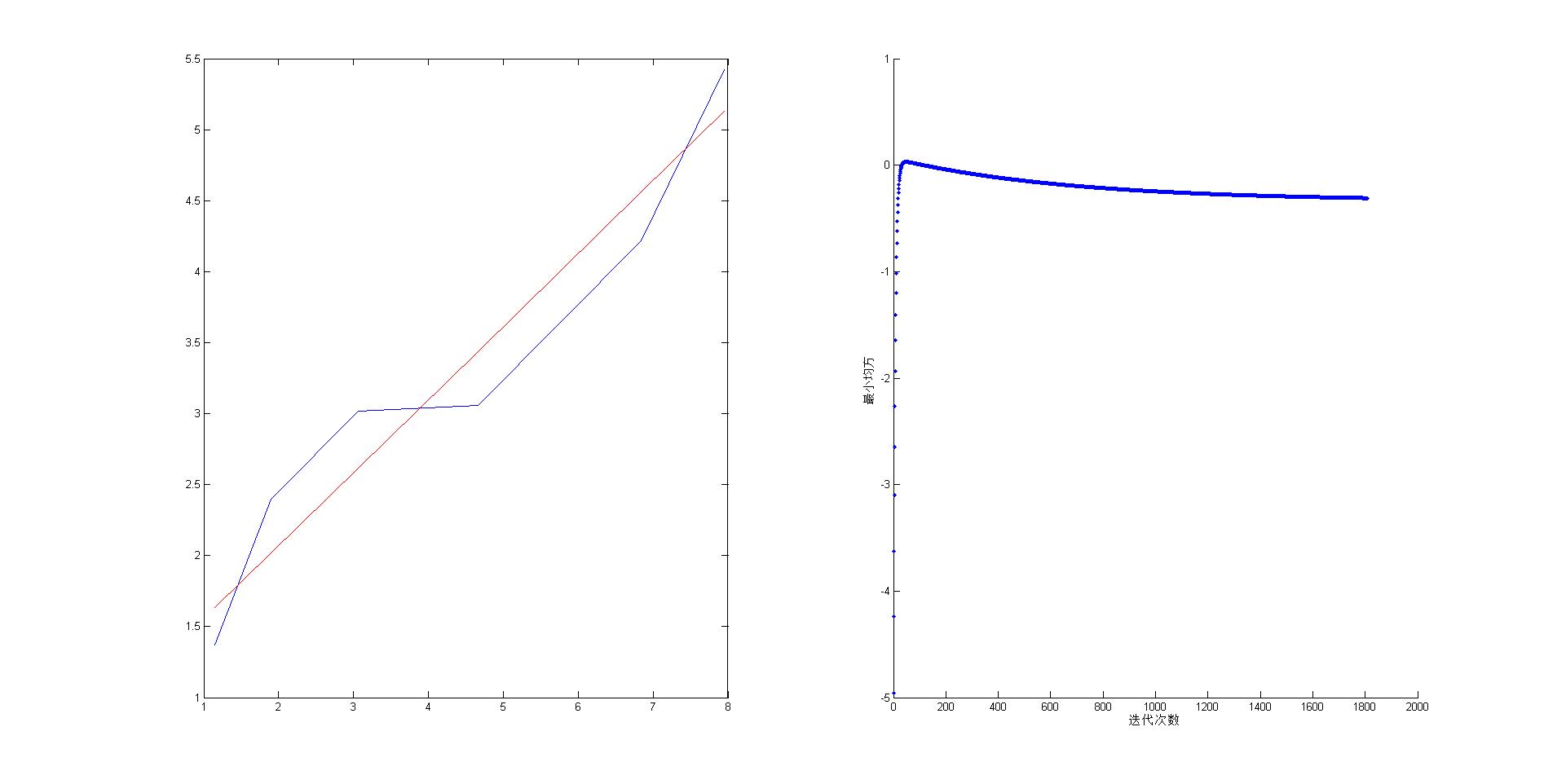

Step size 0.001, incremental gradient descent:

1809 iterations, result θ0 = 1.0439, θ1 = 0.5140

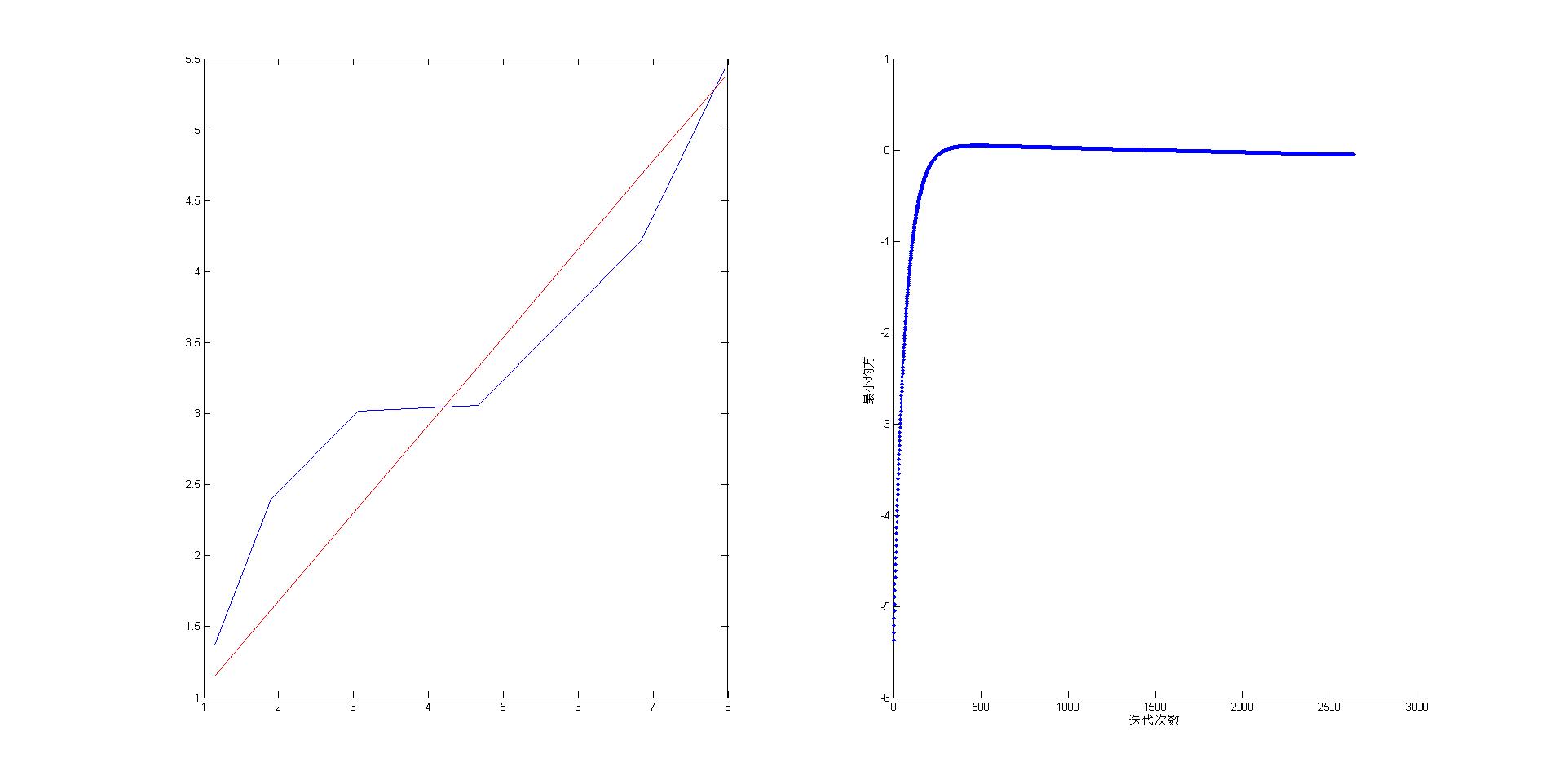

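Step size 0.0001, incremental gradient descent:

2633 iterations, result θ0 = 0.4413, θ1 = 0.6196. The step size is too small and the result is poor, most likely because the stopping test only looks at how much the parameters change per iteration: with α this small, each change is already on the order of ε = 0.0001 while the fit is still far from converged, so the loop stops early.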
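One way to check why the α = 0.0001 run ends up so far from the fit is to evaluate the full LMS gradient at the reported stopping point (0.4413, 0.6196). To first order in α, one epoch of incremental updates moves θ by about α times this gradient. The sketch below is a diagnostic under that approximation, not part of the original script; `theta_stop`, `g` and `step` are names introduced here for illustration.

```matlab
% Diagnose the alpha = 0.0001 run at the reported stopping point.
X = [1 1.15; 1 1.9; 1 3.06; 1 4.66; 1 6.84; 1 7.95];
y = [1.37; 2.4; 3.02; 3.06; 4.22; 5.42];
alpha = 0.0001;
epsilon = 0.0001;

theta_stop = [0.4413; 0.6196];     % result reported above for alpha = 0.0001
g = X' * (X*theta_stop - y);       % gradient of J(theta) = 1/2*sum(residual.^2)
step = alpha * g;                  % approx. parameter change over one epoch

J_stop = 0.5 * sum((X*theta_stop - y).^2);
theta_ls = X \ y;                  % exact least-squares minimizer, for reference
J_ls = 0.5 * sum((X*theta_ls - y).^2);

fprintf('gradient = [%.4f %.4f], alpha*gradient = [%.2e %.2e]\n', g, step);
fprintf('J at stop = %.4f, minimum J = %.4f\n', J_stop, J_ls);
```

The θ0 component of the gradient is still about -1, far from zero, yet α times it is only about 1e-4, the same size as ε, so the parameter-change test passes while the cost is roughly twice its minimum. Testing the gradient norm, or dividing the parameter change by α before comparing it with ε, would make the stopping rule independent of the step size.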

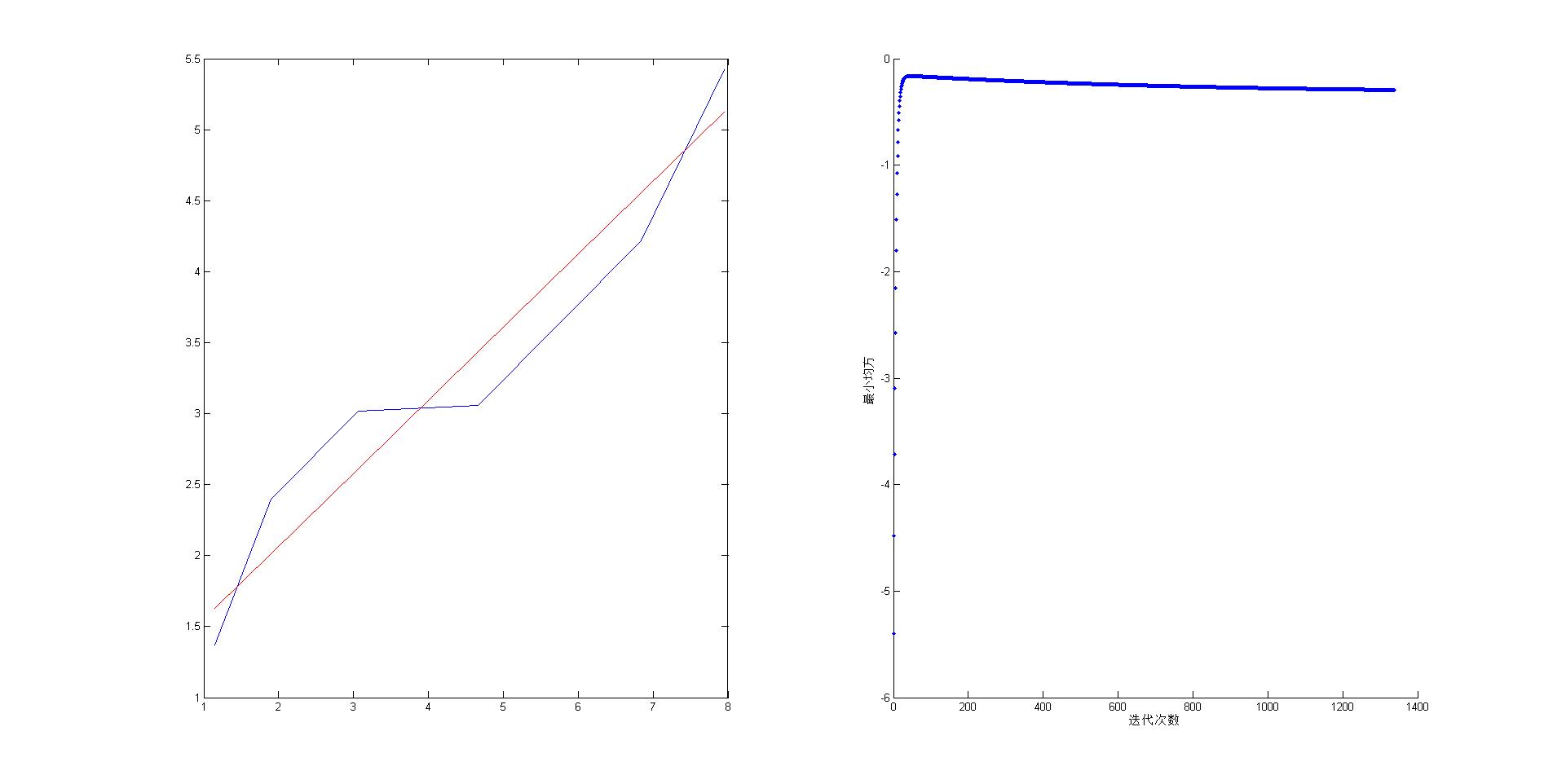

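Step size 0.001, batch gradient descent:

511 iterations, result θ0 = 1.1022, θ1 = 0.5029

Step size 0.005, batch gradient descent:

1340 iterations, result θ0 = 1.039, θ1 = 0.5140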
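Batch gradient descent can also be written in vectorized form, which makes the simultaneous update of θ0 and θ1 explicit. This is a sketch, not the author's script: it assumes the same data and α = 0.005, and swaps in a stopping rule on the gradient norm with a hypothetical tolerance `tol`, so the stopping point no longer depends on α.

```matlab
% Vectorized batch gradient descent on the same data (a sketch).
X = [1 1.15; 1 1.9; 1 3.06; 1 4.66; 1 6.84; 1 7.95];
y = [1.37; 2.4; 3.02; 3.06; 4.22; 5.42];

alpha = 0.005;       % step size, one of the values tried above
tol = 1e-6;          % hypothetical tolerance on the gradient norm
loop_max = 10000;

theta = [0; 0];
for count = 1:loop_max
    grad = X' * (X*theta - y);     % gradient summed over all m samples
    if norm(grad) < tol
        break;                     % gradient is (numerically) zero: converged
    end
    theta = theta - alpha * grad;  % theta0 and theta1 updated from the same gradient
end

fprintf('%d iterations, theta0 = %.4f, theta1 = %.4f\n', count, theta(1), theta(2));
```

Because the loop only stops once the gradient is close to zero, the result should agree with the normal-equation solution `X \ y` to several decimal places.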

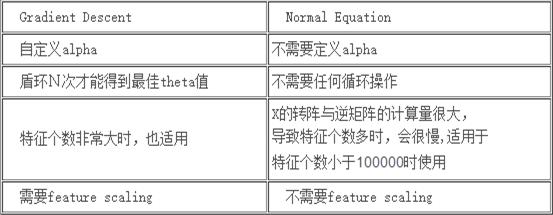

Finally, let me also post the difference between batch gradient descent and incremental gradient descent.
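In formulas, using the hypothesis from the code comment, $h_\theta(x) = \theta_0 x_0 + \theta_1 x_1$ with $x_0 = 1$, both methods minimize the same LMS cost; they differ only in how often θ is updated. The rules below are the standard ones, written the way the script applies them (no $1/m$ factor):

$$
J(\theta) = \frac{1}{2}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2
$$

Batch gradient descent: each step uses all $m$ samples, and every $\theta_j$ is updated from the same old θ:

$$
\theta_j := \theta_j - \alpha \sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)}, \qquad j = 0, 1
$$

Incremental gradient descent: θ is updated after every single sample, for $i = 1, \dots, m$:

$$
\theta_j := \theta_j - \alpha \left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)}, \qquad j = 0, 1
$$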
