Linux 内核系列文章

Linux 内核设计与实现

深入理解 Linux 内核

Linux 设备驱动程序

Linux设备驱动开发详解

文章目录

一、前言

本章主要用来摘录《Linux 内核设计与实现》一书中学习知识点,其基于 Linux 2.6.34 。

二、进程管理

1、task_struct

// include/linux/sched.h

struct task_struct {

volatile long state; /* -1 unrunnable, 0 runnable, >0 stopped */

void *stack;

atomic_t usage;

unsigned int flags; /* per process flags, defined below */

unsigned int ptrace;

int lock_depth; /* BKL lock depth */

#ifdef CONFIG_SMP

#ifdef __ARCH_WANT_UNLOCKED_CTXSW

int oncpu;

#endif

#endif

int prio, static_prio, normal_prio;

unsigned int rt_priority;

const struct sched_class *sched_class;

struct sched_entity se;

struct sched_rt_entity rt;

#ifdef CONFIG_PREEMPT_NOTIFIERS

/* list of struct preempt_notifier: */

struct hlist_head preempt_notifiers;

#endif

/*

* fpu_counter contains the number of consecutive context switches

* that the FPU is used. If this is over a threshold, the lazy fpu

* saving becomes unlazy to save the trap. This is an unsigned char

* so that after 256 times the counter wraps and the behavior turns

* lazy again; this to deal with bursty apps that only use FPU for

* a short time

*/

unsigned char fpu_counter;

#ifdef CONFIG_BLK_DEV_IO_TRACE

unsigned int btrace_seq;

#endif

unsigned int policy;

cpumask_t cpus_allowed;

#ifdef CONFIG_TREE_PREEMPT_RCU

int rcu_read_lock_nesting;

char rcu_read_unlock_special;

struct rcu_node *rcu_blocked_node;

struct list_head rcu_node_entry;

#endif /* #ifdef CONFIG_TREE_PREEMPT_RCU */

#if defined(CONFIG_SCHEDSTATS) || defined(CONFIG_TASK_DELAY_ACCT)

struct sched_info sched_info;

#endif

struct list_head tasks;

struct plist_node pushable_tasks;

struct mm_struct *mm, *active_mm;

#if defined(SPLIT_RSS_COUNTING)

struct task_rss_stat rss_stat;

#endif

/* task state */

int exit_state;

int exit_code, exit_signal;

int pdeath_signal; /* The signal sent when the parent dies */

/* ??? */

unsigned int personality;

unsigned did_exec:1;

unsigned in_execve:1; /* Tell the LSMs that the process is doing an

* execve */

unsigned in_iowait:1;

/* Revert to default priority/policy when forking */

unsigned sched_reset_on_fork:1;

pid_t pid;

pid_t tgid;

#ifdef CONFIG_CC_STACKPROTECTOR

/* Canary value for the -fstack-protector gcc feature */

unsigned long stack_canary;

#endif

/*

* pointers to (original) parent process, youngest child, younger sibling,

* older sibling, respectively. (p->father can be replaced with

* p->real_parent->pid)

*/

struct task_struct *real_parent; /* real parent process */

struct task_struct *parent; /* recipient of SIGCHLD, wait4() reports */

/*

* children/sibling forms the list of my natural children

*/

struct list_head children; /* list of my children */

struct list_head sibling; /* linkage in my parent's children list */

struct task_struct *group_leader; /* threadgroup leader */

/*

* ptraced is the list of tasks this task is using ptrace on.

* This includes both natural children and PTRACE_ATTACH targets.

* p->ptrace_entry is p's link on the p->parent->ptraced list.

*/

struct list_head ptraced;

struct list_head ptrace_entry;

/*

* This is the tracer handle for the ptrace BTS extension.

* This field actually belongs to the ptracer task.

*/

struct bts_context *bts;

/* PID/PID hash table linkage. */

struct pid_link pids[PIDTYPE_MAX];

struct list_head thread_group;

struct completion *vfork_done; /* for vfork() */

int __user *set_child_tid; /* CLONE_CHILD_SETTID */

int __user *clear_child_tid; /* CLONE_CHILD_CLEARTID */

cputime_t utime, stime, utimescaled, stimescaled;

cputime_t gtime;

#ifndef CONFIG_VIRT_CPU_ACCOUNTING

cputime_t prev_utime, prev_stime;

#endif

unsigned long nvcsw, nivcsw; /* context switch counts */

struct timespec start_time; /* monotonic time */

struct timespec real_start_time; /* boot based time */

/* mm fault and swap info: this can arguably be seen as either mm-specific or thread-specific */

unsigned long min_flt, maj_flt;

struct task_cputime cputime_expires;

struct list_head cpu_timers[3];

/* process credentials */

const struct cred *real_cred; /* objective and real subjective task

* credentials (COW) */

const struct cred *cred; /* effective (overridable) subjective task

* credentials (COW) */

struct mutex cred_guard_mutex; /* guard against foreign influences on

* credential calculations

* (notably. ptrace) */

struct cred *replacement_session_keyring; /* for KEYCTL_SESSION_TO_PARENT */

char comm[TASK_COMM_LEN]; /* executable name excluding path

- access with [gs]et_task_comm (which lock

it with task_lock())

- initialized normally by setup_new_exec */

/* file system info */

int link_count, total_link_count;

#ifdef CONFIG_SYSVIPC

/* ipc stuff */

struct sysv_sem sysvsem;

#endif

#ifdef CONFIG_DETECT_HUNG_TASK

/* hung task detection */

unsigned long last_switch_count;

#endif

/* CPU-specific state of this task */

struct thread_struct thread;

/* filesystem information */

struct fs_struct *fs;

/* open file information */

struct files_struct *files;

/* namespaces */

struct nsproxy *nsproxy;

/* signal handlers */

struct signal_struct *signal;

struct sighand_struct *sighand;

sigset_t blocked, real_blocked;

sigset_t saved_sigmask; /* restored if set_restore_sigmask() was used */

struct sigpending pending;

unsigned long sas_ss_sp;

size_t sas_ss_size;

int (*notifier)(void *priv);

void *notifier_data;

sigset_t *notifier_mask;

struct audit_context *audit_context;

#ifdef CONFIG_AUDITSYSCALL

uid_t loginuid;

unsigned int sessionid;

#endif

seccomp_t seccomp;

/* Thread group tracking */

u32 parent_exec_id;

u32 self_exec_id;

/* Protection of (de-)allocation: mm, files, fs, tty, keyrings, mems_allowed,

* mempolicy */

spinlock_t alloc_lock;

#ifdef CONFIG_GENERIC_HARDIRQS

/* IRQ handler threads */

struct irqaction *irqaction;

#endif

/* Protection of the PI data structures: */

raw_spinlock_t pi_lock;

#ifdef CONFIG_RT_MUTEXES

/* PI waiters blocked on a rt_mutex held by this task */

struct plist_head pi_waiters;

/* Deadlock detection and priority inheritance handling */

struct rt_mutex_waiter *pi_blocked_on;

#endif

#ifdef CONFIG_DEBUG_MUTEXES

/* mutex deadlock detection */

struct mutex_waiter *blocked_on;

#endif

#ifdef CONFIG_TRACE_IRQFLAGS

unsigned int irq_events;

unsigned long hardirq_enable_ip;

unsigned long hardirq_disable_ip;

unsigned int hardirq_enable_event;

unsigned int hardirq_disable_event;

int hardirqs_enabled;

int hardirq_context;

unsigned long softirq_disable_ip;

unsigned long softirq_enable_ip;

unsigned int softirq_disable_event;

unsigned int softirq_enable_event;

int softirqs_enabled;

int softirq_context;

#endif

#ifdef CONFIG_LOCKDEP

# define MAX_LOCK_DEPTH 48UL

u64 curr_chain_key;

int lockdep_depth;

unsigned int lockdep_recursion;

struct held_lock held_locks[MAX_LOCK_DEPTH];

gfp_t lockdep_reclaim_gfp;

#endif

/* journalling filesystem info */

void *journal_info;

/* stacked block device info */

struct bio_list *bio_list;

/* VM state */

struct reclaim_state *reclaim_state;

struct backing_dev_info *backing_dev_info;

struct io_context *io_context;

unsigned long ptrace_message;

siginfo_t *last_siginfo; /* For ptrace use. */

struct task_io_accounting ioac;

#if defined(CONFIG_TASK_XACCT)

u64 acct_rss_mem1; /* accumulated rss usage */

u64 acct_vm_mem1; /* accumulated virtual memory usage */

cputime_t acct_timexpd; /* stime + utime since last update */

#endif

#ifdef CONFIG_CPUSETS

nodemask_t mems_allowed; /* Protected by alloc_lock */

int cpuset_mem_spread_rotor;

#endif

#ifdef CONFIG_CGROUPS

/* Control Group info protected by css_set_lock */

struct css_set *cgroups;

/* cg_list protected by css_set_lock and tsk->alloc_lock */

struct list_head cg_list;

#endif

#ifdef CONFIG_FUTEX

struct robust_list_head __user *robust_list;

#ifdef CONFIG_COMPAT

struct compat_robust_list_head __user *compat_robust_list;

#endif

struct list_head pi_state_list;

struct futex_pi_state *pi_state_cache;

#endif

#ifdef CONFIG_PERF_EVENTS

struct perf_event_context *perf_event_ctxp;

struct mutex perf_event_mutex;

struct list_head perf_event_list;

#endif

#ifdef CONFIG_NUMA

struct mempolicy *mempolicy; /* Protected by alloc_lock */

short il_next;

#endif

atomic_t fs_excl; /* holding fs exclusive resources */

struct rcu_head rcu;

/*

* cache last used pipe for splice

*/

struct pipe_inode_info *splice_pipe;

#ifdef CONFIG_TASK_DELAY_ACCT

struct task_delay_info *delays;

#endif

#ifdef CONFIG_FAULT_INJECTION

int make_it_fail;

#endif

struct prop_local_single dirties;

#ifdef CONFIG_LATENCYTOP

int latency_record_count;

struct latency_record latency_record[LT_SAVECOUNT];

#endif

/*

* time slack values; these are used to round up poll() and

* select() etc timeout values. These are in nanoseconds.

*/

unsigned long timer_slack_ns;

unsigned long default_timer_slack_ns;

struct list_head *scm_work_list;

#ifdef CONFIG_FUNCTION_GRAPH_TRACER

/* Index of current stored address in ret_stack */

int curr_ret_stack;

/* Stack of return addresses for return function tracing */

struct ftrace_ret_stack *ret_stack;

/* time stamp for last schedule */

unsigned long long ftrace_timestamp;

/*

* Number of functions that haven't been traced

* because of depth overrun.

*/

atomic_t trace_overrun;

/* Pause for the tracing */

atomic_t tracing_graph_pause;

#endif

#ifdef CONFIG_TRACING

/* state flags for use by tracers */

unsigned long trace;

/* bitmask of trace recursion */

unsigned long trace_recursion;

#endif /* CONFIG_TRACING */

#ifdef CONFIG_CGROUP_MEM_RES_CTLR /* memcg uses this to do batch job */

struct memcg_batch_info {

int do_batch; /* incremented when batch uncharge started */

struct mem_cgroup *memcg; /* target memcg of uncharge */

unsigned long bytes; /* uncharged usage */

unsigned long memsw_bytes; /* uncharged mem+swap usage */

} memcg_batch;

#endif

};

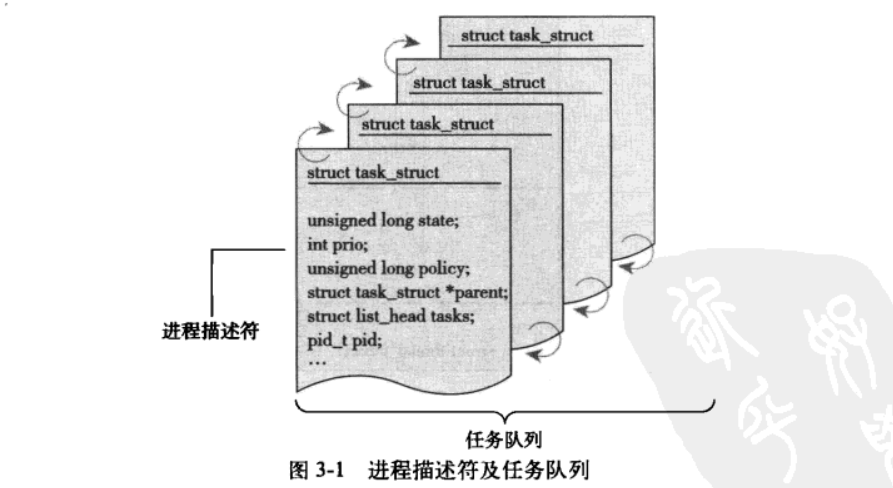

进程描述符 task_struct 包含了一个具体进程的所有信息。其相对较大,在 32 位机器上,它大约有 1.7KB。

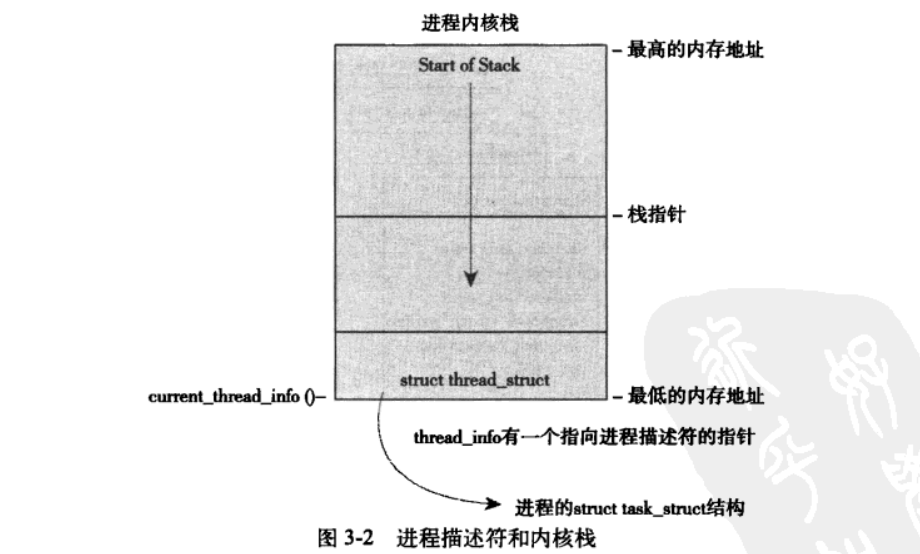

Linux 通过 slab 分配器分配 task_struct 结构。在 2.6 以前的内核中,各个进程的 task_struct 存放在它们内核栈的尾端。这样做是为了让那些像 x86 那样寄存器较少的硬件体系结构只要通过栈指针就能计算出它的位置,而避免使用额外的寄存器专门记录。由于现在用 slab 分配器动态生成 task_struct ,所以只需要在栈底创建一个新的结构 struct thread_info 。

2、thread_info

// arch/x86/include/asm/thread_info.h

struct thread_info {

struct task_struct *task; /* main task structure */

struct exec_domain *exec_domain; /* execution domain */

__u32 flags; /* low level flags */

__u32 status; /* thread synchronous flags */

__u32 cpu; /* current CPU */

int preempt_count; /* 0 => preemptable,

<0 => BUG */

mm_segment_t addr_limit;

struct restart_block restart_block;

void __user *sysenter_return;

#ifdef CONFIG_X86_32

unsigned long previous_esp; /* ESP of the previous stack in

case of nested (IRQ) stacks

*/

__u8 supervisor_stack[0];

#endif

int uaccess_err;

};

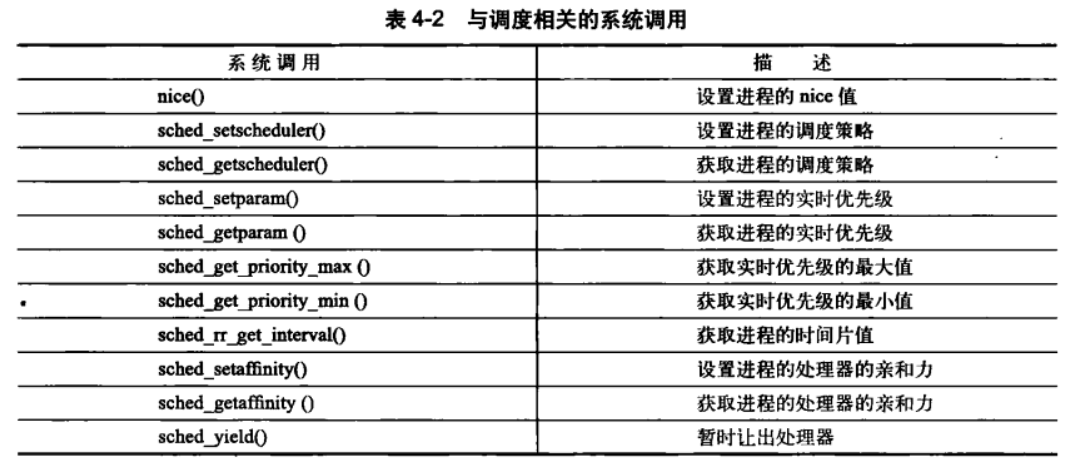

三、调度

CFS 调度

四、系统调用

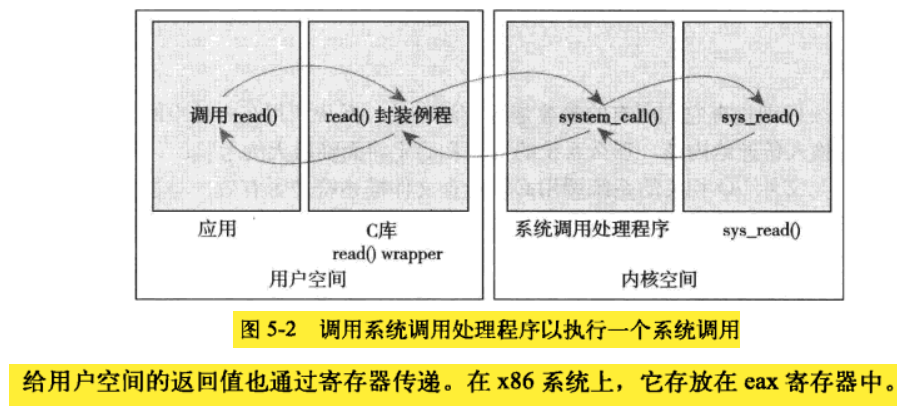

用户空间的程序无法直接执行内核代码。它们不能直接调用内核空间中的函数,因为内核驻留在受保护的地址空间上。

x86 系统通过 int $0x80 指令触发系统调用。系统调用号是通过 eax 寄存器传递给内核的。其响应函数 system_call() 函数通过将给定的系统调用号与 NR_syscalls 做比较来检查其有效性。如果它大于或者等于 NR_syscalls ,该函数就返回 -ENOSYS 。否则,就执行相应的系统调用:

call *sys_call_table(, %rax, 8)

由于系统调用表中的表项是以 64 位(8字节)类型存放的,所以内核需要将给定的系统调用号乘以 8,然后用所得的成果在该表中查询其位置。

除了系统调用号以外,大部分系统调用都还需要一些外部的参数输入。所以,在发生陷入的时候,应该把这些参数从用户空间传给内核。在 x86-32 系统上, ebx、ecx、edx、esi 和 edi 按照顺序存放前五个参数。需要六个或六个以上参数则应该用一个单独的寄存器存放指向所有这些参数在用户空间地址的指针。

五、内核数据结构

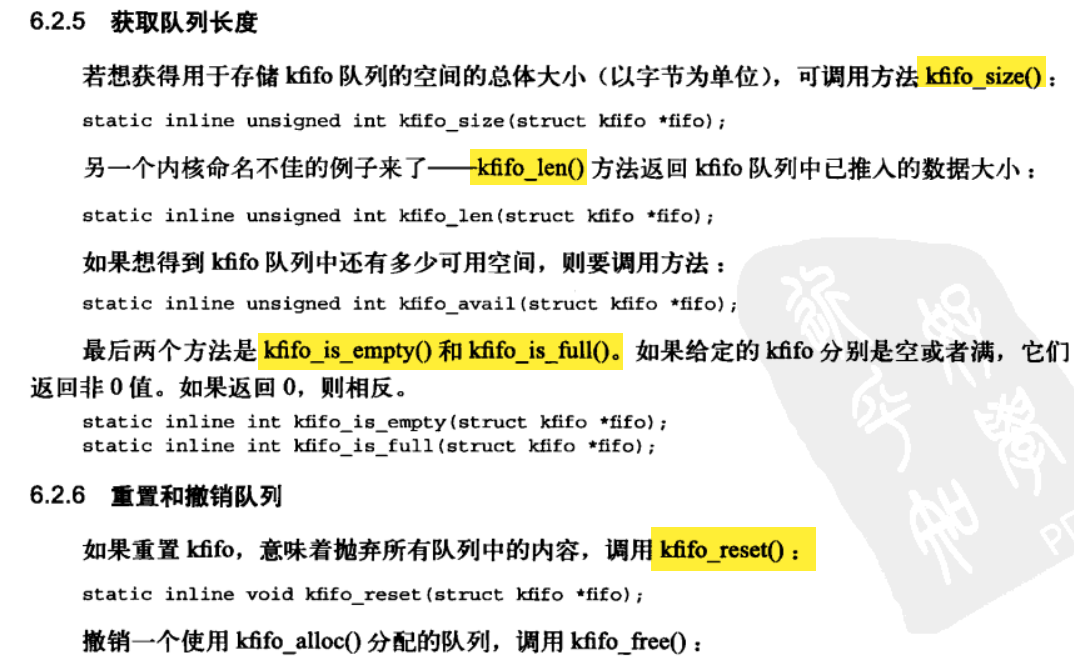

1、kfifo

// kernel/kfifo.c

/**

* 使用 buffer 内存创建并初始化kfifo队列

*

* @param fifo kfifo队列

* @param buffer 指向内存地址

* @param size 内存大小,必须是 2 的幂

*/

void kfifo_init(struct kfifo *fifo, void *buffer, unsigned int size);

/**

* 动态创建并初始化kfifo队列

*

* @param fifo kfifo队列

* @param size 创建kfifo队列大小

* @param gfp_mask 标识

* @return

*/

int kfifo_alloc(struct kfifo *fifo, unsigned int size, gfp_t gfp_mask);

/**

* 把数据推入到队列。该函数把 from 指针所指的 len 字节数据拷贝到 fifo 所指的队列中,如果成功,

* 则返回推入数据的字节大小。如果队列中的空闲字节小于 len,则该函数值最多可拷贝队列可用空间那么多

* 的数据,这样的话,返回值可能小于 len。

*/

unsigned int kfifo_in(struct kfifo *fifo, const void *from, unsigned int len);

/**

* 该函数从 fifo 所指向的队列中拷贝出长度为 len 字节的数据到 to 所指的缓冲中。如果成功,

* 该函数则返回拷贝的数据长度。如果队列中数据大小小于 len, 则该函数拷贝出的数据必然小于

* 需要的数据大小

*/

unsigned int kfifo_out(struct kfifo *fifo, void *to, unsigned int len);

/**

* 与 kfifo_out 类似,获取数据,但读后不删除数据。参数 offset 指向队列中的索引位置,如果

* 该参数为 0,则读队列头,这时候和 kfifo_out 一样。

*/

unsigned int kfifo_out_peek(struct kfifo *fifo, void *to, unsigned int len, unsigned offset);

2、映射

// include/linux/idr.h

void *idr_find(struct idr *idp, int id);

int idr_pre_get(struct idr *idp, gfp_t gfp_mask);

int idr_get_new(struct idr *idp, void *ptr, int *id);

int idr_get_new_above(struct idr *idp, void *ptr, int starting_id, int *id);

int idr_for_each(struct idr *idp,

int (*fn)(int id, void *p, void *data), void *data);

void *idr_get_next(struct idr *idp, int *nextid);

void *idr_replace(struct idr *idp, void *ptr, int id);

void idr_remove(struct idr *idp, int id);

void idr_remove_all(struct idr *idp);

void idr_destroy(struct idr *idp);

void idr_init(struct idr *idp);

3、二叉树

// include/linux/rbtree.h

struct rb_node

{

unsigned long rb_parent_color;

#define RB_RED 0

#define RB_BLACK 1

struct rb_node *rb_right;

struct rb_node *rb_left;

} __attribute__((aligned(sizeof(long))));

/* The alignment might seem pointless, but allegedly CRIS needs it */

struct rb_root

{

struct rb_node *rb_node;

};

#define rb_parent(r) ((struct rb_node *)((r)->rb_parent_color & ~3))

#define rb_color(r) ((r)->rb_parent_color & 1)

#define rb_is_red(r) (!rb_color(r))

#define rb_is_black(r) rb_color(r)

#define rb_set_red(r) do { (r)->rb_parent_color &= ~1; } while (0)

#define rb_set_black(r) do { (r)->rb_parent_color |= 1; } while (0)

static inline void rb_set_parent(struct rb_node *rb, struct rb_node *p)

{

rb->rb_parent_color = (rb->rb_parent_color & 3) | (unsigned long)p;

}

static inline void rb_set_color(struct rb_node *rb, int color)

{

rb->rb_parent_color = (rb->rb_parent_color & ~1) | color;

}

#define RB_ROOT (struct rb_root) { NULL, }

#define rb_entry(ptr, type, member) container_of(ptr, type, member)

#define RB_EMPTY_ROOT(root) ((root)->rb_node == NULL)

#define RB_EMPTY_NODE(node) (rb_parent(node) == node)

#define RB_CLEAR_NODE(node) (rb_set_parent(node, node))

extern void rb_insert_color(struct rb_node *, struct rb_root *);

extern void rb_erase(struct rb_node *, struct rb_root *);

/* Find logical next and previous nodes in a tree */

extern struct rb_node *rb_next(const struct rb_node *);

extern struct rb_node *rb_prev(const struct rb_node *);

extern struct rb_node *rb_first(const struct rb_root *);

extern struct rb_node *rb_last(const struct rb_root *);

/* Fast replacement of a single node without remove/rebalance/add/rebalance */

extern void rb_replace_node(struct rb_node *victim, struct rb_node *new,

struct rb_root *root);

static inline void rb_link_node(struct rb_node * node, struct rb_node * parent,

struct rb_node ** rb_link)

{

node->rb_parent_color = (unsigned long )parent;

node->rb_left = node->rb_right = NULL;

*rb_link = node;

}

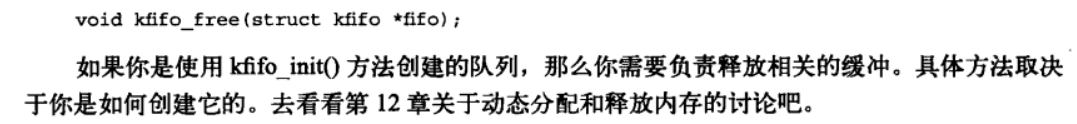

六、中断

1、软中断

// include/linux/interrupt.h

/**

* struct irqaction - per interrupt action descriptor

* @handler: interrupt handler function

* @flags: flags (see IRQF_* above)

* @name: name of the device

* @dev_id: cookie to identify the device

* @next: pointer to the next irqaction for shared interrupts

* @irq: interrupt number

* @dir: pointer to the proc/irq/NN/name entry

* @thread_fn: interupt handler function for threaded interrupts

* @thread: thread pointer for threaded interrupts

* @thread_flags: flags related to @thread

*/

struct irqaction {

irq_handler_t handler;

unsigned long flags;

const char *name;

void *dev_id;

struct irqaction *next;

int irq;

struct proc_dir_entry *dir;

irq_handler_t thread_fn;

struct task_struct *thread;

unsigned long thread_flags;

};

struct softirq_action

{

void (*action)(struct softirq_action *);

};

2、tasklet

// include/linux/interrupt.h

/* Tasklets --- multithreaded analogue of BHs.

Main feature differing them of generic softirqs: tasklet

is running only on one CPU simultaneously.

Main feature differing them of BHs: different tasklets

may be run simultaneously on different CPUs.

Properties:

* If tasklet_schedule() is called, then tasklet is guaranteed

to be executed on some cpu at least once after this.

* If the tasklet is already scheduled, but its excecution is still not

started, it will be executed only once.

* If this tasklet is already running on another CPU (or schedule is called

from tasklet itself), it is rescheduled for later.

* Tasklet is strictly serialized wrt itself, but not

wrt another tasklets. If client needs some intertask synchronization,

he makes it with spinlocks.

*/

struct tasklet_struct

{

struct tasklet_struct *next;

unsigned long state;

atomic_t count;

void (*func)(unsigned long);

unsigned long data;

};

3、工作队列

// kernel/workqueue.c

struct cpu_workqueue_struct {

spinlock_t lock;

struct list_head worklist;

wait_queue_head_t more_work;

struct work_struct *current_work;

struct workqueue_struct *wq;

struct task_struct *thread;

} ____cacheline_aligned;

/*

* The externally visible workqueue abstraction is an array of

* per-CPU workqueues:

*/

struct workqueue_struct {

struct cpu_workqueue_struct *cpu_wq;

struct list_head list;

const char *name;

int singlethread;

int freezeable; /* Freeze threads during suspend */

int rt;

#ifdef CONFIG_LOCKDEP

struct lockdep_map lockdep_map;

#endif

};

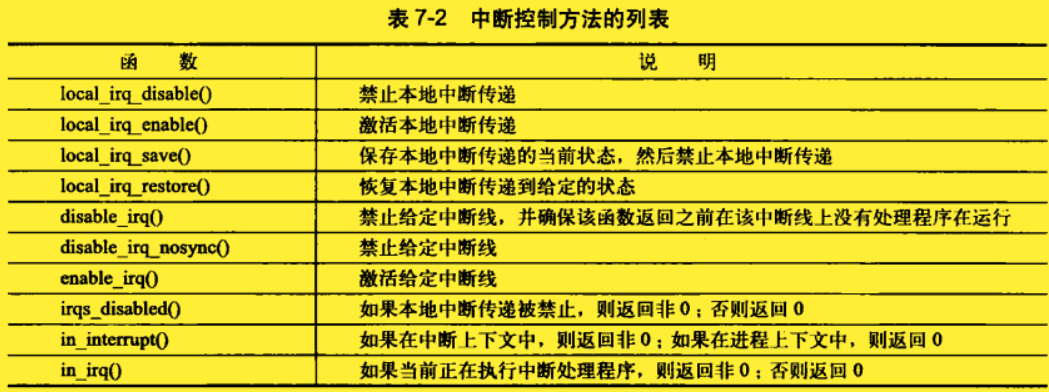

4、下半部机制的选择

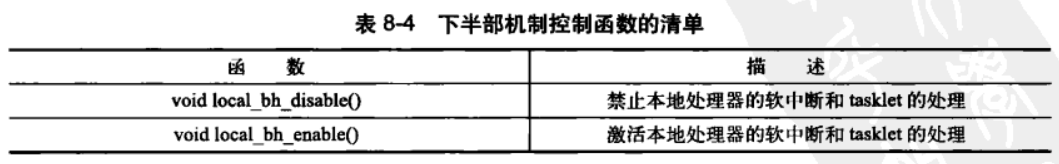

5、下半部禁止与使能

七、内核同步方法

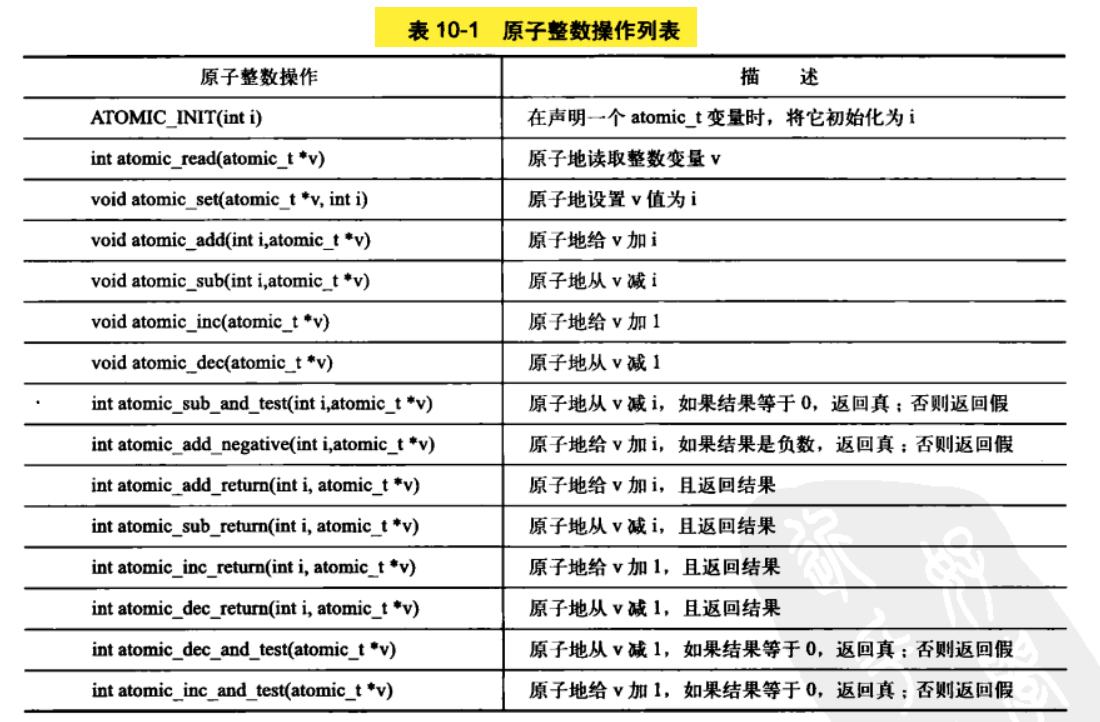

1、原子操作

在 x86 上,实现在 arch/x86/include/asm/atomic.h 文件中。

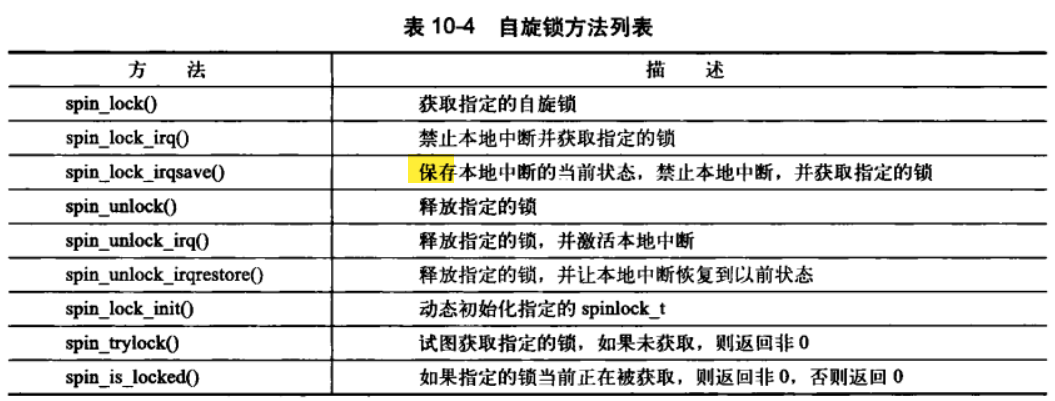

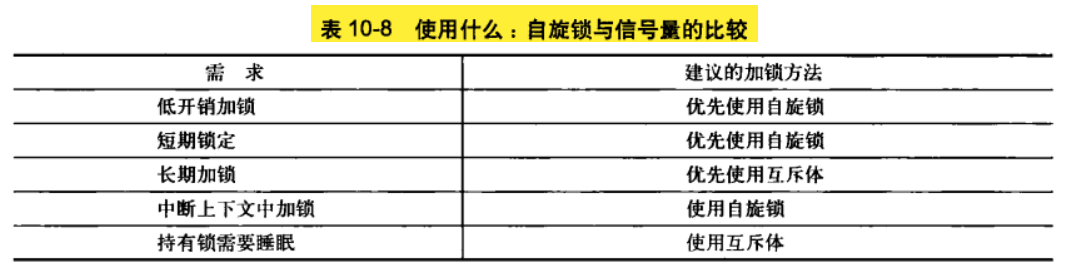

2、自旋锁

(1)结构体

// include/linux/spinlock_types.h

typedef struct spinlock {

union {

struct raw_spinlock rlock;

#ifdef CONFIG_DEBUG_LOCK_ALLOC

# define LOCK_PADSIZE (offsetof(struct raw_spinlock, dep_map))

struct {

u8 __padding[LOCK_PADSIZE];

struct lockdep_map dep_map;

};

#endif

};

} spinlock_t;

typedef struct raw_spinlock {

arch_spinlock_t raw_lock;

#ifdef CONFIG_GENERIC_LOCKBREAK

unsigned int break_lock;

#endif

#ifdef CONFIG_DEBUG_SPINLOCK

unsigned int magic, owner_cpu;

void *owner;

#endif

#ifdef CONFIG_DEBUG_LOCK_ALLOC

struct lockdep_map dep_map;

#endif

} raw_spinlock_t;

// arch/x86/include/asm/spinlock_types.h

typedef struct arch_spinlock {

unsigned int slock;

} arch_spinlock_t;

(2)方法说明

函数定义在文件 include/linux/spinlock.h 中

(3)raw_spin_lock 函数分析

- raw_spin_lock 函数

// include/linux/spinlock.h

#define raw_spin_lock(lock) _raw_spin_lock(lock)

static inline void spin_lock(spinlock_t *lock)

{

raw_spin_lock(&lock->rlock);

}

- _raw_spin_lock 函数

// // kernel/spinlock.c

#ifndef CONFIG_INLINE_SPIN_LOCK

void __lockfunc _raw_spin_lock(raw_spinlock_t *lock)

{

__raw_spin_lock(lock);

}

EXPORT_SYMBOL(_raw_spin_lock);

#endif

- __raw_spin_lock 函数

// include/linux/spinlock_api_smp.h

static inline void __raw_spin_lock(raw_spinlock_t *lock)

{

preempt_disable();

spin_acquire(&lock->dep_map, 0, 0, _RET_IP_);

LOCK_CONTENDED(lock, do_raw_spin_trylock, do_raw_spin_lock);

}

- do_raw_spin_lock 函数

// include/linux/spinlock.h

static inline void do_raw_spin_lock(raw_spinlock_t *lock) __acquires(lock)

{

__acquire(lock);

arch_spin_lock(&lock->raw_lock);

}

- arch_spin_lock 函数

// arch/x86/include/asm/spinlock.h

static __always_inline void arch_spin_lock(arch_spinlock_t *lock)

{

__ticket_spin_lock(lock);

}

static __always_inline void __ticket_spin_lock(arch_spinlock_t *lock)

{

short inc = 0x0100;

asm volatile (

LOCK_PREFIX "xaddw %w0, %1\n"

"1:\t"

"cmpb %h0, %b0\n\t"

"je 2f\n\t"

"rep ; nop\n\t"

"movb %1, %b0\n\t"

/* don't need lfence here, because loads are in-order */

"jmp 1b\n"

"2:"

: "+Q" (inc), "+m" (lock->slock)

:

: "memory", "cc");

}

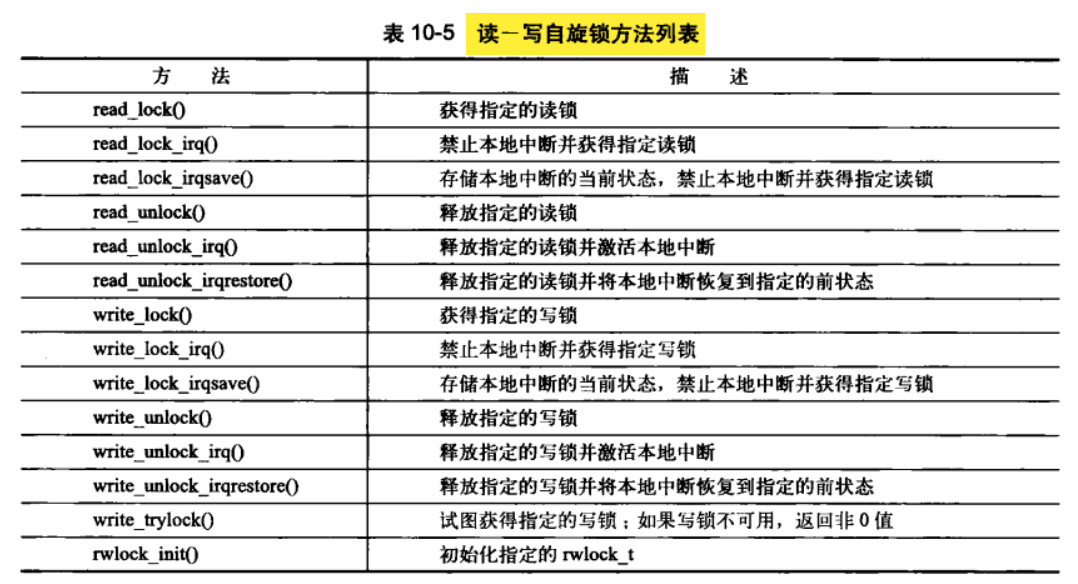

3、读写自旋锁

(1)结构体

// include/linux/rwlock_types.h

typedef struct {

arch_rwlock_t raw_lock;

#ifdef CONFIG_GENERIC_LOCKBREAK

unsigned int break_lock;

#endif

#ifdef CONFIG_DEBUG_SPINLOCK

unsigned int magic, owner_cpu;

void *owner;

#endif

#ifdef CONFIG_DEBUG_LOCK_ALLOC

struct lockdep_map dep_map;

#endif

} rwlock_t;

// arch/x86/include/asm/spinlock_types.h

typedef struct {

unsigned int lock;

} arch_rwlock_t;

(2)方法说明

函数定义在文件 include/linux/rwlock.h 中

(3)read_lock函数分析

- read_lock 函数

// include/linux/rwlock.h

#define read_lock(lock) _raw_read_lock(lock)

- _raw_read_lock 函数

// include/linux/rwlock_api_smp.h

#ifdef CONFIG_INLINE_READ_LOCK

#define _raw_read_lock(lock) __raw_read_lock(lock)

#endif

// include/linux/rwlock_api_smp.h

static inline void __raw_read_lock(rwlock_t *lock)

{

preempt_disable();

rwlock_acquire_read(&lock->dep_map, 0, 0, _RET_IP_);

LOCK_CONTENDED(lock, do_raw_read_trylock, do_raw_read_lock);

}

- do_raw_read_lock 函数

// include/linux/rwlock.h

# define do_raw_read_lock(rwlock) do {__acquire(lock); arch_read_lock(&(rwlock)->raw_lock); } while (0)

- arch_read_lock 函数

// arch/x86/include/asm/spinlock.h

static inline void arch_read_lock(arch_rwlock_t *rw)

{

asm volatile(LOCK_PREFIX " subl $1,(%0)\n\t"

"jns 1f\n"

"call __read_lock_failed\n\t"

"1:\n"

::LOCK_PTR_REG (rw) : "memory");

}

4、信号量

(1)结构体

struct semaphore {

spinlock_t lock;

unsigned int count;

struct list_head wait_list;

};

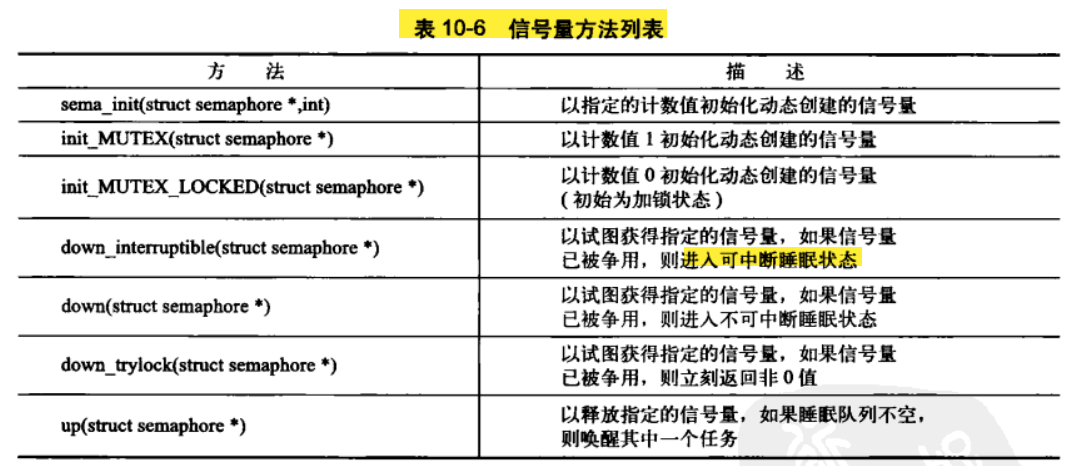

(2)方法说明

函数定义在文件 include/linux/semaphore.h 中,其允许进入睡眠。不能用在中断上下文,只能用在进程上下文。

(3)down_interruptible 函数分析

- down_interruptible 函数

// include/linux/semaphore.h

extern int __must_check down_interruptible(struct semaphore *sem);

// kernel/semaphore.c

/**

* down_interruptible - acquire the semaphore unless interrupted

* @sem: the semaphore to be acquired

*

* Attempts to acquire the semaphore. If no more tasks are allowed to

* acquire the semaphore, calling this function will put the task to sleep.

* If the sleep is interrupted by a signal, this function will return -EINTR.

* If the semaphore is successfully acquired, this function returns 0.

*/

int down_interruptible(struct semaphore *sem)

{

unsigned long flags;

int result = 0;

spin_lock_irqsave(&sem->lock, flags);

if (likely(sem->count > 0))

sem->count--;

else

result = __down_interruptible(sem);

spin_unlock_irqrestore(&sem->lock, flags);

return result;

}

EXPORT_SYMBOL(down_interruptible);

- __down_interruptible 函数

// kernel/semaphore.c

static noinline int __sched __down_interruptible(struct semaphore *sem)

{

return __down_common(sem, TASK_INTERRUPTIBLE, MAX_SCHEDULE_TIMEOUT);

}

- __down_common 函数

// kernel/semaphore.c

/*

* Because this function is inlined, the 'state' parameter will be

* constant, and thus optimised away by the compiler. Likewise the

* 'timeout' parameter for the cases without timeouts.

*/

static inline int __sched __down_common(struct semaphore *sem, long state,

long timeout)

{

struct task_struct *task = current;

struct semaphore_waiter waiter;

list_add_tail(&waiter.list, &sem->wait_list);

waiter.task = task;

waiter.up = 0;

for (;;) {

if (signal_pending_state(state, task))

goto interrupted;

if (timeout <= 0)

goto timed_out;

__set_task_state(task, state);

spin_unlock_irq(&sem->lock);

timeout = schedule_timeout(timeout);

spin_lock_irq(&sem->lock);

if (waiter.up)

return 0;

}

timed_out:

list_del(&waiter.list);

return -ETIME;

interrupted:

list_del(&waiter.list);

return -EINTR;

}

5、读写信号量

(1)结构体

rw_semaphore 结构体定义在各个体系结构下的 rwsem.h 文件中

// arch/x86/include/asm/rwsem.h

struct rw_semaphore {

rwsem_count_t count;

spinlock_t wait_lock;

struct list_head wait_list;

#ifdef CONFIG_DEBUG_LOCK_ALLOC

struct lockdep_map dep_map;

#endif

};

(2)方法说明

读写信号量函数定义

// include/linux/rwsem.h

/*

* lock for reading

*/

extern void down_read(struct rw_semaphore *sem);

/*

* trylock for reading -- returns 1 if successful, 0 if contention

*/

extern int down_read_trylock(struct rw_semaphore *sem);

/*

* lock for writing

*/

extern void down_write(struct rw_semaphore *sem);

/*

* trylock for writing -- returns 1 if successful, 0 if contention

*/

extern int down_write_trylock(struct rw_semaphore *sem);

/*

* release a read lock

*/

extern void up_read(struct rw_semaphore *sem);

/*

* release a write lock

*/

extern void up_write(struct rw_semaphore *sem);

/*

* downgrade write lock to read lock

*/

extern void downgrade_write(struct rw_semaphore *sem);

(3)down_read 函数分析

- down_read 函数

// kernel/rwsem.c

/*

* lock for reading

*/

void __sched down_read(struct rw_semaphore *sem)

{

might_sleep();

rwsem_acquire_read(&sem->dep_map, 0, 0, _RET_IP_);

LOCK_CONTENDED(sem, __down_read_trylock, __down_read);

}

EXPORT_SYMBOL(down_read);

- __up_read 函数

// arch/x86/include/asm/rwsem.h

/*

* lock for reading

*/

static inline void __down_read(struct rw_semaphore *sem)

{

asm volatile("# beginning down_read\n\t"

LOCK_PREFIX _ASM_INC "(%1)\n\t"

/* adds 0x00000001, returns the old value */

" jns 1f\n"

" call call_rwsem_down_read_failed\n"

"1:\n\t"

"# ending down_read\n\t"

: "+m" (sem->count)

: "a" (sem)

: "memory", "cc");

}

6、互斥体

(1)结构体

// include/linux/mutex.h

/*

* Simple, straightforward mutexes with strict semantics:

*

* - only one task can hold the mutex at a time

* - only the owner can unlock the mutex

* - multiple unlocks are not permitted

* - recursive locking is not permitted

* - a mutex object must be initialized via the API

* - a mutex object must not be initialized via memset or copying

* - task may not exit with mutex held

* - memory areas where held locks reside must not be freed

* - held mutexes must not be reinitialized

* - mutexes may not be used in hardware or software interrupt

* contexts such as tasklets and timers

*

* These semantics are fully enforced when DEBUG_MUTEXES is

* enabled. Furthermore, besides enforcing the above rules, the mutex

* debugging code also implements a number of additional features

* that make lock debugging easier and faster:

*

* - uses symbolic names of mutexes, whenever they are printed in debug output

* - point-of-acquire tracking, symbolic lookup of function names

* - list of all locks held in the system, printout of them

* - owner tracking

* - detects self-recursing locks and prints out all relevant info

* - detects multi-task circular deadlocks and prints out all affected

* locks and tasks (and only those tasks)

*/

struct mutex {

/* 1: unlocked, 0: locked, negative: locked, possible waiters */

atomic_t count;

spinlock_t wait_lock;

struct list_head wait_list;

#if defined(CONFIG_DEBUG_MUTEXES) || defined(CONFIG_SMP)

struct thread_info *owner;

#endif

#ifdef CONFIG_DEBUG_MUTEXES

const char *name;

void *magic;

#endif

#ifdef CONFIG_DEBUG_LOCK_ALLOC

struct lockdep_map dep_map;

#endif

};

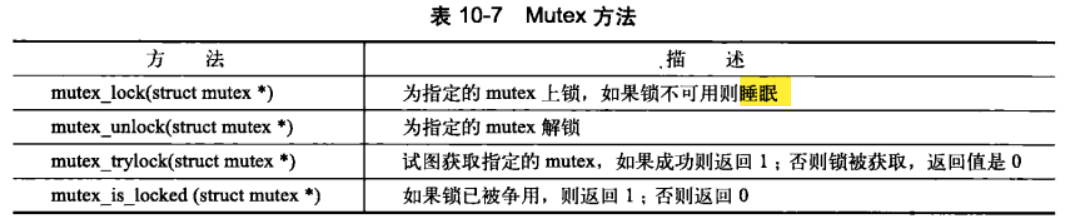

(2)方法说明

函数定义在文件 include/linux/mutex.h 中

(3)mutex_lock 函数分析

- mutex_lock 函数

// include/linux/mutex.h

extern void mutex_lock(struct mutex *lock);

// kernel/mutex.c

/***

* mutex_lock - acquire the mutex

* @lock: the mutex to be acquired

*

* Lock the mutex exclusively for this task. If the mutex is not

* available right now, it will sleep until it can get it.

*

* The mutex must later on be released by the same task that

* acquired it. Recursive locking is not allowed. The task

* may not exit without first unlocking the mutex. Also, kernel

* memory where the mutex resides mutex must not be freed with

* the mutex still locked. The mutex must first be initialized

* (or statically defined) before it can be locked. memset()-ing

* the mutex to 0 is not allowed.

*

* ( The CONFIG_DEBUG_MUTEXES .config option turns on debugging

* checks that will enforce the restrictions and will also do

* deadlock debugging. )

*

* This function is similar to (but not equivalent to) down().

*/

void __sched mutex_lock(struct mutex *lock)

{

might_sleep();

/*

* The locking fastpath is the 1->0 transition from

* 'unlocked' into 'locked' state.

*/

__mutex_fastpath_lock(&lock->count, __mutex_lock_slowpath);

mutex_set_owner(lock);

}

EXPORT_SYMBOL(mutex_lock);

(4)应用比较

7、完成变量

(1)结构体

// include/linux/completion.h

/**

* struct completion - structure used to maintain state for a "completion"

*

* This is the opaque structure used to maintain the state for a "completion".

* Completions currently use a FIFO to queue threads that have to wait for

* the "completion" event.

*

* See also: complete(), wait_for_completion() (and friends _timeout,

* _interruptible, _interruptible_timeout, and _killable), init_completion(),

* and macros DECLARE_COMPLETION(), DECLARE_COMPLETION_ONSTACK(), and

* INIT_COMPLETION().

*/

struct completion {

unsigned int done;

wait_queue_head_t wait;

};

// include/linux/wait.h

struct __wait_queue_head {

spinlock_t lock;

struct list_head task_list;

};

typedef struct __wait_queue_head wait_queue_head_t;

struct __wait_queue {

unsigned int flags;

#define WQ_FLAG_EXCLUSIVE 0x01

void *private;

wait_queue_func_t func;

struct list_head task_list;

};

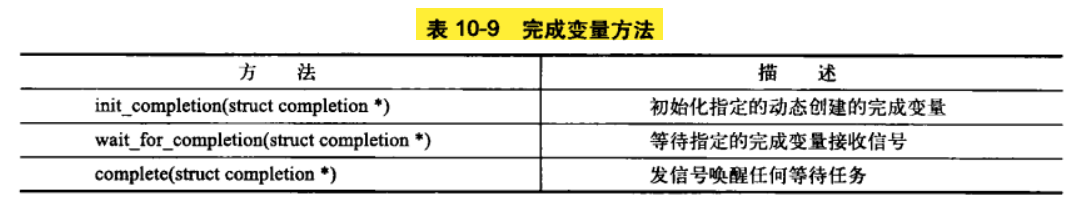

(2)方法说明

函数定义在文件 include/linux/completion.h 中

(3)complete 函数分析

- complete 函数

// kernel/sched.c

void complete(struct completion *x)

{

unsigned long flags;

spin_lock_irqsave(&x->wait.lock, flags);

x->done++;

__wake_up_common(&x->wait, TASK_NORMAL, 1, 0, NULL);

spin_unlock_irqrestore(&x->wait.lock, flags);

}

EXPORT_SYMBOL(complete);

- __wake_up_common 函数

// kernel/sched.c

static void __wake_up_common(wait_queue_head_t *q, unsigned int mode,

int nr_exclusive, int wake_flags, void *key)

{

wait_queue_t *curr, *next;

list_for_each_entry_safe(curr, next, &q->task_list, task_list) {

unsigned flags = curr->flags;

if (curr->func(curr, mode, wake_flags, key) &&

(flags & WQ_FLAG_EXCLUSIVE) && !--nr_exclusive)

break;

}

}

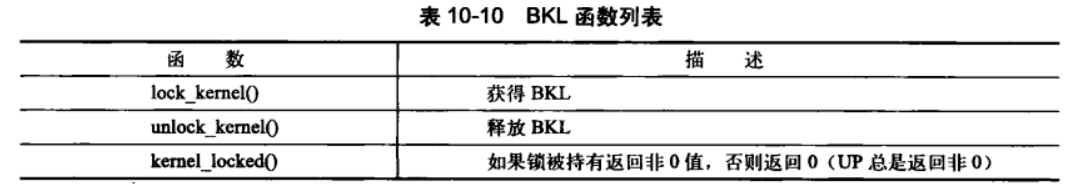

8、BLK:大内核锁

(1)方法说明

函数定义在文件 include/linux/smp_lock.h 中

(2)lock_kernel 函数分析

- lock_kernel 函数

// include/linux/smp_lock.h

#define lock_kernel() do { \

_lock_kernel(__func__, __FILE__, __LINE__); \

} while (0)

- _lock_kernel 函数

// lib/kernel_lock.c

void __lockfunc _lock_kernel(const char *func, const char *file, int line)

{

int depth = current->lock_depth + 1;

trace_lock_kernel(func, file, line);

if (likely(!depth)) {

might_sleep();

__lock_kernel();

}

current->lock_depth = depth;

}

- __lock_kernel 函数

// lib/kernel_lock.c

static inline void __lock_kernel(void)

{

do_raw_spin_lock(&kernel_flag);

}

9、顺序锁

(1)结构体

// include/linux/seqlock.h

typedef struct {

unsigned sequence;

spinlock_t lock;

} seqlock_t;

(2)方法说明

// include/linux/seqlock.h

/*

* These macros triggered gcc-3.x compile-time problems. We think these are

* OK now. Be cautious.

*/

#define __SEQLOCK_UNLOCKED(lockname) \

{ 0, __SPIN_LOCK_UNLOCKED(lockname) }

#define SEQLOCK_UNLOCKED \

__SEQLOCK_UNLOCKED(old_style_seqlock_init)

#define seqlock_init(x) \

do { \

(x)->sequence = 0; \

spin_lock_init(&(x)->lock); \

} while (0)

#define DEFINE_SEQLOCK(x) \

seqlock_t x = __SEQLOCK_UNLOCKED(x)

/* Lock out other writers and update the count.

* Acts like a normal spin_lock/unlock.

* Don't need preempt_disable() because that is in the spin_lock already.

*/

static inline void write_seqlock(seqlock_t *sl)

{

spin_lock(&sl->lock);

++sl->sequence;

smp_wmb();

}

static inline void write_sequnlock(seqlock_t *sl)

{

smp_wmb();

sl->sequence++;

spin_unlock(&sl->lock);

}

static inline int write_tryseqlock(seqlock_t *sl)

{

int ret = spin_trylock(&sl->lock);

if (ret) {

++sl->sequence;

smp_wmb();

}

return ret;

}

/* Start of read calculation -- fetch last complete writer token */

static __always_inline unsigned read_seqbegin(const seqlock_t *sl)

{

unsigned ret;

repeat:

ret = sl->sequence;

smp_rmb();

if (unlikely(ret & 1)) {

cpu_relax();

goto repeat;

}

return ret;

}

/*

* Test if reader processed invalid data.

*

* If sequence value changed then writer changed data while in section.

*/

static __always_inline int read_seqretry(const seqlock_t *sl, unsigned start)

{

smp_rmb();

return (sl->sequence != start);

}

/*

* Version using sequence counter only.

* This can be used when code has its own mutex protecting the

* updating starting before the write_seqcountbeqin() and ending

* after the write_seqcount_end().

*/

typedef struct seqcount {

unsigned sequence;

} seqcount_t;

#define SEQCNT_ZERO { 0 }

#define seqcount_init(x) do { *(x) = (seqcount_t) SEQCNT_ZERO; } while (0)

/* Start of read using pointer to a sequence counter only. */

static inline unsigned read_seqcount_begin(const seqcount_t *s)

{

unsigned ret;

repeat:

ret = s->sequence;

smp_rmb();

if (unlikely(ret & 1)) {

cpu_relax();

goto repeat;

}

return ret;

}

/*

* Test if reader processed invalid data because sequence number has changed.

*/

static inline int read_seqcount_retry(const seqcount_t *s, unsigned start)

{

smp_rmb();

return s->sequence != start;

}

/*

* Sequence counter only version assumes that callers are using their

* own mutexing.

*/

static inline void write_seqcount_begin(seqcount_t *s)

{

s->sequence++;

smp_wmb();

}

static inline void write_seqcount_end(seqcount_t *s)

{

smp_wmb();

s->sequence++;

}

/*

* Possible sw/hw IRQ protected versions of the interfaces.

*/

#define write_seqlock_irqsave(lock, flags) \

do { local_irq_save(flags); write_seqlock(lock); } while (0)

#define write_seqlock_irq(lock) \

do { local_irq_disable(); write_seqlock(lock); } while (0)

#define write_seqlock_bh(lock) \

do { local_bh_disable(); write_seqlock(lock); } while (0)

#define write_sequnlock_irqrestore(lock, flags) \

do { write_sequnlock(lock); local_irq_restore(flags); } while(0)

#define write_sequnlock_irq(lock) \

do { write_sequnlock(lock); local_irq_enable(); } while(0)

#define write_sequnlock_bh(lock) \

do { write_sequnlock(lock); local_bh_enable(); } while(0)

#define read_seqbegin_irqsave(lock, flags) \

({ local_irq_save(flags); read_seqbegin(lock); })

#define read_seqretry_irqrestore(lock, iv, flags) \

({ \

int ret = read_seqretry(lock, iv); \

local_irq_restore(flags); \

ret; \

})

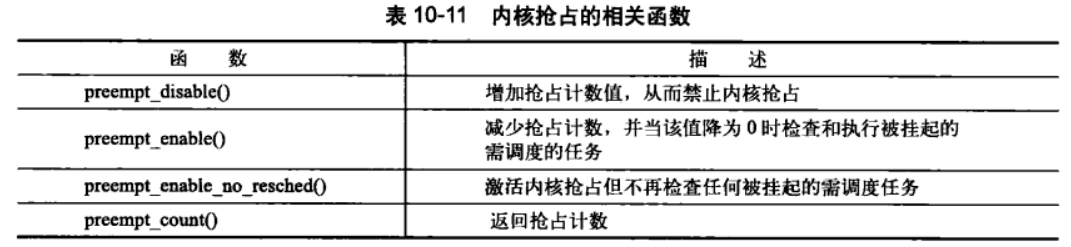

10、禁止抢占

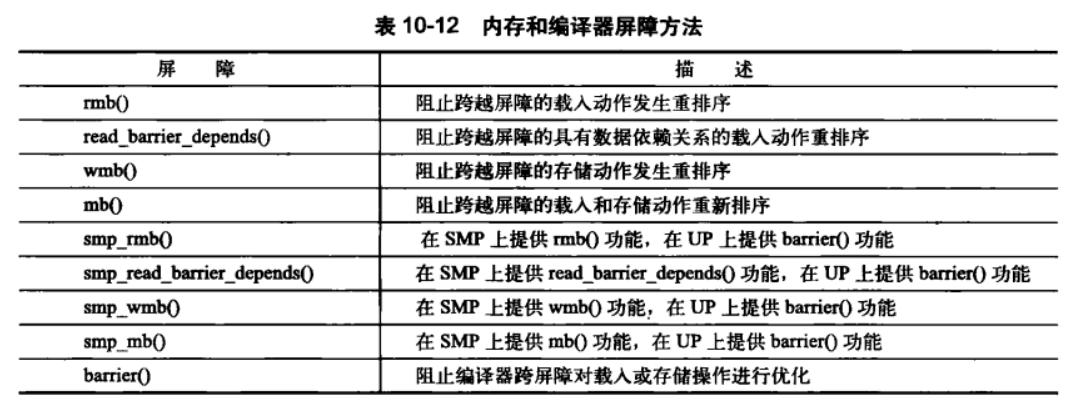

11、顺序与屏障

八、定时器和时间管理

1、节拍率 HZ

// include/linux/param.h

#ifndef _LINUX_PARAM_H

#define _LINUX_PARAM_H

#include <asm/param.h>

#endif

// arch/x86/include/asm/param.h

#include <asm-generic/param.h>

// include/asm-generic/param.h

#ifdef __KERNEL__

# define HZ CONFIG_HZ /* Internal kernel timer frequency */

# define USER_HZ 100 /* some user interfaces are */

# define CLOCKS_PER_SEC (USER_HZ) /* in "ticks" like times() */

#endif

#ifndef HZ

#define HZ 100

#endif

2、jiffies

(1)jiffies 的内部表示

// include/linux/jiffies.h

extern u64 __jiffy_data jiffies_64;

extern unsigned long volatile __jiffy_data jiffies;

#if (BITS_PER_LONG < 64)

u64 get_jiffies_64(void);

#else

static inline u64 get_jiffies_64(void)

{

return (u64)jiffies;

}

#endif

在 32 位体系结构上是 32 位,在 64 位体系结构上是 64 位。

(2)jiffies 的回绕

#define time_after(a,b) \

(typecheck(unsigned long, a) && \

typecheck(unsigned long, b) && \

((long)(b) - (long)(a) < 0))

#define time_before(a,b) time_after(b,a)

#define time_after_eq(a,b) \

(typecheck(unsigned long, a) && \

typecheck(unsigned long, b) && \

((long)(a) - (long)(b) >= 0))

#define time_before_eq(a,b) time_after_eq(b,a)

/*

* Calculate whether a is in the range of [b, c].

*/

#define time_in_range(a,b,c) \

(time_after_eq(a,b) && \

time_before_eq(a,c))

/*

* Calculate whether a is in the range of [b, c).

*/

#define time_in_range_open(a,b,c) \

(time_after_eq(a,b) && \

time_before(a,c))

/* Same as above, but does so with platform independent 64bit types.

* These must be used when utilizing jiffies_64 (i.e. return value of

* get_jiffies_64() */

#define time_after64(a,b) \

(typecheck(__u64, a) && \

typecheck(__u64, b) && \

((__s64)(b) - (__s64)(a) < 0))

#define time_before64(a,b) time_after64(b,a)

#define time_after_eq64(a,b) \

(typecheck(__u64, a) && \

typecheck(__u64, b) && \

((__s64)(a) - (__s64)(b) >= 0))

#define time_before_eq64(a,b) time_after_eq64(b,a)

/*

* These four macros compare jiffies and 'a' for convenience.

*/

/* time_is_before_jiffies(a) return true if a is before jiffies */

#define time_is_before_jiffies(a) time_after(jiffies, a)

/* time_is_after_jiffies(a) return true if a is after jiffies */

#define time_is_after_jiffies(a) time_before(jiffies, a)

/* time_is_before_eq_jiffies(a) return true if a is before or equal to jiffies*/

#define time_is_before_eq_jiffies(a) time_after_eq(jiffies, a)

/* time_is_after_eq_jiffies(a) return true if a is after or equal to jiffies*/

#define time_is_after_eq_jiffies(a) time_before_eq(jiffies, a)

/*

* Have the 32 bit jiffies value wrap 5 minutes after boot

* so jiffies wrap bugs show up earlier.

*/

#define INITIAL_JIFFIES ((unsigned long)(unsigned int) (-300*HZ))

(3)用户空间和 HZ

在 2.6 版以前的内核中,如果改变内核中 HZ 的值,会给用户空间中某些程序造成异常结果。这是因为内核是以 节拍数 / 秒 的形式给用户空间导出这个值的,在这个接口稳定了很长一段时间后,应用程序便逐渐依赖于这个特定的 HZ 值了。所以如果在内核中更改了 HZ 的定义值,就打破了用户空间的常量关系——用户空间并不知道新的 HZ 值。所以用户空间可能认为系统运行时间已经是 20 个小时了,但实际上系统仅仅启动了两个小时。

要像避免上面的错误,内核必须更改所有导出的 jiffies 值。因而内核定义了 USER_HZ 来代表用户空间看到的 HZ 值。在 x86 体系结构上,由于 HZ 值原来一直是 100,所以 USER_HZ 值就定义为 100。内核可以使用函数 jiffies_to_clock_t() 将一个由 HZ 表示的节拍数转换成一个由 USER_HZ 表示的节拍技术。

// kernel/time.c

clock_t jiffies_to_clock_t(long x)

{

#if (TICK_NSEC % (NSEC_PER_SEC / USER_HZ)) == 0

# if HZ < USER_HZ

return x * (USER_HZ / HZ);

# else

return x / (HZ / USER_HZ);

# endif

#else

return div_u64((u64)x * TICK_NSEC, NSEC_PER_SEC / USER_HZ);

#endif

}

EXPORT_SYMBOL(jiffies_to_clock_t);

// include/linux/types.h

typedef __kernel_clock_t clock_t;

// arch/x86/include/asm/posix_types_64.h

typedef long __kernel_clock_t;

3、定时器

// include/linux/timer.h

struct timer_list {

struct list_head entry; /* 定时器链表的入口 */

unsigned long expires; /* 以 jiffies 为单位的定时值 */

void (*function)(unsigned long); /* 定时器处理函数 */

unsigned long data; /* 传给处理函数的长整型参数 */

struct tvec_base *base; /* 定时器内部值,用户不要使用 */

#ifdef CONFIG_TIMER_STATS

void *start_site;

char start_comm[16];

int start_pid;

#endif

#ifdef CONFIG_LOCKDEP

struct lockdep_map lockdep_map;

#endif

};

4、延迟执行

(1)忙等待

unsigned long timeout = jiffies + 10;

while (time_before(jiffies, timeout))

;

unsigned long timeout = jiffies + 5 * HZ;

while (time_before(jiffies, timeout))

cond_resched();

(2)短延迟

(a)方法说明

void udelay(unsigned long usecs);

void ndelay(unsigned long nsecs);

void mdelay(unsigned long msecs);

(b)mdelay 函数

- mdelay 函数

// include/linux/delay.h

#ifndef mdelay

#define mdelay(n) (\

(__builtin_constant_p(n) && (n)<=MAX_UDELAY_MS) ? udelay((n)*1000) : \

({unsigned long __ms=(n); while (__ms--) udelay(1000);}))

#endif

#ifndef ndelay

static inline void ndelay(unsigned long x)

{

udelay(DIV_ROUND_UP(x, 1000));

}

#define ndelay(x) ndelay(x)

#endif

extern unsigned long lpj_fine;

void calibrate_delay(void);

void msleep(unsigned int msecs);

unsigned long msleep_interruptible(unsigned int msecs);

static inline void ssleep(unsigned int seconds)

{

msleep(seconds * 1000);

}

- udelay 函数

// arch/x86/include/asm/delay.h

/* 0x10c7 is 2**32 / 1000000 (rounded up) */

#define udelay(n) (__builtin_constant_p(n) ? \

((n) > 20000 ? __bad_udelay() : __const_udelay((n) * 0x10c7ul)) : \

__udelay(n))

/* 0x5 is 2**32 / 1000000000 (rounded up) */

#define ndelay(n) (__builtin_constant_p(n) ? \

((n) > 20000 ? __bad_ndelay() : __const_udelay((n) * 5ul)) : \

__ndelay(n))

- __const_udelay 函数

// arch/x86/lib/delay.c

inline void __const_udelay(unsigned long xloops)

{

int d0;

xloops *= 4;

asm("mull %%edx"

:"=d" (xloops), "=&a" (d0)

:"1" (xloops), "0"

(cpu_data(raw_smp_processor_id()).loops_per_jiffy * (HZ/4)));

__delay(++xloops);

}

EXPORT_SYMBOL(__const_udelay);

void __udelay(unsigned long usecs)

{

__const_udelay(usecs * 0x000010c7); /* 2**32 / 1000000 (rounded up) */

}

EXPORT_SYMBOL(__udelay);

void __ndelay(unsigned long nsecs)

{

__const_udelay(nsecs * 0x00005); /* 2**32 / 1000000000 (rounded up) */

}

EXPORT_SYMBOL(__ndelay);

(3)schedule_timeout 函数

// kernel/timer.c

signed long __sched schedule_timeout(signed long timeout)

{

struct timer_list timer;

unsigned long expire;

switch (timeout)

{

case MAX_SCHEDULE_TIMEOUT:

/*

* These two special cases are useful to be comfortable

* in the caller. Nothing more. We could take

* MAX_SCHEDULE_TIMEOUT from one of the negative value

* but I' d like to return a valid offset (>=0) to allow

* the caller to do everything it want with the retval.

*/

schedule();

goto out;

default:

/*

* Another bit of PARANOID. Note that the retval will be

* 0 since no piece of kernel is supposed to do a check

* for a negative retval of schedule_timeout() (since it

* should never happens anyway). You just have the printk()

* that will tell you if something is gone wrong and where.

*/

if (timeout < 0) {

printk(KERN_ERR "schedule_timeout: wrong timeout "

"value %lx\n", timeout);

dump_stack();

current->state = TASK_RUNNING;

goto out;

}

}

expire = timeout + jiffies;

setup_timer_on_stack(&timer, process_timeout, (unsigned long)current);

__mod_timer(&timer, expire, false, TIMER_NOT_PINNED);

schedule();

del_singleshot_timer_sync(&timer);

/* Remove the timer from the object tracker */

destroy_timer_on_stack(&timer);

timeout = expire - jiffies;

out:

return timeout < 0 ? 0 : timeout;

}

EXPORT_SYMBOL(schedule_timeout);

九、内存管理

1、页

// include/linux/mm_types.h

struct page {

unsigned long flags; /* Atomic flags, some possibly

* updated asynchronously */

atomic_t _count; /* Usage count, see below. */

union {

atomic_t _mapcount; /* Count of ptes mapped in mms,

* to show when page is mapped

* & limit reverse map searches.

*/

struct { /* SLUB */

u16 inuse;

u16 objects;

};

};

union {

struct {

unsigned long private; /* Mapping-private opaque data:

* usually used for buffer_heads

* if PagePrivate set; used for

* swp_entry_t if PageSwapCache;

* indicates order in the buddy

* system if PG_buddy is set.

*/

struct address_space *mapping; /* If low bit clear, points to

* inode address_space, or NULL.

* If page mapped as anonymous

* memory, low bit is set, and

* it points to anon_vma object:

* see PAGE_MAPPING_ANON below.

*/

};

#if USE_SPLIT_PTLOCKS

spinlock_t ptl;

#endif

struct kmem_cache *slab; /* SLUB: Pointer to slab */

struct page *first_page; /* Compound tail pages */

};

union {

pgoff_t index; /* Our offset within mapping. */

void *freelist; /* SLUB: freelist req. slab lock */

};

struct list_head lru; /* Pageout list, eg. active_list

* protected by zone->lru_lock !

*/

/*

* On machines where all RAM is mapped into kernel address space,

* we can simply calculate the virtual address. On machines with

* highmem some memory is mapped into kernel virtual memory

* dynamically, so we need a place to store that address.

* Note that this field could be 16 bits on x86 ... ;)

*

* Architectures with slow multiplication can define

* WANT_PAGE_VIRTUAL in asm/page.h

*/

#if defined(WANT_PAGE_VIRTUAL)

void *virtual; /* Kernel virtual address (NULL if

not kmapped, ie. highmem) */

#endif /* WANT_PAGE_VIRTUAL */

#ifdef CONFIG_WANT_PAGE_DEBUG_FLAGS

unsigned long debug_flags; /* Use atomic bitops on this */

#endif

#ifdef CONFIG_KMEMCHECK

/*

* kmemcheck wants to track the status of each byte in a page; this

* is a pointer to such a status block. NULL if not tracked.

*/

void *shadow;

#endif

};

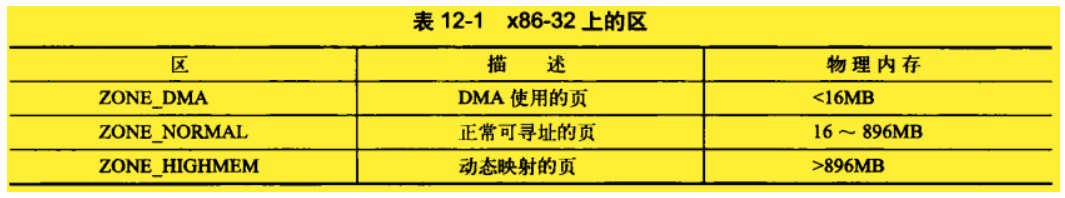

2、区

3、页操作

函数定义在文件 include/linux/gfp.h 中

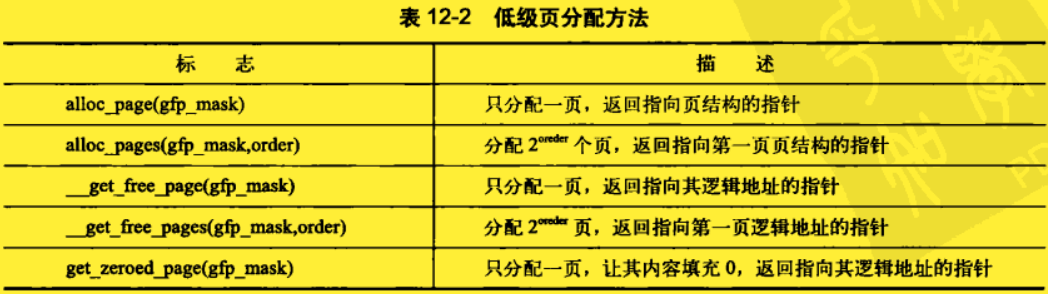

(1)获取页

(2)释放页

extern void __free_pages(struct page *page, unsigned int order);

extern void free_pages(unsigned long addr, unsigned int order);

extern void free_hot_cold_page(struct page *page, int cold);

#define __free_page(page) __free_pages((page), 0)

#define free_page(addr) free_pages((addr),0)

4、kmalloc 函数

kmalloc() 函数与用户空间的 malloc() 一族函数非常类似,只不过它多了一个 flags 参数。它可以获得以字节为单位的一块内核内存。

// include/linux/slab.h

#ifdef CONFIG_SLUB

#include <linux/slub_def.h>

#elif defined(CONFIG_SLOB)

#include <linux/slob_def.h>

#else

#include <linux/slab_def.h>

#endif

static inline void *kcalloc(size_t n, size_t size, gfp_t flags)

{

if (size != 0 && n > ULONG_MAX / size)

return NULL;

return __kmalloc(n * size, flags | __GFP_ZERO);

}

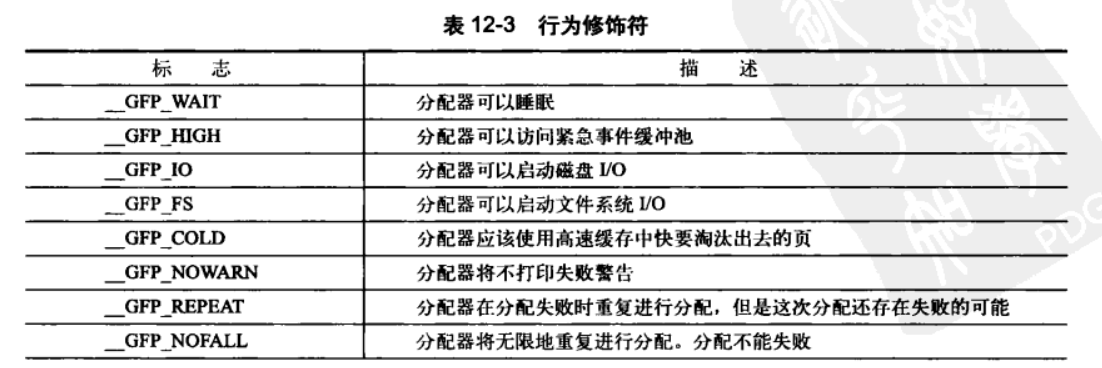

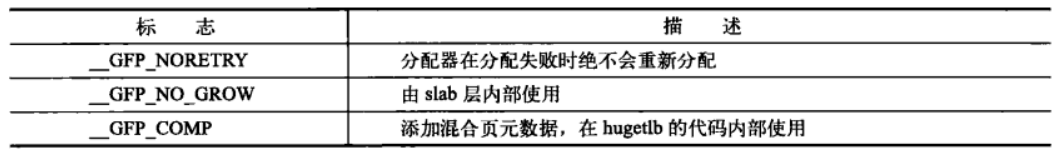

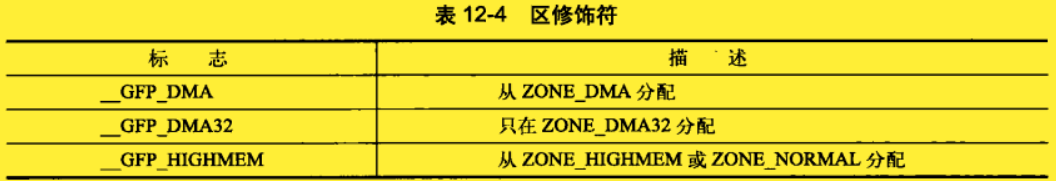

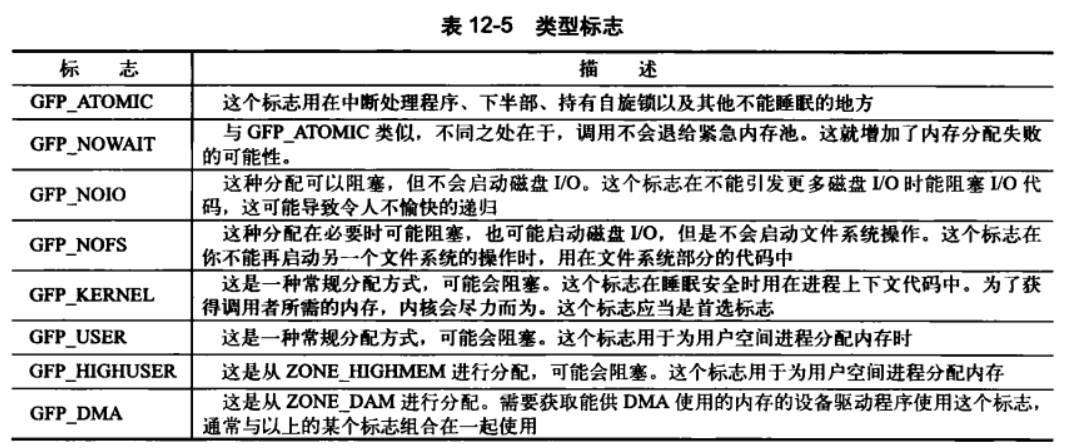

(1)gfp_t flags

- 行为修饰符

- 区修饰符

- 类型标志

(2)kmalloc 函数分析

- kmalloc 函数

// include/linux/slab_def.h

static __always_inline void *kmalloc(size_t size, gfp_t flags)

{

struct kmem_cache *cachep;

void *ret;

if (__builtin_constant_p(size)) {

int i = 0;

if (!size)

return ZERO_SIZE_PTR;

#define CACHE(x) \

if (size <= x) \

goto found; \

else \

i++;

#include <linux/kmalloc_sizes.h>

#undef CACHE

return NULL;

found:

#ifdef CONFIG_ZONE_DMA

if (flags & GFP_DMA)

cachep = malloc_sizes[i].cs_dmacachep;

else

#endif

cachep = malloc_sizes[i].cs_cachep;

ret = kmem_cache_alloc_notrace(cachep, flags);

trace_kmalloc(_THIS_IP_, ret,

size, slab_buffer_size(cachep), flags);

return ret;

}

return __kmalloc(size, flags);

}

- __kmalloc 函数

// mm/slab.c

void *__kmalloc(size_t size, gfp_t flags)

{

return __do_kmalloc(size, flags, NULL);

}

EXPORT_SYMBOL(__kmalloc);

- __do_kmalloc 函数

static __always_inline void *__do_kmalloc(size_t size, gfp_t flags,

void *caller)

{

struct kmem_cache *cachep;

void *ret;

/* If you want to save a few bytes .text space: replace

* __ with kmem_.

* Then kmalloc uses the uninlined functions instead of the inline

* functions.

*/

cachep = __find_general_cachep(size, flags);

if (unlikely(ZERO_OR_NULL_PTR(cachep)))

return cachep;

ret = __cache_alloc(cachep, flags, caller);

trace_kmalloc((unsigned long) caller, ret,

size, cachep->buffer_size, flags);

return ret;

}

- __cache_alloc 函数

static __always_inline void *

__cache_alloc(struct kmem_cache *cachep, gfp_t flags, void *caller)

{

unsigned long save_flags;

void *objp;

flags &= gfp_allowed_mask;

lockdep_trace_alloc(flags);

if (slab_should_failslab(cachep, flags))

return NULL;

cache_alloc_debugcheck_before(cachep, flags);

local_irq_save(save_flags);

objp = __do_cache_alloc(cachep, flags);

local_irq_restore(save_flags);

objp = cache_alloc_debugcheck_after(cachep, flags, objp, caller);

kmemleak_alloc_recursive(objp, obj_size(cachep), 1, cachep->flags,

flags);

prefetchw(objp);

if (likely(objp))

kmemcheck_slab_alloc(cachep, flags, objp, obj_size(cachep));

if (unlikely((flags & __GFP_ZERO) && objp))

memset(objp, 0, obj_size(cachep));

return objp;

}

5、vmalloc 函数

vmalloc 函数的工作方式类似于 kmalloc(),只不过 vmalloc 分配的内存虚拟地址是连续的,而物理地址则无须连续。

// include/linux/vmalloc.h

void *vmalloc(unsigned long size);

// mm/vmalloc.c

void *vmalloc(unsigned long size)

{

return __vmalloc_node(size, 1, GFP_KERNEL | __GFP_HIGHMEM, PAGE_KERNEL,

-1, __builtin_return_address(0));

}

EXPORT_SYMBOL(vmalloc);

6、slab

(1)kmem_cache 结构体

slab 根据配置不同,引用了不同的文件,具体如下:

#ifdef CONFIG_SLUB

#include <linux/slub_def.h>

#elif defined(CONFIG_SLOB)

#include <linux/slob_def.h>

#else

#include <linux/slab_def.h>

#endif

以下列举的是文件 slab_def.h 的信息。

// include/linux/slab_def.h

struct kmem_cache {

/* 1) per-cpu data, touched during every alloc/free */

struct array_cache *array[NR_CPUS];

/* 2) Cache tunables. Protected by cache_chain_mutex */

unsigned int batchcount;

unsigned int limit;

unsigned int shared;

unsigned int buffer_size;

u32 reciprocal_buffer_size;

/* 3) touched by every alloc & free from the backend */

unsigned int flags; /* constant flags */

unsigned int num; /* # of objs per slab */

/* 4) cache_grow/shrink */

/* order of pgs per slab (2^n) */

unsigned int gfporder;

/* force GFP flags, e.g. GFP_DMA */

gfp_t gfpflags;

size_t colour; /* cache colouring range */

unsigned int colour_off; /* colour offset */

struct kmem_cache *slabp_cache;

unsigned int slab_size;

unsigned int dflags; /* dynamic flags */

/* constructor func */

void (*ctor)(void *obj);

/* 5) cache creation/removal */

const char *name;

struct list_head next;

/* 6) statistics */

#ifdef CONFIG_DEBUG_SLAB

unsigned long num_active;

unsigned long num_allocations;

unsigned long high_mark;

unsigned long grown;

unsigned long reaped;

unsigned long errors;

unsigned long max_freeable;

unsigned long node_allocs;

unsigned long node_frees;

unsigned long node_overflow;

atomic_t allochit;

atomic_t allocmiss;

atomic_t freehit;

atomic_t freemiss;

/*

* If debugging is enabled, then the allocator can add additional

* fields and/or padding to every object. buffer_size contains the total

* object size including these internal fields, the following two

* variables contain the offset to the user object and its size.

*/

int obj_offset;

int obj_size;

#endif /* CONFIG_DEBUG_SLAB */

/*

* We put nodelists[] at the end of kmem_cache, because we want to size

* this array to nr_node_ids slots instead of MAX_NUMNODES

* (see kmem_cache_init())

* We still use [MAX_NUMNODES] and not [1] or [0] because cache_cache

* is statically defined, so we reserve the max number of nodes.

*/

struct kmem_list3 *nodelists[MAX_NUMNODES];

/*

* Do not add fields after nodelists[]

*/

};

kmem_list3 结构体

// mm/slab.c

struct kmem_list3 {

struct list_head slabs_partial; /* partial list first, better asm code */

struct list_head slabs_full;

struct list_head slabs_free;

unsigned long free_objects;

unsigned int free_limit;

unsigned int colour_next; /* Per-node cache coloring */

spinlock_t list_lock;

struct array_cache *shared; /* shared per node */

struct array_cache **alien; /* on other nodes */

unsigned long next_reap; /* updated without locking */

int free_touched; /* updated without locking */

};

(2)slab 结构体

// mm/slab.c

struct slab {

struct list_head list;

unsigned long colouroff;

void *s_mem; /* including colour offset */

unsigned int inuse; /* num of objs active in slab */

kmem_bufctl_t free;

unsigned short nodeid;

};

(3)函数

// mm/slab.c

static void *kmem_getpages(struct kmem_cache *cachep, gfp_t flags, int nodeid);

struct kmem_cache *

kmem_cache_create (const char *name, size_t size, size_t align,

unsigned long flags, void (*ctor)(void *));

void kmem_cache_destroy(struct kmem_cache *cachep);

void *kmem_cache_alloc(struct kmem_cache *cachep, gfp_t flags);

void kmem_cache_free(struct kmem_cache *cachep, void *objp);

7、栈

内核栈一般为 8K,可动态配置,范围为 4~16K。当 1 页栈激活,中断处理程序获得自己的栈(中断栈),不再使用内核栈。

8、高端内存映射

// include/linux/highmem.h

static inline void *kmap(struct page *page)

{

might_sleep();

return page_address(page);

}

static inline void kunmap(struct page *page)

{

}

static inline void *kmap_atomic(struct page *page, enum km_type idx);

#define kunmap_atomic(addr, idx) do { pagefault_enable(); } while (0)

9、分配函数的选择

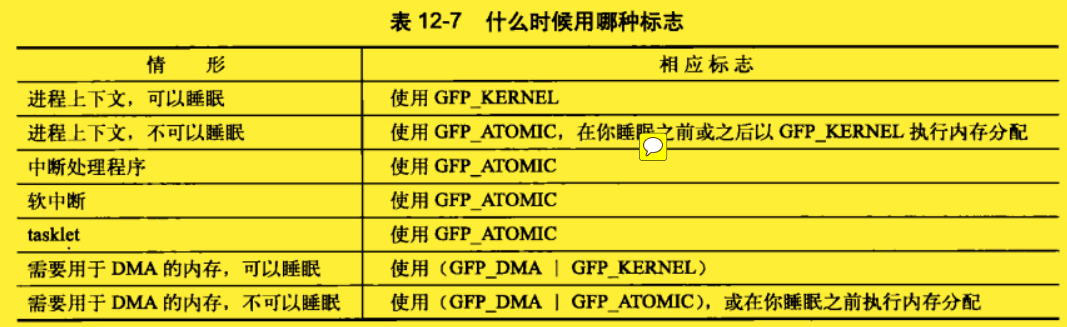

在这么多分配函数和方法中,有时并不能搞清楚到底该选择那种方式分配——但这确实很重要。如果你需要连续的物理页,就可以使用某个低级页分配器或 kmalloc()。这是内核中内存分配的常用方式,也是大多数情况下你自己应该使用的内存分配方式。回忆一下,传递给这些函数的两个最常用的标志是 GFP_ATOMIC 和 GFP_KERNEL。GFP_ATOMIC 表示进行不睡眠的高优先级分配,这是中断处理程序和其他不能睡眠的代码段的需要。对于可以睡眠的代码,(比如没有持自旋锁的进程上下文代码)则应该使用 GFP_KERNEL获取所需的内存。这个标志表示如果有必要,分配时可以睡眠。

如果你想从高端内存进行分配,就使用 alloc_pages()。alloc_pages() 函数返回一个指向 struct page 结构的指针,而不是一个指向某个逻辑地址的指针。因为高端内存很可能并没有被映射,因此,访问它的唯一方式就是通过相应的 struct page 结构。为了获得真正的指针,应该调用 kmap(),把高端内存映射到内核的逻辑地址空间。

如果你不需要物理上连续的页,而仅仅需要虚拟地址上连续的页,那么就使用 vmalloc()(不过要记住 vmalloc() 相对 kmalloc() 来说,有一定的性能损失)。vmalloc() 函数分配的内存虚地址是连续的,但它本身并不保证物理上的连续。这与用户空间的分配非常类似,它也是把物理内存块映射到连续的逻辑地址空间上。

如果你要创建和撤销很多大的数据结构,那么考虑建立 slab 高速缓存。slab 层会给每个处理器维持一个对象高速缓存(空闲链表),这种高速缓存会极大地提高对象分配和回收的性能。slab 层不是频繁地分配和释放内存,而是为你把事先分配好的对象存放到高速缓存中。当你需要一块新的内存来存放数据结构时,slab 层一般无须另外去分配内存,而只需要从高速缓存中得到一个对象就可以了。

十、虚拟文件系统

1、VFS

VFS中有四个主要的对象类型,它们分别是:

- 超级块对象,它代表一个具体的已安装文件系统。

- 索引节点对象,它代表一个具体文件。

- 目录项对象,它代表一个目录项,是路径的一个组成部分。

- 文件对象,它代表由进程打开的文件。

2、超级块

(1)super_block 结构体

// include/linux/fs.h

struct super_block {

/* 指向所有超级块的链表 */

struct list_head s_list; /* Keep this first */

/* 设备标识符 */

dev_t s_dev; /* search index; _not_ kdev_t */

/* 修改(脏)标志 */

unsigned char s_dirt;

/* 以位为单位的块大小 */

unsigned char s_blocksize_bits;

/* 以字节为单位的块大小 */

unsigned long s_blocksize;

/* 文件大小上限 */

loff_t s_maxbytes; /* Max file size */

/* 文件系统类型 */

struct file_system_type *s_type;

/* 超级块方法 */

const struct super_operations *s_op;

/* 磁盘限额方法 */

const struct dquot_operations *dq_op;

/* 限额控制方法 */

const struct quotactl_ops *s_qcop;

/* 导出方法 */

const struct export_operations *s_export_op;

/* 挂载标志 */

unsigned long s_flags;

/* 文件系统的幻数 */

unsigned long s_magic;

/* 目录挂载点 */

struct dentry *s_root;

/* 卸载信号量 */

struct rw_semaphore s_umount;

/* 超级块互斥体 */

struct mutex s_lock;

/* 超级块引用计数 */

int s_count;

/* 尚未同步标志 */

int s_need_sync;

/* 活动引用计数 */

atomic_t s_active;

#ifdef CONFIG_SECURITY

/* 安全模块 */

void *s_security;

#endif

/* 扩展的属性操作 */

struct xattr_handler **s_xattr;

/* inodes 链表 */

struct list_head s_inodes; /* all inodes */

/* 匿名目录项 */

struct hlist_head s_anon; /* anonymous dentries for (nfs) exporting */

/* 被分配文件链表 */

struct list_head s_files;

/* s_dentry_lru and s_nr_dentry_unused are protected by dcache_lock */

/* 未被使用目录项链表 */

struct list_head s_dentry_lru; /* unused dentry lru */

/* 链表中目录项的数目 */

int s_nr_dentry_unused; /* # of dentry on lru */

/* 相关的块设备 */

struct block_device *s_bdev;

/* */

struct backing_dev_info *s_bdi;

/* 存储磁盘信息 */

struct mtd_info *s_mtd;

/* 该类型文件系统 */

struct list_head s_instances;

/* 限额相关选项 */

struct quota_info s_dquot; /* Diskquota specific options */

/* frozen 标志位 */

int s_frozen;

/* 冻结的等待队列 */

wait_queue_head_t s_wait_unfrozen;

/* 文本名字 */

char s_id[32]; /* Informational name */

/* 文件系统特殊信息 */

void *s_fs_info; /* Filesystem private info */

/* 安装权限 */

fmode_t s_mode;

/* 时间戳粒度 */

/* Granularity of c/m/atime in ns.

Cannot be worse than a second */

u32 s_time_gran;

/*

* The next field is for VFS *only*. No filesystems have any business

* even looking at it. You had been warned.

*/

/* */

struct mutex s_vfs_rename_mutex; /* Kludge */

/*

* Filesystem subtype. If non-empty the filesystem type field

* in /proc/mounts will be "type.subtype"

*/

/* 子类型名称 */

char *s_subtype;

/*

* Saved mount options for lazy filesystems using

* generic_show_options()

*/

/* 已存安装选项 */

char *s_options;

};

(2)super_operations 结构体

struct super_operations {

struct inode *(*alloc_inode)(struct super_block *sb);

void (*destroy_inode)(struct inode *);

void (*dirty_inode) (struct inode *);

int (*write_inode) (struct inode *, struct writeback_control *wbc);

void (*drop_inode) (struct inode *);

void (*delete_inode) (struct inode *);

void (*put_super) (struct super_block *);

void (*write_super) (struct super_block *);

int (*sync_fs)(struct super_block *sb, int wait);

int (*freeze_fs) (struct super_block *);

int (*unfreeze_fs) (struct super_block *);

int (*statfs) (struct dentry *, struct kstatfs *);

int (*remount_fs) (struct super_block *, int *, char *);

void (*clear_inode) (struct inode *);

void (*umount_begin) (struct super_block *);

int (*show_options)(struct seq_file *, struct vfsmount *);

int (*show_stats)(struct seq_file *, struct vfsmount *);

#ifdef CONFIG_QUOTA

ssize_t (*quota_read)(struct super_block *, int, char *, size_t, loff_t);

ssize_t (*quota_write)(struct super_block *, int, const char *, size_t, loff_t);

#endif

int (*bdev_try_to_free_page)(struct super_block*, struct page*, gfp_t);

};

3、索引节点

(1)inode 结构体

// include/linux/fs.h

struct inode {

/* 散列表 */

struct hlist_node i_hash;

/* 索引节点链表 */

struct list_head i_list; /* backing dev IO list */

/* 超级块链表 */

struct list_head i_sb_list;

/* 目录项链表 */

struct list_head i_dentry;

/* 节点号 */

unsigned long i_ino;

/* 引用计数 */

atomic_t i_count;

/* 硬链接数 */

unsigned int i_nlink;

/* 使用者的 id */

uid_t i_uid;

/* 使用组的 id */

gid_t i_gid;

/* 实际设备标识符 */

dev_t i_rdev;

/* 以位为单位的块大小 */

unsigned int i_blkbits;

/* 版本号 */

u64 i_version;

/* 以字节为单位的文件大小 */

loff_t i_size;

#ifdef __NEED_I_SIZE_ORDERED

/* 对 i_size 进行串行计数 */

seqcount_t i_size_seqcount;

#endif

/* 最后访问时间 */

struct timespec i_atime;

/* 最后修改时间 */

struct timespec i_mtime;

/* 最后改变时间 */

struct timespec i_ctime;

/* 文件的块数 */

blkcnt_t i_blocks;

/* 使用的字节数 */

unsigned short i_bytes;

/* 访问权限 */

umode_t i_mode;

/* 自旋锁 */

spinlock_t i_lock; /* i_blocks, i_bytes, maybe i_size */

/* */

struct mutex i_mutex;

/* 嵌入 i_sem 内部 */

struct rw_semaphore i_alloc_sem;

/* 索引节点操作表 */

const struct inode_operations *i_op;

/* 缺省的索引节点操作 */

const struct file_operations *i_fop; /* former ->i_op->default_file_ops */

/* 相关的超级块 */

struct super_block *i_sb;

/* 文件锁链表 */

struct file_lock *i_flock;

/* 相关的地址映射 */

struct address_space *i_mapping;

/* 设备地址映射 */

struct address_space i_data;

#ifdef CONFIG_QUOTA

/* 索引节点的磁盘限额 */

struct dquot *i_dquot[MAXQUOTAS];

#endif

/* 块设备链表 */

struct list_head i_devices;

union {

/* 管道信息 */

struct pipe_inode_info *i_pipe;

/* 块设备驱动 */

struct block_device *i_bdev;

/* 字符设备驱动 */

struct cdev *i_cdev;

};

/* */

__u32 i_generation;

#ifdef CONFIG_FSNOTIFY

/* */

__u32 i_fsnotify_mask; /* all events this inode cares about */

/* */

struct hlist_head i_fsnotify_mark_entries; /* fsnotify mark entries */

#endif

#ifdef CONFIG_INOTIFY

/* 索引节点通知监测链表 */

struct list_head inotify_watches; /* watches on this inode */

/* 保护 inotify_watches */

struct mutex inotify_mutex; /* protects the watches list */

#endif

/* 状态标志 */

unsigned long i_state;

/* 第一次弄脏数据的时间 */

unsigned long dirtied_when; /* jiffies of first dirtying */

/* 文件系统标志 */

unsigned int i_flags;

/* 写者计数 */

atomic_t i_writecount;

#ifdef CONFIG_SECURITY

/* 安全模块 */

void *i_security;

#endif

#ifdef CONFIG_FS_POSIX_ACL

/* */

struct posix_acl *i_acl;

/* */

struct posix_acl *i_default_acl;

#endif

/* fs 私有指针 */

void *i_private; /* fs or device private pointer */

};

(2)inode_operations结构体

struct inode_operations {

int (*create) (struct inode *,struct dentry *,int, struct nameidata *);

struct dentry * (*lookup) (struct inode *,struct dentry *, struct nameidata *);

int (*link) (struct dentry *,struct inode *,struct dentry *);

int (*unlink) (struct inode *,struct dentry *);

int (*symlink) (struct inode *,struct dentry *,const char *);

int (*mkdir) (struct inode *,struct dentry *,int);

int (*rmdir) (struct inode *,struct dentry *);

int (*mknod) (struct inode *,struct dentry *,int,dev_t);

int (*rename) (struct inode *, struct dentry *,

struct inode *, struct dentry *);

int (*readlink) (struct dentry *, char __user *,int);

void * (*follow_link) (struct dentry *, struct nameidata *);

void (*put_link) (struct dentry *, struct nameidata *, void *);

void (*truncate) (struct inode *);

int (*permission) (struct inode *, int);

int (*check_acl)(struct inode *, int);

int (*setattr) (struct dentry *, struct iattr *);

int (*getattr) (struct vfsmount *mnt, struct dentry *, struct kstat *);

int (*setxattr) (struct dentry *, const char *,const void *,size_t,int);

ssize_t (*getxattr) (struct dentry *, const char *, void *, size_t);

ssize_t (*listxattr) (struct dentry *, char *, size_t);

int (*removexattr) (struct dentry *, const char *);

void (*truncate_range)(struct inode *, loff_t, loff_t);

long (*fallocate)(struct inode *inode, int mode, loff_t offset,

loff_t len);

int (*fiemap)(struct inode *, struct fiemap_extent_info *, u64 start,

u64 len);

};

4、目录项

(1)dentry 结构体

// include/linux/dcache.h

struct dentry {

/* 使用记数 */

atomic_t d_count;

/* 目录项标识 */

unsigned int d_flags; /* protected by d_lock */

/* 单目录项锁 */

spinlock_t d_lock; /* per dentry lock */

/* 是登录点的目录项吗? */

int d_mounted;

/* 相关联的索引节点 */

struct inode *d_inode; /* Where the name belongs to - NULL is

* negative */

/*

* The next three fields are touched by __d_lookup. Place them here

* so they all fit in a cache line.

*/

/* 散列表 */

struct hlist_node d_hash; /* lookup hash list */

/* 父目录的目录项对象 */

struct dentry *d_parent; /* parent directory */

/* 目录项名称 */

struct qstr d_name;

/* 未使用的链表 */

struct list_head d_lru; /* LRU list */

/*

* d_child and d_rcu can share memory

*/

union {

/* 目录项内部形成的链表 */

struct list_head d_child; /* child of parent list */

/* RCU 加锁 */

struct rcu_head d_rcu;

} d_u;

/* 子目录链表 */

struct list_head d_subdirs; /* our children */

/* 索引节点别名链表 */

struct list_head d_alias; /* inode alias list */

/* 重置时间 */

unsigned long d_time; /* used by d_revalidate */

/* 目录项操作指针 */

const struct dentry_operations *d_op;

/* 文件的超级块 */

struct super_block *d_sb; /* The root of the dentry tree */

/* 文件系统特有数据 */

void *d_fsdata; /* fs-specific data */

/* 短文件名 */

unsigned char d_iname[DNAME_INLINE_LEN_MIN]; /* small names */

};

(2)super_operations 结构体

// include/linux/dcache.h

struct dentry_operations {

int (*d_revalidate)(struct dentry *, struct nameidata *);

int (*d_hash) (struct dentry *, struct qstr *);

int (*d_compare) (struct dentry *, struct qstr *, struct qstr *);

int (*d_delete)(struct dentry *);

void (*d_release)(struct dentry *);

void (*d_iput)(struct dentry *, struct inode *);

char *(*d_dname)(struct dentry *, char *, int);

};

5、文件

(1)file 结构体

// include/linux/fs.h

struct file {

/*

* fu_list becomes invalid after file_free is called and queued via

* fu_rcuhead for RCU freeing

*/

union {

/* 文件对象链表 */

struct list_head fu_list;

/* 释放之后的 RCU 链表 */

struct rcu_head fu_rcuhead;

} f_u;

/* 包含目录项 */

struct path f_path;

#define f_dentry f_path.dentry

#define f_vfsmnt f_path.mnt

/* 文件操作表 */

const struct file_operations *f_op;

/* 单个文件结构锁 */

spinlock_t f_lock; /* f_ep_links, f_flags, no IRQ */

/* 文件对象的使用计数 */

atomic_long_t f_count;

/* 当打开文件时所指定的标志 */

unsigned int f_flags;

/* 文件的访问模式 */

fmode_t f_mode;

/* 文件当前的位移量(文件指针) */

loff_t f_pos;

/* 拥有者通过信号进行异步 I/O 数据的传送 */

struct fown_struct f_owner;

/* 文件的信任状 */

const struct cred *f_cred;

/* 预读状态 */

struct file_ra_state f_ra;

/* 版本号 */

u64 f_version;

#ifdef CONFIG_SECURITY

/* 安全模块 */

void *f_security;

#endif

/* needed for tty driver, and maybe others */

/* tty 设备驱动的钩子 */

void *private_data;

#ifdef CONFIG_EPOLL

/* Used by fs/eventpoll.c to link all the hooks to this file */

/* 事件池链表 */

struct list_head f_ep_links;

#endif /* #ifdef CONFIG_EPOLL */

/* 页缓存映射 */

struct address_space *f_mapping;

#ifdef CONFIG_DEBUG_WRITECOUNT

/* 调试状态 */

unsigned long f_mnt_write_state;

#endif

};

(2)file_operations 结构体

// include/linux/fs.h

struct file_operations {

struct module *owner;

loff_t (*llseek) (struct file *, loff_t, int);

ssize_t (*read) (struct file *, char __user *, size_t, loff_t *);

ssize_t (*write) (struct file *, const char __user *, size_t, loff_t *);

ssize_t (*aio_read) (struct kiocb *, const struct iovec *, unsigned long, loff_t);

ssize_t (*aio_write) (struct kiocb *, const struct iovec *, unsigned long, loff_t);

int (*readdir) (struct file *, void *, filldir_t);

unsigned int (*poll) (struct file *, struct poll_table_struct *);

int (*ioctl) (struct inode *, struct file *, unsigned int, unsigned long);

long (*unlocked_ioctl) (struct file *, unsigned int, unsigned long);

long (*compat_ioctl) (struct file *, unsigned int, unsigned long);

int (*mmap) (struct file *, struct vm_area_struct *);

int (*open) (struct inode *, struct file *);

int (*flush) (struct file *, fl_owner_t id);

int (*release) (struct inode *, struct file *);

int (*fsync) (struct file *, struct dentry *, int datasync);

int (*aio_fsync) (struct kiocb *, int datasync);

int (*fasync) (int, struct file *, int);

int (*lock) (struct file *, int, struct file_lock *);

ssize_t (*sendpage) (struct file *, struct page *, int, size_t, loff_t *, int);

unsigned long (*get_unmapped_area)(struct file *, unsigned long, unsigned long, unsigned long, unsigned long);

int (*check_flags)(int);

int (*flock) (struct file *, int, struct file_lock *);

ssize_t (*splice_write)(struct pipe_inode_info *, struct file *, loff_t *, size_t, unsigned int);

ssize_t (*splice_read)(struct file *, loff_t *, struct pipe_inode_info *, size_t, unsigned int);

int (*setlease)(struct file *, long, struct file_lock **);

};

6、和文件系统相关的数据结构

(1)file_system_type 结构体

文件系统类型

// include/linux/fs.h

struct file_system_type {

/* 文件系统的名字 */

const char *name;

/* 文件系统类型标志 */

int fs_flags;

/* 用来从磁盘中读取超级块 */

int (*get_sb) (struct file_system_type *, int,

const char *, void *, struct vfsmount *);

/* 用来终止访问超级块 */

void (*kill_sb) (struct super_block *);

/* 文件系统模块 */

struct module *owner;

/* 链表中下一个文件系统类型 */

struct file_system_type * next;

/* 超级块对象链表 */

struct list_head fs_supers;

/* 剩下的几个字段运行时使锁生效 */

struct lock_class_key s_lock_key;

struct lock_class_key s_umount_key;

struct lock_class_key i_lock_key;

struct lock_class_key i_mutex_key;

struct lock_class_key i_mutex_dir_key;

struct lock_class_key i_alloc_sem_key;

};

(2)vfsmount 结构体

VFS 文件安装点

// include/linux/mount.h

struct vfsmount {

/* 散列表 */

struct list_head mnt_hash;

/* 父文件系统 */

struct vfsmount *mnt_parent; /* fs we are mounted on */

/* 安装点的目录项 */

struct dentry *mnt_mountpoint; /* dentry of mountpoint */

/* 该文件系统的根目录项 */

struct dentry *mnt_root; /* root of the mounted tree */

/* 该文件系统的超级块 */

struct super_block *mnt_sb; /* pointer to superblock */

/* 子文件系统链表 */

struct list_head mnt_mounts; /* list of children, anchored here */

/* 子文件系统链表 */

struct list_head mnt_child; /* and going through their mnt_child */

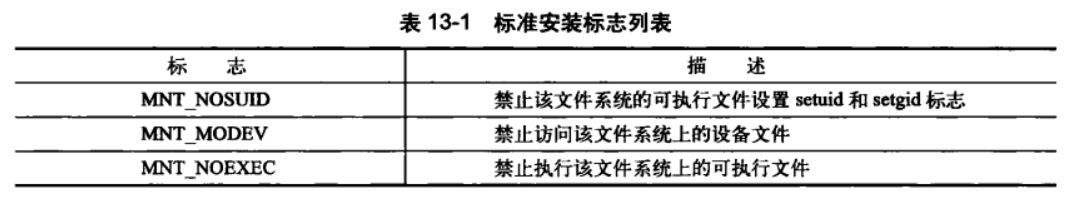

/* 安装标志 */

int mnt_flags;

/* 4 bytes hole on 64bits arches */

/* 设备文件名 */

const char *mnt_devname; /* Name of device e.g. /dev/dsk/hda1 */

/* 描述符链表 */

struct list_head mnt_list;

/* 在到期链表中的入口 */

struct list_head mnt_expire; /* link in fs-specific expiry list */

/* 在共享安装链表中的入口 */

struct list_head mnt_share; /* circular list of shared mounts */

/* 从安装链表 */

struct list_head mnt_slave_list;/* list of slave mounts */

/* 从安装链表中的入口 */

struct list_head mnt_slave; /* slave list entry */

/* 从安装链表的主入 */

struct vfsmount *mnt_master; /* slave is on master->mnt_slave_list */

/* 相关的命名空间 */

struct mnt_namespace *mnt_ns; /* containing namespace */

/* 安装标识符 */

int mnt_id; /* mount identifier */

/* 组标识符 */

int mnt_group_id; /* peer group identifier */

/*

* We put mnt_count & mnt_expiry_mark at the end of struct vfsmount

* to let these frequently modified fields in a separate cache line

* (so that reads of mnt_flags wont ping-pong on SMP machines)

*/

/* 使用计数 */

atomic_t mnt_count;

/* 如果标记为到期,则值为真 */

int mnt_expiry_mark; /* true if marked for expiry */

/* 钉住进程计数 */

int mnt_pinned;

/* 镜像引用计数 */

int mnt_ghosts;

#ifdef CONFIG_SMP

/* 写者引用计数 */

int __percpu *mnt_writers;

#else

/* 写者引用计数 */

int mnt_writers;

#endif

};

7、和进程相关的数据结构

(1)files_struct 结构体

该结构体由进程描述符中的 files 目录项指向。

// include/linux/fdtable.h

struct files_struct {

/*

* read mostly part

*/

/* 结构的使用计数 */

atomic_t count;

/* 指向其他 fd 表的指针 */

struct fdtable *fdt;

/* 基 fd 表 */

struct fdtable fdtab;

/*

* written part on a separate cache line in SMP

*/

/* 单个文件的锁? */

spinlock_t file_lock ____cacheline_aligned_in_smp;

/* 缓存下一个可用的 fd */

int next_fd;

/* exec() 时关闭的文件描述符链表 */

struct embedded_fd_set close_on_exec_init;

/* 打开的文件描述符链表 */

struct embedded_fd_set open_fds_init;

/* 缺省的文件对象数组 */

struct file * fd_array[NR_OPEN_DEFAULT];

};

(2)fs_struct 结构体

该结构体包含文件系统和进程相关的信息。

// include/linux/fs_struct.h

struct fs_struct {

int users; // 用户数目

rwlock_t lock; // 保护该结构体的锁

int umask; // 掩码

int in_exec; // 当前正在执行的文件

struct path root; // 跟目录路径

struct path pwd; // 当前工作目录的路径

};

(3)mnt_namespace 结构体

单进程命名空间,它使得每一个进程在系统中都看到唯一的安装文件系统——不仅是唯一的根目录,而且是唯一的文件系统层次结构。

// include/linux/mnt_namespace.h

struct mnt_namespace {

atomic_t count; // 结构的使用计数

struct vfsmount * root; // 根目录的安装点对象

struct list_head list; // 安装点链表

wait_queue_head_t poll; // 轮询的等待队列

int event; // 事件计数

};

十一、块 I/O 层

1、缓冲区

(1)buffer_head

// include/linux/buffer_head.h

struct buffer_head {

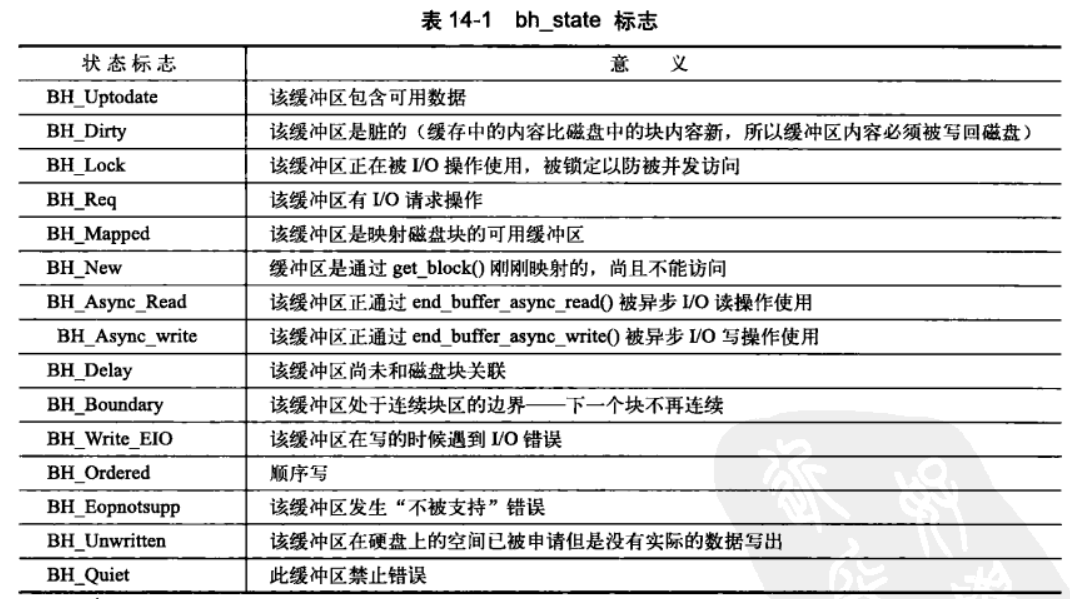

/* 缓冲区状态标志 */

unsigned long b_state; /* buffer state bitmap (see above) */

/* 页面中的缓冲区 */

struct buffer_head *b_this_page;/* circular list of page's buffers */

/* 存储缓冲区的页面 */