ZooKeeper is a pretty fun piece of software.

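It has a distinctive leader-election mechanism. In small and medium-sized clusters, ZooKeeper is usually installed on three nodes (an odd number, because the ensemble needs a majority of its servers to be up in order to work).

One of these nodes is in the leader state and handles all write requests. The other two are followers: they also serve client reads, and they forward writes to the leader.

Thanks to this election mechanism, if the leader's host goes down, the remaining followers can quickly elect a new leader among themselves and keep serving clients, as long as a majority of the ensemble is still running.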
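Why three nodes, and why an odd number? ZooKeeper needs a strict majority (a quorum) of the ensemble to elect a leader and commit writes. The sketch below only does that arithmetic. It is a back-of-the-envelope helper, not ZooKeeper code, and the function names are made up for illustration.

```python
def quorum_size(ensemble_size: int) -> int:
    """Smallest strict majority of an ensemble of the given size."""
    if ensemble_size < 1:
        raise ValueError("an ensemble needs at least one server")
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size: int) -> int:
    """How many servers may fail while a majority is still reachable."""
    return ensemble_size - quorum_size(ensemble_size)


for n in range(1, 6):
    print(f"{n} servers: quorum={quorum_size(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

A 3-node ensemble survives one failure, and a 4-node ensemble still survives only one. An extra even node therefore adds hardware without adding fault tolerance, which is why odd sizes are the norm.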

ZooKeeper's data model is a tree. Each node in the tree (a znode) stores metadata for other components.
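To make the tree model concrete, here is a toy in-memory namespace. It is a hypothetical illustration, not ZooKeeper's implementation. It keeps one behaviour of real ZooKeeper: a znode is addressed by an absolute path, and its parent must already exist before it can be created. The class name and the use of `KeyError` are assumptions made for the sketch.

```python
class ToyZNodeTree:
    """Toy model of a ZooKeeper-style hierarchical namespace (illustration only)."""

    def __init__(self):
        # Each znode is keyed by its absolute path; the root "/" always exists.
        self._data = {"/": b""}
        self._children = {"/": []}

    @staticmethod
    def _parent(path: str) -> str:
        return path.rsplit("/", 1)[0] or "/"

    def create(self, path: str, data: bytes = b"") -> None:
        if not path.startswith("/") or path == "/" or path.endswith("/"):
            raise ValueError(f"invalid path: {path!r}")
        if path in self._data:
            raise KeyError(f"node already exists: {path}")
        parent = self._parent(path)
        if parent not in self._data:
            # As in ZooKeeper, parents must be created before their children.
            raise KeyError(f"parent does not exist: {parent}")
        self._data[path] = data
        self._children[path] = []
        self._children[parent].append(path.rsplit("/", 1)[1])

    def get(self, path: str) -> bytes:
        return self._data[path]

    def ls(self, path: str) -> list:
        # Child names in creation order (real ZooKeeper does not promise an order).
        return list(self._children[path])


tree = ToyZNodeTree()
tree.create("/hbase")
tree.create("/hbase/rs")
tree.create("/hadoop-ha")
tree.create("/hadoop-ha/nameservice1")
print(tree.ls("/"))  # ['hbase', 'hadoop-ha']
```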

This post mainly covers what znodes do and how to use them, plus a few notes on ACLs.

# Go to the ZooKeeper bin directory

cd /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p5253.21605619/lib/zookeeper/bin/

Run the zkCli.sh script:

[root@cloud01 bin]# ./zkCli.sh

Connecting to localhost:2181

2024-04-07 11:29:08,782 [myid:] - INFO [main:Environment@100] - Client environment:zookeeper.version=3.4.5-cdh6.3.2–1, built on 02/01/2022 16:17 GMT

2024-04-07 11:29:08,786 [myid:] - INFO [main:Environment@100] - Client environment:host.name=cloud01

2024-04-07 11:29:08,786 [myid:] - INFO [main:Environment@100] - Client environment:java.version=1.8.0_161

2024-04-07 11:29:08,790 [myid:] - INFO [main:Environment@100] - Client environment:java.vendor=Oracle Corporation

2024-04-07 11:29:08,790 [myid:] - INFO [main:Environment@100] - Client environment:java.home=/usr/local/jdk8/jre

2024-04-07 11:29:08,790 [myid:] - INFO [main:Environment@100] - Client environment:java.class.path=/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p5253.21605619/lib/zookeeper/bin/…/build/classes:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p5253.21605619/lib/zookeeper/bin/…/build/lib/.jar:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p5253.21605619/lib/zookeeper/bin/…/lib/slf4j-log4j12.jar:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p5253.21605619/lib/zookeeper/bin/…/lib/slf4j-log4j12-1.7.25.jar:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p5253.21605619/lib/zookeeper/bin/…/lib/slf4j-api-1.7.25.jar:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p5253.21605619/lib/zookeeper/bin/…/lib/netty-3.10.6.Final.jar:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p5253.21605619/lib/zookeeper/bin/…/lib/log4j-1.2.17.jar:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p5253.21605619/lib/zookeeper/bin/…/lib/jline-2.11.jar:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p5253.21605619/lib/zookeeper/bin/…/lib/commons-cli-1.2.jar:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p5253.21605619/lib/zookeeper/bin/…/lib/audience-annotations-0.5.0.jar:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p5253.21605619/lib/zookeeper/bin/…/zookeeper-3.4.5-cdh6.3.2.jar:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p5253.21605619/lib/zookeeper/bin/…/src/java/lib/.jar:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p5253.21605619/lib/zookeeper/bin/…/conf:.:/usr/local/jdk8/lib:/usr/local/jdk8/jre/lib:

2024-04-07 11:29:08,790 [myid:] - INFO [main:Environment@100] - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2024-04-07 11:29:08,790 [myid:] - INFO [main:Environment@100] - Client environment:java.io.tmpdir=/tmp

2024-04-07 11:29:08,790 [myid:] - INFO [main:Environment@100] - Client environment:java.compiler=

2024-04-07 11:29:08,790 [myid:] - INFO [main:Environment@100] - Client environment:os.name=Linux

2024-04-07 11:29:08,791 [myid:] - INFO [main:Environment@100] - Client environment:os.arch=amd64

2024-04-07 11:29:08,791 [myid:] - INFO [main:Environment@100] - Client environment:os.version=3.10.0-1127.13.1.el7.x86_64

2024-04-07 11:29:08,791 [myid:] - INFO [main:Environment@100] - Client environment:user.name=root

2024-04-07 11:29:08,791 [myid:] - INFO [main:Environment@100] - Client environment:user.home=/root

2024-04-07 11:29:08,791 [myid:] - INFO [main:Environment@100] - Client environment:user.dir=/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p5253.21605619/lib/zookeeper/bin

2024-04-07 11:29:08,791 [myid:] - INFO [main:ZooKeeper@619] - Initiating client connection, connectString=localhost:2181 sessionTimeout=30000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@7506e922

Welcome to ZooKeeper!

2024-04-07 11:29:08,895 [myid:] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@1118] - Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

JLine support is enabled

2024-04-07 11:29:09,008 [myid:] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@962] - Socket connection established, initiating session, client: /127.0.0.1:33928, server: localhost/127.0.0.1:2181

2024-04-07 11:29:09,068 [myid:] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@1378] - Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0xff8ea1e469371d73, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0]
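zkCli.sh connects you to one server, but it does not tell you whether that server is the leader or a follower. ZooKeeper servers answer short "four-letter word" commands on the client port, and the reply to `srvr` includes a `Mode:` line (`leader`, `follower`, or `standalone`). The sketch below is a minimal version that assumes `srvr` is permitted. Newer releases restrict these commands through `4lw.commands.whitelist`, and `srvr` is allowed there by default. The helper names are hypothetical.

```python
import socket


def four_letter_word(host: str, port: int = 2181, cmd: bytes = b"srvr", timeout: float = 5.0) -> str:
    """Send a ZooKeeper four-letter command and return the server's whole reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(cmd)
        chunks = []
        # The server writes its reply and then closes the connection.
        while True:
            data = sock.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_mode(reply: str):
    """Return the value of the 'Mode:' line, or None if the reply has none."""
    for line in reply.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    return None
```

Usage: call `parse_mode(four_letter_word(host))` for each ZooKeeper host, for example `cloud01` plus the other two ensemble members, whose hostnames are not given here. A healthy three-node ensemble should report one `leader` and two `follower`s.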

List the znodes under the root:

[zk: localhost:2181(CONNECTED) 2] ls /

[cluster, controller, brokers, zookeeper, yarn-leader-election, hadoop-ha, admin, isr_change_notification, log_dir_event_notification, controller_epoch, rmstore, consumers, hive_zookeeper_namespace_hive, latest_producer_id_block, config, hbase, sentry]

So what role does ZooKeeper play in a CDH cluster?

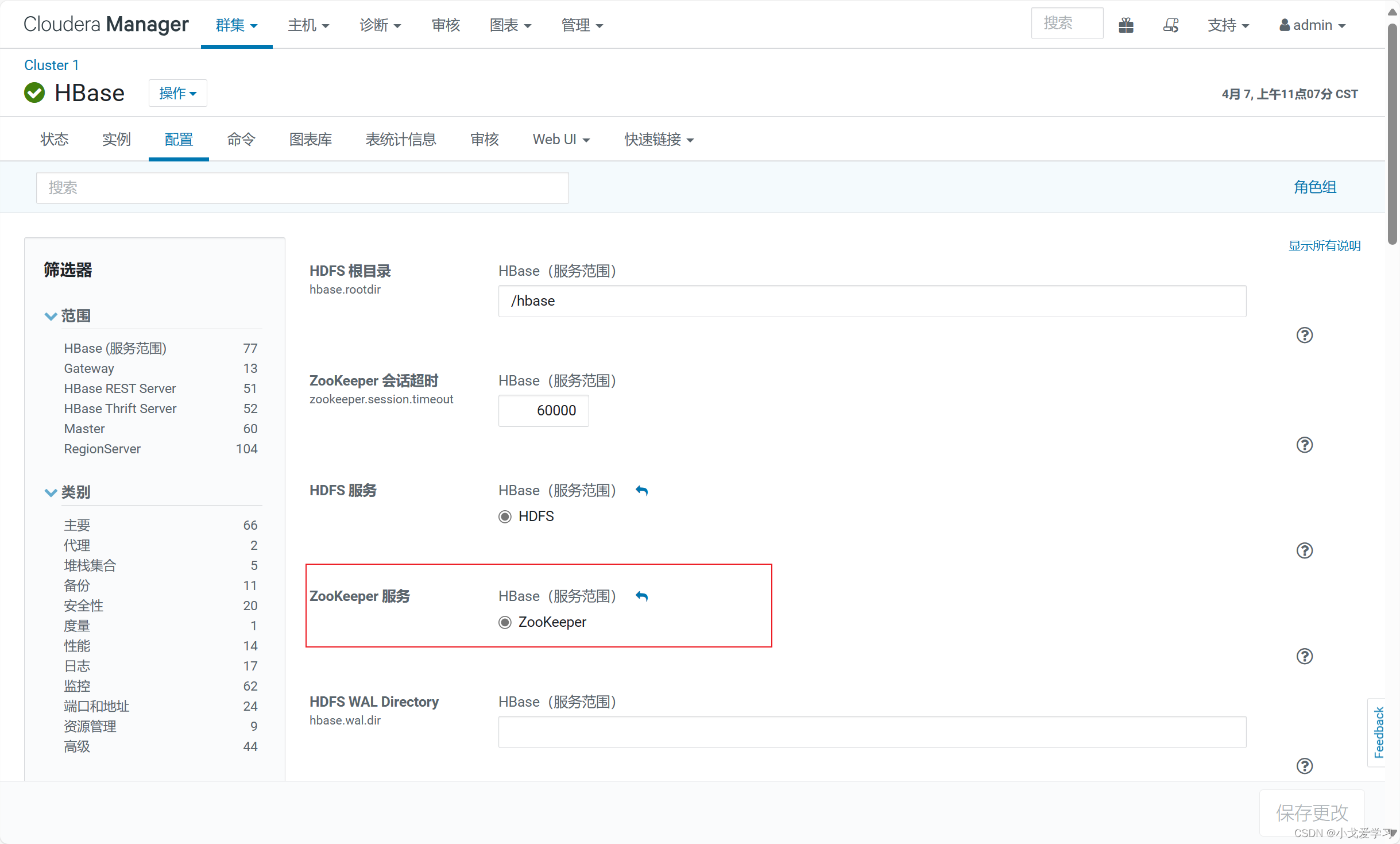

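This is the ZooKeeper setting in the HBase configuration.

ZooKeeper is HBase's coordination service. It stores the HBase cluster's state information, table information, and RegionServer information, normally under the /hbase znode: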

[zk: localhost:2181(CONNECTED) 3] ls /hbase

[meta-region-server, rs, splitWAL, backup-masters, table-lock, flush-table-proc, master-maintenance, online-snapshot, acl, switch, master, running, balancer, tokenauth, draining, namespace, hbaseid, table]

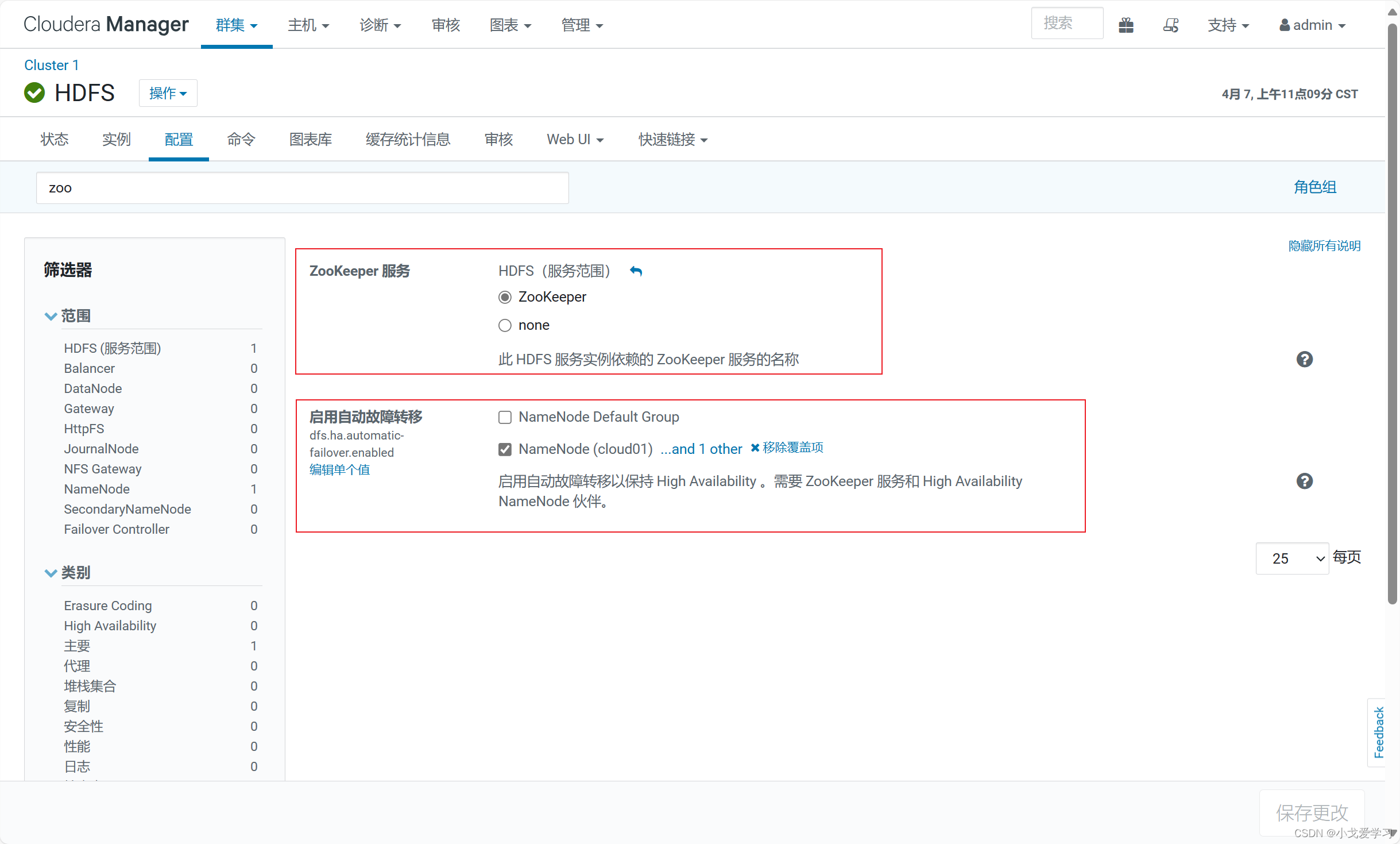

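This is the configuration in the HDFS service.

HDFS relies on ZooKeeper for its high availability (the ZKFC, ZKFailoverController, mechanism). Its znodes live under /hadoop-ha: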

[zk: localhost:2181(CONNECTED) 4] ls /

[cluster, controller, brokers, zookeeper, yarn-leader-election, hadoop-ha, admin, isr_change_notification, log_dir_event_notification, controller_epoch, rmstore, consumers, hive_zookeeper_namespace_hive, latest_producer_id_block, config, hbase, sentry]

[zk: localhost:2181(CONNECTED) 5] ls /hadoop-ha

[nameservice1]

[zk: localhost:2181(CONNECTED) 6]
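The ZKFC idea can be sketched with ephemeral znodes. Each NameNode's failover controller races to create the same ephemeral lock znode under `/hadoop-ha/nameservice1` (Hadoop names it `ActiveStandbyElectorLock`). Whoever succeeds becomes active. When that controller's session ends, ZooKeeper deletes the znode and the standby can take over. The code below is a toy simulation under those assumptions. `ToyZooKeeper`, `NodeExistsError` and `try_become_active` are hypothetical, and real ZKFC uses watches and fencing rather than a manual retry.

```python
class NodeExistsError(Exception):
    """Raised when creating a znode that already exists."""


class ToyZooKeeper:
    """Toy stand-in for ZooKeeper that only models ephemeral znodes."""

    def __init__(self):
        self.ephemeral_owner = {}  # znode path -> id of the session that created it

    def create_ephemeral(self, path: str, session_id: str) -> None:
        if path in self.ephemeral_owner:
            raise NodeExistsError(path)
        self.ephemeral_owner[path] = session_id

    def expire_session(self, session_id: str) -> None:
        # ZooKeeper deletes every ephemeral znode of a session when the session ends.
        for path in [p for p, s in self.ephemeral_owner.items() if s == session_id]:
            del self.ephemeral_owner[path]


LOCK = "/hadoop-ha/nameservice1/ActiveStandbyElectorLock"


def try_become_active(zk: ToyZooKeeper, namenode: str) -> bool:
    """Each controller tries to create the same lock znode; only one can win."""
    try:
        zk.create_ephemeral(LOCK, session_id=namenode)
        return True
    except NodeExistsError:
        return False


zk = ToyZooKeeper()
print(try_become_active(zk, "nn1"))  # True: nn1 becomes active
print(try_become_active(zk, "nn2"))  # False: nn2 stays standby
zk.expire_session("nn1")             # nn1's host dies and its session expires
print(try_become_active(zk, "nn2"))  # True: nn2 takes over
```

The same "create an ephemeral znode, and whoever succeeds wins" pattern reappears in the other components below.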

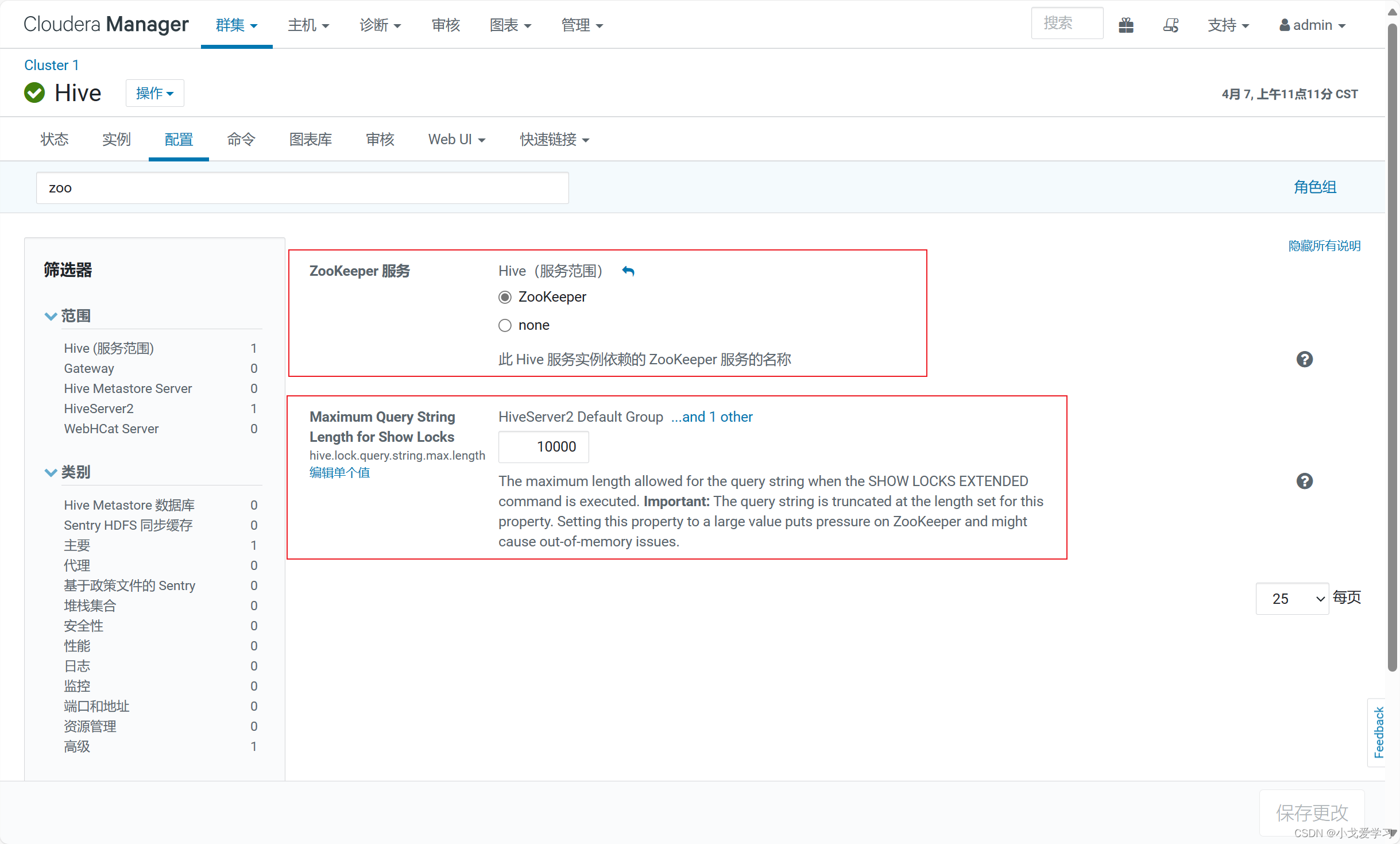

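This is the configuration in Hive.

Hive HA: when you set up a high-availability (HA) Hive environment, ZooKeeper can manage and coordinate Hive's primary and secondary nodes, keeping the Hive metadata service available and consistent.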

This is the configuration in Impala.

ZooKeeper can serve as a metadata store, helping Impala keep its metadata distributed and consistent.

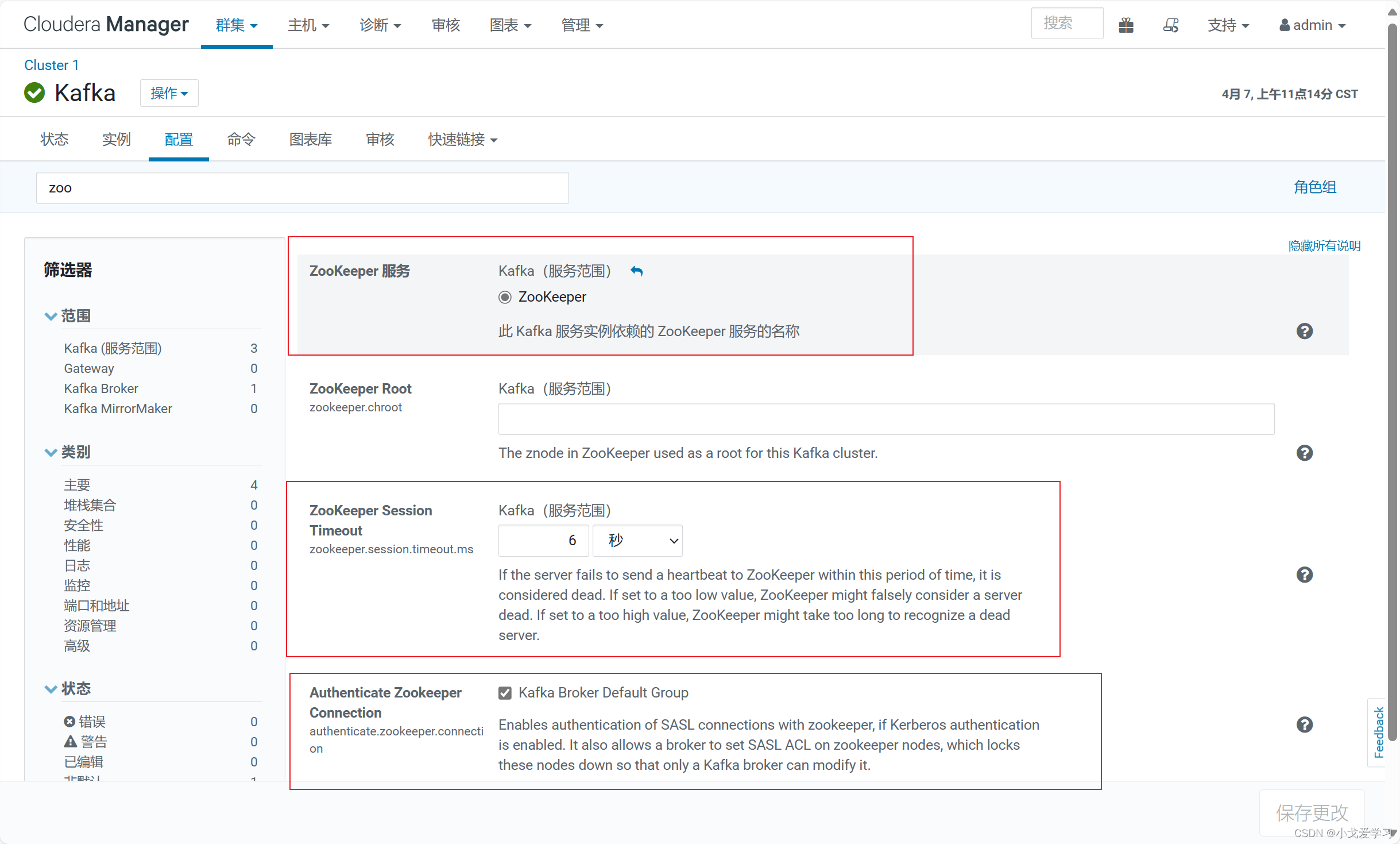

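This is the configuration in Kafka.

Here ZooKeeper stores Kafka's metadata (the brokers, config, consumers and related znodes in the `ls /` output above) and helps with leader election: the broker that creates the /controller znode becomes the controller.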
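In ZooKeeper-based Kafka, the broker that manages to create the ephemeral `/controller` znode becomes the controller, and the controller then handles partition leader election. Running `get /controller` in zkCli.sh shows a small JSON document. To my knowledge it carries a `brokerid` field. The sample string below is made up for illustration, so check the exact fields against your Kafka version.

```python
import json

# Hypothetical example of the data stored in /controller; the values are invented.
SAMPLE = '{"version":1,"brokerid":42,"timestamp":"1712460548000"}'


def controller_broker_id(znode_data: str) -> int:
    """Return the id of the broker that currently holds the controller role."""
    payload = json.loads(znode_data)
    return int(payload["brokerid"])


print(controller_broker_id(SAMPLE))  # 42
```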

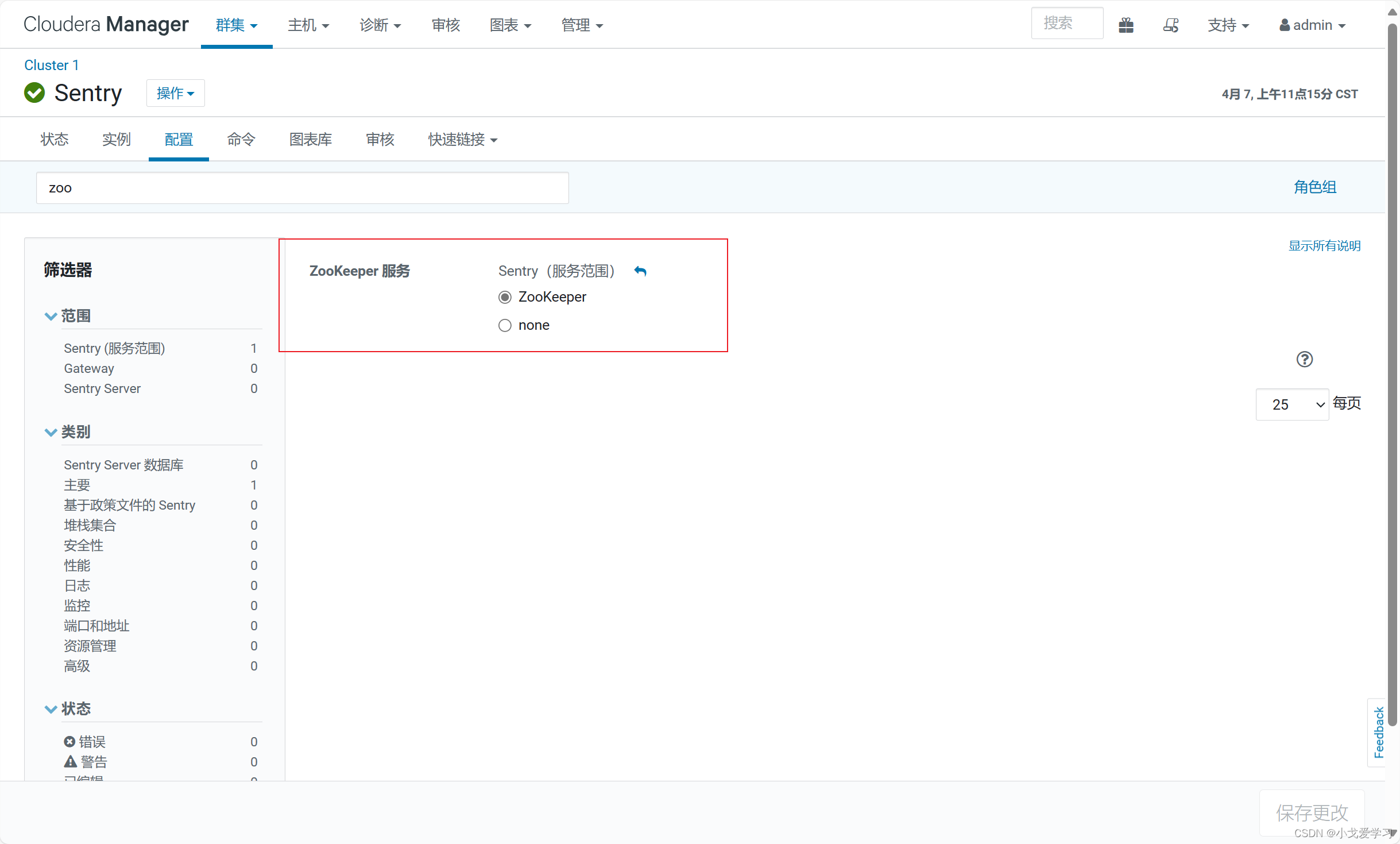

This is the configuration in Sentry.

Metadata storage and management.

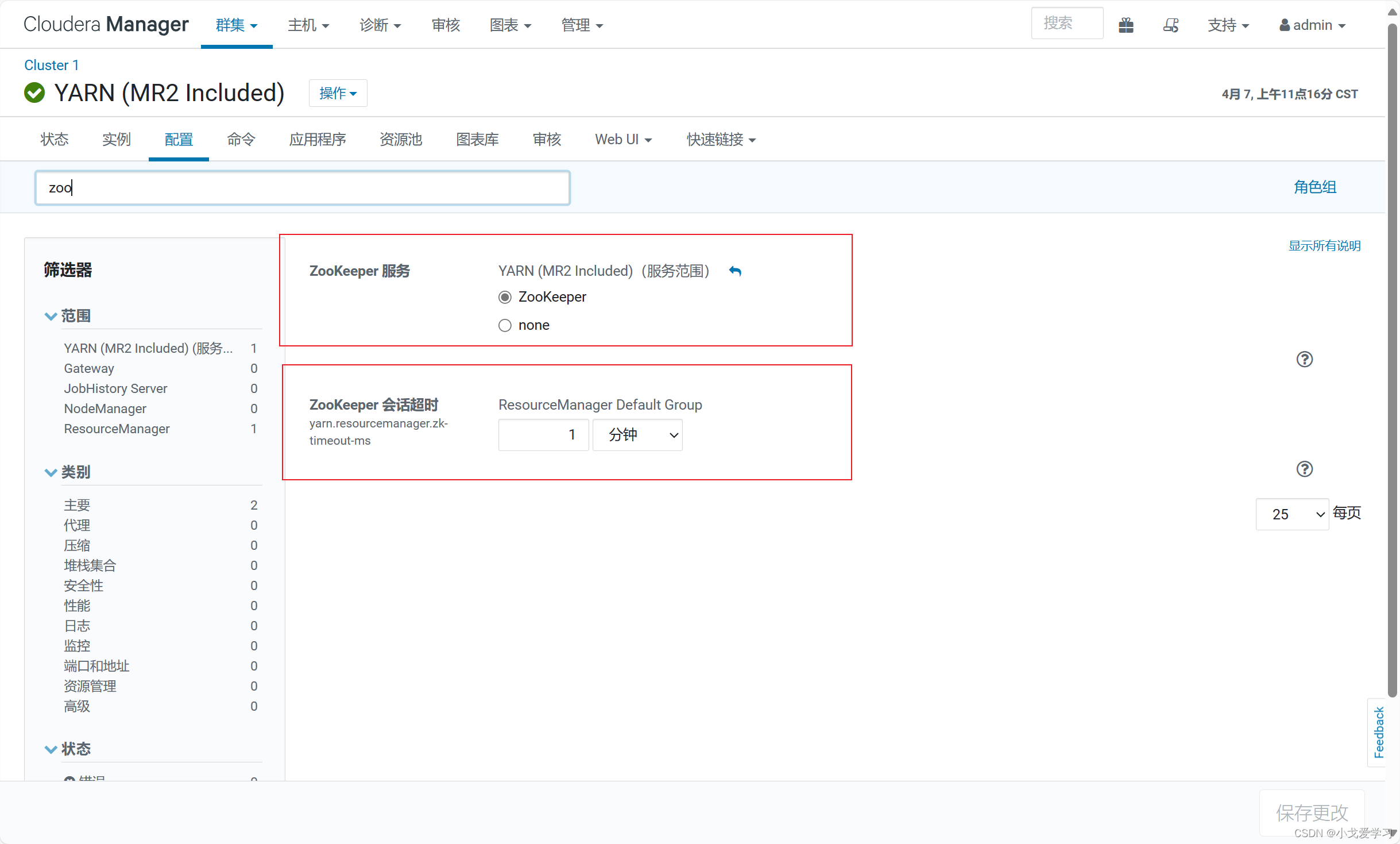

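This is the configuration in YARN.

High availability (ResourceManager leader election, under /yarn-leader-election) and metadata storage (the ResourceManager state store, under /rmstore).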
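To tie the tour together, the top-level znodes from the `ls /` listing can be grouped by the component that owns them. The mapping below is my assumption, based on the default znode names these services use. In particular, the ten Kafka znodes exist because Kafka is configured without a chroot on this cluster.

```python
# Top-level znodes exactly as printed by `ls /` in the session above.
LS_ROOT = ["cluster", "controller", "brokers", "zookeeper", "yarn-leader-election",
           "hadoop-ha", "admin", "isr_change_notification", "log_dir_event_notification",
           "controller_epoch", "rmstore", "consumers", "hive_zookeeper_namespace_hive",
           "latest_producer_id_block", "config", "hbase", "sentry"]

# Assumed owners, based on each service's default znode names.
KAFKA = {"cluster", "controller", "brokers", "admin", "isr_change_notification",
         "log_dir_event_notification", "controller_epoch", "consumers",
         "latest_producer_id_block", "config"}
OTHER_OWNERS = {
    "hbase": "HBase",
    "hadoop-ha": "HDFS (ZKFC)",
    "hive_zookeeper_namespace_hive": "Hive",
    "yarn-leader-election": "YARN",
    "rmstore": "YARN",
    "sentry": "Sentry",
    "zookeeper": "ZooKeeper itself",
}


def group_by_owner(znodes):
    """Map each owning component to its top-level znodes, keeping listing order."""
    groups = {}
    for name in znodes:
        owner = "Kafka" if name in KAFKA else OTHER_OWNERS.get(name, "unknown")
        groups.setdefault(owner, []).append(name)
    return groups


for owner, names in group_by_owner(LS_ROOT).items():
    print(f"{owner}: {', '.join(names)}")
```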

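About ACLs: since Kerberos authentication is enabled on this cluster, we do not turn ZooKeeper ACLs on.

Also note that ACLs are set per znode and are independent of each other: a child znode does not inherit its parent's ACL.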
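A ZooKeeper ACL entry is written as `scheme:id:perms`, for example `world:anyone:cdrwa` with zkCli's `setAcl`. The letters stand for CREATE, DELETE, READ, WRITE and ADMIN. The sketch below converts such a string into the bitmask values from ZooKeeper's `ZooDefs.Perms`. It then uses a toy ACL table with hypothetical paths to show that a child's ACL is checked on its own, with nothing inherited from the parent.

```python
# Permission bits as defined in ZooKeeper's ZooDefs.Perms.
PERMS = {"r": 1, "w": 2, "c": 4, "d": 8, "a": 16}


def perms_to_mask(perms: str) -> int:
    """Turn a zkCli-style permission string such as 'cdrwa' into a bitmask."""
    mask = 0
    for letter in perms:
        if letter not in PERMS:
            raise ValueError(f"unknown permission letter: {letter!r}")
        mask |= PERMS[letter]
    return mask


# Toy ACL table (hypothetical paths): each znode has its own entry,
# and the child does NOT inherit anything from its parent.
acls = {
    "/demo": perms_to_mask("r"),            # parent: read-only
    "/demo/child": perms_to_mask("cdrwa"),  # child: everything allowed
}


def can_access_data(path: str, letter: str) -> bool:
    """Check read ('r') or write ('w') of a znode's data against that znode's own ACL."""
    if letter not in ("r", "w"):
        raise ValueError("this toy check only models reading and writing data")
    return bool(acls[path] & PERMS[letter])


print(perms_to_mask("cdrwa"))               # 31
print(can_access_data("/demo", "w"))        # False
print(can_access_data("/demo/child", "w"))  # True
```

The toy only covers reading and writing a znode's data. Creating or deleting a child is checked against the parent's CREATE and DELETE bits, which this sketch does not model.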
