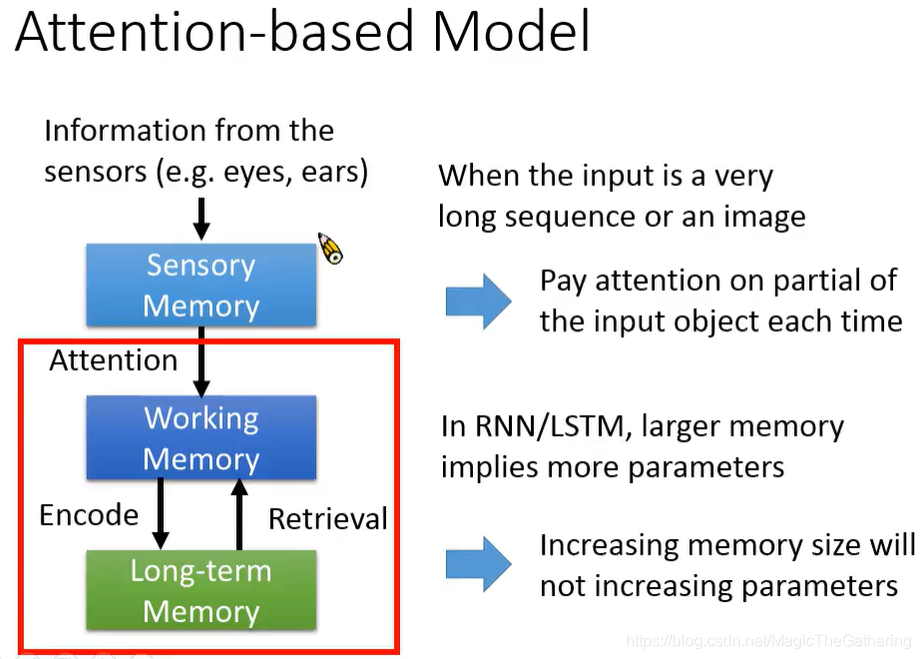

1. Why does attention make sense?

- Focus on the important parts of the input

- Increase memory size without increasing the number of parameters

2. Related topics

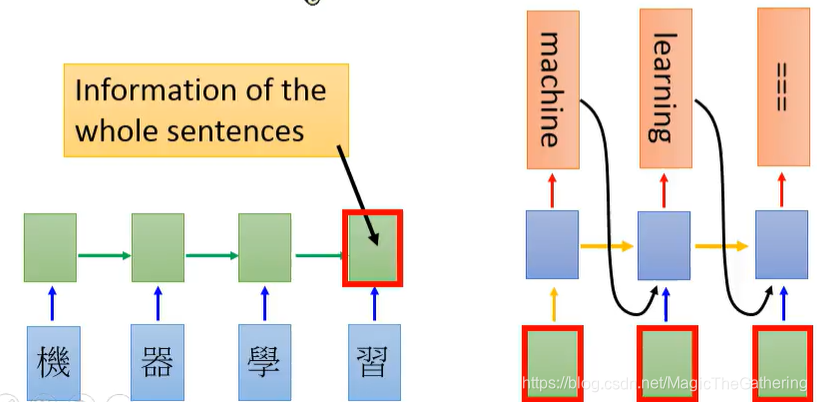

Seq2Seq: the input and output are both sequences, possibly of different lengths (e.g. machine translation).

- The last encoder vector cannot represent the meaning of the whole sentence well (it may forget long-term information)

- Performance is acceptable but does not reach the state of the art (SOTA)

- Attention is added to improve its performance
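As a minimal sketch of how attention addresses the "last vector" bottleneck, the decoder can score every encoder state against its current state and take a weighted sum, instead of relying on one fixed vector. The function name `attend`, the dot-product scoring, and the toy shapes are assumptions made for illustration; the notes do not specify a scoring function.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def attend(decoder_state, encoder_states):
    """Dot-product attention (assumed scoring function).

    decoder_state:  shape (d,)   current decoder hidden state
    encoder_states: shape (T, d) one hidden state per input token
    """
    scores = encoder_states @ decoder_state   # (T,) similarity per input position
    weights = softmax(scores)                 # (T,) attention distribution
    context = weights @ encoder_states        # (d,) weighted sum of encoder states
    return context, weights

# Toy data: 5 input tokens, hidden size 4 (hypothetical values).
rng = np.random.default_rng(0)
encoder_states = rng.normal(size=(5, 4))
decoder_state = rng.normal(size=4)
context, weights = attend(decoder_state, encoder_states)
```

The context vector is recomputed at every decoding step, so each output token can draw on different parts of the input.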

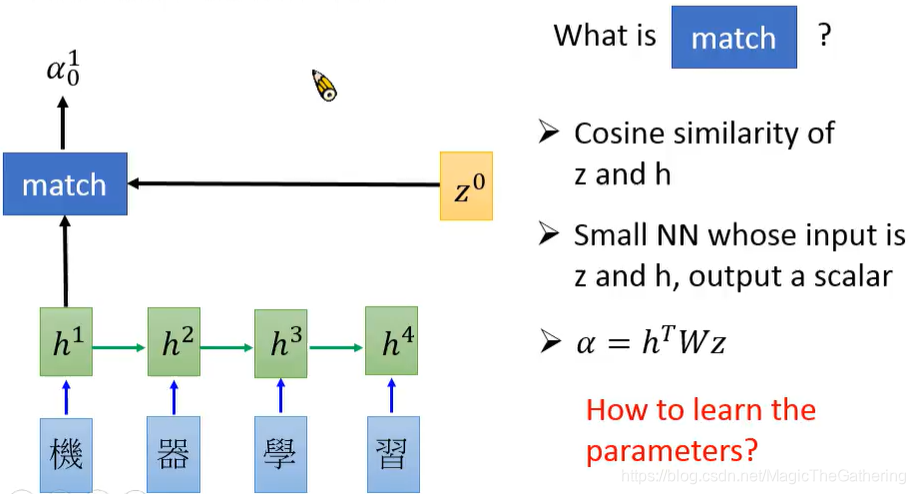

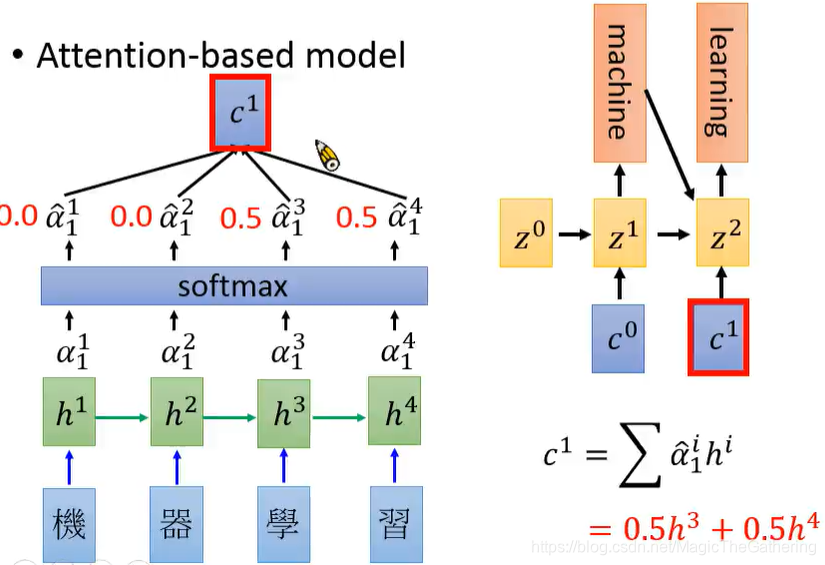

3. Attention-based model

4. Applications

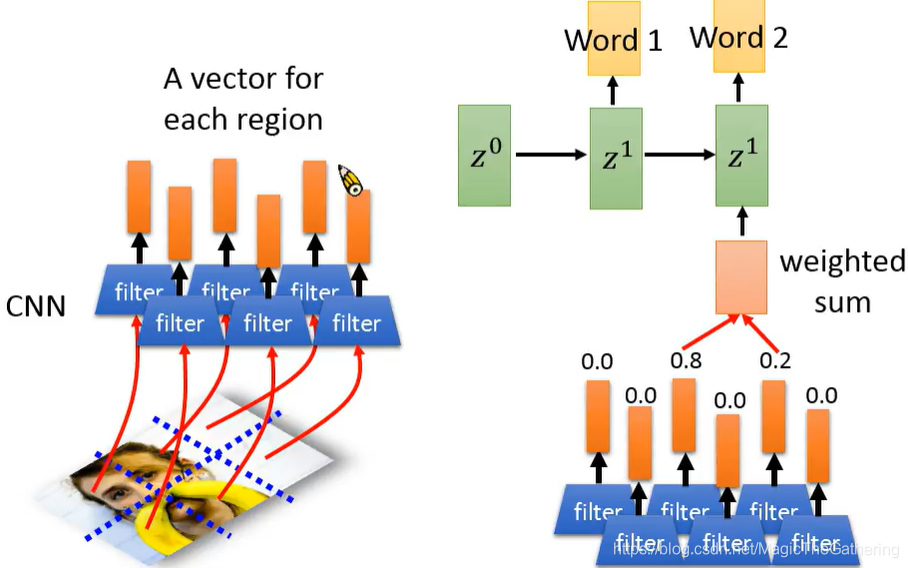

(1) Image Caption Generation

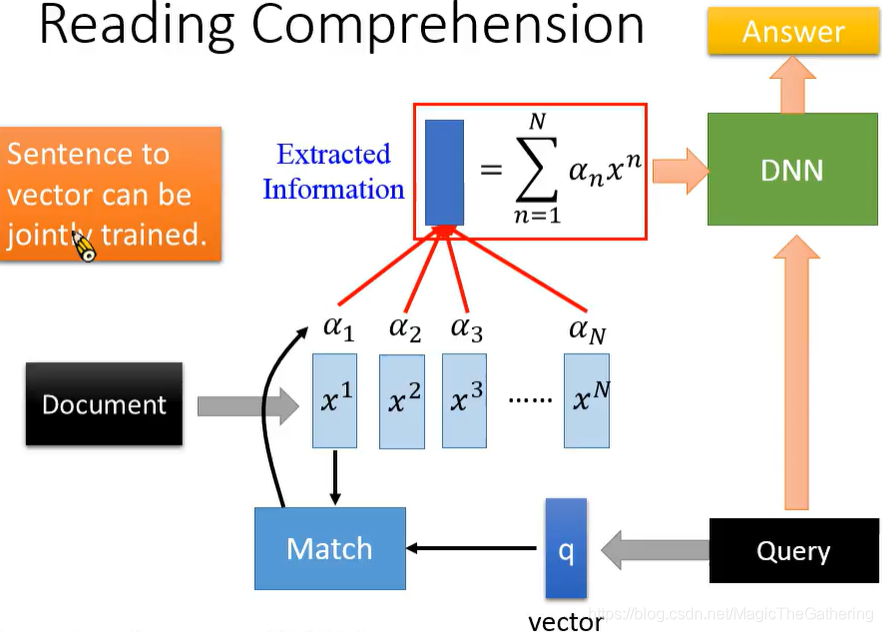

(2) Reading Comprehension

5. Other Attention Models

(1) Self Attention:

- Applies when source == target, i.e. the sequence attends to itself. This makes it easier to capture dependencies inside the sequence, instead of forgetting them as the sequence length increases
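A rough sketch of self-attention, assuming the scaled dot-product form: queries, keys, and values are all projections of the same sequence `X`. The projection matrices `Wq`, `Wk`, `Wv` and the sizes below are hypothetical and randomly initialized, not taken from the notes.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    """X: (T, d_model). Source and target are the same sequence."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv           # all derived from X
    scores = Q @ K.T / np.sqrt(K.shape[-1])    # (T, T) pairwise scores
    weights = softmax(scores, axis=-1)         # each row sums to 1
    return weights @ V, weights

# Toy data: 6 tokens, model size 8, projection size 4 (hypothetical).
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 8))
Wq, Wk, Wv = (rng.normal(size=(8, 4)) for _ in range(3))
out, weights = self_attention(X, Wq, Wk, Wv)
```

Because every position attends to every other position directly, the path between two distant tokens has length one regardless of how long the sequence is.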

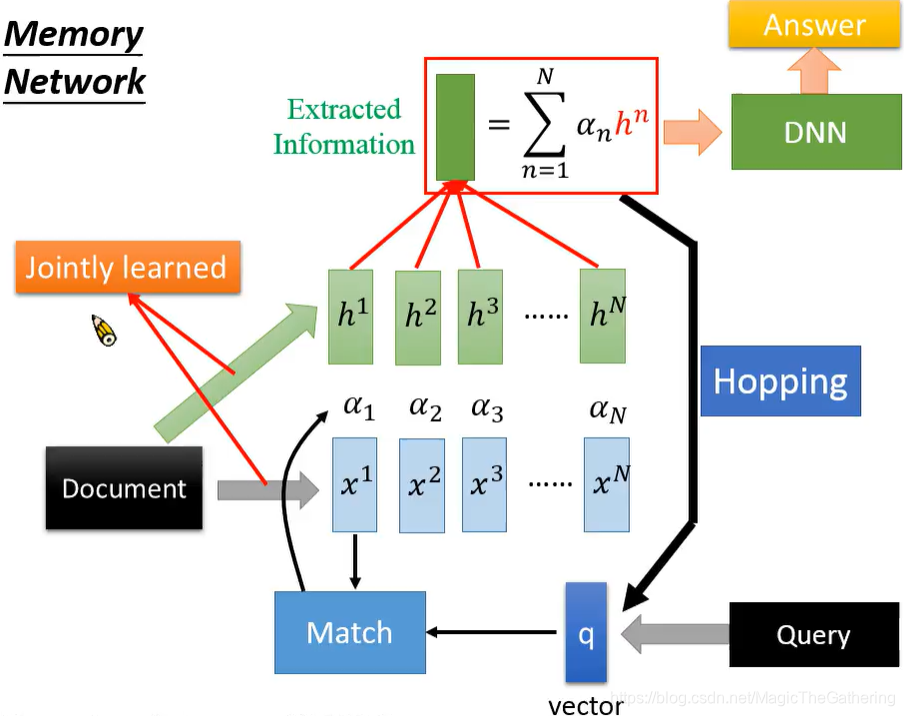

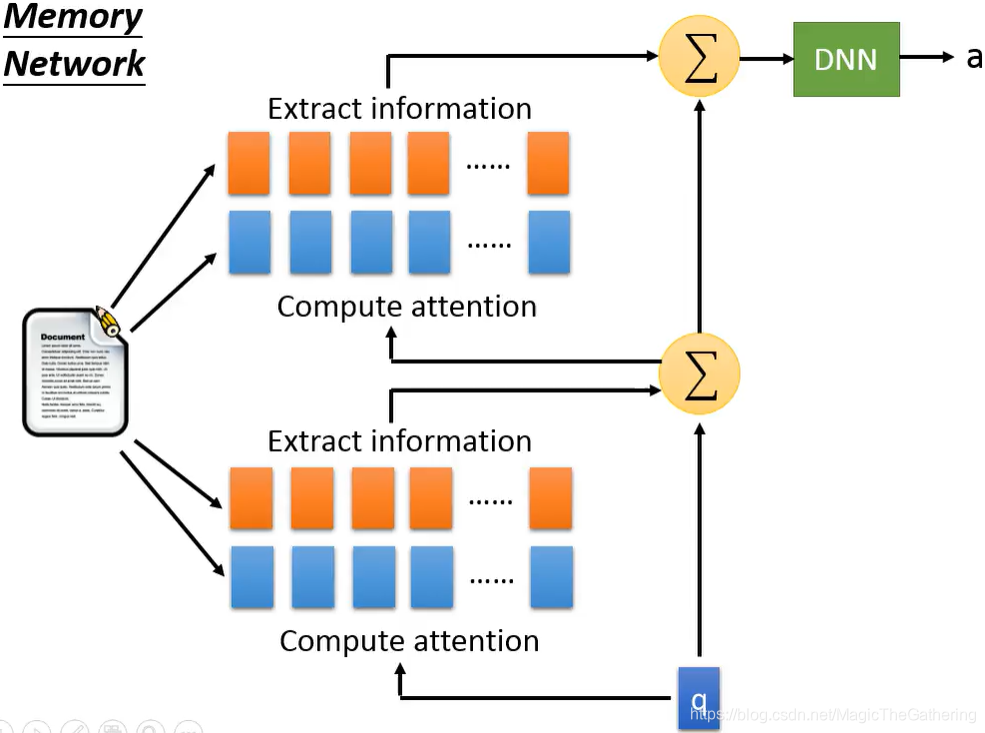

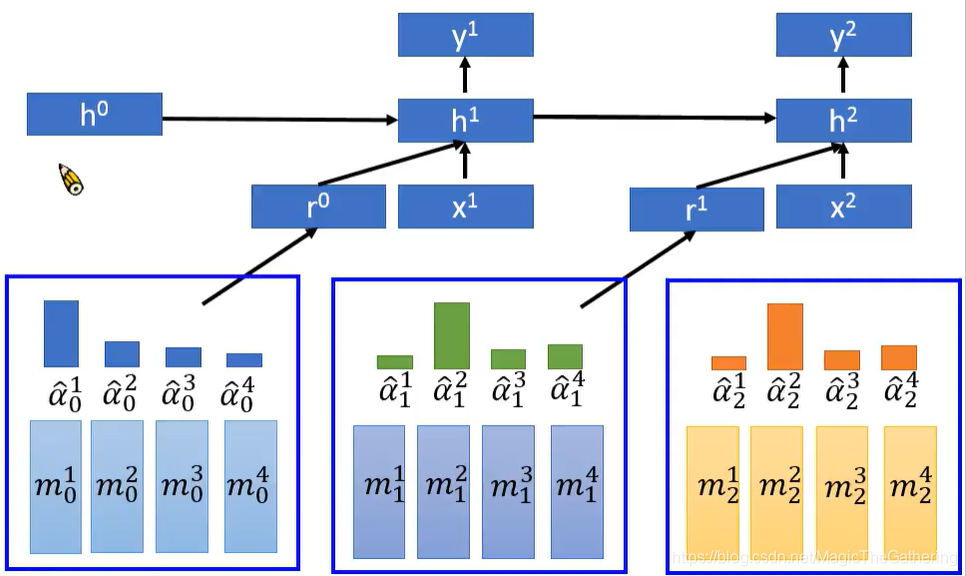

(2) Memory Network:

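- Use two different DNNs to extract information from the memory, then apply attention over the extracted representations

- Hopping: repeat the attention step several times, updating the query after each hop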
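The two bullets above can be sketched as follows, assuming a setup in the style of end-to-end memory networks: the "two networks" are reduced here to two embedding matrices `A` and `C` (hypothetical names), one producing the representation used for matching and one producing the representation that is read out. Adding the read-out to the query after each hop is also an assumption.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def memory_hops(query, memory_in, memory_out, hops=3):
    """query: (d,), memory_in / memory_out: (n, d)."""
    u = query
    for _ in range(hops):
        p = softmax(memory_in @ u)   # (n,) attention over memory slots
        o = p @ memory_out           # (d,) information read from memory
        u = u + o                    # updated query for the next hop
    return u

# Toy data: 4 stored sentences as bag-of-words over a 10-word vocabulary.
rng = np.random.default_rng(0)
sentences = rng.integers(0, 2, size=(4, 10)).astype(float)
A = rng.normal(scale=0.1, size=(10, 5))   # embedding used for matching
C = rng.normal(scale=0.1, size=(10, 5))   # embedding used for read-out
q = rng.normal(size=5)
u_final = memory_hops(q, sentences @ A, sentences @ C, hops=3)
```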

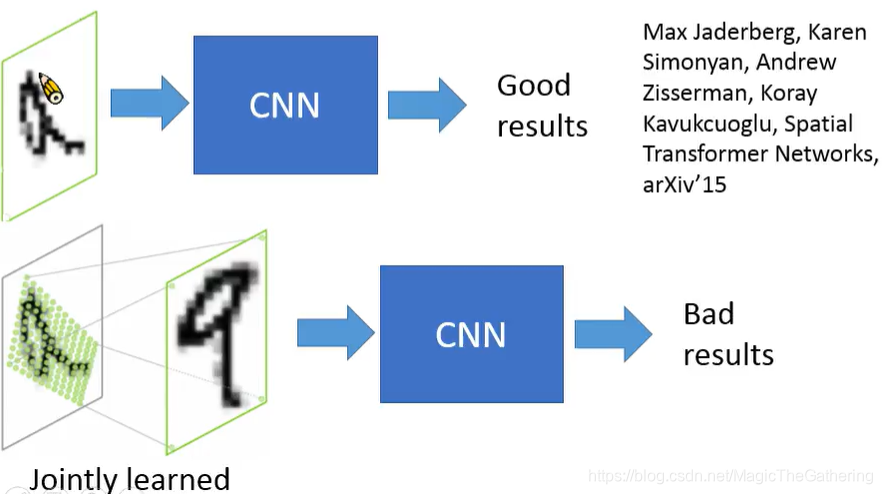

(3) Spatial Transformers

(4) Multi-head attention

- Do linear transformations on Q, K, and V. Then do attention and concatenate results.

- The heads are independent of each other, so they can be computed in parallel
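A minimal sketch of the steps above: project Q, K, and V once per head, run attention in each head, and concatenate the results. The scaled dot-product scoring, the per-head weight arrays `Wq`, `Wk`, `Wv`, and all sizes are assumptions for illustration. The Transformer additionally applies an output projection after concatenation, which is omitted here.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def multi_head_attention(Q, K, V, Wq, Wk, Wv):
    """Q, K, V: (T, d_model); Wq, Wk, Wv: (heads, d_model, d_head)."""
    heads = []
    # The loop is written for clarity; no head depends on another,
    # so in practice all heads are computed in parallel.
    for wq, wk, wv in zip(Wq, Wk, Wv):
        q, k, v = Q @ wq, K @ wk, V @ wv                     # linear transformations
        weights = softmax(q @ k.T / np.sqrt(k.shape[-1]))    # (T, T) per head
        heads.append(weights @ v)                            # (T, d_head)
    return np.concatenate(heads, axis=-1)                    # (T, heads * d_head)

# Toy data: 6 tokens, model size 8, 2 heads of size 4 (hypothetical).
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 8))
Wq, Wk, Wv = (rng.normal(size=(2, 8, 4)) for _ in range(3))
out = multi_head_attention(X, X, X, Wq, Wk, Wv)
```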

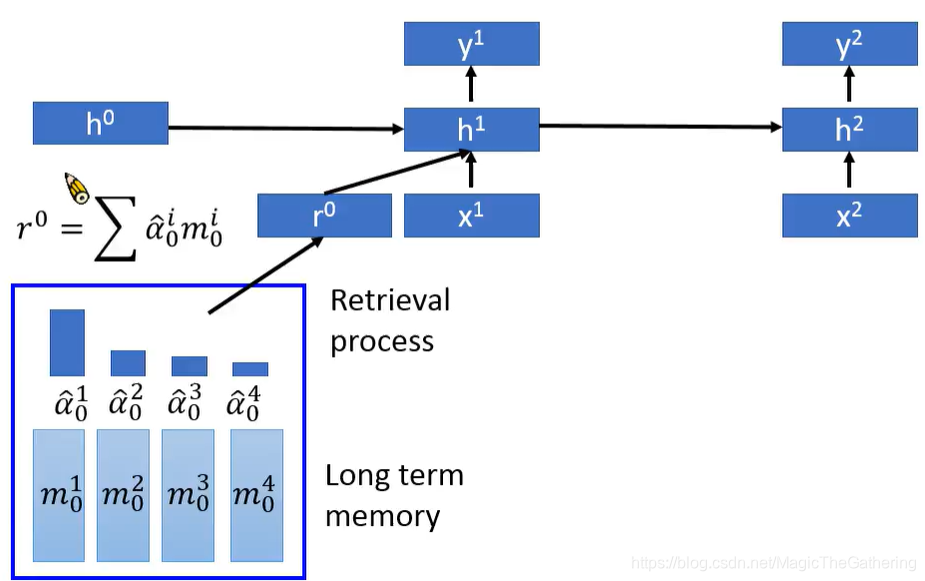

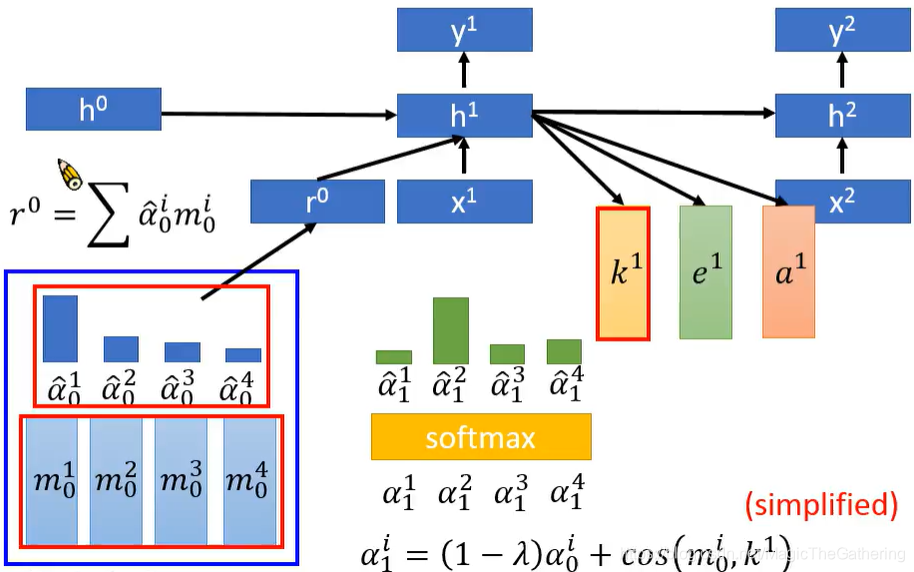

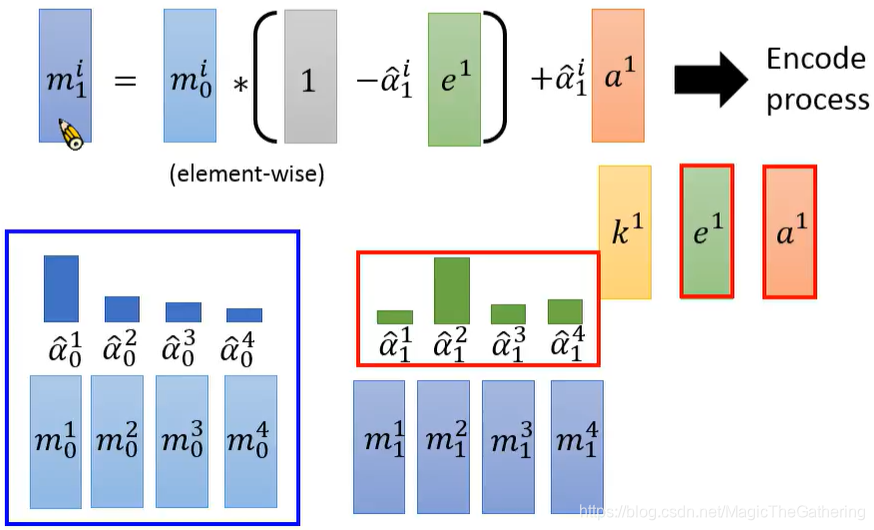

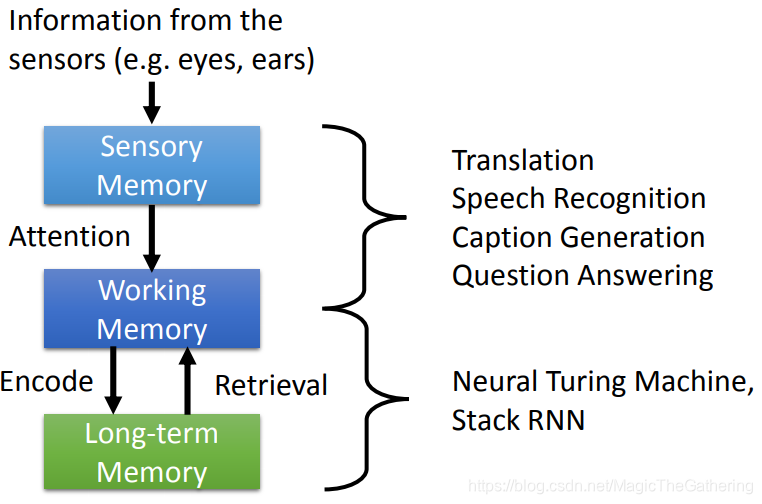

(5) Attention on memory

Neural Turing Machine

- Advanced RNN/LSTM

- Add long-term memory

k is the key used to generate the 'focus' (attention) distribution over the memory. e is the information that needs to be erased from the memory. a is the information that needs to be added to the memory.
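The roles of k, e, and a can be sketched as below, assuming content-based addressing with cosine similarity and an erase-then-add write. The sharpening factor `beta` and the function names are assumptions not stated in the notes, and location-based addressing is left out.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def content_addressing(memory, k, beta=1.0):
    """memory: (N, M) slots, k: (M,) key. Returns the focus distribution (N,)."""
    eps = 1e-8
    sim = memory @ k / (np.linalg.norm(memory, axis=1) * np.linalg.norm(k) + eps)
    return softmax(beta * sim)   # larger beta gives a sharper focus

def write(memory, w, e, a):
    """Erase with e, then add a, both weighted by the focus w."""
    memory = memory * (1.0 - np.outer(w, e))   # erase step
    return memory + np.outer(w, a)             # add step

def read(memory, w):
    return w @ memory                          # weighted sum of memory slots

# Toy data: 6 memory slots of width 4 (hypothetical values).
rng = np.random.default_rng(0)
memory = rng.normal(size=(6, 4))
k = rng.normal(size=4)
e = rng.uniform(size=4)   # erase vector, entries in [0, 1]
a = rng.normal(size=4)    # add vector
w = content_addressing(memory, k, beta=5.0)
new_memory = write(memory, w, e, a)
r = read(new_memory, w)
```

With a one-hot focus and an all-ones erase vector, the addressed slot is overwritten by a and every other slot is left untouched, which is the hard-addressing limit of this soft write.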

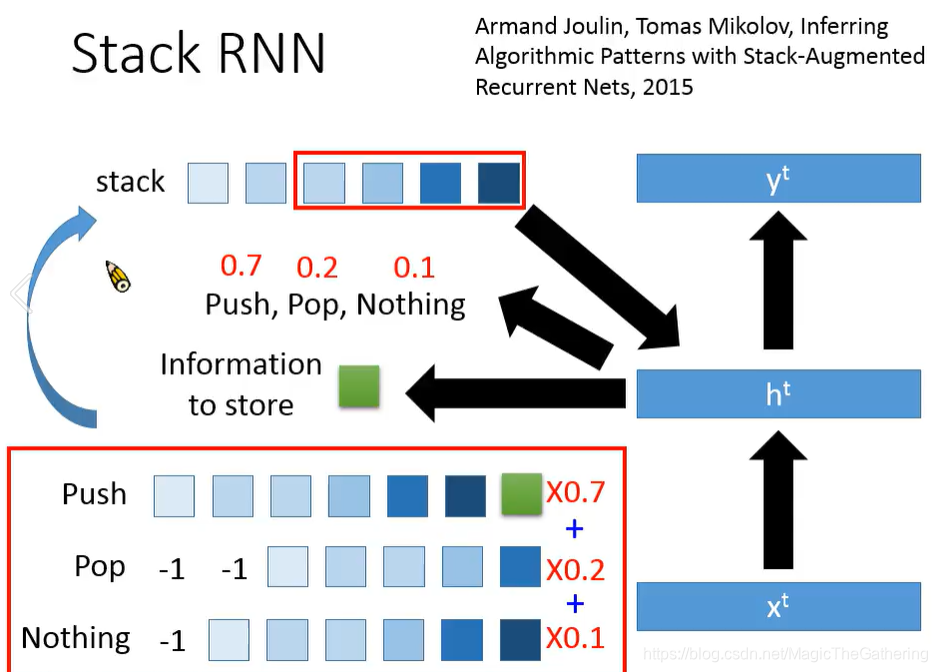

Stack RNN

6. Summary


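The attention mechanism plays an important role in natural language processing: it addresses the long-range dependency problem of traditional sequence-to-sequence models. It lets the model focus on the important parts of the input sequence, which improves performance on tasks such as image caption generation and reading comprehension. These notes also cover several other attention models, namely self-attention, memory networks, spatial transformers, multi-head attention, the Neural Turing Machine, and Stack RNN, each of which strengthens the model's representational and processing capacity in a different way.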
