https://blog.csdn.net/sld880311/article/details/77840343

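Basic concepts

A bridge is a virtual network device, so it has the properties of a network device (it can be assigned an IP address, a MAC address, and so on). It is also a virtual switch, functionally similar to a physical switch. Bridging is a technique that relays frames at the link layer: it partitions the network by MAC address, isolates collision domains, and connects multiple network segments at the data link layer.

An ordinary network device has only two ends: data that comes in one end goes out the other. A physical NIC, for example, passes data received from the external network up to the kernel protocol stack, and sends data coming from the protocol stack out to the physical network. A bridge is different: it has multiple ports, data can enter through any of them, and the port it leaves through is chosen by MAC address, much as in a physical switch.

A bridge is built on top of slave devices (physical devices, virtual devices, VLAN devices, and so on). Attaching a slave device is like plugging a cable between a real-world switch and a user terminal. The bridge can also be given an IP address (see the section "Linux bridge MAC address behavior"), which lets the host communicate with other hosts on the network through the bridge device. The slave devices become ports of the bridge: their own IP and MAC addresses are no longer usable, and they are set to accept every frame. The bridge device then decides what happens to each frame: deliver it to the local host, forward it, drop it, or broadcast it.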

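Purpose

A bridge is a common way to connect two network segments. Once connected through a bridge, the segments behave as a single segment. The principle is simple: frames are forwarded at L2, the data link layer.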

工作原理

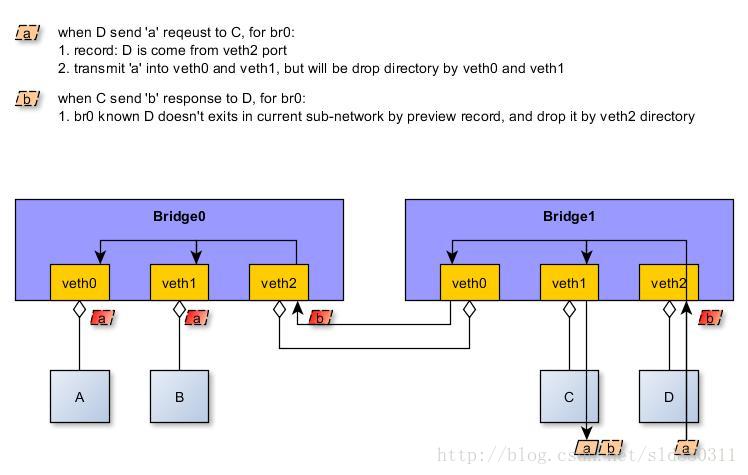

基本原理图如下(来源于网络):

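A bridge and a router are similar in that both distribute network packets. The essential difference is that a router works at L3, the network layer, and relies on routing protocols, while a bridge works at L2, the data link layer, and learns and caches the source address of each frame seen on the link together with the port it arrived on:

- When a frame arrives, record its source MAC address and ingress port.

- Look up the frame's destination MAC address in the local cache:

  - If the record shows that the destination sits on the same port the frame arrived on, drop the frame.

  - If the record points to a different port, forward the frame out of that port only.

  - If the cache has no record for the destination, flood the frame within the segment (all ports except the ingress port).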
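The learn-then-forward steps above can be sketched as a small Python model. This is only an illustration of the decision logic, not how the kernel implements it; the class name `LearningBridge`, the port names, and the MAC addresses are all made up for the example.

```python
from typing import Dict, List


class LearningBridge:
    """Toy model of the forwarding decision described above (not kernel code)."""

    def __init__(self, ports: List[str]) -> None:
        self.ports = list(ports)
        # Forwarding table: MAC address -> port it was last seen on.
        self.fdb: Dict[str, str] = {}

    def receive(self, in_port: str, src_mac: str, dst_mac: str) -> List[str]:
        """Return the ports the frame is sent out of (empty list = dropped)."""
        # Step 1: learn (or refresh) which port the sender is behind.
        self.fdb[src_mac] = in_port

        # Step 2: look up the destination.
        out_port = self.fdb.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the ingress port.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            # Destination is on the segment the frame came from: drop it.
            return []
        # Known destination behind another port: forward to that port only.
        return [out_port]


if __name__ == "__main__":
    bridge = LearningBridge(["eth0", "veth0", "veth1"])
    # First frame: destination unknown, so it is flooded.
    print(bridge.receive("veth0", "aa:aa:aa:aa:aa:01", "aa:aa:aa:aa:aa:02"))
    # Reply: the first sender was learned on veth0, so only veth0 gets it.
    print(bridge.receive("veth1", "aa:aa:aa:aa:aa:02", "aa:aa:aa:aa:aa:01"))
```

A real bridge also ages entries out of the table (see `brctl setageing`), which this sketch leaves out.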

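Linux bridge is therefore an effective way to connect container networks. With a bridge we can:

- Connect the virtual networks of all containers on the same host.

- Connect the containers' internal network to the outside world, by forwarding data through the bridge to the real physical NIC eth0.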

Common commands

brctl

Installation and configuration

[root@localhost ~]# yum install -y bridge-utils

[root@localhost ~]# modprobe bridge

[root@localhost ~]# lsmod | grep bridge

bridge 196608 0

stp 16384 1 bridge

llc 16384 2 bridge,stp


[root@localhost ~]# modinfo bridge

filename: /lib/modules/4.18.0+/kernel/net/bridge/bridge.ko

alias: rtnl-link-bridge

version: 2.3

license: GPL

rhelversion: 8.2

srcversion: 099F458758086399F1B4025

depends: stp,llc

intree: Y

name: bridge

vermagic: 4.18.0+ SMP mod_unload modversions

[root@localhost ~]# echo "1" > /proc/sys/net/ipv4/ip_forward

Command help

[root@localhost ~]# brctl --help

Usage: brctl [commands]

commands:

addbr <bridge> add bridge

delbr <bridge> delete bridge

addif <bridge> <device> add interface to bridge

delif <bridge> <device> delete interface from bridge

hairpin <bridge> <port> {on|off} turn hairpin on/off

setageing <bridge> <time> set ageing time

setbridgeprio <bridge> <prio> set bridge priority

setfd <bridge> <time> set bridge forward delay

sethello <bridge> <time> set hello time

setmaxage <bridge> <time> set max message age

setpathcost <bridge> <port> <cost> set path cost

setportprio <bridge> <port> <prio> set port priority

show [ <bridge> ] show a list of bridges

showmacs <bridge> show a list of mac addrs

showstp <bridge> show bridge stp info

stp <bridge> {on|off} turn stp on/off

Creating a bridge

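Whether a bridge interface is in promiscuous mode depends on three factors: its own promiscuous-mode setting, whether VLAN filtering is enabled, and the number of sub-interfaces in the automatic state (auto_port).

Promiscuous mode settings: https://blog.csdn.net/sinat_20184565/article/details/80852155

The commands below create bridge br_2 and attach eth1 and eth2 to it. Once br_2 is put into promiscuous mode, all of its sub-interfaces (eth1 and eth2) are in promiscuous mode as well: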

ip link add br_2 type bridge

ip link set eth1 master br_2

ip link set eth2 master br_2

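Set promiscuous mode on the interface with the ip command:

ip link set br_2 promisc on

Turn promiscuous mode off:

ip link set br_2 promisc off

# Add a bridge

[root@localhost ~]# brctl addbr br0

# Attach an existing NIC to the bridge. The NIC is in promiscuous mode and needs no IP of its own, because the bridge works at L2, the link layer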

[root@localhost ~]# ip link show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: enp49s0f0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether d4:5d:64:07:a8:ea brd ff:ff:ff:ff:ff:ff

3: enp49s0f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master br-sunld08-test state UP mode DEFAULT group default qlen 1000

link/ether d4:5d:64:07:a8:eb brd ff:ff:ff:ff:ff:ff

4: br-sunld08-test: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 32:22:21:3a:e1:a4 brd ff:ff:ff:ff:ff:ff

5: test-veth09@test-veth08: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether f6:24:88:93:fc:36 brd ff:ff:ff:ff:ff:ff

6: test-veth08@test-veth09: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-sunld08-test state UP mode DEFAULT group default qlen 1000

link/ether 32:22:21:3a:e1:a4 brd ff:ff:ff:ff:ff:ff

[root@localhost ~]# ifconfig enp49s0f1 0.0.0.0 promisc

[root@localhost ~]# brctl addif br0 enp49s0f1

# List existing bridges

[root@localhost ~]# brctl show

[root@localhost ~]# bridge link

# Assign an IP address to the bridge

[root@localhost ~]# ifconfig br0 10.10.1.1 netmask 255.255.0.0 up

Deleting a bridge

[root@localhost ~]# brctl delbr br0

Disabling spanning tree

# Disable the Spanning Tree Protocol to reduce packet noise

[root@localhost ~]# brctl stp br0 off

==========================================

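Building a bridge step by step

Creating the bridge

[root@localhost ~]# ip link add br-sunld08-test type bridge

[root@localhost ~]# ip link set dev br-sunld08-test up

A newly created bridge is a standalone network device: one port is connected to the protocol stack and nothing is connected to its other ports, so at this point the bridge has no practical function.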

[root@localhost ~]# ip link show br-sunld08-test

48: br-sunld08-test: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN mode DEFAULT group default

link/ether 1a:7b:cf:3b:08:53 brd ff:ff:ff:ff:ff:ff

[root@localhost ~]# brctl addbr br0

[root@localhost ~]# brctl show br-sunld08-test

bridge name bridge id STP enabled interfaces

br-sunld08-test 8000.000000000000 no

Creating a veth pair and attaching it to br-sunld08-test

Creation

# Create the veth pair

[root@localhost ~]# ip link add test-veth08 type veth peer name test-veth09

# Assign IP addresses

[root@localhost ~]# ifconfig test-veth08 192.168.209.135/24 up

[root@localhost ~]# ifconfig test-veth09 192.168.209.136/24 up

# Bring the devices up

[root@localhost ~]# ip link set dev test-veth08 up

[root@localhost ~]# ip link set dev test-veth09 up

# Attach test-veth08 to br-sunld08-test

[root@localhost ~]# ip link set dev test-veth08 master br-sunld08-test

# Inspect the result

[root@localhost ~]# bridge link | grep test-veth08

50: test-veth08 state UP : <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 master br-sunld08-test state forwarding priority 32 cost 2

Deployment diagram

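What changed

When test-veth08 is attached to br-sunld08-test, the following changes:

- br-sunld08-test and test-veth08 are connected by a bidirectional channel.

- The channel between the protocol stack and test-veth08 becomes one-way: the stack can still send data to test-veth08, but data that test-veth08 receives from outside is no longer passed to the stack.

- The MAC address of br-sunld08-test becomes the MAC address of test-veth08 (check with ip a).

In effect the bridge intercepts traffic between test-veth08 and the protocol stack: everything test-veth08 would have handed to the stack is redirected to the bridge, and the bridge can also send data to test-veth08.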

Connectivity

Note: on some systems the ping may fail at this point (this has been reported on Ubuntu, for example), mainly because ARP-related kernel settings stop test-veth09 from returning an ARP reply. The workaround is:

[root@localhost ~]# echo 1 > /proc/sys/net/ipv4/conf/test-veth08/accept_local

[root@localhost ~]# echo 1 > /proc/sys/net/ipv4/conf/test-veth09/accept_local

[root@localhost ~]# echo 0 > /proc/sys/net/ipv4/conf/all/rp_filter

[root@localhost ~]# echo 0 > /proc/sys/net/ipv4/conf/test-veth08/rp_filter

[root@localhost ~]# echo 0 > /proc/sys/net/ipv4/conf/test-veth09/rp_filter

Ping test-veth09 through test-veth08: the ping fails

[root@localhost ~]# ping -c 4 192.168.209.136 -I test-veth08

PING 192.168.209.136 (192.168.209.136) from 192.168.209.135 test-veth08: 56(84) bytes of data.

From 192.168.209.135 icmp_seq=1 Destination Host Unreachable

From 192.168.209.135 icmp_seq=2 Destination Host Unreachable

From 192.168.209.135 icmp_seq=3 Destination Host Unreachable

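From 192.168.209.135 icmp_seq=4 Destination Host Unreachable

Inspecting the packets

# test-veth08's ARP cache has no MAC address for test-veth09, so an ARP request is sent before the ping

# The capture on test-veth09 shows that it received the ARP request and returned a reply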

[root@localhost ~]# tcpdump -n -i test-veth09

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on test-veth09, link-type EN10MB (Ethernet), capture size 262144 bytes

00:22:38.369683 ARP, Request who-has 192.168.209.136 tell 192.168.209.135, length 28

00:22:38.369698 ARP, Reply 192.168.209.136 is-at 6a:11:bf:6b:21:8f, length 28

# The capture on test-veth08 shows that the request went out and the reply came back

[root@localhost ~]# tcpdump -n -i test-veth08

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on test-veth08, link-type EN10MB (Ethernet), capture size 262144 bytes

00:22:11.736906 ARP, Request who-has 192.168.209.136 tell 192.168.209.135, length 28

00:22:11.736918 ARP, Reply 192.168.209.136 is-at 6a:11:bf:6b:21:8f, length 28

# Now look at the packets on br-sunld08-test: only the reply shows up

[root@localhost ~]# tcpdump -n -i br-sunld08-test

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on br-sunld08-test, link-type EN10MB (Ethernet), capture size 262144 bytes

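00:24:48.601526 ARP, Reply 192.168.209.136 is-at 6a:11:bf:6b:21:8f, length 28

The captures show that both the outbound and the return path work. The problem is that when test-veth08 receives the reply, it hands it to br-sunld08-test instead of the protocol stack. The stack therefore never learns test-veth09's MAC address, and communication fails.

Assigning the IP to the bridge

As the analysis above shows, an IP on test-veth08 is pointless: even if the protocol stack sends data out through test-veth08, the reply never comes back to it. So we move test-veth08's IP to the bridge.

[root@localhost ~]# ip addr del 192.168.209.135/24 dev test-veth08

[root@localhost ~]# ip addr add 192.168.209.135/24 dev br-sunld08-test

Deployment diagram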

Connectivity

[root@localhost ~]# ping -c 4 192.168.209.136 -I br-sunld08-test

PING 192.168.209.136 (192.168.209.136) from 192.168.209.135 br-sunld08-test: 56(84) bytes of data.

64 bytes from 192.168.209.136: icmp_seq=1 ttl=64 time=0.238 ms

64 bytes from 192.168.209.136: icmp_seq=2 ttl=64 time=0.089 ms

64 bytes from 192.168.209.136: icmp_seq=3 ttl=64 time=0.243 ms

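64 bytes from 192.168.209.136: icmp_seq=4 ttl=64 time=0.139 ms

Pinging the gateway still fails, however, because only two network devices are on this bridge, 192.168.209.135 and 192.168.209.136, and br-sunld08-test does not know where 192.168.209.2 is.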

[root@localhost ~]# ping -c 4 192.168.209.2 -I br-sunld08-test

PING 192.168.209.2 (192.168.209.2) from 192.168.209.135 br-sunld08-test: 56(84) bytes of data.

^C

--- 192.168.209.2 ping statistics ---

3 packets transmitted, 0 received, 100% packet loss, time 2000ms

Attaching the physical NIC to the bridge

[root@localhost ~]# ip link set dev eth0 master br-sunld08-test

[root@localhost ~]# bridge link

2: eth0 state UP : <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 master br-sunld08-test state forwarding priority 32 cost 4

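50: test-veth08 state UP : <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 master br-sunld08-test state forwarding priority 32 cost 2

A bridge makes no distinction between physical and virtual devices: to the bridge they are all just network devices. So once eth0 joins the bridge it behaves exactly like test-veth08: every packet it receives from the outside network is unconditionally handed to br-sunld08-test, and eth0 itself becomes little more than a cable.

Pinging the gateway through eth0 now fails. But because the bridge is connected to the outside physical network through eth0, which acts as its cable, the devices attached to the bridge can ping the gateway. The devices attached here are test-veth09 and the bridge itself: test-veth09 is connected through test-veth08, which acts as its cable, while br-sunld08-test can be thought of as having a built-in NIC of its own.

Removing the IP from the physical NIC

Since eth0 now works much like a cable, an IP address on eth0 no longer serves any purpose, and it can also interfere with the protocol stack's route selection. For example, if the ping above is run without specifying an interface, the stack may prefer eth0 and the ping fails. The IP on eth0 should therefore be removed.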

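Deployment diagram

Does a bridge have to be assigned an IP address?

Kernel implementation

Workflow

As shown in the figure below (image from the internet):

Notes

IP addresses

Once a device is attached to a bridge, the IP address on that device stops working: Linux no longer uses it to receive data at layer 3. The device's IP should be assigned to the bridge device instead.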
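As a small illustration of this note, the helper below only builds the two `ip addr` commands needed to move an address from a port to its bridge, mirroring the commands used earlier for test-veth08. It is a dry-run sketch: the function name `ip_move_commands` is hypothetical, and nothing is executed, so it can be tried without root privileges.

```python
from typing import List


def ip_move_commands(port: str, bridge: str, cidr: str) -> List[List[str]]:
    """Build (but do not run) the commands that move `cidr` from port to bridge."""
    return [
        # Remove the address from the enslaved device, where it no longer works.
        ["ip", "addr", "del", cidr, "dev", port],
        # Add the same address to the bridge device.
        ["ip", "addr", "add", cidr, "dev", bridge],
    ]


if __name__ == "__main__":
    for cmd in ip_move_commands("test-veth08", "br-sunld08-test", "192.168.209.135/24"):
        # Print each command as it would be typed in a root shell.
        print(" ".join(cmd))
```

Each inner list could be passed to `subprocess.run` as root to apply the change, though that step is deliberately left out here.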

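Data flow

For a device attached to a bridge, data is handed to the bridge (for table lookup, flooding, and the other steps that follow) only when the device receives it. When the device is asked to transmit, the data is not passed to the bridge; it simply goes on to the next egress. Overlooking this when configuring a network is a common cause of failures.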

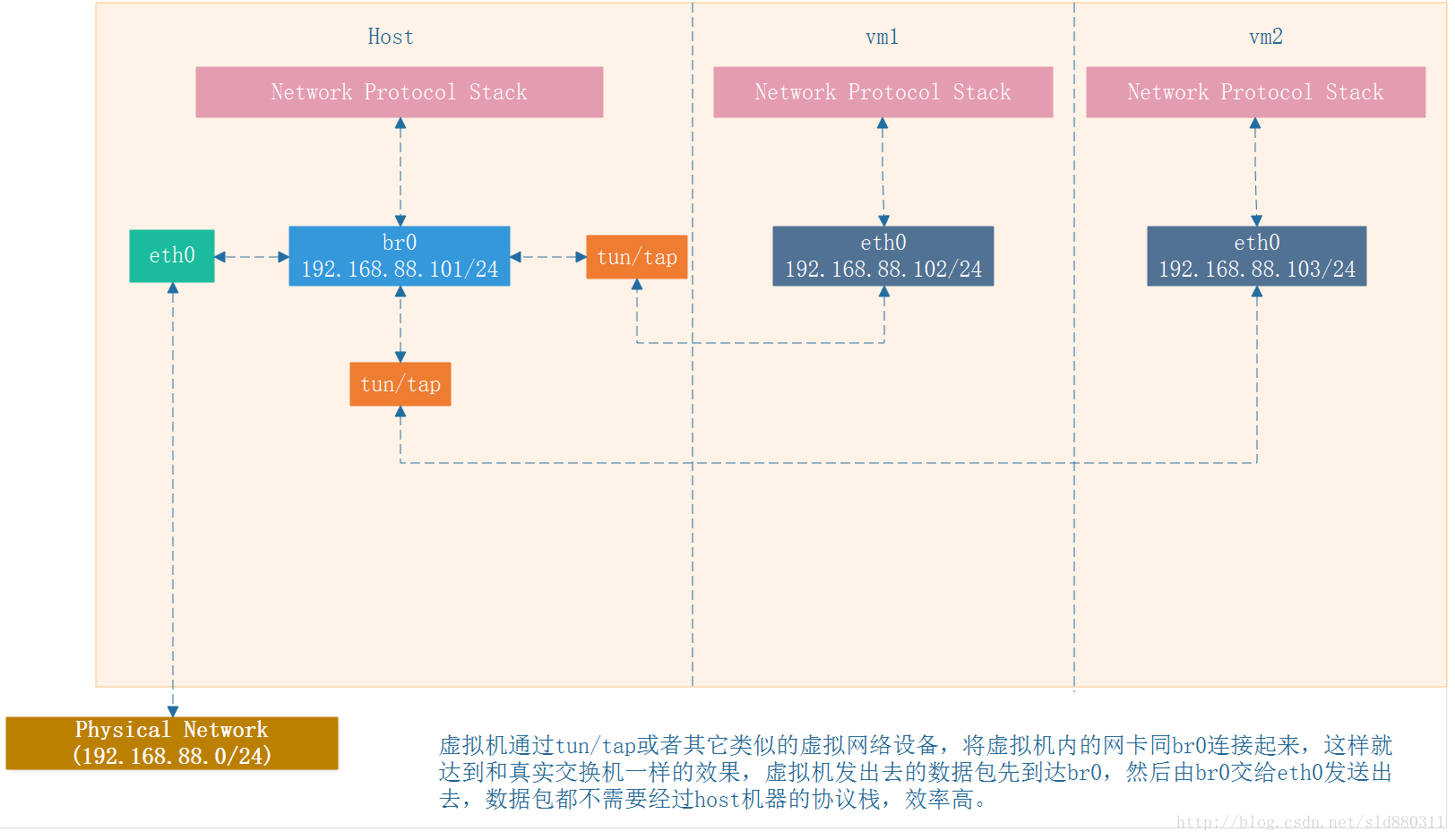

Use cases

VM

Docker

Other

Linux bridge MAC address behavior

# Create a bridge and check its default MAC

[root@localhost ~]# ip link add br-mac type bridge

20: br-mac: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default

link/ether f6:b0:c9:7c:04:1d brd ff:ff:ff:ff:ff:ff

# Create a veth pair

[root@localhost ~]# ip link add mac-veth01 type veth peer name mac-veth02

20: br-mac: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default

link/ether f6:b0:c9:7c:04:1d brd ff:ff:ff:ff:ff:ff

21: mac-veth02: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 92:a2:23:d5:88:56 brd ff:ff:ff:ff:ff:ff

22: mac-veth01: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether de:ee:ff:8d:0c:50 brd ff:ff:ff:ff:ff:ff

# Attach mac-veth01 (larger MAC)

20: br-mac: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default

link/ether de:ee:ff:8d:0c:50 (automatically becomes mac-veth01's MAC) brd ff:ff:ff:ff:ff:ff

21: mac-veth02: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 92:a2:23:d5:88:56 brd ff:ff:ff:ff:ff:ff

22: mac-veth01: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master br-mac state DOWN group default qlen 1000

link/ether de:ee:ff:8d:0c:50 brd ff:ff:ff:ff:ff:ff

# Attach mac-veth02 (smaller MAC)

[root@localhost ~]# ip link set dev mac-veth02 master br-mac

20: br-mac: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default

link/ether 92:a2:23:d5:88:56 (changes to the smaller MAC, that of mac-veth02) brd ff:ff:ff:ff:ff:ff

21: mac-veth02: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master br-mac state DOWN group default qlen 1000

link/ether 92:a2:23:d5:88:56 brd ff:ff:ff:ff:ff:ff

22: mac-veth01: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether de:ee:ff:8d:0c:50 brd ff:ff:ff:ff:ff:ff

# Increase mac-veth02's MAC

[root@localhost ~]# ifconfig mac-veth02 hw ether de:ee:ff:8d:0c:51

20: br-mac: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default

link/ether de:ee:ff:8d:0c:50 (changes to the smaller MAC, now that of mac-veth01) brd ff:ff:ff:ff:ff:ff

21: mac-veth02: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master br-mac state DOWN group default qlen 1000

link/ether de:ee:ff:8d:0c:51 brd ff:ff:ff:ff:ff:ff

22: mac-veth01: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master br-mac state DOWN group default qlen 1000

link/ether de:ee:ff:8d:0c:50 brd ff:ff:ff:ff:ff:ff

# Change br-mac's MAC (to a larger MAC)

[root@localhost ~]# ifconfig br-mac hw ether de:ee:ff:8d:0c:52

20: br-mac: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default

link/ether de:ee:ff:8d:0c:52 (changes to the specified MAC) brd ff:ff:ff:ff:ff:ff

21: mac-veth02: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master br-mac state DOWN group default qlen 1000

link/ether de:ee:ff:8d:0c:51 brd ff:ff:ff:ff:ff:ff

22: mac-veth01: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master br-mac state DOWN group default qlen 1000

link/ether de:ee:ff:8d:0c:50 brd ff:ff:ff:ff:ff:ff

# Set br-mac to the same MAC as mac-veth01, then lower mac-veth02's MAC

[root@localhost ~]# ifconfig br-mac hw ether de:ee:ff:8d:0c:50

[root@localhost ~]# ifconfig mac-veth02 hw ether de:ee:ff:8d:0c:49

20: br-mac: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default

link/ether de:ee:ff:8d:0c:50 (same as the MAC that was set; unchanged) brd ff:ff:ff:ff:ff:ff

21: mac-veth02: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master br-mac state DOWN group default qlen 1000

link/ether de:ee:ff:8d:0c:49 brd ff:ff:ff:ff:ff:ff

22: mac-veth01: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master br-mac state DOWN group default qlen 1000

link/ether de:ee:ff:8d:0c:50 brd ff:ff:ff:ff:ff:ff

# Increase mac-veth01's MAC

[root@localhost ~]# ifconfig mac-veth01 hw ether de:ee:ff:8d:0c:51

20: br-mac: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default

link/ether de:ee:ff:8d:0c:50 (MAC unchanged) brd ff:ff:ff:ff:ff:ff

21: mac-veth02: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master br-mac state DOWN group default qlen 1000

link/ether de:ee:ff:8d:0c:49 brd ff:ff:ff:ff:ff:ff

22: mac-veth01: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master br-mac state DOWN group default qlen 1000

link/ether de:ee:ff:8d:0c:51 brd ff:ff:ff:ff:ff:ff

# Add new devices

[root@localhost ~]# ip link add mac-veth03 type veth peer name mac-veth04

20: br-mac: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default

link/ether de:ee:ff:8d:0c:50 brd ff:ff:ff:ff:ff:ff

21: mac-veth02: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master br-mac state DOWN group default qlen 1000

link/ether de:ee:ff:8d:0c:49 brd ff:ff:ff:ff:ff:ff

22: mac-veth01: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master br-mac state DOWN group default qlen 1000

link/ether de:ee:ff:8d:0c:51 brd ff:ff:ff:ff:ff:ff

23: mac-veth04: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 46:62:dd:cd:4f:41 brd ff:ff:ff:ff:ff:ff

24: mac-veth03: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether c6:3f:65:95:e0:93 brd ff:ff:ff:ff:ff:ff

# Attach mac-veth04 (smaller MAC)

[root@localhost ~]# brctl addif br-mac mac-veth04

20: br-mac: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default

link/ether de:ee:ff:8d:0c:50 (unchanged) brd ff:ff:ff:ff:ff:ff

21: mac-veth02: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master br-mac state DOWN group default qlen 1000

link/ether de:ee:ff:8d:0c:49 brd ff:ff:ff:ff:ff:ff

22: mac-veth01: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master br-mac state DOWN group default qlen 1000

link/ether de:ee:ff:8d:0c:51 brd ff:ff:ff:ff:ff:ff

23: mac-veth04: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master br-mac state DOWN group default qlen 1000

link/ether 46:62:dd:cd:4f:41 brd ff:ff:ff:ff:ff:ff

24: mac-veth03: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether c6:3f:65:95:e0:93 brd ff:ff:ff:ff:ff:ff

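Conclusion

If no hardware MAC has been set explicitly on the bridge, its MAC address follows changes to its ports: the bridge automatically takes the lowest MAC address among its ports. Once a MAC has been set explicitly, it stays fixed when ports are added or their MACs change. Some references state that a bridge can only be assigned the MAC of one of its ports. In the experiment above, however, de:ee:ff:8d:0c:52 belonged to no port and was still accepted, so that restriction may depend on the kernel version.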
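The selection rule observed in the experiment can be written down as a short Python sketch. It models only the behavior seen above (lowest port MAC unless one was set by hand); the function names are hypothetical, and comparing addresses byte by byte is an assumption about how "lowest" is decided.

```python
from typing import Iterable, Optional


def mac_to_bytes(mac: str) -> bytes:
    """Convert 'de:ee:ff:8d:0c:50' into its 6 raw bytes for comparison."""
    return bytes(int(part, 16) for part in mac.split(":"))


def pick_bridge_mac(port_macs: Iterable[str],
                    user_set: Optional[str] = None) -> Optional[str]:
    """Return the MAC the bridge would show, following the observed rule.

    Returns None when there is nothing to choose from, meaning the bridge
    keeps whatever address it already has.
    """
    if user_set is not None:
        # An explicitly configured MAC wins and no longer tracks the ports.
        return user_set
    macs = list(port_macs)
    if not macs:
        return None
    # Otherwise the lowest port MAC (byte-wise comparison) is used.
    return min(macs, key=mac_to_bytes)


if __name__ == "__main__":
    # After attaching mac-veth01 and mac-veth02 with their original MACs.
    print(pick_bridge_mac(["de:ee:ff:8d:0c:50", "92:a2:23:d5:88:56"]))
```

With the addresses from the transcript, the two-port case yields 92:a2:23:d5:88:56, which matches what br-mac showed after mac-veth02 was attached.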

