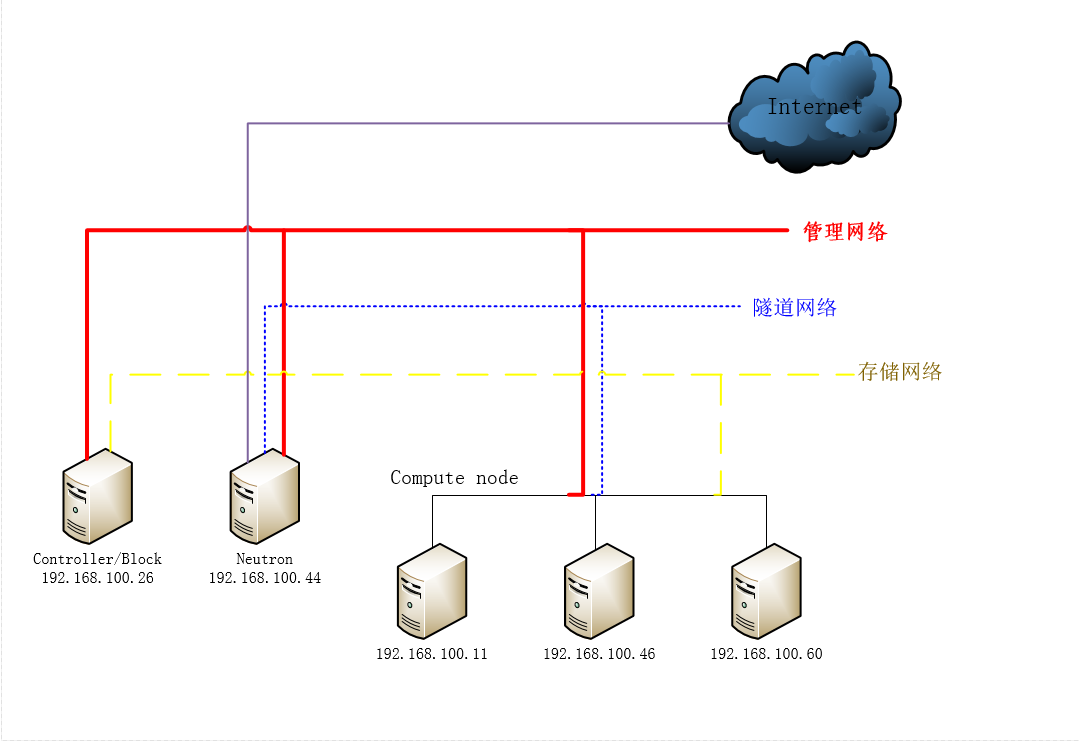

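Topology diagram

Pre-deployment preparation

Install Ubuntu 14.04

SSH settings

Set the following option in /etc/ssh/sshd_config, then restart the SSH service

# vi /etc/ssh/sshd_config

PermitRootLogin yes

# service ssh restart

Network settings

Set the IP addresses to match your own environment; only one host's configuration is shown here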

# vi /etc/network/interfaces

# This file describes the network interfaces available on your system

# and how to activate them. For more information, see interfaces(5).

# The loopback network interface

auto lo

iface lo inet loopback

# The primary network interface

auto p4p1

iface p4p1 inet static

address 192.168.100.26

netmask 255.255.255.0

network 192.168.100.0

broadcast 192.168.100.255

gateway 192.168.100.254

# dns-* options are implemented by the resolvconf package, if installed

dns-nameservers 8.8.8.8

Name resolution settings

# vi /etc/hosts

127.0.0.1 localhost

192.168.100.26 compute-26

192.168.100.44 neutron

192.168.100.26 controller

192.168.100.11 compute1

192.168.100.46 compute2

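192.168.100.60 compute3

Deployment

Environment notes:

1. The name resolution entries above show which hosts and IP addresses serve as the controller node, the network node, and the compute nodes

2. Every password below is set to cloud

3. This deployment uses a single network interface per node

4. For background on OpenStack concepts, consult the official documentation or other sites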
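Every later step relies on the host names from /etc/hosts (controller, neutron, compute1 and so on) resolving on each node. As a minimal sketch, assuming a hosts(5)-style file, the helper below prints the names that have no entry; the function name missing_hosts is made up for this illustration and is not part of any OpenStack tool.

```shell
#!/bin/sh
# missing_hosts FILE NAME...
# Prints each NAME that has no entry in FILE (hosts(5) format).
# Hypothetical helper for illustration only.
missing_hosts() {
    hosts_file=$1
    shift
    for name in "$@"; do
        # Strip comments, then look for the name in any column after the address.
        if ! sed 's/#.*//' "$hosts_file" | awk -v n="$name" '
                { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit found ? 0 : 1 }'; then
            echo "$name"
        fi
    done
}
```

For example, "missing_hosts /etc/hosts controller neutron compute1 compute2 compute3" prints nothing when every name is present.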

Install and configure NTP for time synchronization

Install NTP

# apt-get install ntp

Configure the controller node

(A list of NTP servers commonly used in China, with their IP addresses: http://www.douban.com/note/171309770/)

# vi /etc/ntp.conf

server 210.72.145.44 iburst

restrict -4 default kod notrap nomodify

restrict -6 default kod notrap nomodify

Other nodes

# vi /etc/ntp.conf

server controller iburst

Restart the NTP service

# service ntp restart

Install the OpenStack packages and add the OpenStack repository (run on all nodes)

# apt-get install ubuntu-cloud-keyring

# echo "deb http://ubuntu-cloud.archive.canonical.com/ubuntu" \

"trusty-updates/juno main" > /etc/apt/sources.list.d/cloudarchive-juno.list

Update the package index and upgrade the packages

# apt-get update && apt-get dist-upgrade

Install and configure the database (controller node)

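Install the database

Choose OK at every prompt. The initial database root password is empty; set one to suit your environment (every password in this example is cloud)

# apt-get install mariadb-server python-mysqldb

Configure the database

# vi /etc/mysql/my.cnf

[mysqld]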

...

bind-address = 192.168.100.26

#Lets the other nodes reach the controller's database through this IP address

default-storage-engine = innodb

innodb_file_per_table

collation-server = utf8_general_ci

init-connect = 'SET NAMES utf8'

character-set-server = utf8

#Use UTF-8 throughout to avoid garbled characters in the database

max_connections=1000

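#max_connections caps the number of simultaneous client connections. If it is not set, the server uses a small built-in default (100 in older MySQL releases), and once the limit is reached further clients are refused, which makes the OpenStack services unstable. Any value from 1 up to the server's maximum (16384 in those older releases) can be used

If the database reports authorization errors, see http://blog.csdn.net/moolight_shadow/article/details/45132509

Restart the database service

# service mysql restart

Initialize the database

Answer the prompts to suit your environment

# mysql_secure_installation

Install and configure the message broker (controller node)

Install RabbitMQ

# apt-get install rabbitmq-server

Set the RabbitMQ user name and password; here the user name is "guest" and the password is "cloud".

Note: if you choose a different password here, change it in the later configuration files as well (for example glance-api.conf)

# rabbitmqctl change_password guest cloud

The change takes effect after a restart

# service rabbitmq-server restart

Add services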

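Before going further, here is a brief overview of the basic OpenStack components

OpenStack consists of the following parts:

Identity (code name "Keystone")

Dashboard (code name "Horizon")

Image Service (code name "Glance")

Network (code name "Neutron")

Block Storage (code name "Cinder")

Object Storage (code name "Swift")

Orchestration (code name "Heat")

Telemetry (code name "Ceilometer")

Database (code name "Trove")

Data processing (code name "Sahara")

I. Add the Identity service (controller node)

Create the keystone database and grant privileges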

# mysql -u root -p

Enter password:

CREATE DATABASE keystone;

#Create the keystone database

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'cloud';

#Allow the database user "keystone", connecting from the local host with the password "cloud", full access to the keystone database

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'cloud';

#Allow the database user "keystone", connecting from any host with the password "cloud", full access to the keystone database

flush privileges;

#Reload the privilege tables

exit

Install and configure the keystone components

Install keystone

# apt-get install keystone python-keystoneclient

Configure keystone.conf

# vi /etc/keystone/keystone.conf

[DEFAULT]

...

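admin_token = ADMIN_TOKEN

#Replace ADMIN_TOKEN with any unique secret string; the official documentation generates one with "openssl rand -hex 10". The configuration file does not expand shell commands, so paste the generated value itself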

[database]

...

connection = mysql://keystone:cloud@controller/keystone

#Lets keystone connect to the keystone database; the password here must match the one granted in the database above exactly

[token]

...

provider = keystone.token.providers.uuid.Provider

driver = keystone.token.persistence.backends.sql.Token

#Use the UUID token provider and the SQL token persistence driver

[revoke]

...

driver = keystone.contrib.revoke.backends.sql.Revoke

[DEFAULT]

...

verbose = True

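#Enable verbose logging

Synchronize the keystone database

# su -s /bin/sh -c "keystone-manage db_sync" keystone

Restart the keystone service

# service keystone restart

By default the Ubuntu packages create a lightweight SQLite database. Since we have created our own database, delete the automatically generated file

# rm -f /var/lib/keystone/keystone.db

By default, issued tokens are stored in the database indefinitely, even after they expire, which wastes resources. The following command installs a cron job that purges expired tokens every hour

# (crontab -l -u keystone 2>&1 | grep -q token_flush) || echo '@hourly /usr/bin/keystone-manage token_flush >/var/log/keystone/keystone-tokenflush.log 2>&1' >> /var/spool/cron/crontabs/keystone

Create tenants, users, and roles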

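Concepts used by keystone (see http://www.aboutyun.com/thread-10124-1-1.html)

1. User

A user represents a person or program that accesses the cloud through keystone. Users are verified with credentials such as passwords or API keys.

2. Tenant

A tenant is a collection of accessible resources across the services. For example, in Nova a tenant may own a set of machines, in Swift and Glance a set of stored objects or images, and in Quantum (the former name of Neutron) a set of network resources. By default a user is always bound to some tenant.

3. Role

A role represents a set of permissions on resources, for example virtual machines in Nova or images in Glance. Users can be added to any role, either globally or within a tenant. With a global role, the permissions apply to every tenant; with a tenant-level role, the user can exercise the permissions only inside that tenant.

4. Service

A service is a component such as Nova, Glance, or Swift. Using the first three concepts (User, Tenant, and Role), a service can decide whether the current user may access its resources. When a user tries to reach a service inside a tenant, the user must know whether the service exists and how to reach it, so different names are normally used for different services. The roles mentioned above can also be bound to a particular service. For example, when Swift needs administrator access to create objects, the same role should not necessarily grant administrator access to Nova. To achieve this, create two separate administrator roles, one bound to Swift and one bound to Nova, so that administrator access to Swift does not affect Nova or any other service.

5. Endpoint

An endpoint is the access point a service exposes; to use a service you must know its endpoint. Keystone therefore includes an endpoint template (it can be found in the conf directory after keystone is installed) that describes the endpoints of all existing services. An endpoint template contains a list of URLs, each of which is the access address of one service instance, in three flavors: public, private, and admin. A public URL can be reached from anywhere (such as http://compute.example.com), a private URL can be reached only from the local network (such as http://compute.example.local), and an admin URL is kept separate from regular access.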

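Set the environment variables

# export OS_SERVICE_TOKEN=ADMIN_TOKEN

#Set the administration token. Replace ADMIN_TOKEN with exactly the value of admin_token in the [DEFAULT] section of /etc/keystone/keystone.conf; generating a new random value here would not match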
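Since a mismatch between OS_SERVICE_TOKEN and admin_token makes the keystone commands in this part fail with authorization errors, it can help to compare the two before continuing. The following is only a sketch: conf_value and token_matches are hypothetical helper names, and the parsing assumes a plain INI-style file with "key = value" lines.

```shell
#!/bin/sh
# conf_value FILE SECTION KEY
# Prints the value of KEY inside [SECTION] of an INI-style file.
# Hypothetical helper; commented-out lines ("#key = ...") are ignored.
conf_value() {
    awk -F= -v section="[$2]" -v key="$3" '
        /^\[/ { current = $0; sub(/[[:space:]]+$/, "", current); next }
        current == section {
            k = $1; gsub(/[[:space:]]/, "", k)
            if (k == key) {
                v = substr($0, index($0, "=") + 1)
                gsub(/^[[:space:]]+|[[:space:]]+$/, "", v)
                print v
                exit
            }
        }' "$1"
}

# token_matches FILE
# Succeeds when $OS_SERVICE_TOKEN is set and equals admin_token in FILE.
token_matches() {
    [ -n "$OS_SERVICE_TOKEN" ] &&
        [ "$(conf_value "$1" DEFAULT admin_token)" = "$OS_SERVICE_TOKEN" ]
}
```

For example, "token_matches /etc/keystone/keystone.conf || echo 'token mismatch'" run as root on the controller node.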

# export OS_SERVICE_ENDPOINT=http://controller:35357/v2.0

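#Set the endpoint environment variable

Create tenants, users, and roles

Create the admin tenant

# keystone tenant-create --name admin --description "Admin Tenant"

Create the admin user

# keystone user-create --name admin --pass cloud --email 1403383953@qq.com

Create the admin role

# keystone role-create --name admin

Link the admin user, tenant, and role

# keystone user-role-add --user admin --tenant admin --role admin

Create the service tenant (the service users created later, such as glance and nova, are added to it)

# keystone tenant-create --name service --description "Service Tenant"

Create the service entity and API endpoint

Create the service entity for the Identity service

# keystone service-create --name keystone --type identity --description "OpenStack Identity"

Create the API endpoint for the Identity service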

# keystone endpoint-create \

--service-id $(keystone service-list | awk '/ identity / {print $2}') \

--publicurl http://controller:5000/v2.0 \

--internalurl http://controller:5000/v2.0 \

--adminurl http://controller:35357/v2.0 \

--region regionOne

That completes the keystone setup; next, verify that the steps so far were done correctly

Verify operation

Unset the two environment variables set earlier

# unset OS_SERVICE_TOKEN OS_SERVICE_ENDPOINT

As the admin user in the admin tenant, request an authentication token

# keystone --os-tenant-name admin --os-username admin --os-password cloud --os-auth-url http://controller:35357/v2.0 token-get

As the admin user in the admin tenant, list the tenants

# keystone --os-tenant-name admin --os-username admin --os-password cloud --os-auth-url http://controller:35357/v2.0 tenant-list

As the admin user in the admin tenant, list the users

# keystone --os-tenant-name admin --os-username admin --os-password cloud --os-auth-url http://controller:35357/v2.0 user-list

As the admin user in the admin tenant, list the roles

# keystone --os-tenant-name admin --os-username admin --os-password cloud --os-auth-url http://controller:35357/v2.0 role-list

Create an environment variable script for the OpenStack clients

# vi admin.sh

export OS_TENANT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=cloud

export OS_AUTH_URL=http://controller:35357/v2.0

Load the environment variable script

# source admin.sh

II. Add the Image service (controller node)

Install and configure the glance service

Create the glance database and grant privileges

# mysql -u root -p

Enter password:

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'cloud';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'cloud';

flush privileges;

exit

Create the glance user, service, and API endpoint

Load the environment variable script to run commands as admin

# source admin.sh

Create the glance user

# keystone user-create --name glance --pass cloud --email 1403383953@qq.com

Link the admin role to the glance user

# keystone user-role-add --user glance --tenant service --role admin

Create the Image service entity

# keystone service-create --name glance --type image --description "OpenStack Image Service"

Create the API endpoint for the Image service

# keystone endpoint-create \

--service-id $(keystone service-list | awk '/ image / {print $2}') \

--publicurl http://controller:9292 \

--internalurl http://controller:9292 \

--adminurl http://controller:9292 \

--region regionOne

Install and configure the glance components

Install the glance components

# apt-get install glance python-glanceclient

Configure the glance components

# vi /etc/glance/glance-api.conf

[DEFAULT]

...

rabbit_host = localhost

rabbit_port = 5672

rabbit_use_ssl = false

rabbit_userid = guest

rabbit_password = cloud

rabbit_virtual_host = /

rabbit_notification_exchange = glance

rabbit_notification_topic = notifications

rabbit_durable_queues = False

[database]

...

connection = mysql://glance:cloud@controller/glance

[keystone_authtoken]

#Authentication through keystone

auth_uri = http://controller:5000/v2.0

identity_uri = http://controller:35357

admin_tenant_name = service

admin_user = glance

admin_password = cloud

[paste_deploy]

#Use the keystone deployment flavor

...

flavor = keystone

[glance_store]

#Image storage settings

...

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

[DEFAULT]

#Disable notifications here; they are needed only by the Telemetry service

...

notification_driver = noop

[DEFAULT]

#Enable verbose logging

...

verbose = True

# vi /etc/glance/glance-registry.conf

[DEFAULT]

rabbit_host = 192.168.100.26

rabbit_port = 5672

rabbit_use_ssl = false

rabbit_userid = guest

rabbit_password = cloud

rabbit_virtual_host = /

rabbit_notification_exchange = glance

rabbit_notification_topic = notifications

rabbit_durable_queues = False

[database]

...

connection = mysql://glance:cloud@controller/glance

[keystone_authtoken]

...

auth_uri = http://controller:5000/v2.0

identity_uri = http://controller:35357

admin_tenant_name = service

admin_user = glance

admin_password = cloud

[paste_deploy]

...

flavor = keystone

[DEFAULT]

...

notification_driver = noop

[DEFAULT]

...

verbose = True

Synchronize the database

# su -s /bin/sh -c "glance-manage db_sync" glance

Restart the Image services

# service glance-registry restart

# service glance-api restart

Delete the automatically generated SQLite database

# rm -f /var/lib/glance/glance.sqlite

Verify operation

Choose a directory in which to save the OpenStack test image

# mkdir /tmp/images

#Pick whatever directory suits you

# cd /tmp/images

# wget -P /tmp/images http://cdn.download.cirros-cloud.net/0.3.3/cirros-0.3.3-x86_64-disk.img

#Download the test image

Load the environment variables

# source admin.sh

Upload the image

# glance image-create --name "cirros-0.3.3-x86_64" --file /tmp/images/cirros-0.3.3-x86_64-disk.img --disk-format qcow2 --container-format bare --is-public True --progress

List the uploaded images

# glance image-list

III. Add the Compute service

Install and configure the controller node

Create the nova database and grant privileges

# mysql -u root -p

Enter password:

CREATE DATABASE nova;

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'cloud';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'cloud';

flush privileges;

exit

Create the nova user, service entity, and API endpoint

Load the admin environment variables

# source admin.sh

Create the nova user

# keystone user-create --name nova --pass cloud --email 1403383953@qq.com

Link the admin role to the nova user

# keystone user-role-add --user nova --tenant service --role admin

Create the nova service entity

# keystone service-create --name nova --type compute --description "OpenStack Compute"

Create the API endpoint for nova

# keystone endpoint-create \

--service-id $(keystone service-list | awk '/ compute / {print $2}') \

--publicurl http://controller:8774/v2/%\(tenant_id\)s \

--internalurl http://controller:8774/v2/%\(tenant_id\)s \

--adminurl http://controller:8774/v2/%\(tenant_id\)s \

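--region regionOne

Install and configure the Compute controller components

Install the Compute controller components

# apt-get install nova-api nova-cert nova-conductor nova-consoleauth nova-novncproxy nova-scheduler python-novaclient

Configure the Compute controller components

# vi /etc/nova/nova.conf

[database]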

...

connection = mysql://nova:cloud@controller/nova

[DEFAULT]

#RabbitMQ settings; RabbitMQ carries the messages exchanged between the OpenStack components

...

rpc_backend = rabbit

rabbit_host = controller

rabbit_password = cloud

[DEFAULT]

#Authentication strategy

...

auth_strategy = keystone

[keystone_authtoken]

...

auth_uri = http://controller:5000/v2.0

identity_uri = http://controller:35357

admin_tenant_name = service

admin_user = nova

admin_password = cloud

[DEFAULT]

...

my_ip = 192.168.100.26

[DEFAULT]

...

vncserver_listen = 192.168.100.26

#VNC listen address

vncserver_proxyclient_address = 192.168.100.26

#VNC proxy client address

[glance]

#Location of the Image service

host = controller

[DEFAULT]

...

verbose = True

Synchronize the database

# su -s /bin/sh -c "nova-manage db sync" nova

Restart the Compute services

# service nova-api restart

# service nova-cert restart

# service nova-consoleauth restart

# service nova-scheduler restart

# service nova-conductor restart

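# service nova-novncproxy restart

Delete the automatically generated SQLite database

# rm -f /var/lib/nova/nova.sqlite

Add compute nodes (install and configure every compute node as described below, however many there are)

Install the packages

# apt-get install nova-compute sysfsutils

Configure the Compute service on the compute node

# vi /etc/nova/nova.conf

[DEFAULT]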

...

rpc_backend = rabbit

rabbit_host = controller

rabbit_password = cloud

[DEFAULT]

...

auth_strategy = keystone

[keystone_authtoken]

...

auth_uri = http://controller:5000/v2.0

identity_uri = http://controller:35357

admin_tenant_name = service

admin_user = nova

admin_password = cloud

[DEFAULT]

...

my_ip = 192.168.100.**

#The compute node's own IP address

[DEFAULT]

#VNC settings

...

vnc_enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = 192.168.100.**

novncproxy_base_url = http://controller:6080/vnc_auto.html

#URL of the noVNC proxy on the controller, through which instance consoles on this compute node are reached

[glance]

...

host = controller

[DEFAULT]

...

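verbose = True

Check whether this compute node supports hardware acceleration for virtual machines

# egrep -c '(vmx|svm)' /proc/cpuinfo

#A result other than 0 means the host supports hardware acceleration

#If the result is not 0, nothing needs to change; if it is 0, edit /etc/nova/nova-compute.conf as shown in the next step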
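The same decision can be expressed as a small script. This is a sketch under assumptions: pick_virt_type is a hypothetical helper name, and it assumes that a host with the vmx or svm CPU flag should use kvm while any other host falls back to qemu, as the step above describes.

```shell
#!/bin/sh
# pick_virt_type [CPUINFO_FILE]
# Prints "kvm" when the CPU flags include vmx or svm, otherwise "qemu".
# Hypothetical helper for illustration; reads /proc/cpuinfo by default.
pick_virt_type() {
    cpuinfo=${1:-/proc/cpuinfo}
    # grep -c prints the number of matching lines and exits non-zero
    # when there are none, hence the "|| true".
    count=$(grep -E -c '(vmx|svm)' "$cpuinfo" 2>/dev/null || true)
    count=${count:-0}
    if [ "$count" -gt 0 ]; then
        echo kvm
    else
        echo qemu
    fi
}
```

Running pick_virt_type with no argument on a compute node prints the value to use for virt_type in the [libvirt] section.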

# vi /etc/nova/nova-compute.conf

[libvirt]

virt_type = qemu

Restart the Compute service

# service nova-compute restart

Check whether this compute node supports KVM

root@compute-26:~# kvm-ok

The program 'kvm-ok' is currently not installed. You can install it by typing:

apt-get install cpu-checker

# apt-get install cpu-checker

# kvm-ok

INFO: /dev/kvm exists

KVM acceleration can be used

The output above means the host supports KVM. If you see the output below instead, enable the CPU virtualization (VT) feature in the BIOS

INFO: KVM (vmx) is disabled by your BIOS

HINT: Enter your BIOS setup and enable Virtualization Technology (VT)

Verify operation

# source admin.sh

List the nova services; in the State column, up is healthy and down is not

root@compute-26:~# nova service-list

+----+------------------+------------+----------+---------+-------+----------------------------+-----------------+

| Id | Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |

+----+------------------+------------+----------+---------+-------+----------------------------+-----------------+

| 1 | nova-consoleauth | compute-26 | internal | enabled | up | 2015-04-28T14:07:22.000000 | - |

| 2 | nova-cert | compute-26 | internal | enabled | up | 2015-04-28T14:07:23.000000 | - |

| 3 | nova-scheduler | compute-26 | internal | enabled | up | 2015-04-28T14:07:23.000000 | - |

| 4 | nova-conductor | compute-26 | internal | enabled | up | 2015-04-28T14:07:23.000000 | - |

| 5 | nova-compute | compute-11 | nova | enabled | up | 2015-04-28T14:07:26.000000 | - |

| 6 | nova-compute | compute-46 | nova | enabled | up | 2015-04-28T14:07:24.000000 | - |

| 7 | nova-compute | compute-60 | nova | enabled | up | 2015-04-28T14:07:20.000000 | - |

+----+------------------+------------+----------+---------+-------+----------------------------+-----------------+

Service list from nova-manage

root@compute-26:~# nova-manage service list

Binary Host Zone Status State Updated_At

nova-consoleauth compute-26 internal enabled :-) 2015-04-28 14:10:03

nova-cert compute-26 internal enabled :-) 2015-04-28 14:10:03

nova-scheduler compute-26 internal enabled :-) 2015-04-28 14:10:03

nova-conductor compute-26 internal enabled :-) 2015-04-28 14:10:03

nova-compute compute-11 nova enabled :-) 2015-04-28 14:09:56

nova-compute compute-46 nova enabled :-) 2015-04-28 14:09:55

nova-compute compute-60 nova enabled :-) 2015-04-28 14:10:00

nova image list

root@compute-26:~# nova image-list

+--------------------------------------+---------------------+--------+--------------------------------------+

| ID | Name | Status | Server |

+--------------------------------------+---------------------+--------+--------------------------------------+

| 5c795a8c-d670-4737-8f89-b1add4d72cd2 | cirros-0.3.3-x86_64 | ACTIVE | |

| 492fc9af-4dca-4e26-9485-14555d834850 | snap | ACTIVE | afa453c3-4d21-4602-a73c-8c062decf811 |

| c58fda82-68d2-4334-b2ed-91c08496fbc7 | ubuntu12.04 | ACTIVE | |

| 3d0d616d-4d7b-4c8f-a252-e0e7ebc21b77 | win2003 | ACTIVE | |

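+--------------------------------------+---------------------+--------+--------------------------------------+

IV. Add the Networking component (neutron)

OpenStack Networking has five network types in total, although only four of them really apply to tenant networks. The Flat type is not a tenant network: instances are attached directly to a provider network, meaning the administrator creates one network, every tenant connects straight to the external network, and instances receive public IP addresses without NAT.

Local: intended mainly for testing; it works only in an all-in-one setup, and no further nodes can be added

GRE: no limit on the number of tunnels, but performance is somewhat lower.

VLAN: limited to 4096 VLAN IDs

VXLAN: no such limit on the number of networks, and performance is better than GRE.

Reference: http://www.chenshake.com/how-node-installation-centos-6-4-openstack-havana-ovsgre/#i

Install and configure the Networking controller components (on the controller node)

Create the neutron database and grant privileges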

# mysql -u root -p

Enter password:

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'cloud';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'cloud';

flush privileges;

exit

Create the neutron user, service entity, and API endpoint

Load the admin environment variables

# source admin.sh

Create the neutron user

# keystone user-create --name neutron --pass cloud --email 1403383953@qq.com

Link the admin role to the neutron user

# keystone user-role-add --user neutron --tenant service --role admin

Create the neutron service entity

# keystone service-create --name neutron --type network --description "OpenStack Networking"

Create the API endpoint for the Networking service

# keystone endpoint-create \

--service-id $(keystone service-list | awk '/ network / {print $2}') \

--publicurl http://controller:9696 \

--adminurl http://controller:9696 \

--internalurl http://controller:9696 \

--region regionOne

Install and configure the Networking components

Install the Networking components

# apt-get install neutron-server neutron-plugin-ml2 python-neutronclient

Configure the Networking components

# vi /etc/neutron/neutron.conf

[DEFAULT]

...

verbose = True

rpc_backend = rabbit

rabbit_host = controller

rabbit_password = cloud

auth_strategy = keystone

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

#The lines above select the ML2 core plugin and the router service plugin

notify_nova_on_port_status_changes = True

notify_nova_on_port_data_changes = True

nova_url = http://controller:8774/v2

nova_admin_auth_url = http://controller:35357/v2.0

nova_region_name = regionOne

nova_admin_username = nova

nova_admin_tenant_id = SERVICE_TENANT_ID

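#SERVICE_TENANT_ID can be obtained with the "keystone tenant-get service" command

nova_admin_password = cloud

#The notify_nova and nova_* settings make neutron report network topology changes to nova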

[database]

...

connection = mysql://neutron:cloud@controller/neutron

[keystone_authtoken]

...

auth_uri = http://controller:5000/v2.0

identity_uri = http://controller:35357

admin_tenant_name = service

admin_user = neutron

admin_password = cloud

root@compute-26:~# keystone tenant-get service

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | Service Tenant |

| enabled | True |

| id | 2bfff95cfe46442a8232988586b07094 |

| name | service |

+-------------+----------------------------------+

#The id value is the SERVICE_TENANT_ID used in the configuration file above

Configure the ml2 plugin

ML2 uses the OVS mechanism to build the virtual networking framework for instances. Because the controller node does not handle instance network traffic, OVS does not need to be installed on it

OVS-related options

OVS.integration_bridge: br-int

OVS.tunnel_bridge: br-tun

OVS.local_ip: used by GRE networks; the internal IP address (in the referenced article, the address on eth1)

OVS.tunnel_id_ranges: 1:1000, the range of tunnel IDs, which determines how many GRE tunnels are available.

OVS.bridge_mappings: physnet1:br-eth1, used in VLAN mode

OVS.network_vlan_ranges: physnet1:10:20, used in VLAN mode

Reference: http://www.chenshake.com/how-node-installation-centos-6-4-openstack-havana-ovsgre/#i

# vi /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

...

type_drivers = flat,gre

#Network type drivers to load: flat and gre (generic routing encapsulation)

tenant_network_types = gre

#Tenant network type

mechanism_drivers = openvswitch

#ML2 uses the OVS mechanism driver

[ml2_type_gre]

tunnel_id_ranges = 1:1000

#Range of tunnel IDs available for tenant networks

[securitygroup]

#Security group settings

...

enable_security_group = True

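#Enable security groups

enable_ipset = True

#Use ipset to speed up the iptables security groups; this requires ipset to be installed on the nodes running the L2 agent

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

#Firewall driver

Configure Compute to use Networking

The distribution packages configure Compute to use legacy networking by default; to use neutron networking it must be configured by hand

# vi /etc/nova/nova.conf

[DEFAULT]

...

network_api_class = nova.network.neutronv2.api.API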

security_group_api = neutron

linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver

firewall_driver = nova.virt.firewall.NoopFirewallDriver

#Firewall setting: nova's own firewall is disabled because neutron handles security groups

[neutron]

...

url = http://controller:9696

auth_strategy = keystone

admin_auth_url = http://controller:35357/v2.0

admin_tenant_name = service

admin_username = neutron

admin_password = cloud

#Access parameters that let nova reach the neutron service

Synchronize the database

# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade juno" neutron

Restart the services

# service nova-api restart

# service nova-scheduler restart

# service nova-conductor restart

# service neutron-server restart

Verify operation

Load the admin environment variables

# source admin.sh

List the loaded extensions to confirm that neutron-server started successfully

# neutron ext-list

+-----------------------+-----------------------------------------------+

| alias | name |

+-----------------------+-----------------------------------------------+

| security-group | security-group |

| l3_agent_scheduler | L3 Agent Scheduler |

| ext-gw-mode | Neutron L3 Configurable external gateway mode |

| binding | Port Binding |

| provider | Provider Network |

| agent | agent |

| quotas | Quota management support |

| dhcp_agent_scheduler | DHCP Agent Scheduler |

| l3-ha | HA Router extension |

| multi-provider | Multi Provider Network |

| external-net | Neutron external network |

| router | Neutron L3 Router |

| allowed-address-pairs | Allowed Address Pairs |

| extraroute | Neutron Extra Route |

| extra_dhcp_opt | Neutron Extra DHCP opts |

| dvr | Distributed Virtual Router |

+-----------------------+-----------------------------------------------+

Install and configure the network node (on the network node)

Configure the kernel networking parameters

# vi /etc/sysctl.conf

net.ipv4.ip_forward=1

net.ipv4.conf.all.rp_filter=0

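net.ipv4.conf.default.rp_filter=0

Apply the changes

# sysctl -p

Install and configure the Networking components

Install the Networking components

# apt-get install neutron-plugin-ml2 neutron-plugin-openvswitch-agent neutron-l3-agent neutron-dhcp-agent

Configure the Networking components

Configure the common Networking settings

# vi /etc/neutron/neutron.conf

[DEFAULT]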

...

rpc_backend = rabbit

rabbit_host = controller

rabbit_password = cloud

auth_strategy = keystone

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

verbose = True

[keystone_authtoken]

...

auth_uri = http://controller:5000/v2.0

identity_uri = http://controller:35357

admin_tenant_name = service

admin_user = neutron

admin_password = cloud

Configure the ml2 plugin

# vi /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

...

type_drivers = flat,gre

tenant_network_types = gre

mechanism_drivers = openvswitch

[ml2_type_flat]

...

flat_networks = external

[ml2_type_gre]

...

tunnel_id_ranges = 1:1000

[securitygroup]

...

enable_security_group = True

enable_ipset = True

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

[ovs]

local_ip = 192.168.100.44

#Used by GRE networks: the node's internal IP address

enable_tunneling = True

bridge_mappings = external:br-ex

#Maps the physical network named external to the br-ex bridge

[agent]

tunnel_types = gre

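#The agent uses GRE as its tunnel type

Configure the L3 agent

This agent provides routing services for the virtual networks

# vi /etc/neutron/l3_agent.ini

[DEFAULT]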

verbose = True

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

#Interface driver for the L3 agent; the OVS interface driver is used here

use_namespaces = True

external_network_bridge = br-ex

#External bridge

router_delete_namespaces = True

Configure the DHCP agent

The DHCP agent provides DHCP service for the virtual networks

# vi /etc/neutron/dhcp_agent.ini

[DEFAULT]

verbose = True

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

use_namespaces = True

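dhcp_delete_namespaces = True

Because GRE adds encapsulation overhead, the MTU inside the instances has to be lowered from the default of 1500 (1400 is a safe choice; the dnsmasq option below sets 1454). For background, see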

https://ask.openstack.org/en/question/6140/quantum-neutron-gre-slow-performance/
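The MTU is pushed to instances through DHCP option 26 (interface MTU), which is what the dnsmasq setting in the following steps does. As a small sketch, the hypothetical helper below builds that option line for a chosen MTU and rejects values outside 68 to 1500 (the IPv4 minimum and the standard Ethernet MTU); the function name is an assumption made for this example.

```shell
#!/bin/sh
# dnsmasq_mtu_line MTU
# Prints the dnsmasq option line that forces DHCP option 26 to MTU.
# Hypothetical helper for illustration only.
dnsmasq_mtu_line() {
    case $1 in
        ''|*[!0-9]*)
            echo "MTU must be a positive integer" >&2
            return 1
            ;;
    esac
    if [ "$1" -lt 68 ] || [ "$1" -gt 1500 ]; then
        echo "MTU $1 is outside 68..1500" >&2
        return 1
    fi
    printf 'dhcp-option-force=26,%s\n' "$1"
}
```

For example, "dnsmasq_mtu_line 1454 > /etc/neutron/dnsmasq-neutron.conf" writes the same line that this guide adds by hand.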

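# vi /etc/neutron/dhcp_agent.ini

[DEFAULT]

...

dnsmasq_config_file = /etc/neutron/dnsmasq-neutron.conf

Create /etc/neutron/dnsmasq-neutron.conf and add the following setting

dhcp-option-force=26,1454

Kill any running dnsmasq processes

# pkill dnsmasq

Configure the metadata agent

The metadata agent provides configuration information to instances, such as instance credentials

# vi /etc/neutron/metadata_agent.ini

[DEFAULT]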

...

auth_url = http://controller:5000/v2.0

auth_region = regionOne

admin_tenant_name = service

admin_user = neutron

admin_password = cloud

nova_metadata_ip = controller

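#Access parameters and the address of the nova metadata service

metadata_proxy_shared_secret = METADATA_SECRET

#Shared secret for the metadata proxy; replace METADATA_SECRET with a unique string of your own

verbose = True

Configure the metadata proxy shared secret on the controller node

# vi /etc/nova/nova.conf

[neutron]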

...

service_metadata_proxy = True

metadata_proxy_shared_secret = METADATA_SECRET

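The METADATA_SECRET here must be identical to the one on the network node

Restart the Compute API service on the controller node

# service nova-api restart

Return to the network node and continue

Configure OVS

The OVS service provides the underlying virtual networking framework for instances

The integration bridge br-int handles internal instance network traffic

The external bridge br-ex handles external network traffic

Before configuring OVS, the network configuration has to be changed

# vi /etc/network/interfaces

# This file describes the network interfaces available on your system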

# and how to activate them. For more information, see interfaces(5).

# The loopback network interface

auto lo

iface lo inet loopback

# The primary network interface

auto br-ex

iface br-ex inet static

address 192.168.100.44

netmask 255.255.255.0

gateway 192.168.100.254

dns-nameservers 8.8.8.8

# The external network interface

auto p4p1

iface p4p1 inet manual

up ip link set dev $IFACE up

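down ip link set dev $IFACE down

After changing the interface configuration, do not restart networking yet, or the connection will drop

Restart the OVS service

# service openvswitch-switch restart

Add the OVS external bridge

# ovs-vsctl add-br br-ex

Add a port to the external bridge that connects it to the physical network interface

# ovs-vsctl add-port br-ex p4p1

#The interface is named p4p1 in the network configuration above, so the same name is used here

To get reasonable throughput between instances and the external network, disable GRO

GRO (generic receive offload) is a network interface feature that merges received packets before they are handed to the network stack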
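Whether GRO is currently enabled can be read from the output of "ethtool -k" (lower-case k), both before and after the change made in this step. The helper below is only a sketch: gro_state is a hypothetical name, and it assumes the usual "generic-receive-offload: on" or "generic-receive-offload: off" line in that output.

```shell
#!/bin/sh
# gro_state
# Reads "ethtool -k IFACE" output on stdin and prints the GRO state
# ("on" or "off"). Hypothetical helper for illustration only.
gro_state() {
    awk '/generic-receive-offload:/ { print $2; exit }'
}
```

For example, "ethtool -k p4p1 | gro_state" should print off once GRO has been disabled on p4p1.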

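# ethtool -K p4p1 gro off

Restart the Networking services and the network interface (I do not know a quick way to restart the interface on 14.04, so I reboot the machine each time; suggestions are welcome)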

# service neutron-plugin-openvswitch-agent restart

# service neutron-l3-agent restart

# service neutron-dhcp-agent restart

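# service neutron-metadata-agent restart

Verify operation on the network node

If the following commands cannot be run on the network node, run them on the controller node

Load the admin environment variables; for convenience, copy the admin environment script to every node

# source admin.sh

List the Networking agents

# neutron agent-list

+--------------------------------------+--------------------+------------+-------+----------------+---------------------------+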

| id | agent_type | host | alive | admin_state_up | binary |

+--------------------------------------+--------------------+------------+-------+----------------+---------------------------+

| 767ade3b-c9ad-4bfd-82cb-c333c86c5f00 | DHCP agent | compute-44 | :-) | True | neutron-dhcp-agent |

| 7add274c-b873-4456-8065-5840f38a03e9 | Open vSwitch agent | compute-44 | :-) | True | neutron-openvswitch-agent |

| eb27c4e8-9c1b-4e8f-b632-c9798ed18235 | Metadata agent | compute-44 | :-) | True | neutron-metadata-agent |

| f0a4f988-514a-425c-88a3-8d28be7ec84f | L3 agent | compute-44 | :-) | True | neutron-l3-agent |

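+--------------------------------------+--------------------+------------+-------+----------------+---------------------------+

Install and configure Networking on the compute nodes (on each compute node)

The compute node handles connectivity and security groups for instances

Configure the kernel networking parameters

# vi /etc/sysctl.conf

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

Apply the changes

# sysctl -p

Install the Networking components

# apt-get install neutron-plugin-ml2 neutron-plugin-openvswitch-agent

Configure the common Networking settings

# vi /etc/neutron/neutron.conf

[DEFAULT]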

...

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

verbose = True

rpc_backend = rabbit

rabbit_host = controller

rabbit_password = cloud

auth_strategy = keystone

[keystone_authtoken]

...

auth_uri = http://controller:5000/v2.0

identity_uri = http://controller:35357

admin_tenant_name = service

admin_user = neutron

admin_password = cloud

Configure the ml2 plugin

# vi /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

...

type_drivers = flat,gre

tenant_network_types = gre

mechanism_drivers = openvswitch

[ml2_type_gre]

...

tunnel_id_ranges = 1:1000

[securitygroup]

...

enable_security_group = True

enable_ipset = True

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

[ovs]

...

local_ip = INSTANCE_TUNNELS_INTERFACE_IP_ADDRESS

#IP address of the instance tunnel interface: the compute node's own IP address

enable_tunneling = True

[agent]

...

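tunnel_types = gre

Restart the OVS service

# service openvswitch-switch restart

Configure Compute to use Networking

The distribution packages configure Compute to use legacy networking by default; to use neutron networking it must be configured by hand

# vi /etc/nova/nova.conf

[DEFAULT]

...

network_api_class = nova.network.neutronv2.api.API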

security_group_api = neutron

linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver

firewall_driver = nova.virt.firewall.NoopFirewallDriver

#Firewall setting: nova's own firewall is disabled because neutron handles security groups

[neutron]

...

url = http://controller:9696

auth_strategy = keystone

admin_auth_url = http://controller:35357/v2.0

admin_tenant_name = service

admin_username = neutron

admin_password = cloud

#Access parameters that let nova reach the neutron service

Restart the services

# service nova-compute restart

# service neutron-plugin-openvswitch-agent restart

Verify operation

Load the environment variables

# source admin.sh

List the neutron agents

root@compute-44:~# neutron agent-list

+--------------------------------------+--------------------+------------+-------+----------------+---------------------------+

| id | agent_type | host | alive | admin_state_up | binary |

+--------------------------------------+--------------------+------------+-------+----------------+---------------------------+

| 047c83cc-e723-46cc-b7ca-20bf0c952523 | Open vSwitch agent | compute-60 | :-) | True | neutron-openvswitch-agent |

| 4a3695c4-47ed-464c-8a1e-365d6c27403d | Open vSwitch agent | compute-46 | :-) | True | neutron-openvswitch-agent |

| 767ade3b-c9ad-4bfd-82cb-c333c86c5f00 | DHCP agent | compute-44 | :-) | True | neutron-dhcp-agent |

| 7add274c-b873-4456-8065-5840f38a03e9 | Open vSwitch agent | compute-44 | :-) | True | neutron-openvswitch-agent |

| 848f6ab2-9108-430b-82cf-7d6a55305c6e | Open vSwitch agent | compute-11 | :-) | True | neutron-openvswitch-agent |

| eb27c4e8-9c1b-4e8f-b632-c9798ed18235 | Metadata agent | compute-44 | :-) | True | neutron-metadata-agent |

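| f0a4f988-514a-425c-88a3-8d28be7ec84f | L3 agent | compute-44 | :-) | True | neutron-l3-agent |

+--------------------------------------+--------------------+------------+-------+----------------+---------------------------+

V. Add the dashboard

The dashboard provides the OpenStack web interface

Install the dashboard components

# apt-get install openstack-dashboard apache2 libapache2-mod-wsgi memcached python-memcache

Restart apache2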

root@compute-26:~# service apache2 restart

* Restarting web server apache2

AH00558: apache2: Could not reliably determine the server's fully qualified domain name, using 192.168.100.26. Set the 'ServerName' directive globally to suppress this message

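...done.

If you see the message above, see

http://blog.csdn.net/moolight_shadow/article/details/45066165

Restart the memcached service

# service memcached restart

If the earlier configuration is correct, the OpenStack web interface can now be opened and the basic functions (creating networks, creating instances, and so on) are available

Web page: http://192.168.100.26/horizon

Log in to start performing simple operations

If the horizon page does not load, check the settings in this file

/etc/openstack-dashboard/local_settings.py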

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*']

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': '127.0.0.1:11211',

}

}

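TIME_ZONE = "UTC"

VI. Add the Block Storage service

The Block Storage service provides block devices that serve as back-end storage for instances

Install and configure the controller node

Create the cinder database and grant privileges

# mysql -u root -p

Enter password:

CREATE DATABASE cinder;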

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'cloud';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'cloud';

flush privileges;

exit

Create the cinder user, service entities, and API endpoints

Load the admin environment variables

# source admin.sh

Create the cinder user

# keystone user-create --name cinder --pass cloud --email 1403383953@qq.com

Link the admin role to the cinder user

# keystone user-role-add --user cinder --tenant service --role admin

Create the cinder service entities

# keystone service-create --name cinder --type volume --description "OpenStack Block Storage"

# keystone service-create --name cinderv2 --type volumev2 --description "OpenStack Block Storage"

Create the API endpoints for cinder

# keystone endpoint-create \

--service-id $(keystone service-list | awk '/ volume / {print $2}') \

--publicurl http://controller:8776/v1/%\(tenant_id\)s \

--internalurl http://controller:8776/v1/%\(tenant_id\)s \

--adminurl http://controller:8776/v1/%\(tenant_id\)s \

--region regionOne

# keystone endpoint-create \

--service-id $(keystone service-list | awk '/ volumev2 / {print $2}') \

--publicurl http://controller:8776/v2/%\(tenant_id\)s \

--internalurl http://controller:8776/v2/%\(tenant_id\)s \

--adminurl http://controller:8776/v2/%\(tenant_id\)s \

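--region regionOne

Install and configure the Block Storage controller components

Install the Block Storage controller components

# apt-get install cinder-api cinder-scheduler python-cinderclient

Configure the Block Storage controller components

# vi /etc/cinder/cinder.conf

[DEFAULT]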

...

rpc_backend = rabbit

rabbit_host = controller

rabbit_password = cloud

auth_strategy = keystone

my_ip = 192.168.100.26

verbose = True

[database]

...

connection = mysql://cinder:cloud@controller/cinder

[keystone_authtoken]

...

auth_uri = http://controller:5000/v2.0

identity_uri = http://controller:35357

admin_tenant_name = service

admin_user = cinder

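admin_password = cloud

Sync the database

# su -s /bin/sh -c "cinder-manage db sync" cinder

Restart the services

# service cinder-scheduler restart

# service cinder-api restart

Remove the SQLite database file that is created by default

# rm -f /var/lib/cinder/cinder.sqlite

Install and configure a storage node (in this document, Block Storage is co-located on the controller node)

Install LVM

# apt-get install lvm2

Configure LVM

Create a physical volume

# pvcreate /dev/sda2

Create a volume group

# vgcreate cinder-volumes /dev/sda2

Edit the following file

# vi /etc/lvm/lvm.conf

devices {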

...

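filter = [ "a/sda/", "r/.*/"]

Install and configure the Block Storage volume components

Install the packages

# apt-get install cinder-volume python-mysqldb

Configure the components (in /etc/cinder/cinder.conf)

[DEFAULT]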

...

rpc_backend = rabbit

rabbit_host = controller

rabbit_password = cloud

auth_strategy = keystone

my_ip = 192.168.100.26

glance_host = controller

verbose = True

[database]

...

connection = mysql://cinder:cloud@controller/cinder

[keystone_authtoken]

...

auth_uri = http://controller:5000/v2.0

identity_uri = http://controller:35357

admin_tenant_name = service

admin_user = cinder

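admin_password = cloud

Restart the services

# service tgt restart

# service cinder-volume restart

Remove the SQLite database file that is created by default

# rm -f /var/lib/cinder/cinder.sqlite

Verify operation

Load the admin environment variables

# source admin.sh

List the cinder services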

# cinder service-list

root@compute-26:~# source admin-openrc.sh

root@compute-26:~# cinder service-list

+------------------+------------+------+---------+-------+----------------------------+-----------------+
| Binary           | Host       | Zone | Status  | State | Updated_at                 | Disabled Reason |
+------------------+------------+------+---------+-------+----------------------------+-----------------+
| cinder-scheduler | compute-26 | nova | enabled | up    | 2015-04-30T07:36:28.000000 | None            |
| cinder-volume    | compute-26 | nova | enabled | up    | 2015-04-30T07:36:25.000000 | None            |
+------------------+------------+------+---------+-------+----------------------------+-----------------+

Create a test volume named test with a size of 1 GB

# cinder create --display-name test 1

+---------------------+--------------------------------------+
| Property            | Value                                |
+---------------------+--------------------------------------+
| attachments         | []                                   |
| availability_zone   | nova                                 |
| bootable            | false                                |
| created_at          | 2015-04-30T07:38:26.273424           |
| display_description | None                                 |
| display_name        | test                                 |
| encrypted           | False                                |
| id                  | d4b482c3-e6ab-4bce-b185-d8e3a05f14d3 |
| metadata            | {}                                   |
| size                | 1                                    |
| snapshot_id         | None                                 |
| source_volid        | None                                 |
| status              | creating                             |
| volume_type         | None                                 |
+---------------------+--------------------------------------+

Check the status of the newly created test volume

# cinder volume-list

+--------------------------------------+-----------+--------------+------+-------------+----------+-------------+
| ID                                   | Status    | Display Name | Size | Volume Type | Bootable | Attached to |
+--------------------------------------+-----------+--------------+------+-------------+----------+-------------+
| d4b482c3-e6ab-4bce-b185-d8e3a05f14d3 | available | test         | 1    | None        | false    |             |
+--------------------------------------+-----------+--------------+------+-------------+----------+-------------+

If the status is not available (for example, error), check the logs in the /var/log/cinder directory.
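
Because a new volume starts in the creating state, it can be handy to read the Status column from a script rather than by eye. The sketch below parses the cinder volume-list table shown above. volume_status and sample_volume_list are hypothetical helpers, and the parsing assumes the column order from that listing (ID, Status, Display Name, ...).

```shell
#!/bin/sh
# Hypothetical helper: read `cinder volume-list` output on stdin and print the
# Status of the volume whose Display Name equals $1.
# Assumes the column order shown above: ID | Status | Display Name | ...
volume_status() {
    awk -F'|' -v name="$1" '
        NF > 3 {
            status = $3
            display = $4
            gsub(/^ +| +$/, "", status)    # trim the padding around each cell
            gsub(/^ +| +$/, "", display)
            if (display == name) print status
        }'
}

# Sample input copied from the listing above (first four columns only).
sample_volume_list() {
    cat <<'EOF'
+--------------------------------------+-----------+--------------+------+
| ID                                   | Status    | Display Name | Size |
+--------------------------------------+-----------+--------------+------+
| d4b482c3-e6ab-4bce-b185-d8e3a05f14d3 | available | test         | 1    |
+--------------------------------------+-----------+--------------+------+
EOF
}

sample_volume_list | volume_status test
```

On a controller with the admin credentials loaded, piping the live command into the helper (cinder volume-list | volume_status test) should print the current status; anything other than available or creating is the cue to look in /var/log/cinder.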

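7. Telemetry module

Install and configure the controller node

Because the Telemetry module handles a large volume of data, it uses MongoDB, which is better suited to large datasets. (In a lab environment, MariaDB or MySQL would also work.)

Install and configure the database

# apt-get install mongodb-server mongodb-clients python-pymongo

Configure MongoDB

# vi /etc/mongodb.conf

bind_ip = 192.168.100.26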

smallfiles = true

# By default, MongoDB creates several 1 GB journal files in the /var/lib/mongodb/journal directory. That wastes a lot of space in a lab environment, so smallfiles = true is used to reduce the size of each journal file.

Stop the mongodb service

# service mongodb stop

Delete the initial MongoDB journal files

# rm /var/lib/mongodb/journal/prealloc.*

Start the mongodb service

# service mongodb start

Create the ceilometer database in MongoDB

# mongo --host controller --eval 'db = db.getSiblingDB("ceilometer");db.addUser({user: "ceilometer",pwd: "cloud",roles: [ "readWrite", "dbAdmin" ]})'

Create the ceilometer user, service, and API endpoint

Load the admin environment variables

# source admin.sh

Create the user

# keystone user-create --name ceilometer --pass cloud --email 1403383953@qq.com

Add the admin role to the ceilometer user

# keystone user-role-add --user ceilometer --tenant service --role admin

Create the ceilometer service entity

# keystone service-create --name ceilometer --type metering --description "Telemetry"

Create the ceilometer API endpoint

# keystone endpoint-create \

--service-id $(keystone service-list | awk '/ metering / {print $2}') \

--publicurl http://controller:8777 \

--internalurl http://controller:8777 \

--adminurl http://controller:8777 \

--region regionOne

Install and configure the Telemetry components

Install the Telemetry components

# apt-get install ceilometer-api ceilometer-collector ceilometer-agent-central ceilometer-agent-notification ceilometer-alarm-evaluator ceilometer-alarm-notifier python-ceilometerclient

Configure the Telemetry components

# vi /etc/ceilometer/ceilometer.conf

[DEFAULT]

...

rpc_backend = rabbit

rabbit_host = controller

rabbit_password = cloud

auth_strategy = keystone

verbose = True

[keystone_authtoken]

...

auth_uri = http://controller:5000/v2.0

identity_uri = http://controller:35357

admin_tenant_name = service

admin_user = ceilometer

admin_password = cloud

[service_credentials]

...

os_auth_url = http://controller:5000/v2.0

os_username = ceilometer

os_tenant_name = service

os_password = cloud

[publisher]

...

metering_secret = METERING_SECRET
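
The METERING_SECRET placeholder above needs a real value. One common way to produce one, sketched here on the assumption that openssl is installed (with a /dev/urandom fallback if it is not), is to generate 10 random bytes as hex. gen_secret is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print a 20-character lowercase hex string
# (10 random bytes) suitable for use as the metering_secret value.
gen_secret() {
    if command -v openssl >/dev/null 2>&1; then
        openssl rand -hex 10
    else
        # Fallback: read 10 bytes from the kernel RNG and hex-encode them.
        head -c 10 /dev/urandom | od -An -tx1 | tr -d ' \n'
        echo
    fi
}

secret=$(gen_secret)
echo "metering_secret = $secret"
```

Generate the value once and paste the same string into ceilometer.conf on every node; running the helper separately on each node would give each one a different secret.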

# Replace METERING_SECRET with a unique string of your own

Restart the services

# service ceilometer-agent-central restart

# service ceilometer-agent-notification restart

# service ceilometer-api restart

# service ceilometer-collector restart

# service ceilometer-alarm-evaluator restart

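# service ceilometer-alarm-notifier restart

Install and configure ceilometer on the compute nodes

Install the ceilometer compute agent

# apt-get install ceilometer-agent-compute

Configure the agent (in /etc/ceilometer/ceilometer.conf)

[DEFAULT]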

...

rabbit_host = controller

rabbit_password = cloud

verbose = True

[keystone_authtoken]

...

auth_uri = http://controller:5000/v2.0

identity_uri = http://controller:35357

admin_tenant_name = service

admin_user = ceilometer

admin_password = cloud

[service_credentials]

...

os_auth_url = http://controller:5000/v2.0

os_username = ceilometer

os_tenant_name = service

os_password = cloud

os_endpoint_type = internalURL

os_region_name = regionOne

[publisher]

...

metering_secret = METERING_SECRET

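# METERING_SECRET here must be identical to the value set on the controller node

Configure the Compute service to send notifications to the message bus

# vi /etc/nova/nova.conf

[DEFAULT]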

...

instance_usage_audit = True

instance_usage_audit_period = hour

notify_on_state_change = vm_and_task_state

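notification_driver = messagingv2

Restart the services

# service ceilometer-agent-compute restart

# service nova-compute restart

Configure the Image service (controller node)

Configure the Image service to send notifications to the message bus

Add the following to the [DEFAULT] section of both /etc/glance/glance-api.conf and /etc/glance/glance-registry.conf

[DEFAULT]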

...

notification_driver = messagingv2

rpc_backend = rabbit

rabbit_host = controller

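rabbit_password = cloud

Restart the Image service

# service glance-registry restart

# service glance-api restart

Configure the Block Storage service (normally done on both the controller node and the block storage nodes; here the storage node is co-located on the controller node, so only the controller node needs to be configured)

Configure the Block Storage service to send notifications to the message bus

# vi /etc/cinder/cinder.conf

[DEFAULT]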

...

control_exchange = cinder

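notification_driver = messagingv2

Restart the services

# service cinder-api restart

# service cinder-scheduler restart

# service cinder-volume restart

Verify operation

Load the admin environment variables

# source admin.sh

List the available meters

# ceilometer meter-list

# The output is long, so it is omitted here.

Download the test image that was uploaded to the Image service earlier

# glance image-download "cirros-0.3.3-x86_64" > cirros.img

After the download finishes, the meter list changes

# ceilometer meter-list

Retrieve usage statistics from the image.download meter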

# ceilometer statistics -m image.download -p 60

+--------+---------------------+---------------------+-------+------------+------------+------------+------------+----------+----------------------------+----------------------------+
| Period | Period Start        | Period End          | Count | Min        | Max        | Sum        | Avg        | Duration | Duration Start             | Duration End               |
+--------+---------------------+---------------------+-------+------------+------------+------------+------------+----------+----------------------------+----------------------------+
| 60     | 2013-11-18T18:08:50 | 2013-11-18T18:09:50 | 1     | 13167616.0 | 13167616.0 | 13167616.0 | 13167616.0 | 0.0      | 2013-11-18T18:09:05.334000 | 2013-11-18T18:09:05.334000 |
+--------+---------------------+---------------------+-------+------------+------------+------------+------------+----------+----------------------------+----------------------------+

This completes the basic OpenStack deployment. The Object Storage, Orchestration, and Data Processing modules are used less often, so they are not covered in this installation guide. For high availability and other advanced configuration, refer to the official documentation.

Corrections are welcome. This article is original work; you are free to repost it, but please credit the source.
