Mysql MGR集群搭建过程及常见问题

一、搭建过程

基于mysql5.7.25 和docker部署

1、拉取mysql5.7.25镜像

docker pull mysql:5.7.25

2、创建docker专用网络

docker network create --subnet=172.72.0.0/24 mysql-network

3、创建存储数据相关目录

根据实际的需求创建到对应的目录,不一定要一致

mkdir -p /usr/local/mysql/lygmgr15/conf.d

mkdir -p /usr/local/mysql/lygmgr15/data

mkdir -p /usr/local/mysql/lygmgr16/conf.d

mkdir -p /usr/local/mysql/lygmgr16/data

mkdir -p /usr/local/mysql/lygmgr17/conf.d

mkdir -p /usr/local/mysql/lygmgr17/data

4、启动3个mysql容器

第一个

docker run -d --name mysql5725mgr33065 \

-h lygmgr15 -p 33065:3306 --privileged=true --net=mysql-network --ip 172.72.0.15 \

-v /usr/local/mysql/lygmgr15/conf.d:/etc/mysql/conf.d -v /usr/local/mysql/lygmgr15/data:/var/lib/mysql/ \

-e MYSQL_ROOT_PASSWORD=123456 \

-e TZ=Asia/Shanghai \

mysql:5.7.25

第二个

docker run -d --name mysql5725mgr33066 \

-h lygmgr16 -p 33066:3306 --privileged=true --net=mysql-network --ip 172.72.0.16 \

-v /usr/local/mysql/lygmgr16/conf.d:/etc/mysql/conf.d -v /usr/local/mysql/lygmgr16/data:/var/lib/mysql/ \

-e MYSQL_ROOT_PASSWORD=123456 \

-e TZ=Asia/Shanghai \

mysql:5.7.25

第三个

docker run -d --name mysql5725mgr33067 \

-h lygmgr17 -p 33067:3306 --privileged=true --net=mysql-network --ip 172.72.0.17 \

-v /usr/local/mysql/lygmgr17/conf.d:/etc/mysql/conf.d -v /usr/local/mysql/lygmgr17/data:/var/lib/mysql/ \

-e MYSQL_ROOT_PASSWORD=123456 \

-e TZ=Asia/Shanghai \

mysql:5.7.25

–privileged=true 是为了解决无权限的问题

5、修改配置参数

开启binlog日志需要一个my.cnf文件。接下来分别在conf.d目录下创建my.cnf文件

第一个 my.cnf

[mysqld]

user=mysql

port=3306

character_set_server=utf8mb4

secure_file_priv=''

# server-id必须是唯一的,主节点设置为572533065

server-id = 572533065

# 时区

default-time-zone = '+8:00'

log_timestamps = SYSTEM

log-bin =

#binlog格式

binlog_format=row

binlog_checksum=NONE

log-slave-updates=1

skip-name-resolve

auto-increment-increment=2

auto-increment-offset=1

gtid-mode=ON

enforce-gtid-consistency=on

default_authentication_plugin=mysql_native_password

max_allowed_packet = 500M

master_info_repository=TABLE

relay_log_info_repository=TABLE

relay_log=lhrmgr15-relay-bin-ip15

#记录事务的算法

transaction_write_set_extraction=XXHASH64

# group的名字,是uuid,同一个集群uuid相同

loose-group_replication_group_name="aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa"

# 是否随服务器启动而自动启动组复制,不建议直接启动,怕故障恢复时有扰乱数据准确性的特殊情况

loose-group_replication_start_on_boot=OFF

# 每个节点MGR的IP地址和端口,host:port,是MGR的端口,不是数据库的端口

loose-group_replication_local_address= "172.72.0.15:33061"

# 集群所有MGR的IP地址和端口,是MGR的端口,不是数据库的端口

loose-group_replication_group_seeds= "172.72.0.15:33061,172.72.0.16:33062,172.72.0.17:33063"

# 开启引导模式,添加组成员,用于第一次搭建MGR或重建MGR的时候使用,只需要在集群内的其中一台启动GROUP_REPLICATION时开启,并且在GROUP_REPLICATION启动后关闭

loose-group_replication_bootstrap_group=OFF

loose-group_replication_ip_whitelist="172.72.0.15,172.72.0.16,172.72.0.17"

report_host=172.72.0.15

report_port=3306

第二个 my.cnf

[mysqld]

user=mysql

port=3306

character_set_server=utf8mb4

secure_file_priv=''

server-id = 572533066

default-time-zone = '+8:00'

log_timestamps = SYSTEM

log-bin =

binlog_format=row

binlog_checksum=NONE

log-slave-updates=1

gtid-mode=ON

enforce-gtid-consistency=ON

skip_name_resolve

default_authentication_plugin=mysql_native_password

max_allowed_packet = 500M

master_info_repository=TABLE

relay_log_info_repository=TABLE

relay_log=lhrmgr16-relay-bin-ip16

transaction_write_set_extraction=XXHASH64

loose-group_replication_group_name="aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa"

loose-group_replication_start_on_boot=OFF

loose-group_replication_local_address= "172.72.0.16:33062"

loose-group_replication_group_seeds= "172.72.0.15:33061,172.72.0.16:33062,172.72.0.17:33063"

loose-group_replication_bootstrap_group=OFF

loose-group_replication_ip_whitelist="172.72.0.15,172.72.0.16,172.72.0.17"

report_host=172.72.0.16

report_port=3306

第三个my.cnf

[mysqld]

user=mysql

port=3306

character_set_server=utf8mb4

secure_file_priv=''

server-id = 572533067

default-time-zone = '+8:00'

log_timestamps = SYSTEM

log-bin =

binlog_format=row

binlog_checksum=NONE

log-slave-updates=1

gtid-mode=ON

enforce-gtid-consistency=ON

skip_name_resolve

default_authentication_plugin=mysql_native_password

max_allowed_packet = 500M

master_info_repository=TABLE

relay_log_info_repository=TABLE

relay_log=lhrmgr16-relay-bin-ip16

transaction_write_set_extraction=XXHASH64

loose-group_replication_group_name="aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa"

loose-group_replication_start_on_boot=OFF

loose-group_replication_local_address= "172.72.0.17:33063"

loose-group_replication_group_seeds= "172.72.0.15:33061,172.72.0.16:33062,172.72.0.17:33063"

loose-group_replication_bootstrap_group=OFF

loose-group_replication_ip_whitelist="172.72.0.15,172.72.0.16,172.72.0.17"

report_host=172.72.0.17

report_port=3306

6、重启容器

docker restart mysql5725mgr33065 mysql5725mgr33066 mysql5725mgr33067

也可先添加my.cnf配置再启动容器,这样的话就不用重启了。

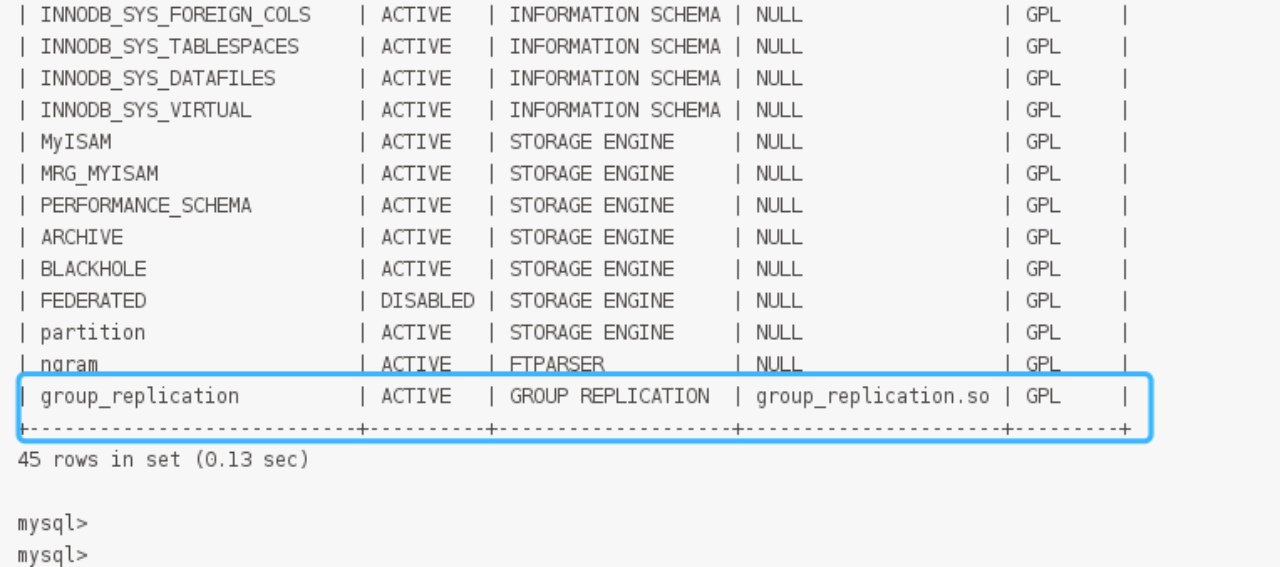

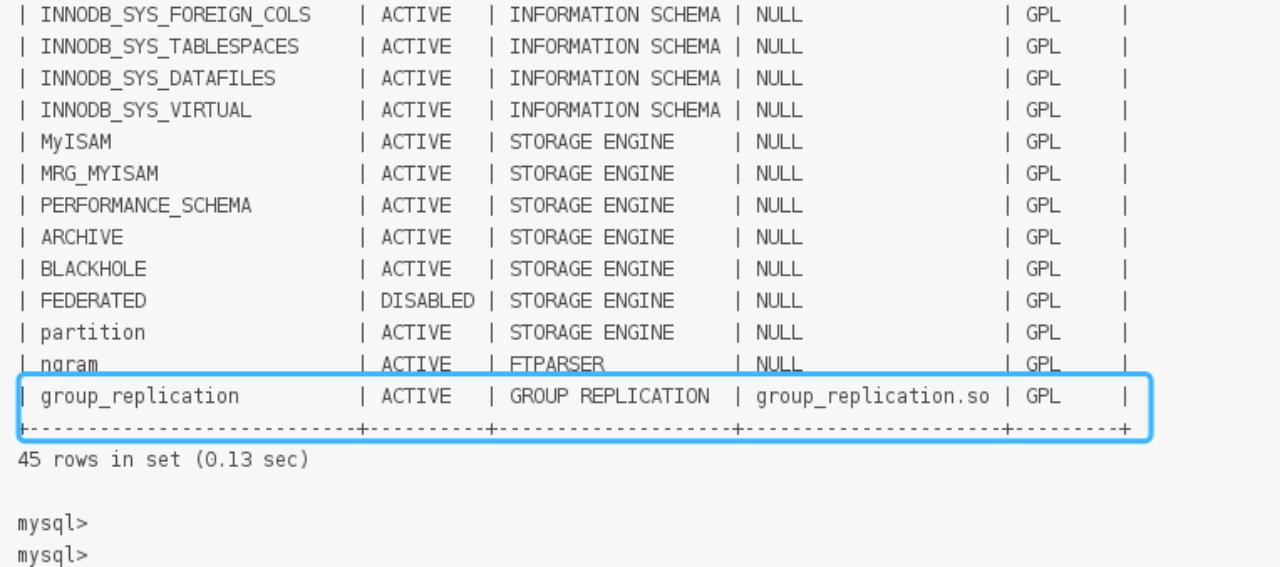

7、分别登录到各个docker容器,安装mgr插件

主容器:

#进入容器

docker exec -it mysql5725mgr33065 /bin/bash

#进入mysql

mysql -uroot -p123456

#安装插件

INSTALL PLUGIN group_replication SONAME 'group_replication.so';

#查看插件是否安装

show plugins;

设置数据复制账号,启动mgr

#关闭日志

SET SQL_LOG_BIN=0;

#创建用户

CREATE USER repl@'%' IDENTIFIED BY 'lyg';

#授权

GRANT REPLICATION SLAVE ON *.* TO repl@'%';

#刷新系统权限

FLUSH PRIVILEGES;

#开启日志

SET SQL_LOG_BIN=1;

#创建同步规则认证信息,就是刚才授权的那个用户

CHANGE MASTER TO MASTER_USER='repl', MASTER_PASSWORD='lyg' FOR CHANNEL 'group_replication_recovery';

#启动引导,注意,只有主开启引导,其他两台都忽略这一步

SET GLOBAL group_replication_bootstrap_group=ON;

#启动MGR,所有mysql服务必须都启动

START GROUP_REPLICATION;

#启动完成之中关闭引导

SET GLOBAL group_replication_bootstrap_group=OFF;

从容器(两个操作一样):

#进入容器

docker exec -it mysql5725mgr33066 /bin/bash

#进入mysql

mysql -uroot -p123456

#安装插件

INSTALL PLUGIN group_replication SONAME 'group_replication.so';

#查看插件是否安装

show plugins;

设置数据复制账号,启动MGR

#关闭日志

SET SQL_LOG_BIN=0;

#创建用户

CREATE USER repl@'%' IDENTIFIED BY 'lyg';

#授权

GRANT REPLICATION SLAVE ON *.* TO repl@'%';

#刷新系统权限

FLUSH PRIVILEGES;

#开启日志

SET SQL_LOG_BIN=1;

#创建同步规则认证信息,就是刚才授权的那个用户

CHANGE MASTER TO MASTER_USER='repl', MASTER_PASSWORD='lyg' FOR CHANNEL 'group_replication_recovery';

#启动MGR,所有mysql服务必须都启动

START GROUP_REPLICATION;

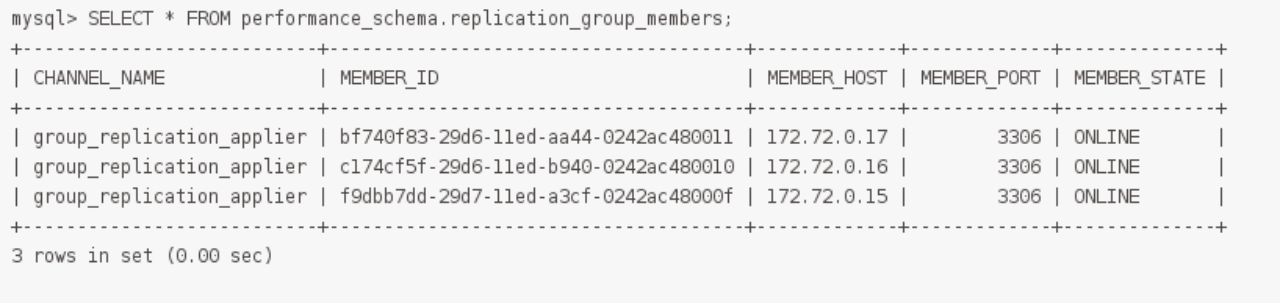

查看MGR组信息

SELECT * FROM performance_schema.replication_group_members;

完成

二、主容器发生故障

1、主节点数据还在、宕机期间集群中的其它结点的binlog日志也都还在

如果主容器发生故障,会随机从从容器中选择一个自动设置为主容器。然后找到发生故障的容器,重启容器,并且进入容器开启同步。

START GROUP_REPLICATION;

如果开启失败,请查看日志,用下面对应的解决方案解决。

2、主结点的数据都没有了

这种情况需要从其余结点备份恢复宕机结点、然后再重启mysql group replication 就可修复问题。

三、常见问题

1、问题一

[ERROR] Plugin group_replication reported: 'This member has more executed transactions than those present in the group. Local transactions: bb874065-c485-11e8-8b52-000c2934472e:1 > Group transactions: 3db33b36-0e51-409f-a61d-c99756e90155:1-11'

[ERROR] Plugin group_replication reported: 'The member contains transactions not present in the group. The member will now exit the group.'[Note] Plugin group_replication reported: ‘To force this member into the group you can use the group_replication_allow_local_disjoint_gtids_join option’

解决方案:

根据提示打开set global group_replication_allow_local_disjoint_gtids_join=ON;

2、问题二

[ERROR] Plugin group_replication reported: 'This member has more executed transactions than those present in the group. Local transactions: bb874065-c485-11e8-8b52-000c2934472e:1 > Group transactions: 3db33b36-0e51-409f-a61d-c99756e90155:1-15'

[Warning] Plugin group_replication reported: 'The member contains transactions not present in the group. It is only allowed to join due to group_replication_allow_local_disjoint_gtids_join option'

[Note] Plugin group_replication reported: 'This server is working as secondary member with primary member address localhost.localdomaion:3306.'

解决方案:

该故障和故障1的不同之处在于该问题出现时,参数group_replication_allow_local_disjoint_gtids_join已经设置成为on了。解决该问题的方法是执行reset master就行,然后重新在主节点和从节点开启通道,即

CHANGE MASTER TO MASTER_USER=‘rpl_user’, MASTER_PASSWORD=‘rpl_pass’ FOR CHANNEL ‘group_replication_recovery’;

3、问题三

[Warning] Storing MySQL user name or password information in the master info repository is not secure and is therefore not recommended. Please consider using the USER and PASSWORD connection options for START SLAVE; see the 'START SLAVE Syntax' in the MySQL Manual for more information.

[ERROR] Slave I/O for channel 'group_replication_recovery': error connecting to master 'rpl_user@localhost.localdomaion:' - retry-time: 60 retries: 1, Error_code: 2005

[ERROR] Plugin group_replication reported: 'There was an error when connecting to the donor server. Please check that group_replication_recovery channel credentials and all MEMBER_HOST column values of performance_schema.replication_group_members table are correct and DNS resolvable.'

[ERROR] Plugin group_replication reported: 'For details please check performance_schema.replication_connection_status table and error log messages of Slave I/O for channel group_replication_recovery.'

[Note] Plugin group_replication reported: 'Retrying group recovery connection with another donor. Attempt /'

解决方案:

这个问题是由于测试环境上三台主机的hostname设置成为了同一个名称,改了hostname之后,这个问题就解决了。

4、问题四

mysql--root@localhost:(none) ::>>START GROUP_REPLICATION;ERROR (HY000): The server is not configured properly to be an active member of the group. Please see more details on error log.

#查看log文件,发现只有一个

warning:2022-09-01T07::30.233937Z [Warning] Plugin group_replication reported: 'Group Replication requires slave-preserve-commit-order to be set to ON when using more than 1 applier threads.

解决方案:

set global slave_preserve_commit_order=1;

5、问题五

2022-09-01T08::31.088437Z [Warning] Plugin group_replication reported: '[GCS] Connection attempt from IP address 192.168.9.208 refused.

Address is not in the IP whitelist.'

2022-09-01T08::32.088676Z [Warning] Plugin group_replication reported: '[GCS] Connection attempt from IP address 192.168.9.208 refused.

Address is not in the IP whitelist.'

解决方法:

在my.cnf中配置group_replication_ip_whitelist参数即可解决

6、问题六

2022-09-01T08::44.087492Z [Warning] Plugin group_replication reported: 'read failed'

2022-09-01T08::44.096171Z [ERROR] Plugin group_replication reported: '[GCS] The member was unable to join the group. Local port: 24801'

2022-09-01T08::14.065775Z [ERROR] Plugin group_replication reported: 'Timeout on wait for view after joining group

解决方案:

将my.cnf中的参数group_replication_group_seeds设置为只包含除自身外其他group成员的ip地址以及内部通信端口,如果写成group所有成员的IP地址,则会出现这个错误,这和相同网段的MGR部署方式有些差异。

7、问题七

[ERROR] Plugin group_replication reported: ‘[GCS] Error on opening a connection to oceanbase07: on local port: '.’

[ERROR] Plugin group_replication reported: ‘[GCS] Error on opening a connection to oceanbase08: on local port: '.’

[ERROR] Plugin group_replication reported: ‘[GCS] Error on opening a connection to oceanbase07: on local port: '.’

解决方案:

未开通防火墙上的固定端口,开通防火墙之后即可解决

8、问题八

[Warning] Storing MySQL user name or password information in the master info repository is not secure and is therefore not recommended. Please consider using the USER and PASSWORD connection options for START SLAVE; see the 'START SLAVE Syntax' in the MySQL Manual for more information.

[ERROR] Slave I/O for channel 'group_replication_recovery': Master command COM_REGISTER_SLAVE failed: Access denied for user 'rpl_user'@'%' (using password: YES) (Errno: 1045), Error_code: 1597

[ERROR] Slave I/O thread couldn't register on master

[Note] Slave I/O thread exiting for channel 'group_replication_recovery', read up to log 'FIRST', position

解决方案:

漏掉了某个节点的用户,为了保险起见,在group节点上执行

CREATE USER rpl_user@‘%’;

GRANT REPLICATION SLAVE ON . TO rpl_user@‘%’ IDENTIFIED BY ‘rpl_pass’;

9、问题九

[ERROR] Failed to open the relay log './localhost-relay-bin.000011' (relay_log_pos ).

[ERROR] Could not find target log file mentioned in relay log info in the index file './work_NAT_1-relay-bin. index' during relay log initialization.

[ERROR] Slave: Failed to initialize the master info structure for channel ''; its record may still be present in 'mysql.slave_master_info' table, consider deleting it.

[ERROR] Failed to open the relay log './localhost-relay-bin-group_replication_recovery.000001' (relay_log_pos ).

[ERROR] Could not find target log file mentioned in relay log info in the index file './work_NAT_1-relay-bin-group_replication_recovery.index' during relay log initialization.

[ERROR] Slave: Failed to initialize the master info structure for channel 'group_replication_recovery'; its record may still be present in 'mysql.slave_master_info' table, consider deleting it.

[ERROR] Failed to create or recover replication info repositories.

[ERROR] Slave SQL for channel '': Slave failed to initialize relay log info structure from the repository, Error_code:

[ERROR] /usr/local/mysql/bin/mysqld: Slave failed to initialize relay log info structure from the repository

[ERROR] Failed to start slave threads for channel ''

解决方案:

这个错误是由于slave节点由于某种原因导致找不到relay-log的位置了,需要重新reset slave

10、问题十

[ERROR] Plugin group_replication reported: 'Member was expelled from the group due to network failures, changing member status to ERROR.'

解决方案:

网络延迟,等网络通畅之后重启。

参考

https://blog.csdn.net/zhangcongyi420/article/details/124574977

http://t.zoukankan.com/zmc60-p-14450910.html

1352

1352

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?