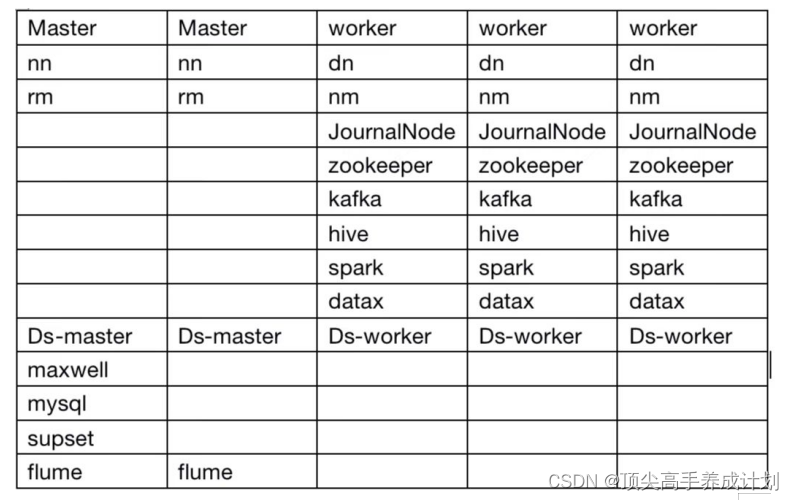

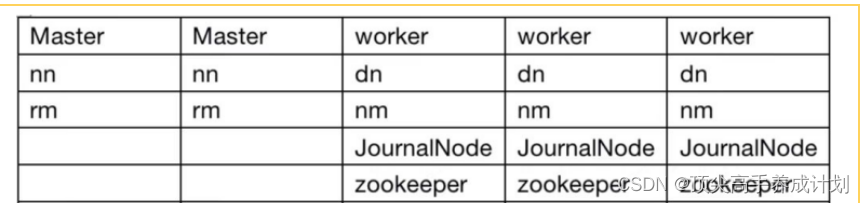

Installation plan diagram

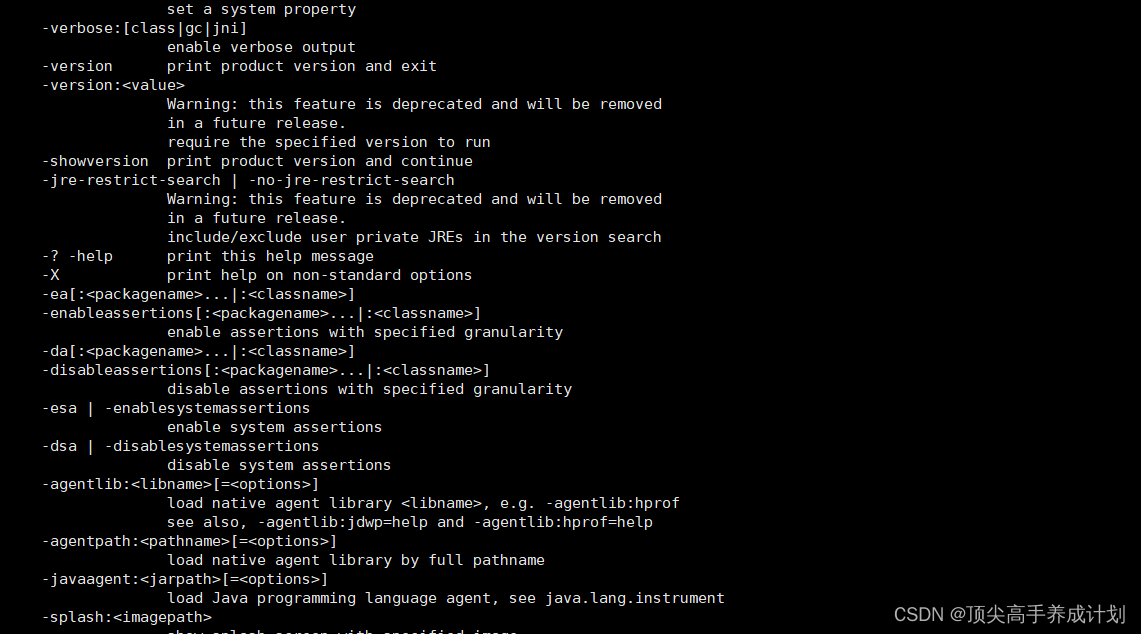

JDK installation

Extract the archive to a directory of your choice:

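tar -zxvf jdk-8u161-linux-x64.tar.gz -C ../software/

Configure the environment variables. The file name must end in .sh, because /etc/profile only sources /etc/profile.d/*.sh at login:

sudo vi /etc/profile.d/my_env.sh

#JAVA_HOME
export JAVA_HOME=/home/bigdata/software/jdk1.8.0_161
export PATH=$JAVA_HOME/bin:$PATH

Sync the configuration file:

sudo ./xsync /etc/profile.d/my_env.sh

Sync the JDK:

./xsync ../software/jdk1.8.0_161/

Reload the environment on each node, then run java -version to verify:

source /etc/profile.d/my_env.sh
java -version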

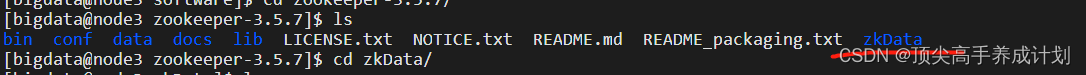
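Hadoop 3.1.x runs on Java 8, so after syncing it is worth confirming that every node reports the same major version. The following is a minimal bash sketch under that assumption. The function name java_major is hypothetical. It only parses the text that java -version prints, and java -version writes to stderr, which is why the usage line redirects with 2>&1.

```shell
#!/bin/bash
# java_major: read `java -version` output on stdin and print the major version.
# "1.8.0_161" -> 8 (legacy 1.x scheme), "11.0.2" -> 11 (modern scheme).
java_major() {
  local v
  # Take the quoted version string from the first line that contains one
  v=$(sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
  case $v in
    1.*) v=${v#1.}; echo "${v%%.*}" ;;   # drop the leading "1." and cut at the next dot
    *)   echo "${v%%[.+-]*}" ;;          # cut at the first ".", "+" or "-"
  esac
}

# Usage (hosts taken from this document; java must be on the remote PATH):
# for h in master1 master2 node1 node2 node3; do
#   echo "$h: $(ssh "$h" 'java -version' 2>&1 | java_major)"
# done
```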

ZooKeeper cluster installation

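tar -zxvf apache-zookeeper-3.5.7-bin.tar.gz

Edit the configuration file conf/zoo.cfg (create it by copying conf/zoo_sample.cfg). The paths below assume the extracted directory is /home/bigdata/software/zookeeper-3.5.7: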

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/home/bigdata/software/zookeeper-3.5.7/zkData

#######################cluster##########################

server.1=node1:2888:3888

server.2=node2:2888:3888

server.3=node3:2888:3888

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

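#autopurge.purgeInterval=1

In the data directory (dataDir above), create a file named myid. Its content is the number x from this host's server.x line, for example 1 on node1.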
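Because myid must match the x in the host's own server.x line, you can derive it from zoo.cfg instead of typing it by hand. This is a minimal sketch. It assumes the hostnames in zoo.cfg match the output of hostname on each node. zk_myid_for is a hypothetical helper and is not part of ZooKeeper.

```shell
#!/bin/bash
# zk_myid_for CFG HOST: print N from the "server.N=HOST:port:port" line of zoo.cfg CFG.
# Returns non-zero if HOST does not appear in any server.N line.
zk_myid_for() {
  local cfg=$1 host=$2
  awk -v h="$host" '
    /^server\.[0-9]+=/ {
      split($0, kv, "=")        # kv[1] = "server.N", kv[2] = "host:2888:3888"
      split(kv[2], addr, ":")   # addr[1] = host
      if (addr[1] == h) { print substr(kv[1], 8); found = 1; exit }
    }
    END { exit !found }
  ' "$cfg"
}

# Usage on each ZooKeeper node (paths from this document):
# zk=/home/bigdata/software/zookeeper-3.5.7
# id=$(zk_myid_for "$zk/conf/zoo.cfg" "$(hostname)") && echo "$id" > "$zk/zkData/myid"
```

The && in the usage line means an empty myid is not written when the hostname is missing from zoo.cfg.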

Configure the environment variables:

#ZK_HOME
export ZK_HOME=/home/bigdata/software/zookeeper-3.5.7
export PATH=$ZK_HOME/bin:$PATH

source /etc/profile.d/my_env.sh

Start/stop script:

#!/bin/bash
# Validate arguments
if [ $# -lt 1 ]
then
    echo 'Argument must not be empty!!!'
    exit 1
fi
# Loop over every machine and start, stop, or query the ZK service on it
for host in node1 node2 node3
do
    case $1 in
    "start"|"stop"|"status")
        echo "*****************$1 $host zookeeper****************"
        ssh $host /home/bigdata/software/zookeeper-3.5.7/bin/zkServer.sh $1
        ;;
    *)
        echo 'Invalid argument!!!'
        exit 1
        ;;
    esac
done
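With three servers, the ensemble needs a majority (2 of 3) up and elects exactly one leader. The sketch below checks this from the status output. It assumes zkServer.sh status prints a "Mode: leader" or "Mode: follower" line, as ZooKeeper 3.5 does. It also assumes the script above is saved as zk, the name used later under Cluster scripts. zk_quorum_ok is a hypothetical helper.

```shell
#!/bin/bash
# zk_quorum_ok [EXPECTED]: read the combined `zkServer.sh status` output of all nodes
# on stdin. Succeeds only if exactly one node is leader and EXPECTED nodes (default 3)
# reported leader or follower. "Mode: standalone" or connection errors count as failure.
zk_quorum_ok() {
  local expected=${1:-3}
  awk -v want="$expected" '
    /^Mode: leader/   { leaders++ }
    /^Mode: follower/ { followers++ }
    END {
      printf "leaders=%d followers=%d\n", leaders, followers
      exit !(leaders == 1 && leaders + followers == want)
    }'
}

# Usage:
# zk status 2>&1 | zk_quorum_ok 3
```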

Hadoop HA installation

core-site.xml

<configuration>
    <property>
        <!-- HDFS file system address used by clients; with HA this is the nameservice rather than a single NameNode -->
        <name>fs.defaultFS</name>
        <!-- Name of the HA HDFS cluster (must match dfs.nameservices in hdfs-site.xml) -->
        <value>hdfs://bigdatacluster</value>
    </property>
    <property>
        <!-- Directory where the Hadoop cluster stores temporary files -->
        <name>hadoop.tmp.dir</name>
        <value>/home/bigdata/module/hadoop-3.1.3/data</value>
    </property>
    <!-- Static user for the HDFS web UI: bigdata -->
    <property>
        <name>hadoop.http.staticuser.user</name>
        <value>bigdata</value>
    </property>
    <!-- Trash: deleted files are kept for fs.trash.interval minutes (1 minute here) -->
    <property>
        <name>fs.trash.interval</name>
        <value>1</value>
    </property>
    <property>
        <name>fs.trash.checkpoint.interval</name>
        <value>1</value>
    </property>
    <!-- Hosts from which bigdata (superuser) may act as a proxy -->
    <property>
        <name>hadoop.proxyuser.bigdata.hosts</name>
        <value>*</value>
    </property>
    <!-- Groups whose members bigdata (superuser) may impersonate -->
    <property>
        <name>hadoop.proxyuser.bigdata.groups</name>
        <value>*</value>
    </property>
    <!-- Users that bigdata (superuser) may impersonate -->
    <property>
        <name>hadoop.proxyuser.bigdata.users</name>
        <value>*</value>
    </property>
    <!-- ZooKeeper servers that ZKFC connects to -->
    <property>
        <name>ha.zookeeper.quorum</name>
        <value>node1:2181,node2:2181,node3:2181</value>
    </property>
</configuration>

hdfs-site.xml

<configuration>
    <!-- NameNode data directory -->
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file://${hadoop.tmp.dir}/name</value>
    </property>
    <!-- DataNode data directory -->
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file://${hadoop.tmp.dir}/data</value>
    </property>
    <!-- JournalNode data directory -->
    <property>
        <name>dfs.journalnode.edits.dir</name>
        <value>${hadoop.tmp.dir}/jn</value>
    </property>
    <!-- Nameservice (cluster) name; must match fs.defaultFS in core-site.xml -->
    <property>
        <name>dfs.nameservices</name>
        <value>bigdatacluster</value>
    </property>
    <!-- NameNodes in the nameservice -->
    <property>
        <name>dfs.ha.namenodes.bigdatacluster</name>
        <value>nn1,nn2</value>
    </property>
    <!-- NameNode RPC addresses -->
    <property>
        <name>dfs.namenode.rpc-address.bigdatacluster.nn1</name>
        <value>master1:8020</value>
    </property>
    <property>
        <name>dfs.namenode.rpc-address.bigdatacluster.nn2</name>
        <value>master2:8020</value>
    </property>
    <!-- NameNode HTTP addresses -->
    <property>
        <name>dfs.namenode.http-address.bigdatacluster.nn1</name>
        <value>master1:9870</value>
    </property>
    <property>
        <name>dfs.namenode.http-address.bigdatacluster.nn2</name>
        <value>master2:9870</value>
    </property>
    <!-- Where the NameNode edit log is stored on the JournalNodes -->
    <property>
        <name>dfs.namenode.shared.edits.dir</name>
        <value>qjournal://node1:8485;node2:8485;node3:8485/bigdatacluster</value>
    </property>
    <!-- Failover proxy provider: lets clients determine which NameNode is active -->
    <property>
        <name>dfs.client.failover.proxy.provider.bigdatacluster</name>
        <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
    </property>
    <!-- Fencing method, so that only one NameNode serves requests at any time.
         With sshfence alone, automatic failover can stall if the host of the active
         NameNode is unreachable over SSH; many setups add shell(/bin/true) as a second line. -->
    <property>
        <name>dfs.ha.fencing.methods</name>
        <value>sshfence</value>
    </property>
    <!-- sshfence requires passwordless SSH login with this private key -->
    <property>
        <name>dfs.ha.fencing.ssh.private-key-files</name>
        <value>/home/bigdata/.ssh/id_rsa</value>
    </property>
    <!-- Exclude list (blacklist) of DataNode hosts -->
    <property>
        <name>dfs.hosts.exclude</name>
        <value>/home/bigdata/module/hadoop-3.1.3/etc/blacklist</value>
    </property>
    <!-- Enable automatic NameNode failover -->
    <property>
        <name>dfs.ha.automatic-failover.enabled</name>
        <value>true</value>
    </property>
</configuration>

yarn-site.xml

<configuration>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <!-- Enable ResourceManager HA -->
    <property>
        <name>yarn.resourcemanager.ha.enabled</name>
        <value>true</value>
    </property>
    <!-- Cluster ID shared by both ResourceManagers -->
    <property>
        <name>yarn.resourcemanager.cluster-id</name>
        <value>cluster-yarn1</value>
    </property>
    <!-- Logical IDs of the ResourceManagers -->
    <property>
        <name>yarn.resourcemanager.ha.rm-ids</name>
        <value>rm1,rm2</value>
    </property>
    <!-- ========== rm1 ========== -->
    <!-- Hostname of rm1 -->
    <property>
        <name>yarn.resourcemanager.hostname.rm1</name>
        <value>master1</value>
    </property>
    <!-- Web UI address of rm1 -->
    <property>
        <name>yarn.resourcemanager.webapp.address.rm1</name>
        <value>master1:8088</value>
    </property>
    <!-- RPC address of rm1 (used by clients to submit jobs) -->
    <property>
        <name>yarn.resourcemanager.address.rm1</name>
        <value>master1:8032</value>
    </property>
    <!-- Address ApplicationMasters use to request resources from rm1 -->
    <property>
        <name>yarn.resourcemanager.scheduler.address.rm1</name>
        <value>master1:8030</value>
    </property>
    <!-- Address NodeManagers connect to -->
    <property>
        <name>yarn.resourcemanager.resource-tracker.address.rm1</name>
        <value>master1:8031</value>
    </property>
    <!-- ========== rm2 ========== -->
    <!-- Hostname of rm2 -->
    <property>
        <name>yarn.resourcemanager.hostname.rm2</name>
        <value>master2</value>
    </property>
    <property>
        <name>yarn.resourcemanager.webapp.address.rm2</name>
        <value>master2:8088</value>
    </property>
    <property>
        <name>yarn.resourcemanager.address.rm2</name>
        <value>master2:8032</value>
    </property>
    <property>
        <name>yarn.resourcemanager.scheduler.address.rm2</name>
        <value>master2:8030</value>
    </property>
    <property>
        <name>yarn.resourcemanager.resource-tracker.address.rm2</name>
        <value>master2:8031</value>
    </property>
    <!-- ZooKeeper ensemble address (Hadoop 3.x prefers hadoop.zk.address; this older key is deprecated but still honored) -->
    <property>
        <name>yarn.resourcemanager.zk-address</name>
        <value>node1:2181,node2:2181,node3:2181</value>
    </property>
    <!-- Enable automatic recovery -->
    <property>
        <name>yarn.resourcemanager.recovery.enabled</name>
        <value>true</value>
    </property>
    <!-- Store the ResourceManager state in the ZooKeeper cluster -->
    <property>
        <name>yarn.resourcemanager.store.class</name>
        <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
    </property>
    <!-- Environment variables inherited by containers -->
    <property>
        <name>yarn.nodemanager.env-whitelist</name>
        <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
    </property>
    <!-- Enable log aggregation -->
    <property>
        <name>yarn.log-aggregation-enable</name>
        <value>true</value>
    </property>
    <!-- Log server URL -->
    <property>
        <name>yarn.log.server.url</name>
        <value>http://master1:19888/jobhistory/logs</value>
    </property>
    <!-- Keep aggregated logs for 7 days (604800 seconds) -->
    <property>
        <name>yarn.log-aggregation.retain-seconds</name>
        <value>604800</value>
    </property>
    <!-- Whether to run a thread that checks the physical memory each task uses and kills tasks that exceed their allocation (default: true) -->
    <property>
        <name>yarn.nodemanager.pmem-check-enabled</name>
        <value>false</value>
    </property>
    <!-- Whether to run a thread that checks the virtual memory each task uses and kills tasks that exceed their allocation (default: true) -->
    <property>
        <name>yarn.nodemanager.vmem-check-enabled</name>
        <value>false</value>
    </property>
    <!-- Memory (MB) each NodeManager offers to containers -->
    <property>
        <name>yarn.nodemanager.resource.memory-mb</name>
        <value>24576</value>
    </property>
</configuration>

mapred-site.xml

<configuration>
    <!-- JVM reuse (this MRv1-era setting is generally reported to have no effect when jobs run on YARN) -->
    <property>
        <name>mapreduce.job.jvm.numtasks</name>
        <value>10</value>
        <description>How many tasks to run per JVM; if set to -1, there is no limit.</description>
    </property>
    <property>
        <!-- Run MapReduce jobs on YARN -->
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
    <property>
        <name>yarn.app.mapreduce.am.env</name>
        <value>HADOOP_MAPRED_HOME=/home/bigdata/module/hadoop-3.1.3</value>
    </property>
    <property>
        <name>mapreduce.map.env</name>
        <value>HADOOP_MAPRED_HOME=/home/bigdata/module/hadoop-3.1.3</value>
    </property>
    <property>
        <name>mapreduce.reduce.env</name>
        <value>HADOOP_MAPRED_HOME=/home/bigdata/module/hadoop-3.1.3</value>
    </property>
    <!-- JobHistory server address -->
    <property>
        <name>mapreduce.jobhistory.address</name>
        <value>master1:10020</value>
    </property>
    <!-- JobHistory server web UI address -->
    <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>master1:19888</value>
    </property>
</configuration>

workers

192.168.0.217

192.168.0.218

192.168.0.219

capacity-scheduler.xml

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<property>

<name>yarn.scheduler.capacity.maximum-applications</name>

<value>10000</value>

<description>

Maximum number of applications that can be pending and running.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.maximum-am-resource-percent</name>

<value>0.3</value>

<description>

Maximum percent of resources in the cluster which can be used to run

application masters i.e. controls number of concurrent running

applications.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.resource-calculator</name>

<value>org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator</value>

<description>

The ResourceCalculator implementation to be used to compare

Resources in the scheduler.

The default i.e. DefaultResourceCalculator only uses Memory while

DominantResourceCalculator uses dominant-resource to compare

multi-dimensional resources such as Memory, CPU etc.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.queues</name>

<value>high,low</value>

<description>

The queues at the this level (root is the root queue).

</description>

</property>

<!--

Queue capacities (the capacities of root's child queues must add up to 100)

-->

<property>

<name>yarn.scheduler.capacity.root.high.capacity</name>

<value>70</value>

<description>Default queue target capacity.</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.low.capacity</name>

<value>30</value>

<description>Default queue target capacity.</description>

</property>

<!--

User limit factor (multiple of the queue capacity that a single user may take)

-->

<property>

<name>yarn.scheduler.capacity.root.high.user-limit-factor</name>

<value>1</value>

<description>

Default queue user limit a percentage from 0.0 to 1.0.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.low.user-limit-factor</name>

<value>1</value>

<description>

Default queue user limit a percentage from 0.0 to 1.0.

</description>

</property>

<!--

Maximum capacity and queue state

-->

<property>

<name>yarn.scheduler.capacity.root.high.maximum-capacity</name>

<value>100</value>

<description>

The maximum capacity of the default queue.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.low.state</name>

<value>RUNNING</value>

<description>

The state of the default queue. State can be one of RUNNING or STOPPED.

</description>

</property>

<!--

Permissions: who may submit applications

-->

<property>

<name>yarn.scheduler.capacity.root.high.acl_submit_applications</name>

<value>*</value>

<description>

The ACL of who can submit jobs to the default queue.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.low.acl_submit_applications</name>

<value>*</value>

<description>

The ACL of who can submit jobs to the default queue.

</description>

</property>

<!--

Permissions: who may administer the queue

-->

<property>

<name>yarn.scheduler.capacity.root.high.acl_administer_queue</name>

<value>*</value>

<description>

The ACL of who can administer jobs on the default queue.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.low.acl_administer_queue</name>

<value>*</value>

<description>

The ACL of who can administer jobs on the default queue.

</description>

</property>

<!--

Permissions: who may submit applications with a given priority

-->

<property>

<name>yarn.scheduler.capacity.root.high.acl_application_max_priority</name>

<value>*</value>

<description>

The ACL of who can submit applications with configured priority.

For e.g, [user={name} group={name} max_priority={priority} default_priority={priority}]

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.low.acl_application_max_priority</name>

<value>*</value>

<description>

The ACL of who can submit applications with configured priority.

For e.g, [user={name} group={name} max_priority={priority} default_priority={priority}]

</description>

</property>

<!--

Maximum application lifetime

-->

<property>

<name>yarn.scheduler.capacity.root.high.maximum-application-lifetime

</name>

<value>-1</value>

<description>

Maximum lifetime of an application which is submitted to a queue

in seconds. Any value less than or equal to zero will be considered as

disabled.

This will be a hard time limit for all applications in this

queue. If positive value is configured then any application submitted

to this queue will be killed after exceeds the configured lifetime.

User can also specify lifetime per application basis in

application submission context. But user lifetime will be

overridden if it exceeds queue maximum lifetime. It is point-in-time

configuration.

Note : Configuring too low value will result in killing application

sooner. This feature is applicable only for leaf queue.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.low.maximum-application-lifetime

</name>

<value>-1</value>

<description>

Maximum lifetime of an application which is submitted to a queue

in seconds. Any value less than or equal to zero will be considered as

disabled.

This will be a hard time limit for all applications in this

queue. If positive value is configured then any application submitted

to this queue will be killed after exceeds the configured lifetime.

User can also specify lifetime per application basis in

application submission context. But user lifetime will be

overridden if it exceeds queue maximum lifetime. It is point-in-time

configuration.

Note : Configuring too low value will result in killing application

sooner. This feature is applicable only for leaf queue.

</description>

</property>

<!--

Default application lifetime

-->

<property>

<name>yarn.scheduler.capacity.root.high.default-application-lifetime

</name>

<value>-1</value>

<description>

Default lifetime of an application which is submitted to a queue

in seconds. Any value less than or equal to zero will be considered as

disabled.

If the user has not submitted application with lifetime value then this

value will be taken. It is point-in-time configuration.

Note : Default lifetime can't exceed maximum lifetime. This feature is

applicable only for leaf queue.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.low.default-application-lifetime

</name>

<value>-1</value>

<description>

Default lifetime of an application which is submitted to a queue

in seconds. Any value less than or equal to zero will be considered as

disabled.

If the user has not submitted application with lifetime value then this

value will be taken. It is point-in-time configuration.

Note : Default lifetime can't exceed maximum lifetime. This feature is

applicable only for leaf queue.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.node-locality-delay</name>

<value>40</value>

<description>

Number of missed scheduling opportunities after which the CapacityScheduler

attempts to schedule rack-local containers.

When setting this parameter, the size of the cluster should be taken into account.

We use 40 as the default value, which is approximately the number of nodes in one rack.

Note, if this value is -1, the locality constraint in the container request

will be ignored, which disables the delay scheduling.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.rack-locality-additional-delay</name>

<value>-1</value>

<description>

Number of additional missed scheduling opportunities over the node-locality-delay

ones, after which the CapacityScheduler attempts to schedule off-switch containers,

instead of rack-local ones.

Example: with node-locality-delay=40 and rack-locality-delay=20, the scheduler will

attempt rack-local assignments after 40 missed opportunities, and off-switch assignments

after 40+20=60 missed opportunities.

When setting this parameter, the size of the cluster should be taken into account.

We use -1 as the default value, which disables this feature. In this case, the number

of missed opportunities for assigning off-switch containers is calculated based on

the number of containers and unique locations specified in the resource request,

as well as the size of the cluster.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.queue-mappings</name>

<value></value>

<description>

A list of mappings that will be used to assign jobs to queues

The syntax for this list is [u|g]:[name]:[queue_name][,next mapping]*

Typically this list will be used to map users to queues,

for example, u:%user:%user maps all users to queues with the same name

as the user.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.queue-mappings-override.enable</name>

<value>false</value>

<description>

If a queue mapping is present, will it override the value specified

by the user? This can be used by administrators to place jobs in queues

that are different than the one specified by the user.

The default is false.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.per-node-heartbeat.maximum-offswitch-assignments</name>

<value>1</value>

<description>

Controls the number of OFF_SWITCH assignments allowed

during a node's heartbeat. Increasing this value can improve

scheduling rate for OFF_SWITCH containers. Lower values reduce

"clumping" of applications on particular nodes. The default is 1.

Legal values are 1-MAX_INT. This config is refreshable.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.application.fail-fast</name>

<value>false</value>

<description>

Whether RM should fail during recovery if previous applications'

queue is no longer valid.

</description>

</property>

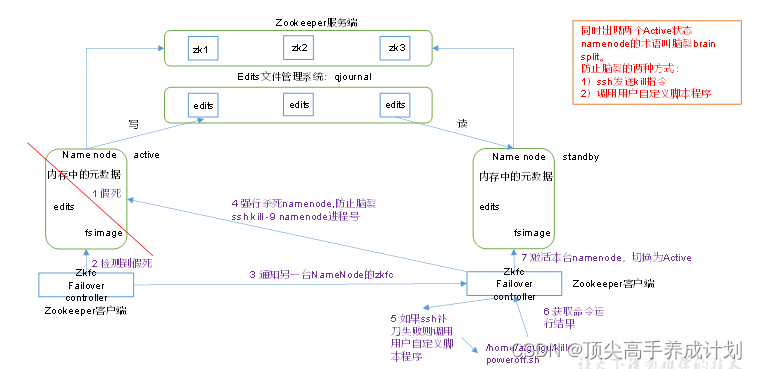

</configuration>架构如图
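The capacities of root's direct child queues (high and low here) must add up to 100. A quick check before reloading the scheduler can catch typos. This is a minimal sketch. It assumes each <name> and its <value> sit on separate lines, as in the stock file. root_capacity_sum is a hypothetical helper. Once the check passes, yarn rmadmin -refreshQueues reloads the queue configuration without restarting the ResourceManager.

```shell
#!/bin/bash
# root_capacity_sum FILE: add up yarn.scheduler.capacity.root.<queue>.capacity for the
# direct children of root (maximum-capacity and nested queues are not matched).
root_capacity_sum() {
  awk '
    /<name>yarn\.scheduler\.capacity\.root\.[^.]+\.capacity<\/name>/ { want = 1; next }
    want && /<value>/ {
      v = $0
      gsub(/.*<value>|<\/value>.*/, "", v)   # keep only the text between the value tags
      sum += v; want = 0
    }
    END { print sum + 0 }
  ' "$1"
}

# Usage (path assumes the module/hadoop-3.1.3 layout used in the XML above):
# conf=/home/bigdata/module/hadoop-3.1.3/etc/hadoop/capacity-scheduler.xml
# [ "$(root_capacity_sum "$conf")" = 100 ] && yarn rmadmin -refreshQueues
```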

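Startup notes

First disable the firewall and SELinux:

sudo systemctl stop firewalld
sudo systemctl disable firewalld
sudo setenforce 0
sudo vi /etc/selinux/config

In that file set SELINUX=disabled. Do not set SELINUXTYPE, which selects the policy type; setting SELINUXTYPE=disabled can prevent the system from booting.

SELINUX=disabled

After ZooKeeper is running, initialize the HA state in ZooKeeper. Run this on only one of the NameNodes, preferably the primary one (master1):

hdfs zkfc -formatZK

Start the JournalNode (port 8485) on all DataNode machines (node1, node2, node3):

hdfs --daemon start journalnode

Then format the NameNode, on the primary NameNode only (master1 here):

hdfs namenode -format

Start the NameNode on master1 (do not start master2 yet):

hdfs --daemon start namenode

On master2, sync the NameNode metadata:

hdfs namenode -bootstrapStandby

Note when running the cluster: if JAVA_HOME cannot be found, set it explicitly. The usual place is etc/hadoop/hadoop-env.sh. Alternatively, add it near the top of the scripts in Hadoop's bin and sbin directories, using the JDK path configured earlier, for example:

export JAVA_HOME=/home/bigdata/software/jdk1.8.0_161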
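Once both NameNodes (and later both ResourceManagers) are up, exactly one of each pair should be active. hdfs haadmin -getServiceState and yarn rmadmin -getServiceState print active or standby for a given ID. The helper below, ha_single_active (a hypothetical name), wraps either command and succeeds only if exactly one ID reports active. It is a sketch and is not part of Hadoop.

```shell
#!/bin/bash
# ha_single_active QUERY ID...: run "QUERY ID" for each service ID, print each state,
# and succeed only if exactly one of them reports "active".
# QUERY is split into words on purpose, e.g. "hdfs haadmin -getServiceState".
ha_single_active() {
  local query=$1; shift
  local id state active=0
  for id in "$@"; do
    state=$($query "$id" 2>/dev/null)
    echo "$id: ${state:-unreachable}"
    [ "$state" = "active" ] && active=$((active + 1))
  done
  [ "$active" -eq 1 ]
}

# Usage on any cluster node (nn1/nn2 and rm1/rm2 are the IDs from hdfs-site.xml and yarn-site.xml):
# ha_single_active "hdfs haadmin -getServiceState" nn1 nn2
# ha_single_active "yarn rmadmin -getServiceState" rm1 rm2
```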

Cluster start/stop script

#!/bin/bash
if [ $# -lt 1 ]
then
    echo "No Args Input..."
    exit ;
fi
case $1 in
"start")
    echo " =================== Starting the Hadoop cluster ==================="
    echo "Starting the journalnode on node1"
    ssh node1 "hdfs --daemon start journalnode"
    echo "Starting the journalnode on node2"
    ssh node2 "hdfs --daemon start journalnode"
    echo "Starting the journalnode on node3"
    ssh node3 "hdfs --daemon start journalnode"
    echo " --------------- Starting hdfs ---------------"
    ssh master1 "/home/bigdata/software/hadoop-3.1.3/sbin/start-dfs.sh"
    echo " --------------- Starting yarn ---------------"
    ssh master2 "/home/bigdata/software/hadoop-3.1.3/sbin/start-yarn.sh"
    echo " --------------- Starting historyserver ---------------"
    ssh master1 "/home/bigdata/software/hadoop-3.1.3/bin/mapred --daemon start historyserver"
;;
"stop")
    echo " =================== Stopping the Hadoop cluster ==================="
    echo " --------------- Stopping historyserver ---------------"
    ssh master1 "/home/bigdata/software/hadoop-3.1.3/bin/mapred --daemon stop historyserver"
    echo " --------------- Stopping yarn ---------------"
    ssh master2 "/home/bigdata/software/hadoop-3.1.3/sbin/stop-yarn.sh"
    echo " --------------- Stopping hdfs ---------------"
    ssh master1 "/home/bigdata/software/hadoop-3.1.3/sbin/stop-dfs.sh"
    echo "Stopping the journalnode on node1"
    ssh node1 "hdfs --daemon stop journalnode"
    echo "Stopping the journalnode on node2"
    ssh node2 "hdfs --daemon stop journalnode"
    echo "Stopping the journalnode on node3"
    ssh node3 "hdfs --daemon stop journalnode"
;;
*)
    echo "Input Args Error..."
;;
esac

Test the cluster

hdfs dfs -mkdir /data

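Upload a text file into /data as input (for example with hdfs dfs -put), then run wordcount. Replace high with the name of the YARN queue you want to use:

hadoop jar /home/bigdata/module/hadoop-3.1.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount -Dmapreduce.job.queuename=high /data /output

Test whether ResourceManager HA works: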

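On the active ResourceManager, stop it, check that the other one becomes active, then start it again:

yarn --daemon stop resourcemanager
yarn --daemon start resourcemanager

Test whether NameNode HA works: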

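On the active NameNode, stop it, check that the other one becomes active, then start it again:

hdfs --daemon stop namenode
hdfs --daemon start namenode

Kafka installation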

Cluster plan

Cluster deployment

1) Extract the package

tar -zxvf kafka_2.11-2.4.1.tgz -C /home/bigdata/software/

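2) Rename the extracted directory (optional; if you rename it, adjust the paths in the later configuration. I did not rename it, so the paths below use kafka_2.11-2.4.1)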

mv kafka_2.11-2.4.1/ kafka

3) Create a logs directory under /home/bigdata/software/kafka_2.11-2.4.1

mkdir logs

4) Edit the configuration file

cd config/

vim server.properties

Modify or add the following:

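# Globally unique broker ID; must not be duplicated (this is very important)
broker.id=0
# Enable topic deletion
delete.topic.enable=true
# Directory where Kafka stores its data (the message log segments)
log.dirs=/home/bigdata/software/kafka_2.11-2.4.1/data
# ZooKeeper cluster address; the /kafka suffix keeps Kafka's znodes under a /kafka chroot
zookeeper.connect=node1:2181,node2:2181,node3:2181/kafka

5) Configure the environment variables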

sudo vim /etc/profile.d/my_env.sh

#KAFKA_HOME

export KAFKA_HOME=/home/bigdata/software/kafka_2.11-2.4.1/

export PATH=$PATH:$KAFKA_HOME/bin

After editing the environment variables, source the file so the changes take effect:

source /etc/profile.d/my_env.sh

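6) Distribute the installation

xsync kafka_2.11-2.4.1/

Note: after distributing, remember to configure the environment variables on the other machines as well.

7) On the other nodes, set broker.id=1 and broker.id=2 respectively in /home/bigdata/software/kafka_2.11-2.4.1/config/server.properties.

Note: broker.id values must not be repeated.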
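xsync copies the same server.properties to every node, so a duplicated broker.id is the easiest mistake to make at this step. The sketch below sets the ID per file and reports duplicates. It assumes GNU sed (for sed -i) and works on local copies of the files. set_broker_id and duplicate_broker_ids are hypothetical helpers.

```shell
#!/bin/bash
# set_broker_id FILE ID: rewrite the broker.id line of a server.properties file,
# or append one if the file has none. Uses GNU sed -i (in-place edit).
set_broker_id() {
  local file=$1 id=$2
  if grep -q '^broker\.id=' "$file"; then
    sed -i "s/^broker\.id=.*/broker.id=$id/" "$file"
  else
    echo "broker.id=$id" >> "$file"
  fi
}

# duplicate_broker_ids FILE...: print every broker.id value used in more than one file.
duplicate_broker_ids() {
  grep -h '^broker\.id=' "$@" | cut -d= -f2 | sort | uniq -d
}

# Hypothetical remote check over this cluster's Kafka nodes:
# for h in node1 node2 node3; do
#   ssh "$h" "grep '^broker.id=' /home/bigdata/software/kafka_2.11-2.4.1/config/server.properties"
# done | cut -d= -f2 | sort | uniq -d
```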

8) Start the cluster

Start Kafka on node1, node2, and node3 in turn:

[bigdata@node1 kafka_2.11-2.4.1]$ bin/kafka-server-start.sh -daemon /home/bigdata/software/kafka_2.11-2.4.1/config/server.properties
[bigdata@node2 kafka_2.11-2.4.1]$ bin/kafka-server-start.sh -daemon /home/bigdata/software/kafka_2.11-2.4.1/config/server.properties
[bigdata@node3 kafka_2.11-2.4.1]$ bin/kafka-server-start.sh -daemon /home/bigdata/software/kafka_2.11-2.4.1/config/server.properties
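Each running broker registers a znode under brokers/ids inside the /kafka chroot set by zookeeper.connect. Listing that path therefore shows which brokers actually came up. This is a minimal sketch. It assumes zkCli.sh prints the child list as a bracketed line such as [0, 1, 2], and the format may differ between ZooKeeper versions. missing_brokers is a hypothetical helper.

```shell
#!/bin/bash
# missing_brokers ID...: read `zkCli.sh ... ls /kafka/brokers/ids` output on stdin and
# print every expected broker ID that is not in the bracketed child list.
missing_brokers() {
  local listed id l found rc=0
  # Last line that looks like "[0, 1, 2]"; keep digits and commas, then split on commas
  listed=$(grep -E '^\[[0-9, ]*]$' | tail -n 1 | sed 's/[^0-9,]//g; s/,/ /g')
  for id in "$@"; do
    found=0
    for l in $listed; do [ "$l" = "$id" ] && found=1; done
    if [ "$found" -eq 0 ]; then echo "$id"; rc=1; fi
  done
  return $rc
}

# Usage (IDs 0, 1, 2 as configured in step 7):
# zkCli.sh -server node1:2181 ls /kafka/brokers/ids 2>/dev/null | missing_brokers 0 1 2
```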

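9) Stop the cluster

[bigdata@node1 kafka_2.11-2.4.1]$ bin/kafka-server-stop.sh
[bigdata@node2 kafka_2.11-2.4.1]$ bin/kafka-server-stop.sh
[bigdata@node3 kafka_2.11-2.4.1]$ bin/kafka-server-stop.sh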

Kafka cluster start/stop script

#!/bin/bash
case $1 in
"start"){
    for i in node1 node2 node3
    do
        echo " --------Starting Kafka on $i-------"
        ssh $i "/home/bigdata/software/kafka_2.11-2.4.1/bin/kafka-server-start.sh -daemon /home/bigdata/software/kafka_2.11-2.4.1/config/server.properties"
    done
};;
"stop"){
    for i in node1 node2 node3
    do
        echo " --------Stopping Kafka on $i-------"
        ssh $i "/home/bigdata/software/kafka_2.11-2.4.1/bin/kafka-server-stop.sh"
    done
};;
esac

Flume installation (version 1.9.0)

Download and documentation

(1) Flume official website: https://flume.apache.org/

(2) Documentation: Flume 1.10.0 User Guide (on the Apache Flume website)

(3) Download address: http://archive.apache.org/dist/flume/

Installation and deployment

(1) Upload apache-flume-1.9.0-bin.tar.gz to /home/bigdata/package on the Linux machine

(2) Extract apache-flume-1.9.0-bin.tar.gz into /home/bigdata/software/

[bigdata@master1 ~]$ tar -zxvf /home/bigdata/package/apache-flume-1.9.0-bin.tar.gz -C /home/bigdata/software/

(3) Rename apache-flume-1.9.0-bin to flume-1.9.0

[bigdata@master1 software]$ mv apache-flume-1.9.0-bin/ flume-1.9.0

(4) Delete guava-11.0.2.jar from the lib directory for compatibility with Hadoop 3.1.3

[bigdata@master1 flume-1.9.0]$ rm /home/bigdata/software/flume-1.9.0/lib/guava-11.0.2.jar

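Note: every server on which guava-11.0.2.jar is deleted must have the Hadoop environment variables configured. Otherwise Flume fails with the following exception: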

Caused by: java.lang.ClassNotFoundException: com.google.common.collect.Lists

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 1 more

(5) Edit log4j.properties in the conf directory to set the log file path

[bigdata@master1 conf]$ vim log4j.properties

flume.log.dir=/home/bigdata/software/flume-1.9.0/logs

Distribute Flume to master2:

rsync -av /home/bigdata/software/flume-1.9.0 root@master2:/home/bigdata/software

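Practical tips

1) Heap size tuning

The Flume heap is usually set to 4 GB or more, configured as follows:

Edit /home/bigdata/software/flume-1.9.0/conf/flume-env.sh (create it from flume-env.sh.template if it does not exist) and set the following parameter: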

export JAVA_OPTS="-Xms4096m -Xmx4096m -Dcom.sun.management.jmxremote"

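Notes:

-Xms sets the minimum (initial) JVM heap size, allocated at startup;

-Xmx sets the maximum JVM heap size, up to which the heap grows on demand. Setting both to the same value avoids heap resizing at runtime.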

2) For convenience, write a start/stop script for the log-collection Flume agents

Create the script f1.sh in /home/bigdata/bin on master1:

[bigdata@master1 bin]$ vim f1.sh

Put the following into the script:

#!/bin/bash
case $1 in
"start"){
    for i in master1 master2
    do
        echo " --------Starting collection Flume on $i-------"
        ssh $i "nohup /home/bigdata/software/flume-1.9.0/bin/flume-ng agent --conf /home/bigdata/software/flume-1.9.0/conf --conf-file /home/bigdata/software/flume-1.9.0/myconf/file_to_kafka.conf --name a1 -Dflume.root.logger=INFO,console >/dev/null 2>&1 &"
    done
};;
"stop"){
    for i in master1 master2
    do
        echo " --------Stopping collection Flume on $i-------"
        ssh $i "ps -ef | grep file_to_kafka.conf | grep -v grep | awk '{print \$2}' | xargs -n1 kill -9"
    done
};;
esac

Cluster scripts

hadoop: the same script as the cluster start/stop script in the Hadoop HA section above.

jpsall.sh

#! /bin/bash

for i in master1 master2 node1 node2 node3

do

echo --------- $i ----------

ssh $i "jps"

done
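jps prints one "PID MainClass" line per Java process, so you can check the jpsall.sh output against the daemons each host is expected to run. The role lists in the usage comments are an assumption based on this document's layout: NameNode, ZKFC, and ResourceManager on the masters, and DataNode, NodeManager, JournalNode, ZooKeeper, and Kafka on node1 to node3. missing_daemons is a hypothetical helper.

```shell
#!/bin/bash
# missing_daemons "NAME ...": read `jps` output on stdin and print each expected
# Java main-class name that is not running. Returns non-zero if anything is missing.
missing_daemons() {
  local running name rc=0
  running=$(awk '{print $2}')   # second column of "PID MainClass"
  for name in $1; do
    if ! printf '%s\n' "$running" | grep -qx "$name"; then
      echo "$name"; rc=1
    fi
  done
  return $rc
}

# Hypothetical role map for this cluster (adjust to where you placed each service):
# ssh node1 jps | missing_daemons "DataNode NodeManager JournalNode QuorumPeerMain Kafka"
# ssh master1 jps | missing_daemons "NameNode DFSZKFailoverController ResourceManager JobHistoryServer"
```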

kafka

#!/bin/bash

case $1 in

"start"){

for i in node1 node2 node3

do

echo " --------Starting Kafka on $i-------"

ssh $i "/home/bigdata/software/kafka_2.11-2.4.1/bin/kafka-server-start.sh -daemon /home/bigdata/software/kafka_2.11-2.4.1/config/server.properties "

done

};;

"stop"){

for i in node1 node2 node3

do

echo " --------Stopping Kafka on $i-------"

ssh $i "/home/bigdata/software/kafka_2.11-2.4.1/bin/kafka-server-stop.sh stop"

done

};;

esac

xsync

#!/bin/bash
#1. Check the number of arguments
if [ $# -lt 1 ]
then
    echo Not Enough Argument!
    exit 1
fi
#2. Loop over every machine in the cluster
for host in master1 master2 node1 node2 node3
do
    echo ==================== $host ====================
    #3. Loop over every file or directory and send them one by one
    for file in "$@"
    do
        #4. Check whether the file exists
        if [ -e "$file" ]
        then
            #5. Get the physical parent directory (symlinks resolved)
            pdir=$(cd -P "$(dirname "$file")"; pwd)
            #6. Get the file name
            fname=$(basename "$file")
            ssh $host "mkdir -p $pdir"
            rsync -av "$pdir/$fname" $host:"$pdir"
        else
            echo "$file does not exist!"
        fi
    done
done
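xsync pushes to every host, including the one it runs on, so it can help to preview what it will do. The sketch below is a dry-run variant that prints the ssh and rsync commands instead of running them. xsync_plan and the HOSTS override are hypothetical additions and not part of the original script. Once the commands look right, rsync's own -n (--dry-run) flag is another way to preview the transfer itself.

```shell
#!/bin/bash
# xsync_plan FILE...: print, without executing, the commands xsync would run.
# HOSTS defaults to the five hosts used by xsync; override it to test.
xsync_plan() {
  local hosts=${HOSTS:-"master1 master2 node1 node2 node3"}
  local host file pdir fname
  for host in $hosts; do
    for file in "$@"; do
      if [ -e "$file" ]; then
        pdir=$(cd -P "$(dirname "$file")" && pwd)   # physical parent dir, as in xsync
        fname=$(basename "$file")
        echo "ssh $host mkdir -p $pdir"
        echo "rsync -av $pdir/$fname $host:$pdir"
      else
        echo "$file does not exist!" >&2
      fi
    done
  done
}

# Usage:
# HOSTS="node1" xsync_plan /etc/profile.d/my_env.sh
```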

zk: the same script as the ZooKeeper start/stop script in the ZooKeeper section above.

When running MapReduce jobs on YARN:

Comment out the 127.0.0.1 entry in /etc/hosts, and also …

Then restart Hadoop. The restart is required!
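This step is usually needed because of how a node's hostname resolves. If the hostname is listed on a 127.0.0.1 line, Hadoop daemons can resolve their own name to the loopback address and bind there, and other nodes then cannot reach them. That explanation is an assumption, since the original does not state the reason. The sketch below flags the condition. hostname_on_loopback is a hypothetical helper.

```shell
#!/bin/bash
# hostname_on_loopback HOSTS_FILE NAME: succeed if NAME appears on an active
# (not commented out) 127.x.x.x line of HOSTS_FILE.
hostname_on_loopback() {
  awk -v n="$2" '
    $1 ~ /^127\./ {
      for (i = 2; i <= NF; i++) {
        if ($i ~ /^#/) break      # the rest of the line is a comment
        if ($i == n) found = 1
      }
    }
    END { exit !found }
  ' "$1"
}

# Usage on each node:
# hostname_on_loopback /etc/hosts "$(hostname)" && echo "comment out that line, then restart Hadoop"
```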
