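This article walks through an example of pushing audio and video streams on Android with RtmpDump. The audio stream is encoded with the faac encoder and the video stream with the x264 encoder.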

See the GitHub repository for the complete code.


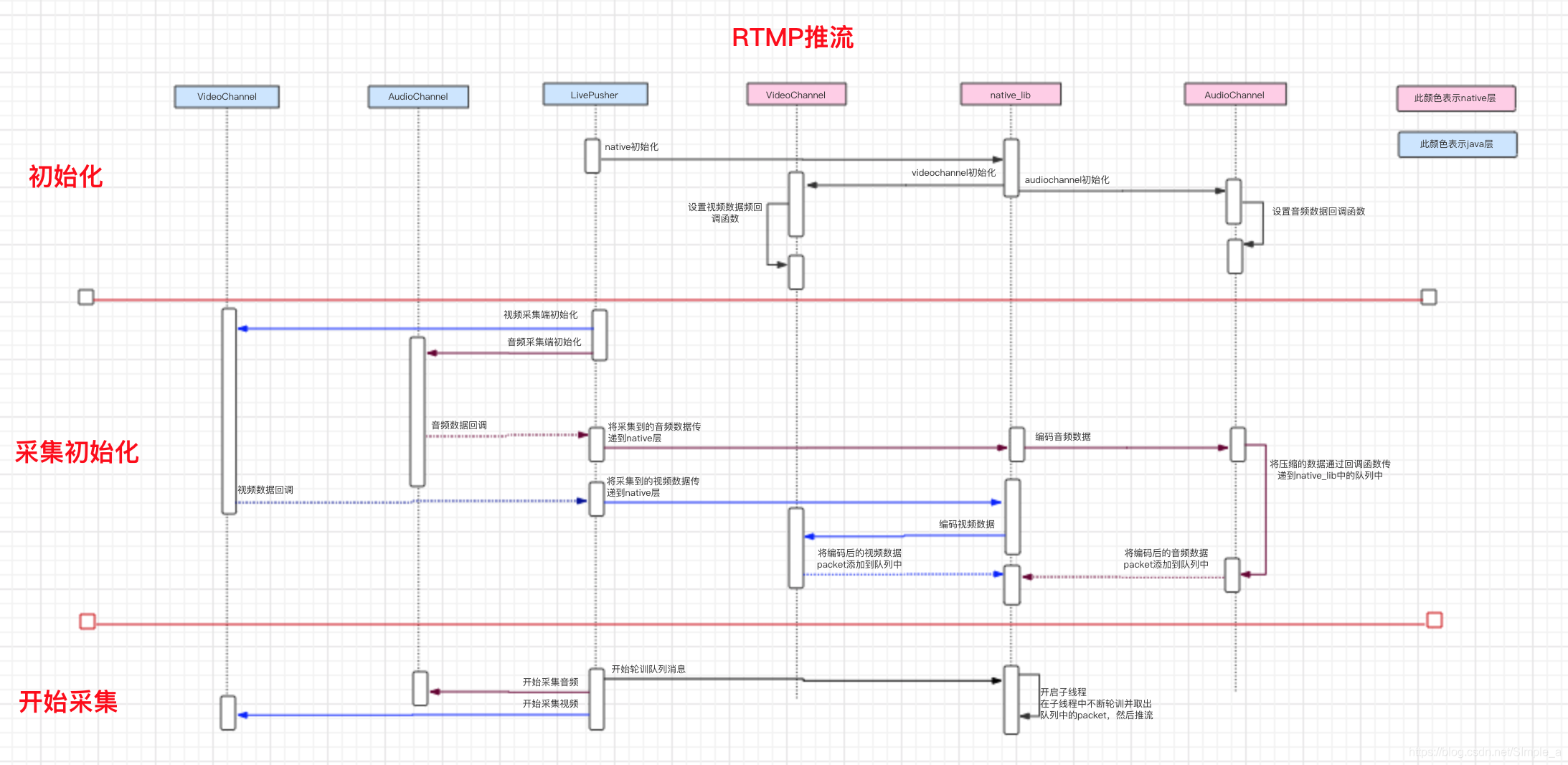

1 Overall architecture

The Java-layer VideoChannel and AudioChannel capture the audio and video data. The C-layer VideoChannel and AudioChannel encode it.

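The flow has three main steps:

- Native-layer initialization. The Java-layer LivePusher class calls the JNI functions in native_lib.c, which create the C-layer VideoChannel and AudioChannel used for encoding and register the callbacks that run after encoding.

- Capture initialization. The Java-layer VideoChannel and AudioChannel are initialized first. Captured data is passed through LivePusher to native_lib, where the C-layer VideoChannel and AudioChannel encode it. When encoding finishes, the callback adds the resulting RTMPPacket to the queue in native_lib.c.

- Start capturing. Once all preparation is done, the Java layer starts audio and video capture. Captured data follows the path set up above: it is encoded and added to the queue of packets waiting to be pushed. native_lib.c starts a thread that keeps polling the queue and pushes each packet to the server.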

2 Audio capture

2.1 Java layer: audio capture initialization

public AudioChannel(LivePusher livePusher) {

//1 keep the LivePusher; its native functions pass captured data to the C layer

mLivePusher = livePusher;

//2 create a single-thread executor used to read audio data off the main thread

executor = Executors.newSingleThreadExecutor();

//3 configure the channel layout: mono or stereo

int channelConfig;

if (channels == 2) {

channelConfig = AudioFormat.CHANNEL_IN_STEREO;

} else {

channelConfig = AudioFormat.CHANNEL_IN_MONO;

}

//4 tell the native layer the sample rate and channel count

mLivePusher.native_setAudioInfo(44100, channels);

//5 choose a suitable buffer size (inputSamples counts 16-bit samples, so * 2 gives bytes)

inputSamples = mLivePusher.getInputSamples() * 2;

int minBufferSize = AudioRecord.getMinBufferSize(44100, channelConfig, AudioFormat.ENCODING_PCM_16BIT) * 2;

minBufferSize = minBufferSize > inputSamples ? minBufferSize : inputSamples;

//6 create the AudioRecord object used for capture

audioRecord = new AudioRecord(MediaRecorder.AudioSource.MIC, 44100, channelConfig, AudioFormat.ENCODING_PCM_16BIT, minBufferSize);

}

2.2 Java layer: start capturing audio

public void startLive() {

isLiving = true;

//start the task that reads the captured audio data

executor.submit(new AudioTask());

}

class AudioTask implements Runnable {

@Override

public void run() {

//1 start recording

audioRecord.startRecording();

//2 number of bytes read per call

byte[] bytes = new byte[inputSamples];

while (isLiving) {

//3 read from the recorder

int len = audioRecord.read(bytes, 0, bytes.length);

//4 pass the audio data to the native layer

if (len > 0) {

mLivePusher.native_pushAudio(bytes);

}

}

audioRecord.stop();

}

}

3 Audio encoding

3.1 Passing audio data from the Java layer to the native layer

//pass the audio data to the native layer

public native void native_pushAudio(byte[] bytes);

extern "C"

JNIEXPORT void JNICALL

Java_com_yeliang_LivePusher_native_1pushAudio(JNIEnv *env, jobject instance, jbyteArray bytes_) {

if (!audioChannel || !readyPushing) {

return;

}

jbyte *bytes = env->GetByteArrayElements(bytes_, NULL);

//hand the audio data to the native audioChannel for encoding

audioChannel->encodeData(bytes);

env->ReleaseByteArrayElements(bytes_, bytes, 0);

}

3.2 Native layer: encoding audio data

Before encoding starts, the encoder is configured and initialized.

void AudioChannel::setAudioEncInfo(int samplesInHZ, int channels) {

mChannel = channels;

//1 open the encoder with the sample rate and channel count; it reports the input sample count and the maximum output size in bytes

audioCodec = faacEncOpen(samplesInHZ, channels, &inputSamples, &maxOutputBytes);

//encoder configuration

faacEncConfigurationPtr config = faacEncGetCurrentConfiguration(audioCodec);

config->mpegVersion = MPEG4;

config->aacObjectType = LOW;

config->inputFormat = FAAC_INPUT_16BIT;

config->outputFormat = 0;

//2 apply the encoder configuration

faacEncSetConfiguration(audioCodec, config);

buffer = new u_char[maxOutputBytes];

}
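The Java layer multiplies the value returned by getInputSamples() by 2 because inputSamples counts 16-bit samples, not bytes. The arithmetic can be sketched as follows. The helper names are hypothetical, and the figure of 1024 samples per channel per frame is an assumption that is typical for AAC-LC rather than something stated in the article.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Assumption: one AAC-LC frame covers 1024 samples per channel.
const std::size_t kAacFrameLength = 1024;

// Hypothetical helper: total samples (all channels) consumed per encode call.
inline std::size_t inputSamplesFor(int channels) {
    assert(channels > 0);
    return kAacFrameLength * static_cast<std::size_t>(channels);
}

// Bytes of 16-bit PCM the capture side must deliver for one encode call.
inline std::size_t pcmBytesFor(std::size_t inputSamples) {
    return inputSamples * sizeof(std::int16_t);  // 2 bytes per sample
}
```

Under these assumptions a stereo stream needs 2048 samples, that is 4096 bytes, per encode call.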

Encoding an AAC packet

void AudioChannel::encodeData(int8_t *data) {

int bytelen = faacEncEncode(audioCodec, reinterpret_cast<int32_t *>(data), inputSamples, buffer,maxOutputBytes);

if(bytelen > 0){

int bodySize = 2 + bytelen;

RTMPPacket *packet = new RTMPPacket;

RTMPPacket_Alloc(packet, bodySize);

packet->m_body[0] = 0xAF;

if(mChannel == 1){

packet->m_body[0] = 0xAE;

}

packet->m_body[1] = 0x01;

memcpy(&packet->m_body[2], buffer, bytelen);

packet->m_hasAbsTimestamp = 0;

packet->m_nBodySize = bodySize;

packet->m_packetType = RTMP_PACKET_TYPE_AUDIO;

packet->m_nChannel = 0x11;

packet->m_headerType = RTMP_PACKET_SIZE_LARGE;

LOGI("AudioChannel sent one audio frame, packet.size = %d",packet->m_nBodySize);

audioCallback(packet);

}

}
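The magic values 0xAF and 0xAE above are the FLV audio tag header byte, which packs four fields into one byte. A minimal sketch of how that byte is composed, assuming 44 kHz 16-bit AAC as configured earlier (the function name is hypothetical):

```cpp
#include <cassert>
#include <cstdint>

// FLV audio tag header: soundFormat(4 bits) | soundRate(2) | soundSize(1) | soundType(1).
std::uint8_t aacAudioTagHeader(int channels) {
    assert(channels == 1 || channels == 2);
    const unsigned soundFormat = 10;                    // 10 = AAC
    const unsigned soundRate = 3;                       // 3 = 44 kHz
    const unsigned soundSize = 1;                       // 1 = 16-bit samples
    const unsigned soundType = (channels == 2) ? 1 : 0; // 1 = stereo, 0 = mono
    return static_cast<std::uint8_t>(
        (soundFormat << 4) | (soundRate << 2) | (soundSize << 1) | soundType);
}
```

The second body byte, 0x01, marks the payload as raw AAC data rather than a sequence header.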

The encoded packet is then added to the queue of packets waiting to be pushed.

SafeQueue<RTMPPacket *> packets;

void callback(RTMPPacket *packet) {

if (packet) {

packet->m_nTimeStamp = RTMP_GetTime() - start_time;

packets.push(packet);

}

}
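The article does not show SafeQueue itself. The following is a minimal sketch of what such a queue could look like, assuming the push/pop/setWork/clear interface used in the snippets; the real class in the repository may differ, for example in how it releases elements on clear().

```cpp
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <queue>
#include <utility>

// Hypothetical stand-in for the SafeQueue used in the article.
template <typename T>
class SafeQueue {
public:
    // Enable or disable the queue; disabling wakes any blocked consumer.
    void setWork(int work) {
        std::lock_guard<std::mutex> lock(mutex_);
        work_ = work;
        cond_.notify_all();
    }

    // Enqueue only while working. Returns false when the element was
    // rejected, so the caller can free it.
    bool push(T value) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (!work_) return false;
        queue_.push(std::move(value));
        cond_.notify_one();
        return true;
    }

    // Block until an element is available or the queue stops working.
    // Returns 1 and fills `value` on success, 0 otherwise.
    int pop(T &value) {
        std::unique_lock<std::mutex> lock(mutex_);
        cond_.wait(lock, [this] { return !work_ || !queue_.empty(); });
        if (queue_.empty()) return 0;
        value = std::move(queue_.front());
        queue_.pop();
        return 1;
    }

    // Drop all queued elements (owned pointers must be freed by the caller).
    void clear() {
        std::lock_guard<std::mutex> lock(mutex_);
        std::queue<T> empty;
        queue_.swap(empty);
    }

    std::size_t size() {
        std::lock_guard<std::mutex> lock(mutex_);
        return queue_.size();
    }

private:
    std::mutex mutex_;
    std::condition_variable cond_;
    std::queue<T> queue_;
    int work_ = 0;
};
```

Blocking in pop() lets the push thread sleep while no packets are available instead of spinning, and setWork(0) gives it a way to wake up and exit.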

4 Video capture

4.1 Video capture initialization

1 Initializing the CameraHelper class

public VideoChannel(LivePusher livePusher, Activity activity, int width, int height, int bitrate, int fps, int cameraId) {

mLivePusher = livePusher;

mBitrate = bitrate;

mFps = fps;

mCameraHelper = new CameraHelper(activity, cameraId, width, height);

mCameraHelper.setPreviewCallBack(this);

mCameraHelper.setmOnSizeChangeListener(this);

}

2 Registering the surface callback

CameraHelper#setPreviewDisPlay()

public void setPreviewDisPlay(SurfaceHolder surfaceHolder) {

mSurfaceHolder = surfaceHolder;

mSurfaceHolder.addCallback(this);

}

Once the surface has been created, surfaceChanged (re)starts the preview

@Override

public void surfaceCreated(SurfaceHolder holder) {

}

@Override

public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {

stopPreview();

startPreview();

}

@Override

public void surfaceDestroyed(SurfaceHolder holder) {

stopPreview();

}

3 Opening the camera preview

private void startPreview() {

try {

//1 open the camera

mCamera = Camera.open(mCameraId);

//2 camera parameters such as preview size and preview data format

Camera.Parameters parameters = mCamera.getParameters();

parameters.setPreviewFormat(ImageFormat.NV21);

setPreviewSize(parameters);

//3 set the preview orientation

setPreviewOrientation();

mCamera.setParameters(parameters);

buffer = new byte[mWidth * mHeight * 3 / 2];

bytes = new byte[buffer.length];

//4 register the callback buffer

mCamera.addCallbackBuffer(buffer);

//5 register the callback that receives the pixel data

mCamera.setPreviewCallbackWithBuffer(this);

//6 set the preview display; frames are rendered onto the Surface through the SurfaceHolder

mCamera.setPreviewDisplay(mSurfaceHolder);

//7 start the preview

mCamera.startPreview();

} catch (IOException e) {

e.printStackTrace();

}

}

4.2 Start capturing video data

VideoChannel#onPreviewFrame()

@Override

public void onPreviewFrame(byte[] data, Camera camera) {

if (isLiving) {

//pass the data to the native layer

mLivePusher.native_push_video(data);

}

}

native_lib#native_push_video()

extern "C"

JNIEXPORT void JNICALL

Java_com_yeliang_LivePusher_native_1push_1video(JNIEnv *env, jobject instance, jbyteArray data_) {

if (!videoChannel || !readyPushing) {

return;

}

jbyte *data = env->GetByteArrayElements(data_, NULL);

//encode the video data with the native videoChannel

videoChannel->encodeData(data);

env->ReleaseByteArrayElements(data_, data, 0);

}

5 Video encoding

5.1 Passing video data from the Java layer to the native layer

LivePusher#native_push_video()

public native void native_push_video(byte[] data);

native_lib#native_push_video()

extern "C"

JNIEXPORT void JNICALL

Java_com_yeliang_LivePusher_native_1push_1video(JNIEnv *env, jobject instance, jbyteArray data_) {

if (!videoChannel || !readyPushing) {

return;

}

jbyte *data = env->GetByteArrayElements(data_, NULL);

videoChannel->encodeData(data);

env->ReleaseByteArrayElements(data_, data, 0);

}

5.2 Native layer: encoding video data

The x264 encoder is initialized first.

void VideoChannel::setVideoEncodeInfo(int width, int height, int fps, int bitrate) {

pthread_mutex_lock(&mutex);

mWidth = width;

mHeight = height;

mFps = fps;

mBitrate = bitrate;

ySize = width * height;

uvSize = ySize / 4;

if (videoCodec) {

x264_encoder_close(videoCodec);

videoCodec = 0;

}

if (pic_in) {

x264_picture_clean(pic_in);

delete pic_in;

pic_in = 0;

}

//open the x264 encoder

//x264 encoder parameters

x264_param_t param;

//ultrafast: fastest preset; zerolatency: zero-latency encoding

//1 initialize the encoder parameters

x264_param_default_preset(&param, "ultrafast", "zerolatency");

param.i_level_idc = 32;

//2 input data format

param.i_csp = X264_CSP_I420;

param.i_width = width;

param.i_height = height;

//3 no B-frames

param.i_bframe = 0;

//4 rate control: i_rc_method selects the mode: CQP (constant quantizer), CRF (constant rate factor, i.e. constant quality), ABR (average bitrate)

param.rc.i_rc_method = X264_RC_ABR;

//5 bitrate (in kbps)

param.rc.i_bitrate = bitrate / 1000;

//6 maximum instantaneous bitrate

param.rc.i_vbv_max_bitrate = bitrate / 1000 * 1.2;

//7 required once i_vbv_max_bitrate is set: size of the VBV (rate control) buffer, in kbit

param.rc.i_vbv_buffer_size = bitrate / 1000;

//8 frame rate

param.i_fps_num = fps;

param.i_fps_den = 1;

param.i_timebase_den = param.i_fps_num;

param.i_timebase_num = param.i_fps_den;

//9 compute frame intervals from the fps rather than from timestamps

param.b_vfr_input = 0;

//10 maximum key frame interval: one key frame every 2 s

param.i_keyint_max = fps * 2;

//11 repeat the SPS and PPS in front of every key frame (I-frame)

param.b_repeat_headers = 1;

//12 number of encoding threads

param.i_threads = 1;

x264_param_apply_profile(&param, "baseline");

//13 open the encoder

videoCodec = x264_encoder_open(&param);

//14 allocate the input picture

pic_in = new x264_picture_t;

x264_picture_alloc(pic_in, X264_CSP_I420, width, height);

pthread_mutex_unlock(&mutex);

}
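The rate-control values above are all derived from the bitrate in bits per second and the frame rate. A small sketch of that derivation follows; the struct and function names are hypothetical. It uses integer math for the 1.2x factor, whereas the article multiplies by 1.2 and lets the result truncate to int.

```cpp
#include <cassert>

// Hypothetical container for the values assigned to x264_param_t above.
struct RateParams {
    int bitrateKbps;    // param.rc.i_bitrate
    int vbvMaxKbps;     // param.rc.i_vbv_max_bitrate
    int vbvBufferKbit;  // param.rc.i_vbv_buffer_size
    int keyintMax;      // param.i_keyint_max
};

RateParams rateParamsFor(int bitrateBps, int fps) {
    assert(bitrateBps > 0 && fps > 0);
    RateParams p;
    p.bitrateKbps = bitrateBps / 1000;     // x264 expects kbps
    p.vbvMaxKbps = p.bitrateKbps * 6 / 5;  // 1.2x peak, without floating point
    p.vbvBufferKbit = p.bitrateKbps;       // roughly one second of data
    p.keyintMax = fps * 2;                 // one key frame every 2 seconds
    return p;
}
```

For example, 800000 bps at 25 fps gives a target of 800 kbps, a peak of 960 kbps and a key frame every 50 frames.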

Encoding the video data

void VideoChannel::encodeData(int8_t *data) {

//copy the NV21 frame into the I420 input picture

pthread_mutex_lock(&mutex);

memcpy(pic_in->img.plane[0], data, ySize);

for (int i = 0; i < uvSize; ++i) {

//take every other byte from the interleaved VU plane

//U data

*(pic_in->img.plane[1] + i) = *(data + ySize + i * 2 + 1);

//V data

*(pic_in->img.plane[2] + i) = *(data + ySize + i * 2);

}

//encoded output

x264_nal_t *pp_nal;

//number of NALUs produced

int pi_nal;

x264_picture_t pic_out;

//encode

int ret = x264_encoder_encode(videoCodec, &pp_nal, &pi_nal, pic_in, &pic_out);

if (ret < 0) {

pthread_mutex_unlock(&mutex);

return;

}

int sps_len, pps_len;

uint8_t sps[100];

uint8_t pps[100];

for (int i = 0; i < pi_nal; ++i) {

//NAL unit type

if (pp_nal[i].i_type == NAL_SPS) {

//strip the 00 00 00 01 start code

sps_len = pp_nal[i].i_payload - 4;

memcpy(sps, pp_nal[i].p_payload + 4, sps_len);

} else if(pp_nal[i].i_type == NAL_PPS){

pps_len = pp_nal[i].i_payload - 4;

memcpy(pps, pp_nal[i].p_payload + 4, pps_len);

sendSpsPps(sps, pps, sps_len, pps_len);

}else {

sendFrame(pp_nal[i].i_type, pp_nal[i].p_payload, pp_nal[i].i_payload);

}

}

pthread_mutex_unlock(&mutex);

}
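The copy loop at the top of encodeData converts the camera's NV21 layout (a Y plane followed by interleaved V/U bytes) into the planar I420 layout x264 expects. The same conversion as a standalone sketch, assuming tightly packed planes with no row padding; the function name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Convert one NV21 frame (Y plane + interleaved VU) to I420 (Y, U, V planes).
std::vector<std::uint8_t> nv21ToI420(const std::uint8_t *nv21, int width, int height) {
    assert(nv21 != nullptr);
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);
    const std::size_t ySize = static_cast<std::size_t>(width) * height;
    const std::size_t uvSize = ySize / 4;  // each chroma plane is quarter size

    std::vector<std::uint8_t> i420(ySize + 2 * uvSize);
    std::memcpy(i420.data(), nv21, ySize);  // the Y plane is identical

    std::uint8_t *u = i420.data() + ySize;
    std::uint8_t *v = u + uvSize;
    const std::uint8_t *vu = nv21 + ySize;
    for (std::size_t i = 0; i < uvSize; ++i) {
        v[i] = vu[2 * i];      // NV21 stores V first...
        u[i] = vu[2 * i + 1];  // ...then U
    }
    return i420;
}
```

A 2x2 frame with bytes Y = 1 2 3 4 and VU = 50 60 becomes 1 2 3 4 60 50: the single U sample moves ahead of the V sample.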

5.3 Packaging the SPS and PPS

void VideoChannel::sendSpsPps(uint8_t *sps, uint8_t *pps, int sps_len, int pps_len) {

RTMPPacket *packet = new RTMPPacket;

int bodysize = 13 + sps_len + 3 + pps_len;

RTMPPacket_Alloc(packet, bodysize);

int i = 0;

//fixed header: key frame (1) + AVC codec id (7)

packet->m_body[i++] = 0x17;

//AVCPacketType: 0 = sequence header

packet->m_body[i++] = 0x00;

//composition time 0x000000

packet->m_body[i++] = 0x00;

packet->m_body[i++] = 0x00;

packet->m_body[i++] = 0x00;

//configuration version

packet->m_body[i++] = 0x01;

//profile, profile compatibility and level, copied from the SPS

packet->m_body[i++] = sps[1];

packet->m_body[i++] = sps[2];

packet->m_body[i++] = sps[3];

packet->m_body[i++] = 0xFF;

//number of SPS (low 5 bits = 1)

packet->m_body[i++] = 0xE1;

//SPS length

packet->m_body[i++] = (sps_len >> 8) & 0xff;

packet->m_body[i++] = sps_len & 0xff;

memcpy(&packet->m_body[i], sps, sps_len);

i += sps_len;

//number of PPS, then the PPS length and data

packet->m_body[i++] = 0x01;

packet->m_body[i++] = (pps_len >> 8) & 0xff;

packet->m_body[i++] = (pps_len) & 0xff;

memcpy(&packet->m_body[i], pps, pps_len);

//video packet

packet->m_packetType = RTMP_PACKET_TYPE_VIDEO;

packet->m_nBodySize = bodysize;

//an arbitrary chunk stream id (avoid the ones rtmp.c uses itself)

packet->m_nChannel = 10;

//SPS and PPS carry no timestamp

packet->m_nTimeStamp = 0;

//do not use an absolute timestamp

packet->m_hasAbsTimestamp = 0;

packet->m_headerType = RTMP_PACKET_SIZE_MEDIUM;

callback(packet);

}
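The byte layout written by sendSpsPps can be checked without librtmp by building the same body into a plain byte vector. This is a sketch only: the function name is hypothetical and it leaves out the RTMPPacket fields.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Build the FLV video tag body carrying the AVC sequence header (SPS + PPS).
std::vector<std::uint8_t> buildAvcSequenceHeader(const std::uint8_t *sps, std::size_t spsLen,
                                                 const std::uint8_t *pps, std::size_t ppsLen) {
    assert(sps != nullptr && pps != nullptr);
    assert(spsLen >= 4 && spsLen <= 0xFFFF && ppsLen >= 1 && ppsLen <= 0xFFFF);
    std::vector<std::uint8_t> body;
    body.reserve(16 + spsLen + ppsLen);  // 13 + sps + 3 + pps, as in the article
    body.push_back(0x17);                // key frame (1) + AVC (7)
    body.push_back(0x00);                // AVCPacketType 0 = sequence header
    for (int k = 0; k < 3; ++k) body.push_back(0x00);  // composition time
    body.push_back(0x01);                // configuration version
    body.push_back(sps[1]);              // profile
    body.push_back(sps[2]);              // profile compatibility
    body.push_back(sps[3]);              // level
    body.push_back(0xFF);                // NALU length field size = 4 bytes
    body.push_back(0xE1);                // one SPS follows
    body.push_back(static_cast<std::uint8_t>((spsLen >> 8) & 0xFF));
    body.push_back(static_cast<std::uint8_t>(spsLen & 0xFF));
    body.insert(body.end(), sps, sps + spsLen);
    body.push_back(0x01);                // one PPS follows
    body.push_back(static_cast<std::uint8_t>((ppsLen >> 8) & 0xFF));
    body.push_back(static_cast<std::uint8_t>(ppsLen & 0xFF));
    body.insert(body.end(), pps, pps + ppsLen);
    return body;
}
```

The resulting size is always 16 + spsLen + ppsLen, which matches the bodysize computed in sendSpsPps.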

5.4 Packaging frame data

void VideoChannel::sendFrame(int type, uint8_t *p_payload, int payload) {

//strip the start code: 00 00 00 01 or 00 00 01

if (p_payload[2] == 0x00) {

payload -= 4;

p_payload += 4;

} else {

payload -= 3;

p_payload += 3;

}

int bodysize = 9 + payload;

RTMPPacket *packet = new RTMPPacket;

RTMPPacket_Alloc(packet, bodysize);

RTMPPacket_Reset(packet);

packet->m_body[0] = 0x27;

//key frame

if (type == NAL_SLICE_IDR) {

LOGE("key frame");

packet->m_body[0] = 0x17;

}

//AVCPacketType: 1 = NALU, followed by a 3-byte composition time of 0

packet->m_body[1] = 0x01;

packet->m_body[2] = 0x00;

packet->m_body[3] = 0x00;

packet->m_body[4] = 0x00;

//NALU length: the int written as 4 bytes, most significant byte first

packet->m_body[5] = (payload >> 24) & 0xff;

packet->m_body[6] = (payload >> 16) & 0xff;

packet->m_body[7] = (payload >> 8) & 0xff;

packet->m_body[8] = (payload) & 0xff;

//frame data

memcpy(&packet->m_body[9], p_payload, payload);

packet->m_hasAbsTimestamp = 0;

packet->m_nBodySize = bodysize;

packet->m_packetType = RTMP_PACKET_TYPE_VIDEO;

packet->m_nChannel = 0x10;

packet->m_headerType = RTMP_PACKET_SIZE_LARGE;

callback(packet);

}
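sendFrame does two things before handing the packet over: it removes the Annex B start code and prefixes the NALU with a 4-byte big-endian length. A self-contained sketch of both steps follows, with hypothetical function names. Unlike the article's check of p_payload[2], it verifies the whole start code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Length of the Annex B start code at the front of `nal`: 4, 3, or 0 if absent.
std::size_t startCodeLength(const std::uint8_t *nal, std::size_t size) {
    if (size >= 4 && nal[0] == 0 && nal[1] == 0 && nal[2] == 0 && nal[3] == 1) return 4;
    if (size >= 3 && nal[0] == 0 && nal[1] == 0 && nal[2] == 1) return 3;
    return 0;
}

// Build the FLV video tag body for one NALU (start code replaced by a length).
std::vector<std::uint8_t> buildAvcNaluBody(const std::uint8_t *nal, std::size_t size,
                                           bool keyFrame) {
    const std::size_t skip = startCodeLength(nal, size);
    assert(skip != 0 && size > skip);
    const std::uint32_t len = static_cast<std::uint32_t>(size - skip);

    std::vector<std::uint8_t> body;
    body.reserve(9 + len);
    body.push_back(keyFrame ? 0x17 : 0x27);  // frame type + AVC codec id
    body.push_back(0x01);                    // AVCPacketType 1 = NALU
    for (int k = 0; k < 3; ++k) body.push_back(0x00);  // composition time
    body.push_back(static_cast<std::uint8_t>((len >> 24) & 0xFF));
    body.push_back(static_cast<std::uint8_t>((len >> 16) & 0xFF));
    body.push_back(static_cast<std::uint8_t>((len >> 8) & 0xFF));
    body.push_back(static_cast<std::uint8_t>(len & 0xFF));
    body.insert(body.end(), nal + skip, nal + size);
    return body;
}
```

The body size is 9 plus the payload length, the same as bodysize in sendFrame.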

6 Pushing the stream

During initialization a thread is created that keeps polling the queue and pushes the encoded packets to the server.

extern "C"

JNIEXPORT void JNICALL

Java_com_yeliang_LivePusher_native_1start(JNIEnv *env, jobject instance, jstring path_) {

if (isStart) {

return;

}

const char *path = env->GetStringUTFChars(path_, 0);

char *url = new char[strlen(path) + 1];

strcpy(url, path);

isStart = 1;

//create the thread that polls the encoded packets and pushes them

pthread_create(&pid, 0, start, url);

env->ReleaseStringUTFChars(path_, path);

}

The thread function

void *start(void *args) {

char *url = static_cast<char *>(args);

RTMP *rtmp = 0;

do {

rtmp = RTMP_Alloc();

if (!rtmp) {

LOGE("failed to allocate rtmp");

break;

}

RTMP_Init(rtmp);

rtmp->Link.timeout = 5;

int ret = RTMP_SetupURL(rtmp, url);

if (!ret) {

LOGE("failed to set rtmp url: %s", url);

break;

}

RTMP_EnableWrite(rtmp);

ret = RTMP_Connect(rtmp, 0);

if (!ret) {

LOGE("failed to connect to rtmp server: %s", url);

break;

}

ret = RTMP_ConnectStream(rtmp, 0);

if (!ret) {

LOGE("failed to connect rtmp stream: %s", url);

break;

}

readyPushing = 1;

start_time = RTMP_GetTime();

packets.setWork(1);

RTMPPacket *packet = 0;

while (readyPushing) {

packets.pop(packet);

if (!readyPushing) {

break;

}

if (!packet) {

continue;

}

packet->m_nInfoField2 = rtmp->m_stream_id;

ret = RTMP_SendPacket(rtmp, packet, 1);

releasePackets(packet);

if (!ret) {

LOGE("failed to send stream data");

break;

}

}

releasePackets(packet);

} while (0);

isStart = 0;

readyPushing = 0;

packets.setWork(0);

packets.clear();

if (rtmp) {

RTMP_Close(rtmp);

RTMP_Free(rtmp);

}

delete[] url;

return 0;

}
