1. Replica Set

1.1 Write the clickhouse-replication.yml file

The code has been uploaded to Gitee and can be cloned from there.

# Replica set deployment example
version: '3'
services:
  zoo1:
    image: zookeeper
    restart: always
    hostname: zoo1
    ports:
      - 2181:2181
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    networks:
      - ckNet
  zoo2:
    image: zookeeper
    restart: always
    hostname: zoo2
    ports:
      - 2182:2181
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    networks:
      - ckNet
  zoo3:
    image: zookeeper
    restart: always
    hostname: zoo3
    ports:
      - 2183:2181
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    networks:
      - ckNet
  ck1:
    image: clickhouse/clickhouse-server
    container_name: ck1
    ulimits:
      nofile:
        soft: 262144
        hard: 262144
    volumes:
      - ./config.d:/etc/clickhouse-server/config.d
      - ./config.xml:/etc/clickhouse-server/config.xml
      - ./logs/:/var/log/clickhouse-server
    ports:
      - 18123:8123 # HTTP interface
      - 19000:9000 # native client (TCP)
    depends_on:
      - zoo1
    networks:
      - ckNet
  ck2:
    image: clickhouse/clickhouse-server
    container_name: ck2
    ulimits:
      nofile:
        soft: 262144
        hard: 262144
    volumes:
      - ./config.d:/etc/clickhouse-server/config.d
      - ./config.xml:/etc/clickhouse-server/config.xml
    ports:
      - 18124:8123 # HTTP interface
      - 19001:9000 # native client (TCP)
    depends_on:
      - zoo1
    networks:
      - ckNet
networks:
  ckNet:
    driver: bridge
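The ZOO_SERVERS value in the compose file packs one entry per ZooKeeper ensemble member in the form server.ID=host:quorumPort:electionPort;clientPort. The following is a minimal Python sketch that splits the value into its parts so the layout is easier to read. The names ZkServer and parse_zoo_servers are hypothetical helpers for illustration and are not part of ZooKeeper or Docker.

```python
from typing import List, NamedTuple


class ZkServer(NamedTuple):
    """One ensemble member taken from a ZOO_SERVERS entry (illustrative type)."""
    server_id: int
    host: str
    quorum_port: int    # followers connect to the leader on this port
    election_port: int  # used for leader election
    client_port: int    # port that clients such as ClickHouse connect to


def parse_zoo_servers(value: str) -> List[ZkServer]:
    """Split 'server.1=zoo1:2888:3888;2181 server.2=...' into structured entries."""
    servers = []
    for entry in value.split():
        key, spec = entry.split("=", 1)            # 'server.1', 'zoo1:2888:3888;2181'
        address, client_port = spec.split(";", 1)  # 'zoo1:2888:3888', '2181'
        host, quorum_port, election_port = address.split(":")
        server_id = int(key.split(".", 1)[1])
        servers.append(
            ZkServer(server_id, host, int(quorum_port), int(election_port), int(client_port))
        )
    return servers


ZOO_SERVERS = (
    "server.1=zoo1:2888:3888;2181 "
    "server.2=zoo2:2888:3888;2181 "
    "server.3=zoo3:2888:3888;2181"
)

for server in parse_zoo_servers(ZOO_SERVERS):
    print(server)
```

Each ZOO_MY_ID in the compose file should match exactly one server_id in this list.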

The ck_zk.xml file in the config.d directory is configured as follows:

<?xml version="1.0" encoding="utf-8" ?>
<yandex>
    <zookeeper-servers>
        <node index="1">
            <host>zoo1</host>
            <port>2181</port>
        </node>
        <node index="2">
            <host>zoo2</host>
            <port>2181</port>
        </node>
        <node index="3">
            <host>zoo3</host>
            <port>2181</port>
        </node>
    </zookeeper-servers>
</yandex>

In config.xml, the main change is to add the following three lines:

<!-- Add these two lines for the replica set configuration -->
<zookeeper incl="zookeeper-servers" optional="true"></zookeeper>
<include_from>/etc/clickhouse-server/config.d/ck_zk.xml</include_from>

<!-- Remote access. Windows may not support the IPv6 form, in which case enable the IPv4 line below -->
<!-- <listen_host>::</listen_host>-->
<!-- Same for hosts without support for IPv6: -->
<listen_host>0.0.0.0</listen_host>

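1.2 Create the table

Connect to 127.0.0.1:18123 and 127.0.0.1:18124 with DataGrip.

Create the table house on each node. In /clickhouse/table/01/house, the 01 identifies the shard, and rep_001 is the replica name. Both replicas use the same ZooKeeper path but different replica names.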

-- Run on the first node (127.0.0.1:18123)
CREATE TABLE house (
    id String,
    city String,
    region String,
    name String,
    price Float32,
    publish_date DateTime
) ENGINE = ReplicatedMergeTree('/clickhouse/table/01/house', 'rep_001')
PARTITION BY toYYYYMMDD(publish_date)
PRIMARY KEY (id)
ORDER BY (id, city, region, name)
SETTINGS index_granularity = 8192;

-- Run on the second node (127.0.0.1:18124)
CREATE TABLE house (
    id String,
    city String,
    region String,
    name String,
    price Float32,
    publish_date DateTime
) ENGINE = ReplicatedMergeTree('/clickhouse/table/01/house', 'rep_002')
PARTITION BY toYYYYMMDD(publish_date)
PRIMARY KEY (id)
ORDER BY (id, city, region, name)
SETTINGS index_granularity = 8192;

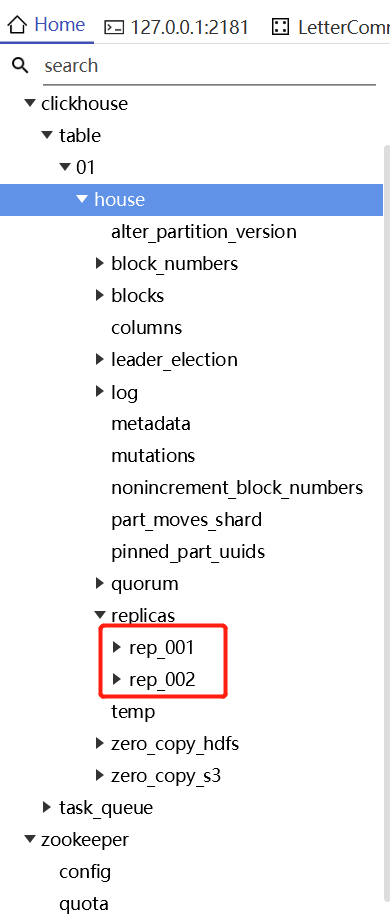

At this point the replicated table has been created. With the prettyZoo tool you can see the replicas rep_001 and rep_002.

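1.3 Verify the replica set

Insert a row into the house table on replica rep_001: INSERT INTO house(name, price) VALUES ('场中小区', 59680);

Then query the table on replica rep_002: the row is already there. Inserting on rep_002 and querying on rep_001 gives the same result.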
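The same check can be scripted against the ClickHouse HTTP interface instead of a GUI client. The sketch below assumes the host ports from clickhouse-replication.yml (18123 and 18124), the default user with no password, and that rep_001 lives on the first node. query_url and row_count are hypothetical helper names, not part of any ClickHouse client library.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Host ports published by the compose file; the replica-to-port mapping is an assumption.
REPLICA_PORTS = {"rep_001": 18123, "rep_002": 18124}


def query_url(port: int, sql: str, host: str = "127.0.0.1") -> str:
    """Build a URL that passes the SQL text in the 'query' parameter of the HTTP interface."""
    return f"http://{host}:{port}/?{urlencode({'query': sql})}"


def row_count(port: int, table: str = "house") -> int:
    """Return count() for the table as seen by the node listening on the given port."""
    with urlopen(query_url(port, f"SELECT count() FROM {table}"), timeout=5) as resp:
        return int(resp.read().decode().strip())
```

With the containers running, calling row_count(port) for each entry in REPLICA_PORTS should return the same number on both replicas once replication has caught up.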

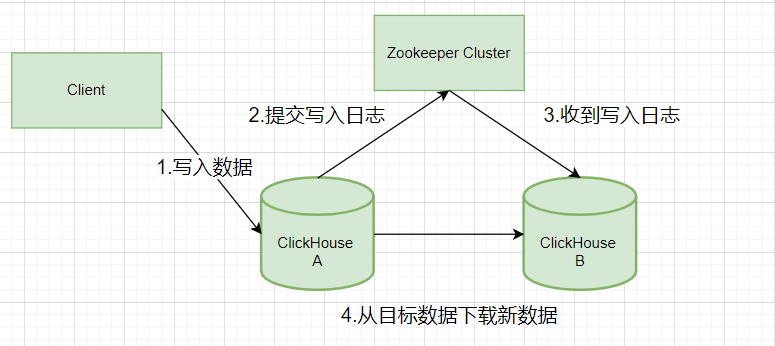

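1.4 Replica write flow diagram

Now that we know how to deploy a replica set, let's look at how a write flows through the replicas.

You are welcome to follow the WeChat official account 算法小生 or Shen Jian's tech blog at shenjian.online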

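2. Sharded Cluster

A replica set keeps a full copy of the data on every replica, which gives high availability. A sharded cluster, whether in ES or in ClickHouse, exists to solve horizontal scaling of data. In practice, configuring a replica set alone is usually enough for ClickHouse.

2.1 Write the clickhouse-shard.yml file

The full code has been uploaded to Gitee and can be cloned and used directly.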

# Sharded cluster deployment example
version: '3'
services:
  zoo1:
    image: zookeeper
    restart: always
    hostname: zoo1
    ports:
      - 2181:2181
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    networks:
      - ckNet
  zoo2:
    image: zookeeper
    restart: always
    hostname: zoo2
    ports:
      - 2182:2181
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    networks:
      - ckNet
  zoo3:
    image: zookeeper
    restart: always
    hostname: zoo3
    ports:
      - 2183:2181
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    networks:
      - ckNet
  ck1_1:
    image: clickhouse/clickhouse-server
    container_name: ck1_1
    ulimits:
      nofile:
        soft: 262144
        hard: 262144
    volumes:
      - ./1_1/config.d:/etc/clickhouse-server/config.d
      - ./config.xml:/etc/clickhouse-server/config.xml
    ports:
      - 18123:8123 # HTTP interface
      - 19000:9000 # native client (TCP)
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    networks:
      - ckNet
  ck1_2:
    image: clickhouse/clickhouse-server
    container_name: ck1_2
    ulimits:
      nofile:
        soft: 262144
        hard: 262144
    volumes:
      - ./1_2/config.d:/etc/clickhouse-server/config.d
      - ./config.xml:/etc/clickhouse-server/config.xml
    ports:
      - 18124:8123 # HTTP interface
      - 19001:9000 # native client (TCP)
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    networks:
      - ckNet
  ck2_1:
    image: clickhouse/clickhouse-server
    container_name: ck2_1
    ulimits:
      nofile:
        soft: 262144
        hard: 262144
    volumes:
      - ./2_1/config.d:/etc/clickhouse-server/config.d
      - ./config.xml:/etc/clickhouse-server/config.xml
    ports:
      - 18125:8123 # HTTP interface
      - 19002:9000 # native client (TCP)
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    networks:
      - ckNet
  ck2_2:
    image: clickhouse/clickhouse-server
    container_name: ck2_2
    ulimits:
      nofile:
        soft: 262144
        hard: 262144
    volumes:
      - ./2_2/config.d:/etc/clickhouse-server/config.d
      - ./config.xml:/etc/clickhouse-server/config.xml
    ports:
      - 18126:8123 # HTTP interface
      - 19003:9000 # native client (TCP)
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    networks:
      - ckNet
networks:
  ckNet:
    driver: bridge

In config.xml, change the included file to metrika_shard.xml:

<include_from>/etc/clickhouse-server/config.d/metrika_shard.xml</include_from>

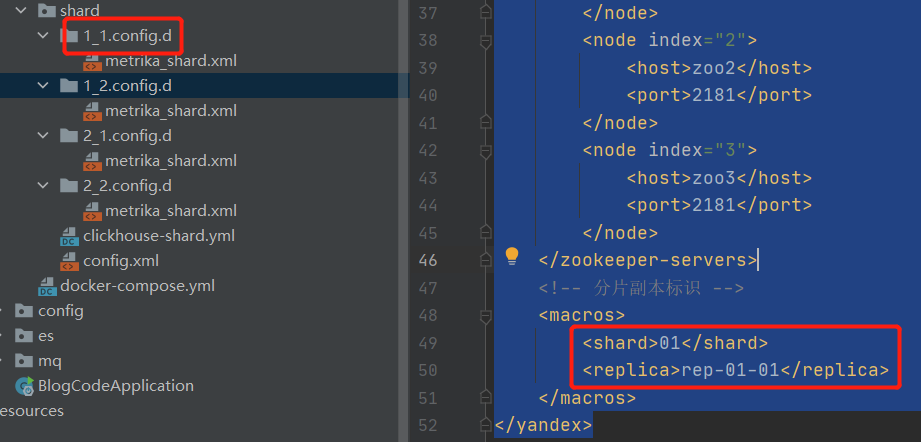

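2.2 Shard and replica configuration

We configure 2 shards with 2 replicas each, so every shard has one extra copy of its data. Each node has its own config.d directory (1_1, 1_2, 2_1 and 2_2, as mounted in clickhouse-shard.yml).

The metrika_shard.xml in each directory is configured as follows: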

<?xml version="1.0" encoding="utf-8" ?>
<yandex>
    <remote_servers>
        <shenjian_cluster>
            <!-- Shard 1 -->
            <shard>
                <internal_replication>true</internal_replication>
                <replica>
                    <!-- First replica -->
                    <host>ck1_1</host>
                    <port>9000</port>
                </replica>
                <replica>
                    <!-- Second replica; in production, replicas live on different machines -->
                    <host>ck1_2</host>
                    <port>9000</port>
                </replica>
            </shard>
            <!-- Shard 2 -->
            <shard>
                <internal_replication>true</internal_replication>
                <replica>
                    <host>ck2_1</host>
                    <port>9000</port>
                </replica>
                <replica>
                    <host>ck2_2</host>
                    <port>9000</port>
                </replica>
            </shard>
        </shenjian_cluster>
    </remote_servers>
    <zookeeper-servers>
        <node index="1">
            <host>zoo1</host>
            <port>2181</port>
        </node>
        <node index="2">
            <host>zoo2</host>
            <port>2181</port>
        </node>
        <node index="3">
            <host>zoo3</host>
            <port>2181</port>
        </node>
    </zookeeper-servers>
    <!-- Shard and replica identifiers for this node -->
    <macros>
        <shard>01</shard>
        <replica>rep-01-01</replica>
    </macros>
</yandex>

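For the other three nodes, only the shard and replica identifiers in macros need to change:

<!-- 1_2/config.d/metrika_shard.xml -->
<macros>
    <shard>01</shard>
    <replica>rep-01-02</replica>
</macros>

<!-- 2_1/config.d/metrika_shard.xml -->
<macros>
    <shard>02</shard>
    <replica>rep-02-01</replica>
</macros>

<!-- 2_2/config.d/metrika_shard.xml -->
<macros>
    <shard>02</shard>
    <replica>rep-02-02</replica>
</macros>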

OK, at this point you can start everything with docker-compose -f clickhouse-shard.yml up -d

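2.3 Create the table

Replace shenjian_cluster with the name of your own cluster, that is, the child tag of remote_servers in metrika_shard.xml. The {shard} and {replica} placeholders need no changes: ClickHouse fills them in from each node's macros and creates the table on every node in the cluster.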

CREATE TABLE house ON CLUSTER shenjian_cluster
(
    id String,
    city String,
    region String,
    name String,
    price Float32,
    publish_date DateTime
) ENGINE = ReplicatedMergeTree('/clickhouse/table/{shard}/house', '{replica}')
PARTITION BY toYYYYMMDD(publish_date)
PRIMARY KEY (id)
ORDER BY (id, city, region, name);
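To see what the {shard} and {replica} placeholders turn into on each node, here is a small Python sketch that mimics the substitution. It is only an illustration of the idea, not ClickHouse's own code. The mapping from container name to macros is an assumption based on the directory names 1_1, 1_2, 2_1 and 2_2, and expand and replicas_by_path are hypothetical helper names.

```python
from typing import Dict, List

ZK_PATH_TEMPLATE = "/clickhouse/table/{shard}/house"
REPLICA_TEMPLATE = "{replica}"

# Assumed per-node macros, mirroring the <macros> blocks in each metrika_shard.xml.
NODE_MACROS: Dict[str, Dict[str, str]] = {
    "ck1_1": {"shard": "01", "replica": "rep-01-01"},
    "ck1_2": {"shard": "01", "replica": "rep-01-02"},
    "ck2_1": {"shard": "02", "replica": "rep-02-01"},
    "ck2_2": {"shard": "02", "replica": "rep-02-02"},
}


def expand(template: str, macros: Dict[str, str]) -> str:
    """Replace {name} placeholders with that node's macro values."""
    return template.format(**macros)


def replicas_by_path(node_macros: Dict[str, Dict[str, str]]) -> Dict[str, List[str]]:
    """Group replica names by the ZooKeeper path they register under."""
    groups: Dict[str, List[str]] = {}
    for macros in node_macros.values():
        path = expand(ZK_PATH_TEMPLATE, macros)
        groups.setdefault(path, []).append(expand(REPLICA_TEMPLATE, macros))
    return groups


for path, replicas in replicas_by_path(NODE_MACROS).items():
    print(path, replicas)
```

Nodes that expand to the same path replicate each other, and nodes with different paths hold different shards. This is why a single ON CLUSTER statement can create the right table on all four nodes.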

After creation, the table exists on all nodes [127.0.0.1:18123 127.0.0.1:18124 127.0.0.1:18125 127.0.0.1:18126], as expected.

2.4 Create the Distributed table

CREATE TABLE distribute_house ON CLUSTER shenjian_cluster
(
    id String,
    city String,
    region String,
    name String,
    price Float32,
    publish_date DateTime
) ENGINE = Distributed(shenjian_cluster, default, house, hiveHash(city));

- shenjian_cluster: cluster name

- default: database name

- house: name of the local table

- hiveHash(city): sharding key
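How the sharding key picks a shard can be sketched in a few lines of Python. A Distributed table takes the remainder of the sharding expression divided by the total shard weight and sends the row to the shard whose weight range contains that remainder. The sketch below assumes equal weights of 1 for both shards and a byte-wise hiveHash (javaHash with the sign bit cleared). The byte handling for non-ASCII text is my assumption, so treat the result for Chinese city names as indicative only. java_hash, hive_hash and pick_shard are illustrative names.

```python
from typing import List


def java_hash(text: str) -> int:
    """Java-style string hash over UTF-8 bytes, returned as a signed 32-bit value."""
    h = 0
    for byte in text.encode("utf-8"):
        signed = byte - 256 if byte > 127 else byte  # assumption: bytes are treated as signed
        h = (31 * h + signed) & 0xFFFFFFFF
    return h - (1 << 32) if h >= (1 << 31) else h


def hive_hash(text: str) -> int:
    """javaHash with the sign bit zeroed, so the result is never negative."""
    return java_hash(text) & 0x7FFFFFFF


def pick_shard(key: str, weights: List[int]) -> int:
    """Return the 1-based shard number whose weight range contains hash % total_weight."""
    remainder = hive_hash(key) % sum(weights)
    upper = 0
    for shard_num, weight in enumerate(weights, start=1):
        upper += weight
        if remainder < upper:
            return shard_num
    raise ValueError("weights must be positive")


# Two shards with the default weight of 1 each, as in shenjian_cluster.
for key in ["Hello, world!", "Shanghai", "Linyi"]:
    print(key, hive_hash(key), pick_shard(key, [1, 1]))
```

Rows with the same key always land on the same shard, so all rows for one city end up together.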

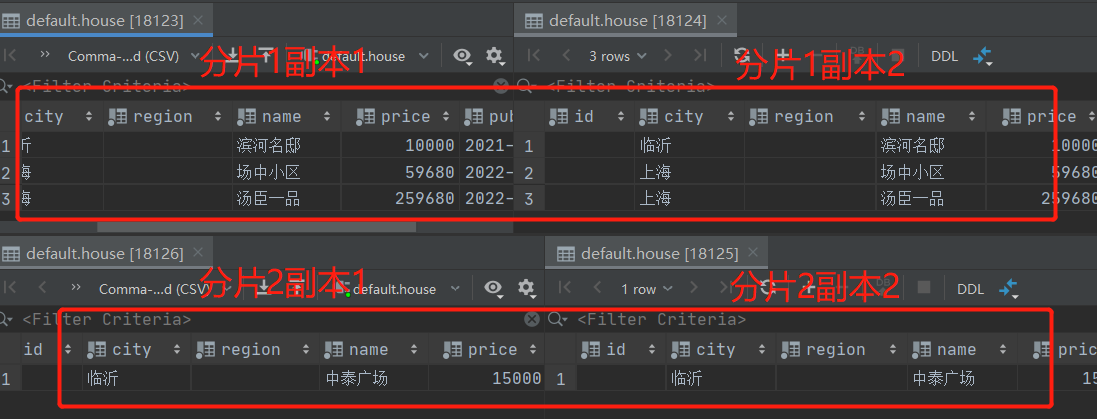

2.5 Insert data to verify the sharded cluster

INSERT INTO distribute_house(city, name, price, publish_date) VALUES
    ('上海', '场中小区', 59680, '2022-08-01'),
    ('上海', '汤臣一品', 259680, '2022-08-01'),
    ('临沂', '滨河名邸', 10000, '2021-08-01'),
    ('临沂', '中泰广场', 15000, '2020-08-01');

OK, the sharded cluster works. Give yourself a round of applause!
