22/04/08 17:41:37 ERROR util.SparkUncaughtExceptionHandler: Uncaught exception in thread Thread[Executor task launch worker for task 3.2 in stage 2.0 (TID 298),5,main] java.lang.OutOfMemoryError: Java heap space at org.apache.spark.sql.catalyst.expressions.codegen.BufferHolder.grow(BufferHolder.java:80) at org.apache.spark.sql.catalyst.expressions.codegen.UnsafeWriter.grow(UnsafeWriter.java:63) at org.apache.spark.sql.catalyst.expressions.codegen.UnsafeWriter.writeUnalignedBytes(UnsafeWriter.java:127) at org.apache.spark.sql.catalyst.expressions.codegen.UnsafeWriter.write(UnsafeWriter.java:118) at org.apache.spark.sql.execution.datasources.text.TextFileFormat.$anonfun$readToUnsafeMem$5(TextFileFormat.scala:133) at org.apache.spark.sql.execution.datasources.text.TextFileFormat$$Lambda$1039/870544334.apply(Unknown Source) at scala.collection.Iterator$$anon$10.next(Iterator.scala:461) at scala.collection.Iterator$$anon$10.next(Iterator.scala:461) at org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1.next(FileScanRDD.scala:96) at scala.collection.Iterator$$anon$10.next(Iterator.scala:461) at scala.collection.Iterator$$anon$11.nextCur(Iterator.scala:486) at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:492) at scala.collection.Iterator.foreach(Iterator.scala:943) at scala.collection.Iterator.foreach$(Iterator.scala:943) at scala.collection.AbstractIterator.foreach(Iterator.scala:1431) at scala.collection.TraversableOnce.reduceLeft(TraversableOnce.scala:237) at scala.collection.TraversableOnce.reduceLeft$(TraversableOnce.scala:220) at scala.collection.AbstractIterator.reduceLeft(Iterator.scala:1431) at scala.collection.TraversableOnce.reduceLeftOption(TraversableOnce.scala:249) at scala.collection.TraversableOnce.reduceLeftOption$(TraversableOnce.scala:248) at scala.collection.AbstractIterator.reduceLeftOption(Iterator.scala:1431) at scala.collection.TraversableOnce.reduceOption(TraversableOnce.scala:256) at scala.collection.TraversableOnce.reduceOption$(TraversableOnce.scala:256) at scala.collection.AbstractIterator.reduceOption(Iterator.scala:1431) at org.apache.spark.sql.catalyst.json.JsonInferSchema.$anonfun$infer$1(JsonInferSchema.scala:80) at org.apache.spark.sql.catalyst.json.JsonInferSchema$$Lambda$733/995054571.apply(Unknown Source) at org.apache.spark.rdd.RDD.$anonfun$mapPartitions$2(RDD.scala:863) at org.apache.spark.rdd.RDD.$anonfun$mapPartitions$2$adapted(RDD.scala:863) at org.apache.spark.rdd.RDD$$Lambda$612/295536973.apply(Unknown Source) at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52) at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:373) at org.apache.spark.rdd.RDD.iterator(RDD.scala:337)遇到这个错误,是因为数据量太大,Executor内存不够。

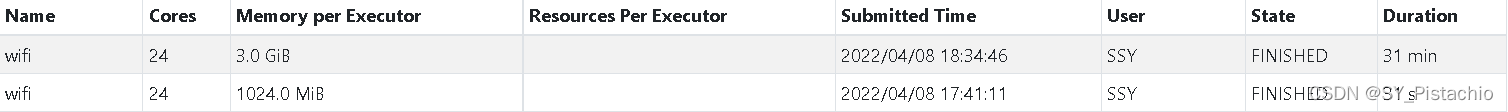

改进:增加per Executor的内存

nohup spark-submit --class "com.sparkcore.dataQuality.dataExploration.data_exploration_7.Code_Test" --master spark://10.10.10.10:7077 --total-executor-cores 24 --executor-memory 3G --driver-memory 3G /home/spark/code_jar/gz_data/wifi/first-classes.jar修改好后再次运行,成功:

1118

1118

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?