List All Files Regardless of 260 Character Path Restriction Using PowerShell and Robocopy

A common pain point for many system administrators comes when trying to recursively list all files and folders in a given path, or even just retrieve the total size of a folder. After waiting a while in hopes of seeing the data you expect, you are instead greeted with the following message, showing a failure to go any deeper into the folder structure.

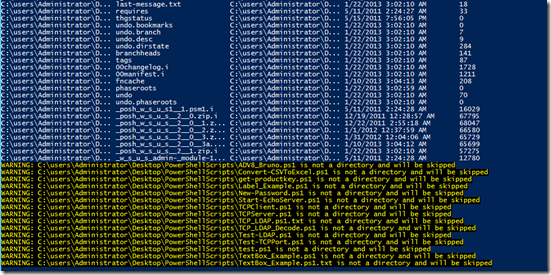

```powershell
Get-ChildItem -Recurse .\PowerShellScripts | Select-Object -ExpandProperty FullName
```

This is the infamous PathTooLongException, which occurs when you hit the 260-character limit on a fully qualified path. Seeing this message means heartburn, because now we can't get a good idea of just how many files there are (and how big those files are) when performing the folder query.

There are many possible causes for this issue, such as users who map a drive letter to a folder deep in the folder structure and then keep building more folders and subfolders underneath it. I won't go into the technical reason for this issue, but you can find one conversation that talks about it here. What I am going to do is show you how to get around this issue, see all of the folders and files, and get the total size of a given folder.
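To make the mapped-drive scenario concrete, here is a small sketch that works out how long a path really is once the drive letter is expanded back to the folder it points to. The server, share and folder names are made up, and `Get-ExpandedPathLength` is a hypothetical helper that simply swaps the drive letter for an assumed mapped root; it does not query any real drive mapping. It flags paths of 260 characters or more, since the classic .NET check requires a fully qualified path to be shorter than 260 characters.

```powershell
# Hypothetical helper: replace the drive letter with the folder it is mapped to
# and report how long the resulting fully qualified path is.
function Get-ExpandedPathLength {
    param(
        [Parameter(Mandatory)] [string]$MappedRoot, # folder the drive letter points to (assumed)
        [Parameter(Mandatory)] [string]$DrivePath   # path as the user sees it, e.g. Z:\...
    )
    # Drop the "Z:" prefix and append the remainder to the real root
    $fullPath = $MappedRoot.TrimEnd('\') + $DrivePath.Substring(2)
    [pscustomobject]@{
        DrivePathLength = $DrivePath.Length
        FullPathLength  = $fullPath.Length
        # Classic MAX_PATH check: fully qualified paths must be shorter than 260 characters
        TooLong         = $fullPath.Length -ge 260
    }
}

# Made-up deep folder that a user has mapped as Z:
$root = '\\fileserver\share\Departments\Finance\Reporting\Quarterly\' + ('Projects\' * 22)
Get-ExpandedPathLength -MappedRoot $root -DrivePath 'Z:\2013\Q1\Budget.xlsx'
```

In this example the user only ever sees a 22-character path, while the fully qualified path is 276 characters, well past the limit.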

So how do we accomplish this feat? The answer lies in a freely available (and already installed) utility called robocopy.exe. Because robocopy is a native tool rather than a .NET-based one, it does not adhere to the 260-character limit (unless you want it to, via the /256 switch, which turns off very long path support). This means you can set it off and it will grab all of the files and folders based on the switches you give it.

Using a handful of robocopy switches, we can list all of the files along with their size and last modified time, as well as the total count and size of all files.

```powershell
robocopy .\PowerShellScripts NULL /L /S /NJH /BYTES /FP /NC /NDL /XJ /TS /R:0 /W:0
```

Regardless of the total number of characters in the path, all of the data I was hoping to see in my original query is now available. So what do the switches do, you ask? The table below explains each switch.

| Switch | Description |
|---|---|
| /L | List only: don't copy, timestamp or delete any files. |
| /S | Copy subdirectories, but not empty ones. |
| /NJH | No job header. |
| /BYTES | Print sizes as bytes. |
| /FP | Include the full pathname of files in the output. |
| /NC | No class: don't log file classes. |
| /NDL | No directory list: don't log directory names. |
| /TS | Include source file timestamps in the output. |
| /R:0 | Number of retries on failed copies (set to 0 here; default is 1 million). |
| /W:0 | Wait time between retries (set to 0 here; default is 30 seconds). |
| /XJ | Exclude junction points (normally included by default). |

I use /XJ so that I don't get caught in an endless spiral of junction points, which eventually results in errors and false data. If you are scanning folders that might contain mount points, it is a good idea to use Win32_Volume to get the paths to those mount points. Something like this will work, and you can then use Caption as the source path:

```powershell
# Skip volumes whose Caption starts with \\ (the \\?\Volume{...} form),
# leaving volumes that have a drive letter or mount point
$drives = Get-WmiObject -Class Win32_Volume -Filter "NOT Caption LIKE '\\\\%'" |
    Select-Object Caption, Label
```

There are also the /MaxAge and /MinAge switches, which let you specify a number of days (n) to filter on based on a file's LastWriteTime.
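Because robocopy's switches are just strings, it is easy to build the argument list in PowerShell and add the age filters only when they are wanted. `New-RobocopyListArgument` below is a hypothetical helper, not part of robocopy or of the original script; it uses robocopy's `/MAXAGE:n` and `/MINAGE:n` forms, where n is a number of days.

```powershell
# Hypothetical helper: build the list-only switch set, optionally adding age filters
function New-RobocopyListArgument {
    param(
        [int]$MaxAge,  # robocopy /MAXAGE:n - exclude files older than n days
        [int]$MinAge   # robocopy /MINAGE:n - exclude files newer than n days
    )
    # The same list-only switches used throughout this article
    $arguments = @('/L','/S','/NJH','/BYTES','/FP','/NC','/NDL','/TS','/XJ','/R:0','/W:0')
    # Add an age filter only when the caller actually supplied it
    if ($PSBoundParameters.ContainsKey('MaxAge')) { $arguments += "/MAXAGE:$MaxAge" }
    if ($PSBoundParameters.ContainsKey('MinAge')) { $arguments += "/MINAGE:$MinAge" }
    $arguments
}

$params = New-RobocopyListArgument -MinAge 365
# On Windows, the array can be passed straight to robocopy:
# robocopy .\PowerShellScripts NULL $params
```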

This is great and all, but the output is just a collection of strings rather than objects that PowerShell can sort or filter. To fix that, I use a regular expression to parse out the size in bytes, the LastWriteTime and the full path of each file. The end result looks something like this:

```powershell
$item = "PowerShellScripts"
# List-only switches described above
$params = @("/L","/S","/NJH","/BYTES","/FP","/NC","/NDL","/TS","/XJ","/R:0","/W:0")

# NULL is only a placeholder destination; /L means nothing is copied there.
# PowerShell passes each array element to robocopy as a separate argument.
robocopy $item NULL $params | ForEach-Object {
    # A file line holds the size in bytes, the timestamp and the full path
    If ($_ -match "(?<Size>\d+)\s(?<Date>\S+\s\S+)\s+(?<FullName>.*)") {
        [pscustomobject]@{
            FullName = $matches.FullName
            Size     = [int64]$matches.Size
            Date     = [datetime]$matches.Date
        }
    } Else {
        # Everything else (blank lines, the job summary) goes to the verbose stream
        Write-Verbose ("{0}" -f $_)
    }
}
```
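To check the parsing step without running robocopy, you can feed the same pattern a few sample lines. The lines below only approximate what `/FP /TS /BYTES /NC /NDL` output looks like (a right-aligned size, a tab, a `yyyy/MM/dd HH:mm:ss` timestamp, a tab, then the full path); the real spacing may differ, and `ConvertFrom-RobocopyLine` is a hypothetical name used for this sketch. One path is deliberately longer than 260 characters to show that the regex does not care about path length.

```powershell
# Hypothetical wrapper around the same pattern, so it can run on captured text
function ConvertFrom-RobocopyLine {
    param([string[]]$Line)
    foreach ($entry in $Line) {
        if ($entry -match "(?<Size>\d+)\s(?<Date>\S+\s\S+)\s+(?<FullName>.*)") {
            [pscustomobject]@{
                FullName = $matches.FullName
                Size     = [int64]$matches.Size
                Date     = [datetime]$matches.Date
            }
        }
    }
}

# Sample lines shaped like robocopy list output (assumed format, not captured output)
$longPath = 'C:\PowerShellScripts\' + ('VeryLongFolderName\' * 15) + 'Script.ps1'
$sample = @(
    "`t`t`t        1024`t2013/02/10 20:49:29`tC:\PowerShellScripts\Test.ps1",
    "`t`t`t      123456`t2012/11/02 08:15:00`t$longPath",
    "   Files :         2         0         2         0         0         0"
)
ConvertFrom-RobocopyLine -Line $sample
```

Only the two file entries become objects; the `Files :` summary line never has a number followed by a single whitespace and two non-space tokens, so it is skipped. The second object's FullName is 316 characters long.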

Now we have something that works a lot better with PowerShell, and I can do whatever I want with this output. If I just want the size of the folder, I can either pipe this output to Measure-Object and sum the Size property, or use another regular expression to pull the summary at the end of the robocopy job, which shows the count and total size of the files.
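For the first option, summing the Size property is a one-liner. The objects below are stand-ins for the parser's output (made-up paths and sizes) so that the snippet runs on its own.

```powershell
# Stand-in objects with the same FullName/Size/Date shape as the parsed output
$files = @(
    [pscustomobject]@{ FullName = 'C:\PowerShellScripts\a.ps1'; Size = [int64]1024; Date = [datetime]'2013-01-15' }
    [pscustomobject]@{ FullName = 'C:\PowerShellScripts\b.ps1'; Size = [int64]2048; Date = [datetime]'2013-02-01' }
    [pscustomobject]@{ FullName = 'C:\PowerShellScripts\c.txt'; Size = [int64]512;  Date = [datetime]'2012-06-30' }
)

# Count is always filled in; Sum is filled in because of -Sum
$summary = $files | Measure-Object -Property Size -Sum
'{0} files, {1} bytes' -f $summary.Count, $summary.Sum
```

This prints `3 files, 3584 bytes`.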

To pull the count and total size from the summary at the end of the robocopy job instead, I use something like this:

```powershell
$item = "PowerShellScripts"
$params = @("/L","/S","/NJH","/BYTES","/FP","/NC","/NDL","/TS","/XJ","/R:0","/W:0")

# Summary lines at the end of the job; the first number is the Total column.
# With /BYTES the size is a plain integer, so \d+ is enough.
$countPattern = "^\s{3}Files\s:\s+(?<Count>\d+)"
$sizePattern  = "^\s{3}Bytes\s:\s+(?<Size>\d+)"

$return = robocopy $item NULL $params

# Default to zero so an empty folder still reports 0 files and 0 bytes
$Count = 0
$Size  = 0
# Search every line so the result doesn't depend on how many lines follow the summary
ForEach ($line in $return) {
    If ($line -match $countPattern) {
        $Count = [int64]$matches['Count']
    } ElseIf ($line -match $sizePattern) {
        $Size = [int64]$matches['Size']
    }
}

$object = [pscustomobject]@{
    FullName = $item
    Count    = $Count
    Size     = $Size
}
$object.pstypenames.Insert(0,'IO.Folder.Foldersize')
Write-Output $object
```

Not only was I able to use robocopy to list all files regardless of how deep the folder structure goes, but I was also able to get the total count of all files and their total size in bytes. That's pretty handy when you run into character limit issues while scanning folders.

This all fits easily into any script you need to run to scan folders. The obvious things missing are the ability to filter for specific extensions, files, etc. But in my opinion that is an acceptable tradeoff for being able to bypass the character limitation, as I can split out the extensions myself and filter based on them.
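As an example of filtering after the fact, the sketch below splits out each file's extension with `[System.IO.Path]::GetExtension` and keeps only PowerShell script and module files. The input objects are made up for illustration; in practice they would come from the parsing code above.

```powershell
# Made-up results in the FullName/Size shape produced by the parser
$files = @(
    [pscustomobject]@{ FullName = 'C:\PowerShellScripts\Get-Stuff.ps1'; Size = [int64]4096 }
    [pscustomobject]@{ FullName = 'C:\PowerShellScripts\Notes.txt';     Size = [int64]300 }
    [pscustomobject]@{ FullName = 'C:\PowerShellScripts\Module.PSM1';   Size = [int64]8192 }
)

# Add an Extension property, then filter on it (-in compares case-insensitively)
$scripts = $files |
    Select-Object *, @{ Name = 'Extension'; Expression = { [System.IO.Path]::GetExtension($_.FullName) } } |
    Where-Object { $_.Extension -in '.ps1', '.psm1' }

$scripts | Select-Object FullName, Extension
```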

As always, having code snippets that perform the work is one thing, but putting them into a function is where something like this really shines. So with that, here is my take on a function to accomplish this, called Get-FolderItem.

You will notice that I do not output a total count or total size of the files in this function. I gave it some thought and played with the idea, but in the end I felt that a user would be more interested in the files that are actually found, and you can always save the output to a variable and use Measure-Object to get that information. If enough people ask for this to be added to the command, I will definitely look into it. (Hint: if you use the -Verbose parameter, you can find this information for each folder scanned in the Verbose output!)
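If you would rather have per-folder numbers as objects than as Verbose text, one option is to group the file objects by their parent folder. This is only a sketch of that idea, not something Get-FolderItem does; the sample objects are invented, and the parent folder is assumed to be everything before the last backslash in FullName (plain string handling, so path length doesn't matter).

```powershell
# Invented file objects in the FullName/Size shape
$files = @(
    [pscustomobject]@{ FullName = 'C:\Data\Reports\a.xlsx'; Size = [int64]1000 }
    [pscustomobject]@{ FullName = 'C:\Data\Reports\b.xlsx'; Size = [int64]2500 }
    [pscustomobject]@{ FullName = 'C:\Data\Logs\app.log';   Size = [int64]400 }
)

# Group on the text before the last backslash, then total each group
$perFolder = $files |
    Group-Object -Property { $_.FullName.Substring(0, $_.FullName.LastIndexOf('\')) } |
    ForEach-Object {
        [pscustomobject]@{
            Folder = $_.Name
            Count  = $_.Count
            Size   = ($_.Group | Measure-Object -Property Size -Sum).Sum
        }
    }

$perFolder | Sort-Object Folder
```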

I’ll show various examples using my known “bad” folder to show how I can get all of the files from that folder and subfolders beneath it.

```powershell
Get-FolderItem -Path .\PowerShellScripts | Format-Table
```

I only chose to use Format-Table at the end to make it easier to view the output. If you were outputting this to a CSV file or anything else, you must remove Format-Table.

```powershell
Get-ChildItem .\PowerShellScripts | Get-FolderItem | Format-Table
```

Instead of passing the PowerShellScripts folder to -Path, I use Get-ChildItem and pipe its output into Get-FolderItem. Note that only directories are scanned; files in the pipeline are ignored.

You can also specify a filter based on the LastWriteTime to find files older than a specified time. In this case, I will look for files older than a year (365 days).

```powershell
Get-FolderItem -Path .\PowerShellScripts -MinAge 365 | Format-Table
```

Likewise, now I want only the files that are newer than 6 months (186 days).

```powershell
Get-FolderItem -Path .\PowerShellScripts -MaxAge 186 | Format-Table
```

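It is important to understand which way each filter points: -MaxAge keeps files last written within the given number of days (anything older is excluded), while -MinAge keeps files last written more than that many days ago (anything newer is excluded). This mirrors robocopy's own /MAXAGE and /MINAGE switches, which filter by days.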
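To make the direction of the two filters concrete, the sketch below reproduces them in PowerShell against a fixed reference date. It compares each file's LastWriteTime (the Date property from the parsing code) with a cutoff n days before that date. This is an approximation: robocopy's own day-boundary handling may differ slightly, and the sample dates are made up.

```powershell
# Fixed "today" so the example gives the same answer every time it runs
$now = [datetime]'2013-03-01'
$files = @(
    [pscustomobject]@{ FullName = 'C:\PowerShellScripts\old.ps1';    Date = [datetime]'2011-12-01' }
    [pscustomobject]@{ FullName = 'C:\PowerShellScripts\recent.ps1'; Date = [datetime]'2013-02-20' }
)

# Like -MinAge 365 (robocopy /MINAGE:365): exclude files newer than 365 days,
# i.e. keep files last written before the cutoff
$minAgeCutoff = $now.AddDays(-365)
$olderThanAYear = $files | Where-Object { $_.Date -lt $minAgeCutoff }

# Like -MaxAge 186 (robocopy /MAXAGE:186): exclude files older than 186 days,
# i.e. keep files last written on or after the cutoff
$maxAgeCutoff = $now.AddDays(-186)
$newerThanSixMonths = $files | Where-Object { $_.Date -ge $maxAgeCutoff }
```

Here `$olderThanAYear` holds only old.ps1 and `$newerThanSixMonths` holds only recent.ps1.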

Lastly, let’s get the count and size of the files.

```powershell
$files = Get-FolderItem -Path .\PowerShellScripts
$files | Measure-Object -Sum -Property Length |
    Select-Object Count, @{L='SizeMB';E={$_.Sum/1MB}}
```

So with that, you can now use this function to generate a report of all files on a server or file share, regardless of the number of characters in the full path. The script file is available for download at the link below. As always, I am interested in hearing what you think of this article and the script!
