1. Environment Preparation

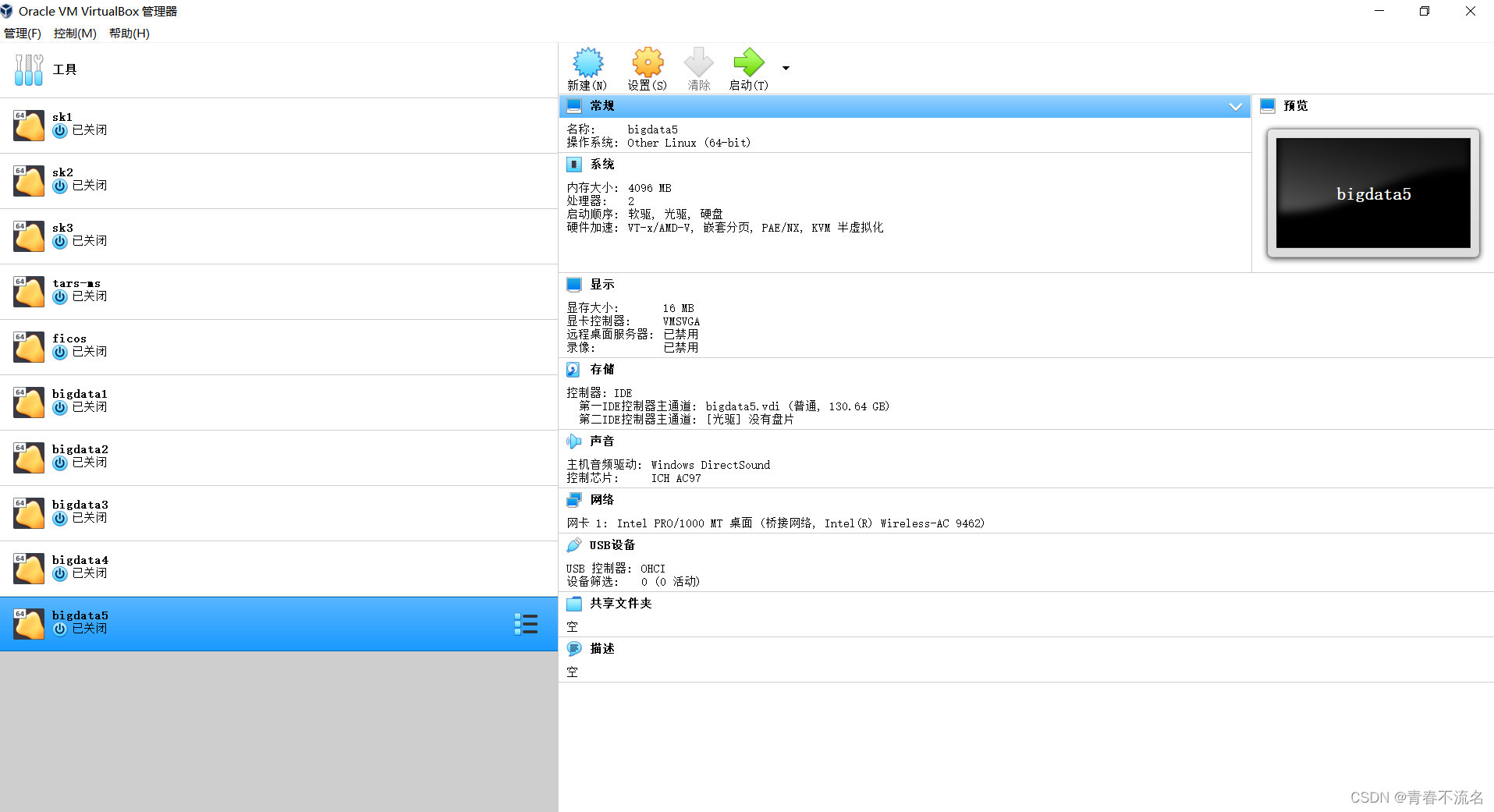

Cluster machines

Description: five virtual machines created with Oracle VM VirtualBox, running CentOS (2009).

192.168.1.11 bigdata1

192.168.1.12 bigdata2

192.168.1.13 bigdata3

192.168.1.14 bigdata4

192.168.1.15 bigdata5

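Passwordless SSH setup

Run ssh-keygen -t rsa on every machine and press Enter at each prompt to accept the defaults.

On bigdata1 (192.168.1.11), append its own public key: cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Copy the public key generated on each of the other machines into ~/.ssh on 192.168.1.11, saved as id_rsa.pub12 through id_rsa.pub15. Run each command below on the corresponding machine (bigdata2 through bigdata5):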

scp -r ~/.ssh/id_rsa.pub root@192.168.1.11:~/.ssh/id_rsa.pub12

scp -r ~/.ssh/id_rsa.pub root@192.168.1.11:~/.ssh/id_rsa.pub13

scp -r ~/.ssh/id_rsa.pub root@192.168.1.11:~/.ssh/id_rsa.pub14

scp -r ~/.ssh/id_rsa.pub root@192.168.1.11:~/.ssh/id_rsa.pub15

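After running the commands above, ls in ~/.ssh on bigdata1 should show the files id_rsa.pub12 through id_rsa.pub15.

Append these files to authorized_keys (run in ~/.ssh on bigdata1), then distribute it.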

cat id_rsa.pub12 >> authorized_keys

cat id_rsa.pub13 >> authorized_keys

cat id_rsa.pub14 >> authorized_keys

cat id_rsa.pub15 >> authorized_keys

chmod 600 authorized_keys (restrict the file to owner read/write)

Distribute the file

scp -r ~/.ssh/authorized_keys root@192.168.1.12:~/.ssh

scp -r ~/.ssh/authorized_keys root@192.168.1.13:~/.ssh

scp -r ~/.ssh/authorized_keys root@192.168.1.14:~/.ssh

scp -r ~/.ssh/authorized_keys root@192.168.1.15:~/.ssh
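Once authorized_keys has been distributed, it can help to confirm that every node really accepts key-based logins before moving on. The following is a minimal sketch, not part of the original procedure; the function name check_ssh_all is made up for this example. It relies on the standard OpenSSH option BatchMode=yes, which makes ssh fail instead of prompting for a password.

```shell
# check_ssh_all HOST...
# Attempts a non-interactive root login on each host and reports the result.
# Returns non-zero if at least one host could not be reached with a key.
check_ssh_all() {
    failed=0
    for host in "$@"; do
        # BatchMode=yes: never prompt; ConnectTimeout: give up after 5 seconds.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$host" true </dev/null; then
            echo "ok     $host"
        else
            echo "FAILED $host"
            failed=1
        fi
    done
    return "$failed"
}
```

Example call on any node: check_ssh_all bigdata1 bigdata2 bigdata3 bigdata4 bigdata5. The host names assume the /etc/hosts entries from this section are already in place; use the IP addresses otherwise.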

Configure /etc/hosts on every machine

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.11 bigdata1

192.168.1.12 bigdata2

192.168.1.13 bigdata3

192.168.1.14 bigdata4

192.168.1.15 bigdata5
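Because the addresses and host names follow a simple pattern, the cluster entries can also be generated rather than typed by hand. This is only an illustrative sketch; gen_hosts and its arguments are hypothetical names, not something the original setup uses.

```shell
# gen_hosts PREFIX FIRST COUNT NAME
# Prints COUNT lines of the form "<PREFIX>.<FIRST+i> <NAME><i+1>".
gen_hosts() {
    prefix=$1
    first=$2
    count=$3
    name=$4
    i=0
    while [ "$i" -lt "$count" ]; do
        echo "$prefix.$((first + i)) $name$((i + 1))"
        i=$((i + 1))
    done
}
```

For the cluster described here, gen_hosts 192.168.1 11 5 bigdata prints the five bigdata lines shown above; the output can be appended to /etc/hosts after review.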

2. Installation and Deployment

Install the JDK

Install the JDK on every machine; the version used is JDK 8.

rpm -ivh jdk-8u321-linux-x64.rpm

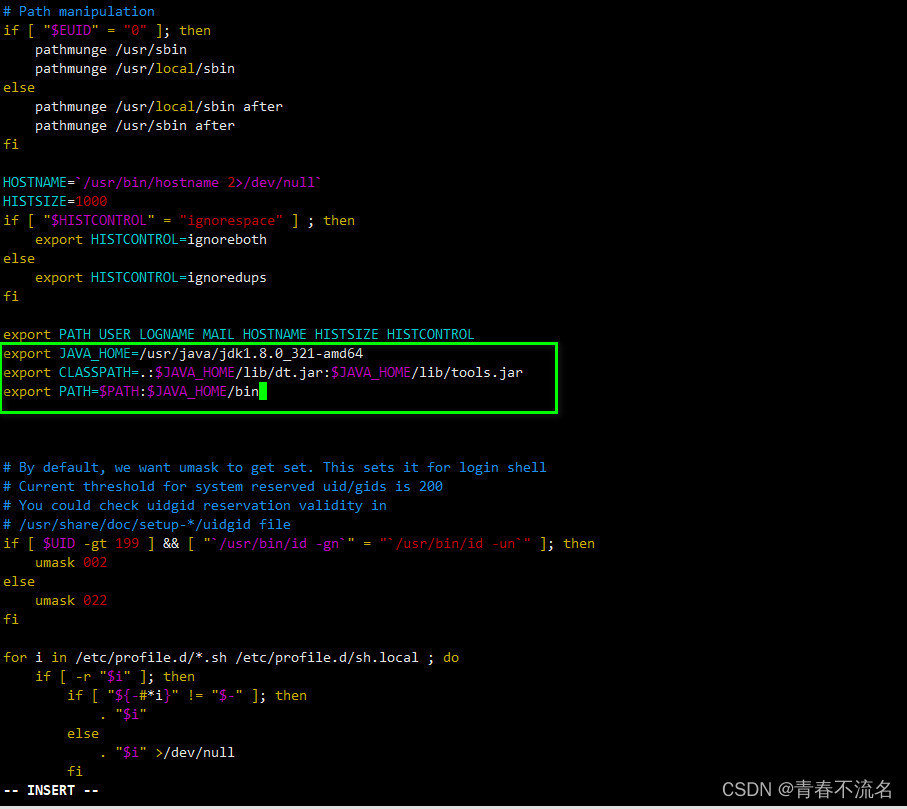

Configure environment variables

On every machine, run vim /etc/profile and add the following lines:

export JAVA_HOME=/usr/java/jdk1.8.0_321-amd64

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin

Apply the configuration

source /etc/profile

3. Install the ZooKeeper Cluster

Installation directory: /home/bigdata

Download ZooKeeper, then install and configure it

Copy the configuration file

cp -r /home/bigdata/zookeeper/conf/zoo_sample.cfg /home/bigdata/zookeeper/conf/zoo.cfg

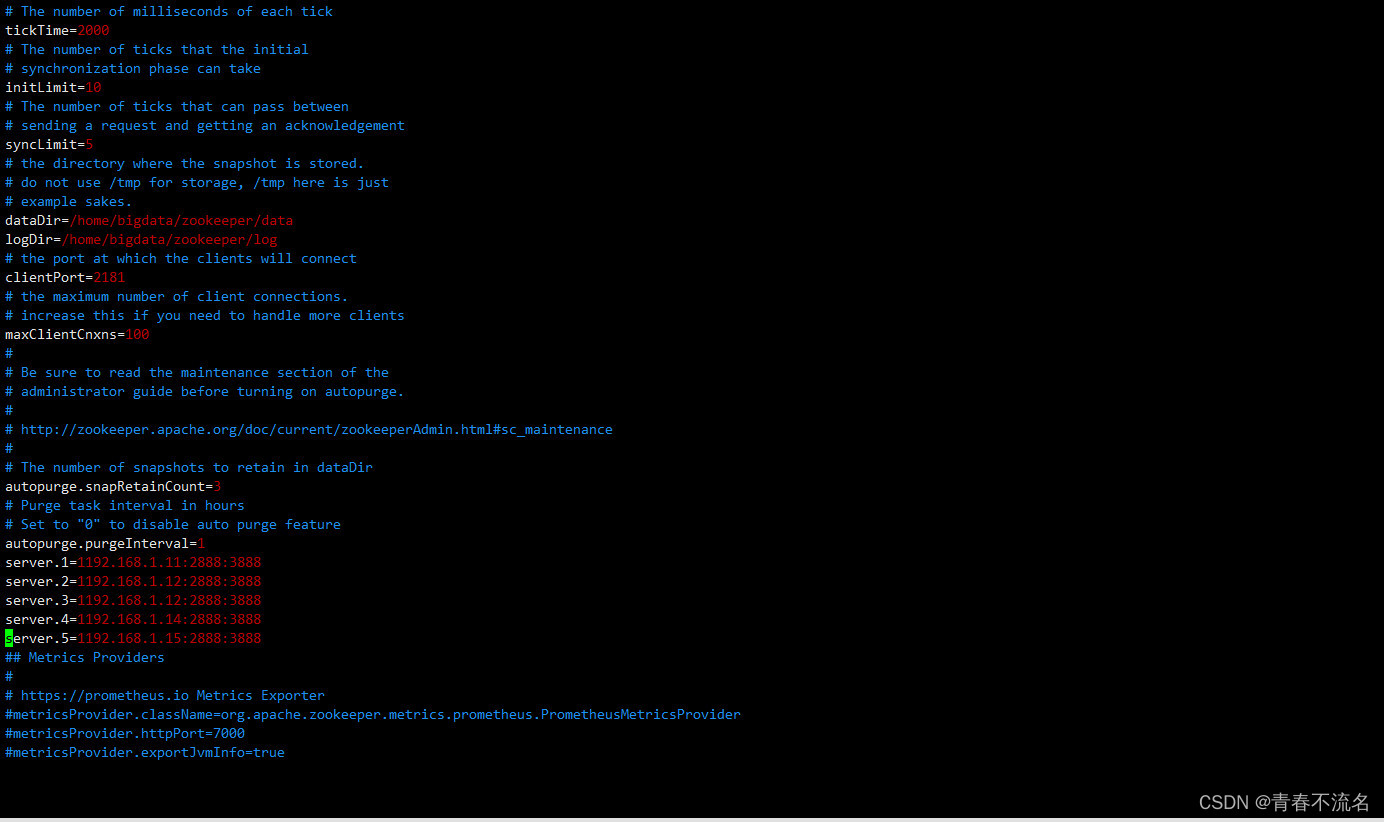

Contents of zoo.cfg

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/home/bigdata/zookeeper/data

dataLogDir=/home/bigdata/zookeeper/logs

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

maxClientCnxns=100

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

autopurge.purgeInterval=1

server.1=192.168.1.11:2888:3888

server.2=192.168.1.12:2888:3888

server.3=192.168.1.13:2888:3888

server.4=192.168.1.14:2888:3888

server.5=192.168.1.15:2888:3888

## Metrics Providers

#

# https://prometheus.io Metrics Exporter

#metricsProvider.className=org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider

#metricsProvider.httpPort=7000

#metricsProvider.exportJvmInfo=true
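Each server.N entry must point at a different machine, and N must match that machine's myid. A small sketch of how the entries could be generated and how an existing zoo.cfg could be checked for repeated addresses follows. The function names are hypothetical; ports 2888 and 3888 are the ones used in the file above.

```shell
# gen_zk_servers ADDR...
# Prints one "server.N=ADDR:2888:3888" line per address, numbering from 1.
gen_zk_servers() {
    id=1
    for addr in "$@"; do
        echo "server.$id=$addr:2888:3888"
        id=$((id + 1))
    done
}

# zk_duplicate_addrs
# Reads a zoo.cfg on stdin and prints every address that is used by more
# than one server.N entry. Empty output means no duplicates were found.
zk_duplicate_addrs() {
    grep '^server\.[0-9]' | cut -d= -f2 | sort | uniq -d
}
```

Usage example: zk_duplicate_addrs < /home/bigdata/zookeeper/conf/zoo.cfg. Any line it prints is an address assigned to two server IDs.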

Distribute the prepared zookeeper directory

scp -r /home/bigdata/zookeeper/ root@192.168.1.12:/home/bigdata/

scp -r /home/bigdata/zookeeper/ root@192.168.1.13:/home/bigdata/

scp -r /home/bigdata/zookeeper/ root@192.168.1.14:/home/bigdata/

scp -r /home/bigdata/zookeeper/ root@192.168.1.15:/home/bigdata/

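Set the myid value on each machine. Run exactly one of the following commands per machine, matching its server.N entry in zoo.cfg (1 on bigdata1 through 5 on bigdata5):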

echo "1" > /home/bigdata/zookeeper/data/myid

echo "2" > /home/bigdata/zookeeper/data/myid

echo "3" > /home/bigdata/zookeeper/data/myid

echo "4" > /home/bigdata/zookeeper/data/myid

echo "5" > /home/bigdata/zookeeper/data/myid
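Since the ID is simply the numeric suffix of the host name, it could also be derived instead of typed on each machine. The sketch below assumes each machine's host name has actually been set to bigdata1 ... bigdata5 (the text above only configures /etc/hosts, so this is an assumption); myid_for_host is a hypothetical helper name.

```shell
# myid_for_host HOSTNAME
# Prints the numeric suffix of a "bigdataN" host name, or fails with a
# message on stderr if the name does not follow that pattern.
myid_for_host() {
    host=$1
    id=${host#bigdata}          # strip the leading "bigdata"
    case "$id" in
        ''|*[!0-9]*)
            echo "cannot derive myid from '$host'" >&2
            return 1
            ;;
        *)
            echo "$id"
            ;;
    esac
}
```

A possible use on each node: id=$(myid_for_host "$(hostname -s)") && echo "$id" > /home/bigdata/zookeeper/data/myid. The file is only written when the derivation succeeds.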

Set the ZooKeeper environment variables by appending the following to /etc/profile

export ZOOKEEPER=/home/bigdata/zookeeper

export PATH=$PATH:$JAVA_HOME/bin:$ZOOKEEPER/bin

Commands to start (restart) and stop ZooKeeper; run them on every machine

/home/bigdata/zookeeper/bin/zkServer.sh restart

/home/bigdata/zookeeper/bin/zkServer.sh stop

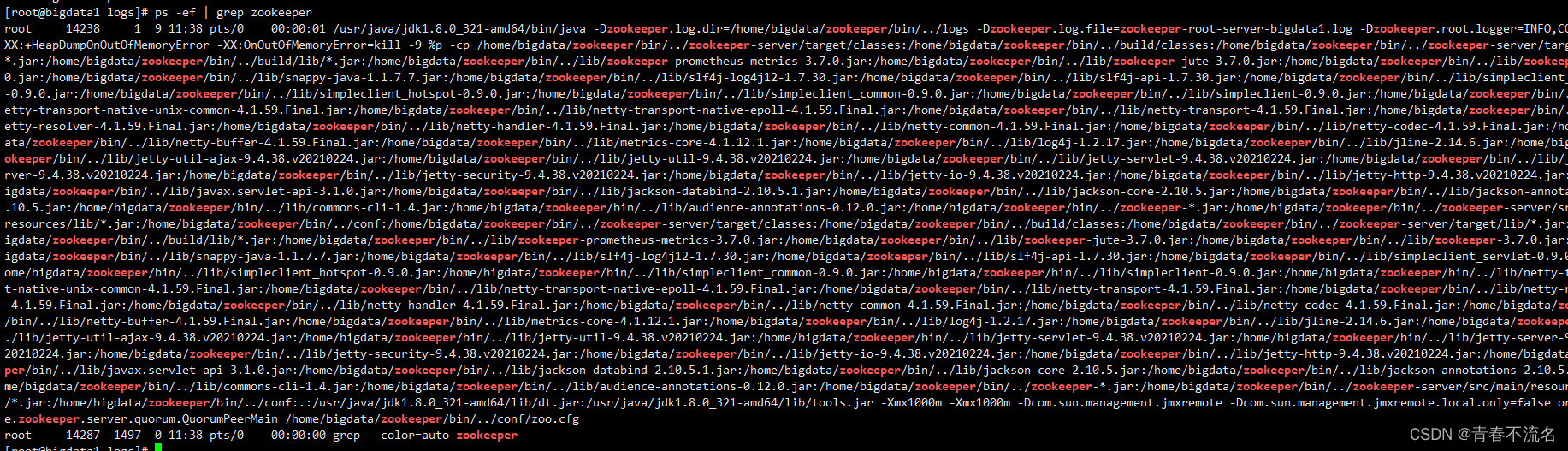

Check that it started: ps -ef | grep zookeeper

A running ZooKeeper process (QuorumPeerMain) in the output indicates a successful start.
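A running process does not yet show that the node has joined the ensemble. zkServer.sh status reports the node's role on a line starting with "Mode:", and the sketch below extracts that value. The helper name zk_mode is made up for this example.

```shell
# zk_mode
# Reads the output of `zkServer.sh status` on stdin and prints the role
# reported on the "Mode:" line (for example leader or follower).
# Prints nothing if no such line is present.
zk_mode() {
    sed -n 's/^Mode:[[:space:]]*//p'
}
```

Usage example: /home/bigdata/zookeeper/bin/zkServer.sh status 2>&1 | zk_mode. In a healthy five-node ensemble one node should report leader and the other four follower.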

4. Install the Hadoop Environment

Add the following environment variables to /etc/profile, then run source /etc/profile

export YARN_NODEMANAGER_USER=root

export YARN_RESOURCEMANAGER_USER=root

export HDFS_DATANODE_USER=root

export HDFS_JOURNALNODE_USER=root

export HDFS_ZKFC_USER=root

export HDFS_NAMENODE_USER=root

export PATH USER LOGNAME MAIL HOSTNAME HISTSIZE HISTCONTROL

export JAVA_HOME=/usr/java/jdk1.8.0_321-amd64

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export ZOOKEEPER=/home/bigdata/zookeeper

export PATH=$PATH:$JAVA_HOME/bin:$ZOOKEEPER/bin

Extract the Hadoop archive and rename the directory; the version used is 3.3.1, the latest at the time of writing

tar -zxvf hadoop-3.3.1.tar.gz && mv hadoop-3.3.1 hadoop

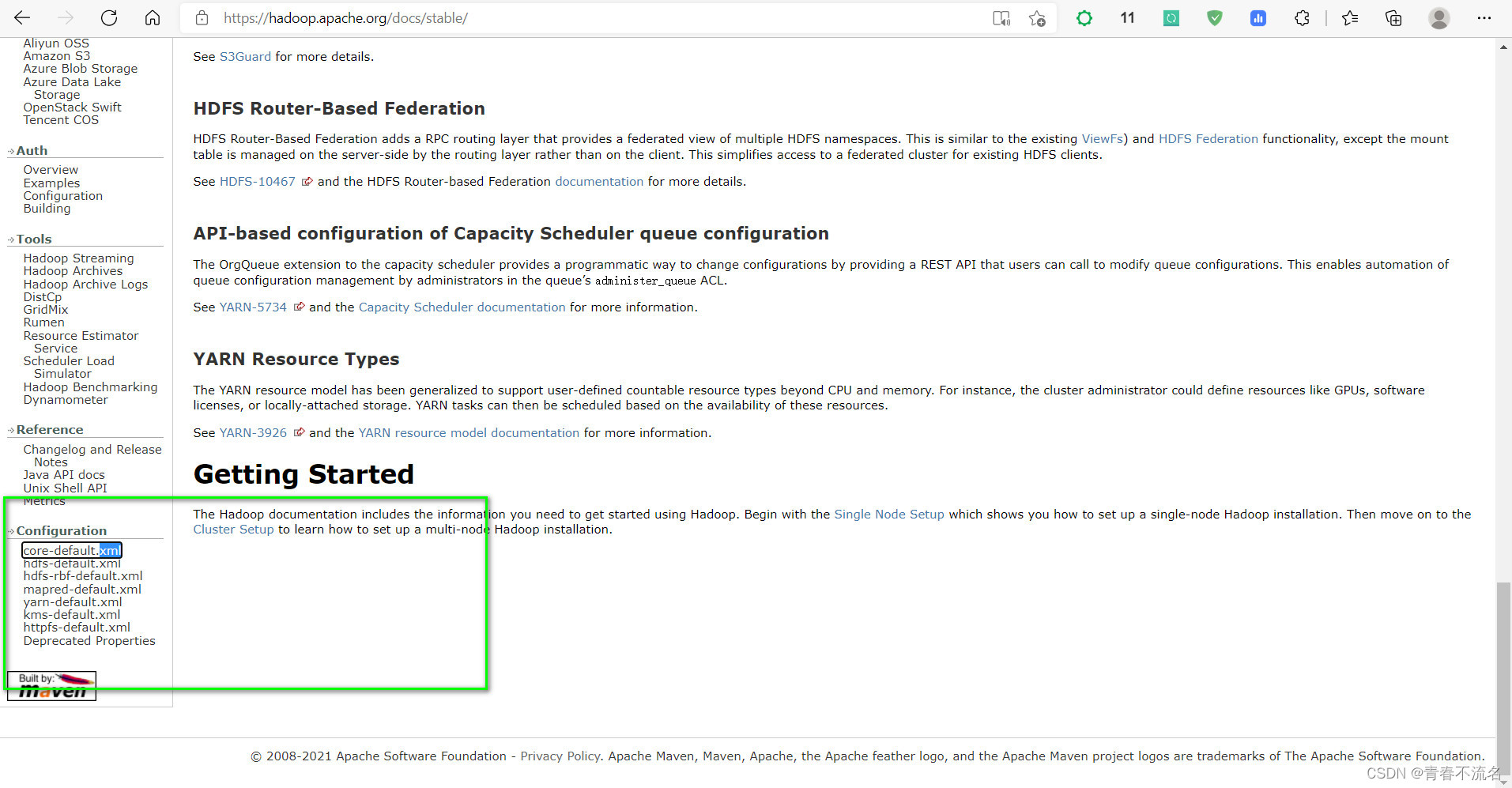

Reference for the official XML configuration files: the Apache Hadoop 3.3.1 documentation

core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<!-- Official reference for this configuration file -->

<!-- https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-common/core-default.xml -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://liebe</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/bigdata/hadoop/tmp</value>

</property>

<!-- User that the web UI runs as -->

<property>

<name>hadoop.http.staticuser.user</name>

<value>root</value>

</property>

<!-- ZooKeeper quorum addresses -->

<property>

<name>ha.zookeeper.quorum</name>

<value>bigdata1:2181,bigdata2:2181,bigdata3:2181,bigdata4:2181,bigdata5:2181</value>

</property>

<!-- Number of connection retries from the NameNode to the JournalNodes; the default is 10 -->

<property>

<name>ipc.client.connect.max.retries</name>

<value>20</value>

</property>

<!-- Retry interval in milliseconds; the default is 1000 (1 s) -->

<property>

<name>ipc.client.connect.retry.interval</name>

<value>2000</value>

</property>

<!-- Session timeout for Hadoop's connection to ZooKeeper -->

<property>

<name>ha.zookeeper.session-timeout.ms</name>

<value>30000</value>

<description>ms</description>

</property>

<property>

<name>fs.trash.interval</name>

<value>1440</value>

</property>

</configuration>
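After editing the file, the values that Hadoop actually resolves can be read back with hdfs getconf -confKey. The following sketch wraps that command in a small comparison helper. The name check_conf_key is hypothetical, and it assumes the hdfs command (under /home/bigdata/hadoop/bin in this setup) is on the PATH.

```shell
# check_conf_key KEY EXPECTED
# Compares the effective value of a Hadoop configuration key with the
# expected one. Returns 0 on match, 1 on mismatch, 2 if hdfs itself failed.
check_conf_key() {
    key=$1
    expected=$2
    actual=$(hdfs getconf -confKey "$key") || {
        echo "ERROR $key: hdfs getconf failed"
        return 2
    }
    if [ "$actual" = "$expected" ]; then
        echo "ok $key=$actual"
    else
        echo "MISMATCH $key: expected '$expected', got '$actual'"
        return 1
    fi
}
```

For example, check_conf_key fs.defaultFS hdfs://liebe should print an ok line on every node once the configuration has been distributed.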

hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<!-- Official reference for this configuration file -->

<!-- https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml -->

<configuration>

<property>

<!-- Logical name (nameservice) for the NameNode cluster -->

<name>dfs.nameservices</name>

<value>liebe</value>

</property>

<property>

<!-- NameNodes that belong to the nameservice, with an ID for each -->

<name>dfs.ha.namenodes.liebe</name>

<value>cc1,cc2,cc3</value>

</property>

<property>

<!-- RPC address and port of NameNode cc1; RPC is used to communicate with the DataNodes -->

<name>dfs.namenode.rpc-address.liebe.cc1</name>

<value>bigdata1:8020</value>

</property>

<property>

<!-- RPC address and port of NameNode cc2; RPC is used to communicate with the DataNodes -->

<name>dfs.namenode.rpc-address.liebe.cc2</name>

<value>bigdata2:8020</value>

</property>

<property>

<!-- RPC address and port of NameNode cc3; RPC is used to communicate with the DataNodes -->

<name>dfs.namenode.rpc-address.liebe.cc3</name>

<value>bigdata3:8020</value>

</property>

<property>

<!-- HTTP address and port of NameNode cc1 (web UI) -->

<name>dfs.namenode.http-address.liebe.cc1</name>

<value>bigdata1:9870</value>

</property>

<property>

<!-- HTTP address and port of NameNode cc2 (web UI) -->

<name>dfs.namenode.http-address.liebe.cc2</name>

<value>bigdata2:9870</value>

</property>

<property>

<!-- HTTP address and port of NameNode cc3 (web UI) -->

<name>dfs.namenode.http-address.liebe.cc3</name>

<value>bigdata3:9870</value>

</property>

<property>

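<!-- List of JournalNodes through which the NameNodes share the edit log -->

<!-- Shared storage location for the NameNode edits metadata, i.e. the JournalNode list.

URL format: qjournal://host1:port1;host2:port2;host3:port3/journalId

Using the nameservice as journalId is recommended; the default port is 8485 -->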

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://bigdata1:8485;bigdata2:8485;bigdata3:8485/liebe</value>

</property>

<property>

<!-- Directory on each JournalNode where the edit logs are stored -->

<name>dfs.journalnode.edits.dir</name>

<value>${hadoop.tmp.dir}/jn</value>

</property>

<property>

<!-- Proxy class that clients use to find and connect to the active NameNode -->

<name>dfs.client.failover.proxy.provider.liebe</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<!-- Fencing methods; separate multiple methods with newlines, one method per line -->

<name>dfs.ha.fencing.methods</name>

<value>

sshfence

shell(/bin/true)

</value>

</property>

<!-- sshfence requires key-based SSH login; path to the private key -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

<!-- Timeout for communication with the JournalNode cluster -->

<property>

<name>dfs.qjournal.start-segment.timeout.ms</name>

<value>60000</value>

</property>

<!-- Number of block replicas -->

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- NameNode storage path -->

<property>

<name>dfs.namenode.name.dir</name>

<value>file://${hadoop.tmp.dir}/name</value>

</property>

<!-- DataNode storage path -->

<property>

<name>dfs.datanode.data.dir</name>

<value>file://${hadoop.tmp.dir}/data</value>

</property>

<!-- Enable automatic failover when a NameNode fails -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- A switch to turn on/off tracking DataNode peer statistics. -->

<property>

<name>dfs.datanode.peer.stats.enabled</name>

<value>true</value>

</property>

<!-- The timeout in seconds of calling rollEdits RPC on Active NN. -->

<property>

<name>dfs.ha.tail-edits.rolledits.timeout</name>

<value>120</value>

</property>

</configuration>
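With three NameNodes configured (cc1, cc2, cc3), exactly one should be active once the cluster is running. hdfs haadmin -getServiceState reports the state of a single NameNode; the sketch below loops over the IDs and counts the active ones. The function names are hypothetical, and the hdfs command is assumed to be on the PATH.

```shell
# nn_states ID...
# Prints "<id> <state>" for each NameNode ID, where state is what
# `hdfs haadmin -getServiceState` reports, or "unreachable" if it fails.
nn_states() {
    for nn in "$@"; do
        state=$(hdfs haadmin -getServiceState "$nn" 2>/dev/null) || state="unreachable"
        echo "$nn $state"
    done
}

# count_active
# Reads nn_states output on stdin and prints how many NameNodes are active.
count_active() {
    awk '$2 == "active" { n++ } END { print n + 0 }'
}
```

Usage example: nn_states cc1 cc2 cc3 | count_active. A result other than 1 suggests that failover or the ZKFC processes need a closer look.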

yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.auto-update.containers</name>

<value>true</value>

</property>

<property>

<name>yarn.webapp.api-service.enable</name>

<value>true</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>106800</value>

</property>

<property>

<!-- Enable ResourceManager HA -->

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<!-- ID of the ResourceManager HA cluster -->

<name>yarn.resourcemanager.cluster-id</name>

<value>yarn-liebe</value>

</property>

<property>

<!-- Logical IDs of the ResourceManagers in the HA setup -->

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2,rm3</value>

</property>

<!-- ========== rm1 settings ========== -->

<!-- Host name of rm1 -->

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>bigdata1</value>

</property>

<!-- Web UI address of rm1 -->

<property>

<name>yarn.resourcemanager.webapp.address.rm1</name>

<value>bigdata1:8088</value>

</property>

<!-- Internal communication address of rm1 -->

<property>

<name>yarn.resourcemanager.address.rm1</name>

<value>bigdata1:8032</value>

</property>

<!-- Address at which ApplicationMasters request resources from rm1 -->

<property>

<name>yarn.resourcemanager.scheduler.address.rm1</name>

<value>bigdata1:8030</value>

</property>

<!-- Address that NodeManagers connect to -->

<property>

<name>yarn.resourcemanager.resource-tracker.address.rm1</name>

<value>bigdata1:8031</value>

</property>

<!-- ========== rm2 settings ========== -->

<!-- Host name of rm2 -->

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>bigdata2</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm2</name>

<value>bigdata2:8088</value>

</property>

<property>

<name>yarn.resourcemanager.address.rm2</name>

<value>bigdata2:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.rm2</name>

<value>bigdata2:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.rm2</name>

<value>bigdata2:8031</value>

</property>

<!-- ========== rm3 settings ========== -->

<!-- Host name of rm3 -->

<property>

<name>yarn.resourcemanager.hostname.rm3</name>

<value>bigdata3</value>

</property>

<!-- Web UI address of rm3 -->

<property>

<name>yarn.resourcemanager.webapp.address.rm3</name>

<value>bigdata3:8088</value>

</property>

<!-- Internal communication address of rm3 -->

<property>

<name>yarn.resourcemanager.address.rm3</name>

<value>bigdata3:8032</value>

</property>

<!-- Address at which ApplicationMasters request resources from rm3 -->

<property>

<name>yarn.resourcemanager.scheduler.address.rm3</name>

<value>bigdata3:8030</value>

</property>

<!-- Address that NodeManagers connect to -->

<property>

<name>yarn.resourcemanager.resource-tracker.address.rm3</name>

<value>bigdata3:8031</value>

</property>

<property>

<!-- ZooKeeper nodes used by ResourceManager HA -->

<name>yarn.resourcemanager.zk-address</name>

<value>bigdata1:2181,bigdata2:2181,bigdata3:2181,bigdata4:2181,bigdata5:2181</value>

</property>

<property>

<!-- Let the ResourceManager recover its state after a restart or failover -->

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<!-- Store the ResourceManager state in the ZooKeeper cluster -->

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<name>yarn.log.server.url</name>

<value>http://bigdata1:19888/jobhistory/logs/</value>

</property>

<!-- Environment variables that containers inherit from the NodeManager -->

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>

</property>

<property>

<name>yarn.resourcemanager.am.max-attempts</name>

<value>5</value>

</property>

<!--

<property>

<name>yarn.nodemanager.recovery.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.nodemanager.recovery.dir</name>

<value>${hadoop.tmp.dir}/yarn-nm-recovery</value>

</property>

<property>

<name>yarn.nodemanager.address</name>

<value>192.168.1.11</value>

</property>

<property>

<name>yarn.nodemanager.hostname</name>

<value>bigdata1</value>

</property>

-->

</configuration>
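The value of yarn.log-aggregation.retain-seconds is given in seconds, which is hard to read at a glance. A tiny helper like the one below (the name secs_to_dhm is made up for this example) converts it to days, hours and minutes, which makes it easier to check that the retention period is the intended one.

```shell
# secs_to_dhm SECONDS
# Prints a duration given in seconds as "<days>d <hours>h <minutes>m".
secs_to_dhm() {
    s=$1
    echo "$((s / 86400))d $((s % 86400 / 3600))h $((s % 3600 / 60))m"
}
```

For the value used above, secs_to_dhm 106800 prints 1d 5h 40m. For comparison, a retention of exactly seven days corresponds to 604800 seconds.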

workers

bigdata1

bigdata2

bigdata3

bigdata4

bigdata5

mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- MapReduce application settings -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- JobHistory Server ============================================================== -->

<!-- MapReduce JobHistory Server address; the default port is 10020 -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>bigdata1:10020</value>

</property>

<!-- MapReduce JobHistory Server web UI address; the default port is 19888 -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>bigdata1:19888</value>

</property>

<!-- Compress map output with Snappy -->

<property>

<name>mapreduce.map.output.compress</name>

<value>true</value>

</property>

<property>

<name>mapreduce.map.output.compress.codec</name>

<value>org.apache.hadoop.io.compress.SnappyCodec</value>

</property>

</configuration>

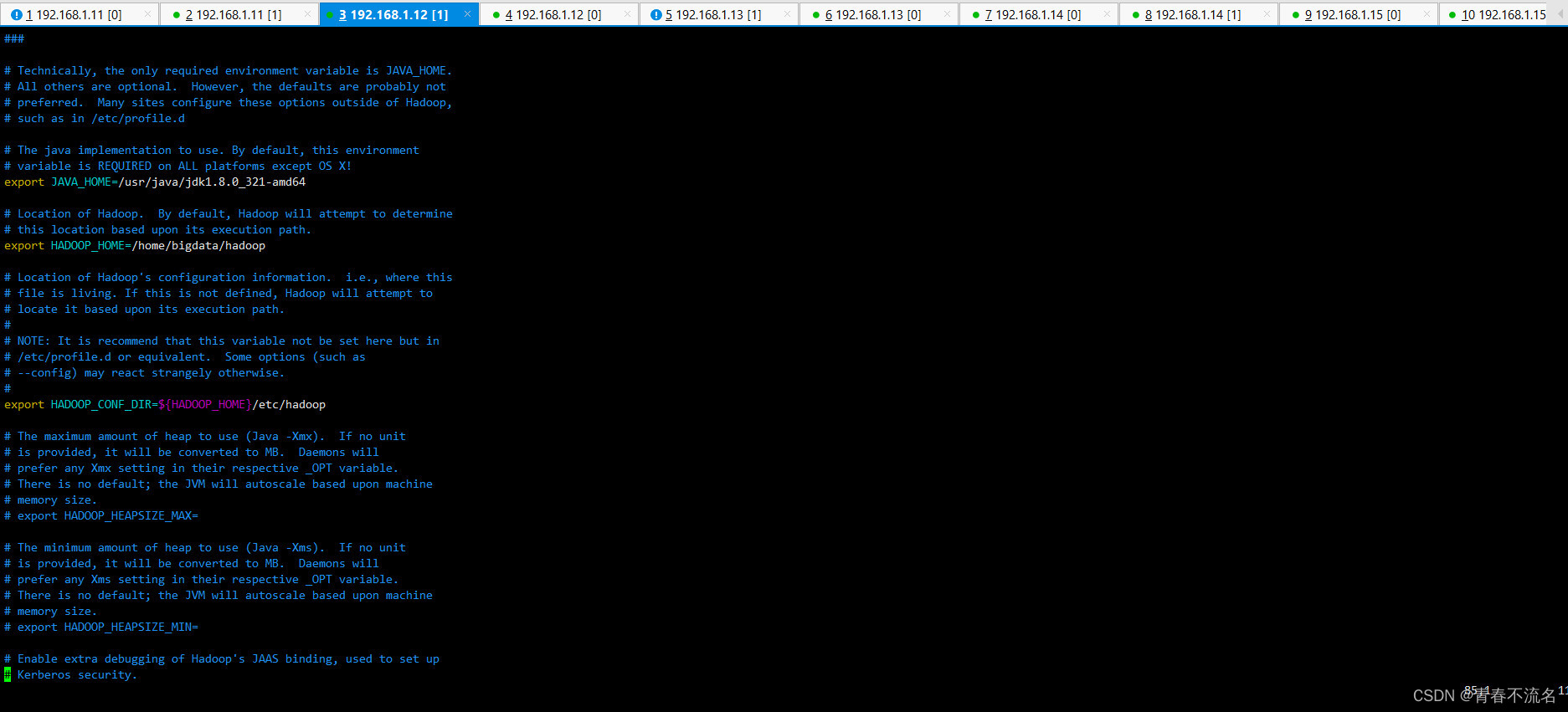

vim /home/bigdata/hadoop/etc/hadoop/hadoop-env.sh

Distribute the /home/bigdata/hadoop directory from bigdata1 to the other machines

scp -r /home/bigdata/hadoop/ root@192.168.1.12:/home/bigdata

scp -r /home/bigdata/hadoop/ root@192.168.1.13:/home/bigdata

scp -r /home/bigdata/hadoop/ root@192.168.1.14:/home/bigdata

scp -r /home/bigdata/hadoop/ root@192.168.1.15:/home/bigdata
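The same copy step is repeated for every target host, so it could also be written as a loop that stops at the first failure. This is a sketch only; distribute_dir is a hypothetical helper, and it assumes the passwordless root SSH access set up earlier.

```shell
# distribute_dir SRC HOST...
# Copies the directory SRC into the same parent directory on each host
# and stops with a non-zero status as soon as one copy fails.
distribute_dir() {
    src=$1
    shift
    parent=$(dirname "$src")    # e.g. /home/bigdata for /home/bigdata/hadoop
    for host in "$@"; do
        echo "copying $src to $host:$parent"
        # -r: copy recursively, -q: suppress the progress meter
        scp -rq "$src" "root@$host:$parent" || {
            echo "copy to $host failed" >&2
            return 1
        }
    done
}
```

Example call on bigdata1: distribute_dir /home/bigdata/hadoop bigdata2 bigdata3 bigdata4 bigdata5. The same function would work for the zookeeper directory.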

Start the Hadoop cluster

/home/bigdata/hadoop/sbin/start-all.sh

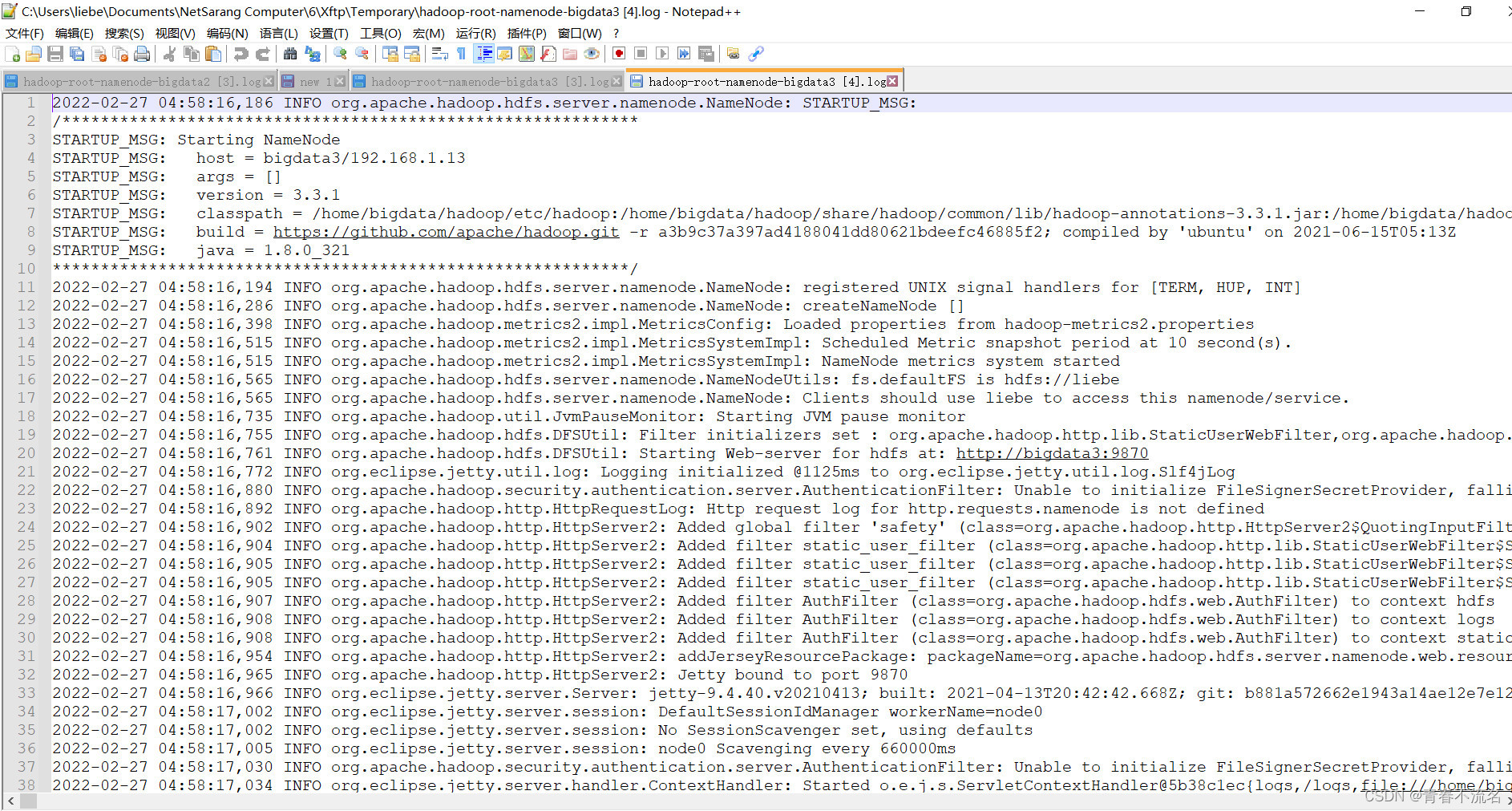

Once startup completes, check the log files in the logs directory; if errors appear, work through them one at a time.

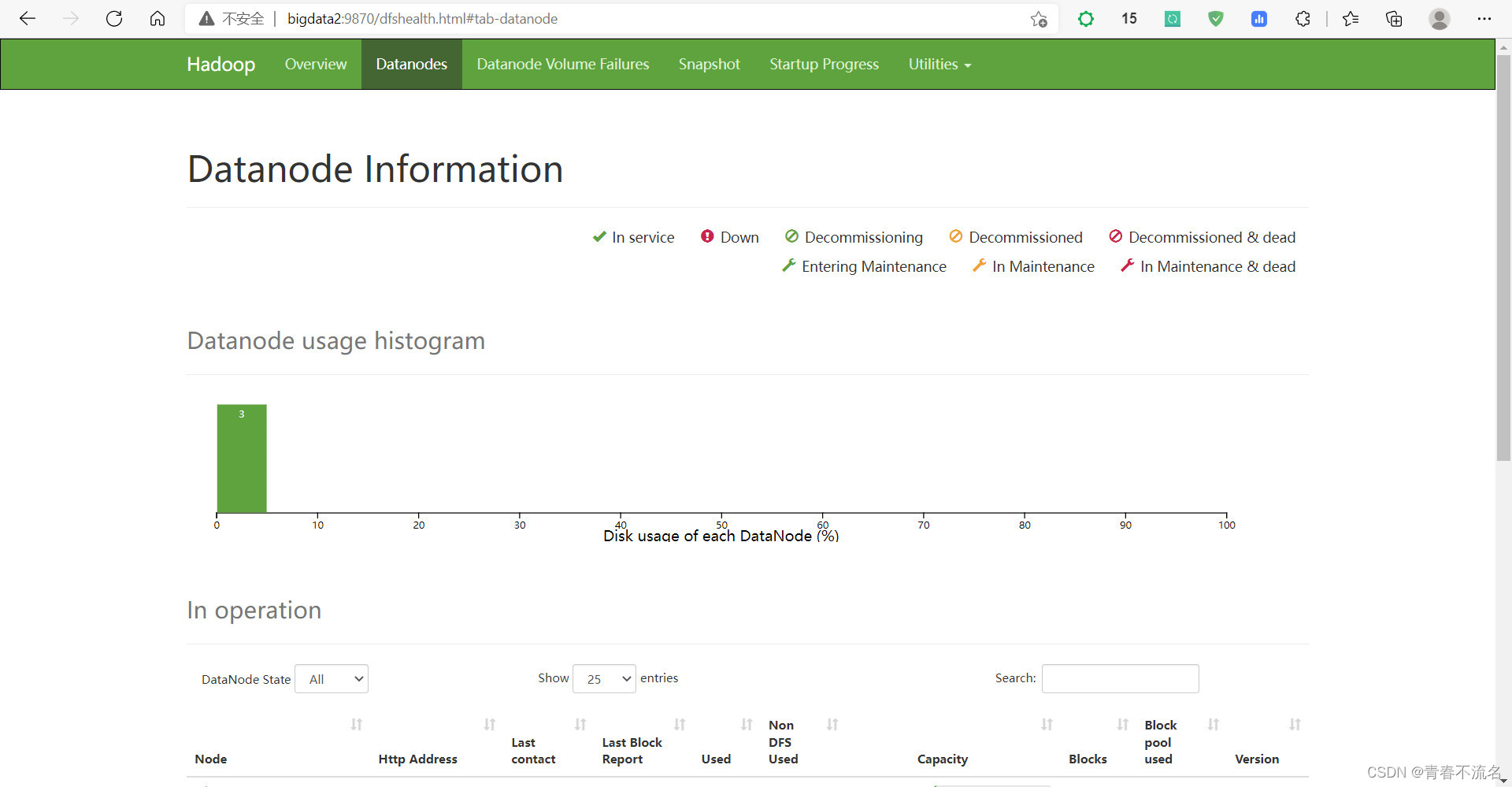

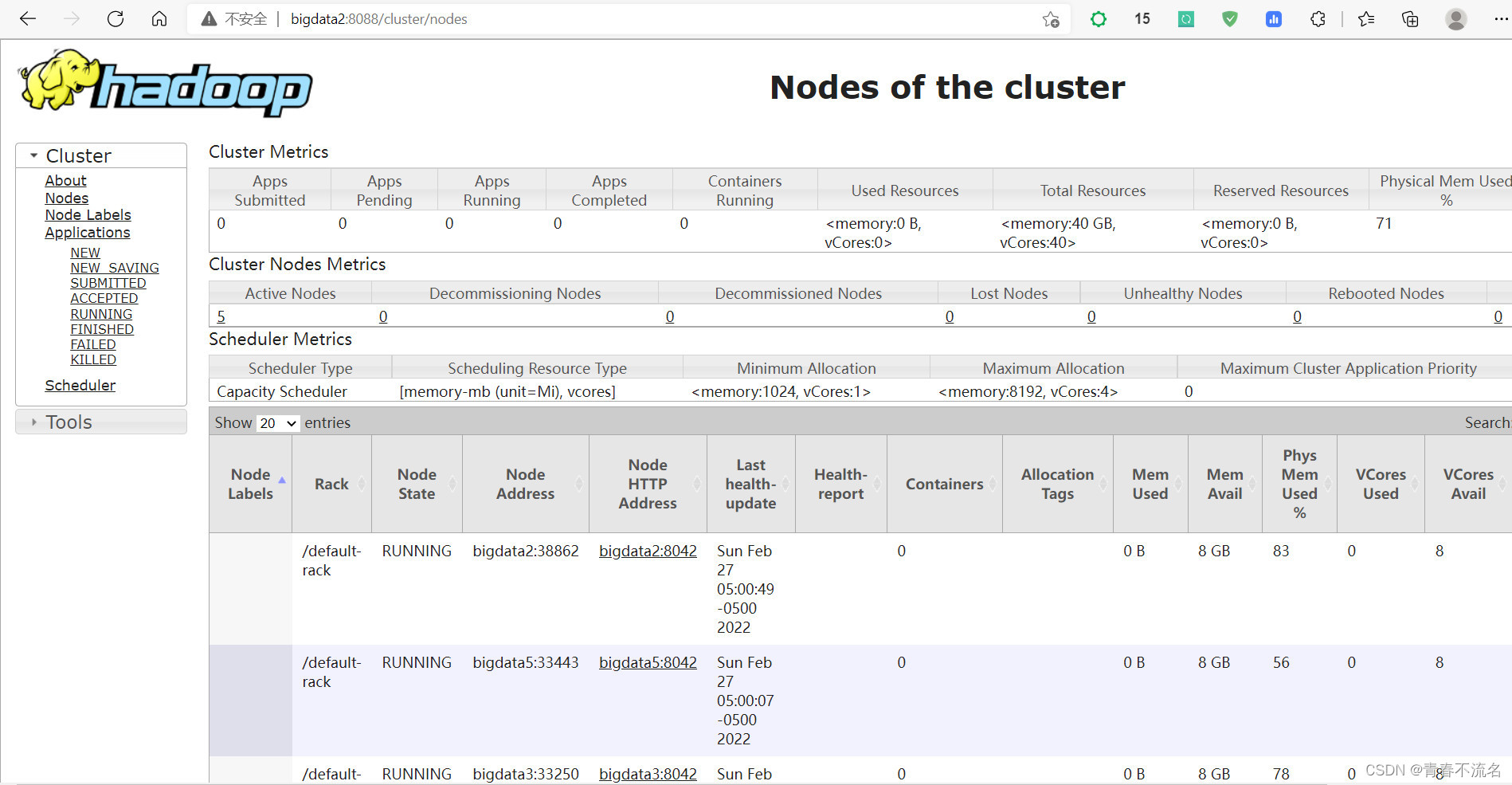

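Verification

If the NameNode log shows java.io.IOException: NameNode is not formatted., clear the old data directories below (this deletes any existing HDFS data) and then format the NameNode: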

rm -rf /home/bigdata/hadoop/tmp/data/*

rm -rf /home/bigdata/hadoop/tmp/name/*

rm -rf /home/bigdata/hadoop/tmp/jn/*

rm -rf /home/bigdata/hadoop/tmp/dfs/data/*

rm -rf /home/bigdata/hadoop/tmp/dfs/dn/*

rm -rf /home/bigdata/hadoop/tmp/dfs/name/*

rm -rf /home/bigdata/hadoop/tmp/dfs/jn/*

rm -rf /home/bigdata/hadoop/tmp/dfs/nn/*

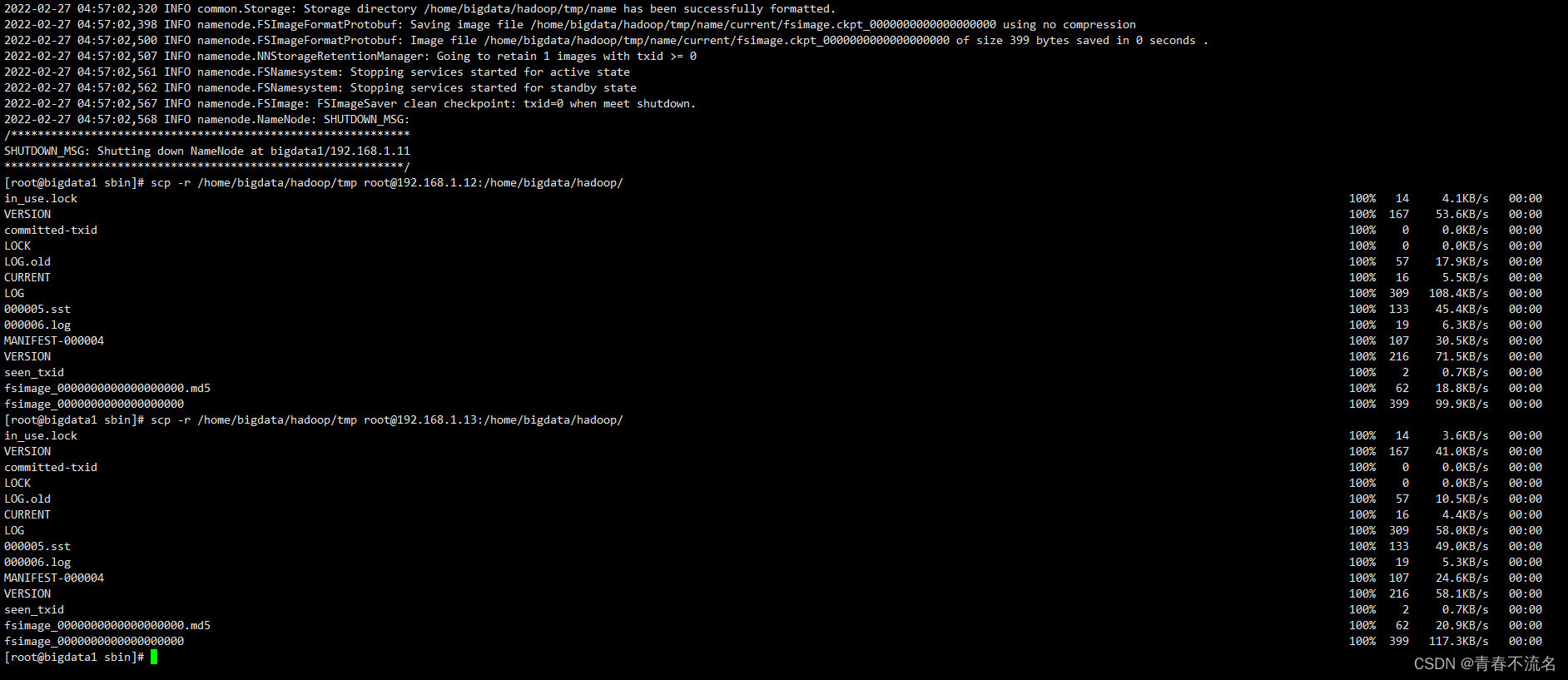

/home/bigdata/hadoop/bin/hdfs namenode -format
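In an HA setup with JournalNodes, formatting is only one step of the first start, and the order matters. The sketch below prints one commonly used sequence for the layout in this guide (JournalNodes and NameNodes on bigdata1 to bigdata3). It only prints the plan and runs nothing; the function name is hypothetical, and the host roles are taken from hdfs-site.xml above. The individual commands are standard Hadoop 3 commands.

```shell
HDFS=/home/bigdata/hadoop/bin/hdfs
SBIN=/home/bigdata/hadoop/sbin

# ha_first_start_plan
# Prints "<host>: <command>" lines in the order they would be run
# for the very first start of the HA cluster.
ha_first_start_plan() {
    # 1. JournalNodes must be up before the NameNode can be formatted.
    for host in bigdata1 bigdata2 bigdata3; do
        echo "$host: $HDFS --daemon start journalnode"
    done
    # 2. Format and start the first NameNode.
    echo "bigdata1: $HDFS namenode -format"
    echo "bigdata1: $HDFS --daemon start namenode"
    # 3. The other NameNodes copy the metadata instead of formatting again.
    for host in bigdata2 bigdata3; do
        echo "$host: $HDFS namenode -bootstrapStandby"
    done
    # 4. Initialise the failover state in ZooKeeper (which must be running).
    echo "bigdata1: $HDFS zkfc -formatZK"
    # 5. Start the remaining HDFS and YARN daemons.
    echo "bigdata1: $SBIN/start-all.sh"
}
```

Calling ha_first_start_plan prints nine lines that can be worked through by hand, one host at a time.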

5. Deploy Spark

5.1 Configuration directory: ${spark_home}/conf

5.2 workers

node88

node89

node99

5.3 spark-env.sh

#!/usr/bin/env bash

#

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

# This file is sourced when running various Spark programs.

# Copy it as spark-env.sh and edit that to configure Spark for your site.

export SPARK_HOME=/home/spark-3.3.0-bin-hadoop3

# Local mode

# Options read when launching programs locally with

# ./bin/run-example or ./bin/spark-submit

# - HADOOP_CONF_DIR, to point Spark towards Hadoop configuration files

# - SPARK_LOCAL_IP, to set the IP address Spark binds to on this node

# - SPARK_PUBLIC_DNS, to set the public dns name of the driver program

# Options read by executors and drivers running inside the cluster

# - SPARK_LOCAL_IP, to set the IP address Spark binds to on this node

# - SPARK_PUBLIC_DNS, to set the public DNS name of the driver program

# - SPARK_LOCAL_DIRS, storage directories to use on this node for shuffle and RDD data

# - MESOS_NATIVE_JAVA_LIBRARY, to point to your libmesos.so if you use Mesos

# Options read in any mode

export SPARK_CONF_DIR=${SPARK_HOME}/conf

export SPARK_EXECUTOR_CORES=1

export SPARK_EXECUTOR_MEMORY=1G

export SPARK_DRIVER_MEMORY=1G

# Options read in any cluster manager using HDFS

export HADOOP_CONF_DIR=/home/hadoop-3.3.4/etc/hadoop

# Options read in YARN client/cluster mode

export YARN_CONF_DIR=/home/hadoop-3.3.4/etc/hadoop

export SPARK_HISTORY_OPTS="

-Dspark.history.ui.port=18080

-Dspark.history.fs.logDirectory=hdfs://liebe/spark/history-eventLog

-Dspark.history.retainedApplications=30"

# Options for the daemons used in the standalone deploy mode

# - SPARK_MASTER_HOST, to bind the master to a different IP address or hostname

# - SPARK_MASTER_PORT / SPARK_MASTER_WEBUI_PORT, to use non-default ports for the master

# - SPARK_MASTER_OPTS, to set config properties only for the master (e.g. "-Dx=y")

# - SPARK_WORKER_CORES, to set the number of cores to use on this machine

# - SPARK_WORKER_MEMORY, to set how much total memory workers have to give executors (e.g. 1000m, 2g)

# - SPARK_WORKER_PORT / SPARK_WORKER_WEBUI_PORT, to use non-default ports for the worker

# - SPARK_WORKER_DIR, to set the working directory of worker processes

# - SPARK_WORKER_OPTS, to set config properties only for the worker (e.g. "-Dx=y")

# - SPARK_DAEMON_MEMORY, to allocate to the master, worker and history server themselves (default: 1g).

# - SPARK_HISTORY_OPTS, to set config properties only for the history server (e.g. "-Dx=y")

# - SPARK_SHUFFLE_OPTS, to set config properties only for the external shuffle service (e.g. "-Dx=y")

# - SPARK_DAEMON_JAVA_OPTS, to set config properties for all daemons (e.g. "-Dx=y")

# - SPARK_DAEMON_CLASSPATH, to set the classpath for all daemons

# - SPARK_PUBLIC_DNS, to set the public dns name of the master or workers

# Options for launcher

# - SPARK_LAUNCHER_OPTS, to set config properties and Java options for the launcher (e.g. "-Dx=y")

# Generic options for the daemons used in the standalone deploy mode

# - SPARK_CONF_DIR Alternate conf dir. (Default: ${SPARK_HOME}/conf)

# - SPARK_LOG_DIR Where log files are stored. (Default: ${SPARK_HOME}/logs)

# - SPARK_LOG_MAX_FILES Max log files of Spark daemons can rotate to. Default is 5.

# - SPARK_PID_DIR Where the pid file is stored. (Default: /tmp)

# - SPARK_IDENT_STRING A string representing this instance of spark. (Default: $USER)

# - SPARK_NICENESS The scheduling priority for daemons. (Default: 0)

# - SPARK_NO_DAEMONIZE Run the proposed command in the foreground. It will not output a PID file.

# Options for native BLAS, like Intel MKL, OpenBLAS, and so on.

# You might get better performance to enable these options if using native BLAS (see SPARK-21305).

# - MKL_NUM_THREADS=1 Disable multi-threading of Intel MKL

# - OPENBLAS_NUM_THREADS=1 Disable multi-threading of OpenBLAS

5.4、spark-defaults.conf

#

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

# Default system properties included when running spark-submit.

# This is useful for setting default environmental settings.

# Example:

# spark.master spark://master:7077

spark.eventLog.enabled true

spark.eventLog.dir hdfs://liebe/spark/history-eventLog

# spark.serializer org.apache.spark.serializer.KryoSerializer

# spark.driver.memory 5g

# spark.executor.extraJavaOptions -XX:+PrintGCDetails -Dkey=value -Dnumbers="one two three"
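The Spark history server only lists applications whose event logs sit in the directory given by spark.history.fs.logDirectory, so that setting and spark.eventLog.dir normally point to the same location. The sketch below extracts both values so they can be compared; the two function names are made up for this example.

```shell
# eventlog_dir
# Reads spark-defaults.conf on stdin and prints the value of
# spark.eventLog.dir (commented-out lines are ignored).
eventlog_dir() {
    awk '$1 == "spark.eventLog.dir" { print $2 }'
}

# history_dir OPTS
# Prints the value of -Dspark.history.fs.logDirectory found in the
# given SPARK_HISTORY_OPTS string.
history_dir() {
    printf '%s\n' "$1" | tr ' ' '\n' |
        sed -n 's/^-Dspark\.history\.fs\.logDirectory=//p'
}
```

A possible check after sourcing spark-env.sh: [ "$(eventlog_dir < "$SPARK_CONF_DIR/spark-defaults.conf")" = "$(history_dir "$SPARK_HISTORY_OPTS")" ] || echo "event log directories differ".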

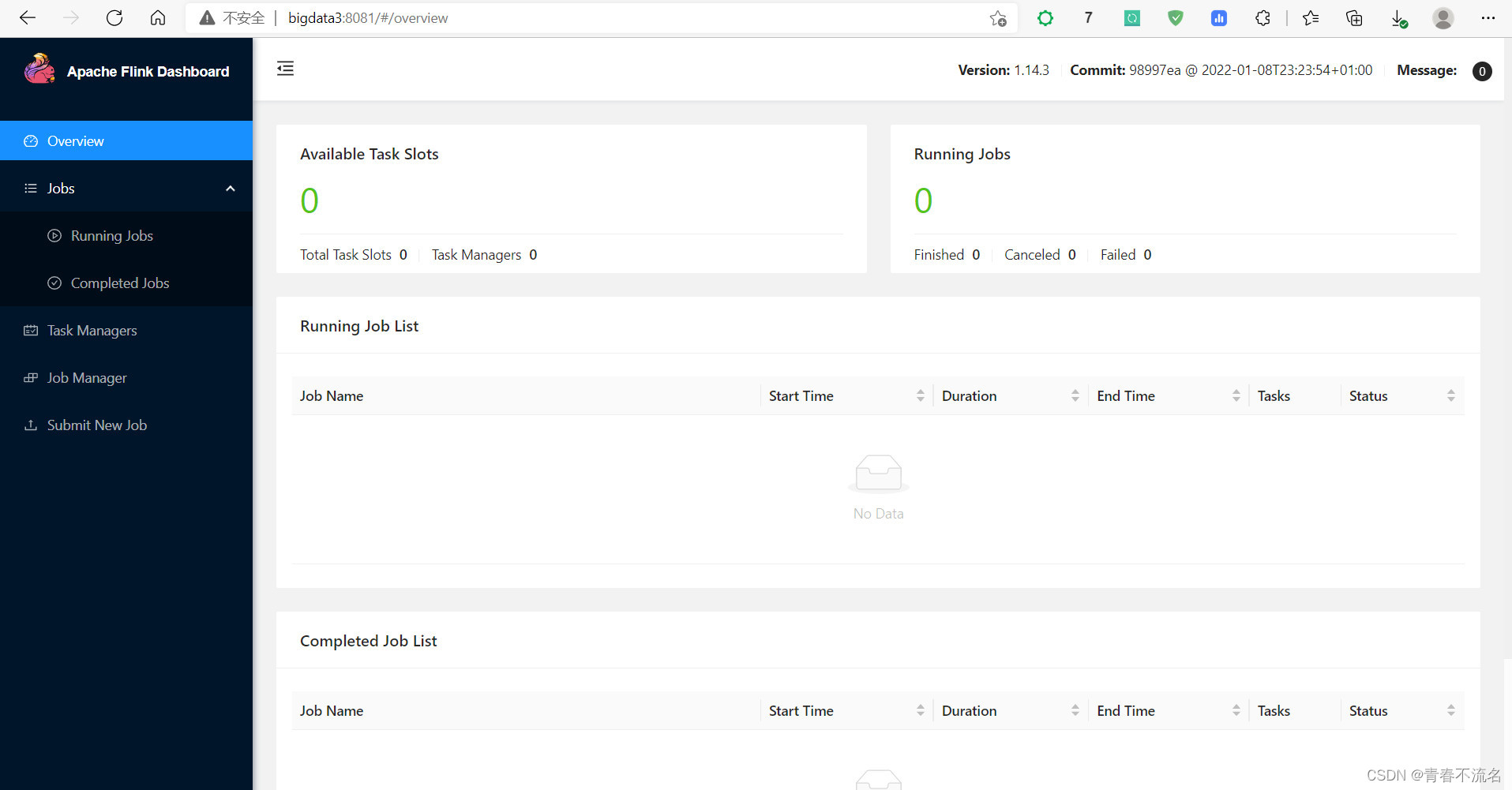

6. Deploy Flink

Install Flink (flink-1.14.3-bin-scala_2.11.tgz)

flink-1.14.3-bin-scala_2.11.tgz hadoop tool zookeeper

Upload the installation package, extract it, and rename the directory

Required jar files: flink-shaded-hadoop-3-uber-3.1.1.7.2.9.0-173-9.0.jar

commons-cli-1.5.0.jar

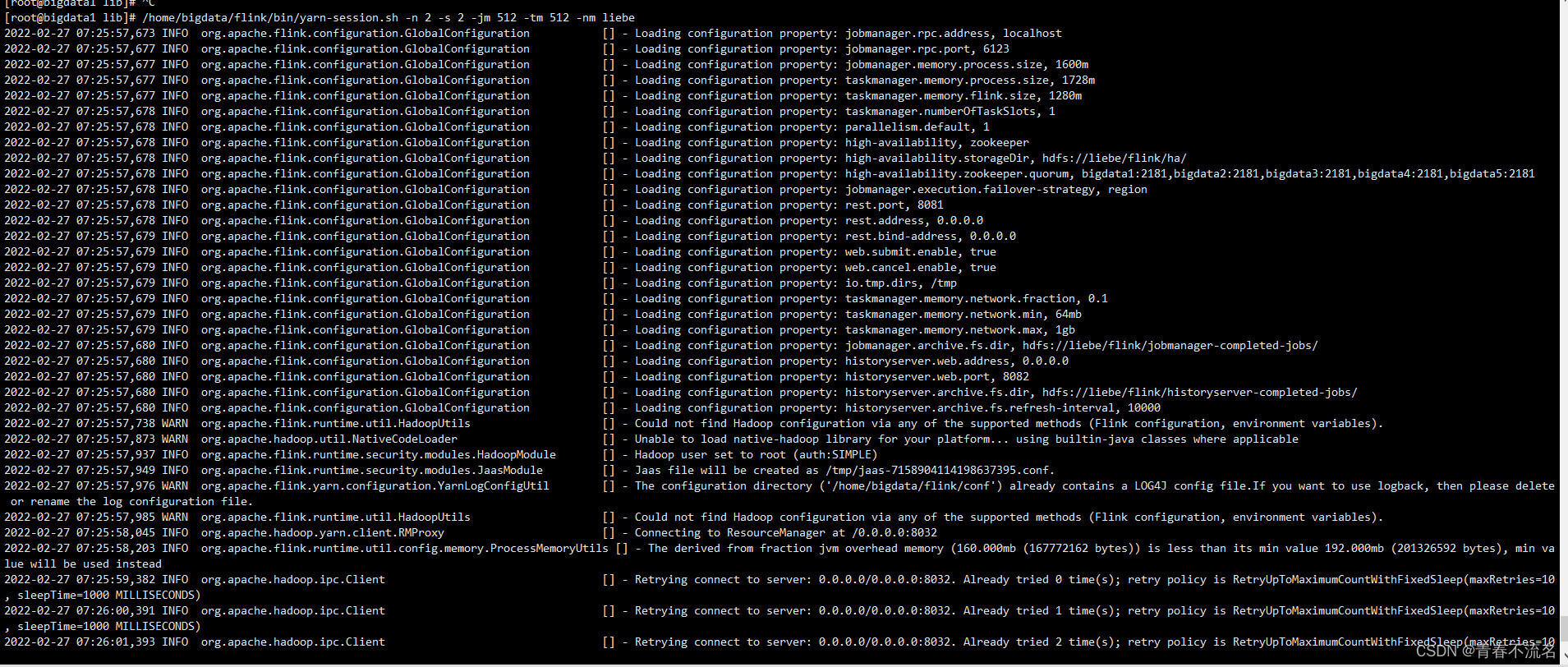

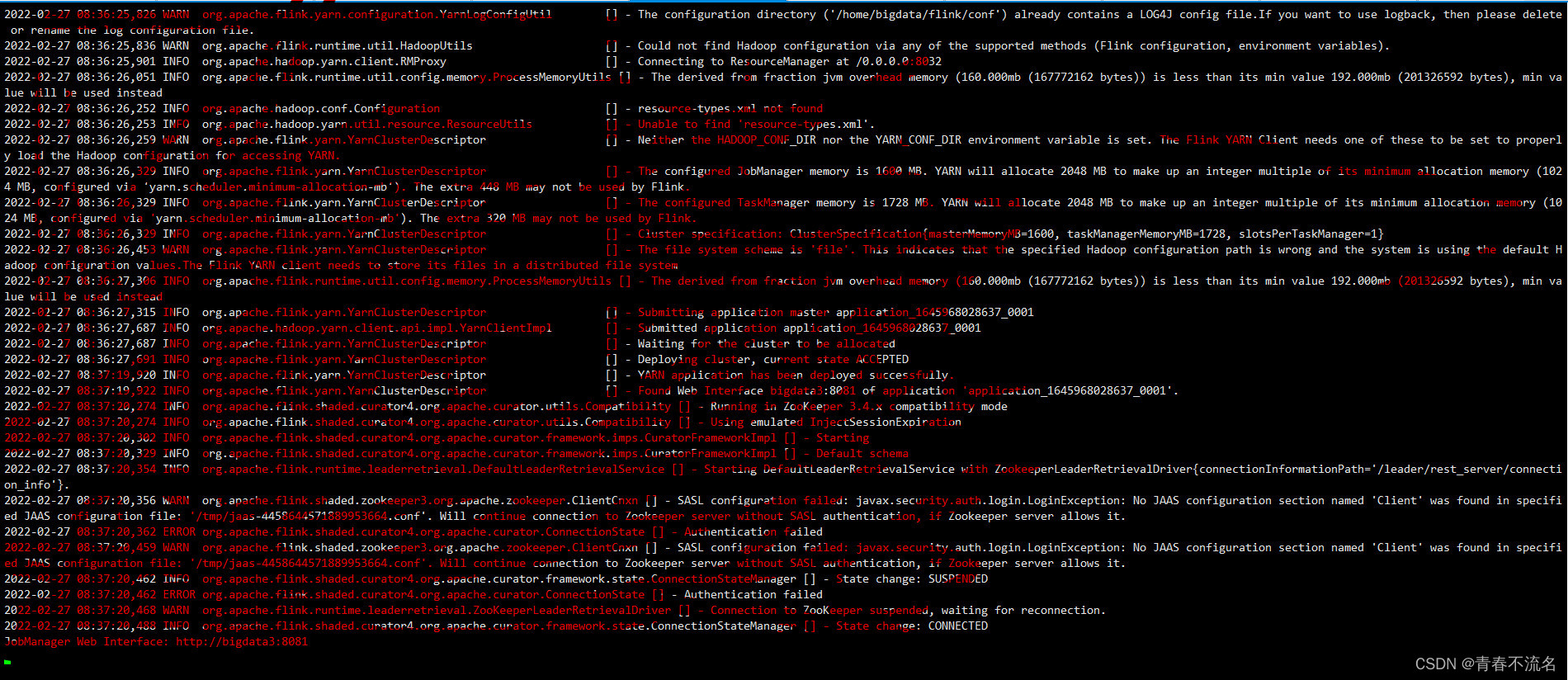

Start Flink in session mode.

/home/bigdata/flink/bin/yarn-session.sh -n 2 -s 2 -jm 512 -tm 512 -nm liebe

Configuration file flink-conf.yaml

################################################################################

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

################################################################################

#==============================================================================

# Common

#==============================================================================

# The external address of the host on which the JobManager runs and can be

# reached by the TaskManagers and any clients which want to connect. This setting

# is only used in Standalone mode and may be overwritten on the JobManager side

# by specifying the --host <hostname> parameter of the bin/jobmanager.sh executable.

# In high availability mode, if you use the bin/start-cluster.sh script and setup

# the conf/masters file, this will be taken care of automatically. Yarn

# automatically configure the host name based on the hostname of the node where the

# JobManager runs.

jobmanager.rpc.address: localhost

# The RPC port where the JobManager is reachable.

jobmanager.rpc.port: 6123

# The total process memory size for the JobManager.

#

# Note this accounts for all memory usage within the JobManager process, including JVM metaspace and other overhead.

jobmanager.memory.process.size: 1600m

# The total process memory size for the TaskManager.

#

# Note this accounts for all memory usage within the TaskManager process, including JVM metaspace and other overhead.

taskmanager.memory.process.size: 1728m

# To exclude JVM metaspace and overhead, please, use total Flink memory size instead of 'taskmanager.memory.process.size'.

# It is not recommended to set both 'taskmanager.memory.process.size' and Flink memory.

#

# taskmanager.memory.flink.size: 1280m

# The number of task slots that each TaskManager offers. Each slot runs one parallel pipeline.

taskmanager.numberOfTaskSlots: 1

# The parallelism used for programs that did not specify and other parallelism.

parallelism.default: 1

# The default file system scheme and authority.

#

# By default file paths without scheme are interpreted relative to the local

# root file system 'file:///'. Use this to override the default and interpret

# relative paths relative to a different file system,

# for example 'hdfs://mynamenode:12345'

#

# fs.default-scheme

#==============================================================================

# High Availability

#==============================================================================

# The high-availability mode. Possible options are 'NONE' or 'zookeeper'.

# Set the high-availability mode; ZooKeeper is used here

high-availability: zookeeper

# The path where metadata for master recovery is persisted. While ZooKeeper stores

# the small ground truth for checkpoint and leader election, this location stores

# the larger objects, like persisted dataflow graphs.

#

# Must be a durable file system that is accessible from all nodes

# (like HDFS, S3, Ceph, nfs, ...)

# File system storage location (required); HDFS is used here. JobManager metadata is persisted under the path set in high-availability.storageDir, and only a pointer to this state is stored in ZooKeeper.

high-availability.storageDir: hdfs://liebe/flink/ha/

# The list of ZooKeeper quorum peers that coordinate the high-availability

# setup. This must be a list of the form:

# "host1:clientPort,host2:clientPort,..." (default clientPort: 2181)

# (required): the ZooKeeper quorum is a replicated group of ZooKeeper servers that provides the distributed coordination service.

high-availability.zookeeper.quorum: bigdata1:2181,bigdata2:2181,bigdata3:2181,bigdata4:2181,bigdata5:2181

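# Each addressX:port refers to a ZooKeeper server that Flink can reach at the given address and port.

# high-availability.zookeeper.path.root (recommended): the root ZooKeeper node, under which all cluster nodes are placed.

high-availability.zookeeper.path.root: /flink

# high-availability.cluster-id (recommended): the cluster-id ZooKeeper node, under which all coordination data required by a cluster is placed.

high-availability.cluster-id: /default_ns # important: customize per cluster

# Important: do not set this value manually when running on YARN, native Kubernetes, or another cluster manager; in those cases a cluster ID is generated automatically. If several Flink HA clusters run on machines without a cluster manager, each cluster must be given its own cluster ID manually.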

# ACL options are based on https://zookeeper.apache.org/doc/r3.1.2/zookeeperProgrammers.html#sc_BuiltinACLSchemes

# It can be either "creator" (ZOO_CREATE_ALL_ACL) or "open" (ZOO_OPEN_ACL_UNSAFE)

# The default value is "open" and it can be changed to "creator" if ZK security is enabled

#

# high-availability.zookeeper.client.acl: open

#==============================================================================

# Fault tolerance and checkpointing

#==============================================================================

# The backend that will be used to store operator state checkpoints if

# checkpointing is enabled. Checkpointing is enabled when execution.checkpointing.interval > 0.

#

# Execution checkpointing related parameters. Please refer to CheckpointConfig and ExecutionCheckpointingOptions for more details.

#

# execution.checkpointing.interval: 3min

# execution.checkpointing.externalized-checkpoint-retention: [DELETE_ON_CANCELLATION, RETAIN_ON_CANCELLATION]

# execution.checkpointing.max-concurrent-checkpoints: 1

# execution.checkpointing.min-pause: 0

# execution.checkpointing.mode: [EXACTLY_ONCE, AT_LEAST_ONCE]

# execution.checkpointing.timeout: 10min

# execution.checkpointing.tolerable-failed-checkpoints: 0

# execution.checkpointing.unaligned: false

#

# Supported backends are 'jobmanager', 'filesystem', 'rocksdb', or the

# <class-name-of-factory>.

#

# state.backend: filesystem

# Directory for checkpoints filesystem, when using any of the default bundled

# state backends.

#

# state.checkpoints.dir: hdfs://namenode-host:port/flink-checkpoints

# Default target directory for savepoints, optional.

#

# state.savepoints.dir: hdfs://namenode-host:port/flink-savepoints

# Flag to enable/disable incremental checkpoints for backends that

# support incremental checkpoints (like the RocksDB state backend).

#

# state.backend.incremental: false

# The failover strategy, i.e., how the job computation recovers from task failures.

# Only restart tasks that may have been affected by the task failure, which typically includes

# downstream tasks and potentially upstream tasks if their produced data is no longer available for consumption.

jobmanager.execution.failover-strategy: region

#==============================================================================

# Rest & web frontend

#==============================================================================

# The port to which the REST client connects to. If rest.bind-port has

# not been specified, then the server will bind to this port as well.

#

rest.port: 8081

# The address to which the REST client will connect to

#

rest.address: 0.0.0.0

# Port range for the REST and web server to bind to.

#

#rest.bind-port: 8080-8090

# The address that the REST & web server binds to

#

rest.bind-address: 0.0.0.0

# Flag to specify whether job submission is enabled from the web-based

# runtime monitor. Uncomment to disable.

web.submit.enable: true

# Flag to specify whether job cancellation is enabled from the web-based

# runtime monitor. Uncomment to disable.

web.cancel.enable: true

#==============================================================================

# Advanced

#==============================================================================

# Override the directories for temporary files. If not specified, the

# system-specific Java temporary directory (java.io.tmpdir property) is taken.

#

# For framework setups on Yarn, Flink will automatically pick up the

# containers' temp directories without any need for configuration.

#

# Add a delimited list for multiple directories, using the system directory

# delimiter (colon ':' on unix) or a comma, e.g.:

# /data1/tmp:/data2/tmp:/data3/tmp

#

# Note: Each directory entry is read from and written to by a different I/O

# thread. You can include the same directory multiple times in order to create

# multiple I/O threads against that directory. This is for example relevant for

# high-throughput RAIDs.

#

io.tmp.dirs: /tmp

# The classloading resolve order. Possible values are 'child-first' (Flink's default)

# and 'parent-first' (Java's default).

#

# Child first classloading allows users to use different dependency/library

# versions in their application than those in the classpath. Switching back

# to 'parent-first' may help with debugging dependency issues.

#

# classloader.resolve-order: child-first

# The amount of memory going to the network stack. These numbers usually need

# no tuning. Adjusting them may be necessary in case of an "Insufficient number

# of network buffers" error. The default min is 64MB, the default max is 1GB.

#

taskmanager.memory.network.fraction: 0.1

taskmanager.memory.network.min: 64mb

taskmanager.memory.network.max: 1gb

#==============================================================================

# Flink Cluster Security Configuration

#==============================================================================

# Kerberos authentication for various components - Hadoop, ZooKeeper, and connectors -

# may be enabled in four steps:

# 1. configure the local krb5.conf file

# 2. provide Kerberos credentials (either a keytab or a ticket cache w/ kinit)

# 3. make the credentials available to various JAAS login contexts

# 4. configure the connector to use JAAS/SASL

# The below configure how Kerberos credentials are provided. A keytab will be used instead of

# a ticket cache if the keytab path and principal are set.

# security.kerberos.login.use-ticket-cache: true

# security.kerberos.login.keytab: /path/to/kerberos/keytab

# security.kerberos.login.principal: flink-user

# The configuration below defines which JAAS login contexts receive the Kerberos credentials

# security.kerberos.login.contexts: Client,KafkaClient

#==============================================================================

# ZK Security Configuration

#==============================================================================

# Below configurations are applicable if ZK ensemble is configured for security

# Override below configuration to provide custom ZK service name if configured

# zookeeper.sasl.service-name: zookeeper

# The configuration below must match one of the values set in "security.kerberos.login.contexts"

# zookeeper.sasl.login-context-name: Client

#==============================================================================

# HistoryServer

#==============================================================================

# The HistoryServer is started and stopped via bin/historyserver.sh (start|stop)

# Directory to upload completed jobs to. Add this directory to the list of

# monitored directories of the HistoryServer as well (see below).

jobmanager.archive.fs.dir: hdfs://liebe/flink/jobmanager-completed-jobs/

# The address under which the web-based HistoryServer listens.

historyserver.web.address: 0.0.0.0

# The port under which the web-based HistoryServer listens.

historyserver.web.port: 8082

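# Comma separated list of directories to monitor for completed jobs. The list includes

# jobmanager.archive.fs.dir so that the archives uploaded by the JobManager are picked up.

historyserver.archive.fs.dir: hdfs://liebe/flink/jobmanager-completed-jobs/,hdfs://liebe/flink/historyserver-completed-jobs/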

# Interval in milliseconds for refreshing the monitored directories.

historyserver.archive.fs.refresh-interval: 10000
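The taskmanager.memory.network.* settings in the configuration above interact: as far as I understand Flink's memory model, the network memory is derived as the fraction of the total Flink memory and then clamped to the min/max range. The sketch below only illustrates that arithmetic. The function name `network_mem_mb` is made up for this example, and the fraction is passed as a percentage to stay within integer arithmetic.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Flink): estimate the network memory in MB.
# Usage: network_mem_mb <total_flink_memory_mb> [fraction_percent] [min_mb] [max_mb]
# Defaults mirror the values in the configuration: 0.1, 64mb, 1gb.
network_mem_mb() {
  local total_mb=$1
  local fraction_pct=${2:-10}
  local min_mb=${3:-64}
  local max_mb=${4:-1024}

  # Derived size: fraction of the total Flink memory (integer division).
  local derived=$(( total_mb * fraction_pct / 100 ))

  # Clamp the derived size into [min_mb, max_mb].
  if [ "$derived" -lt "$min_mb" ]; then
    derived=$min_mb
  elif [ "$derived" -gt "$max_mb" ]; then
    derived=$max_mb
  fi
  echo "$derived"
}
```

For example, `network_mem_mb 4096` stays inside the range, while a very small or very large total hits the lower or upper bound instead.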

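Delete ZooKeeper's version-2 data directory and its PID file. This wipes all state stored in ZooKeeper, so run it only while ZooKeeper is stopped.

rm -rf /home/bigdata/zookeeper/data/version-2/ /home/bigdata/zookeeper/data/zookeeper_server.pid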

Start the Flink YARN session on the node whose ResourceManager is in the Active state

/home/bigdata/flink/bin/yarn-session.sh -n 2 -s 2 -jm 512 -tm 512 -nm liebe
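To decide which node to run this on, the HA state of each ResourceManager can be queried with `yarn rmadmin -getServiceState`. The following is a minimal sketch: the function name `find_active_rm` is made up for this example, and the IDs `rm1` and `rm2` are assumptions that must be replaced with the values of yarn.resourcemanager.ha.rm-ids from yarn-site.xml.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first ResourceManager ID reported as "active".
# Usage: find_active_rm rm1 rm2   (the IDs are assumptions; take them from yarn-site.xml)
find_active_rm() {
  local id state
  for id in "$@"; do
    # "yarn rmadmin -getServiceState <id>" prints "active" or "standby".
    state=$(yarn rmadmin -getServiceState "$id" 2>/dev/null)
    if [ "$state" = "active" ]; then
      echo "$id"
      return 0
    fi
  done
  # No ResourceManager reported itself as active.
  return 1
}
```

Calling `find_active_rm rm1 rm2` prints the active ID; the host behind that ID is where the yarn-session.sh command above would be run.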

The Flink web UI page
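The address of the web UI for a YARN session can be looked up from the application listing. The sketch below filters the output of `yarn application -list` for the session name `liebe` and prints the last column. It assumes the listing is tab-separated with Application-Name in the second column and Tracking-URL in the last one; the function name `tracking_url` is made up for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a "yarn application -list" listing on stdin and
# print the Tracking-URL of the first application with the given name.
# Assumption: columns are tab-separated and padded with spaces.
tracking_url() {
  local app_name=$1
  awk -F'\t' -v name="$app_name" '
    {
      # Strip the padding spaces around every column.
      for (i = 1; i <= NF; i++) gsub(/^[ ]+|[ ]+$/, "", $i)
    }
    # Column 2 is assumed to be Application-Name, the last one Tracking-URL.
    $2 == name { print $NF; exit }
  '
}
```

A typical call would be `yarn application -list -appStates RUNNING | tracking_url liebe`; opening the printed URL in a browser should lead to the Flink dashboard.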
