We want to represent a word with a vector in NLP. There are many methods.

1 one-hot Vector

Represent every word as an ℝ|V|∗1 vector with all 0s and one 1 at the index of that word in the sorted english language. Where V is the set of vocabularies.

2 SVD Based Methods

2.1 Window based Co-occurrence Matrix

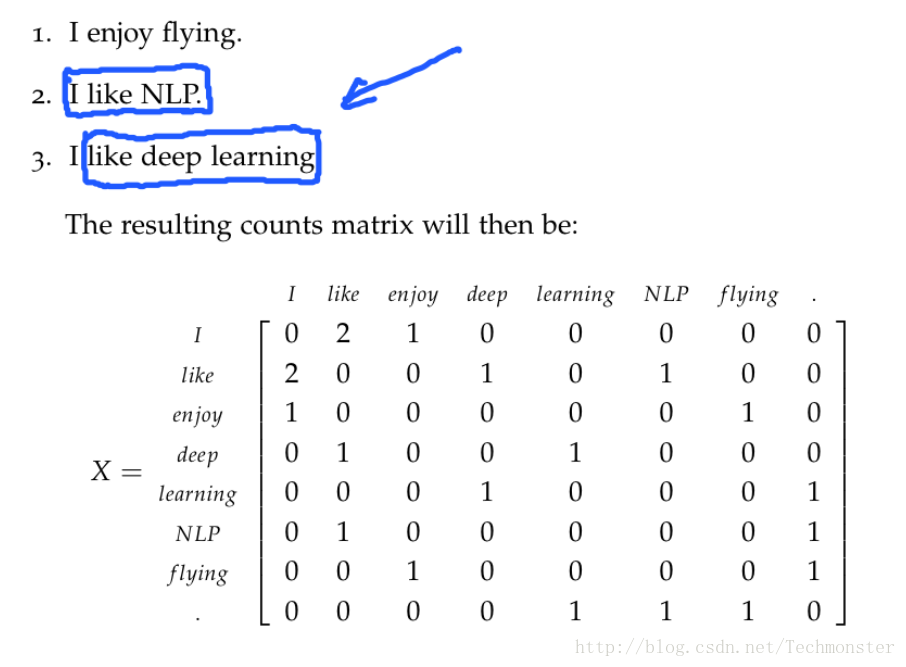

Representing a word by means of its neighbors.

In this method we count the number of times each word appears inside a window of a particular size around the word of interest.

For example:

The matrix is too large. We should make it smaller with SVD.

- Generate

|V|∗|V| co-occurrence matrix, X . - Apply SVD on

X to get X=USVT .- Select the first k columns of

U to get a k -dimensional word vectors. -

∑ki=1σi∑|V|i=1σi indicates the amount of variance captured by the first k dimensions.

- Select the first k columns of

2.2 shortage

SVD based methods do not scale well for big matrices and it is hard to incorporate new words or documents. Computational cost for a

3 Iteration Based Methods - Word2Vec

3.1 Language Models (Unigrams, Bigrams, etc.)

We need to create such a model that will assign a probability to a sequence of tokens.

For example

* The cat jumped over the puddle. —high probability

* Stock boil fish is toy. —low probability

Unigrams:

We can take the unary language model approach and break apart this probability by assuming the word occurrences are completely independent:

最低0.47元/天 解锁文章

最低0.47元/天 解锁文章

783

783

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?