
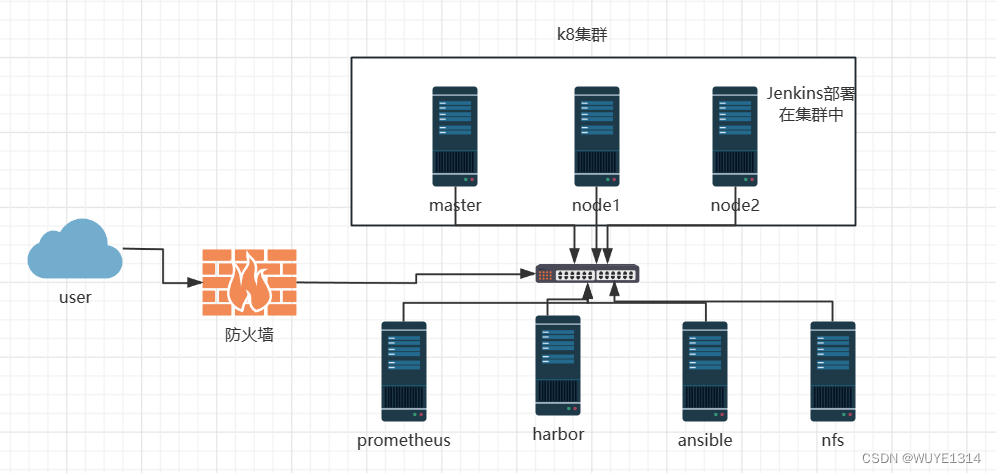

Architecture diagram

Project description

This project simulates a company deploying a Kubernetes-based web-nginx cluster, supported by firewalld, a bastion host, NFS, Prometheus, Jenkins, Harbor and Ansible.

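Project steps

1. Set up an NFS server to provide website data to the web service, and create the related PV and PVC
2. Build a custom nginx-web image
3. Enable HPA for the nginx-web pods
4. Add a MySQL pod to provide database storage for nginx-web
5. Create a private Harbor registry to store images
6. Deploy Jenkins for continuous integration and continuous delivery
7. Use Ingress to load-balance the web services
8. Install Prometheus to monitor the cluster's CPU, memory, network bandwidth, web service, database service, disk I/O, etc.
9. Deploy a firewall to improve cluster security
10. Deploy Ansible to support future cluster maintenance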

Cluster IP addresses

| IP | Role |
| --- | --- |
| 192.168.254.134 | master |
| 192.168.254.135 | node1 |
| 192.168.254.136 | node2 |
| 192.168.254.162 | nfs |
| 192.168.254.162 | harbor |
| 192.168.254.156 | firewalld |
| 192.168.254.157 | ansible |

Project environment

centos:7.9, kubernetes:1.20.6, mysql:5.7.42, Calico:3.18.0, harbor:2.7.3, nfs:v4, docker:20.10.6, Prometheus:2.34.0, Grafana:10.0.0, Jenkins, metrics-server:0.6.3, ingress-nginx-controller:1.1.0

I. Set up an NFS server to provide website data to the web service, and create the related PV and PVC

Install nfs-utils on every k8s node and on the NFS server

yum install nfs-utils -y

Start NFS

service nfs start

Create the shared directory and a test page

[root@nfs ~]# mkdir /web

[root@nfs ~]# cd /web

[root@nfs web]# echo "welcome to wu" >>index.html

[root@nfs web]# echo "welcome to nongda" >>index.html

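Export the shared directory. The client network must cover the nodes (192.168.254.0/24); a node outside the listed network is refused when it tries to mount.

[root@nfs ~]# vim /etc/exports

/web 192.168.254.0/24(rw,no_root_squash,sync)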

Reload the export table (re-export all shared directories)

[root@nfs ~]# exportfs -r
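The client spec in /etc/exports only admits hosts whose address falls inside the listed network. You can run a quick sanity check before mounting. The sketch below tests whether a node IP lies inside a CIDR block. `ip_to_int` and `ip_in_cidr` are hypothetical helper names, and the code assumes well-formed IPv4 input.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: check whether an IPv4 address lies inside a CIDR block,
# e.g. whether a k8s node is covered by the client network in /etc/exports.
# Assumes well-formed dotted-quad input; no validation is done.

# Convert a dotted-quad address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "yes" if $1 is inside the CIDR $2 (for example 192.168.254.0/24), else "no".
ip_in_cidr() {
  local ip=$1 cidr=$2
  local net=${cidr%/*} bits=${cidr#*/}
  # Build the netmask; bash arithmetic is 64-bit, so mask back down to 32 bits.
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  if (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) )); then
    echo yes
  else
    echo no
  fi
}
```

Here are two examples:

- `ip_in_cidr 192.168.254.135 192.168.2.0/24` prints `no`, so node1 would be refused by an export limited to 192.168.2.0/24.
- `ip_in_cidr 192.168.254.135 192.168.254.0/24` prints `yes`.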

Test-mount the share on any node

[root@k8snode1 ~]# mkdir /nfs

[root@k8snode1 ~]# mount 192.168.254.162:/web /nfs

[root@k8snode1 ~]# df -Th|grep nfs

192.168.254.162:/web nfs4 26G 9.7G 17G 38% /nfs

Unmount

[root@k8snode1 ~]# umount /nfs

Create a PV backed by the NFS shared directory

[root@master pv]# ls

nginx-pod.yml pvc-nfs.yml pv-nfs.yml

Create the PV

[root@k8smaster pv]# cat nfs-pv.yml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: web-pv
  labels:
    type: web-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: "/web"
    server: 192.168.254.162
    readOnly: false

[root@k8smaster pv]# kubectl apply -f nfs-pv.yml

persistentvolume/web-pv created

Create the PVC

[root@master pv]# cat pvc-nfs.yml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: web-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs

kubectl apply -f pvc-nfs.yml

persistentvolumeclaim/web-pvc created
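A static PV is bound to a PVC only when the two agree. The sketch below models three of the checks Kubernetes applies:

- the PV and PVC have the same `storageClassName`;
- the PV offers the requested access mode;
- the requested size is no larger than the PV capacity.

This is a simplified illustration, not the real binder, which also considers `volumeMode`, label selectors and more. `can_bind` is a hypothetical name, and sizes are plain Gi integers. The 1Gi claim above binds the whole 10Gi volume.

```bash
#!/usr/bin/env bash
# Hypothetical, simplified model of static PV/PVC matching.
# Arguments: pv_class pv_modes(comma-separated) pv_gi pvc_class pvc_mode pvc_gi
can_bind() {
  local pv_class=$1 pv_modes=$2 pv_gi=$3
  local pvc_class=$4 pvc_mode=$5 pvc_gi=$6
  # 1. storageClassName must be identical
  if [[ $pv_class != "$pvc_class" ]]; then echo no; return 0; fi
  # 2. the PV must offer the requested access mode
  if [[ ",$pv_modes," != *",$pvc_mode,"* ]]; then echo no; return 0; fi
  # 3. the requested size must fit; a smaller claim still gets the whole PV
  if (( pvc_gi > pv_gi )); then echo no; return 0; fi
  echo yes
}
```

`can_bind nfs ReadWriteMany 10 nfs ReadWriteMany 1` prints `yes` for the web-pv / web-pvc pair above. Giving the claim a different class prints `no`.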

Create a Deployment whose pods mount the PVC

[root@k8smaster pv]# cat nginx-pod.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      volumes:
        - name: pv-storage-nfs
          persistentVolumeClaim:
            claimName: web-pvc
      containers:
        - name: pv-container-nfs
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
              name: "http-server"
          volumeMounts:
            - mountPath: "/usr/share/nginx/html"
              name: pv-storage-nfs

[root@k8smaster pv]# kubectl apply -f nginx-pod.yaml

deployment.apps/nginx-deployment created

Access a pod; the page is served from the NFS share

[root@k8snode2 ~]# curl 10.244.104.7

welcome to wu

welcome to nongda

II. Build a custom nginx-web image

[root@node1 Dockerfile]# pwd

/Dockerfile

[root@node1 Dockerfile]# ls

Dockerfile

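Build the image on both node1 and node2. There is no registry yet, so every node that may run the pod needs a local copy of the image.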

[root@node1 Dockerfile]# cat Dockerfile

FROM nginx
WORKDIR /Dockerfile
COPY . /Dockerfile
# No custom startup script yet, so CMD simply runs nginx in the foreground
CMD ["nginx", "-g", "daemon off;"]

[root@node1 Dockerfile]# docker build -t wumyweb:2.0 .

[root@node1 Dockerfile]# docker image ls | grep wumyweb

wumyweb 2.0 4b338287fa2d 40 hours ago 141MB

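Create pods from the new image and expose them with a NodePort Service. As written, the manifest still uses the stock nginx image; switch to the commented-out wumyweb:2.0 line to run the custom image.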

[root@master hpa]# cat nginx-web.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-web
  name: nginx-web
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-web
  template:
    metadata:
      labels:
        app: nginx-web
    spec:
      containers:
        - name: nginx-web
          # image: wumyweb:2.0
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
          resources:
            limits:
              cpu: 30m
            requests:
              cpu: 10m
          # env:
          #   - name: MYSQL_HOST
          #     value: mysql
          #   - name: MYSQL_PORT
          #     value: "3306"
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-web-svc
  name: nginx-web-svc
spec:
  selector:
    app: nginx-web
  type: NodePort
  ports:
    - name: http
      port: 8000
      protocol: TCP
      targetPort: 80
      nodePort: 30001

[root@k8smaster hpa]# kubectl apply -f nginx-web.yml

deployment.apps/nginx-web created

service/nginx-web-svc created

[root@master hpa]# kubectl get pod|grep nginx-web

nginx-web-7878f794cc-4vjb2 1/1 Running 0 37s

nginx-web-7878f794cc-crcd6 1/1 Running 0 37s

nginx-web-7878f794cc-x6w2t 1/1 Running 0 37s

III. Enable HPA for the nginx-web pods to control resource consumption

HPA needs resource metrics, so deploy metrics-server first

Download the components.yaml manifest

wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

Switch the image to the Aliyun mirror and add two arguments:

image: registry.aliyuncs.com/google_containers/metrics-server:v0.6.2
imagePullPolicy: IfNotPresent
args:
  # add the following two arguments
  - --kubelet-insecure-tls
  - --kubelet-preferred-address-types=InternalDNS,InternalIP,ExternalDNS,ExternalIP,Hostname

After the edit, the container section of components.yaml looks like this. Keep a single --kubelet-preferred-address-types flag: when the flag is repeated, only the last value takes effect.

[root@k8smaster ~]# cat components.yaml

spec:
  containers:
  - args:
    - --kubelet-insecure-tls
    - --cert-dir=/tmp
    - --secure-port=4443
    - --kubelet-preferred-address-types=InternalDNS,InternalIP,ExternalIP,Hostname
    - --kubelet-use-node-status-port
    - --metric-resolution=15s
    image: registry.aliyuncs.com/google_containers/metrics-server:v0.6.2
    imagePullPolicy: IfNotPresent

# Apply the manifest

[root@k8smaster metrics]# kubectl apply -f components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

[root@master hpa]# kubectl get pod -n kube-system

metrics-server-769f6c8464-hmgwh 1/1 Running 11 53d

[root@master hpa]# cat hpa.yml

apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: web-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-web
  minReplicas: 1
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 50

[root@master hpa]# kubectl apply -f hpa.yml

horizontalpodautoscaler.autoscaling/web-hpa created

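[root@master hpa]# kubectl get pod -o wide | grep nginx-web

nginx-web-7878f794cc-4vjb2 1/1 Running 0 4m55s 10.244.166.160 node1 <none> <none>

The pods are idle, so the HPA has scaled nginx-web from 3 replicas down to minReplicas (1).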
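The scale-down to one pod follows the HPA rule in the Kubernetes documentation:

- desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization);
- the result is clamped to [minReplicas, maxReplicas];
- CPU utilization is measured against the container's request (10m here).

The sketch below reproduces that arithmetic with integers. It ignores the default 10% tolerance and the scale-down stabilization window. `hpa_desired` and `cpu_utilization` are hypothetical names.

```bash
#!/usr/bin/env bash
# Simplified HPA arithmetic (tolerance and stabilization window ignored).

# Utilization in percent of the CPU request, e.g. 25m used / 10m requested -> 250.
cpu_utilization() {
  local used_m=$1 request_m=$2
  echo $(( used_m * 100 / request_m ))
}

# desired = ceil(current * util / target), clamped to [min, max]
hpa_desired() {
  local current=$1 util=$2 target=$3 min=$4 max=$5
  # integer ceiling division: (a + b - 1) / b
  local desired=$(( (current * util + target - 1) / target ))
  if (( desired < min )); then desired=$min; fi
  if (( desired > max )); then desired=$max; fi
  echo "$desired"
}
```

With 3 idle replicas, `hpa_desired 3 0 50 1 10` gives 1, which matches the single pod above. Under load, a pod using 25m is at `cpu_utilization 25 10` = 250%, and `hpa_desired 1 250 50 1 10` asks for 5 replicas. Because the limit is 30m, a pod can never exceed 300% utilization.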

IV. Add a MySQL pod to provide database storage for nginx-web

[root@master mysql]# cat mysql.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: mysql
  name: mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - image: mysql:5.7.42
          name: mysql
          imagePullPolicy: IfNotPresent
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "123456"
          ports:
            - containerPort: 3306
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: svc-mysql
  name: svc-mysql
spec:
  selector:
    app: mysql
  type: NodePort
  ports:
    - name: mysql
      port: 3306
      protocol: TCP
      targetPort: 3306
      nodePort: 30007

[root@master mysql]# kubectl apply -f mysql.yml

deployment.apps/mysql created

service/svc-mysql created

[root@master mysql]# kubectl get pod

mysql-5f9bccd855-qrqpx 1/1 Running 3 37h

[root@master mysql]# kubectl get svc

svc-mysql NodePort 10.109.205.30 <none> 3306:30007/TCP 37h

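Then add the following env section to the nginx-web container in the Deployment part of /hpa/nginx-web.yml. Environment variables belong to the container spec, not to the Service. MYSQL_HOST must be the Service name, svc-mysql, because cluster DNS resolves Service names rather than Deployment names. The stock nginx image ignores these variables; they are only useful to an application that reads them.

env:
  - name: MYSQL_HOST
    value: svc-mysql
  - name: MYSQL_PORT
    value: "3306"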
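Inside the cluster, the web pods reach MySQL through the Service's DNS name, which follows the pattern `<service>.<namespace>.svc.<cluster-domain>`. The sketch below does two things:

- `svc_fqdn` builds that name, assuming the default `cluster.local` domain and the `default` namespace;
- `wait_for_port` waits until a TCP port answers, using bash's `/dev/tcp` redirection. This is useful, for example, in a container entrypoint that should not start before the database is reachable.

Both are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for reaching the MySQL Service from a pod.

# Build the cluster DNS name of a Service (defaults: namespace "default",
# cluster domain "cluster.local").
svc_fqdn() {
  local svc=$1 ns=${2:-default} domain=${3:-cluster.local}
  echo "${svc}.${ns}.svc.${domain}"
}

# Try to open a TCP connection once per second, up to $3 attempts (default 30).
# Prints "up" and returns 0 on success, prints "down" and returns 1 otherwise.
wait_for_port() {
  local host=$1 port=$2 tries=${3:-30} i
  for (( i = 0; i < tries; i++ )); do
    # bash opens a TCP socket for /dev/tcp/HOST/PORT; the subshell closes it again
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      echo up
      return 0
    fi
    sleep 1
  done
  echo down
  return 1
}
```

For example, `wait_for_port "$(svc_fqdn svc-mysql)" 3306` waits for svc-mysql.default.svc.cluster.local:3306 to accept connections.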

V. Create a private Harbor registry to store images

Harbor requires Docker and Docker Compose to be installed first

# 1. Configure the Aliyun docker-ce repo

yum install -y yum-utils

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# 2. Install Docker

yum install docker-ce-20.10.6 -y

# Start Docker and enable it at boot

systemctl start docker

systemctl enable docker.service

Install Harbor: download the offline installer package (harbor-offline-installer-v2.7.3.tgz) from the official Harbor releases and extract it

[root@nfs harbor]# ls

harbor harbor-offline-installer-v2.7.3.tgz

Edit the configuration file (Harbor 2.x ships it as harbor.yml.tmpl; copy it to harbor.yml first)

[root@nfs harbor]# cat harbor.yml

# Configuration file of Harbor

# The IP address or hostname to access admin UI and registry service.
# DO NOT use localhost or 127.0.0.1, because Harbor needs to be accessed by external clients.
hostname: 192.168.254.162   # set to the IP address of the Harbor host

# http related config
http:
  # port for http, default is 80. If https enabled, this port will redirect to https port
  port: 888   # set to the port Harbor should listen on

# If HTTPS is not used, comment out the whole https block
# https related config
#https:
  # https port for harbor, default is 443
  # port: 443
  # The path of cert and key files for nginx
  # certificate: /your/certificate/path
  # private_key: /your/private/key/path

Run the install script

[root@harbor harbor]# ./install.sh

[+] Running 10/10

⠿ Network harbor_harbor Created 0.7s

⠿ Container harbor-log Started 1.6s

⠿ Container registry Started 5.2s

⠿ Container harbor-db Started 4.9s

⠿ Container harbor-portal Started 5.1s

⠿ Container registryctl Started 4.8s

⠿ Container redis Started 3.9s

⠿ Container harbor-core Started 6.5s

⠿ Container harbor-jobservice Started 9.0s

⠿ Container nginx Started 9.1s

✔ ----Harbor has been installed and started successfully.----

Start Harbor at boot from /etc/rc.local

[root@harbor harbor]# cat /etc/rc.local

#!/bin/bash

# THIS FILE IS ADDED FOR COMPATIBILITY PURPOSES

#

# It is highly advisable to create own systemd services or udev rules

# to run scripts during boot instead of using this file.

#

# In contrast to previous versions due to parallel execution during boot

# this script will NOT be run after all other services.

#

# Please note that you must run 'chmod +x /etc/rc.d/rc.local' to ensure

# that this script will be executed during boot.

touch /var/lock/subsys/local

ulimit -n 1000000

/usr/local/wunginx/sbin/nginx

/usr/local/sbin/docker-compose -f /harbor/harbor/docker-compose.yml up -d

Make rc.local executable

[root@harbor harbor]# chmod +x /etc/rc.local /etc/rc.d/rc.local

Access Harbor in a browser

http://192.168.254.162:888

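On every k8s node, let Docker use the Harbor registry over plain HTTP by listing it under insecure-registries. Then restart Docker (systemctl restart docker) so the change takes effect.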

[root@k8snode2 ~]# cat /etc/docker/daemon.json

{

"registry-mirrors":["https://rsbud4vc.mirror.aliyuncs.com","https://registry.docker-cn.com","https://docker.mirrors.ustc.edu.cn","https://dockerhub.azk8s.cn","http://hub-mirror.c.163.com"],

"insecure-registries":["192.168.254.162:888"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

Log in to Harbor

[root@master hpa]# docker login 192.168.254.162:888

Authenticating with existing credentials...

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
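After logging in, images are pushed under `<registry>/<project>/<name>:<tag>`. The sketch below builds that reference. `harbor_ref` is a hypothetical helper. `library` is the public project Harbor creates by default; any project created in the UI works the same way.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the full Harbor reference for a local image.
harbor_ref() {
  local registry=$1 project=$2 image=$3
  # Docker uses the tag "latest" when none is given
  if [[ $image != *:* ]]; then
    image="${image}:latest"
  fi
  echo "${registry}/${project}/${image}"
}
```

For the image built earlier, push it in three steps:

1. `ref=$(harbor_ref 192.168.254.162:888 library wumyweb:2.0)`
2. `docker tag wumyweb:2.0 "$ref"`
3. `docker push "$ref"`

A Deployment can then use `image: 192.168.254.162:888/library/wumyweb:2.0` instead of relying on a local copy on each node.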

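VI. Deploy Jenkins for continuous integration and continuous delivery

Install git

[root@k8smaster jenkins]# yum install git -y

Download the Jenkins Kubernetes manifests (kubernetes-jenkins-main.zip) from the Git repository and unzip them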

[root@master jenkins]# ls

kubernetes-jenkins-main kubernetes-jenkins-main.zip

[root@master kubernetes-jenkins-main]# ls

deployment.yaml namespace.yaml README.md serviceAccount.yaml service.yaml volume.yaml

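deployment.yaml: deploys Jenkins
namespace.yaml: creates the namespace
README.md: installation guide
serviceAccount.yaml: creates the service account, cluster role and role binding
service.yaml: creates the Service that exposes Jenkins
volume.yaml: creates the storage volume for Jenkins data

Apply them in this order: namespace.yaml, serviceAccount.yaml, volume.yaml, deployment.yaml, service.yaml, for example with `for f in namespace.yaml serviceAccount.yaml volume.yaml deployment.yaml service.yaml; do kubectl apply -f "$f"; done`.

VII. Use Ingress to load-balance the web services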

Files

[root@master ingress]# ls

ingress-controller-deploy.yaml kube-webhook-certgen.tar nginx-svc-1.yaml

ingress.yaml nginx-deployment-nginx-svc-2.yaml

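ingress-controller-deploy.yaml: manifest that deploys the ingress controller
kube-webhook-certgen.tar: the kube-webhook-certgen image saved as a tarball (load it on each node with `docker load -i kube-webhook-certgen.tar`)
nginx-deployment-nginx-svc-2.yaml: creates the nginx-svc-2 Service and its pods
nginx-svc-1.yaml: creates the nginx-svc Service and its pods
ingress.yaml: defines the Ingress

Apply the manifest to create the ingress controller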

[root@k8smaster ingress]# kubectl apply -f ingress-controller-deploy.yaml

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

configmap/ingress-nginx-controller created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

service/ingress-nginx-controller-admission created

service/ingress-nginx-controller created

deployment.apps/ingress-nginx-controller created

ingressclass.networking.k8s.io/nginx created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

serviceaccount/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

Step 2: create the pods and the Service that exposes them

[root@k8smaster ingress]# cat nginx-svc-1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
  labels:
    app: nginx-feng
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-feng
  template:
    metadata:
      labels:
        app: nginx-feng
    spec:
      containers:
        - name: nginx-feng
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
  labels:
    app: nginx-svc
spec:
  selector:
    app: nginx-feng
  ports:
    - name: name-of-service-port
      protocol: TCP
      port: 80
      targetPort: 80

[root@k8smaster ingress]# kubectl apply -f nginx-svc-1.yaml

deployment.apps/nginx-deploy created

service/nginx-svc created

[root@k8smaster ingress]# kubectl describe svc nginx-svc

Check that the Endpoints field lists the pod IPs, which confirms the selector matched the pods

Name: nginx-svc

Namespace: default

Labels: app=nginx-svc

Annotations: <none>

Selector: app=nginx-feng

Type: ClusterIP

IP Families: <none>

IP: 10.98.23.62

IPs: 10.98.23.62

Port: name-of-service-port 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.104.56:80,10.244.104.58:80,10.244.166.147:80

Session Affinity: None

Events: <none>

Access one of the endpoint IPs to check that nginx is serving

[root@master ingress]# curl 10.244.104.56:80

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Create the Ingress, which ties the ingress controller to the Services

# Create a YAML file that defines the Ingress

[root@k8smaster ingress]# cat ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress
spec:
  # spec.ingressClassName selects the nginx ingress controller;
  # no kubernetes.io/ingress.class annotation is needed
  ingressClassName: nginx
  rules:
    - host: www.wu.com
      http:
        paths:
          - pathType: Prefix
            path: /
            backend:
              service:
                name: nginx-svc
                port:
                  number: 80
    - host: www.liu.com
      http:
        paths:
          - pathType: Prefix
            path: /
            backend:
              service:
                name: nginx-svc-2
                port:
                  number: 80

[root@k8smaster ingress]# kubectl apply -f ingress.yaml

ingress.networking.k8s.io/ingress created

Check that the ingress controller's nginx.conf contains the corresponding rules

[root@master ingress]# kubectl get pod -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-4qhpk 0/1 Completed 0 18h

ingress-nginx-admission-patch-k2kqv 0/1 Completed 0 18h

ingress-nginx-controller-6c8ffbbfcf-8vnrk 1/1 Running 1 18h

ingress-nginx-controller-6c8ffbbfcf-bnvqg 1/1 Running 1 18h

[root@master ingress]# kubectl exec -n ingress-nginx -it ingress-nginx-controller-6c8ffbbfcf-8vnrk -- bash

bash-5.1$ cat nginx.conf |grep wu.com

## start server www.wu.com

server_name www.wu.com ;

## end server www.wu.com

bash-5.1$ cat nginx.conf |grep liu.com

## start server www.liu.com

server_name www.liu.com ;

## end server www.liu.com

bash-5.1$ cat nginx.conf|grep -C3 upstream_balancer

error_log /var/log/nginx/error.log notice;

upstream upstream_balancer {

server 0.0.0.1:1234; # placeholder

balancer_by_lua_block {

On another machine, add host entries so that the two domains resolve

[root@zabbix ~]# vim /etc/hosts

[root@zabbix ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.254.134 www.wu.com

192.168.254.134 www.liu.com

[root@nfs harbor]# curl www.wu.com

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

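Requesting www.liu.com returns 503 Service Temporarily Unavailable. The rule points at nginx-svc-2, which has not been created yet, so the controller has no backend endpoints to forward to.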

[root@nfs harbor]# curl www.liu.com

<html>

<head><title>503 Service Temporarily Unavailable</title></head>

<body>

<center><h1>503 Service Temporarily Unavailable</h1></center>

<hr><center>nginx</center>

</body>

</html>
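The 503 comes from the controller, not from the backend pods. The toy model below captures the observable behavior of the Ingress defined above. It is not how ingress-nginx is implemented, and `backend_for_host` and `route` are hypothetical names.

- The Host header selects the Service.
- Unknown hosts fall through to the default backend's 404.
- A Service with no ready endpoints yields 503.

```bash
#!/usr/bin/env bash
# Toy model of name-based routing for the Ingress above (illustration only).

# Map a Host header to the backend Service named in ingress.yaml.
backend_for_host() {
  case $1 in
    www.wu.com)  echo nginx-svc ;;
    www.liu.com) echo nginx-svc-2 ;;
    *)           ;;   # no rule matches
  esac
}

# $1: Host header, $2: number of ready endpoints behind the chosen Service
route() {
  local host=$1 ready_endpoints=$2 svc
  svc=$(backend_for_host "$host")
  if [[ -z $svc ]]; then echo 404; return 0; fi                    # default backend
  if (( ready_endpoints == 0 )); then echo "503 $svc"; return 0; fi # nothing to proxy to
  echo "200 $svc"
}
```

`route www.liu.com 0` prints `503 nginx-svc-2`, which is the situation above. Once nginx-svc-2 and its pods exist, `route www.liu.com 3` prints `200 nginx-svc-2`.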

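Start the second Service and its pods, which keep their web content on NFS through a PV and PVC

This requires the NFS server, PV and PVC prepared in the NFS step at the beginning.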


[root@k8smaster ingress]# cat nginx-deployment-nginx-svc-2.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  # same name as the Deployment from the NFS step: delete that one first or rename this one
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-feng-2
  template:
    metadata:
      labels:
        app: nginx-feng-2
    spec:
      volumes:
        - name: pv-storage-nfs
          persistentVolumeClaim:
            claimName: web-pvc
      containers:
        - name: pv-container-nfs
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
              name: "http-server"
          volumeMounts:
            - mountPath: "/usr/share/nginx/html"
              name: pv-storage-nfs
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc-2
  labels:
    app: nginx-svc-2
spec:
  selector:
    app: nginx-feng-2
  ports:
    - name: name-of-service-port
      protocol: TCP
      port: 80
      targetPort: 80

[root@k8smaster ingress]# kubectl apply -f nginx-deployment-nginx-svc-2.yaml

deployment.apps/nginx-deployment created

service/nginx-svc-2 created

[root@master ingress]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress nginx www.wu.com,www.liu.com 192.168.254.135,192.168.254.136 80 18h

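VIII. Install Prometheus to monitor the cluster's CPU, memory, network bandwidth, web service, database service, disk I/O, etc.

1. Pull the images on every k8s node in advance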

docker pull prom/node-exporter

docker pull prom/prometheus:v2.0.0

docker pull grafana/grafana:6.1.4

Only the master's operations are shown; node1 and node2 are identical

[root@k8smaster ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

prom/node-exporter latest 1dbe0e931976 18 months ago 20.9MB

grafana/grafana 6.1.4 d9bdb6044027 4 years ago 245MB

prom/prometheus v2.0.0 67141fa03496 5 years ago 80.2MB

2. Deploy node-exporter as a DaemonSet

[root@master pro]# ll

total 36
-rw-r--r-- 1 root root 5638 Oct 10 15:34 configmap.yaml
-rw-r--r-- 1 root root 1514 Oct 10 16:11 grafana-deploy.yaml
-rw-r--r-- 1 root root 256 Oct 10 16:12 grafana-ing.yaml
-rw-r--r-- 1 root root 225 Oct 10 16:14 grafana-svc.yaml
-rw-r--r-- 1 root root 711 Oct 10 15:30 node-exporter.yaml
-rw-r--r-- 1 root root 233 Oct 10 15:36 prometheus.svc.yml
-rw-r--r-- 1 root root 1103 Oct 10 15:35 prometheus.yaml
-rw-r--r-- 1 root root 716 Oct 10 15:33 rbac-setup.yaml

[root@k8smaster pro]# cat node-exporter.yaml

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: kube-system
  labels:
    k8s-app: node-exporter
spec:
  selector:
    matchLabels:
      k8s-app: node-exporter
  template:
    metadata:
      labels:
        k8s-app: node-exporter
    spec:
      containers:
        - image: prom/node-exporter
          name: node-exporter
          ports:
            - containerPort: 9100
              protocol: TCP
              name: http
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: node-exporter
  name: node-exporter
  namespace: kube-system
  annotations:
    # lets the kubernetes-service-endpoints job in configmap.yaml scrape this Service
    prometheus.io/scrape: "true"
spec:
  ports:
    - name: http
      port: 9100
      nodePort: 31672
      protocol: TCP
  type: NodePort
  selector:
    k8s-app: node-exporter

[root@k8smaster pro]# kubectl apply -f node-exporter.yaml

daemonset.apps/node-exporter created

service/node-exporter created

[root@k8smaster prometheus]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system node-exporter-fcmx5 1/1 Running 0 47s

kube-system node-exporter-qccwb 1/1 Running 0 47s

[root@k8smaster pro]# kubectl get daemonset -A

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-system calico-node 3 3 3 3 3 kubernetes.io/os=linux 7d

kube-system kube-proxy 3 3 3 3 3 kubernetes.io/os=linux 7d

kube-system node-exporter 2 2 2 2 2 <none> 2m29s

[root@k8smaster prometheus]# kubectl get service -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-system node-exporter NodePort 10.111.247.142 <none> 9100:31672/TCP 3m24s
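node-exporter serves Prometheus's text format: `# HELP` and `# TYPE` comment lines, then one `name{labels} value` sample per line. The sketch below lets you spot-check a value from a shell. It prints the first sample of a metric read from stdin. `metric_value` is a hypothetical helper, and it assumes samples carry no trailing timestamp (node-exporter normally emits none).

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the value of the first sample named $1
# from Prometheus text-format input on stdin.
metric_value() {
  awk -v n="$1" '
    /^#/ { next }                 # skip HELP/TYPE comments
    NF == 0 { next }              # skip blank lines
    {
      key = $1
      sub(/\{.*/, "", key)        # strip the label set, if any
      if (key == n) { print $NF; exit }   # value is the last field
    }'
}
```

For example: `curl -s http://192.168.254.135:31672/metrics | metric_value node_load1`.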

3. Deploy Prometheus

[root@k8smaster prometheus]# cat rbac-setup.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
  - apiGroups: [""]
    resources:
      - nodes
      - nodes/proxy
      - services
      - endpoints
      - pods
    verbs: ["get", "list", "watch"]
  - apiGroups:
      - extensions
    resources:
      - ingresses
    verbs: ["get", "list", "watch"]
  - nonResourceURLs: ["/metrics"]
    verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
  - kind: ServiceAccount
    name: prometheus
    namespace: kube-system

[root@k8smaster prometheus]# kubectl apply -f rbac-setup.yaml

clusterrole.rbac.authorization.k8s.io/prometheus created

serviceaccount/prometheus created

clusterrolebinding.rbac.authorization.k8s.io/prometheus created

[root@k8smaster pro]# cat configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-system
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
    scrape_configs:
    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
    - job_name: 'kubernetes-nodes'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics
    - job_name: 'kubernetes-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
    - job_name: 'kubernetes-services'
      kubernetes_sd_configs:
      - role: service
      metrics_path: /probe
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__address__]
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        target_label: kubernetes_name
    - job_name: 'kubernetes-ingresses'
      kubernetes_sd_configs:
      - role: ingress
      relabel_configs:
      - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
        regex: (.+);(.+);(.+)
        replacement: ${1}://${2}${3}
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_ingress_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_ingress_name]
        target_label: kubernetes_name
    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name

[root@k8smaster pro]# kubectl apply -f configmap.yaml

configmap/prometheus-config created
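The `kubernetes-service-endpoints` and `kubernetes-pods` jobs keep only targets annotated with `prometheus.io/scrape: "true"`. Their `__address__` rule swaps the discovered port for the one given in the `prometheus.io/port` annotation:

1. Prometheus joins the source labels with `;`.
2. It matches the result against the fully anchored regex `([^:]+)(?::\d+)?;(\d+)`.
3. It writes `$1:$2`.

The sketch below reproduces that step with `sed -E` so it can be tried locally. POSIX ERE has no `(?:...)` group, so the optional port becomes an ordinary group and the annotation port moves to `\3`. `rewrite_address` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring the __address__ relabel rule in configmap.yaml.
# $1: discovered address (host or host:port), $2: prometheus.io/port annotation.
rewrite_address() {
  local address=$1 annotated_port=$2 out
  # source_labels are joined with ";" before matching, e.g. "10.244.1.5:80;9100"
  out=$(printf '%s;%s\n' "$address" "$annotated_port" |
    sed -E -n 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/p')
  # no match (e.g. no port annotation): Prometheus leaves __address__ unchanged
  echo "${out:-$address}"
}
```

A pod discovered on port 80 but annotated with port 9113 becomes `10.244.166.160:9113` (`rewrite_address 10.244.166.160:80 9113`). Without the annotation, the address stays as discovered.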

[root@k8smaster pro]# cat prometheus.deploy.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: prometheus-deployment
  name: prometheus
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
        - image: prom/prometheus:v2.0.0
          name: prometheus
          command:
            - "/bin/prometheus"
          args:
            - "--config.file=/etc/prometheus/prometheus.yml"
            - "--storage.tsdb.path=/prometheus"
            - "--storage.tsdb.retention=24h"
          ports:
            - containerPort: 9090
              protocol: TCP
          volumeMounts:
            - mountPath: "/prometheus"
              name: data
            - mountPath: "/etc/prometheus"
              name: config-volume
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
            limits:
              cpu: 500m
              memory: 2500Mi
      serviceAccountName: prometheus
      volumes:
        - name: data
          emptyDir: {}
        - name: config-volume
          configMap:
            name: prometheus-config

[root@k8smaster pro]# kubectl apply -f prometheus.deploy.yml

deployment.apps/prometheus created

[root@k8smaster pro]# cat prometheus.svc.yml

kind: Service
apiVersion: v1
metadata:
  labels:
    app: prometheus
  name: prometheus
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 9090
      targetPort: 9090
      nodePort: 30003
  selector:
    app: prometheus

[root@k8smaster prometheus]# kubectl apply -f prometheus.svc.yml

service/prometheus created

4. Deploy Grafana

[root@k8smaster pro]# cat grafana-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana-core
  namespace: kube-system
  labels:
    app: grafana
    component: core
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
        component: core
    spec:
      containers:
        - image: grafana/grafana:6.1.4
          name: grafana-core
          imagePullPolicy: IfNotPresent
          resources:
            # keep request = limit to keep this container in guaranteed class
            limits:
              cpu: 100m
              memory: 100Mi
            requests:
              cpu: 100m
              memory: 100Mi
          env:
            # The following env variables set up basic auth with the default admin user and admin password.
            - name: GF_AUTH_BASIC_ENABLED
              value: "true"
            - name: GF_AUTH_ANONYMOUS_ENABLED
              value: "false"
            # - name: GF_AUTH_ANONYMOUS_ORG_ROLE
            #   value: Admin
            # does not really work, because of template variables in exported dashboards:
            # - name: GF_DASHBOARDS_JSON_ENABLED
            #   value: "true"
          readinessProbe:
            httpGet:
              path: /login
              port: 3000
            # initialDelaySeconds: 30
            # timeoutSeconds: 1
          # volumeMounts:   # not mounted for now
          # - name: grafana-persistent-storage
          #   mountPath: /var
      # volumes:
      # - name: grafana-persistent-storage
      #   emptyDir: {}

[root@k8smaster pro]# kubectl apply -f grafana-deploy.yaml

deployment.apps/grafana-core created

[root@k8smaster pro]# cat grafana-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: kube-system
  labels:
    app: grafana
    component: core
spec:
  type: NodePort
  ports:
    - port: 3000
  selector:
    app: grafana
    component: core

[root@k8smaster pro]# kubectl apply -f grafana-svc.yaml

service/grafana created

[root@k8smaster pro]# cat grafana-ing.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: grafana
  namespace: kube-system
spec:
  rules:
    - host: k8s.grafana
      http:
        paths:
          - path: /
            backend:
              serviceName: grafana
              servicePort: 3000

[root@k8smaster pro]# kubectl apply -f grafana-ing.yaml

Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

ingress.extensions/grafana created

Check and test

[root@k8smaster pro]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system grafana-core-78958d6d67-49c56 1/1 Running 0 31m

kube-system node-exporter-fcmx5 1/1 Running 0 9m33s

kube-system node-exporter-qccwb 1/1 Running 0 9m33s

kube-system prometheus-68546b8d9-qxsm7 1/1 Running 0 2m47s

[root@k8smaster mysql]# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-system grafana NodePort 10.110.87.158 <none> 3000:31370/TCP 31m

kube-system node-exporter NodePort 10.111.247.142 <none> 9100:31672/TCP 39m

kube-system prometheus NodePort 10.102.0.186 <none> 9090:30003/TCP 32m

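View the metrics collected by node-exporter (a NodePort Service answers on any node IP)

http://192.168.254.134:31672/metrics

Prometheus web UI

http://192.168.254.134:30003

Grafana web UI (default login admin/admin)

http://192.168.254.134:31370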

IX. Deploy a firewall to strengthen cluster security

# Write the firewall policy script

[root@firewalld ~]# cat snat_dnat.sh

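#!/bin/bash
# enable IP forwarding so this host can route between the internal and external networks
# (not persistent: also set net.ipv4.ip_forward = 1 in /etc/sysctl.conf to survive a reboot)
echo 1 >/proc/sys/net/ipv4/ip_forward
# stop and disable firewalld so it does not interfere with the raw iptables rules below
systemctl stop firewalld
systemctl disable firewalld
# flush the existing filter and nat rules
iptables -F
iptables -t nat -F
# SNAT: masquerade traffic from the internal 192.168.254.0/24 network leaving through ens33
iptables -t nat -A POSTROUTING -s 192.168.254.0/24 -o ens33 -j MASQUERADE
# DNAT: forward port 2233 of the external address 192.168.1.3 to SSH (22) on the master
iptables -t nat -A PREROUTING -d 192.168.1.3 -i ens33 -p tcp --dport 2233 -j DNAT --to-destination 192.168.254.134:22
# DNAT: publish the web service (port 80) of the master on the external address
iptables -t nat -A PREROUTING -d 192.168.1.3 -i ens33 -p tcp --dport 80 -j DNAT --to-destination 192.168.254.134:80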
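snat_dnat.sh hard-codes one iptables line per published port. When more services need to be exposed, it may be easier to keep the port mappings in a small table and generate the DNAT rules from it.

The sketch below only prints the commands, so they can be reviewed before anything is applied. About its inputs:

- The table format and the function name `gen_dnat_rules` are hypothetical.
- 192.168.1.3 and ens33 are taken from the script above.
- Both values can be overridden through `PUBLIC_IP` and `WAN_IF`.

```shell
#!/bin/bash
# Sketch: generate the DNAT lines of snat_dnat.sh from a port-mapping table read on stdin.
# Hypothetical table format: <public port> <internal ip>:<internal port>; '#' starts a comment.
# The function only prints iptables commands; nothing is applied here.
PUBLIC_IP="${PUBLIC_IP:-192.168.1.3}"   # external address of the firewall, as in snat_dnat.sh
WAN_IF="${WAN_IF:-ens33}"               # external interface, as in snat_dnat.sh

gen_dnat_rules() {
  local public_port target
  while read -r public_port target; do
    case "$public_port" in
      ''|'#'*) continue ;;             # skip blank lines and comment lines
    esac
    echo "iptables -t nat -A PREROUTING -d $PUBLIC_IP -i $WAN_IF -p tcp --dport $public_port -j DNAT --to-destination $target"
  done
}
```

For example, `gen_dnat_rules < port_map.txt | bash` would apply the rules. Here `port_map.txt` is a hypothetical file containing lines such as `2233 192.168.254.134:22` and `80 192.168.254.134:80`, which reproduce the two DNAT rules above.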

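On the master, write the rules that control which ports accept incoming traffic:

[root@k8smaster ~]# cat open_app.sh

#!/bin/bash
# The INPUT policy is set to DROP at the end, so every port the master needs must be
# accepted explicitly; without the Kubernetes rules below, other nodes could no longer
# reach the kube-apiserver, the kubelet or NodePort services.
# allow loopback traffic and replies to connections the master itself opened
iptables -t filter -A INPUT -i lo -j ACCEPT
iptables -t filter -A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT
# open ssh
iptables -t filter -A INPUT -p tcp --dport 22 -j ACCEPT
# open dns (only from the cluster network)
iptables -t filter -A INPUT -p udp --dport 53 -s 192.168.254.0/24 -j ACCEPT
# open dhcp
iptables -t filter -A INPUT -p udp --dport 67 -j ACCEPT
# open http/https
iptables -t filter -A INPUT -p tcp --dport 80 -j ACCEPT
iptables -t filter -A INPUT -p tcp --dport 443 -j ACCEPT
# open mysql
iptables -t filter -A INPUT -p tcp --dport 3306 -j ACCEPT
# Kubernetes: kube-apiserver (6443), etcd (2379-2380), kubelet (10250),
# Calico BGP (179) and the default NodePort range (30000-32767)
iptables -t filter -A INPUT -p tcp -m multiport --dports 6443,2379:2380,10250,179,30000:32767 -j ACCEPT
# drop icmp echo requests (ping); already covered by the DROP policy, kept to make the intent explicit
iptables -t filter -A INPUT -p icmp --icmp-type 8 -j DROP
# default policy DROP (set last, after the ACCEPT rules are in place)
iptables -t filter -P INPUT DROP

X. Deploy Ansible to support future cluster maintenance

1. Set up passwordless SSH: generate a key pair on the ansible host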

[root@ansible ~]# ssh-keygen -t ecdsa

Generating public/private ecdsa key pair.

Enter file in which to save the key (/root/.ssh/id_ecdsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_ecdsa.

Your public key has been saved in /root/.ssh/id_ecdsa.pub.

The key fingerprint is:

SHA256:FNgCSDVk6i3foP88MfekA2UzwNn6x3kyi7V+mLdoxYE root@ansible

The key's randomart image is:

+---[ECDSA 256]---+

| ..+*o =. |

| .o .* o. |

| . +. . |

| . . ..= E . |

| o o +S+ o . |

| + o+ o O + |

| . . .= B X |

| . .. + B.o |

| ..o. +oo.. |

+----[SHA256]-----+

[root@ansible ~]# cd /root/.ssh

[root@ansible .ssh]# ls

id_ecdsa id_ecdsa.pub

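Copy the public key into the root user's home directory (~/.ssh/authorized_keys) on every server. Every server must have the SSH service running, port 22 open and root login allowed. Start with k8smaster: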

[root@ansible .ssh]# ssh-copy-id -i id_ecdsa.pub root@192.168.254.134

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "id_ecdsa.pub"

The authenticity of host '192.168.254.134 (192.168.254.134)' can't be established.

ECDSA key fingerprint is SHA256:l7LRfACELrI6mU2XvYaCz+sDBWiGkYnAecPgnxJxdvE.

ECDSA key fingerprint is MD5:b6:f7:e1:c5:23:24:5c:16:1f:66:42:ba:80:a6:3c:fd.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.254.134's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.254.134'"

and check to make sure that only the key(s) you wanted were added.

# Copy the public key to the k8s nodes

[root@ansible .ssh]# ssh-copy-id -i id_ecdsa.pub root@192.168.254.135

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "id_ecdsa.pub"

The authenticity of host '192.168.254.135 (192.168.254.135)' can't be established.

ECDSA key fingerprint is SHA256:l7LRfACELrI6mU2XvYaCz+sDBWiGkYnAecPgnxJxdvE.

ECDSA key fingerprint is MD5:b6:f7:e1:c5:23:24:5c:16:1f:66:42:ba:80:a6:3c:fd.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.2.111's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.254.135'"

and check to make sure that only the key(s) you wanted were added.

[root@ansible .ssh]# ssh-copy-id -i id_ecdsa.pub root@192.168.254.136

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "id_ecdsa.pub"

The authenticity of host '192.168.254.136 (192.168.254.136)' can't be established.

ECDSA key fingerprint is SHA256:l7LRfACELrI6mU2XvYaCz+sDBWiGkYnAecPgnxJxdvE.

ECDSA key fingerprint is MD5:b6:f7:e1:c5:23:24:5c:16:1f:66:42:ba:80:a6:3c:fd.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.2.112's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.254.136'"

and check to make sure that only the key(s) you wanted were added.
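The key is copied one host at a time above, and only to the master and the two nodes. The inventory written below also lists 192.168.254.162 (nfs/harbor), so that host needs the key as well.

A small loop keeps this repeatable. A few details about it:

- The key itself can be generated non-interactively with `ssh-keygen -t ecdsa -N '' -f /root/.ssh/id_ecdsa`.
- The function name `copy_key_to_hosts` and the `SSH_COPY_ID` override are hypothetical. The override exists only so the loop can be dry-run.
- `ssh-copy-id` still asks for each root password once.

```shell
#!/bin/bash
# Sketch: copy the ansible host's public key to several servers in one loop.
# ssh-copy-id still prompts once per host for the root password.
KEY="${KEY:-/root/.ssh/id_ecdsa.pub}"   # public key generated with ssh-keygen above

copy_key_to_hosts() {
  local host
  for host in "$@"; do
    # SSH_COPY_ID (hypothetical) lets the real command be swapped out, e.g. for echo in a dry run
    "${SSH_COPY_ID:-ssh-copy-id}" -i "$KEY" "root@$host" || echo "failed: $host" >&2
  done
}
```

A full run is `copy_key_to_hosts 192.168.254.134 192.168.254.135 192.168.254.136 192.168.254.162`. Prefixing the call with `SSH_COPY_ID=echo` only prints what would be executed.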

Install Ansible (on CentOS 7 the package comes from the EPEL repository)

[root@ansible .ssh]# yum install epel-release -y

[root@ansible .ssh]# yum install ansible -y

[root@ansible ~]# ansible --version

ansible 2.9.27

config file = /etc/ansible/ansible.cfg

configured module search path = [u'/root/.ansible/plugins/modules', u'/usr/share/ansible/plugins/modules']

ansible python module location = /usr/lib/python2.7/site-packages/ansible

executable location = /usr/bin/ansible

python version = 2.7.5 (default, Oct 14 2020, 14:45:30) [GCC 4.8.5 20150623 (Red Hat 4.8.5-44)]

Write the host inventory (/etc/ansible/hosts)

[root@ansible .ssh]# cd /etc/ansible

[root@ansible ansible]# ls

ansible.cfg hosts roles

[root@ansible ansible]# vim hosts

[k8smaster]

192.168.254.134

[k8snode]

192.168.254.135

192.168.254.136

[nfs]

192.168.254.162

[harbor]

192.168.254.162
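Ansible itself can confirm how it resolves these groups:

- `ansible k8snode --list-hosts` prints the hosts of one group.
- `ansible all -m ping` checks that the passwordless SSH set up earlier works on every host.

To illustrate how the INI format maps group headers to hosts, here is a rough shell sketch. Note that 192.168.254.162 appears under both [nfs] and [harbor]. `inventory_hosts` is a hypothetical helper, not part of Ansible. It ignores host variables and [group:children] / [group:vars] sections, so it does not replace the ansible commands above.

```shell
#!/bin/bash
# Sketch: print the hosts listed under one group of an INI-style Ansible inventory.
# Simplified on purpose: host variables, [group:children] and [group:vars] are ignored.
inventory_hosts() {
  local group="$1" file="${2:-/etc/ansible/hosts}"
  awk -v g="[$group]" '
    /^[ \t]*$/     { next }                        # skip blank lines
    /^[ \t]*[#;]/  { next }                        # skip comment lines
    /^\[/          { in_group = ($1 == g); next }  # a new [section] starts here
    in_group       { print $1 }                    # first field is the host name or IP
  ' "$file"
}
```

For example, `inventory_hosts k8snode` should list 192.168.254.135 and 192.168.254.136 from the inventory above.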
