I. Installing ZooKeeper separately

1. Install ZooKeeper (since Kafka 2.8.0 a separate ZooKeeper installation is not required)

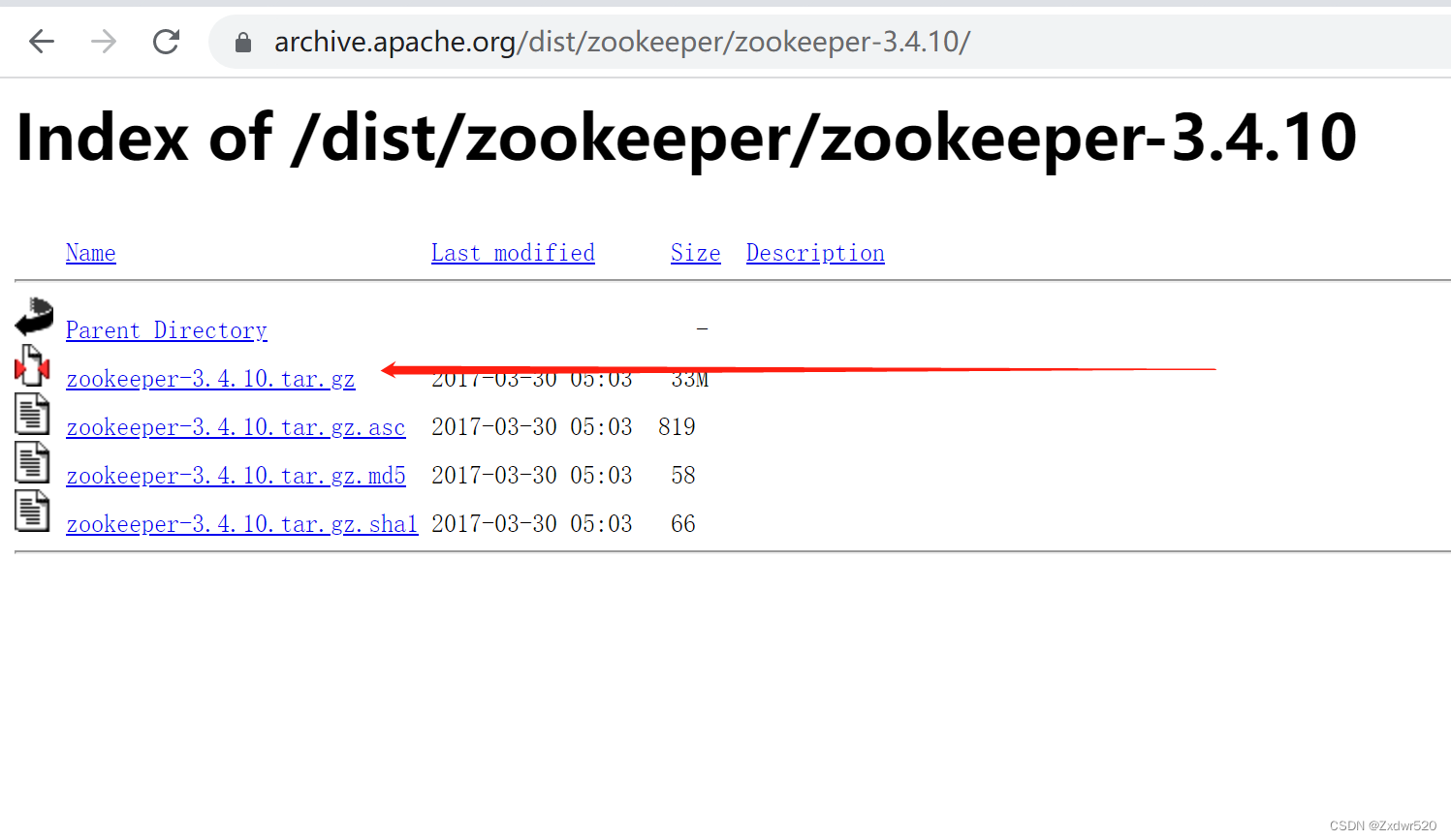

Download the installation files: https://archive.apache.org/dist/zookeeper/zookeeper-3.4.10/

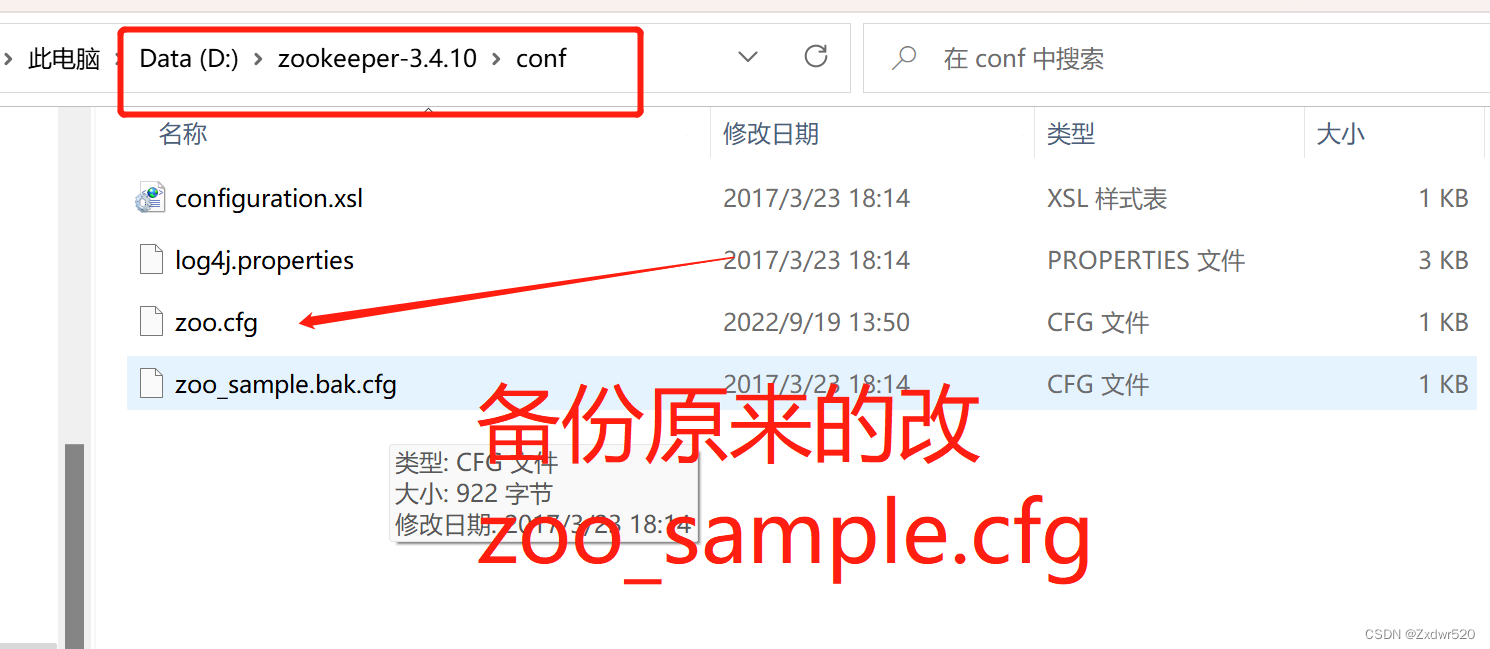

After extracting, go to the conf directory and rename zoo_sample.cfg to zoo.cfg.

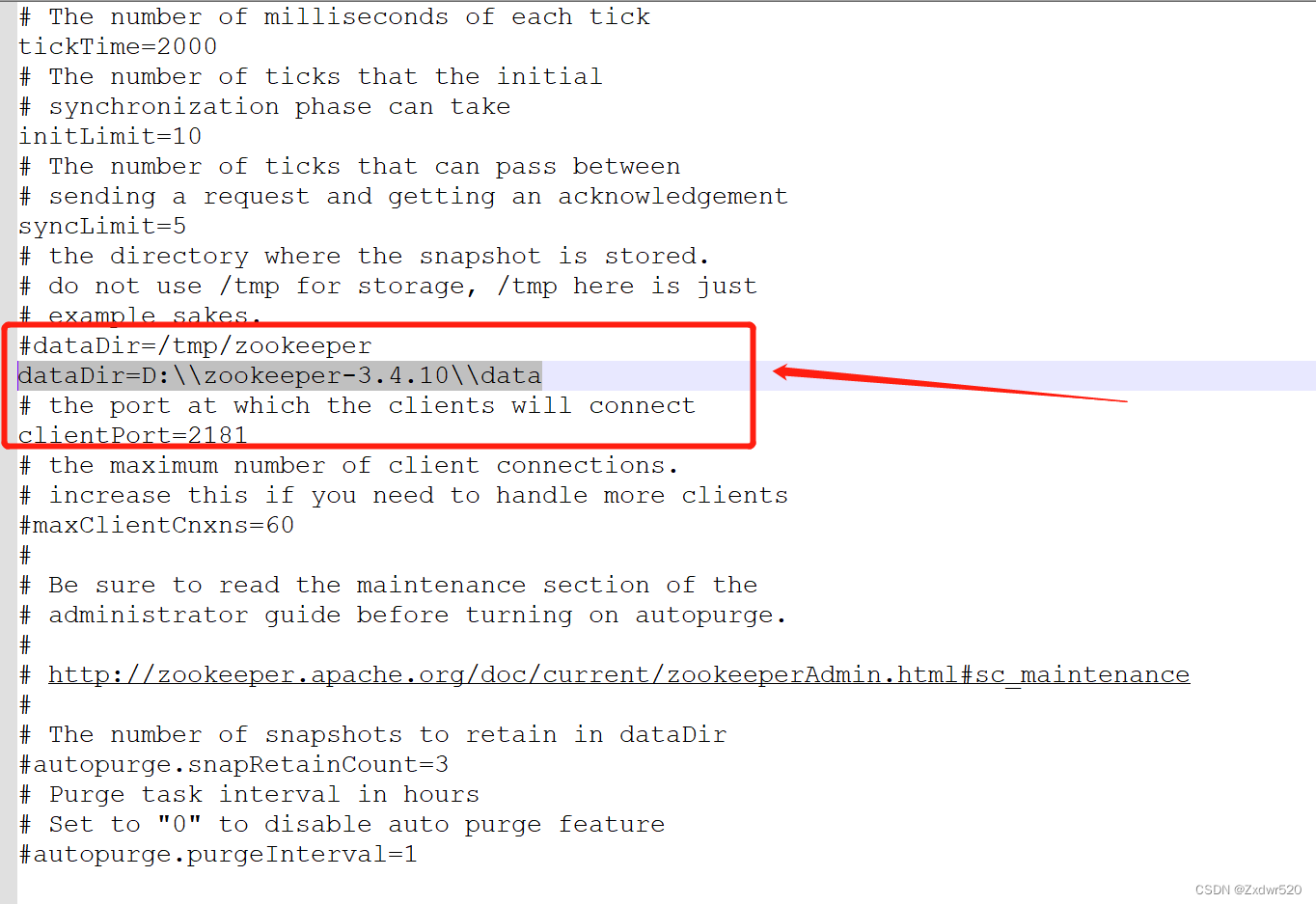

Edit zoo.cfg and set the data directory:

dataDir=D:\\zookeeper-3.4.10\\data
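Why the doubled backslashes: as far as I know, both ZooKeeper (zoo.cfg) and Kafka (server.properties, zookeeper.properties) read their config files with java.util.Properties, where a backslash is an escape character and a backslash in front of an ordinary letter is silently dropped. The small demo below (BackslashDemo is just an illustrative name) parses the same line three ways; forward slashes also work on Windows.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

/** Demo of how java.util.Properties treats backslashes in a key=value line. */
class BackslashDemo {
    /** Parses one line exactly like a .properties/.cfg file and returns the value for key. */
    static String valueOf(String line, String key) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(line));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props.getProperty(key);
    }

    public static void main(String[] args) {
        // Java literals double every backslash once more than the file contents do.
        // File line: dataDir=D:\\zookeeper-3.4.10\\data
        System.out.println(valueOf("dataDir=D:\\\\zookeeper-3.4.10\\\\data", "dataDir")); // D:\zookeeper-3.4.10\data
        // File line with single backslashes: the backslashes are dropped
        System.out.println(valueOf("dataDir=D:\\zookeeper-3.4.10\\data", "dataDir"));     // D:zookeeper-3.4.10data
        // Forward slashes pass through unchanged
        System.out.println(valueOf("dataDir=D:/zookeeper-3.4.10/data", "dataDir"));       // D:/zookeeper-3.4.10/data
    }
}
```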

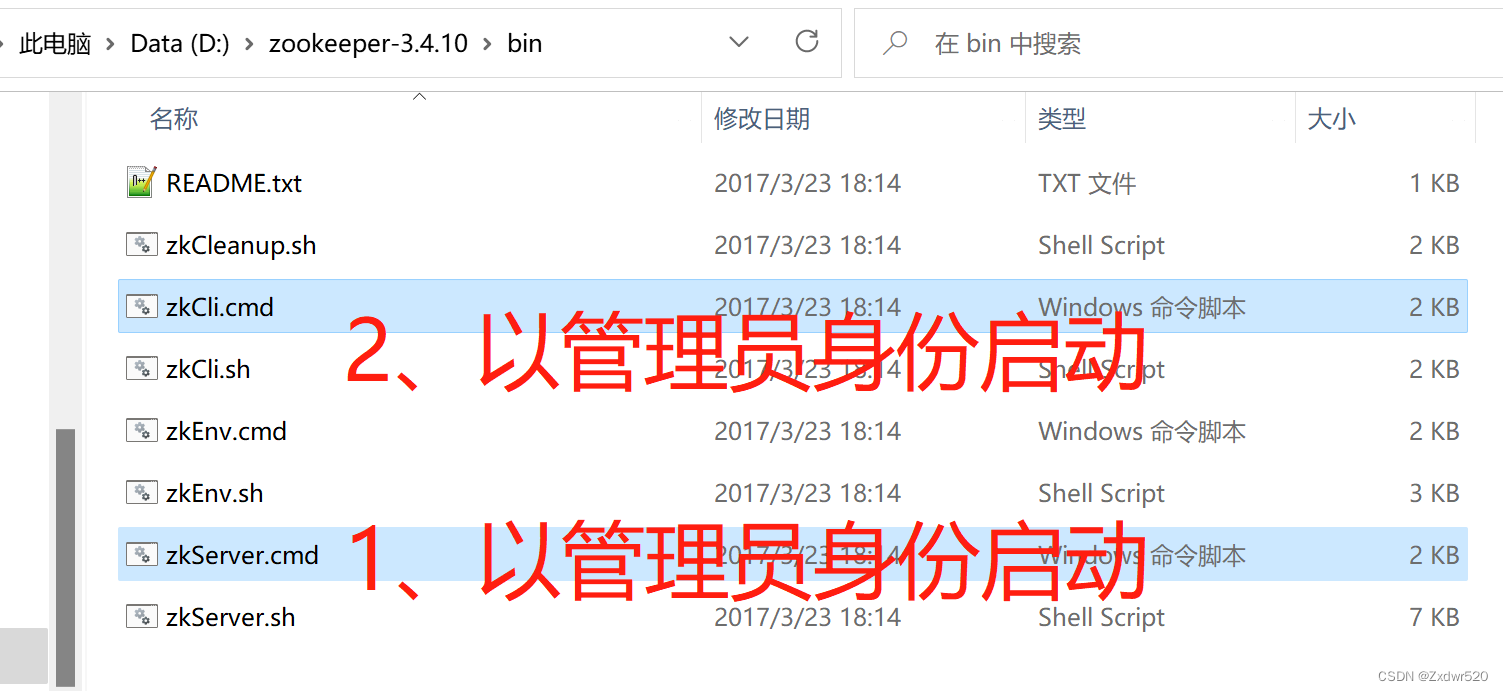

Go to the D:\zookeeper-3.4.10\bin folder and run zkServer.cmd as administrator, then zkCli.cmd.

2. Install Kafka

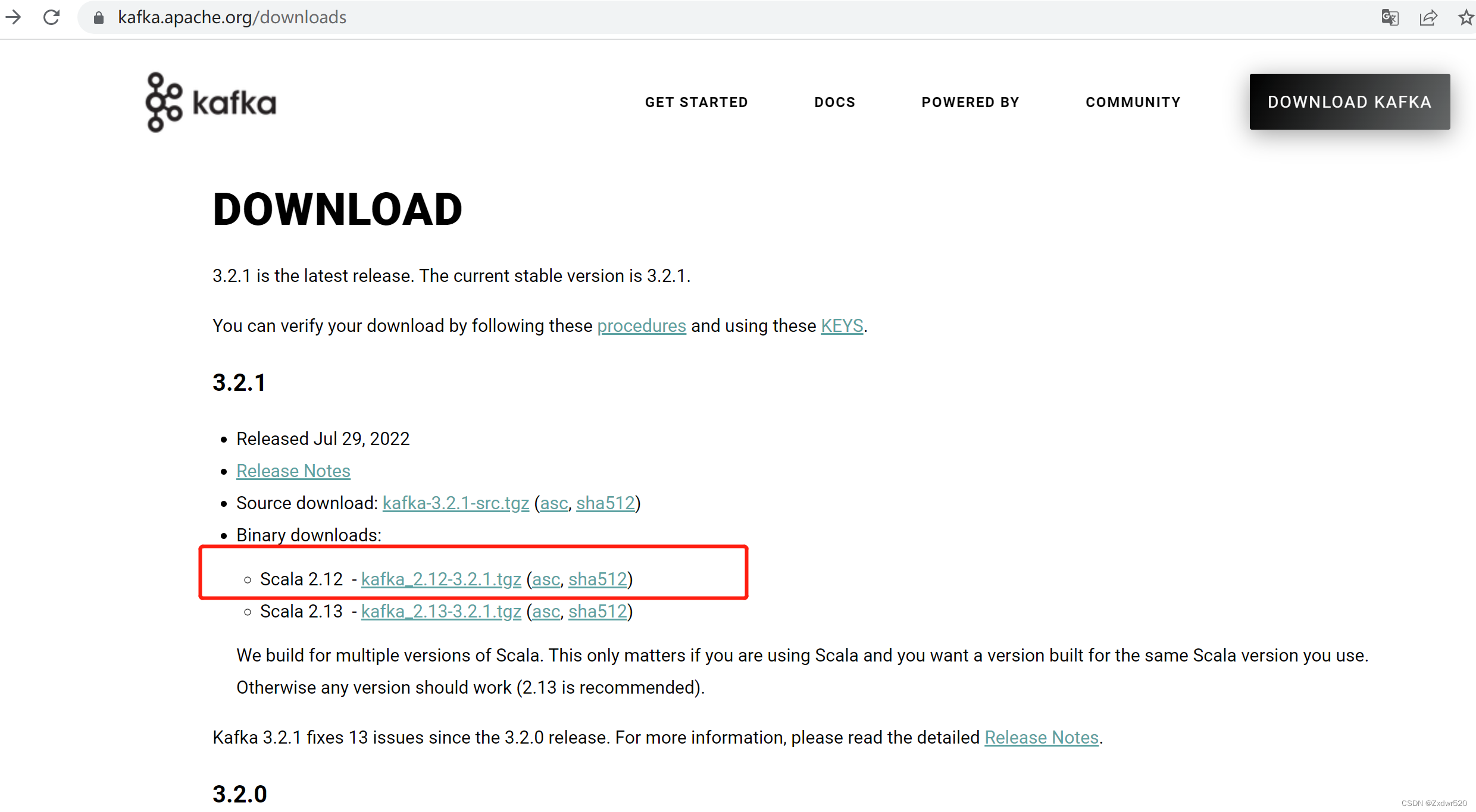

Download the package (pick a binary release, not the source release): Apache Kafka, https://kafka.apache.org/downloads

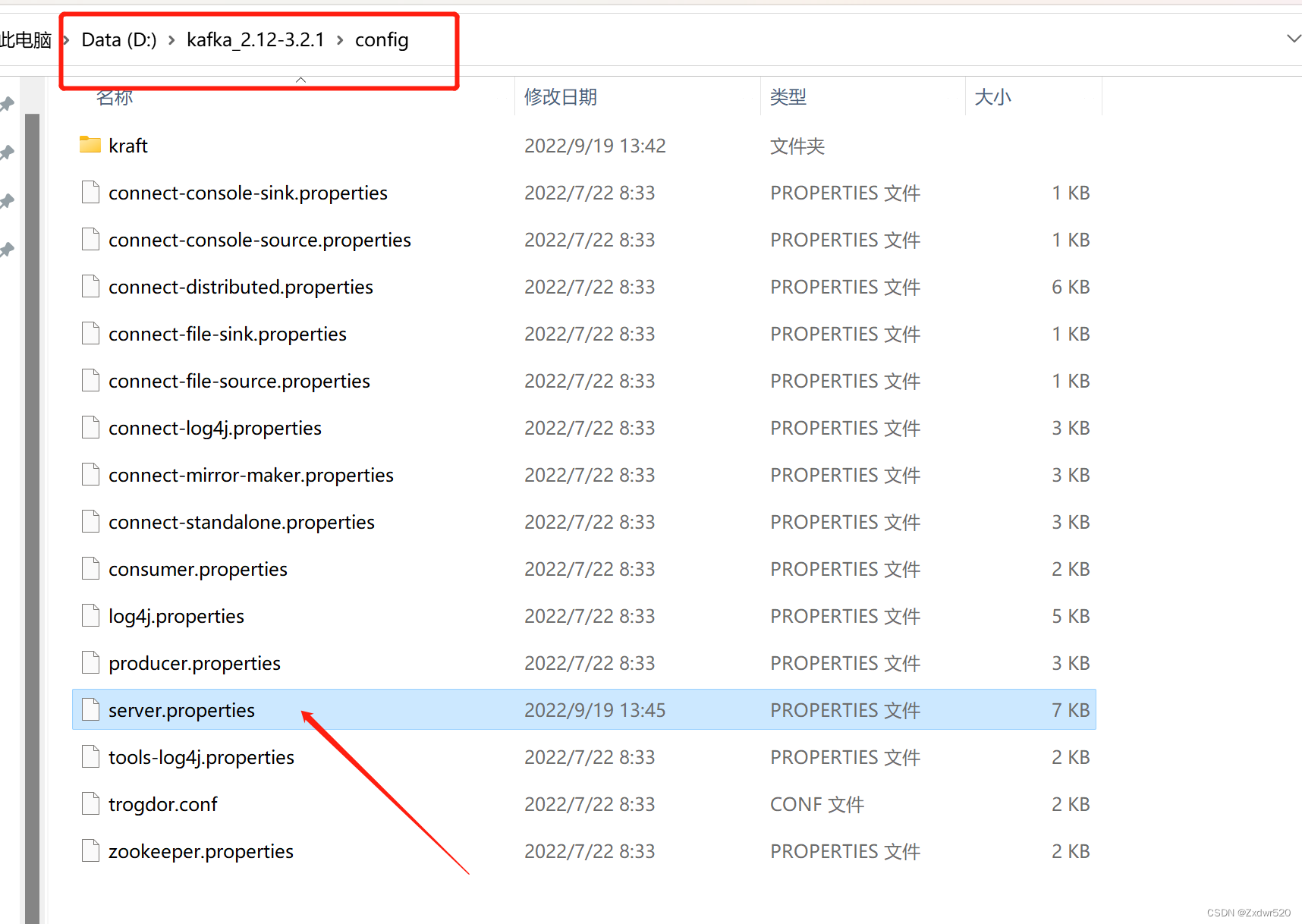

After extracting, edit config\server.properties:

log.dirs=D:\\kafka_2.12-3.2.1\\kafka-logs

log.retention.hours=168 (by default messages are kept for 168 h = 7 days)
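log.retention.hours is only one of three time-based retention settings. According to the Kafka broker documentation, log.retention.ms takes precedence over log.retention.minutes, which takes precedence over log.retention.hours, and -1 means no time limit. Here is a minimal sketch of that lookup order (RetentionDemo is a hypothetical helper, not Kafka's code) that also confirms 168 h = 604,800,000 ms = 7 days:

```java
import java.util.Properties;
import java.util.concurrent.TimeUnit;

/** Hypothetical helper mirroring the documented precedence of Kafka's retention settings. */
class RetentionDemo {
    /** Effective time-based retention in milliseconds; -1 means "no time limit". */
    static long effectiveRetentionMs(Properties p) {
        long ms;
        if (p.getProperty("log.retention.ms") != null) {
            ms = Long.parseLong(p.getProperty("log.retention.ms").trim());
        } else if (p.getProperty("log.retention.minutes") != null) {
            ms = TimeUnit.MINUTES.toMillis(Long.parseLong(p.getProperty("log.retention.minutes").trim()));
        } else {
            // 168 hours is the broker default when nothing is configured
            ms = TimeUnit.HOURS.toMillis(Long.parseLong(p.getProperty("log.retention.hours", "168").trim()));
        }
        return ms < 0 ? -1 : ms; // treat any negative value as "unlimited"
    }

    public static void main(String[] args) {
        Properties p = new Properties();
        p.setProperty("log.retention.hours", "168");
        long ms = effectiveRetentionMs(p);
        System.out.println(ms + " ms = " + TimeUnit.MILLISECONDS.toDays(ms) + " days"); // 604800000 ms = 7 days
    }
}
```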

Kafka's default port is 9092; ZooKeeper's default port is 2181.
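To check that both services are actually listening on those default ports, a plain TCP connect is enough. PortCheck below is a hypothetical helper, not part of Kafka or ZooKeeper, and a successful connect only proves that something accepts connections on the port, not that it is a healthy broker.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Hypothetical helper: returns true if a TCP connection to host:port succeeds within the timeout. */
class PortCheck {
    static boolean isListening(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // connection refused, timeout, or unknown host
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("ZooKeeper on 2181: " + isListening("localhost", 2181, 1000));
        System.out.println("Kafka on 9092:     " + isListening("localhost", 9092, 1000));
    }
}
```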

Start Kafka from the Kafka directory (ZooKeeper must already be running):

.\bin\windows\kafka-server-start.bat .\config\server.properties

II. Installing only Kafka, without a separate ZooKeeper

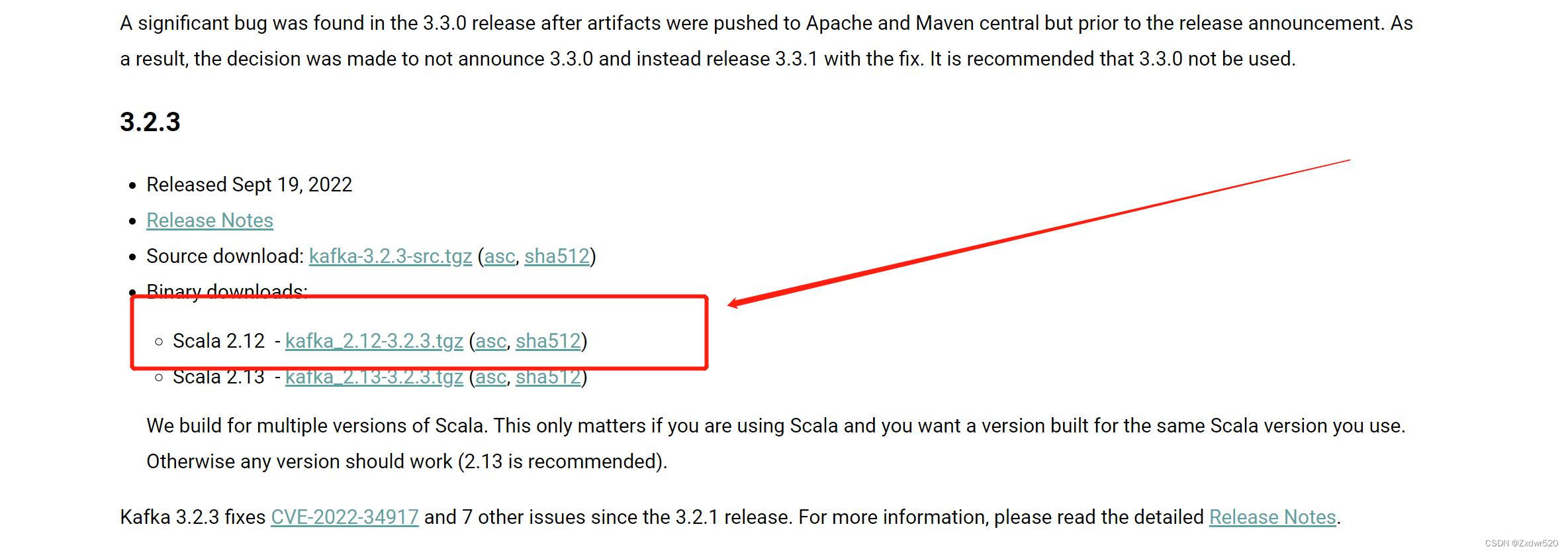

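The version must be >= 2.8.0; this post uses Kafka 3.2.3. Note that the steps below still start the ZooKeeper that ships inside the Kafka download, so nothing has to be installed separately (running with no ZooKeeper at all is Kafka's KRaft mode).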
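The directory name carries two version numbers: in kafka_2.12-3.2.3, 2.12 is the Scala version the binaries were built for and 3.2.3 is the Kafka version, which is the one the >= 2.8.0 requirement refers to. A small sketch (KafkaVersionCheck is a hypothetical helper) that extracts the Kafka version and compares versions numerically:

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: reads the Kafka version out of a kafka_<scala>-<kafka> directory name. */
class KafkaVersionCheck {
    // Group 1 = Scala version (e.g. 2.12), group 2 = Kafka version (e.g. 3.2.3)
    private static final Pattern DIR_NAME = Pattern.compile("kafka_(\\d+\\.\\d+)-(\\d+(?:\\.\\d+)*)");

    static String kafkaVersion(String dirName) {
        Matcher m = DIR_NAME.matcher(dirName);
        if (!m.matches()) {
            throw new IllegalArgumentException("Not a kafka_<scala>-<kafka> name: " + dirName);
        }
        return m.group(2);
    }

    /** Compares dotted versions numerically, component by component; missing parts count as 0. */
    static boolean atLeast(String version, String minimum) {
        String[] a = version.split("\\.");
        String[] b = minimum.split("\\.");
        for (int i = 0; i < Math.max(a.length, b.length); i++) {
            int x = i < a.length ? Integer.parseInt(a[i]) : 0;
            int y = i < b.length ? Integer.parseInt(b[i]) : 0;
            if (x != y) {
                return x > y;
            }
        }
        return true; // equal versions satisfy the minimum
    }

    public static void main(String[] args) {
        String v = kafkaVersion("kafka_2.12-3.2.3");
        System.out.println(v + " >= 2.8.0: " + atLeast(v, "2.8.0")); // 3.2.3 >= 2.8.0: true
    }
}
```

The numeric comparison matters: compared as plain strings, "2.12" would sort before "2.8".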

Download Kafka:

https://kafka.apache.org/downloads

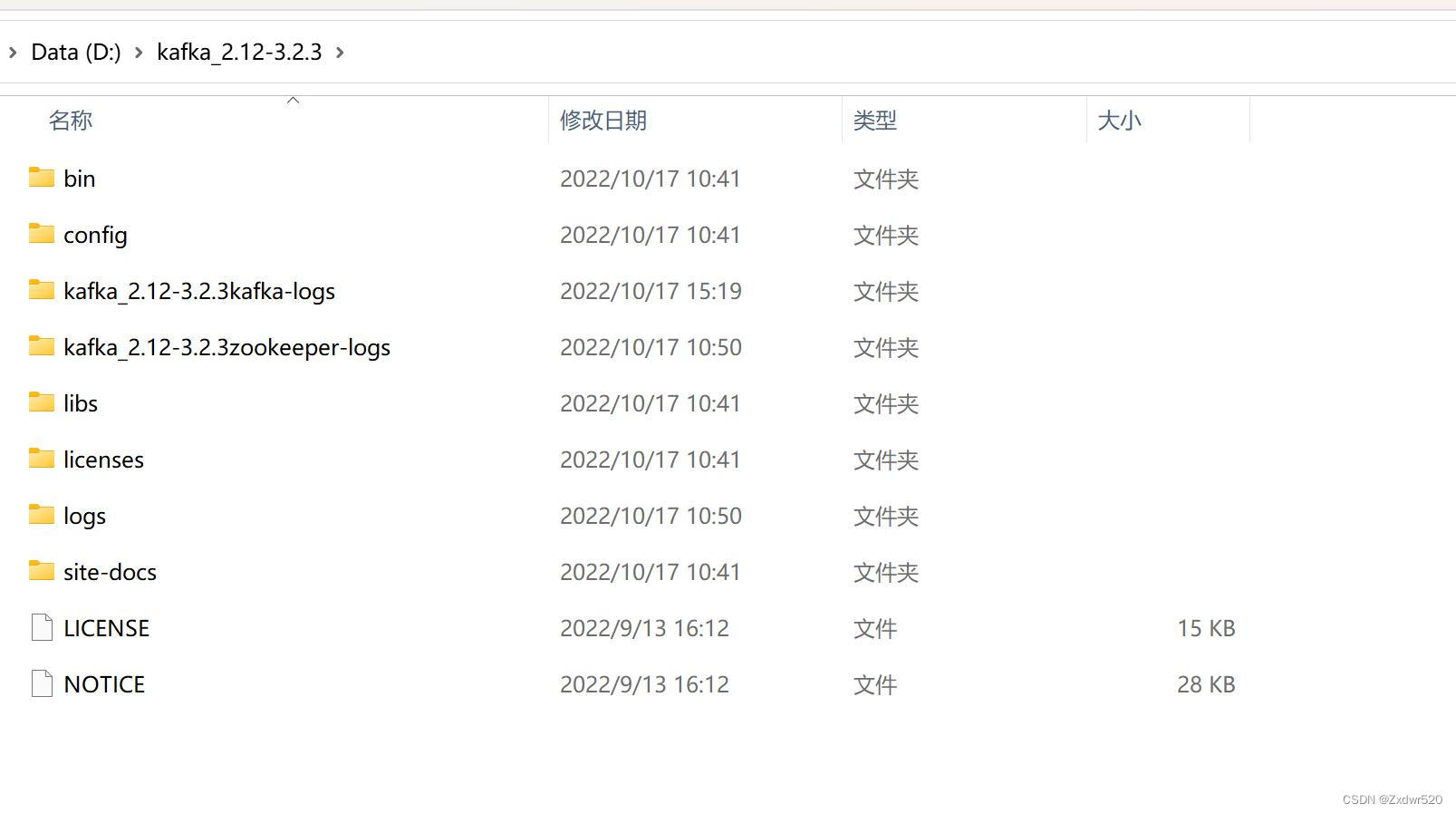

After extracting:

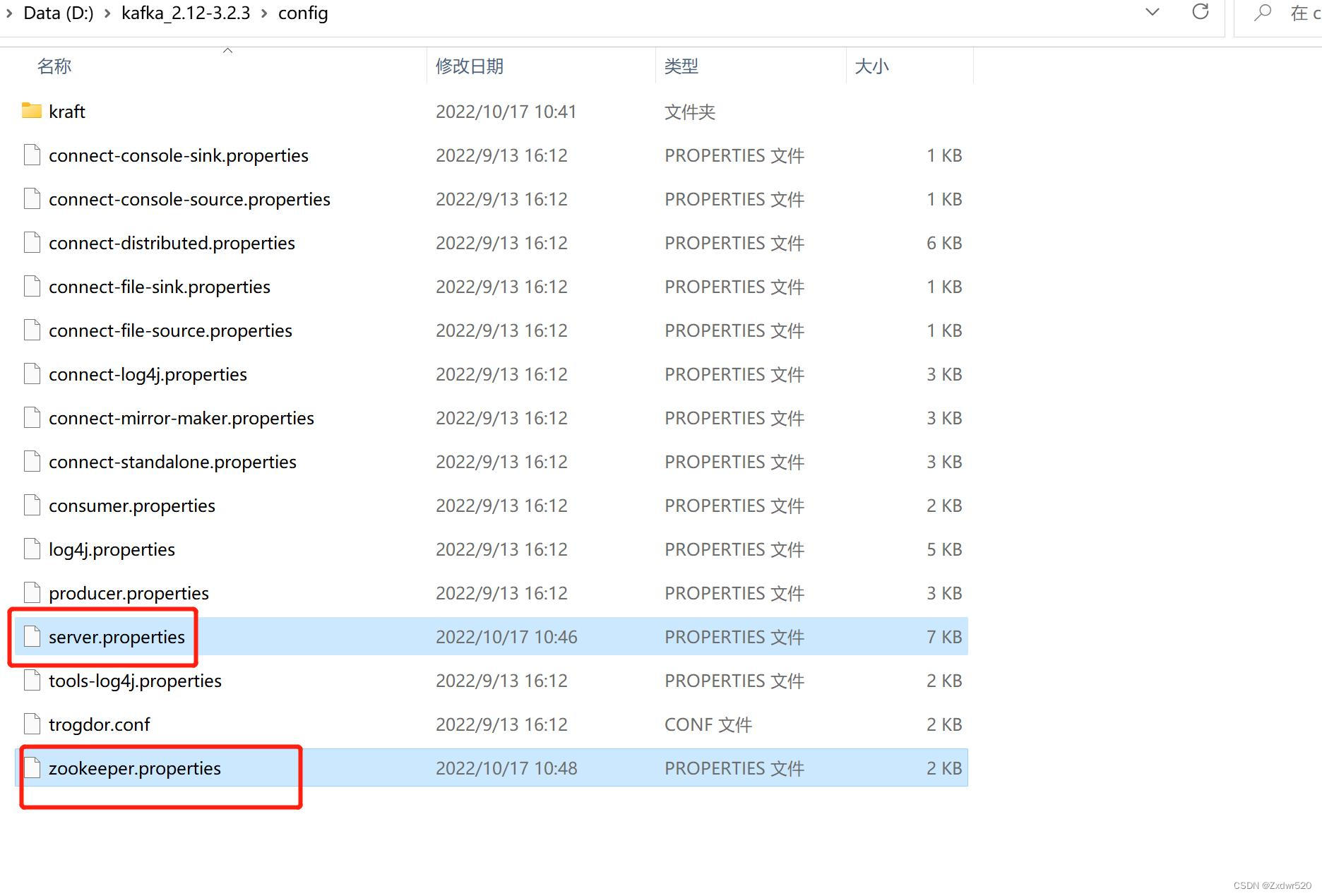

Edit the following files:

config\zookeeper.properties:

dataDir=D:\\kafka_2.12-3.2.3\\zookeeper-logs

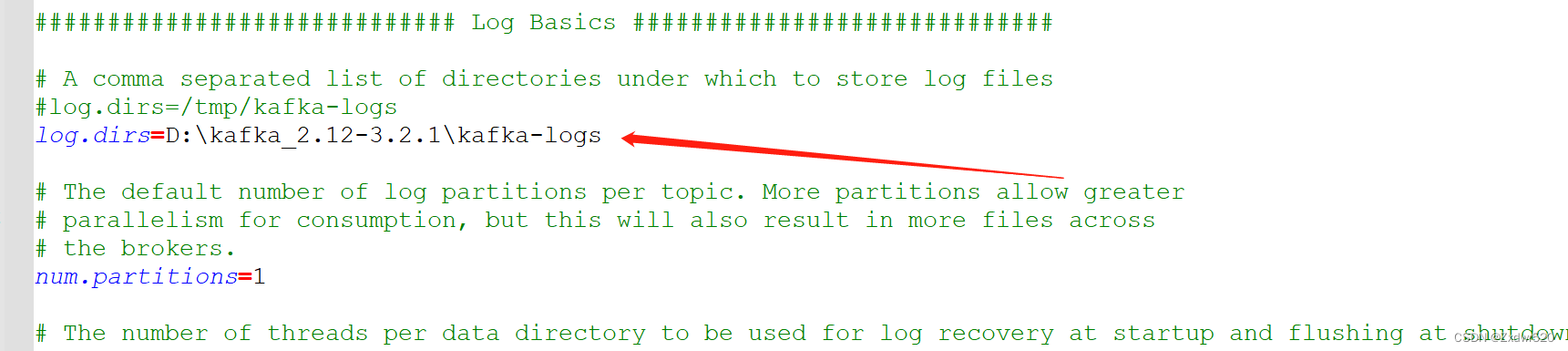

config\server.properties:

log.dirs=D:\\kafka_2.12-3.2.3\\kafka-logs

Open cmd and change to D:\kafka_2.12-3.2.3:

- Start ZooKeeper

bin\windows\zookeeper-server-start.bat config\zookeeper.properties

- Start Kafka

bin\windows\kafka-server-start.bat .\config\server.properties

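Add the dependency to pom.xml (no version is given; it comes from Spring Boot's dependency management):

```xml
<!-- Kafka support -->
<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
</dependency>
```

application.yml: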

```yaml
server:
  port: 8089

# Whether scheduled tasks are enabled
scheduling:
  enable: true

#--------------------Kafka configuration start--------------------
spring:
  kafka:
    bootstrap-servers: localhost:9092 # for a cluster: ip1:9092,ip2:9092,ip3:9092
    consumer:
      # Default consumer group
      group-id: myGroup
      # Whether offsets are committed automatically
      enable-auto-commit: true
      # Where to start when there is no initial offset or the current offset no longer exists on the server.
      # Defaults to latest; options: latest, earliest, none
      auto-offset-reset: latest
      # Key/value deserializers
      key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
      value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
    producer:
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
      value-serializer: org.apache.kafka.common.serialization.StringSerializer
      batch-size: 65536      # bytes
      buffer-memory: 524288  # bytes
#--------------------Kafka configuration end--------------------
```

Controller:

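```java
import com.fasterxml.jackson.databind.ObjectMapper;
import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

import java.util.ArrayList;
import java.util.Date;
import java.util.List;

@RestController
@Slf4j
public class KafkaController {

    @Autowired
    KafkaProducers kafkaProducers;

    @RequestMapping(value = "/kafka/test")
    public void run() {
        log.info("----------begin----------");
        // Query data: here we fake what an upstream API would return
        List<User> userList = new ArrayList<>();
        User user = new User();
        user.setId(1);
        user.setDateTime(new Date());
        user.setName("王八");
        user.setSex("男");
        user.setAge(20);
        userList.add(user);

        if (!userList.isEmpty()) {
            try {
                // Serialize the list to a JSON string; the producer is configured with StringSerializer
                ObjectMapper objectMapper = new ObjectMapper();
                String msg = objectMapper.writeValueAsString(userList);
                kafkaProducers.send(msg);
            } catch (Exception e) {
                log.error("Failed to send the user list to Kafka", e);
            }
        }
        log.info("----------end----------");
    }
}
```

Kafka producer: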

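```java
import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.support.SendResult;
import org.springframework.stereotype.Service;
import org.springframework.util.concurrent.ListenableFutureCallback;

@Service
@Slf4j
public class KafkaProducers {

    public static final String topic = "myTopic";

    @Autowired
    KafkaTemplate<String, String> kafkaTemplate;

    public void send(String msg) {
        try {
            // send() is asynchronous; the callback fires once the broker acknowledges or rejects the record.
            // This is the Spring Kafka 2.x API (ListenableFuture). In Spring Kafka 3.x send() returns a
            // CompletableFuture and the equivalent is .whenComplete((result, ex) -> ...).
            kafkaTemplate.send(topic, msg).addCallback(new ListenableFutureCallback<SendResult<String, String>>() {
                @Override
                public void onFailure(Throwable throwable) {
                    log.error("There was an error sending the message.", throwable);
                }

                @Override
                public void onSuccess(SendResult<String, String> result) {
                    log.info("My message was sent successfully.");
                }
            });
        } catch (Exception e) {
            log.error("Failed to hand the message to KafkaTemplate", e);
        }
    }
}
```

Consumer: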

@Component

@Slf4j

public class KafkaConsumer {

@Autowired

MyService myService;

@KafkaListener(topics = "myTopic")

public void runStart(ConsumerRecord<?, ?> record){

ObjectMapper objectMapper = new ObjectMapper();

Optional<?> kafkaMessage = Optional.ofNullable(record.value());

List<Detail> objects = new ArrayList<>();

try {

if (kafkaMessage.isPresent()) {

String message = (String) kafkaMessage.get();

log.info("[runStart()-->]message: {}", message);

List<User> userList = objectMapper.readValue(message, new TypeReference<List<User>>() {});

if (!CollectionUtils.isEmpty(userList)) {

for (User bean : userList) {

Detail detail = new Detail();

detail.setName(bean.getName);

objects.add(detail);

}

try {

if (objects.size() > 0) { //入库

myService.saveBatch(objects);

}

} catch (Exception e) {

e.printStackTrace();

}

}

}

} catch (Exception e){

e.printStackTrace();

log.error(e.getMessage());

}

}

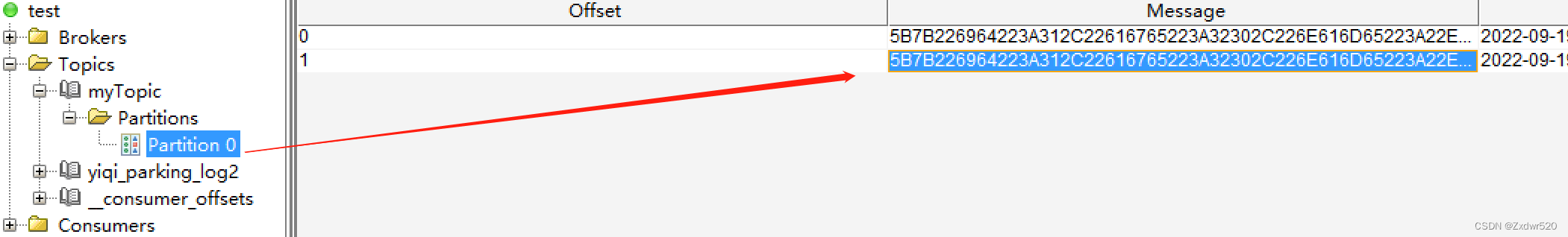

}消费的主题: myTopic,默认0区

That's it!
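The auto-offset-reset: latest setting in application.yml decides where this consumer group starts when it has no valid committed offset for a partition. The toy model below (OffsetResetModel is hypothetical, not Kafka's implementation) captures the documented behaviour: a valid committed offset always wins; otherwise earliest starts at the oldest retained record, latest at the end of the log, and none raises an error. In practice a brand-new group using latest skips records produced before it first started consuming the partition.

```java
import java.util.OptionalLong;

/** Toy model (not Kafka's code) of where a consumer group starts reading one partition. */
class OffsetResetModel {
    enum Reset { LATEST, EARLIEST, NONE }

    /**
     * @param committed offset committed by the group for this partition, if any
     * @param logStart  oldest offset still retained on the broker
     * @param logEnd    offset the next produced record will receive
     */
    static long startOffset(OptionalLong committed, long logStart, long logEnd, Reset policy) {
        if (committed.isPresent()
                && committed.getAsLong() >= logStart
                && committed.getAsLong() <= logEnd) {
            return committed.getAsLong(); // a valid committed offset wins; the reset policy is not consulted
        }
        switch (policy) {
            case EARLIEST:
                return logStart; // replay everything still retained
            case LATEST:
                return logEnd;   // only records produced from now on
            default:
                // the real client throws an exception here (e.g. NoOffsetForPartitionException)
                throw new IllegalStateException("No valid committed offset and auto.offset.reset=none");
        }
    }

    public static void main(String[] args) {
        // New group; the partition holds offsets 0..9, so the log end is 10
        System.out.println(startOffset(OptionalLong.empty(), 0, 10, Reset.LATEST));   // 10
        System.out.println(startOffset(OptionalLong.empty(), 0, 10, Reset.EARLIEST)); // 0
    }
}
```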

Below is another way to consume, listening to one specific partition:

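```java
import com.fasterxml.jackson.databind.ObjectMapper;
import lombok.extern.slf4j.Slf4j;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.kafka.annotation.TopicPartition;
import org.springframework.stereotype.Component;

import javax.annotation.Resource; // jakarta.annotation.Resource on Spring Boot 3
import java.util.Map;

@Slf4j
@Component
public class DispatchUserListener {

    @Resource
    MyService myService;

    // Reads only partition 0 of topic dispatch_bus. The id names the listener container and,
    // unless groupId is set, is also used as the consumer group id.
    @KafkaListener(id = "dispatch-0",
            topicPartitions = {@TopicPartition(topic = "dispatch_bus", partitions = {"0"})})
    public void dispatchUserConsumer(String msg) {
        ObjectMapper objectMapper = new ObjectMapper();
        DispatchUser dispatchUser = null;
        try {
            // Peek at package_type first; only "4A" messages are mapped to DispatchUser
            Map<?, ?> map = objectMapper.readValue(msg, Map.class);
            if ("4A".equals(map.get("package_type"))) {
                dispatchUser = objectMapper.readValue(msg, DispatchUser.class);
            }
        } catch (Exception e) {
            log.warn("Skipping unparseable message: {}", msg, e);
        }
        if (dispatchUser == null) {
            return;
        }
        myService.dispatch(dispatchUser);
    }
}
```

Native approach, using the plain kafka-clients KafkaConsumer: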

```java
import com.fasterxml.jackson.databind.ObjectMapper;
import lombok.extern.slf4j.Slf4j;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.stereotype.Component;

import java.time.Duration;
import java.util.ArrayList;
import java.util.Date;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Properties;
// KafkaConfig, SpringContextUtil, StringUtil, MyUtil, UserVo, YouLog, IMyService, IYouLogService and a
// CollectionUtils with isNotEmpty (e.g. Apache Commons Collections) come from the project and are not shown.

/**
 * @Author: fan
 * @Description: just a quick write-up
 * @Date: 2022-09-03 15:16
 * @version: v1.0
 **/
@Component
@Slf4j
public class KafkaConsume {

    @Autowired
    IMyService myService;

    @Autowired
    IYouLogService youLogService;

    @Value("${kafka.topic-name.checkAdreal}") // the topic name can be kept in the config file
    private String checkAdreal;

    public void runAdreal() {
        List<String> topicList = new ArrayList<>();
        topicList.add(checkAdreal);
        // KafkaConfig holds the Kafka settings read from the config file; with Spring's auto-configured
        // KafkaTemplate/@KafkaListener none of these props.put calls would be needed
        KafkaConfig kafkaConfig = SpringContextUtil.getBean("kafkaConfig", KafkaConfig.class);
        Properties props = new Properties();
        // Despite the getter name, this must be the broker list (e.g. localhost:9092), not ZooKeeper
        props.put("bootstrap.servers", kafkaConfig.getZooKeeper());
        props.put("group.id", kafkaConfig.getGroupId());
        // zookeeper.session.timeout.ms / zookeeper.sync.time.ms belonged to the old Scala consumer;
        // the Java KafkaConsumer ignores them, so they are not set here
        props.put("auto.commit.interval.ms", kafkaConfig.getInterval());
        props.put("auto.offset.reset", "latest");
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

        ObjectMapper objectMapper = new ObjectMapper();
        Duration duration = Duration.ofMillis(100);
        Map<String, Object> map = new HashMap<>();
        List<UserVo> list = myService.getListAll(map);
        log.info("[KafkaConsume--runAdreal()] size={},topic={},bootstrap.servers={},group.id={}",
                list.size(), checkAdreal, kafkaConfig.getZooKeeper(), kafkaConfig.getGroupId());

        int i = 0;
        // try-with-resources closes the consumer (leaving the group) if the loop ends with an exception
        try (KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props)) {
            consumer.subscribe(topicList);
            // Blocks the calling thread forever, so run this method on its own thread
            while (true) {
                ConsumerRecords<String, String> records = consumer.poll(duration);
                for (ConsumerRecord<String, String> record : records) {
                    try {
                        if (StringUtil.isNotEmpty(record.value())) {
                            Map<String, Object> mapKafka = objectMapper.readValue(record.value(), Map.class);
                            String order_id = (String) mapKafka.get("order_id");
                            String product_id = (String) mapKafka.get("product_id");
                            String order_number = (String) mapKafka.get("order_number");
                            if (CollectionUtils.isNotEmpty(list)
                                    && StringUtil.isNotEmpty(order_id)
                                    && StringUtil.isNotEmpty(product_id)
                                    && StringUtil.isNotEmpty(order_number)) {
                                for (UserVo user : list) {
                                    Integer number = user.getNumber();
                                    Integer orderId = user.getOrderId();
                                    Integer productId = user.getProductId();
                                    // id arrives as a JSON number (Integer); convert it before putting it in a String[]
                                    map.put("paramter0", new String[]{String.valueOf(mapKafka.get("id"))});
                                    map.put("paramter1", user.getName());
                                    map.put("paramter2", user.getAge());
                                    map.put("paramter3", user.getSex());
                                    map.put("paramter4", user.getScores());
                                    map.put("paramter5", number);
                                    map.put("paramter6", orderId);
                                    map.put("paramter7", productId);
                                    map.put("paramter8", mapKafka.get("status"));
                                    MyUtil.post(map);

                                    YouLog youLog = new YouLog();
                                    youLog.setId((Integer) mapKafka.get("id"));
                                    youLog.setName((String) mapKafka.get("name"));
                                    youLog.setContent("你干了什么事:");
                                    youLog.setSendTime(new Date());
                                    youLog.setSendBy("sys");
                                    i += youLogService.save(youLog);
                                }
                            }
                        }
                    } catch (Exception e) {
                        log.error("Failed to dispatch record, error={}", e.getMessage(), e);
                    } finally {
                        log.info("[KafkaConsume--runAdreal()] size={}, topic={}, bootstrap.servers={}, group.id={}, i={}",
                                list.size(), checkAdreal, kafkaConfig.getZooKeeper(), kafkaConfig.getGroupId(), i);
                    }
                }
            }
        }
    }
}
```
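For comparison with the spring.kafka.consumer settings in application.yml, these are the plain kafka-clients keys they correspond to. This is a minimal JDK-only sketch; ConsumerProps and its guard against a ZooKeeper port are illustrative assumptions. In real code the ConsumerConfig constants from kafka-clients (e.g. ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG) avoid typos in these strings.

```java
import java.util.Properties;

/** Hypothetical builder for a plain KafkaConsumer configuration equivalent to the yml settings. */
class ConsumerProps {
    static final String STRING_DESERIALIZER = "org.apache.kafka.common.serialization.StringDeserializer";

    static Properties fromSettings(String bootstrapServers, String groupId,
                                   boolean autoCommit, String offsetReset) {
        // Illustrative guard: the consumer talks to brokers (9092 by default), never to ZooKeeper (2181)
        if (bootstrapServers.contains(":2181")) {
            throw new IllegalArgumentException("bootstrap.servers looks like a ZooKeeper address: " + bootstrapServers);
        }
        Properties p = new Properties();
        p.setProperty("bootstrap.servers", bootstrapServers);            // spring.kafka.bootstrap-servers
        p.setProperty("group.id", groupId);                              // spring.kafka.consumer.group-id
        p.setProperty("enable.auto.commit", String.valueOf(autoCommit)); // spring.kafka.consumer.enable-auto-commit
        p.setProperty("auto.offset.reset", offsetReset);                 // spring.kafka.consumer.auto-offset-reset
        p.setProperty("key.deserializer", STRING_DESERIALIZER);          // spring.kafka.consumer.key-deserializer
        p.setProperty("value.deserializer", STRING_DESERIALIZER);        // spring.kafka.consumer.value-deserializer
        return p;
    }

    public static void main(String[] args) {
        Properties p = fromSettings("localhost:9092", "myGroup", true, "latest");
        p.stringPropertyNames().stream().sorted()
                .forEach(k -> System.out.println(k + "=" + p.getProperty(k)));
    }
}
```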
