Environment preparation

# Prepare the hosts file

$ cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.20.1.15 node1

10.20.1.17 node2
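Every later step addresses the controller as node1, so it can help to confirm the entries exist before going on. The following is a minimal sketch; `missing_hosts` is a hypothetical helper name, and it only inspects the hosts file (it does not query DNS).

```shell
#!/bin/sh
# missing_hosts is a hypothetical helper for this guide, not a system tool.
# It prints every name from the argument list that has no entry in the
# given hosts file.
missing_hosts() {
    hosts_file="$1"; shift
    for name in "$@"; do
        awk -v n="$name" '
            /^[[:space:]]*#/ { next }              # skip comment lines
            {
                for (i = 2; i <= NF; i++) {        # field 1 is the address
                    if ($i ~ /^#/) break           # stop at a trailing comment
                    if ($i == n) found = 1
                }
            }
            END { exit (found ? 0 : 1) }
        ' "$hosts_file" 2>/dev/null || echo "$name"
    done
}

# Prints nothing when both names are present
missing_hosts /etc/hosts node1 node2
```

Any name that is printed still needs a line in /etc/hosts on that node.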

# Prepare the yum repository

$ cat >/etc/yum.repos.d/CentOS7-Openstack-train.repo <<EOF

[Openstack-train]

name=openstack

gpgcheck=0

enabled=1

baseurl=https://vault.centos.org/centos/7.9.2009/cloud/x86_64/openstack-train/

EOF

$ yum makecache fast

$ yum -y install python2-openstackclient.noarch openstack-selinux.noarch

Deploying the base components

Database

$ yum -y install mariadb mariadb-server python2-PyMySQL

Create and edit **/etc/my.cnf.d/openstack.cnf**

[mysqld]

bind-address = 10.20.1.15 # replace with this host's IP

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

$ systemctl enable mariadb.service --now

# Secure the database installation (the server must be running first)

$ mysql_secure_installation

Message queue: RabbitMQ

$ yum install rabbitmq-server -y

$ systemctl enable rabbitmq-server.service --now

# Create the openstack user; replace the password (RABBIT_PASS) with your own

# rabbitmqctl add_user openstack RABBIT_PASS

$ rabbitmqctl add_user openstack openstack

# Grant the openstack user configure, write, and read permissions

$ rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Cache: Memcached

$ yum install memcached python-memcached -y

$ vim /etc/sysconfig/memcached

PORT="11211"

USER="memcached"

MAXCONN="1024"

CACHESIZE="64"

# Change the listen addresses so that other nodes can reach node1

OPTIONS="-l 127.0.0.1,::1,node1"

$ systemctl enable memcached.service --now

Key-value store: etcd

$ yum install etcd -y

Edit **/etc/etcd/etcd.conf**

#[Member]

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="http://10.20.1.15:2380"

ETCD_LISTEN_CLIENT_URLS="http://10.20.1.15:2379"

ETCD_NAME="node1"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.20.1.15:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://10.20.1.15:2379"

ETCD_INITIAL_CLUSTER="node1=http://10.20.1.15:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"

ETCD_INITIAL_CLUSTER_STATE="new"

$ systemctl enable --now etcd

Installing the Keystone identity service

username: admin

password: admin

Create the database

$ mysql -u root -p123123

MariaDB [(none)]> CREATE DATABASE keystone;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'KEYSTONE_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';

# KEYSTONE_DBPASS is the password for the database grant; choose your own
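The same create-and-grant pattern repeats for every service database in this guide (keystone, glance, placement, nova, neutron). A minimal sketch of a helper that prints those statements is given here; `gen_db_sql` is a hypothetical name, and the password argument is a placeholder you would replace.

```shell
#!/bin/sh
# gen_db_sql is a hypothetical helper, not part of MariaDB or OpenStack.
# It only prints SQL; nothing is executed against the server.
gen_db_sql() {
    db="$1"      # database name, e.g. keystone
    user="$2"    # database user, e.g. keystone
    pass="$3"    # password placeholder, e.g. KEYSTONE_DBPASS
    printf 'CREATE DATABASE %s;\n' "$db"
    # One grant for local connections and one for connections from any host
    for host in localhost %; do
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
            "$db" "$user" "$host" "$pass"
    done
}

gen_db_sql keystone keystone KEYSTONE_DBPASS
```

After reviewing the output, it could be piped into the client, for example `gen_db_sql keystone keystone KEYSTONE_DBPASS | mysql -u root -p`.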

Install Keystone

$ yum install python2-qpid-proton-0.26.0-2.el7.x86_64 -y # the installation fails without this package

$ yum install openstack-keystone httpd mod_wsgi -y # Keystone does not serve HTTP on its own; httpd with mod_wsgi fronts it

# /etc/keystone/keystone.conf

$ vim /etc/keystone/keystone.conf

# KEYSTONE_DBPASS must match the database password granted above

# node1 is the database host

[database]

connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@node1/keystone

[token]

provider = fernet

# Populate the Keystone database

$ su -s /bin/sh -c "keystone-manage db_sync" keystone

Start the Keystone service

# Initialize the Fernet and credential key repositories

$ keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

$ keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

# Bootstrap the identity service

$ keystone-manage bootstrap --bootstrap-password admin \

--bootstrap-admin-url http://node1:5000/v3/ \

--bootstrap-internal-url http://node1:5000/v3/ \

--bootstrap-public-url http://node1:5000/v3/ \

--bootstrap-region-id RegionOne

Configure the Apache HTTP server

Edit /etc/httpd/conf/httpd.conf

# Set ServerName to the controller host name

ServerName node1

Create the symbolic link

$ ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

Start the service

$ systemctl enable httpd.service --now

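Keystone client environment

The OpenStack client also needs credentials to manage the cloud. The variable file below sets the account information; source it before running client commands.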

$ vim openrc

export OS_USERNAME=admin

export OS_PASSWORD=admin

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://node1:5000/v3

export OS_IDENTITY_API_VERSION=3
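As an alternative to editing the file in vim, the same variables can be written non-interactively and loaded in one step. This is a sketch under assumptions: the `OPENRC` variable and its default path `./openrc` are chosen here for illustration only.

```shell
#!/bin/sh
# OPENRC is a hypothetical variable for this sketch; it defaults to ./openrc.
OPENRC="${OPENRC:-./openrc}"

# The quoted heredoc delimiter keeps the shell from expanding anything inside
cat > "$OPENRC" <<'EOF'
export OS_USERNAME=admin
export OS_PASSWORD=admin
export OS_PROJECT_NAME=admin
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_DOMAIN_NAME=Default
export OS_AUTH_URL=http://node1:5000/v3
export OS_IDENTITY_API_VERSION=3
EOF

# Load the variables into the current shell
. "$OPENRC"
echo "Using identity endpoint: $OS_AUTH_URL"
```

Once the file is sourced, `openstack token issue` should work without the `--os-*` options.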

Creating a domain, project, user, and role (example)

Domain

$ openstack domain create --description "An Example Domain for zhenxy" example

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | An Example Domain for zhenxy |

| enabled | True |

| id | cb5671ea6786482c9db07584ba7a46db |

| name | example |

| options | {} |

| tags | [] |

+-------------+----------------------------------+

$ openstack domain list

+----------------------------------+---------+---------+------------------------------+

| ID | Name | Enabled | Description |

+----------------------------------+---------+---------+------------------------------+

| cb5671ea6786482c9db07584ba7a46db | example | True | An Example Domain for zhenxy |

| default | Default | True | The default domain |

+----------------------------------+---------+---------+------------------------------+

Project

$ openstack project create --domain default --description "Service Project" service

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Service Project |

| domain_id | default |

| enabled | True |

| id | 470f35fda8bb4d49b3ca2f9c7dd1566d |

| is_domain | False |

| name | service |

| options | {} |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

$ openstack project list

+----------------------------------+---------+

| ID | Name |

+----------------------------------+---------+

| 470f35fda8bb4d49b3ca2f9c7dd1566d | service |

| b647dee8c0a84732842d042fff3f7f62 | admin |

+----------------------------------+---------+

User and role

$ openstack user create --domain default --password=user user

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | a05b633550e1417ea10ec263108f815c |

| name | user |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

$ openstack role create role

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | None |

| domain_id | None |

| id | 1660d5616f5444f5aa7f0d33bbec854a |

| name | role |

| options | {} |

+-------------+----------------------------------+

# Assign the role named "role" to the user named "user" in the service project

$ openstack role add --project service --user user role

$ openstack user list

+----------------------------------+-------+

| ID | Name |

+----------------------------------+-------+

| e61fc5846db84845a758b073574791ab | admin |

| a05b633550e1417ea10ec263108f815c | user |

+----------------------------------+-------+

Verification

$ openstack --os-auth-url http://node1:5000/v3 \

--os-project-domain-name Default --os-user-domain-name Default \

--os-project-name admin --os-username admin token issue

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2024-07-25T04:14:15+0000 |

| id | gAAAAABmocMHPUGz1W1cwDNHqtOHGx8RNfwwiGSkjR8Sflb8UNmCY6vAecv0Fs9cDKJI6404PDbcxWG83UlH_tFgVJYGp8OaqeJvXdSF1JX7ugkzZp-vJsz5pX4tYI3MaeLIrss-Q_PedFSU0rhhq9KwyKrDQhqBVcZpUU1DWwz9QLs-XMIjt-0 |

| project_id | b647dee8c0a84732842d042fff3f7f62 |

| user_id | e61fc5846db84845a758b073574791ab |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

Installing the Glance image service

Create the database

$ mysql -u root -p123123

MariaDB [(none)]> CREATE DATABASE glance;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';

# GLANCE_DBPASS is the password for the database grant; choose your own

Register Glance in Keystone

username: glance

password: glance

# Create the glance user in the default domain

$ openstack user create --domain default --password=glance glance

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 8e8d61a502aa49fca060e0c48984f272 |

| name | glance |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Grant the admin role to the glance user in the service project

$ openstack role add --project service --user glance admin

# Create the service entity

$ openstack service create --name glance --description "OpenStack Image" image

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Image |

| enabled | True |

| id | ffc8a1d1f0204484a8fc1cf269775f7f |

| name | glance |

| type | image |

+-------------+----------------------------------+

# Create the image service API endpoints; all three interfaces are required (public, internal, admin)

$ openstack endpoint create --region RegionOne image public http://node1:9292

$ openstack endpoint create --region RegionOne image internal http://node1:9292

$ openstack endpoint create --region RegionOne image admin http://node1:9292

+----------------------------------+-----------+--------------+--------------+---------+-----------+-----------------------+

| ID | Region | Service Name | Service Type | Enabled | Interface | URL |

+----------------------------------+-----------+--------------+--------------+---------+-----------+-----------------------+

| 04701e3aecd74606bb26d36f89ec1992 | RegionOne | glance | image | True | public | http://node1:9292 |

| d485d39d98e5490486e51b236885dcdc | RegionOne | glance | image | True | admin | http://node1:9292 |

| ebac336a226846229f0470cd995588f2 | RegionOne | glance | image | True | internal | http://node1:9292 |

+----------------------------------+-----------+--------------+--------------+---------+-----------+-----------------------+
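Each service in this guide registers the same URL under the public, internal, and admin interfaces, so the three commands can be produced by a loop. The sketch below prints the commands rather than running them; `print_endpoint_cmds` is a hypothetical helper name.

```shell
#!/bin/sh
# print_endpoint_cmds is a hypothetical helper for this guide.
# It prints the endpoint commands so they can be reviewed before running.
print_endpoint_cmds() {
    service_type="$1"   # e.g. image, placement, compute, network
    url="$2"            # e.g. http://node1:9292
    for iface in public internal admin; do
        echo "openstack endpoint create --region RegionOne $service_type $iface $url"
    done
}

print_endpoint_cmds image http://node1:9292
```

With the admin credentials loaded, the printed lines could be executed by piping them to `sh`.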

Install Glance

$ yum install openstack-glance -y

Edit **/etc/glance/glance-api.conf**

$ vim /etc/glance/glance-api.conf

# GLANCE_DBPASS must match the database password granted above

# node1 is the database host

[database]

connection = mysql+pymysql://glance:GLANCE_DBPASS@node1/glance

[keystone_authtoken]

# ...

www_authenticate_uri = http://node1:5000

auth_url = http://node1:5000

memcached_servers = node1:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = glance

[paste_deploy]

flavor = keystone

[glance_store]

stores = file,http

default_store = file

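# Storage directory for image files (keep the comment on its own line so it is not read as part of the value)

filesystem_store_datadir = /data/lib/glance/images/

Populate the Glance database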

$ su -s /bin/sh -c "glance-manage db_sync" glance

Start the service

# The storage directory must be created first

$ mkdir -pv /data/lib/glance/images/

$ chown -R glance:glance /data/lib/glance/images/

$ systemctl enable openstack-glance-api.service --now

Installing the Placement service

Create the database

$ mysql -u root -p123123

MariaDB [(none)]> CREATE DATABASE placement;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'PLACEMENT_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'PLACEMENT_DBPASS';

# PLACEMENT_DBPASS is the password for the database grant; choose your own

Register Placement in Keystone

username: placement

password: placement

# Create the placement user in the default domain

$ openstack user create --domain default --password=placement placement

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | ba8d6cbea4664096b05869ddf5857694 |

| name | placement |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Grant the admin role to the placement user in the service project

$ openstack role add --project service --user placement admin

# Create the service entity

$ openstack service create --name placement --description "Placement API" placement

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Placement API |

| enabled | True |

| id | 78dc30991857404fa4f433b39637efa0 |

| name | placement |

| type | placement |

+-------------+----------------------------------+

# Create the service API endpoints; all three interfaces are required (public, internal, admin)

$ openstack endpoint create --region RegionOne placement public http://node1:8778

$ openstack endpoint create --region RegionOne placement internal http://node1:8778

$ openstack endpoint create --region RegionOne placement admin http://node1:8778

$ openstack endpoint list |grep placement

| 18f482d23d4a426a8b9273621c35c43a | RegionOne | placement | placement | True | public | http://node1:8778 |

| 4538ecb2b54641e1b9a7a5e74f96f6f2 | RegionOne | placement | placement | True | internal | http://node1:8778 |

| ecd6e187fd734691aba3f114d0f18cf6 | RegionOne | placement | placement | True | admin | http://node1:8778 |

Install Placement

$ yum install openstack-placement-api -y

Edit **/etc/placement/placement.conf**

$ vim /etc/placement/placement.conf

# PLACEMENT_DBPASS must match the database password granted above

# node1 is the database host

[placement_database]

# ...

connection = mysql+pymysql://placement:PLACEMENT_DBPASS@node1/placement

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_url = http://node1:5000/v3

memcached_servers = node1:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = placement

password = placement

Grant access in the httpd configuration

$ vim /etc/httpd/conf.d/00-placement-api.conf

Listen 8778

<VirtualHost *:8778>

WSGIProcessGroup placement-api

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

WSGIDaemonProcess placement-api processes=3 threads=1 user=placement group=placement

WSGIScriptAlias / /usr/bin/placement-api

<IfVersion >= 2.4>

ErrorLogFormat "%M"

</IfVersion>

ErrorLog /var/log/placement/placement-api.log

#SSLEngine On

#SSLCertificateFile ...

#SSLCertificateKeyFile ...

</VirtualHost>

Alias /placement-api /usr/bin/placement-api

<Location /placement-api>

SetHandler wsgi-script

Options +ExecCGI

WSGIProcessGroup placement-api

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

</Location>

# Add the block below; without it, resource reporting fails with a permission error

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

Populate the Placement database

$ su -s /bin/sh -c "placement-manage db sync" placement

Start the service

$ systemctl restart httpd

Verification

$ placement-status upgrade check

+----------------------------------+

| Upgrade Check Results |

+----------------------------------+

| Check: Missing Root Provider IDs |

| Result: Success |

| Details: None |

+----------------------------------+

| Check: Incomplete Consumers |

| Result: Success |

| Details: None |

+----------------------------------+

Installing the Nova compute service

Create the databases

$ mysql -u root -p123123

MariaDB [(none)]> CREATE DATABASE nova_api;

MariaDB [(none)]> CREATE DATABASE nova;

MariaDB [(none)]> CREATE DATABASE nova_cell0;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

# NOVA_DBPASS is the password for the database grants; choose your own

Register Nova in Keystone

username: nova

password: nova

# Create the nova user in the default domain

$ openstack user create --domain default --password=nova nova

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | e901beeaa95e4d3b937ab9e6e325c587 |

| name | nova |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Grant the admin role to the nova user in the service project

$ openstack role add --project service --user nova admin

# Create the service entity

$ openstack service create --name nova --description "OpenStack Compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | 6372631026354e66a1b207d4dde72aea |

| name | nova |

| type | compute |

+-------------+----------------------------------+

# Create the service API endpoints; all three interfaces are required (public, internal, admin)

$ openstack endpoint create --region RegionOne compute public http://node1:8774/v2.1

$ openstack endpoint create --region RegionOne compute internal http://node1:8774/v2.1

$ openstack endpoint create --region RegionOne compute admin http://node1:8774/v2.1

$ openstack endpoint list |grep compute

| 253b09ddb76d453db7b86d6f051c0c8f | RegionOne | nova | compute | True | public | http://node1:8774/v2.1 |

| 2627d1a3a1da43ff96cafa391c31fe6d | RegionOne | nova | compute | True | admin | http://node1:8774/v2.1 |

| cc101738e81e4efe8af847d0e908496c | RegionOne | nova | compute | True | internal | http://node1:8774/v2.1 |

Install Nova on the controller node

$ yum install openstack-nova-api openstack-nova-conductor \

openstack-nova-novncproxy openstack-nova-scheduler -y

Edit **/etc/nova/nova.conf**

$ vim /etc/nova/nova.conf

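# NOVA_DBPASS must match the database password granted above

# node1 is the address of the controller services

# The RabbitMQ password must also match the one set earlier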

[DEFAULT]

# ...

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:openstack@node1:5672/

my_ip = 10.20.1.15

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api_database]

# ...

connection = mysql+pymysql://nova:NOVA_DBPASS@node1/nova_api

[database]

# ...

connection = mysql+pymysql://nova:NOVA_DBPASS@node1/nova

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

www_authenticate_uri = http://node1:5000/

auth_url = http://node1:5000/

memcached_servers = node1:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = nova

[vnc]

enabled = true

# ...

server_listen = $my_ip

server_proxyclient_address = $my_ip

[glance]

# ...

api_servers = http://node1:9292

[oslo_concurrency]

# ...

lock_path = /var/lib/nova/tmp

[placement]

# ...

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://node1:5000/v3

username = placement

password = placement

Populate the nova-api database

$ su -s /bin/sh -c "nova-manage api_db sync" nova

Register the cell0 database

$ su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

Create the cell1 cell

$ su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

Populate the nova database

$ su -s /bin/sh -c "nova-manage db sync" nova

Verification

$ su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+-------------------------------------+--------------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+-------------------------------------+--------------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@node1/nova_cell0 | False |

| cell1 | 6d74a539-a8a5-4dc8-90b7-356dd1db1c24 | rabbit://openstack:****@node1:5672/ | mysql+pymysql://nova:****@node1/nova | False |

+-------+--------------------------------------+-------------------------------------+--------------------------------------------+----------+

Start the services

$ systemctl enable --now \

openstack-nova-api.service \

openstack-nova-scheduler.service \

openstack-nova-conductor.service \

openstack-nova-novncproxy.service

Install Nova on the compute node

Base repository

$ cat >/etc/yum.repos.d/kvm.repo <<'EOF'

[Virt]

name=CentOS-$releasever - Base

baseurl=http://mirrors.aliyun.com/centos/7.9.2009/virt/x86_64/kvm-common/

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

EOF

# The repository above is required; without it the install fails with a missing dependency: qemu-kvm-rhev >= 2.10.0

$ yum -y install openstack-nova-compute python2-qpid-proton-0.26.0-2.el7.x86_64

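Edit /etc/nova/nova.conf

# Check whether the compute node supports hardware acceleration for virtual machines

# If this command returns zero, the compute node does not support hardware acceleration, and libvirt must be configured to use QEMU instead of KVM.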

$ egrep -c '(vmx|svm)' /proc/cpuinfo

$ vim /etc/nova/nova.conf

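# The controller node also acts as a compute node here; the only difference is the [vnc] line below, so add it

# The IP address is written directly because there is no DNS server, so the host name would not resolve when the console is opened from a browser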

[vnc]

novncproxy_base_url = http://10.20.1.15:6080/vnc_auto.html

[libvirt]

virt_type = qemu
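The hardware-acceleration check above can be turned into a small decision helper. This is a sketch only: `choose_virt_type` is a hypothetical name, and the guide itself sets `virt_type = qemu` regardless of the result.

```shell
#!/bin/sh
# choose_virt_type is a hypothetical helper: given the number of matching
# CPU flag lines, it prints the libvirt virt_type value to use.
choose_virt_type() {
    if [ "$1" -gt 0 ]; then
        echo kvm     # vmx or svm present: hardware acceleration available
    else
        echo qemu    # no acceleration: fall back to plain QEMU emulation
    fi
}

# grep -c prints 0 and exits non-zero when nothing matches, hence "|| true";
# an empty result (for example, no /proc/cpuinfo) is treated as 0.
count="$(grep -Ec '(vmx|svm)' /proc/cpuinfo 2>/dev/null || true)"
count="${count:-0}"
echo "virt_type = $(choose_virt_type "$count")"
```

The printed line is what would go under `[libvirt]` in /etc/nova/nova.conf on that node.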

Start the services

$ systemctl enable libvirtd.service openstack-nova-compute.service --now

Operations on the controller node

# Check whether the compute node has registered

$ openstack compute service list

+----+----------------+--------------------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+----------------+--------------------------+----------+---------+-------+----------------------------+

| 1 | nova-conductor | openstack01.guoyutec.com | internal | enabled | up | 2024-07-25T06:31:04.000000 |

| 2 | nova-scheduler | openstack01.guoyutec.com | internal | enabled | up | 2024-07-25T06:31:04.000000 |

| 9 | nova-compute | openstack01.guoyutec.com | nova | enabled | up | None |

+----+----------------+--------------------------+----------+---------+-------+----------------------------+

# Discover the compute hosts manually

$ su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': 6d74a539-a8a5-4dc8-90b7-356dd1db1c24

Checking host mapping for compute host 'openstack01.guoyutec.com': bc081a82-5c5e-4ccb-974b-076bd94de608

Creating host mapping for compute host 'openstack01.guoyutec.com': bc081a82-5c5e-4ccb-974b-076bd94de608

Found 1 unmapped computes in cell: 6d74a539-a8a5-4dc8-90b7-356dd1db1c24

$ openstack compute service list

+----+----------------+--------------------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+----------------+--------------------------+----------+---------+-------+----------------------------+

| 1 | nova-conductor | openstack01.guoyutec.com | internal | enabled | up | 2024-07-25T06:32:44.000000 |

| 2 | nova-scheduler | openstack01.guoyutec.com | internal | enabled | up | 2024-07-25T06:32:44.000000 |

| 9 | nova-compute | openstack01.guoyutec.com | nova | enabled | up | 2024-07-25T06:32:42.000000 |

+----+----------------+--------------------------+----------+---------+-------+----------------------------+

$ openstack hypervisor list

+----+--------------------------+-----------------+------------+-------+

| ID | Hypervisor Hostname | Hypervisor Type | Host IP | State |

+----+--------------------------+-----------------+------------+-------+

| 1 | openstack01.guoyutec.com | QEMU | 10.20.1.15 | up |

+----+--------------------------+-----------------+------------+-------+

$ openstack compute service list

+----+----------------+--------------------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+----------------+--------------------------+----------+---------+-------+----------------------------+

| 1 | nova-conductor | openstack01.guoyutec.com | internal | enabled | up | 2024-07-25T07:07:13.000000 |

| 2 | nova-scheduler | openstack01.guoyutec.com | internal | enabled | up | 2024-07-25T07:07:14.000000 |

| 9 | nova-compute | openstack01.guoyutec.com | nova | enabled | up | 2024-07-25T07:07:13.000000 |

| 10 | nova-compute | openstack02.guoyutec.com | nova | enabled | up | 2024-07-25T07:07:12.000000 |

+----+----------------+--------------------------+----------+---------+-------+----------------------------+

$ openstack hypervisor list

+----+--------------------------+-----------------+------------+-------+

| ID | Hypervisor Hostname | Hypervisor Type | Host IP | State |

+----+--------------------------+-----------------+------------+-------+

| 1 | openstack01.guoyutec.com | QEMU | 10.20.1.15 | up |

| 2 | openstack02.guoyutec.com | QEMU | 10.20.1.17 | up |

+----+--------------------------+-----------------+------------+-------+

When you add new compute nodes, you must run

**nova-manage cell_v2 discover_hosts** on the controller node to register them. Alternatively, set an appropriate discovery interval in **/etc/nova/nova.conf**:

[scheduler]

discover_hosts_in_cells_interval = 300

Reference: https://docs.openstack.org/nova/train/install/compute-install-rdo.html

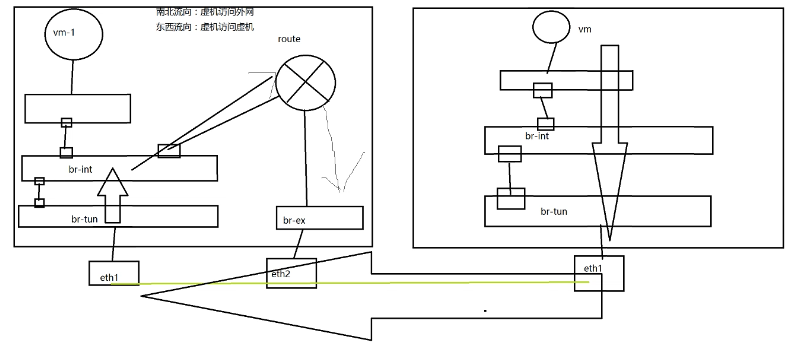

Installing the Neutron networking service

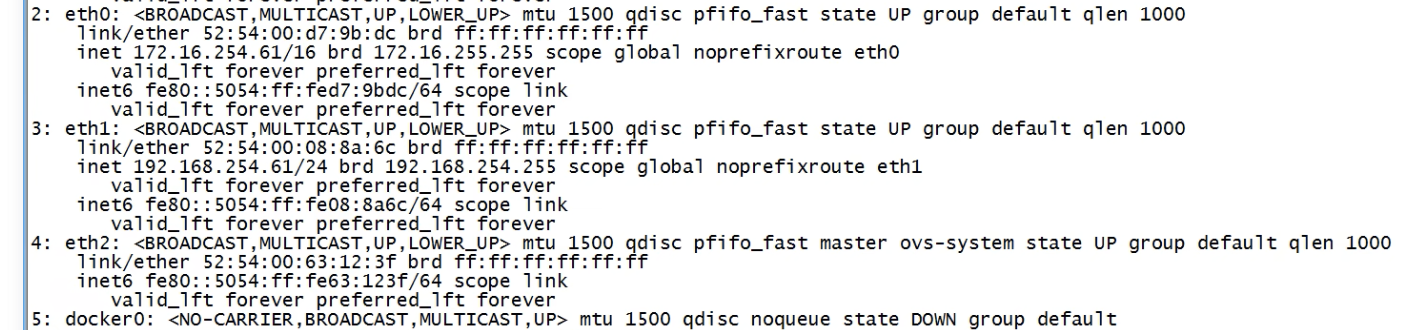

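- The controller node has three NICs (eth0, eth1, eth2); the compute node has two (eth0, eth1).

- The Management and API networks are merged and use eth0.

- The VM network uses eth1 (a NAT network).

- eth2, the controller node's third NIC, connects to the External network.

The three NICs:

The first carries the management network.

The second carries the internal network.

The third reaches the external network and may share a network with the first.

Controller node installation

Create the database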

$ mysql -u root -p123123

MariaDB [(none)]> CREATE DATABASE neutron;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';

# NEUTRON_DBPASS is the password for the database grant; choose your own

Register Neutron in Keystone

username: neutron

password: neutron

# Create the neutron user in the default domain

$ openstack user create --domain default --password=neutron neutron

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 8eed683f79ad45f9b4a81926dfe6fba8 |

| name | neutron |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Grant the admin role to the neutron user in the service project

$ openstack role add --project service --user neutron admin

# Create the service entity

$ openstack service create --name neutron --description "OpenStack Networking" network

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Networking |

| enabled | True |

| id | 254c5263e22b4ab99c14094dfc31b7bd |

| name | neutron |

| type | network |

+-------------+----------------------------------+

# Create the service API endpoints; all three interfaces are required (public, internal, admin)

$ openstack endpoint create --region RegionOne network public http://node1:9696

$ openstack endpoint create --region RegionOne network internal http://node1:9696

$ openstack endpoint create --region RegionOne network admin http://node1:9696

$ openstack endpoint list |grep network

| 7b1e78d9e4084f308e5b2d1c2eb9b02c | RegionOne | neutron | network | True | admin | http://node1:9696 |

| a1ee4d1b4e104f4f95f0d964c4a278e4 | RegionOne | neutron | network | True | internal | http://node1:9696 |

| d2f374a9e47545bb83fa89c1b429556e | RegionOne | neutron | network | True | public | http://node1:9696 |

Install the Linux bridge components

$ yum install openstack-neutron openstack-neutron-ml2 \

openstack-neutron-linuxbridge ebtables -y

Edit /etc/neutron/neutron.conf

[database]

connection = mysql+pymysql://neutron:NEUTRON_DBPASS@node1/neutron

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

transport_url = rabbit://openstack:openstack@node1

auth_strategy = keystone

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[keystone_authtoken]

www_authenticate_uri = http://node1:5000

auth_url = http://node1:5000

memcached_servers = node1:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = neutron

[nova]

auth_url = http://node1:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = nova

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

Edit /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

mechanism_drivers = linuxbridge,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

enable_ipset = true

Edit /etc/neutron/plugins/ml2/linuxbridge_agent.ini

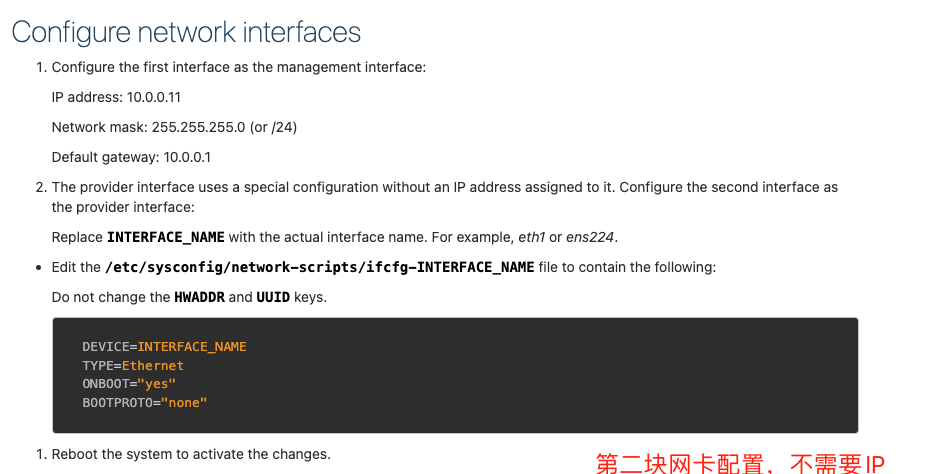

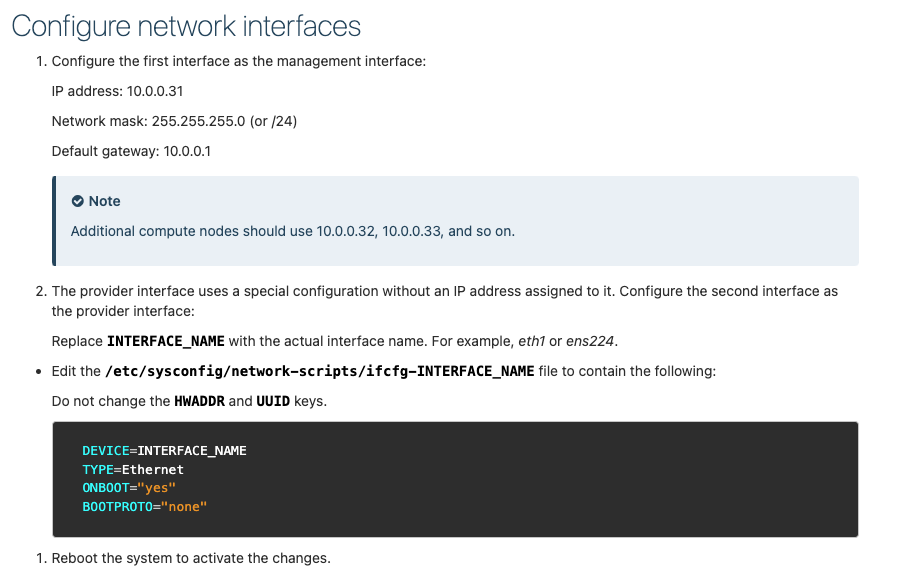

# Replace PROVIDER_INTERFACE_NAME with the name of the second NIC

# Replace OVERLAY_INTERFACE_IP_ADDRESS with the IP address of the first NIC

[linux_bridge]

# physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

physical_interface_mappings = provider:ens224

[vxlan]

enable_vxlan = true

local_ip = 10.20.1.15

l2_population = true

[securitygroup]

# ...

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Edit /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver = linuxbridge

Edit /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

Edit **/etc/neutron/metadata_agent.ini**

[DEFAULT]

nova_metadata_host = node1

metadata_proxy_shared_secret = METADATA_SECRET

Edit /etc/nova/nova.conf

[neutron]

auth_url = http://node1:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = neutron

service_metadata_proxy = true

metadata_proxy_shared_secret = METADATA_SECRET
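METADATA_SECRET is a placeholder, and the same value has to appear in both metadata_agent.ini and nova.conf. One way to produce a random value is sketched below; `gen_secret` is a hypothetical helper, and the 16-byte length is an assumption made here, not a requirement stated by this guide.

```shell
#!/bin/sh
# gen_secret is a hypothetical helper: prints N random bytes as lowercase hex.
gen_secret() {
    nbytes="${1:-16}"
    # od prints the bytes as hex pairs; tr removes the spaces and newlines
    head -c "$nbytes" /dev/urandom | od -An -tx1 | tr -d ' \n'
    echo
}

METADATA_SECRET="$(gen_secret 16)"
echo "metadata_proxy_shared_secret = $METADATA_SECRET"
```

Copy the printed line into both files so the metadata agent and Nova agree on the secret.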

Start the services

$ ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

$ su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \

--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

$ systemctl enable neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service --now

$ systemctl restart neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service

$ systemctl enable neutron-l3-agent.service --now

$ systemctl restart neutron-l3-agent.service

$ openstack network agent list

+--------------------------------------+--------------------+--------------------------+-------------------+-------+-------+---------------------------+

| ID | Agent Type | Host | Availability Zone | Alive | State | Binary |

+--------------------------------------+--------------------+--------------------------+-------------------+-------+-------+---------------------------+

| 1e6eb147-5668-4143-a04a-f8c058f71683 | Metadata agent | openstack01.guoyutec.com | None | :-) | UP | neutron-metadata-agent |

| 5aee84ae-7372-4ffe-b75f-9b3171f1a28c | L3 agent | openstack01.guoyutec.com | nova | :-) | UP | neutron-l3-agent |

| fa0cc65f-f6a9-41c6-9451-fb1760545311 | Linux bridge agent | openstack01.guoyutec.com | None | :-) | UP | neutron-linuxbridge-agent |

| fcf60bf5-6cd0-4b12-ae45-2682b34f0325 | DHCP agent | openstack01.guoyutec.com | nova | :-) | UP | neutron-dhcp-agent |

+--------------------------------------+--------------------+--------------------------+-------------------+-------+-------+---------------------------+

Compute node installation (10.20.1.17)

Install the components

$ yum install openstack-neutron-linuxbridge ebtables ipset -y

Edit **/etc/neutron/neutron.conf**

[DEFAULT]

# ...

transport_url = rabbit://openstack:openstack@node1

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://node1:5000

auth_url = http://node1:5000

memcached_servers = node1:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = neutron

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

Edit **/etc/neutron/plugins/ml2/linuxbridge_agent.ini**

# Replace PROVIDER_INTERFACE_NAME with the name of the second NIC

# Replace OVERLAY_INTERFACE_IP_ADDRESS with the IP address of the first NIC

[linux_bridge]

# physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

physical_interface_mappings = provider:ens224

[vxlan]

enable_vxlan = true

# local_ip = OVERLAY_INTERFACE_IP_ADDRESS

local_ip = 10.20.1.17

l2_population = true

[securitygroup]

# ...

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
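The controller and compute node versions of linuxbridge_agent.ini differ only in `local_ip`, so the fragment can be generated from two parameters. A minimal sketch follows; `gen_linuxbridge_ini` is a hypothetical helper that prints to standard output and leaves writing the file to you.

```shell
#!/bin/sh
# gen_linuxbridge_ini is a hypothetical helper, not a Neutron tool.
# It prints the agent settings used in this guide for one node.
gen_linuxbridge_ini() {
    provider_if="$1"   # NIC mapped to the provider network, e.g. ens224
    local_ip="$2"      # this node's VXLAN overlay endpoint IP
    cat <<EOF
[linux_bridge]
physical_interface_mappings = provider:${provider_if}

[vxlan]
enable_vxlan = true
local_ip = ${local_ip}
l2_population = true

[securitygroup]
enable_security_group = true
firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
EOF
}

# Compute node values from this guide
gen_linuxbridge_ini ens224 10.20.1.17
```

For the controller node, the call would be `gen_linuxbridge_ini ens224 10.20.1.15`.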

Edit /etc/nova/nova.conf

[neutron]

auth_url = http://node1:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = neutron

Start the services

$ systemctl restart openstack-nova-compute.service

$ systemctl enable neutron-linuxbridge-agent.service

$ systemctl start neutron-linuxbridge-agent.service

Verify

$ openstack network agent list

+--------------------------------------+--------------------+--------------------------+-------------------+-------+-------+---------------------------+

| ID | Agent Type | Host | Availability Zone | Alive | State | Binary |

+--------------------------------------+--------------------+--------------------------+-------------------+-------+-------+---------------------------+

| 1e6eb147-5668-4143-a04a-f8c058f71683 | Metadata agent | openstack01.guoyutec.com | None | :-) | UP | neutron-metadata-agent |

| 5aee84ae-7372-4ffe-b75f-9b3171f1a28c | L3 agent | openstack01.guoyutec.com | nova | :-) | UP | neutron-l3-agent |

| ee183ee6-771e-46d1-a066-336758665dd2 | Linux bridge agent | openstack02.guoyutec.com | None | :-) | UP | neutron-linuxbridge-agent |

| fa0cc65f-f6a9-41c6-9451-fb1760545311 | Linux bridge agent | openstack01.guoyutec.com | None | :-) | UP | neutron-linuxbridge-agent |

| fcf60bf5-6cd0-4b12-ae45-2682b34f0325 | DHCP agent | openstack01.guoyutec.com | nova | :-) | UP | neutron-dhcp-agent |

+--------------------------------------+--------------------+--------------------------+-------------------+-------+-------+---------------------------+
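With more than a couple of nodes, scanning the Alive column by eye gets tedious. The sketch below filters the table printed by `openstack network agent list` and prints only the agents that are not alive. `list_dead_agents` is a hypothetical helper name, and it assumes the default table layout shown above, where a live agent is marked `:-)`.

```shell
#!/bin/sh
# Hypothetical helper: read the `openstack network agent list` table on stdin and
# print "<host> <binary>" for every row whose Alive column is not ":-)".
list_dead_agents() {
    awk -F'|' '
        # Data rows have at least 8 pipe-separated fields; skip the header row.
        NF >= 8 && $2 !~ /^ *ID *$/ {
            alive = $6; host = $4; binary = $8
            gsub(/^ +| +$/, "", alive)
            gsub(/^ +| +$/, "", host)
            gsub(/^ +| +$/, "", binary)
            if (alive != ":-)") print host, binary
        }'
}
```

Usage: `openstack network agent list | list_dead_agents`. Empty output means every listed agent reported as alive.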

There are two deployment options; see also [Open vSwitch installation].

Install the Horizon dashboard service

Install and configure

$ yum install openstack-dashboard -y

Edit **/etc/openstack-dashboard/local_settings**

$ vim /etc/openstack-dashboard/local_settings

WEBROOT = '/dashboard'

OPENSTACK_HOST = "node1"

ALLOWED_HOSTS = ['one.example.com', 'two.example.com', '*']  # '*' accepts any host name; restrict this list in production

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

    'default': {

        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

        'LOCATION': 'node1:11211',

    }

}

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_API_VERSIONS = {

    "identity": 3,

    "image": 2,

    "volume": 3,

}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "member"

OPENSTACK_NEUTRON_NETWORK = {

    'enable_auto_allocated_network': False,

    'enable_distributed_router': False,

    'enable_fip_topology_check': True,

    'enable_ha_router': False,

    'enable_ipv6': True,

    # TODO(amotoki): Drop OPENSTACK_NEUTRON_NETWORK completely from here.

    # enable_quotas has the different default value here.

    'enable_quotas': True,

    'enable_rbac_policy': True,

    'enable_router': True,

    'default_dns_nameservers': [],

    'supported_provider_types': ['*'],

    'segmentation_id_range': {},

    'extra_provider_types': {},

    'supported_vnic_types': ['*'],

    'physical_networks': [],

}

TIME_ZONE = "TIME_ZONE"  # replace TIME_ZONE with a time zone identifier, for example "Asia/Shanghai"

Edit **/etc/httpd/conf.d/openstack-dashboard.conf**

$ vim /etc/httpd/conf.d/openstack-dashboard.conf

# Add the following line (Apache does not allow a comment on the same line as a directive)

WSGIApplicationGroup %{GLOBAL}

Start the services

$ systemctl restart httpd.service memcached.service

Install the Cinder block storage service

Controller node installation

Create the database

CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'CINDER_DBPASS';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'CINDER_DBPASS';

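# CINDER_DBPASS is the database password for the cinder user; choose your own

Create the Cinder credentials in Keystone

username: cinder

password: cinder

# Create the cinder user in the default domain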

$ openstack user create --domain default --password=cinder cinder

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 63e872082f8d4b298b9ec78628da6a73 |

| name | cinder |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Grant the admin role to the cinder user in the service project

$ openstack role add --project service --user cinder admin

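# Create the service entities. Newer OpenStack releases add a v3 Block Storage API, so two service entities are needed here (volumev2 and volumev3)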

$ openstack service create --name cinderv2 \

> --description "OpenStack Block Storage" volumev2

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Block Storage |

| enabled | True |

| id | d578d3cfdea2455bb01f8fea12bf44e9 |

| name | cinderv2 |

| type | volumev2 |

+-------------+----------------------------------+

$ openstack service create --name cinderv3 \

> --description "OpenStack Block Storage" volumev3

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Block Storage |

| enabled | True |

| id | 3719eb12818b460dbebb45956f54e268 |

| name | cinderv3 |

| type | volumev3 |

+-------------+----------------------------------+

# Create the API endpoints; each service needs all three interfaces (public, internal, admin)

$ openstack endpoint create --region RegionOne volumev2 public http://node1:8776/v2/%\(project_id\)s

$ openstack endpoint create --region RegionOne volumev2 internal http://node1:8776/v2/%\(project_id\)s

$ openstack endpoint create --region RegionOne volumev2 admin http://node1:8776/v2/%\(project_id\)s

$ openstack endpoint create --region RegionOne volumev3 public http://node1:8776/v3/%\(project_id\)s

$ openstack endpoint create --region RegionOne volumev3 internal http://node1:8776/v3/%\(project_id\)s

$ openstack endpoint create --region RegionOne volumev3 admin http://node1:8776/v3/%\(project_id\)s

$ openstack endpoint list |grep volume

| 0bc833e1e41a4848a2d2653ce311ac1a | RegionOne | cinderv3 | volumev3 | True | internal | http://node1:8776/v3/%(project_id)s |

| 93ab2fe9e4fc4b2badb60294006f1419 | RegionOne | cinderv2 | volumev2 | True | internal | http://node1:8776/v2/%(project_id)s |

| 9ca65dc78a16420a83ee2eefd70cc7b4 | RegionOne | cinderv2 | volumev2 | True | admin | http://node1:8776/v2/%(project_id)s |

| ace72ac21ebc43808bda04acc15eceb8 | RegionOne | cinderv3 | volumev3 | True | admin | http://node1:8776/v3/%(project_id)s |

| b41ff64b96b54a9699ec94ec479d0169 | RegionOne | cinderv2 | volumev2 | True | public | http://node1:8776/v2/%(project_id)s |

| d2956cec80a14ffe82134b72ad173c62 | RegionOne | cinderv3 | volumev3 | True | public | http://node1:8776/v3/%(project_id)s |
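The six endpoint commands above differ only in API version and interface, so they can be generated by a loop. The sketch below only prints the commands so they can be reviewed before being run; `print_cinder_endpoint_cmds` and the `CINDER_HOST` variable are hypothetical names introduced for illustration, with the host defaulting to `node1` as in this guide.

```shell
#!/bin/sh
# Hypothetical helper: print the six `openstack endpoint create` commands
# for the Cinder v2 and v3 services (two versions x three interfaces).
# CINDER_HOST is an assumed variable name; it defaults to node1.
print_cinder_endpoint_cmds() {
    host="${CINDER_HOST:-node1}"
    for ver in v2 v3; do
        for iface in public internal admin; do
            # %% yields a literal % so the URL keeps the %(project_id)s template.
            printf "openstack endpoint create --region RegionOne volume%s %s 'http://%s:8776/%s/%%(project_id)s'\n" \
                "$ver" "$iface" "$host" "$ver"
        done
    done
}

print_cinder_endpoint_cmds
```

After checking the printed commands, they can be executed with `print_cinder_endpoint_cmds | sh` in a shell where the admin credentials are already loaded.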

Install Cinder

$ yum install openstack-cinder

Edit **/etc/cinder/cinder.conf**

$ vim /etc/cinder/cinder.conf

[database]

connection = mysql+pymysql://cinder:CINDER_DBPASS@node1/cinder

[DEFAULT]

transport_url = rabbit://openstack:openstack@node1

my_ip = 10.20.1.15

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://node1:5000

auth_url = http://node1:5000

memcached_servers = node1:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

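# Password assigned to the cinder user in Keystone (keep comments on their own line; an inline comment would become part of the value)

password = cinder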

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

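Initialize the database

$ su -s /bin/sh -c "cinder-manage db sync" cinder

Edit **/etc/nova/nova.conf** on the controller node (where nova-api runs) so that Compute uses Block Storage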

[cinder]

os_region_name = RegionOne

Start the services

$ systemctl restart openstack-nova-api.service # Restart the Compute API service

$ systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service --now # Enable and start Cinder

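Storage node installation

A separate disk is required; it is used as the LVM physical volume for Cinder.

# Most common distributions ship with LVM preinstalled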

$ yum install lvm2 device-mapper-persistent-data -y

$ systemctl enable lvm2-lvmetad.service --now

$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sdb 8:16 0 16G 0 disk

sr0 11:0 1 1024M 0 rom

sda 8:0 0 100G 0 disk

├─sda2 8:2 0 200M 0 part /boot

├─sda3 8:3 0 99.8G 0 part

│ └─centos-root 253:0 0 99.8G 0 lvm /

└─sda1 8:1 0 2M 0 part

$ pvcreate /dev/sdb

Physical volume "/dev/sdb" successfully created.

$ vgcreate cinder-volumes /dev/sdb

Volume group "cinder-volumes" successfully created

Edit **/etc/lvm/lvm.conf**

$ vim /etc/lvm/lvm.conf

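devices {

    # Accept only the disk that backs cinder-volumes and reject all other devices.

    # If the operating system disk also uses LVM (as centos-root on sda does in the lsblk output above), accept it as well, e.g. "a/sda/".

    filter = [ "a/sdb/", "r/.*/"]

}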
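The filter is an ordered list: each `a/<device>/` entry accepts a device and the final `r/.*/` rejects everything else. When several disks must be accepted (for example the Cinder disk plus an operating system disk that also uses LVM), the line can be generated rather than typed by hand. This is a minimal sketch; `lvm_filter_line` is a hypothetical helper name and the device names are only examples taken from the lsblk output above.

```shell
#!/bin/sh
# Hypothetical helper: build an lvm.conf filter line that accepts the given
# devices (in order) and rejects every other device.
lvm_filter_line() {
    entries=""
    for dev in "$@"; do
        entries="${entries}\"a/${dev}/\", "
    done
    printf 'filter = [ %s"r/.*/" ]\n' "$entries"
}

# Example: accept the OS disk (sda) and the Cinder disk (sdb).
lvm_filter_line sda sdb
```

The printed line is meant to be pasted into the `devices { }` section of /etc/lvm/lvm.conf after review.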

Install components

$ yum install openstack-cinder targetcli python-keystone -y

Edit **/etc/cinder/cinder.conf**

$ vim /etc/cinder/cinder.conf

[database]

connection = mysql+pymysql://cinder:CINDER_DBPASS@node1/cinder

[DEFAULT]

transport_url = rabbit://openstack:openstack@node1

auth_strategy = keystone

my_ip = 10.20.1.17

enabled_backends = lvm

glance_api_servers = http://node1:9292

[keystone_authtoken]

www_authenticate_uri = http://node1:5000

auth_url = http://node1:5000

memcached_servers = node1:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = cinder

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

# Must match the name of the LVM volume group created above

volume_group = cinder-volumes

target_protocol = iscsi

target_helper = lioadm

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

Start the services

$ systemctl enable openstack-cinder-volume.service target.service --now

$ openstack volume service list

+------------------+------------------------------+------+---------+-------+----------------------------+

| Binary | Host | Zone | Status | State | Updated At |

+------------------+------------------------------+------+---------+-------+----------------------------+

| cinder-scheduler | openstack01.guoyutec.com | nova | enabled | up | 2024-07-30T07:24:25.000000 |

| cinder-volume | openstack02.guoyutec.com@lvm | nova | enabled | up | 2024-07-30T07:24:20.000000 |

+------------------+------------------------------+------+---------+-------+----------------------------+
