A Summary of HAProxy Scheduling Algorithms: How They Work and Where to Use Them

HAProxy Scheduling Algorithms

HAProxy sets the scheduling algorithm for backend servers with the balance keyword, which can be configured in the defaults, listen, or backend sections.

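HAProxy's scheduling algorithms are divided into static and dynamic algorithms, but some algorithms can switch between static and dynamic depending on their options.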

Official documentation: http://cbonte.github.io/haproxy-dconv/2.1/configuration.html#4-balance

1 Static Algorithms

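Static algorithms dispatch requests fairly according to predefined rules. They ignore the backend servers' current load, connection count, and response time, and their weights cannot be changed freely at runtime: only 0 and 1 are accepted, no other values. Any other weight change takes effect only after HAProxy is restarted.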

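socat can still start and stop a real server at runtime by setting its weight to 0 (off) or 1 (on). It does this through the socket without modifying the configuration file, so no restart is needed. For the same reason, these changes are lost when the service is reloaded or restarted, after which HAProxy again follows the configuration file.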

1.1 The socat tool

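Server weights and other states can be adjusted at runtime with socat. Socat is a versatile networking tool for Linux whose name stands for "Socket CAT"; it is used here to control a socket and is essentially an enhanced netcat. Its main feature is establishing a bidirectional channel between two data streams, and it supports many protocols and connection types, such as IP, TCP, UDP, IPv6, and socket files.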

Example: adjusting server weights at runtime with socat

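Here the socket is a bridge between a client and a server on the same host, which avoids connecting them over the network. Its location is defined in the main configuration file, here /var/lib/haproxy/haproxy.sock. Changes made through it with socat take effect without restarting the service.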

socat communicates with HAProxy through this socket.
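Every runtime command in this article follows the same pattern, echo "<command>" | socat stdio <socket>. A minimal sketch of that pattern as a reusable shell function follows; the function name hap_cli and the HAPROXY_SOCK variable are hypothetical, and the default path is simply the socket configured in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: send one command to HAProxy's runtime API (the stats socket).
# HAPROXY_SOCK is an assumed variable; it defaults to this article's socket path.
hap_cli() {
    local sock="${HAPROXY_SOCK:-/var/lib/haproxy/haproxy.sock}"
    # socat joins its stdio to the UNIX socket: the command goes in, HAProxy's reply comes out
    printf '%s\n' "$*" | socat stdio "UNIX-CONNECT:${sock}"
}
```

With it, hap_cli show backend does the same as echo "show backend" | socat stdio /var/lib/haproxy/haproxy.sock, and HAPROXY_SOCK=/var/lib/haproxy/haproxy.sock2 hap_cli get weight m44_webservers1/rs1 targets the second process's socket in the multi-process setup used later.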

1. Install the package

[root@haproxy:/var/lib/haproxy]#

yum -y install socat

[root@haproxy:/var/lib/haproxy]#

echo "help" | socat stdio /var/lib/haproxy/haproxy.sock

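#The pipe passes "help" to socat, which writes it into the socket file; in effect, "help" is typed into this socket and HAProxy answers with its help text. This is the help for this socket only: different sockets have different help and usage, and not every socket supports every command.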

The following commands are valid at this level:

abort ssl cert <certfile> : abort a transaction for a certificate file

add acl [@<ver>] <acl> <pattern> : add an acl entry

add map [@<ver>] <map> <key> <val> : add a map entry (payload supported instead of key/val)

add ssl crt-list <list> <cert> [opts]* : add to crt-list file <list> a line <cert> or a payload

clear acl [@<ver>] <acl> : clear the contents of this acl

clear counters [all] : clear max statistics counters (or all counters)

clear map [@<ver>] <map> : clear the contents of this map

clear table <table> [<filter>]* : remove an entry from a table (filter: data/key)

commit acl @<ver> <acl> : commit the ACL at this version

commit map @<ver> <map> : commit the map at this version

commit ssl cert <certfile> : commit a certificate file

del acl <acl> [<key>|#<ref>] : delete acl entries matching <key>

del map <map> [<key>|#<ref>] : delete map entries matching <key>

del ssl cert <certfile> : delete an unused certificate file

del ssl crt-list <list> <cert[:line]> : delete a line <cert> from crt-list file <list>

disable agent : disable agent checks

disable dynamic-cookie backend <bk> : disable dynamic cookies on a specific backend

disable frontend <frontend> : temporarily disable specific frontend

disable health : disable health checks

disable server (DEPRECATED) : disable a server for maintenance (use 'set server' instead)

enable agent : enable agent checks

enable dynamic-cookie backend <bk> : enable dynamic cookies on a specific backend

enable frontend <frontend> : re-enable specific frontend

enable health : enable health checks

enable server (DEPRECATED) : enable a disabled server (use 'set server' instead)

get acl <acl> <value> : report the patterns matching a sample for an ACL

get map <acl> <value> : report the keys and values matching a sample for a map

get var <name> : retrieve contents of a process-wide variable

get weight <bk>/<srv> : report a server's current weight

new ssl cert <certfile> : create a new certificate file to be used in a crt-list or a directory

operator : lower the level of the current CLI session to operator

prepare acl <acl> : prepare a new version for atomic ACL replacement

prepare map <acl> : prepare a new version for atomic map replacement

set dynamic-cookie-key backend <bk> <k> : change a backend secret key for dynamic cookies

set map <map> [<key>|#<ref>] <value> : modify a map entry

set maxconn frontend <frontend> <value> : change a frontend's maxconn setting

set maxconn global <value> : change the per-process maxconn setting

set maxconn server <bk>/<srv> : change a server's maxconn setting

set profiling <what> {auto|on|off} : enable/disable resource profiling (tasks,memory)

set rate-limit <setting> <value> : change a rate limiting value

set server <bk>/<srv> [opts] : change a server's state, weight, address or ssl

set severity-output [none|number|string]: set presence of severity level in feedback information

set ssl cert <certfile> <payload> : replace a certificate file

set ssl ocsp-response <resp|payload> : update a certificate's OCSP Response from a base64-encode DER

set ssl tls-key [id|file] <key> : set the next TLS key for the <id> or <file> listener to <key>

set table <table> key <k> [data.* <v>]* : update or create a table entry's data

set timeout [cli] <delay> : change a timeout setting

set weight <bk>/<srv> (DEPRECATED) : change a server's weight (use 'set server' instead)

show acl [@<ver>] <acl>] : report available acls or dump an acl's contents

show activity : show per-thread activity stats (for support/developers)

show backend : list backends in the current running config

show cache : show cache status

show cli level : display the level of the current CLI session

show cli sockets : dump list of cli sockets

show env [var] : dump environment variables known to the process

show errors [<px>] [request|response] : report last request and/or response errors for each proxy

show events [<sink>] [-w] [-n] : show event sink state

show fd [num] : dump list of file descriptors in use or a specific one

show info [desc|json|typed|float]* : report information about the running process

show libs : show loaded object files and libraries

show map [@ver] [map] : report available maps or dump a map's contents

show peers [dict|-] [section] : dump some information about all the peers or this peers section

show pools : report information about the memory pools usage

show profiling [<what>|<#lines>|byaddr]*: show profiling state (all,status,tasks,memory)

show resolvers [id] : dumps counters from all resolvers section and associated name servers

show schema json : report schema used for stats

show servers conn [<backend>] : dump server connections status (all or for a single backend)

show servers state [<backend>] : dump volatile server information (all or for a single backend)

show sess [id] : report the list of current sessions or dump this exact session

show ssl cert [<certfile>] : display the SSL certificates used in memory, or the details of a file

show ssl crt-list [-n] [<list>] : show the list of crt-lists or the content of a crt-list file <list>

show startup-logs : report logs emitted during HAProxy startup

show stat [desc|json|no-maint|typed|up]*: report counters for each proxy and server

show table <table> [<filter>]* : report table usage stats or dump this table's contents (filter: data/key)

show tasks : show running tasks

show threads : show some threads debugging information

show tls-keys [id|*] : show tls keys references or dump tls ticket keys when id specified

show trace [<module>] : show live tracing state

show version : show version of the current process

shutdown frontend <frontend> : stop a specific frontend

shutdown session [id] : kill a specific session

shutdown sessions server <bk>/<srv> : kill sessions on a server

trace [<module>|0] [cmd [args...]] : manage live tracing (empty to list, 0 to stop all)

user : lower the level of the current CLI session to user

help [<command>] : list matching or all commands

prompt : toggle interactive mode with prompt

quit : disconnect

2. Inspect the backends

[root@haproxy:/var/lib/haproxy]#

echo "show backend" | socat stdio /var/lib/haproxy/haproxy.sock

# name

stats

m44_webservers1

m44_webservers2

[root@haproxy:/var/lib/haproxy]#

echo "show info" | socat stdio /var/lib/haproxy/haproxy.sock

Name: HAProxy

Version: 2.4.17-9f97155

Release_date: 2022/05/13

Nbthread: 1

Nbproc: 2

Process_num: 1

Pid: 11324

Uptime: 0d 2h32m43s

Uptime_sec: 9163

Memmax_MB: 0

PoolAlloc_MB: 0

PoolUsed_MB: 0

PoolFailed: 0

Ulimit-n: 200030

Maxsock: 200030

Maxconn: 100000

Hard_maxconn: 100000

CurrConns: 0

CumConns: 9156

CumReq: 8

MaxSslConns: 0

CurrSslConns: 0

CumSslConns: 0

Maxpipes: 0

PipesUsed: 0

PipesFree: 0

ConnRate: 0

ConnRateLimit: 0

MaxConnRate: 0

SessRate: 0

SessRateLimit: 0

MaxSessRate: 0

SslRate: 0

SslRateLimit: 0

MaxSslRate: 0

SslFrontendKeyRate: 0

SslFrontendMaxKeyRate: 0

SslFrontendSessionReuse_pct: 0

SslBackendKeyRate: 0

SslBackendMaxKeyRate: 0

SslCacheLookups: 0

SslCacheMisses: 0

CompressBpsIn: 0

CompressBpsOut: 0

CompressBpsRateLim: 0

ZlibMemUsage: 0

MaxZlibMemUsage: 0

Tasks: 13

Run_queue: 0

Idle_pct: 100

node: haproxy.magedu.org

Stopping: 0

Jobs: 6

Unstoppable Jobs: 2

Listeners: 5

ActivePeers: 0

ConnectedPeers: 0

DroppedLogs: 0

BusyPolling: 0

FailedResolutions: 0

TotalBytesOut: 45376

TotalSplicdedBytesOut: 0

BytesOutRate: 0

DebugCommandsIssued: 0

CumRecvLogs: 0

Build info: 2.4.17-9f97155

Memmax_bytes: 0

PoolAlloc_bytes: 54560

PoolUsed_bytes: 54560

Start_time_sec: 1657332508

Tainted: 0

[root@haproxy:/var/lib/haproxy]#

echo "show servers state" | socat stdio /var/lib/haproxy/haproxy.sock

1

# be_id be_name srv_id srv_name srv_addr srv_op_state srv_admin_state srv_uweight srv_iweight srv_time_since_last_change srv_check_status srv_check_result srv_check_health srv_check_state srv_agent_state bk_f_forced_id srv_f_forced_id srv_fqdn srv_port srvrecord srv_use_ssl srv_check_port srv_check_addr srv_agent_addr srv_agent_port

4 m44_webservers1 1 rs1 10.0.0.18 2 0 1 1 9233 6 3 4 6 0 0 0 - 80 - 0 0 - - 0

4 m44_webservers1 2 rs2 10.0.0.28 2 0 1 1 9233 6 3 4 6 0 0 0 - 80 - 0 0 - - 0

4 m44_webservers1 3 local 127.0.0.1 2 0 1 1 9233 1 0 2 0 0 0 0 - 80 - 0 0 - - 0

5 m44_webservers2 1 rs2 10.0.0.28 2 0 1 1 9233 1 0 2 0 0 0 0 - 80 - 0 0 - - 0

#Query the weights of the servers in a backend; no weight is configured, so the default is 1

[root@haproxy:/var/lib/haproxy]#

echo "get weight m44_webservers1/rs1" | socat stdio /var/lib/haproxy/haproxy.sock

1 (initial 1)

[root@haproxy:/var/lib/haproxy]#

echo "get weight m44_webservers1/rs2" | socat stdio /var/lib/haproxy/haproxy.sock

1 (initial 1)

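#Change the weight. Note that this only works reliably with a single process: with several processes, a command sent through a shared socket may reach any of them, so the results differ from call to call unless every process is set to the same value. With multiple processes, bind one socket file to each process to get a one-to-one mapping.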

[root@haproxy:/var/lib/haproxy]#

echo "set weight m44_webservers1/rs1 3" | socat stdio /var/lib/haproxy/haproxy.sock

[root@haproxy:/var/lib/haproxy]#

echo "get weight m44_webservers1/rs1" | socat stdio /var/lib/haproxy/haproxy.sock

3 (initial 1)

[root@haproxy:/var/lib/haproxy]#

echo "get weight m44_webservers1/rs1" | socat stdio /var/lib/haproxy/haproxy.sock

0 (initial 1)

#Enable two sockets, each bound to one process, so that each process can be addressed individually

[root@haproxy:/var/lib/haproxy]#

vim /etc/haproxy/haproxy.cfg

global

maxconn 100000

chroot /apps/haproxy

#stats socket /var/lib/haproxy/haproxy.sock mode 600 level admin

stats socket /var/lib/haproxy/haproxy.sock1 mode 600 level admin process 1

stats socket /var/lib/haproxy/haproxy.sock2 mode 600 level admin process 2

[root@haproxy:/var/lib/haproxy]# #Set the weight to 0 in process 1 through its socket

echo "set weight m44_webservers1/rs1 0" | socat stdio /var/lib/haproxy/haproxy.sock1

[root@haproxy:/var/lib/haproxy]#

echo "get weight m44_webservers1/rs1" | socat stdio /var/lib/haproxy/haproxy.sock1

0 (initial 1)

[root@haproxy:/var/lib/haproxy]#

echo "get weight m44_webservers1/rs1" | socat stdio /var/lib/haproxy/haproxy.sock2

1 (initial 1)

[root@haproxy:/var/lib/haproxy]# #Set the weight to 0 in process 2 as well through its socket

echo "set weight m44_webservers1/rs1 0" | socat stdio /var/lib/haproxy/haproxy.sock2

[root@haproxy:/var/lib/haproxy]#

echo "get weight m44_webservers1/rs1" | socat stdio /var/lib/haproxy/haproxy.sock2

0 (initial 1)

[root@client:~]#

curl 10.0.0.100

10.0.0.28

[root@client:~]#

curl 10.0.0.100

10.0.0.28

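#With the weight set to 0 in both processes, the server is taken offline gracefully. Unlike the interactive CLI, one-shot commands like these are easy to put in scripts. All of these changes are temporary and are lost when the service restarts.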

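#Disable a backend server. Again this only applies to one process; with multiple processes, bind one socket per process and run the command on each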

[root@haproxy:/var/lib/haproxy]#

echo "disable server m44_webservers1/rs2" | socat stdio /var/lib/haproxy/haproxy.sock1

[root@haproxy:/var/lib/haproxy]#

echo "disable server m44_webservers1/rs2" | socat stdio /var/lib/haproxy/haproxy.sock2

[root@client:~]#

curl 10.0.0.100

SorryServer

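#Of the two backend servers, one has been drained gracefully (weight 0) and the other disabled, so requests go straight to the sorry server page.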

#Enable it again

[root@haproxy:/var/lib/haproxy]#

echo "enable server m44_webservers1/rs2" | socat stdio /var/lib/haproxy/haproxy.sock2

[root@haproxy:/var/lib/haproxy]#

echo "enable server m44_webservers1/rs2" | socat stdio /var/lib/haproxy/haproxy.sock1

1

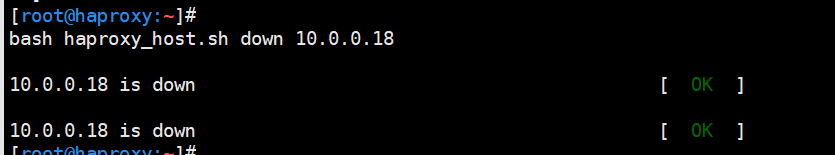

Example: script for bringing backend servers online and offline

[root@centos7 ~]#cat haproyx_host_up_down.sh

. /etc/init.d/functions #Source the function library so that its action function can be used below to report success or failure

case $1 in

up)

echo "set weight magedu-m42-web-80/$2 1" | socat stdio /var/lib/haproxy/haproxy.sock

[ $? -eq 0 ] && action "$2 is up"

;;

down)

echo "set weight magedu-m42-web-80/$2 0" | socat stdio /var/lib/haproxy/haproxy.sock

[ $? -eq 0 ] && action "$2 is down"

;;

*)

echo "Usage: $(basename "$0") up|down SERVER"

;;

esac

#$0 is the script name, $1 is the first argument (up|down), and $2 is the backend server name
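One weakness of the script above is that [ $? -eq 0 ] only tests socat's exit status, and socat exits 0 even when HAProxy rejects the command (for example with "Backend is using a static LB algorithm ..."). Below is a hedged sketch of a variant that inspects HAProxy's reply instead. It assumes that an accepted weight change produces an empty reply, as in the sessions in this article, and it uses set server <bk>/<srv> weight, which the help output above lists as the replacement for the deprecated set weight. The variable names BACKEND and SOCK_GLOB are illustrative, not part of the original script.

```shell
#!/usr/bin/env bash
# Hypothetical variant of the up/down script above, not the author's original.
# Assumptions: backend m44_webservers1, one socket per process matching haproxy.sock*.
BACKEND="${BACKEND:-m44_webservers1}"
SOCK_GLOB="${SOCK_GLOB:-/var/lib/haproxy/haproxy.sock*}"

# set_srv_weight SERVER WEIGHT: apply the weight through every matching socket
set_srv_weight() {
    local srv=$1 weight=$2 sock reply rc=0
    for sock in $SOCK_GLOB; do          # unquoted on purpose so the glob expands
        # "set server <bk>/<srv> weight" replaces the deprecated "set weight"
        reply=$(printf 'set server %s/%s weight %s\n' "$BACKEND" "$srv" "$weight" | socat stdio "$sock")
        # socat exits 0 even if HAProxy rejects the command, so check the reply text:
        # an empty reply is taken to mean the command was accepted
        if [ -n "$reply" ]; then
            echo "$sock: $reply" >&2
            rc=1
        fi
    done
    return "$rc"
}

case ${1:-} in
    up)   set_srv_weight "$2" 1 && echo "$2 is up" ;;
    down) set_srv_weight "$2" 0 && echo "$2 is down" ;;
    *)    echo "Usage: $(basename "$0") up|down SERVER" >&2 ;;
esac
```

It is called the same way as the original, for example with down 10.0.0.18 as arguments, and it prints HAProxy's error message instead of a false success when the backend uses a static algorithm.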

My own experiment: bringing backend servers online and offline with socat

For easier management, each backend server in the backend section is named after its own IP, which makes it obvious which host is which.

1. The modified configuration

[root@haproxy:/var/lib/haproxy]#

vim /etc/haproxy/conf.d/test.cfg

frontend m44_web_80

bind 10.0.0.100:80

use_backend m44_webservers1

backend m44_webservers1

server 10.0.0.18 10.0.0.18:80 check

server 10.0.0.28 10.0.0.28:80 check

server local 127.0.0.1:80 backup

backend m44_webservers2

server 10.0.0.28 10.0.0.28:80

[root@haproxy:/var/lib/haproxy]#

systemctl reload haproxy

Edit the script: this host has two sockets configured, so a loop sends the command to each of them.

[root@haproxy:~]#

vim haproxy_host.sh

. /etc/init.d/functions

for sock in /var/lib/haproxy/haproxy.sock*;do

case $1 in

up)

echo "set weight m44_webservers1/$2 1" | socat stdio $sock

[ $? -eq 0 ] && action "$2 is up"

;;

down)

echo "set weight m44_webservers1/$2 0" | socat stdio $sock

[ $? -eq 0 ] && action "$2 is down"

;;

*)

echo "Usage: `basename $0` up|down IP"

;;

esac

done

[root@haproxy:~]# #Check the syntax

bash -n haproxy_host.sh

[root@haproxy:~]#

bash haproxy_host.sh down 10.0.0.18

10.0.0.18 is down [ OK ]

10.0.0.18 is down [ OK ]

#10.0.0.18 is now offline

[root@client:~]#

curl 10.0.0.100

10.0.0.28

[root@client:~]#

curl 10.0.0.100

10.0.0.28

[root@client:~]#

curl 10.0.0.100

10.0.0.28

1.2 static-rr

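static-rr: weighted round-robin scheduling. It does not support changing weights at runtime with socat (only 0 and 1 are accepted, i.e. online or offline) and does not support slow start for backend servers. There is no limit on the number of backend servers. It is equivalent to wrr in LVS.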
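The effect of weights on the rotation can be illustrated with a small simulation. This is a sketch of smooth weighted round-robin, the technique nginx uses, chosen here only to show weight-proportional rotation; HAProxy's own static-rr and roundrobin code is organized differently, so the exact order of servers may differ. The names and weights mirror web1 (weight 1) and web2 (weight 2) from the configuration example below.

```shell
#!/usr/bin/env bash
# Smooth weighted round-robin (the technique nginx uses), shown only to illustrate
# weight-proportional rotation; it is NOT HAProxy's internal static-rr code.
# wrr_sequence N name:weight... prints the server chosen for each of N requests.
wrr_sequence() {
    local n=$1; shift
    local -a names=() weights=() current=()
    local s i r best total=0
    for s in "$@"; do
        names+=("${s%:*}")
        weights+=("${s##*:}")
        current+=(0)
        total=$(( total + ${s##*:} ))
    done
    for (( r = 0; r < n; r++ )); do
        best=0
        for i in "${!names[@]}"; do
            current[i]=$(( current[i] + weights[i] ))   # every server gains its weight
            if (( current[i] > current[best] )); then best=$i; fi
        done
        current[best]=$(( current[best] - total ))      # the winner pays back the total
        echo "${names[best]}"
    done
}
```

wrr_sequence 6 web1:1 web2:2 prints web2, web1, web2, web2, web1, web2 (one per line): web2 receives twice as many requests as web1, and the two are interleaved rather than sent in bursts.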

balance can only be used in the defaults, listen, and backend sections, not in frontend.

listen web_host

bind 10.0.0.7:80,:8801-8810,10.0.0.7:9001-9010

mode http

log global

balance static-rr #采用static-rr算法

server web1 10.0.0.17:80 weight 1 check inter 3000 fall 2 rise 5

server web2 10.0.0.27:80 weight 2 check inter 3000 fall 2 rise 5

My own experiment

1. Configure the static-rr algorithm

[root@haproxy:~]#

vim /etc/haproxy/conf.d/test.cfg

frontend m44_web_80

bind 10.0.0.100:80

use_backend m44_webservers1

backend m44_webservers1

balance static-rr

server 10.0.0.18 10.0.0.18:80 check

server 10.0.0.28 10.0.0.28:80 check

server local 127.0.0.1:80 backup

backend m44_webservers2

server 10.0.0.28 10.0.0.28:80

[root@haproxy:~]#

systemctl reload haproxy

#Test

[root@client:~]#

curl 10.0.0.100

10.0.0.28

[root@client:~]#

curl 10.0.0.100

10.0.0.18

[root@haproxy:~]#

echo "set weight m44_webservers1/10.0.0.18 3" | socat stdio /var/lib/haproxy/haproxy.sock2

Backend is using a static LB algorithm and only accepts weights '0%' and '100%'.

#With this algorithm, socat cannot set an arbitrary weight; only 0 or 1 (offline or online) is accepted.

[root@haproxy:~]#

echo "set weight m44_webservers1/10.0.0.18 0" | socat stdio /var/lib/haproxy/haproxy.sock2

[root@haproxy:~]#

echo "set weight m44_webservers1/10.0.0.18 0" | socat stdio /var/lib/haproxy/haproxy.sock1

#Test

[root@client:~]#

curl 10.0.0.100

10.0.0.28

[root@client:~]#

curl 10.0.0.100

10.0.0.28

1.3 first (rarely used)

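first: servers are chosen top-down in the order they appear in the list, and a new request is assigned to the next server only when the first server's connection count has reached its limit, so server weights are ignored. This method is rarely used. Runtime weight changes via socat have no practical effect: 0 and 1 can be set, and other values are accepted but ignored.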
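The selection rule can be sketched as a function over a snapshot of server state. The name:current_conns:maxconn input format is hypothetical, and treating maxconn 0 as unlimited mirrors how HAProxy treats a server without a connection limit.

```shell
#!/usr/bin/env bash
# Sketch of the "first" rule: walk the servers in configuration order and return
# the first one with a free connection slot. Weights play no part in the choice.
# Arguments are hypothetical "name:current_conns:maxconn" triples; maxconn 0 = no limit.
pick_first() {
    local s name cur max
    for s in "$@"; do
        IFS=: read -r name cur max <<< "$s"
        if (( max == 0 || cur < max )); then
            echo "$name"
            return 0
        fi
    done
    return 1   # every server is full; HAProxy would queue the request instead
}
```

pick_first 10.0.0.18:10:10 10.0.0.28:0:0 prints 10.0.0.28, matching the experiment below, where 10.0.0.28 only receives traffic once 10.0.0.18 holds its 10 connections.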

listen web_host

bind 10.0.0.7:80,:8801-8810,10.0.0.7:9001-9010

mode http

log global

balance first

server web1 10.0.0.17:80 maxconn 2 weight 1 check inter 3000 fall 2 rise 5

server web2 10.0.0.27:80 weight 1 check inter 3000 fall 2 rise 5

Test the result

#Run the following command concurrently and watch the results

# while true;do curl http://10.0.0.7/index.html ; sleep 0.1;done

My own experiment:

[root@haproxy:~]#

vim /etc/haproxy/conf.d/test.cfg

frontend m44_web_80

bind 10.0.0.100:80

use_backend m44_webservers1

backend m44_webservers1

balance first

server 10.0.0.18 10.0.0.18:80 check maxconn 10 #new connections go to the second server only once this one holds 10 connections

server 10.0.0.28 10.0.0.28:80 check

server local 127.0.0.1:80 backup

backend m44_webservers2

server 10.0.0.28 10.0.0.28:80

[root@haproxy:~]#

systemctl reload haproxy

#Test

[root@client:~]#

curl 10.0.0.100

10.0.0.18

[root@client:~]#

curl 10.0.0.100

10.0.0.18

#Note: the weight can be changed, but it has no effect.

#Concurrency test

[root@client:~]#

yum -y install httpd-tools

[root@client:~]#

while :;do ab -c20 -n100 -k http://10.0.0.100/;sleep 0.1;done

#-c: 20 concurrent requests, -n: 100 requests in total, -k: use keep-alive connections; the loop repeats the run

#Meanwhile, test from another host: 10.0.0.28 now shows up occasionally, but most requests still go to 10.0.0.18.

[root@haproxy:~]#

curl 10.0.0.100

10.0.0.18

[root@haproxy:~]#

curl 10.0.0.100

10.0.0.18

[root@haproxy:~]#

curl 10.0.0.100

10.0.0.28

[root@haproxy:~]#

curl 10.0.0.100

10.0.0.18

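2 Dynamic Algorithms

Dynamic algorithms adjust scheduling according to the state of the backend servers: new requests are preferentially sent to the server with the lower current load, and weights can be adjusted while HAProxy is running, without a restart.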

2.1 roundrobin

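roundrobin: weighted round-robin dynamic scheduling that supports changing weights at runtime. Unlike rr in LVS, HAProxy's roundrobin supports slow start (a newly added server receives a gradually increasing share of requests). Each backend supports at most 4095 real servers with this algorithm, whereas static algorithms place no limit on the number of real servers. roundrobin is the default algorithm and is widely used.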

listen web_host

bind 10.0.0.7:80,:8801-8810,10.0.0.7:9001-9010

mode http

log global

balance roundrobin

server web1 10.0.0.17:80 weight 1 check inter 3000 fall 2 rise 5

server web2 10.0.0.27:80 weight 2 check inter 3000 fall 2 rise 5

Weights can be adjusted at runtime:

# echo "get weight web_host/web1" | socat stdio /var/lib/haproxy/haproxy.sock

1 (initial 1)

# echo "set weight web_host/web1 3" | socat stdio /var/lib/haproxy/haproxy.sock

# echo "get weight web_host/web1" | socat stdio /var/lib/haproxy/haproxy.sock

3 (initial 1)

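My own experiment

#For convenience, switch back to a single process; the number of sockets, the number of processes, and the CPU binding all need to be adjusted to match.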

[root@haproxy:~]#

vim /etc/haproxy/haproxy.cfg

global

maxconn 100000

chroot /apps/haproxy

stats socket /var/lib/haproxy/haproxy.sock mode 600 level admin

# stats socket /var/lib/haproxy/haproxy.sock1 mode 600 level admin process 1

#stats socket /var/lib/haproxy/haproxy.sock2 mode 600 level admin process 2

#uid 99

#gid 99

user haproxy

group haproxy

daemon

nbproc 1 #one process, matching the single socket above

#nbthread 4

#cpu-map 1 0

#cpu-map 2 1 #CPU binding disabled: with a single process there is nothing to bind

........

[root@haproxy:/var/lib/haproxy]#

rm -rf haproxy.sock*

[root@haproxy:/var/lib/haproxy]#

systemctl reload haproxy

[root@haproxy:/var/lib/haproxy]#

pstree -p |grep haproxy

|-haproxy(22173)---haproxy(51326)---{haproxy}(51327)

1. Change the algorithm to roundrobin

[root@haproxy:~]#

vim /etc/haproxy/conf.d/test.cfg

frontend m44_web_80

bind 10.0.0.100:80

use_backend m44_webservers1

backend m44_webservers1

balance roundrobin

server 10.0.0.18 10.0.0.18:80 check

server 10.0.0.28 10.0.0.28:80 check

server local 127.0.0.1:80 backup

backend m44_webservers2

server 10.0.0.28 10.0.0.28:80

[root@haproxy:~]#

systemctl reload haproxy

#Test: no weights are configured, so all weights are 1

[root@client:~]#

curl 10.0.0.100

10.0.0.28

[root@client:~]#

curl 10.0.0.100

10.0.0.18

2. Change a weight

[root@haproxy:/var/lib/haproxy]#

echo "set weight m44_webservers1/10.0.0.18 3" | socat stdio /var/lib/haproxy/haproxy.sock

[root@haproxy:/var/lib/haproxy]#

echo "get weight m44_webservers1/10.0.0.18" | socat stdio /var/lib/haproxy/haproxy.sock

3 (initial 1)

#Test: the weight of 10.0.0.18 has been raised.

[root@client:~]#

curl 10.0.0.100

10.0.0.18

[root@client:~]#

curl 10.0.0.100

10.0.0.18

[root@client:~]#

curl 10.0.0.100

10.0.0.18

[root@client:~]#

curl 10.0.0.100

10.0.0.28

2.2 leastconn

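leastconn: weighted least connections, a dynamic algorithm that supports runtime weight adjustment and slow start (equivalent to wlc in LVS). New client connections are sent preferentially to the backend server with the fewest current connections, rather than split by a fixed weight ratio. It suits long-lived connections, for example MySQL.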
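A simplified model of weighted least connections: each server's load is taken as (current connections + 1) / weight, the lowest load wins, and ties go to the server listed first. This is an illustration only, not HAProxy's exact formula or tie-breaking, so real results (such as those in the test below) can differ. The name:current_conns:weight input format is hypothetical.

```shell
#!/usr/bin/env bash
# Simplified weighted least-connections sketch, NOT HAProxy's exact formula:
# a server's load is (current_conns + 1) / weight and the lowest load wins;
# ties go to the server listed first. Arguments: "name:current_conns:weight".
pick_leastconn() {
    local s name cur w best="" best_cur=0 best_w=1
    for s in "$@"; do
        IFS=: read -r name cur w <<< "$s"
        (( w > 0 )) || continue          # weight 0 = drained, never chosen
        # compare (cur+1)/w < (best_cur+1)/best_w without floating point
        if [ -z "$best" ] || (( (cur + 1) * best_w < (best_cur + 1) * w )); then
            best=$name best_cur=$cur best_w=$w
        fi
    done
    [ -n "$best" ] && echo "$best"
}
```

pick_leastconn 10.0.0.18:2:3 10.0.0.28:1:1 prints 10.0.0.18: it has more connections, but its weight of 3 gives it the lower load in this model (3/3 versus 2/1).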

listen web_host

bind 10.0.0.7:80,:8801-8810,10.0.0.7:9001-9010

mode http

log global

balance leastconn

server web1 10.0.0.17:80 weight 1 check inter 3000 fall 2 rise 5

server web2 10.0.0.27:80 weight 1 check inter 3000 fall 2 rise 5

My own experiment

1. Change the algorithm to leastconn

[root@haproxy:/var/lib/haproxy]#

vim /etc/haproxy/conf.d/test.cfg

frontend m44_web_80

bind 10.0.0.100:80

use_backend m44_webservers1

backend m44_webservers1

balance leastconn

server 10.0.0.18 10.0.0.18:80 check

server 10.0.0.28 10.0.0.28:80 check

server local 127.0.0.1:80 backup

backend m44_webservers2

server 10.0.0.28 10.0.0.28:80

[root@haproxy:/var/lib/haproxy]#

systemctl reload haproxy

[root@haproxy:/var/lib/haproxy]#

echo "set weight m44_webservers1/10.0.0.18 3" | socat stdio /var/lib/haproxy/haproxy.sock

[root@haproxy:/var/lib/haproxy]#

echo "get weight m44_webservers1/10.0.0.18" | socat stdio /var/lib/haproxy/haproxy.sock

3 (initial 1)

#Test: scheduling follows the least-connections rule; the new weight is visible, but for now it has no visible effect

[root@client:~]#

curl 10.0.0.100

10.0.0.28

[root@client:~]#

curl 10.0.0.100

10.0.0.18


2.3 random

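The random load-balancing algorithm was added in version 1.9. It uses a random number as the key for consistent hashing. Random balancing is useful for large server farms or when servers are added or removed frequently. It supports dynamic weight adjustment, and hosts with a larger weight are more likely to receive new requests.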
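The "larger weight, more requests" behaviour can be shown with a weighted random pick. This sketch draws directly from bash's $RANDOM over the cumulative weights; as the text says, HAProxy instead uses a random number as the key into its consistent-hash ring, so this only models the resulting probabilities. The name:weight input format is hypothetical.

```shell
#!/usr/bin/env bash
# Simplified weighted random pick: each server's chance is weight / total weight.
# HAProxy's "balance random" feeds a random number into its consistent-hash ring
# instead; this sketch only shows the "heavier weight => more requests" effect.
pick_random() {
    local s total=0 r
    for s in "$@"; do total=$(( total + ${s##*:} )); done
    (( total > 0 )) || return 1
    r=$(( RANDOM % total ))   # RANDOM is bash's 0..32767 generator (fine for a demo)
    for s in "$@"; do
        r=$(( r - ${s##*:} ))
        if (( r < 0 )); then echo "${s%:*}"; return 0; fi
    done
}
```

With pick_random 10.0.0.18:3 10.0.0.28:1, roughly three draws in four return 10.0.0.18.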

random configuration example

listen web_host

bind 10.0.0.7:80,:8801-8810,10.0.0.7:9001-9010

mode http

log global

balance random

server web1 10.0.0.17:80 weight 1 check inter 3000 fall 2 rise 5

server web2 10.0.0.27:80 weight 1 check inter 3000 fall 2 rise 5

My own experiment

1. Change the algorithm

[root@haproxy:/var/lib/haproxy]#

vim /etc/haproxy/conf.d/test.cfg

frontend m44_web_80

bind 10.0.0.100:80

use_backend m44_webservers1

backend m44_webservers1

balance random

server 10.0.0.18 10.0.0.18:80 check

server 10.0.0.28 10.0.0.28:80 check

server local 127.0.0.1:80 backup

backend m44_webservers2

server 10.0.0.28 10.0.0.28:80

[root@haproxy:/var/lib/haproxy]#

systemctl reload haproxy

#Test: requests are distributed randomly

[root@client:~]#

curl 10.0.0.100

10.0.0.28

[root@client:~]#

curl 10.0.0.100

10.0.0.28

[root@client:~]#

curl 10.0.0.100

10.0.0.28

[root@client:~]#

curl 10.0.0.100

10.0.0.18

[root@client:~]#

curl 10.0.0.100

10.0.0.28

[root@client:~]#

curl 10.0.0.100

10.0.0.28

[root@client:~]#

curl 10.0.0.100

10.0.0.18

2. Change the weight at runtime

[root@haproxy:/var/lib/haproxy]#

echo "set weight m44_webservers1/10.0.0.18 3" | socat stdio /var/lib/haproxy/haproxy.sock

[root@haproxy:/var/lib/haproxy]#

echo "get weight m44_webservers1/10.0.0.18" | socat stdio /var/lib/haproxy/haproxy.sock

3 (initial 1)

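#Test

3 Other Algorithms

The remaining algorithms can act as static algorithms, or become dynamic through an option; hash-type decides which.

When hash-type is omitted, the static hash-type map-based is used; hash-type consistent makes the algorithm dynamic.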

3.1 source

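Source address hashing: the user's source address is hashed and the request is forwarded to a backend server accordingly, so later requests from the same source address go to the same backend web server. The drawback is that when the number of backend servers changes, many users' requests are forwarded to a different backend server. It is static by default, but this can be changed with the options supported by hash-type.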

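This algorithm is typically used in TCP mode, where no cookie can be inserted. It also provides the best session stickiness for clients that refuse session cookies, and suits session persistence in scenarios where cookies and caching are not supported.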

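There are two ways to compute which backend server receives a client's requests: the modulo method and consistent hashing.

3.1.1 map-based: the modulo method (static)

map-based: the modulo method. The source address is hashed, the hash is taken modulo the total weight of the servers, and the result decides which backend server receives the request. This method is static: weights cannot be adjusted online and slow start is not supported. It balances requests evenly across backend servers. The drawback is that whenever the total weight changes, i.e. a server goes online or offline, the scheduling result changes globally. This is the default value of hash-type.

A modulo operation returns the remainder of a division, e.g. 10 % 7 = 3 and 7 % 4 = 3.

map-based: modulo by weight, hash(source_ip) % (sum of the weights of all backend servers)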
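The formula can be sketched in a few lines of shell. Two assumptions: the hash is POSIX cksum (CRC32) rather than HAProxy's internal hash, so real mappings differ, and slots are handed out to servers in proportion to their weights in listing order. The name:weight input format is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of map-based hashing: hash(source_ip) % total_weight picks a slot, and the
# slots are handed out to servers in proportion to their weights.
# cksum (CRC32) is an assumed stand-in; HAProxy uses its own internal hash.
h32() { printf '%s' "$1" | cksum | cut -d' ' -f1; }

# map_based KEY name:weight...: print the server that receives KEY
map_based() {
    local key=$1; shift
    local s w total=0 slot
    for s in "$@"; do total=$(( total + ${s##*:} )); done
    (( total > 0 )) || return 1
    slot=$(( $(h32 "$key") % total ))
    for s in "$@"; do
        w=${s##*:}
        if (( slot < w )); then echo "${s%:*}"; return 0; fi
        slot=$(( slot - w ))
    done
}
```

Because the server is chosen by hash % total, adding a third server changes total from 2 to 3 and therefore changes the result for most client addresses: this is the global reshuffle described above.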

Modulo method configuration example:

listen web_host

bind 10.0.0.7:80,:8801-8810,10.0.0.7:9001-9010

mode tcp

log global

balance source

hash-type map-based

server web1 10.0.0.17:80 weight 1 check inter 3000 fall 2 rise 3

server web2 10.0.0.27:80 weight 1 check inter 3000 fall 2 rise 3

#Weights cannot be adjusted at runtime

[root@haproxy ~]#echo "set weight web_host/web2 10" | socat stdio /var/lib/haproxy/haproxy.sock

Backend is using a static LB algorithm and only accepts weights '0%' and '100%'.

#Servers can only be taken online and offline at runtime

[root@haproxy ~]#echo "set weight web_host/web2 0" | socat stdio /var/lib/haproxy/haproxy.sock

[root@haproxy conf.d]#echo "get weight web_host/web2" | socat stdio /var/lib/haproxy/haproxy.sock

0 (initial 1)

My own experiment

1. Change the algorithm to source

[root@haproxy:/var/lib/haproxy]#

vim /etc/haproxy/conf.d/test.cfg

frontend m44_web_80

bind 10.0.0.100:80

use_backend m44_webservers1

backend m44_webservers1

balance source

server 10.0.0.18 10.0.0.18:80 check

server 10.0.0.28 10.0.0.28:80 check

server local 127.0.0.1:80 backup

backend m44_webservers2

server 10.0.0.28 10.0.0.28:80

[root@haproxy:/var/lib/haproxy]#

systemctl reload haproxy

#Test

[root@client:~]#

curl 10.0.0.100

10.0.0.18

[root@client:~]#

curl 10.0.0.100

10.0.0.18

[root@centos7:~]#

curl 10.0.0.100

10.0.0.28

[root@centos7:~]#

curl 10.0.0.100

10.0.0.28

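#The client address is hashed, taken modulo the total weight, and bound to a server, so every later request goes to the same real server, which avoids losing sessions. The drawback is that adding or removing a backend server reshuffles the mapping, and sessions are lost then as well.

3.1.2 Consistent hashing (dynamic)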

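Consistent hashing: when the servers' total weight changes, the effect on scheduling is local and does not cause a large reshuffle, unlike the map-based hash(o) mod n. This hash is dynamic: weights can be adjusted online with tools such as socat, and slow start is supported.

Algorithm:

1. key1 = hash(source_ip) % 2^32, a value in the range [0, 4294967295]

2. keyA = hash(virtual IP of a backend server) % 2^32

3. Place key1 and every keyA on the hash ring, and dispatch the user's request to the backend server whose keyA is nearest to key1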
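The three steps can be sketched in shell. Assumptions: cksum (CRC32, whose values already lie in 0 to 2^32 - 1) replaces HAProxy's hash; each server gets a fixed number of points whose labels play the role of the virtual IPs; and a key goes to the first point at or after its hash, wrapping around. That last rule is the textbook one and may differ in detail from HAProxy's "nearest point" lookup. All function names are hypothetical.

```shell
#!/usr/bin/env bash
# Consistent-hashing sketch: every server gets VNODES points on a 2^32 ring, and a key
# goes to the first point at or after its own hash (wrapping around to the start).
# cksum (CRC32, already in 0..2^32-1) stands in for HAProxy's internal hash function.
h32() { printf '%s' "$1" | cksum | cut -d' ' -f1; }

# build_ring VNODES server...: print "point server" lines sorted by point
build_ring() {
    local vnodes=$1; shift
    local s i
    for s in "$@"; do
        for (( i = 0; i < vnodes; i++ )); do
            printf '%s %s\n' "$(h32 "$s#$i")" "$s"   # "$s#$i" plays the role of a virtual IP
        done
    done | sort -n
}

# ring_lookup KEY < ring: print the server that owns KEY
ring_lookup() {
    awk -v k="$(h32 "$1")" '
        NR == 1 { first = $2 }
        $1 >= k { print $2; found = 1; exit }
        END     { if (!found) print first }   # wrapped past the top of the ring
    '
}
```

For example, ring=$(build_ring 50 10.0.0.18 10.0.0.28) followed by ring_lookup 192.168.1.10 <<< "$ring" prints the owner of that client address, and giving a server more points raises its share, like a higher weight. Removing a server from the ring only moves the keys that were mapped to it; keys on the remaining servers stay where they were, which is the "local impact" property described above.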

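3.1.2.1 Hash objects

Figure: mapping of hash objects to backend servers

3.1.2.2 Consistent hashing diagram

Figure: scheduling when backend servers go online and offline

The client IP is hashed and the result taken modulo 2^32; each backend real server is hashed and taken modulo 2^32 in the same way, and all the results are placed on a ring. A request is dispatched to the real server whose point is nearest to the client's point. Giving a real server additional virtual IPs increases its weight by putting more of its points on the ring, which avoids skew (uneven distribution across real servers). Even when the number of backend servers changes, only part of the session bindings is affected.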

3.1.2.3 Consistent hashing configuration example

listen web_host

bind 10.0.0.7:80,:8801-8810,10.0.0.7:9001-9010

mode tcp

log global

balance source

hash-type consistent

server web1 10.0.0.17:80 weight 1 check inter 3000 fall 2 rise 5

server web2 10.0.0.27:80 weight 1 check inter 3000 fall 2 rise 5

My own experiment

1. Add hash-type consistent to get consistent source address hashing

[root@haproxy:~]#

vim /etc/haproxy/conf.d/test.cfg

frontend m44_web_80

bind 10.0.0.100:80

use_backend m44_webservers1

backend m44_webservers1

balance source

hash-type consistent

server 10.0.0.18 10.0.0.18:80 check

server 10.0.0.28 10.0.0.28:80 check

server local 127.0.0.1:80 backup

backend m44_webservers2

server 10.0.0.28 10.0.0.28:80

[root@haproxy:~]#

systemctl reload haproxy

#Test: weights can now be changed at runtime

[root@haproxy:~]#

echo "set weight m44_webservers1/10.0.0.18 3" | socat stdio /var/lib/haproxy/haproxy.sock

[root@haproxy:~]#

echo "get weight m44_webservers1/10.0.0.18" | socat stdio /var/lib/haproxy/haproxy.sock

3 (initial 1)

3.2 uri

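Hashes either the left part of the user's request URI or the whole URI, takes the hash modulo the total weight, and forwards the request to the backend server given by the result. It suits scenarios where the backends are cache servers. It is static by default; hash-type map-based or consistent selects the modulo method or consistent hashing.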

Note: this algorithm works at the application layer, so it only supports mode http, not mode tcp.

<scheme>://<user>:<password>@<host>:<port>/<path>;<params>?<query>#<frag>

Left part: /<path>;<params>

Whole URI: /<path>;<params>?<query>#<frag>
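Which part of the URI gets hashed can be sketched as a small string function. The left part follows the definition above. For the whole-URI variant (selected in HAProxy with the whole option of balance uri), this sketch also drops #<frag>, since clients do not send the fragment to the server. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# uri_hash_key URI [whole]: print the part of the request URI that gets hashed.
# Default = "left part" (path plus ;params, everything before '?');
# with "whole", the query string is kept as well. The #fragment is always dropped.
uri_hash_key() {
    local uri=${1%%#*}                  # drop any fragment
    if [ "${2:-}" = whole ]; then
        printf '%s\n' "$uri"
    else
        printf '%s\n' "${uri%%\?*}"     # cut at the first literal '?'
    fi
}
```

So /test1.html?a=1 and /test1.html?a=2 share the key /test1.html by default and therefore land on the same cache server.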

3.2.1 uri modulo-method configuration example

listen web_host

bind 10.0.0.7:80,:8801-8810,10.0.0.7:9001-9010

mode http

log global

balance uri

server web1 10.0.0.17:80 weight 1 check inter 3000 fall 2 rise 5

server web2 10.0.0.27:80 weight 1 check inter 3000 fall 2 rise 5

3.2.2 uri consistent hashing configuration example

listen web_host

bind 10.0.0.7:80,:8801-8810,10.0.0.7:9001-9010

mode http

log global

balance uri

hash-type consistent

server web1 10.0.0.17:80 weight 1 check inter 3000 fall 2 rise 5

server web2 10.0.0.27:80 weight 1 check inter 3000 fall 2 rise 5

3.2.3 Access test

Request different URIs and confirm that identical requests are forwarded to the same server.

# curl http://10.0.0.7/test1.html

# curl http://10.0.0.7/test2.html

My own experiment

1. Create test pages on the backend real servers

[root@rs1:~]#

cd /var/www/html

[root@rs1:/var/www/html]#

for i in {1..10};do echo test$i on 10.0.0.18 > test$i.html;done

2)

[root@rs2:~]#

cd /var/www/html/

[root@rs2:/var/www/html]#

for i in {1..10};do echo test$i on 10.0.0.28 > test$i.html;done

2. Change the algorithm on haproxy

[root@haproxy:~]#

vim /etc/haproxy/conf.d/test.cfg

frontend m44_web_80

bind 10.0.0.100:80

use_backend m44_webservers1

backend m44_webservers1

balance uri

hash-type consistent

server 10.0.0.18 10.0.0.18:80 check

server 10.0.0.28 10.0.0.28:80 check

server local 127.0.0.1:80 backup

backend m44_webservers2

server 10.0.0.28 10.0.0.28:80

[root@haproxy:~]#

systemctl reload haproxy

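#Test: different URIs are scheduled to different real servers, and once bound the mapping does not change, even when the requests come from another host. This method cannot be used to separate static and dynamic content, because which server receives which URI is not under your control. It works well in front of cache servers, though: this scheduling improves access efficiency and reduces the load on the backend servers. If a cache server fails, however, the backend may be unable to absorb the traffic and may collapse.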

[root@client:~]#

curl http://10.0.0.100/test1.html

test1 on 10.0.0.18

[root@client:~]#

curl http://10.0.0.100/test1.html

test1 on 10.0.0.18

#Switch to another client host

[root@centos7:~]#

curl http://10.0.0.100/test1.html

test1 on 10.0.0.18

[root@centos7:~]#

curl http://10.0.0.100/test1.html

test1 on 10.0.0.18

[root@client:~]#

curl http://10.0.0.100/test3.html

test3 on 10.0.0.28

[root@client:~]#

curl http://10.0.0.100/test3.html

test3 on 10.0.0.28

#Switch to another client host

[root@centos7:~]#

curl http://10.0.0.100/test3.html

test3 on 10.0.0.28

[root@centos7:~]#

curl http://10.0.0.100/test3.html

test3 on 10.0.0.28

3.3 url_param

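url_param hashes the value of one key in the parameters of the request URL (the part after ?), divides it by the total server weight, and dispatches the request to the selected server. It is typically used to track users, making sure requests from the same user always go to the same real server. If the key is absent, the roundrobin algorithm is used.

#Suppose:

url = http://www.magedu.com/foo/bar/index.php?key=value

#Then:

host = "www.magedu.com"

url_param = "key=value"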
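Extracting the value that url_param hashes can be sketched as follows. The function name is hypothetical, and an empty result stands for the "no such key" case in which HAProxy falls back to round robin.

```shell
#!/usr/bin/env bash
# url_param_value NAME URL: print the value of query-string parameter NAME, i.e. the
# string "balance url_param NAME" hashes. Prints nothing if NAME is absent, which is
# the case where HAProxy falls back to round robin.
url_param_value() {
    local name=$1 url=${2%%#*} query pair
    local -a pairs
    [[ $url == *\?* ]] || return 0            # no query string at all
    query=${url#*\?}                          # everything after the first '?'
    IFS='&' read -r -a pairs <<< "$query"     # split into key=value pairs
    for pair in "${pairs[@]}"; do
        if [[ $pair == "$name="* ]]; then
            printf '%s\n' "${pair#*=}"
            return 0
        fi
    done
}
```

url_param_value key 'http://www.magedu.com/foo/bar/index.php?key=value' prints value, and url_param_value userid '/test3.html?userid=chen&typeid=2' prints chen.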

3.3.1 url_param modulo-method configuration example

listen web_host

bind 10.0.0.7:80,:8801-8810,10.0.0.7:9001-9010

mode http

log global

balance url_param userid #url_param hash

server web1 10.0.0.17:80 weight 1 check inter 3000 fall 2 rise 5

server web2 10.0.0.27:80 weight 1 check inter 3000 fall 2 rise 5

3.3.2 url_param consistent hashing configuration example

listen web_host

bind 10.0.0.7:80,:8801-8810,10.0.0.7:9001-9010

mode http

log global

balance url_param userid #hash the value of url_param

hash-type consistent

server web1 10.0.0.17:80 weight 1 check inter 3000 fall 2 rise 5

server web2 10.0.0.27:80 weight 1 check inter 3000 fall 2 rise 5

3.3.3 Access test

# curl http://10.0.0.7/index.html?userid=<NAME_ID>

# curl "http://10.0.0.7/index.html?userid=<NAME_ID>&typeid=<TYPE_ID>"

My own experiment

[root@haproxy:~]#

vim /etc/haproxy/conf.d/test.cfg

frontend m44_web_80

bind 10.0.0.100:80

use_backend m44_webservers1

backend m44_webservers1

balance url_param userid

hash-type consistent

server 10.0.0.18 10.0.0.18:80 check

server 10.0.0.28 10.0.0.28:80 check

server local 127.0.0.1:80 backup

backend m44_webservers2

server 10.0.0.28 10.0.0.28:80

[root@haproxy:~]#

systemctl reload haproxy

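#Test: as long as userid is the same, requests always go to the same real server. The userid values here are made up; in practice the parameter must be agreed on in advance with the application developers, and the application must actually send it.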

[root@client:~]#

curl 10.0.0.100/test3.html?userid=chen

test3 on 10.0.0.18

[root@client:~]#

curl 10.0.0.100/test3.html?userid=miao

test3 on 10.0.0.28

[root@client:~]#

curl 10.0.0.100/test3.html?userid=miao

test3 on 10.0.0.28

[root@client:~]#

curl 10.0.0.100/test3.html?userid=chen

#The choice depends only on userid, not on the requested file

[root@client:~]#

curl 10.0.0.100/test1.html?userid=chen

test1 on 10.0.0.18

[root@client:~]#

curl 10.0.0.100/test2.html?userid=chen

test2 on 10.0.0.18

[root@client:~]#

curl 10.0.0.100/test3.html?userid=chen

test3 on 10.0.0.18

[root@client:~]#

curl 10.0.0.100/test4.html?userid=chen

test4 on 10.0.0.18

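3.4 hdr

Hashes the specified header of each HTTP request: the HTTP header named by <name> is extracted and hashed, the hash is taken modulo the total server weight, and the request is dispatched to the selected server. If the header has no valid value, the default round-robin scheduling is used.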
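For equal-weight servers, the hdr rule reduces to hashing the header value modulo the number of servers. A minimal sketch, assuming cksum (CRC32) as a stand-in for HAProxy's hash and using an empty value to model a missing header; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of "balance hdr(<name>)" for equal-weight servers: hash the header value and
# take it modulo the number of servers. cksum (CRC32) stands in for HAProxy's own hash.
# pick_by_header VALUE server...: an empty VALUE models a missing header.
pick_by_header() {
    local value=$1; shift
    local -a servers=("$@")
    local h n=${#servers[@]}
    if [ -z "$value" ]; then
        echo "round-robin"   # HAProxy falls back to round robin when the header is absent
        return 0
    fi
    h=$(printf '%s' "$value" | cksum | cut -d' ' -f1)
    echo "${servers[h % n]}"
}
```

Because the whole header value is hashed, "chrome" and "chrome18" are unrelated keys and may land on different servers, which matches the curl -A results in the experiment below.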

3.4.1 hdr modulo-method configuration example

listen web_host

bind 10.0.0.7:80,:8801-8810,10.0.0.7:9001-9010

mode http

log global

balance hdr(User-Agent)

#balance hdr(host)

server web1 10.0.0.17:80 weight 1 check inter 3000 fall 2 rise 5

server web2 10.0.0.27:80 weight 1 check inter 3000 fall 2 rise 5

3.4.2 Consistent hashing configuration example

listen web_host

bind 10.0.0.7:80,:8801-8810,10.0.0.7:9001-9010

mode http

log global

balance hdr(User-Agent)

hash-type consistent

server web1 10.0.0.17:80 weight 1 check inter 3000 fall 2 rise 5

server web2 10.0.0.27:80 weight 1 check inter 3000 fall 2 rise 5

3.4.3 Access test

[root@centos6 ~]#curl -v http://10.0.0.7/index.html

[root@centos6 ~]#curl -vA 'firefox' http://10.0.0.7/index.html

[root@centos6 ~]#curl -vA 'chrome' http://10.0.0.7/index.html

My own experiment

[root@haproxy:~]#

vim /etc/haproxy/conf.d/test.cfg

frontend m44_web_80

bind 10.0.0.100:80

use_backend m44_webservers1

backend m44_webservers1

balance hdr(User-Agent)

hash-type consistent

server 10.0.0.18 10.0.0.18:80 check

server 10.0.0.28 10.0.0.28:80 check

server local 127.0.0.1:80 backup

backend m44_webservers2

server 10.0.0.28 10.0.0.28:80

[root@haproxy:~]#

systemctl reload haproxy

#Test: requests from the same browser (User-Agent) are bound to a fixed real server.

[root@client:~]#

curl 10.0.0.100/test1.html

test1 on 10.0.0.28

[root@client:~]#

curl 10.0.0.100/test2.html

test2 on 10.0.0.28

[root@client:~]#

curl 10.0.0.100/test3.html

test3 on 10.0.0.28

[root@client:~]#

curl 10.0.0.100/test4.html

test4 on 10.0.0.28

[root@client:~]#

curl 10.0.0.100/index.html

10.0.0.28

#-A sets the User-Agent to simulate different browsers; different User-Agent strings may be scheduled differently.

[root@client:~]#

curl -A "chrome" 10.0.0.100/index.html

10.0.0.28

[root@client:~]#

curl -A "chrome18" 10.0.0.100/index.html

10.0.0.18

[root@client:~]#

curl -A "IE" 10.0.0.100/index.html

10.0.0.28

3.5 rdp-cookie

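rdp-cookie load-balances Windows Remote Desktop and uses the RDP cookie to keep sessions persistent. It is static by default; hash-type map-based or consistent selects the modulo method or consistent hashing.

3.5.1 rdp-cookie modulo-method configuration example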

listen RDP

bind 10.0.0.7:3389

balance rdp-cookie

mode tcp

server rdp0 10.0.0.17:3389 check fall 3 rise 5 inter 2000 weight 1

3.5.2 rdp-cookie consistent hashing configuration example

[root@haproxy ~]#cat /etc/haproxy/conf.d/windows_rdp.cfg

listen magedu_RDP_3389

bind 172.16.0.100:3389

balance rdp-cookie

hash-type consistent

mode tcp

server rdp0 10.0.0.200:3389 check fall 3 rise 5 inter 2000 weight 1

[root@haproxy ~]#hostname -I

10.0.0.7 172.16.0.100

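4 Algorithm Summary

#Static

static-rr--------->tcp/http

first------------->tcp/http

#Dynamic

roundrobin-------->tcp/http

leastconn--------->tcp/http

random------------>tcp/http

#Static or dynamic depending on whether hash-type is consistent

source------------>tcp/http

uri--------------->http

url_param--------->http

hdr--------------->http

rdp-cookie-------->tcp

5 Use Cases for Each Algorithm

first #rarely used

static-rr #web clusters with shared sessions (no risk of losing sessions)

roundrobin #the default, widely used

random #large server farms, or servers that are added and removed frequently

leastconn #databases

source #session persistence based on the client's public IP; rarely used, because many clients sit behind NAT and share one public IP

uri--------------->http #cache servers, e.g. CDN providers such as ChinaCache, Baidu, Alibaba Cloud, and Tencent

url_param--------->http #can provide session persistence

hdr #scheduling based on the client's request headers

rdp-cookie #for Windows hosts, rarely used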
