本篇使用到的开发工具IntelliJ IDEA,jdk版本为:jdk1.8,虚拟机版本为CentOS 7。

MySQL版本为:5.6.50 MySQL Community Server (GPL),hive版本为:hive-1.1.0-cdh5.14.2,HBase版本为:hbase-1.2.0-cdh5.14.2。

一、Java连接Mysql

先新建一个maven项目。

在pom.xml中放入

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

</properties>

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.11</version>

<scope>test</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/mysql/mysql-connector-java -->

<!-- mysql-connector-java JDBC驱动 -->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.32</version>

</dependency>

</dependencies>在resources中新建一个MysqlResource.properties文件,用来放Mysql的配置信息。

依次创建BaseConfig.java,Result.java,BaseDao.java,Test.java。

1.BaseConfig.java

package java2Mysql;

import java.io.FileReader;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.SQLException;

import java.util.Properties;

public class BaseConfig {

private class Config {

String driver;

String url;

String username;

String password;

}

private Config config;

{

try {

init();

Class.forName(config.driver);

} catch (Exception e) {

System.err.println(e.getMessage());

}

}

private void init() throws Exception {

String path = Thread.currentThread().getContextClassLoader().getResource("MysqlResource.properties").getPath();

//工具类Properties对hashtable扩展的一个类(线程安全),把文件装载到此工具中,以流的方式写入

Properties pro = new Properties();

pro.load(new FileReader(path));

String url = pro.getProperty("url");

if (null == url) {

throw new Exception("缺少url配置异常");

}

config = new Config();

config.url = url;

config.driver = pro.getProperty("driver", "com.mysql.jdbc.Driver");

config.username = pro.getProperty("username", "root");

config.password = pro.getProperty("password", "");

}

//获取连接对象

protected Connection getCon() throws SQLException {

return DriverManager.getConnection(config.url, config.username, config.password);

}

//释放多态资源

protected void close(AutoCloseable... closeables) {

for (AutoCloseable closeable : closeables) {

if (closeable != null) {

try {

closeable.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

}

}

2.Result.java

package java2Mysql;

public class Result<T> {

private boolean err;

private T data;

public static <T> Result succeed(T data){

return new Result(false,data);

}

public static Result fail(){

return new Result(true,null);

}

private Result(boolean err, T data) {

this.err = err;

this.data = data;

}

public boolean isErr() {

return err;

}

public T getData() {

return data;

}

}

3.BaseDao.java

package java2Mysql;

import java.io.BufferedReader;

import java.io.FileReader;

import java.io.IOException;

import java.sql.Connection;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.util.ArrayList;

import java.util.List;

public final class BaseDao extends BaseConfig {

//预编译执行器(连接,sql语句,动态参数)

private PreparedStatement getPst(Connection con, String sql, Object... params) throws SQLException {

PreparedStatement pst = con.prepareStatement(sql);

if (params.length > 0) {

for (int i = 0; i < params.length; i++) {

pst.setObject(i + 1, params[i]);

}

}

return pst;

}

//执行增删改操作

public Result exeNonQuery(String sql, Object... params) {

Connection con = null;//连接对象

PreparedStatement pst = null;//执行对象

try {

con = getCon();

pst = getPst(con, sql, params);

return Result.succeed(pst.executeUpdate());

} catch (SQLException e) {

e.printStackTrace();

return Result.fail();

} finally {

close(pst, con);

}

}

//执行查询操作

public Result exeQuery(String sql, Object... params) {

Connection con = null;

PreparedStatement pst = null;

ResultSet rst = null;//结果集

try {

con = getCon();

pst = getPst(con, sql, params);

rst = pst.executeQuery();

List<List<String>> data = new ArrayList<>();

if (null != rst && rst.next()) {

final int CC = rst.getMetaData().getColumnCount();

do {

//控制行

List<String> row = new ArrayList<>(CC);

for (int i = 1; i <= CC; i++) {

row.add(rst.getObject(i).toString());

}

data.add(row);

} while (rst.next());

}

return Result.succeed(data);

} catch (SQLException e) {

e.printStackTrace();

return Result.fail();

} finally {

close(rst, pst, con);

}

}

public String readSql(String...paths) throws IOException {

String path=paths.length==0?"sql/mysql.sql":paths[0];

StringBuilder builder=new StringBuilder();

BufferedReader reader=new BufferedReader(new FileReader(path));

String line=null;

while (null!=(line=reader.readLine())){

builder.append(line.trim()+" ");

}

return builder.toString();

}

}

4.Test.java

package java2Mysql;

import java.io.IOException;

import java.util.List;

public class Test {

public static void main(String[] args) throws IOException {

BaseDao dao=new BaseDao();

Result<List<List<String>>> tables=dao.exeQuery(dao.readSql());

if (!tables.isErr()){

tables.getData().forEach(row->{

row.forEach(col->{

System.out.print(col+"\t");

System.out.println();

});

});

}else {

System.out.println("查询异常");

}

}

}

测试结果为:

二、Java连接Hive

同样,创建一个maven项目。

在pom.xml放入

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

<hadoop.version>2.6.0</hadoop.version>

<hive.version>1.1.0</hive.version>

</properties>

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.11</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>${hive.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-auth</artifactId>

<version>${hadoop.version}</version>

<exclusions>

<exclusion>

<artifactId>jdk.tools</artifactId>

<groupId>jdk.tools</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>${hadoop.version}</version>

<exclusions>

<exclusion>

<artifactId>jdk.tools</artifactId>

<groupId>jdk.tools</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>${hadoop.version}</version>

<exclusions>

<exclusion>

<artifactId>jdk.tools</artifactId>

<groupId>jdk.tools</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>${hadoop.version}</version>

<exclusions>

<exclusion>

<artifactId>jdk.tools</artifactId>

<groupId>jdk.tools</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>${hadoop.version}</version>

</dependency>

</dependencies>然后新建一个HiveResource.properties文件,用来放Hive的配置信息。

依次新建BaseConfig.java、Result.java、BaseDao.java、Test.java。

1.BaseConfig.java

package java2Hive;

import java.io.FileReader;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.SQLException;

import java.util.Properties;

import java.util.regex.Matcher;

import java.util.regex.Pattern;

public class BaseConfig {

private class Config {

private String driver;

String url;

String username;

String password;

}

private Config config;

private boolean valid(String url) {

Pattern p = Pattern.compile("jdbc:\\w+://((\\d{1,3}\\.){3}\\d{1,3}|\\w+):\\d{1,5}/\\w+");

Matcher m = p.matcher(url);

return m.matches();

}

private void init() throws Exception {

String path = Thread.currentThread().getContextClassLoader().getResource("HiveResource.properties").getFile();

Properties pro = new Properties();

pro.load(new FileReader(path));

String url = pro.getProperty("url");

if (null == url ||!valid(url)) {

throw new Exception("no url or invalid url exception");

}

config = new Config();

config.url = url;

config.driver = pro.getProperty("driver", "com.mysql.jdbc.Driver");

config.username = pro.getProperty("username", "root");

config.password = pro.getProperty("password", "");

}

{

try {

init();

Class.forName(config.driver);

} catch (Exception e) {

e.printStackTrace();

System.out.println(e.getMessage());

}

}

protected Connection getCon() throws SQLException {

return DriverManager.getConnection(config.url,config.username,config.password);

}

void close(AutoCloseable...closeables){

for (AutoCloseable closeable : closeables) {

if (closeable!=null){

try {

closeable.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

}

}

2.Result.java

package java2Hive;

public class Result<T> {

private boolean err;

private T data;

public static <T> Result succeed(T data){

return new Result(false,data);

}

public static Result fail(){

return new Result(true,null);

}

private Result(boolean err, T data) {

this.err = err;

this.data = data;

}

public boolean isErr() {

return err;

}

public T getData() {

return data;

}

}

3.BaseDao.java

package java2Hive;

import java.io.BufferedReader;

import java.io.FileReader;

import java.io.IOException;

import java.sql.Connection;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.util.ArrayList;

import java.util.List;

public final class BaseDao extends BaseConfig{

private PreparedStatement getPst(Connection con,String sql,Object...params) throws SQLException {

PreparedStatement pst=con.prepareStatement(sql);

if(params.length>0){

for (int i = 0; i < params.length; i++) {

pst.setObject(i+1,params[i]);

}

}

return pst;

}

public Result exeNonQuery(String sql,Object...params){

Connection con=null;

PreparedStatement pst=null;

try {

con=getCon();

pst=getPst(con,sql,params);

return Result.succeed(pst.executeUpdate());

} catch (SQLException e) {

e.printStackTrace();

return Result.fail();

}finally {

close(pst,con);

}

}

public Result exeQuery(String sql,Object...params){

Connection con=null;

PreparedStatement pst=null;

ResultSet rst=null;

try {

con=getCon();

pst=getPst(con,sql,params);

rst=pst.executeQuery();

List<List<String>> data=new ArrayList<>();

if (null!=rst&&rst.next()){

final int CC=rst.getMetaData().getColumnCount();

do {

//往行里加列

List<String> row=new ArrayList<>(CC);

for (int i = 1; i <=CC; i++) {

row.add(rst.getObject(i).toString());

}

//往表里加行

data.add(row);

}while (rst.next());

}

return Result.succeed(data);

} catch (SQLException e) {

e.printStackTrace();

return Result.fail();

}finally {

close(rst,pst,con);

}

}

public String readSql(String...paths) throws IOException {

String path=paths.length==0?"sql/hive.sql":paths[0];

//流。StringBuilder 是一个可变的字符序列。

//BufferedReader提供通用的缓冲方式文本读取,

// 而且提供了很实用的readLine,读取一个文本行,从字符输入流中读取文本,缓冲各个字符,从 而提供字符、数组和行的高效读取

StringBuilder builder=new StringBuilder();

BufferedReader reader=new BufferedReader(new FileReader(path));

String line=null;

while (null!=(line=reader.readLine())){

builder.append(line.trim()+" ");

}

return builder.toString();

}

}

4.Test.java

package java2Hive;

import java.io.IOException;

import java.util.List;

public class Test {

public static void main(String[] args) throws IOException {

BaseDao dao=new BaseDao();

Result<List<List<String>>> data=dao.exeQuery(dao.readSql());

if (!data.isErr()){

data.getData().forEach(row->{

row.forEach(col->{

System.out.print(col+"\t");

System.out.println();

});

});

}else {

System.out.println("查询异常");

}

}

}

运行结果如下:

三、Java连接HBase

创建一个maven项目。

在pom.xml中

<properties>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

<hadoop.version>2.6.0-cdh5.14.2</hadoop.version>

<hive.version>1.1.0-cdh5.14.2</hive.version>

<hbase.version>1.2.0-cdh5.14.2</hbase.version>

</properties>

<repositories>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

</repository>

</repositories>

<dependencies>

<!--hadoop-->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>${hadoop.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>${hadoop.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>${hadoop.version}</version>

</dependency>

<!--日志-->

<dependency>

<groupId>commons-logging</groupId>

<artifactId>commons-logging</artifactId>

<version>1.2</version>

</dependency>

<!--MapReduce-->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-auth</artifactId>

<version>${hadoop.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>${hadoop.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-jobclient</artifactId>

<version>${hadoop.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.zookeeper/zookeeper -->

<!--zookeeper-->

<dependency>

<groupId>org.apache.zookeeper</groupId>

<artifactId>zookeeper</artifactId>

<version>3.4.5</version>

<type>pom</type>

</dependency>

<!--hbase-->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>${hbase.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-common</artifactId>

<version>${hbase.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-server</artifactId>

<version>${hbase.version}</version>

</dependency>

<!--log4j-->

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.17</version>

</dependency>

<!--测试-->

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.11</version>

</dependency>

</dependencies>创建一个HBaseConnect.java

package java2Hbase;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.*;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.util.Bytes;

import org.junit.Test;

import java.io.IOException;

public class HBaseConnect {

//创建一个表

@Test

public void createTable() throws IOException {

//1.获取hbase连接,配置

Configuration conf = HBaseConfiguration.create();

conf.set("hbase.zookeeper.quorum", "192.168.153.130");

conf.set("hbase.zookeeper.property.clientPort", "2181");

//2.创建连接

Connection conn = ConnectionFactory.createConnection(conf);

//3.创建admin对象级操作

Admin admin = conn.getAdmin();

//使用表空间,如果不存在表空间,则创建一个表空间

try {

admin.getNamespaceDescriptor("demo");

} catch (NamespaceNotFoundException e) {

NamespaceDescriptor namespace = NamespaceDescriptor.create("demo").build();

admin.createNamespace(namespace);

}

//4.创建表的相关信息,表名

HTableDescriptor student = new HTableDescriptor(TableName.valueOf("demo:student"));

//5.添加列族信息

student.addFamily(new HColumnDescriptor("info"));

student.addFamily(new HColumnDescriptor("score"));

//6.调用创建表的方法进行建表操作

admin.createTable(student);

//7.关闭连接

admin.close();

conn.close();

System.out.println("创建表成功");

}

//向表中添加数据

@Test

public void putData2Table() throws IOException {

//1.配置

Configuration conf = HBaseConfiguration.create();

conf.set("hbase.zookeeper.quorum", "192.168.153.130");

conf.set("hbase.zookeeper.property.clientPort", "2181");

//2.创建连接

Connection conn = ConnectionFactory.createConnection(conf);

//3.创建admin对象级操作

Admin admin = conn.getAdmin();

//使用表空间,如果不存在表空间,则创建一个表空间

try {

admin.getNamespaceDescriptor("demo");

} catch (NamespaceNotFoundException e) {

NamespaceDescriptor namespace = NamespaceDescriptor.create("demo").build();

admin.createNamespace(namespace);

}

//4.获取table

Table student = conn.getTable(TableName.valueOf("student"));

//5.往表中添加数据rowkey

Put put = new Put(Bytes.toBytes("1001"));

//6.添加列info:name zhangsan

put.addColumn(Bytes.toBytes("info"), Bytes.toBytes("name"), Bytes.toBytes("zhangsan"));

put.addColumn(Bytes.toBytes("info"), Bytes.toBytes("gender"), Bytes.toBytes("male"));

put.addColumn(Bytes.toBytes("info"), Bytes.toBytes("age"), Bytes.toBytes("11"));

//7.插入数据

student.put(put);

//8.关闭连接

admin.close();

conn.close();

}

//读数据

@Test

public void getDataFromTable() throws IOException {

//1.配置

Configuration conf = HBaseConfiguration.create();

conf.set("hbase.zookeeper.quorum", "192.168.153.130");

conf.set("hbase.zookeeper.property.clientPort", "2181");

//2.创建连接

Connection conn = ConnectionFactory.createConnection(conf);

//3.创建admin对象级操作

Admin admin = conn.getAdmin();

//使用表空间,如果不存在表空间,则创建一个表空间

try {

admin.getNamespaceDescriptor("demo");

} catch (NamespaceNotFoundException e) {

NamespaceDescriptor namespace = NamespaceDescriptor.create("demo").build();

admin.createNamespace(namespace);

}

//4.获取table

Table student = conn.getTable(TableName.valueOf("student"));

//5.读取数据Get

Get get = new Get(Bytes.toBytes("1001"));

//6.获取结果

Result result = student.get(get);

//7.遍历

Cell[] cells = result.rawCells();

for (Cell cell : cells) {

//获取具体的值

System.out.println("rowkey:" + Bytes.toString(CellUtil.cloneRow(cell)));

System.out.println("列族:" + Bytes.toString(CellUtil.cloneFamily(cell)));

System.out.println("列名:" + Bytes.toString(CellUtil.cloneQualifier(cell)));

System.out.println("value:" + Bytes.toString(CellUtil.cloneValue(cell)));

System.out.println("-----------------------");

}

//8.关闭连接

admin.close();

conn.close();

}

@Test

//删除

public void dropTable() throws IOException {

//1.配置

Configuration conf = HBaseConfiguration.create();

conf.set("hbase.zookeeper.quorum", "192.168.153.130");

conf.set("hbase.zookeeper.property.clientPort", "2181");

//2.创建连接

Connection conn = ConnectionFactory.createConnection(conf);

//3.get admin

Admin admin = conn.getAdmin();

//使用表空间,如果不存在表空间,则创建一个表空间

try {

admin.getNamespaceDescriptor("demo");

} catch (NamespaceNotFoundException e) {

NamespaceDescriptor namespace = NamespaceDescriptor.create("demo").build();

admin.createNamespace(namespace);

}

//4.禁用表

admin.disableTable(TableName.valueOf("demo:student"));

//5.删除表

admin.deleteTable(TableName.valueOf("demo:student"));

//6.关闭连接

admin.close();

conn.close();

}

}

创建一张demo:student表,运行结果如下:

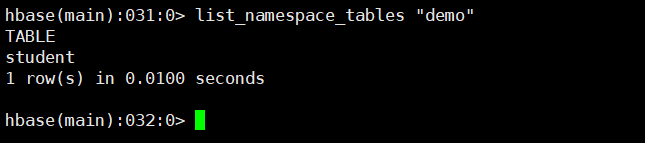

在HBase中查询结果如下:

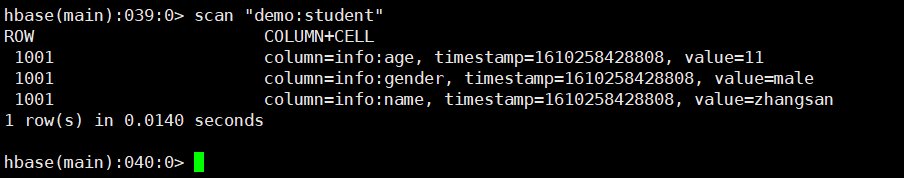

往表demo:student中插入一条数据,在HBase中查询运行结果如下:

读表demo:student的数据,运行结果如下:

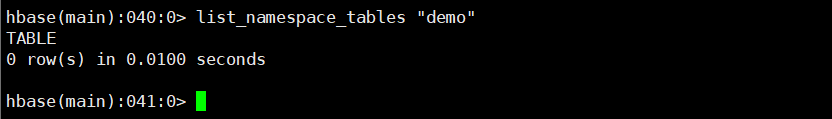

执行删除表操作,将demo:student表删除,运行结果如下:

119

119

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?