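I. Computing cross-entropy loss with softmax

1. Computing the softmax output

Suppose the model outputs the logits [2, 3, 0.1] for an image. After softmax these become [0.2585, 0.7028, 0.0387]. Suppose the image's true label is 1 (classes are indexed from 0), so its one-hot encoding is [0, 1, 0].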

import torch
from torch import nn

logits = torch.tensor([2, 3, 0.1], dtype=torch.float)
print('logits:', logits)
print('logits.shape:', logits.shape)

softmax_out = torch.softmax(logits, dim=0)
print('softmax_out:', softmax_out)
print('softmax_out.shape:', softmax_out.shape)

# Manual softmax: exp(x_i) / sum_j exp(x_j), normalized along the last
# dimension so it also works row by row for a batched [N, C] input
def softmax_cal(x):
    return torch.exp(x) / torch.sum(torch.exp(x), dim=-1, keepdim=True)

softmax_out_v2 = softmax_cal(logits)
print('softmax_out_v2:', softmax_out_v2)
print('softmax_out_v2.shape:', softmax_out_v2.shape)

Output:

logits: tensor([2.0000, 3.0000, 0.1000])
logits.shape: torch.Size([3])
softmax_out: tensor([0.2585, 0.7028, 0.0387])
softmax_out.shape: torch.Size([3])
softmax_out_v2: tensor([0.2585, 0.7028, 0.0387])
softmax_out_v2.shape: torch.Size([3])
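Both versions above exponentiate the raw logits directly, which is fine for small values like these. As an aside that is not part of the original experiment, a common refinement is to subtract the maximum logit before exponentiating: the result is mathematically unchanged, but exp() can no longer overflow. The helper name `stable_softmax` and the large test logits `[1000, 1001]` are illustrative assumptions.

```python
import torch

def stable_softmax(x: torch.Tensor, dim: int = -1) -> torch.Tensor:
    # exp(x - m) / sum(exp(x - m)) == exp(x) / sum(exp(x)) for any constant m;
    # choosing m = max(x) keeps every exponent <= 0, so exp() cannot overflow
    shifted = x - x.max(dim=dim, keepdim=True).values
    e = torch.exp(shifted)
    return e / e.sum(dim=dim, keepdim=True)

logits = torch.tensor([2, 3, 0.1], dtype=torch.float)
print(stable_softmax(logits))  # same values as torch.softmax(logits, dim=0)

big = torch.tensor([1000.0, 1001.0])
naive = torch.exp(big) / torch.sum(torch.exp(big))  # exp(1000) overflows to inf, inf/inf = nan
print(naive)                # tensor([nan, nan])
print(stable_softmax(big))  # tensor([0.2689, 0.7311])
```

This is the same idea torch.softmax relies on internally to stay finite, so in practice calling torch.softmax is preferable to a hand-written version.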

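2. Computing the loss

Next, compute the cross-entropy loss between the softmax output and the true label. Both nn.CrossEntropyLoss() and the manual calculation give 0.3527. Note that nn.CrossEntropyLoss() already includes the softmax step (internally it applies log-softmax followed by the negative log-likelihood), so it takes raw logits and a class index as input.

loss = -(0 * log(0.2585) + 1 * log(0.7028) + 0 * log(0.0387)) = -log(0.7028) = 0.3527, where log is the natural logarithm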

import torch
from torch import nn

logits = torch.tensor([2, 3, 0.1], dtype=torch.float)
logits = logits.unsqueeze(0)                 # add a batch dimension: shape [1, 3]
label = torch.tensor([1], dtype=torch.long)  # class index, shape [1]

print('logits:', logits)
print('logits.shape:', logits.shape)
print('label:', label)
print('label.shape:', label.shape)

print('---softmax output---')
softmax_out = torch.softmax(logits, dim=1)
print('softmax_out:', softmax_out)
print('softmax_out.shape:', softmax_out.shape)

def softmax_cal(x):
    return torch.exp(x) / torch.sum(torch.exp(x), dim=-1, keepdim=True)

softmax_out_v2 = softmax_cal(logits)
print('softmax_out_v2.shape:', softmax_out_v2.shape)

print('---computing the loss---')
# nn.CrossEntropyLoss() takes raw logits [N, C] and class indices [N]
softmax_loss = nn.CrossEntropyLoss()(logits, label)
print('softmax_loss from nn.CrossEntropyLoss():', softmax_loss)
print(softmax_loss.shape)

# Manual: -log of the softmax probability of the true class (0.7028)
softmax_loss_v2 = -1 * torch.log(torch.tensor(0.7028))
print('softmax_loss_v2 computed manually:', softmax_loss_v2)
print(softmax_loss_v2.shape)

Output:

logits: tensor([[2.0000, 3.0000, 0.1000]])
logits.shape: torch.Size([1, 3])
label: tensor([1])
label.shape: torch.Size([1])
---softmax output---
softmax_out: tensor([[0.2585, 0.7028, 0.0387]])
softmax_out.shape: torch.Size([1, 3])
softmax_out_v2.shape: torch.Size([1, 3])
---computing the loss---
softmax_loss from nn.CrossEntropyLoss(): tensor(0.3527)
torch.Size([])
softmax_loss_v2 computed manually: tensor(0.3527)
torch.Size([])
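A quick sketch to check the claim that nn.CrossEntropyLoss() already contains the softmax: it should equal `F.log_softmax` followed by `F.nll_loss`, and feeding it softmax probabilities instead of raw logits should give a different number, because softmax then gets applied twice. The variable names are illustrative.

```python
import torch
import torch.nn.functional as F
from torch import nn

logits = torch.tensor([[2, 3, 0.1]], dtype=torch.float)  # [N=1, C=3]
label = torch.tensor([1], dtype=torch.long)              # class index, [N]

ce = nn.CrossEntropyLoss()(logits, label)
# CrossEntropyLoss = log-softmax followed by negative log-likelihood
manual = F.nll_loss(F.log_softmax(logits, dim=1), label)
# Same thing written out: -log(softmax(logits)[true class])
by_hand = -torch.log(torch.softmax(logits, dim=1)[0, label.item()])
print(ce, manual, by_hand)  # all about 0.3527

# Common mistake: passing probabilities applies softmax a second time
wrong = nn.CrossEntropyLoss()(torch.softmax(logits, dim=1), label)
print(wrong)  # a different value
```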

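II. Computing cross-entropy loss with sigmoid

1. The sigmoid output

Again, suppose the model outputs the logits [2, 3, 0.1] for an image. After sigmoid these become [0.8808, 0.9526, 0.5250]. The true label is again 1, so its one-hot encoding is [0, 1, 0].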

import torch

logits = torch.tensor([2, 3, 0.1], dtype=torch.float)
logits = logits.unsqueeze(0)
label = torch.tensor([1], dtype=torch.long)

print('---sigmoid output---')
sigmoid_out = torch.sigmoid(logits)
print('sigmoid_out:', sigmoid_out)
print('sigmoid_out.shape:', sigmoid_out.shape)

# Manual sigmoid: 1 / (1 + e^(-x)), applied element-wise
def sigmoid_cal(x):
    return 1 / (1 + torch.exp(-x))

sigmoid_out_v2 = sigmoid_cal(logits)
print('sigmoid_out_v2:', sigmoid_out_v2)
print('sigmoid_out_v2.shape:', sigmoid_out_v2.shape)

Output:

---sigmoid output---
sigmoid_out: tensor([[0.8808, 0.9526, 0.5250]])
sigmoid_out.shape: torch.Size([1, 3])
sigmoid_out_v2: tensor([[0.8808, 0.9526, 0.5250]])
sigmoid_out_v2.shape: torch.Size([1, 3])
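One difference worth seeing before computing the loss: the sigmoid outputs above are not a probability distribution over the three classes. A minimal sketch with the same logits (the bumped value 5.0 is an arbitrary assumption) shows that sigmoid outputs need not sum to 1 and that changing one logit affects only its own sigmoid output, whereas softmax outputs always sum to 1 and all shift together.

```python
import torch

logits = torch.tensor([2, 3, 0.1], dtype=torch.float)
sig = torch.sigmoid(logits)          # each logit squashed to (0, 1) on its own
soft = torch.softmax(logits, dim=0)  # logits normalized jointly

print('sigmoid sum:', sig.sum())   # about 2.3584, not a distribution over classes
print('softmax sum:', soft.sum())  # 1, up to float rounding

# Raise only the third logit and compare the first two outputs
bumped = logits.clone()
bumped[2] = 5.0
print(torch.allclose(torch.sigmoid(bumped)[:2], sig[:2]))  # True: unchanged
print(torch.softmax(bumped, dim=0)[:2] < soft[:2])         # both entries shrink
```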

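2. Computing the loss

logits = [2, 3, 0.1]; label = 1

First, compute the loss with nn.BCEWithLogitsLoss(). Unlike nn.CrossEntropyLoss(), passing the class-index label directly to nn.BCEWithLogitsLoss() raises an error, because its target must have the same shape as the input. The label therefore has to be converted to one-hot form before it is passed in.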

import torch
from torch import nn

logits = torch.tensor([2, 3, 0.1], dtype=torch.float)
logits = logits.unsqueeze(0)
label = torch.tensor([1], dtype=torch.long)

print('---sigmoid output---')
sigmoid_out = torch.sigmoid(logits)
print('sigmoid_out:', sigmoid_out)
print('sigmoid_out.shape:', sigmoid_out.shape)

def sigmoid_cal(x):
    return 1 / (1 + torch.exp(-x))

sigmoid_out_v2 = sigmoid_cal(logits)
print('sigmoid_out_v2:', sigmoid_out_v2)
print('sigmoid_out_v2.shape:', sigmoid_out_v2.shape)

true_label = torch.tensor([0, 1, 0], dtype=torch.float)
true_label = true_label.unsqueeze(0)

#sigmoid_loss = nn.BCEWithLogitsLoss()(logits, true_label)
# Passing the class index directly: target shape [1] != input shape [1, 3]
sigmoid_loss = nn.BCEWithLogitsLoss()(logits, label)
print('sigmoid_loss from nn.BCEWithLogitsLoss():', sigmoid_loss)

Error:

raise ValueError("Target size ({}) must be the same as input size ({})".format(target.size(), input.size()))
ValueError: Target size (torch.Size([1])) must be the same as input size (torch.Size([1, 3]))

Convert label = 1 to the one-hot vector [0, 1, 0] and compute again:

import torch
from torch import nn

logits = torch.tensor([2, 3, 0.1], dtype=torch.float)
logits = logits.unsqueeze(0)
label = torch.tensor([1], dtype=torch.long)

print('---sigmoid output---')
sigmoid_out = torch.sigmoid(logits)
print('sigmoid_out:', sigmoid_out)
print('sigmoid_out.shape:', sigmoid_out.shape)

def sigmoid_cal(x):
    return 1 / (1 + torch.exp(-x))

sigmoid_out_v2 = sigmoid_cal(logits)
print('sigmoid_out_v2:', sigmoid_out_v2)
print('sigmoid_out_v2.shape:', sigmoid_out_v2.shape)

# One-hot target with the same shape as the logits: [1, 3]
true_label = torch.tensor([0, 1, 0], dtype=torch.float)
true_label = true_label.unsqueeze(0)

sigmoid_loss = nn.BCEWithLogitsLoss()(logits, true_label)
#sigmoid_loss = nn.BCEWithLogitsLoss()(logits, label)
print('sigmoid_loss from nn.BCEWithLogitsLoss():', sigmoid_loss)

Output:

---sigmoid output---
sigmoid_out: tensor([[0.8808, 0.9526, 0.5250]])
sigmoid_out.shape: torch.Size([1, 3])
sigmoid_out_v2: tensor([[0.8808, 0.9526, 0.5250]])
sigmoid_out_v2.shape: torch.Size([1, 3])
sigmoid_loss from nn.BCEWithLogitsLoss(): tensor(0.9733)
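Instead of typing the one-hot vector by hand, the conversion can be done with `torch.nn.functional.one_hot`. This is a sketch under the assumption that labels are integer class indices in `[0, C)`; `one_hot` returns an integer tensor, so it is cast to float to match the logits, as the hand-written `true_label` above is.

```python
import torch
import torch.nn.functional as F
from torch import nn

logits = torch.tensor([[2, 3, 0.1]], dtype=torch.float)  # [N=1, C=3]
label = torch.tensor([1], dtype=torch.long)              # class indices, [N]

# Class indices [N] -> one-hot [N, C], then cast to float
true_label = F.one_hot(label, num_classes=logits.shape[1]).float()
print(true_label)  # tensor([[0., 1., 0.]])

loss = nn.BCEWithLogitsLoss()(logits, true_label)
print(loss)  # tensor(0.9733)
```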

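So how is 0.9733 obtained? If we follow the same approach as the softmax cross-entropy above:

loss = -(0 * log(0.8808) + 1 * log(0.9526) + 0 * log(0.5250)) = 0.0486, which is not 0.9733.

With nn.CrossEntropyLoss() we can pass label = 1 directly, but nn.BCEWithLogitsLoss() requires the one-hot label [0, 1, 0]. My interpretation is that nn.BCEWithLogitsLoss() computes three binary-classification losses and averages them, while nn.CrossEntropyLoss() computes a single three-class loss.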

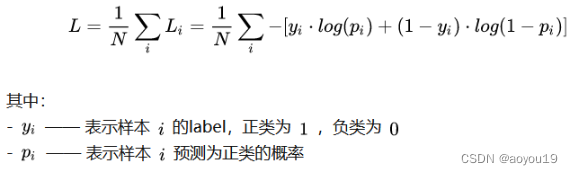
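Written out in LaTeX, the standard binary cross-entropy used in the verification below is as follows, for logit x_i, target y_i in {0, 1}, and C outputs averaged as with nn.BCEWithLogitsLoss()'s default reduction='mean' (here C = 3 and y = (0, 1, 0)):

```latex
\ell_i = -\left[\, y_i \log p_i + (1 - y_i) \log (1 - p_i) \,\right],
\qquad p_i = \sigma(x_i) = \frac{1}{1 + e^{-x_i}}

\mathcal{L} = \frac{1}{C} \sum_{i=1}^{C} \ell_i
```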

To verify this, take the sigmoid outputs [0.8808, 0.9526, 0.5250] of logits = [2, 3, 0.1] together with the one-hot label [0, 1, 0], and compute the loss with the binary cross-entropy expression:

loss_0 = -(0 * log(0.8808) + (1-0) * log(1-0.8808)) ≈ 2.127

loss_1 = -(1 * log(0.9526) + (1-1) * log(1-0.9526)) ≈ 0.049

loss_2 = -(0 * log(0.5250) + (1-0) * log(1-0.5250)) ≈ 0.744

loss = (loss_0 + loss_1 + loss_2) / 3 = 0.9733

This matches the value computed by nn.BCEWithLogitsLoss().
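PyTorch can also show the three binary losses individually. Passing `reduction='none'` to nn.BCEWithLogitsLoss() (a documented option) returns one loss per element instead of their mean; the values should match loss_0, loss_1, and loss_2 above.

```python
import torch
from torch import nn

logits = torch.tensor([[2, 3, 0.1]], dtype=torch.float)
true_label = torch.tensor([[0, 1, 0]], dtype=torch.float)

# reduction='none' keeps one loss per (sample, class) element
per_class = nn.BCEWithLogitsLoss(reduction='none')(logits, true_label)
print(per_class)         # tensor([[2.1269, 0.0486, 0.7444]])
print(per_class.mean())  # tensor(0.9733), same as the default reduction='mean'
```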

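Here the one-hot label [0, 1, 0] can be read as [[1, 0], [0, 1], [1, 0]]: each class is one-hot encoded again as its own binary problem, where 0 means the image does not have that class's attribute and 1 means it does.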
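The [[1, 0], [0, 1], [1, 0]] reading can be made concrete. The sketch below is an illustrative reformulation, not how PyTorch implements BCE: it turns each class into its own two-class softmax problem with logits [0, x_c]. Since softmax([0, x])[1] = e^x / (1 + e^x) = sigmoid(x), averaging the three two-class cross-entropies should reproduce the BCE value.

```python
import torch
from torch import nn

logits = torch.tensor([[2, 3, 0.1]], dtype=torch.float)   # [N=1, C=3]
true_label = torch.tensor([[0, 1, 0]], dtype=torch.float)  # one-hot, [1, 3]

# Per class c: 2-class logits [0, x_c], so softmax(...)[1] == sigmoid(x_c)
two_class_logits = torch.stack([torch.zeros_like(logits), logits], dim=-1)  # [1, 3, 2]
# Target index 0 = "lacks class" ([1, 0]), 1 = "has class" ([0, 1])
two_class_target = true_label.long()  # [1, 3]

# Flatten into 3 independent 2-class samples and average their cross-entropy
ce_2class = nn.CrossEntropyLoss()(two_class_logits.reshape(-1, 2),
                                  two_class_target.reshape(-1))
bce = nn.BCEWithLogitsLoss()(logits, true_label)
print(ce_2class, bce)  # both about 0.9733
```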

import torch
from torch import nn

logits = torch.tensor([2, 3, 0.1], dtype=torch.float)
logits = logits.unsqueeze(0)
label = torch.tensor([1], dtype=torch.long)

print('---sigmoid output---')
sigmoid_out = torch.sigmoid(logits)
print('sigmoid_out:', sigmoid_out)
print('sigmoid_out.shape:', sigmoid_out.shape)

def sigmoid_cal(x):
    return 1 / (1 + torch.exp(-x))

sigmoid_out_v2 = sigmoid_cal(logits)
print('sigmoid_out_v2:', sigmoid_out_v2)
print('sigmoid_out_v2.shape:', sigmoid_out_v2.shape)

true_label = torch.tensor([0, 1, 0], dtype=torch.float)
true_label = true_label.unsqueeze(0)

sigmoid_loss = nn.BCEWithLogitsLoss()(logits, true_label)
#sigmoid_loss = nn.BCEWithLogitsLoss()(logits, label)
print('sigmoid_loss from nn.BCEWithLogitsLoss():', sigmoid_loss)

# Manual: average of three binary cross-entropy terms; with targets 0, 1, 0
# they reduce to -log(1 - p0), -log(p1), -log(1 - p2)
sigmoid_loss_v2 = -1 * torch.log(torch.tensor([1 - 0.8808])) - 1 * torch.log(torch.tensor(0.9526)) - 1 * torch.log(
    torch.tensor(1 - 0.5250))
print('sigmoid_loss_v2 computed manually:', sigmoid_loss_v2 / 3)

# Wrong: softmax-style cross-entropy that only looks at the true class
err_loss = -1 * torch.log(torch.tensor(0.9526))
print('wrong err_loss:', err_loss)

Output:

---sigmoid output---
sigmoid_out: tensor([[0.8808, 0.9526, 0.5250]])
sigmoid_out.shape: torch.Size([1, 3])
sigmoid_out_v2: tensor([[0.8808, 0.9526, 0.5250]])
sigmoid_out_v2.shape: torch.Size([1, 3])
sigmoid_loss from nn.BCEWithLogitsLoss(): tensor(0.9733)
sigmoid_loss_v2 computed manually: tensor([0.9733])
wrong err_loss: tensor(0.0486)
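The manual check above uses rounded, hard-coded probabilities. A sketch of the same formula as a function (the name `naive_bce_with_logits` is hypothetical) reproduces 0.9733 for this example, and it also hints at why nn.BCEWithLogitsLoss() takes logits rather than probabilities: the PyTorch documentation describes it as more numerically stable than a plain sigmoid followed by BCE. With a large logit such as 100 (an arbitrary test value) and target 0, sigmoid rounds to exactly 1.0 in float32, so the naive version returns inf while the built-in loss returns 100.

```python
import torch
from torch import nn

def naive_bce_with_logits(x, y):
    # Direct transcription of the formula: sigmoid, then logs, then mean
    p = torch.sigmoid(x)
    return -(y * torch.log(p) + (1 - y) * torch.log(1 - p)).mean()

logits = torch.tensor([[2, 3, 0.1]], dtype=torch.float)
true_label = torch.tensor([[0, 1, 0]], dtype=torch.float)
print(naive_bce_with_logits(logits, true_label))  # about 0.9733
print(nn.BCEWithLogitsLoss()(logits, true_label))  # about 0.9733

# Large logit: p == 1.0 in float32, so log(1 - p) = log(0) = -inf
big = torch.tensor([[100.0]])
zero = torch.tensor([[0.0]])
print(naive_bce_with_logits(big, zero))   # inf
print(nn.BCEWithLogitsLoss()(big, zero))  # 100
```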

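III. Summary

1. In the example above, nn.CrossEntropyLoss() computes a single 3-class loss, whereas nn.BCEWithLogitsLoss() computes three binary-classification losses (averaged by default).

2. Sigmoid activation can be used for multi-label image classification, because each class gets its own independent probability and several classes can be positive at once.

3. In the binary cross-entropy formula, p_i is the predicted probability of the positive class. Specifically, with a softmax it is the predicted probability of class 1; with a sigmoid it is simply the sigmoid output.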
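To illustrate point 2, here is a minimal multi-label sketch. The second image's logits, the multi-hot targets, and the 0.5 decision threshold are all hypothetical; the point is that a target row may contain several 1s and each class is decided independently (sigmoid(x) > 0.5 is equivalent to x > 0).

```python
import torch
from torch import nn

# Hypothetical batch: 2 images, 3 classes; an image may carry several labels
logits = torch.tensor([[2.0, 3.0, 0.1],
                       [-1.0, 0.5, 2.0]])
targets = torch.tensor([[1.0, 1.0, 0.0],   # image 0 has classes 0 and 1
                        [0.0, 0.0, 1.0]])  # image 1 has class 2 only

loss = nn.BCEWithLogitsLoss()(logits, targets)

# Independent per-class decision with an assumed threshold of 0.5
preds = (torch.sigmoid(logits) > 0.5).int()
print(loss)
print(preds)
```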


Full experiment code

import torch
from torch import nn

logits = torch.tensor([2, 3, 0.1], dtype=torch.float)
logits = logits.unsqueeze(0)                 # shape [1, 3]
label = torch.tensor([1], dtype=torch.long)  # class index, shape [1]

print('logits:', logits)
print('logits.shape:', logits.shape)
print('label:', label)
print('label.shape:', label.shape)

print('---softmax output---')
softmax_out = torch.softmax(logits, dim=1)
print('softmax_out:', softmax_out)
print('softmax_out.shape:', softmax_out.shape)

def softmax_cal(x):
    return torch.exp(x) / torch.sum(torch.exp(x), dim=-1, keepdim=True)

softmax_out_v2 = softmax_cal(logits)
print('softmax_out_v2.shape:', softmax_out_v2.shape)

print('---computing the loss---')
softmax_loss = nn.CrossEntropyLoss()(logits, label)
print('softmax_loss from nn.CrossEntropyLoss():', softmax_loss)
print(softmax_loss.shape)

softmax_loss_v2 = -1 * torch.log(torch.tensor(0.7028))
print('softmax_loss_v2 computed manually:', softmax_loss_v2)
print(softmax_loss_v2.shape)

##########################

logits = torch.tensor([2, 3, 0.1], dtype=torch.float)
logits = logits.unsqueeze(0)
label = torch.tensor([1], dtype=torch.long)

print('---sigmoid output---')
sigmoid_out = torch.sigmoid(logits)
print('sigmoid_out:', sigmoid_out)
print('sigmoid_out.shape:', sigmoid_out.shape)

def sigmoid_cal(x):
    return 1 / (1 + torch.exp(-x))

sigmoid_out_v2 = sigmoid_cal(logits)
print('sigmoid_out_v2:', sigmoid_out_v2)
print('sigmoid_out_v2.shape:', sigmoid_out_v2.shape)

true_label = torch.tensor([0, 1, 0], dtype=torch.float)
true_label = true_label.unsqueeze(0)

sigmoid_loss = nn.BCEWithLogitsLoss()(logits, true_label)
#sigmoid_loss = nn.BCEWithLogitsLoss()(logits, label)
print('sigmoid_loss from nn.BCEWithLogitsLoss():', sigmoid_loss)

sigmoid_loss_v2 = -1 * torch.log(torch.tensor([1 - 0.8808])) - 1 * torch.log(torch.tensor(0.9526)) - 1 * torch.log(
    torch.tensor(1 - 0.5250))
print('sigmoid_loss_v2 computed manually:', sigmoid_loss_v2 / 3)

err_loss = -1 * torch.log(torch.tensor(0.9526))
print('wrong err_loss:', err_loss)
