
1. How the plugin mechanism works

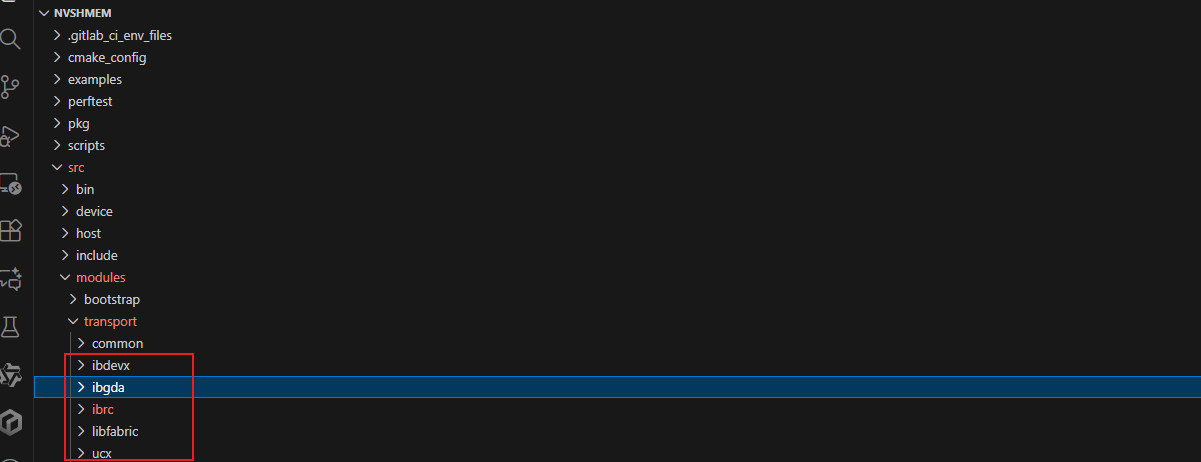

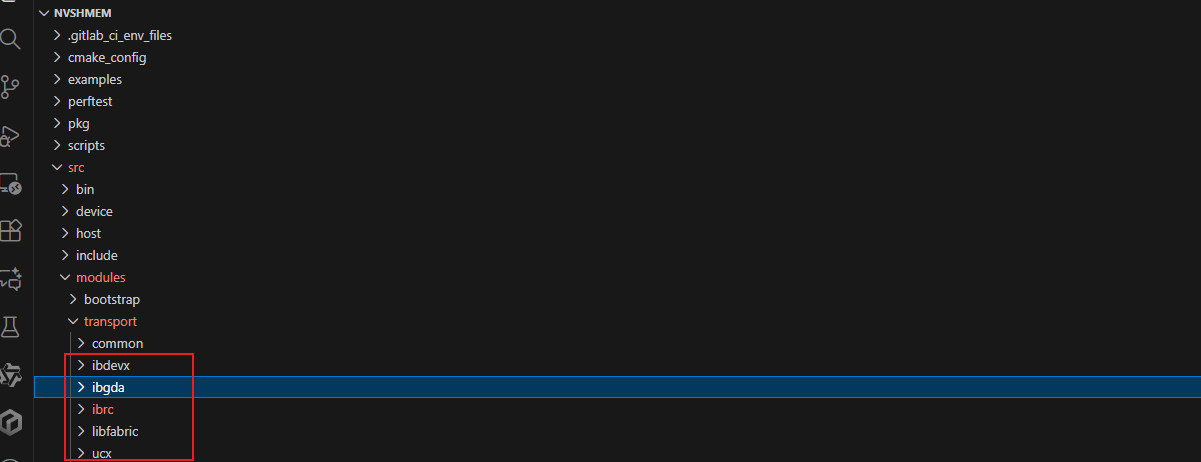

IBGDA, IBRC, IBDEVX, …: each transport mode (plugin) is built as its own .so library, and every .so provides its own nvshmemt_init.

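An environment variable decides which .so is loaded. Once it is loaded, the runtime calls that library's nvshmemt_init, which maps the transport layer's unified interface onto the library's concrete implementation.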
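The idea can be shown without any shared libraries. The sketch below is a hypothetical single-file model, not NVSHMEM code: two fake "plugins" each have their own init function that fills a common table of function pointers, and an environment variable (the made-up `DEMO_TRANSPORT`) decides which init runs. `struct demo_ops`, `demo_init_by_name`, and `demo_select_transport` are all illustrative names.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical stand-in for the unified transport interface. */
struct demo_ops {
    const char *name;
    int (*can_reach_peer)(int pe);
};

/* Toy "plugin" 1: claims every PE is reachable. */
static int ibrc_can_reach_peer(int pe) { (void)pe; return 1; }
static int ibrc_init(struct demo_ops *ops) {
    ops->name = "ibrc";
    ops->can_reach_peer = ibrc_can_reach_peer;
    return 0;
}

/* Toy "plugin" 2: pretends only even-numbered PEs are reachable. */
static int ucx_can_reach_peer(int pe) { return pe % 2 == 0; }
static int ucx_init(struct demo_ops *ops) {
    ops->name = "ucx";
    ops->can_reach_peer = ucx_can_reach_peer;
    return 0;
}

/* Run the init function that matches `choice` (NULL means the default, ibrc).
 * After this, callers only use the filled-in table, never the plugin directly. */
int demo_init_by_name(const char *choice, struct demo_ops *ops) {
    assert(ops != NULL);
    if (choice == NULL || strcmp(choice, "ibrc") == 0)
        return ibrc_init(ops);
    if (strcmp(choice, "ucx") == 0)
        return ucx_init(ops);
    return -1; /* unknown transport name */
}

/* Environment-driven selection (variable name is hypothetical). */
int demo_select_transport(struct demo_ops *ops) {
    return demo_init_by_name(getenv("DEMO_TRANSPORT"), ops);
}
```

In the real library the two init functions live in separate .so files and are reached through `dlopen`/`dlsym`, but the contract is the same: the init function's only job is to populate the shared table.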

2. Implementation details

Abstract interface definition

The unified transport interface is defined in src/include/internal/host_transport/transport.h:

```c
struct nvshmem_transport_host_ops {
    int (*can_reach_peer)(int *access, nvshmem_transport_pe_info_t *peer_info,
                          struct nvshmem_transport *transport);
    int (*connect_endpoints)(struct nvshmem_transport *tcurr, int *selected_dev_ids,
                             int num_selected_devs);
    int (*get_mem_handle)(nvshmem_mem_handle_t *mem_handle, nvshmem_mem_handle_t *mem_handle_in,
                          void *buf, size_t size, struct nvshmem_transport *transport,
                          bool local_only);
    // Function pointers for RMA and AMO operations
    rma_handle rma;
    amo_handle amo;
    fence_handle fence;
    quiet_handle quiet;
    // ... other operations
};
```

The IBGDA, IBRC, and IBDEVX plugins each implement this interface and are compiled into .so libraries.

Runtime selection

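The .so file name, stored in transport_object_file, is chosen from the environment configuration (for the remote transport, the NVSHMEM_REMOTE_TRANSPORT variable).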

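The concrete assignment logic is as follows.

IBRC transport (the default):

```c
status = snprintf(transport_object_file, transport_object_file_len,
                  "nvshmem_transport_ibrc.so.%d", NVSHMEM_TRANSPORT_PLUGIN_MAJOR_VERSION);
```

This produces a name such as nvshmem_transport_ibrc.so.3 (for plugin major version 3).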
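The excerpts store the `snprintf` result in `status` but do not show how it is checked. `snprintf` returns the length it *would* have written, so a result `>= len` means the name was truncated. A minimal sketch of the name construction with that check, using a hypothetical helper `build_transport_object_file` (whether the real loader checks exactly this way is an assumption):

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper mirroring the snprintf calls above: writes
 * "nvshmem_transport_<name>.so.<major>" into buf.
 * Returns 0 on success, -1 on an encoding error or if buf was too small. */
int build_transport_object_file(char *buf, size_t len, const char *name, int major) {
    assert(buf != NULL && name != NULL);
    int status = snprintf(buf, len, "nvshmem_transport_%s.so.%d", name, major);
    /* A return value >= len means the output was truncated. */
    if (status < 0 || (size_t)status >= len)
        return -1;
    return 0;
}
```

With `name = "ibrc"` and `major = 3` this yields `nvshmem_transport_ibrc.so.3`; with an 8-byte buffer it reports truncation instead of silently handing `dlopen` a cut-off name.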

UCX transport:

```c
status = snprintf(transport_object_file, transport_object_file_len,
                  "nvshmem_transport_ucx.so.%d", NVSHMEM_TRANSPORT_PLUGIN_MAJOR_VERSION);
```

IBDEVX transport:

```c
status = snprintf(transport_object_file, transport_object_file_len,
                  "nvshmem_transport_ibdevx.so.%d", NVSHMEM_TRANSPORT_PLUGIN_MAJOR_VERSION);
```

LIBFABRIC transport:

```c
status = snprintf(transport_object_file, transport_object_file_len,
                  "nvshmem_transport_libfabric.so.%d", NVSHMEM_TRANSPORT_PLUGIN_MAJOR_VERSION);
```

Opening the .so file:
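The four branches above amount to a lookup from a transport name to a file name, with IBRC as the fallback. A simplified sketch of that selection step, assuming the choice comes from `NVSHMEM_REMOTE_TRANSPORT` and treating any unrecognized value as an error (the real library may accept further values, such as one that disables remote transport). `select_transport_object_file`, `select_from_env`, and `PLUGIN_MAJOR_VERSION` are hypothetical names:

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical stand-in for NVSHMEM_TRANSPORT_PLUGIN_MAJOR_VERSION. */
#define PLUGIN_MAJOR_VERSION 3

/* Map a transport choice to its plugin file name; NULL or "" selects IBRC.
 * Returns 0 on success, -1 for an unknown name or a too-small buffer. */
int select_transport_object_file(const char *choice, char *buf, size_t len) {
    static const char *const known[] = {"ibrc", "ucx", "ibdevx", "libfabric"};
    assert(buf != NULL);
    const char *name = NULL;
    if (choice == NULL || choice[0] == '\0') {
        name = "ibrc"; /* default, as described above */
    } else {
        for (size_t i = 0; i < sizeof known / sizeof known[0]; i++) {
            if (strcmp(choice, known[i]) == 0) {
                name = known[i];
                break;
            }
        }
    }
    if (name == NULL)
        return -1; /* unrecognized transport */
    int n = snprintf(buf, len, "nvshmem_transport_%s.so.%d", name, PLUGIN_MAJOR_VERSION);
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}

/* Read the choice from the environment (variable name assumed). */
int select_from_env(char *buf, size_t len) {
    return select_transport_object_file(getenv("NVSHMEM_REMOTE_TRANSPORT"), buf, len);
}
```

Keeping the table of known names in one place means adding a new plugin is a one-line change rather than another `snprintf` branch.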

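```c
transport_lib = dlopen(transport_object_file, RTLD_NOW);
if (transport_lib == NULL) {
    WARN("Unable to open the %s transport. %s\n", transport_object_file, dlerror());
    goto transport_fail;
}

init_fn = (nvshmemi_transport_init_fn)dlsym(transport_lib, "nvshmemt_init");
if (init_fn == NULL) {  // the entry point must exist before it can be called
    WARN("Unable to find nvshmemt_init in %s. %s\n", transport_object_file, dlerror());
    goto transport_fail;
}

// Call the plugin's init function
status = init_fn(&transports[index], nvshmemi_cuda_syms, NVSHMEM_TRANSPORT_INTERFACE_VERSION);
```

Running nvshmemt_init inside the .so maps that plugin's functions onto the unified interface: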
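A generic, self-contained version of the `dlopen`/`dlsym` step is sketched below. It uses only the POSIX calls `dlopen`, `dlsym`, `dlerror`, and `dlclose`; the function name `load_plugin_symbol` is hypothetical. It clears `dlerror()` before `dlsym` and checks it afterwards, which is the POSIX-recommended way to detect a failed lookup. On glibc older than 2.34, link with `-ldl`.

```c
#include <assert.h>
#include <dlfcn.h>
#include <stdio.h>

/* Open a shared object with RTLD_NOW and look up one symbol, in the same
 * order as the loader above. On success, returns the symbol and stores the
 * handle in *handle_out (the caller dlclose()s it when done). On failure,
 * prints dlerror(), closes anything it opened, and returns NULL. */
void *load_plugin_symbol(const char *path, const char *symbol, void **handle_out) {
    assert(path != NULL && symbol != NULL && handle_out != NULL);
    *handle_out = NULL;

    void *handle = dlopen(path, RTLD_NOW);
    if (handle == NULL) {
        fprintf(stderr, "Unable to open %s: %s\n", path, dlerror());
        return NULL;
    }

    dlerror(); /* clear any stale error before calling dlsym */
    void *sym = dlsym(handle, symbol);
    const char *err = dlerror();
    if (err != NULL) {
        fprintf(stderr, "Unable to find %s in %s: %s\n", symbol, path, err);
        dlclose(handle);
        return NULL;
    }

    *handle_out = handle;
    return sym;
}
```

For a transport plugin, the caller would cast the result to the init-function pointer type (as the excerpt does with `nvshmemi_transport_init_fn`) and call it.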

IBGDA:

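```c
int nvshmemt_init(nvshmem_transport_t *t,
                  struct nvshmemi_cuda_fn_table *table,
                  int api_version)
{
    int status = 0;
    // nvshmem_transport_t is a pointer to struct nvshmem_transport, so the
    // plugin allocates the transport object and hands it back through *t.
    struct nvshmem_transport *transport =
        (struct nvshmem_transport *)calloc(1, sizeof(struct nvshmem_transport));
    if (transport == NULL) return NVSHMEMX_ERROR_OUT_OF_MEMORY;

    // Initialization work...

    // Fill in the host_ops struct
    transport->host_ops.can_reach_peer = nvshmemt_ibgda_can_reach_peer;
    transport->host_ops.connect_endpoints = nvshmemt_ibgda_connect_endpoints;
    transport->host_ops.get_mem_handle = nvshmemt_ibgda_get_mem_handle;
    // ... other function-pointer assignments

    *t = transport;
    return status;
}
```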

IBRC:

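```c
int nvshmemt_init(nvshmem_transport_t *t,
                  struct nvshmemi_cuda_fn_table *table,
                  int api_version)
{
    int status = 0;
    // nvshmem_transport_t is a pointer to struct nvshmem_transport, so the
    // plugin allocates the transport object and hands it back through *t.
    struct nvshmem_transport *transport =
        (struct nvshmem_transport *)calloc(1, sizeof(struct nvshmem_transport));
    if (transport == NULL) return NVSHMEMX_ERROR_OUT_OF_MEMORY;

    // Initialization work...

    // Fill in the host_ops struct
    transport->host_ops.can_reach_peer = nvshmemt_ibrc_can_reach_peer;
    transport->host_ops.connect_endpoints = nvshmemt_ibrc_connect_endpoints;
    transport->host_ops.get_mem_handle = nvshmemt_ibrc_get_mem_handle;
    // ... other function-pointer assignments

    *t = transport;
    return status;
}
```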
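Both init functions follow the same contract: allocate the transport, fill its ops table, and return it through an out-parameter. A compilable miniature of that contract is sketched below. Every type and name in it (`mini_transport`, `mini_init`, `MINI_INTERFACE_MAJOR`) is hypothetical, and the major-version check is an assumed illustration of why the loader passes an interface version, not NVSHMEM's actual compatibility rule.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical miniature of the plugin-side init contract. */
struct mini_transport;
struct mini_host_ops {
    int (*can_reach_peer)(int *access, int pe, struct mini_transport *t);
};
struct mini_transport {
    struct mini_host_ops host_ops;
    int api_major;
};
typedef struct mini_transport *mini_transport_t; /* the handle is a pointer */

#define MINI_INTERFACE_MAJOR 3 /* assumed versioning scheme */

static int mini_can_reach_peer(int *access, int pe, struct mini_transport *t) {
    (void)pe;
    (void)t;
    *access = 1; /* toy behaviour: every peer is reachable */
    return 0;
}

/* Plugin entry point: reject an incompatible interface version, allocate the
 * transport, fill its ops table, and hand it back through *out. */
int mini_init(mini_transport_t *out, int api_major) {
    assert(out != NULL);
    if (api_major != MINI_INTERFACE_MAJOR)
        return -1; /* built against a different interface */
    struct mini_transport *t = calloc(1, sizeof *t);
    if (t == NULL)
        return -1;
    t->api_major = api_major;
    t->host_ops.can_reach_peer = mini_can_reach_peer;
    *out = t;
    return 0;
}
```

Returning the object through `*out` is what lets the loader write directly into its array of transport handles, as in `init_fn(&transports[index], ...)`.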

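…

Upper layers call the unified API

Applications call the unified NVSHMEM API, and the runtime routes each call to the selected plugin's implementation (simplified here):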

```c
// Upper-layer call
nvshmem_putmem(dest, source, nbytes, pe);

// Internally routed to the plugin's rma function
transport->host_ops.rma(transport, pe, verb, remote, local, bytesdesc, is_proxy);
```
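The routing step can be modelled in a few lines. In this hypothetical, heavily simplified sketch the "plugin" rma is a `memcpy` standing in for a network write, and the upper-layer `toy_putmem` knows nothing about which plugin is installed; it only calls through `host_ops.rma`. None of these types or names are NVSHMEM's, and the real rma signature (verbs, memory descriptors, proxy flag) is richer.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, simplified model of routing a put through an ops table. */
struct toy_transport;
typedef int (*toy_rma_fn)(struct toy_transport *t, int pe, void *remote,
                          const void *local, size_t bytes);
struct toy_host_ops {
    toy_rma_fn rma;
};
struct toy_transport {
    struct toy_host_ops host_ops;
};

/* Toy "plugin" rma: a local memcpy standing in for a remote write. */
static int toy_loopback_rma(struct toy_transport *t, int pe, void *remote,
                            const void *local, size_t bytes) {
    (void)t;
    (void)pe;
    memcpy(remote, local, bytes);
    return 0;
}

/* "Plugin init": install the implementation in the ops table. */
void toy_transport_init_loopback(struct toy_transport *t) {
    t->host_ops.rma = toy_loopback_rma;
}

/* Upper-layer API: argument order mirrors nvshmem_putmem(dest, source, nelems, pe);
 * it dispatches purely through the function pointer. */
int toy_putmem(struct toy_transport *t, void *dest, const void *src,
               size_t bytes, int pe) {
    assert(t != NULL && t->host_ops.rma != NULL);
    return t->host_ops.rma(t, pe, dest, src, bytes);
}
```

Swapping in a different plugin only changes which function `toy_transport_init_loopback` (or its counterpart) stores; `toy_putmem` stays untouched, which is the point of the unified interface.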
