The indexer consists mainly of:

an HTML parser, a word generator, a URL id generator, an inverted index (keyword -> [docId]), and a docs table (url, id, refCount)

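The word generator takes a piece of text and returns an iterable of words. Its parameters are the minimum word length and an exclude list. The basic implementation is natural English word segmentation; other modules can be plugged in to support segmentation strategies for Chinese, phrases containing spaces, and so on.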
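A minimal sketch of such a pluggable word generator, written for Python 3. The names `make_word_generator`, `tokenize`, `english` and `phrases` are hypothetical and used only for illustration; the default tokenizer simply takes runs of ASCII letters.

```python
import re


def make_word_generator(min_word_len=4, exclude=(), tokenize=None):
    """Return a function that maps text to an iterator of indexable words."""
    exclude = frozenset(exclude)
    if tokenize is None:
        # Default strategy: natural English segmentation on runs of letters.
        def tokenize(text):
            return re.findall(r"[A-Za-z]+", text)

    def words(text):
        for word in tokenize(text):
            if len(word) >= min_word_len and word not in exclude:
                yield word

    return words


english = make_word_generator(4, ["under", "because", "these", "those"])
print(list(english("pages are indexed because these link under china news")))
# ['pages', 'indexed', 'link', 'china', 'news']

# Plugging in another strategy: comma-separated phrases that may contain spaces.
phrases = make_word_generator(
    min_word_len=1,
    tokenize=lambda text: [p.strip() for p in text.split(",")],
)
print(list(phrases("search engine, inverted index")))
# ['search engine', 'inverted index']
```

A Chinese segmenter could be passed in through the same `tokenize` argument without touching the length and exclude-list filtering.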

Inverted index parameter: mainly the maximum chain (posting list) length, max_chain_len
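As a rough illustration of how max_chain_len bounds each chain, here is a small Python 3 sketch. The class name `InvertedIndex` and its `add` method are assumptions made for this example, not part of the original listing.

```python
from collections import defaultdict


class InvertedIndex:
    """keyword -> [docId, ...], with each chain capped at max_chain_len."""

    def __init__(self, max_chain_len=100):
        self.max_chain_len = max_chain_len
        self.chains = defaultdict(list)

    def add(self, word, doc_id):
        chain = self.chains[word]
        if chain and chain[-1] == doc_id:
            return  # word already recorded for this document
        if len(chain) < self.max_chain_len:
            chain.append(doc_id)  # silently drop docs once the chain is full


index = InvertedIndex(max_chain_len=2)
for doc_id, word in [(0, "china"), (0, "china"), (1, "china"), (2, "china"), (2, "news")]:
    index.add(word, doc_id)
print(dict(index.chains))  # {'china': [0, 1], 'news': [2]}
```

The duplicate check only looks at the last entry, which is enough as long as documents are indexed one at a time.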

The indexer drives the main flow, which is a BFS. The objects involved in the main flow are:

a queue

visited: this role can be filled by the docs table

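Main indexer parameters:

index file directory, index_dir

seed URLs (the starting points of the crawl; there can be several, placed in the queue in advance)

crawl depth, depth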
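The BFS main flow with these parameters can be sketched as follows (Python 3). To keep the example runnable without network access, page fetching is replaced by a hypothetical `fetch_links` callback over an in-memory link graph; `crawl` and `graph` are likewise illustrative names.

```python
from collections import deque


def crawl(seeds, depth, fetch_links):
    """Level-by-level BFS; docs maps url -> [doc_id, ref_count] and doubles as visited."""
    docs, queue, order = {}, deque(), []
    for url in seeds:  # seeds are placed in the queue in advance
        if url not in docs:
            docs[url] = [len(docs), 0]
            queue.append(url)
    while queue and depth > 0:
        for _ in range(len(queue)):  # exactly one BFS level per depth step
            url = queue.popleft()
            order.append(url)
            for link in fetch_links(url):
                if link in docs:
                    docs[link][1] += 1  # seen before: one more reference
                else:
                    docs[link] = [len(docs), 1]
                    queue.append(link)
        depth -= 1
    return order, docs


graph = {"a": ["b", "c"], "b": ["c", "d"], "c": ["a"], "d": []}
order, docs = crawl(["a"], depth=2, fetch_links=lambda url: graph.get(url, []))
print(order)  # ['a', 'b', 'c']
print(docs)   # {'a': [0, 1], 'b': [1, 1], 'c': [2, 2], 'd': [3, 1]}
```

Note that `d` is discovered and counted but never visited, because the depth budget is used up after the second level.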

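The actual work is done by the HTML parser, which does two things:

1) collects new URLs (parameter: whether to follow external links, which has a large effect on crawl size)

2) tokenizes the text and indexes it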
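For the first task, one possible way to turn an href into a crawlable URL is sketched below in Python 3, using `urllib.parse` from the standard library. The function name `resolve_link`, the `follow_external` flag, and the extension list are assumptions for illustration; "external" here means a different host than the page being parsed.

```python
from urllib.parse import urldefrag, urljoin, urlparse

SKIPPED_EXTENSIONS = {"jpg", "jpeg", "gif", "bmp", "flv", "mp4", "wmv", "swf", "css"}


def resolve_link(page_url, href, follow_external=False):
    """Return an absolute URL worth crawling, or None if the link is skipped."""
    link, _fragment = urldefrag(urljoin(page_url, href.strip()))
    parsed = urlparse(link)
    if parsed.scheme not in ("http", "https"):
        return None  # mailto:, javascript:, ...
    ext = parsed.path.rsplit(".", 1)[-1].lower() if "." in parsed.path else ""
    if ext in SKIPPED_EXTENSIONS:
        return None  # media and stylesheets carry no indexable text
    if not follow_external and parsed.netloc != urlparse(page_url).netloc:
        return None  # staying on one host keeps the crawl small
    return link.rstrip("/")


page = "http://english.sina.com/news/china/index.html"
print(resolve_link(page, "/sports/"))              # http://english.sina.com/sports
print(resolve_link(page, "logo.gif"))              # None
print(resolve_link(page, "http://example.com/x"))  # None
print(resolve_link(page, "http://example.com/x", follow_external=True))
```

Stripping the fragment and the trailing slash keeps the same page from entering the docs table under several spellings.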

Index files:

Index.txt: the inverted index

PageRank.txt: the doc table (url, id, refCount)
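The two files use a simple line format: `word id1,id2,...` for the inverted index and `url id refCount` for the doc table. A Python 3 sketch of writing and reading that format on lists of lines follows; the helper names are hypothetical, and URLs or words containing spaces are assumed not to occur.

```python
def dump_index(index):
    """index: word -> [docId, ...]  ->  lines of 'word id1,id2,...'."""
    return ["%s %s\n" % (word, ",".join(map(str, chain)))
            for word, chain in sorted(index.items())]


def dump_docs(docs):
    """docs: url -> [docId, refCount]  ->  lines of 'url id refCount'."""
    return ["%s %d %d\n" % (url, doc_id, ref_count)
            for url, (doc_id, ref_count) in sorted(docs.items())]


def load_index(lines):
    index = {}
    for line in lines:
        word, chain = line.split()
        index[word] = [int(doc_id) for doc_id in chain.split(",")]
    return index


def load_docs(lines):
    """Keyed by docId on load, because the searcher looks documents up by id."""
    docs = {}
    for line in lines:
        url, doc_id, ref_count = line.split()
        docs[int(doc_id)] = {"url": url, "refCount": int(ref_count)}
    return docs


index = {"china": [0, 2], "news": [1]}
docs = {"http://site/a": [0, 3], "http://site/b": [1, 1]}
print(dump_index(index))  # ['china 0,2\n', 'news 1\n']
print(dump_docs(docs))    # ['http://site/a 0 3\n', 'http://site/b 1 1\n']
```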

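Searcher: loads the index and answers queries

When loading the inverted index, it sorts each chain by reference count

The in-memory index structure can be a trie, a hash map, or a balanced tree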
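A short Python 3 sketch of the load-time sort, using plain dicts (hash maps) as the in-memory structure. It assumes the intended order is descending, so that the most-referenced pages come first; `sort_chains` and `search` are illustrative names.

```python
def sort_chains(index, docs):
    """Order every chain by reference count, most-referenced documents first."""
    for chain in index.values():
        chain.sort(key=lambda doc_id: docs[doc_id]["refCount"], reverse=True)


def search(index, docs, keyword):
    """Chains are pre-sorted at load time, so a query is a single lookup."""
    return [docs[doc_id]["url"] for doc_id in index.get(keyword, [])]


docs = {
    0: {"url": "http://site/a", "refCount": 1},
    1: {"url": "http://site/b", "refCount": 5},
    2: {"url": "http://site/c", "refCount": 3},
}
index = {"china": [0, 1, 2]}
sort_chains(index, docs)
print(search(index, docs, "china"))
# ['http://site/b', 'http://site/c', 'http://site/a']
print(search(index, docs, "missing"))  # []
```

Sorting once at load time moves the ranking cost out of the query path, which fits an index that does not change while the searcher is running.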

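Because pages are not stored, doing everything in memory is basically sufficient. At larger scale (for example, when pages must be stored or there is more data), there are two directions:

1) Similar to the current design, except that when the in-memory inverted index and doc table grow large, they are dumped to files. The index and the page store are thus partitioned by file, that is, there are multiple index files, and the searcher has to query several indexes and then aggregate the results.

2) When analyzing a page, do not update the index directly; only emit log records such as (word1 -> doc1), (word2 -> doc1). Another process feeds on these logs and updates the inverted index, the doc table, and so on. This is the MapReduce approach.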
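Both directions can be sketched in a few lines of Python 3. This is a single-process illustration under simplifying assumptions, not a distributed implementation: each partition is an (index, docs) pair held in memory, and the function names are hypothetical.

```python
from collections import defaultdict


def search_partitions(partitions, keyword):
    """Direction 1: query every partition, then aggregate hits by refCount."""
    hits = []
    for index, docs in partitions:
        for doc_id in index.get(keyword, []):
            hits.append((docs[doc_id]["refCount"], docs[doc_id]["url"]))
    hits.sort(key=lambda hit: hit[0], reverse=True)
    return [url for _, url in hits]


def map_page(doc_id, words):
    """Direction 2, map step: emit one (word, doc_id) log record per distinct word."""
    return [(word, doc_id) for word in sorted(set(words))]


def reduce_log(records):
    """Direction 2, reduce step: fold the log records into an inverted index."""
    index = defaultdict(list)
    for word, doc_id in sorted(records):
        index[word].append(doc_id)
    return dict(index)


part1 = ({"china": [0]}, {0: {"url": "http://site/a", "refCount": 2}})
part2 = ({"china": [0, 1]}, {0: {"url": "http://site/b", "refCount": 7},
                             1: {"url": "http://site/c", "refCount": 1}})
print(search_partitions([part1, part2], "china"))
# ['http://site/b', 'http://site/a', 'http://site/c']

log = map_page(0, ["china", "news", "china"]) + map_page(1, ["china"])
print(reduce_log(log))  # {'china': [0, 1], 'news': [0]}
```

In the partitioned case doc ids are only unique within a partition, which is why the aggregation step works on URLs rather than ids.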

indexer

from urllib2 import urlopen, Request
from HTMLParser import HTMLParser
from urlparse import urlparse
from Queue import Queue
import sys

# Python 2 only: make utf-8 the default codec for implicit str/unicode conversions.
reload(sys)
sys.setdefaultencoding("utf-8")

base_url, index_dir = urlparse('http://english.sina.com/news/china'), 'c:\\indexer'
min_word_len, max_chain_len, excludeList = 4, 100, ['under', 'because', 'these', 'those']
# docs maps url -> [docId, refCount]; it also serves as the visited set.
index, docs, queue = {}, {base_url.geturl(): [0, 0]}, Queue(0)
# seq is the docId of the page being parsed; indexed counts pages fetched successfully.
seq, url, indexed = 0, '', 0
queue.put(base_url.geturl())

def get_words(data):
    # Yield every maximal run of letters that is long enough and not excluded.
    for i in xrange(0, len(data)):
        if data[i].isalpha() and (i == 0 or not data[i - 1].isalpha()): word_start = i
        if data[i].isalpha() and (i == len(data) - 1 or not data[i + 1].isalpha()):
            word = data[word_start: i + 1]
            if len(word) >= min_word_len and word not in excludeList: yield word

class MyParser(HTMLParser):
    def __init__(self):
        HTMLParser.__init__(self)
        self.__stack = []

    def handle_starttag(self, tag, attrList):
        self.__stack.append(tag)
        attrs = {}
        for kv in attrList: attrs[kv[0]] = kv[1]
        if tag == 'a':
            try:
                link = attrs['href']
                pr = urlparse(link)
                # Skip media and stylesheet links.
                i = pr.path.find('.')
                if (i > 0 and pr.path[i + 1:] in ['jpg', 'jpeg', 'gif', 'bmp', 'flv', 'mp4', 'wmv','swf','css']): return
                if pr.scheme == '':
                    # Relative link: resolve against the site root or the current page.
                    if pr.path[0] == '/': link = 'http://' + base_url.netloc + link
                    else: link = url[0: url.rindex('/') + 1] + link
                elif pr.scheme != 'http' or pr.netloc != base_url.netloc: return
                if link[-1] == '/': link = link[0: -1]
                link = link.strip()
                if link not in docs:
                    # A list rather than a tuple, so refCount can be incremented later.
                    docs[link] = [len(docs), 1]
                    queue.put(link)
                else: docs[link][1] += 1
            except Exception, e: return

    def handle_endtag(self, tag):
        if len(self.__stack) > 0: self.__stack.pop()

    def handle_data(self, data):
        if len(self.__stack) > 0 and self.__stack[-1] in ['script', 'style']: return
        for word in get_words(data):
            if word not in index: index[word] = []
            if len(index[word]) > 0 and index[word][-1] == seq: continue
            if len(index[word]) < max_chain_len: index[word].append(seq)

parser, depth = MyParser(), 2

# BFS: each pass of the outer loop processes one level of the crawl.
while not queue.empty() and depth > 0:
    for i in xrange(queue.qsize()):
        url = queue.get()
        # Index under the page's docId so the ids stay aligned with PageRank.txt
        # even when an earlier page failed to download.
        seq = docs[url][0]
        try:
            parser.feed(urlopen(Request(url, headers={'User-agent': 'Mozilla 5.10'})).read())
            print url, "indexed."
            indexed += 1
        except Exception, e: print 'Failed to index', url, '. the error is', e
    depth -= 1

with open(index_dir + '\\PageRank.txt','w') as url_file:
    url_file.writelines(map(lambda e: e[0] + ' ' + str(e[1][0]) + ' ' + str(e[1][1]) + '\n', docs.items()))

with open(index_dir + '\\Index.txt','w') as index_file:
    index_file.writelines( map(lambda e: e[0] + ' ' + ','.join(map(str, e[1])) + '\n', index.items()))

print indexed,'pages indexed. Keyword number:', len(index)

Indexer run results:

Searcher

import sys

# Must point at the directory the indexer wrote its files to.
index_dir = 'c:\\indexer2'

class Searcher:
    def __init__(self, base_dir):
        self.docs, self.index = {}, {}
        # Doc table: one "url docId refCount" entry per line.
        with open(base_dir + '\\PageRank.txt') as url_file:
            for line in url_file.xreadlines():
                try:
                    url, docId, refCount = line.split(' ')
                    self.docs[int(docId)] = {'url': url, 'refCount': int(refCount)}
                except Exception, e: pass #print 'invalid doc entry:', line
        # Inverted index: one "word id1,id2,..." entry per line.
        with open(base_dir + '\\Index.txt') as index_file:
            for word, chain in map(lambda line: line.split(' '), index_file.readlines()):
                self.index[word] = map(int, chain.split(','))
                try:
                    # Most-referenced pages first.
                    self.index[word].sort(key=lambda docId: self.docs[docId]['refCount'], reverse=True)
                except Exception, e: pass #print 'Failed to sort chain for keyword:' , word

    def search(self, keyword):
        if keyword not in self.index: return []
        return map(lambda docId: self.docs[docId]['url'], self.index[keyword])

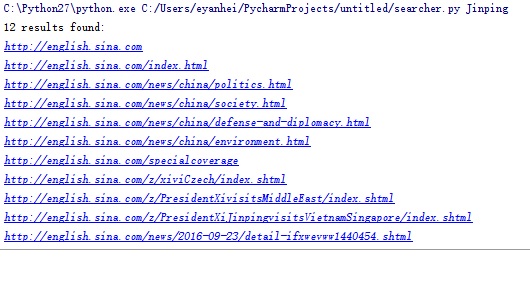

hits = Searcher(index_dir).search(sys.argv[1])
print len(hits), 'results found:'
for hit in hits: print hit

Searcher results:
