1. User-defined functions (UDF)

Example:

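import org.apache.spark.SparkConf
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.SparkSession

// Case class describing one input record
case class Hobbies(name: String, hobbies: String)

object LianXi2 {
  def main(args: Array[String]): Unit = {
    // Create the Spark configuration object
    val conf = new SparkConf().setMaster("local[*]").setAppName("linxi2")
    // Build the SparkSession from that configuration and reuse its SparkContext
    val spark = SparkSession.builder().config(conf).getOrCreate()
    val sc = spark.sparkContext

    // Import the implicit conversions that toDF() needs
    import spark.implicits._

    // Read the data from an external file.
    // The parsing below assumes lines of the form "name hobby1,hobby2,..."
    val rdd: RDD[String] = sc.textFile("in/tmp/hobbies.txt")

    // Parse each line into a Hobbies record and convert the RDD to a DataFrame
    val hobbyDF = rdd.map(_.split(" ")).map(p => Hobbies(p(0), p(1))).toDF()
    hobbyDF.show()

    // Register the DataFrame as a temporary view
    hobbyDF.createOrReplaceTempView("hobby")

    // Register the UDF; the body is an anonymous function that counts comma-separated hobbies
    spark.udf.register("hobby_num", (x: String) => x.split(",").size)

    // Call the UDF from SQL
    spark.sql("select name,hobbies,hobby_num(hobbies) as hobynum from hobby")
      .show(false) // false: do not truncate long column values
  }
}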
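Because the body of `hobby_num` is an ordinary Scala function, its counting logic can be checked without starting Spark. The sketch below is a hypothetical null-safe variant: the names `HobbyCount` and `hobbyNum` are invented here and are not part of the original code. It assumes that a null or blank hobbies value should count as 0, whereas the original lambda returns 1 for an empty string and would likely fail on null.

```scala
object HobbyCount {
  // Hypothetical null-safe variant of the hobby_num logic.
  // Assumption: null or blank input counts as zero hobbies.
  def hobbyNum(hobbies: String): Int =
    Option(hobbies)              // None when the input is null
      .map(_.trim)               // ignore surrounding whitespace
      .filter(_.nonEmpty)        // treat blank strings as "no hobbies"
      .map(_.split(",").length)  // count the comma-separated entries
      .getOrElse(0)
}
```

If this behaviour is wanted, the function could be registered in the same way as the lambda, for example with `spark.udf.register("hobby_num", HobbyCount.hobbyNum _)`.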

2. User-defined aggregate functions (UDAF)

Example:

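import org.apache.spark.SparkConf
import org.apache.spark.sql.{DataFrame, Row, SparkSession}
import org.apache.spark.sql.expressions.{MutableAggregationBuffer, UserDefinedAggregateFunction}
import org.apache.spark.sql.types.{DataType, DoubleType, LongType, StructType}

// Student is not defined in the listing; this definition is inferred from how it is used below
case class Student(id: Int, name: String, gender: String, age: Int)

object LianXi2 {
  def main(args: Array[String]): Unit = {
    // Create the Spark configuration object
    val conf = new SparkConf().setMaster("local[*]").setAppName("linxi2")
    // Build the SparkSession from that configuration
    val spark = SparkSession.builder().config(conf).getOrCreate()

    val students = Seq(
      Student(1, "zhangsan", "F", 21), Student(2, "lisi", "M", 25),
      Student(3, "wangwu", "M", 28), Student(4, "zhaoliu", "F", 40),
      Student(5, "sunqi", "F", 33), Student(6, "qianba", "M", 16),
      Student(7, "a", "M", 55), Student(8, "b", "F", 19),
      Student(9, "c", "F", 20), Student(10, "d", "M", 35),
      Student(11, "e", "M", 15), Student(12, "f", "F", 45))

    // Import the implicit conversions that toDF() needs
    import spark.implicits._

    // Convert the students sequence to a DataFrame
    val frame: DataFrame = students.toDF()

    // Register the DataFrame as a temporary view
    frame.createOrReplaceTempView("students")

    // Instantiate the MyAgeAvgFunction class defined below
    val myUDAF = new MyAgeAvgFunction

    // Register the UDAF under the name myAvg
    spark.udf.register("myAvg", myUDAF)

    // Equivalent query with the built-in function:
    // select gender, avg(age) from students group by gender
    val resultDF: DataFrame = spark.sql(
      "select gender,myAvg(age) as avgage from students group by gender"
    )

    // Print the schema of the result
    resultDF.printSchema()

    // Print the rows of the result
    resultDF.show()
  }
}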

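// Custom aggregate function that computes the average age.
// Note: UserDefinedAggregateFunction is deprecated since Spark 3.0 in favor of Aggregator.
class MyAgeAvgFunction extends UserDefinedAggregateFunction {
  // Schema of the input passed to the aggregate function
  override def inputSchema: StructType = {
    new StructType().add("age", LongType)
  }

  // Schema of the intermediate aggregation buffer
  override def bufferSchema: StructType = {
    new StructType().add("sum", LongType).add("count", LongType)
  }

  // Data type of the value returned by the aggregate function
  override def dataType: DataType = DoubleType

  // Whether the function always returns the same output for the same input
  override def deterministic: Boolean = true

  // Initialize the buffer
  override def initialize(buffer: MutableAggregationBuffer): Unit = {
    buffer(0) = 0L // running sum of the ages seen so far, e.g. 76 for [12, 23, 41]
    buffer(1) = 0L // number of rows seen so far, e.g. 3
  }

  // Update the buffer with one new input row
  override def update(buffer: MutableAggregationBuffer, input: Row): Unit = {
    buffer(0) = buffer.getLong(0) + input.getLong(0)
    buffer(1) = buffer.getLong(1) + 1
  }

  // Merge the buffers produced by different partitions
  override def merge(buffer1: MutableAggregationBuffer, buffer2: Row): Unit = {
    // Total of all ages
    buffer1(0) = buffer1.getLong(0) + buffer2.getLong(0)
    // Total number of rows
    buffer1(1) = buffer1.getLong(1) + buffer2.getLong(1)
  }

  // Compute the final result: sum divided by count
  override def evaluate(buffer: Row): Any = {
    buffer.getLong(0).toDouble / buffer.getLong(1)
  }
}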
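The four callbacks above (initialize, update, merge, evaluate) can be traced in plain Scala without Spark. The following is only a sketch of that lifecycle: `AvgBuffer` and `AvgSketch` are hypothetical names standing in for the (sum, count) buffer, and the nested sequences simulate partitions. It does not reproduce how Spark actually schedules the calls.

```scala
// Hypothetical stand-in for the (sum, count) aggregation buffer.
final case class AvgBuffer(sum: Long, count: Long)

object AvgSketch {
  // initialize: start from an empty buffer
  val zero: AvgBuffer = AvgBuffer(0L, 0L)

  // update: fold one input value into a partition-local buffer
  def update(b: AvgBuffer, age: Long): AvgBuffer =
    AvgBuffer(b.sum + age, b.count + 1)

  // merge: combine the buffers of two partitions
  def merge(a: AvgBuffer, b: AvgBuffer): AvgBuffer =
    AvgBuffer(a.sum + b.sum, a.count + b.count)

  // evaluate: turn the final buffer into the average
  def evaluate(b: AvgBuffer): Double = b.sum.toDouble / b.count

  // Simulate the flow: aggregate each partition, merge the partial buffers, then evaluate.
  def average(partitions: Seq[Seq[Long]]): Double = {
    val partials = partitions.map(_.foldLeft(zero)(update))
    evaluate(partials.foldLeft(zero)(merge))
  }
}
```

For example, `AvgSketch.average(Seq(Seq(12L, 23L), Seq(41L)))` sums to 76 over 3 values regardless of how the ages are split, which is why the buffer keeps the sum and the count separately rather than a running average.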

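3. User-defined table-generating functions (UDTF)

Example (the sample input line is shown in the comment inside MyUDTF):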

package fuction

import java.util

import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF
import org.apache.hadoop.hive.serde2.objectinspector.{ObjectInspector, ObjectInspectorFactory, StructObjectInspector}
import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory
import org.apache.spark.SparkConf
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.{DataFrame, SparkSession}

object UDTF2 {
  def main(args: Array[String]): Unit = {
    // val conf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("udtfdemo")
    val spark = SparkSession.builder()
      .master("local[*]")
      .enableHiveSupport() // Hive support is required for Hive UDTFs
      .appName("SparkUDTFDemo")
      .getOrCreate()
    val sc = spark.sparkContext

    // Import the implicit conversions that toDF() needs
    import spark.implicits._

    // Read the data from an external file
    val rdd: RDD[String] = sc.textFile("in/tmp/udtf.txt")

    // Split each line on "//", keep only the rows whose name is "ls",
    // and convert the result to a DataFrame
    val frame: DataFrame = rdd.map(x => x.split("//"))
      .filter(x => x(1).equals("ls"))
      .map(x => (x(0), x(1), x(2)))
      .toDF("id", "name", "class")

    // Register the DataFrame as a temporary view
    frame.createOrReplaceTempView("udtfable")

    // Register the Hive UDTF as a temporary function.
    // The class name must be the fully qualified name of MyUDTF (package fuction in this file).
    spark.sql("CREATE TEMPORARY FUNCTION myudtf AS 'fuction.MyUDTF'")

    // Call the UDTF from SQL
    spark.sql("select myudtf(class) from udtfable").show()
  }
}

class MyUDTF extends GenericUDTF{

override def initialize(argOIs: StructObjectInspector): StructObjectInspector = {

val fieldName = new java.util.ArrayList[String]()

val fieldOIS =new util.ArrayList[ObjectInspector]()

fieldName.add("type")

fieldOIS.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector)

ObjectInspectorFactory.getStandardStructObjectInspector(fieldName,fieldOIS)

}

/*

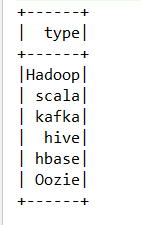

02//ls//Hadoop scala kafka hive hbase Oozie

输出 head type string

Hadoop

scala

kafka

hive

hbase

Oozie

*/

override def process(objects: Array[AnyRef]): Unit = {

//将字符串切割成单个的字符数组

val strings: Array[String] = objects(0).toString.split(" ")

for(str<-strings){

var temp: Array[String] = new Array[String](1)

temp(0)=str

forward(temp)

}

}

override def close(): Unit = {}

}
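The one-row-in, many-rows-out behaviour of the UDTF can be previewed in plain Scala before wiring it into Hive. The sketch below is hypothetical (`ExplodeSketch` and `explodeSkills` are names invented here) and assumes the `id//name//skills` line layout shown in the sample comment; it mirrors the splitting done in `main` and `process` but does not call any Hive or Spark API.

```scala
object ExplodeSketch {
  // Hypothetical helper: turn one "id//name//skills" line into one row per skill.
  def explodeSkills(line: String): List[String] = {
    val fields = line.split("//")
    // Assumption: well-formed lines have at least three fields; anything else yields no rows.
    if (fields.length < 3) Nil
    else fields(2).split(" ").filter(_.nonEmpty).toList
  }
}
```

Applied to the sample line `02//ls//Hadoop scala kafka hive hbase Oozie`, this yields the six words as separate elements. For this particular case, Spark SQL's built-in `explode(split(class, ' '))` would probably produce the same rows without a custom Hive UDTF.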
