1. A ZooKeeper + Hadoop + HBase cluster is already running on Linux

| hostname | ip | Components |
|---|---|---|
| node0 | 192.168.1.160 | zookeeper,namenode,NodeManager,HMaster,HRegionServer |
| node1 | 192.168.1.161 | zookeeper,datanode,NodeManager,HRegionServer |
| node2 | 192.168.1.162 | zookeeper,datanode,ResourceManager,HMaster,HRegionServer |

2. Set up an HBase application development environment on Windows

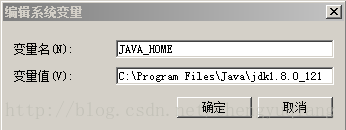

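2.1 Install and configure the JDK

Download JDK 1.8 and configure the environment variables (the pom.xml used later reads JAVA_HOME).

2.2 Install and configure Maven

Download apache-maven-3.3.9, unzip it, and configure the environment variables.

Open CMD and run mvn -v

The Maven version information should be printed.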

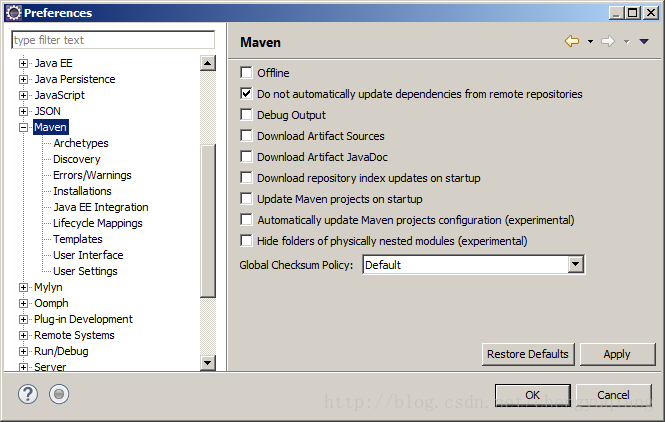

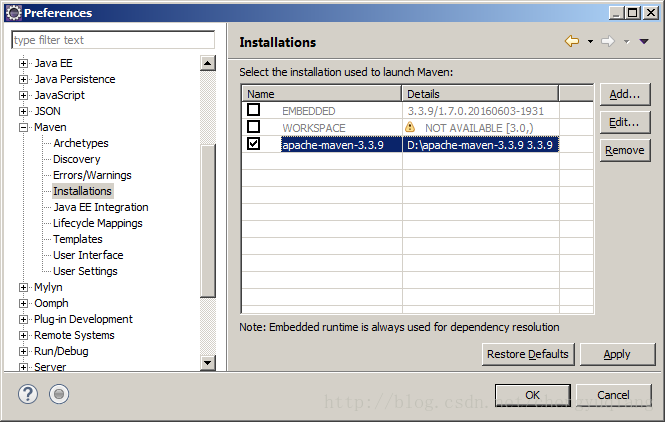

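2.3 Configure Eclipse

Current eclipse-jee (Eclipse IDE for Java EE Developers) releases already bundle the Maven plugin.

Open Window > Preferences and select Maven in the left pane to see the installed Maven.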

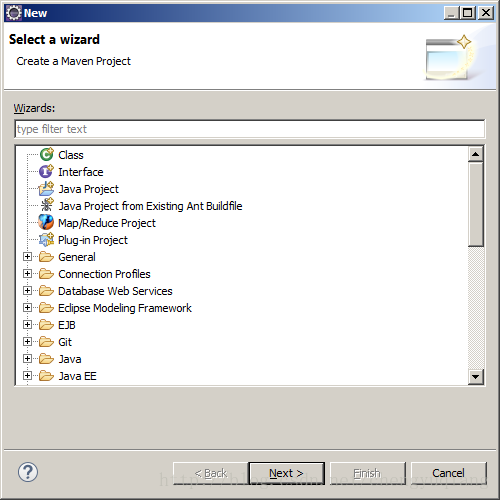

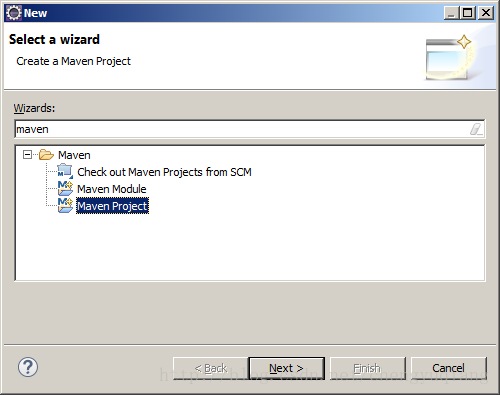

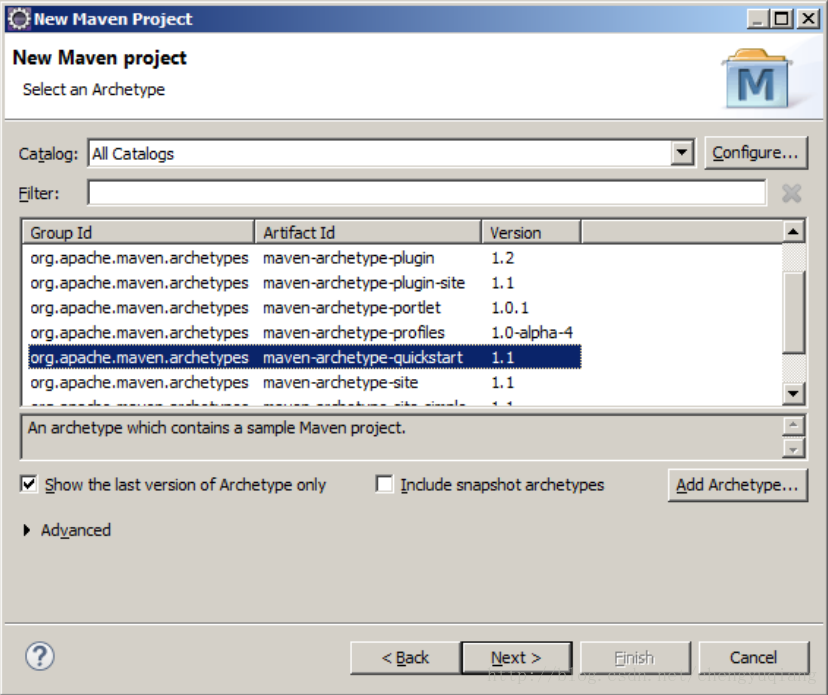

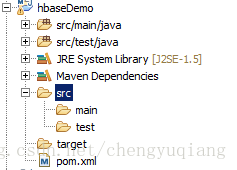

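2.4 Create a Maven project

In the New Project dialog, type maven into the Wizards filter.

Dependencies can be looked up at http://mvnrepository.com/

For example, to pull in the Spring core JAR spring-core, open Maven Repository and search for spring-core.

Select version 3.2.0.RELEASE (the latest at the time of writing); the page shows its dependency declaration, highlighted in the red box:

Copy it into the <dependencies> element of pom.xml. Maven then downloads the JAR into the local repository and attaches it to the project. Once the download finishes, expand External Libraries in the project tree to see it.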
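To make "downloads the JAR into the local repository" concrete, here is a minimal sketch of how Maven coordinates map onto a path in the local repository. It assumes the default repository location (`.m2/repository` under the user's home directory) and the standard layout; the class and method names are made up for illustration and are not part of Maven.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: maps Maven coordinates to the standard local-repository layout.
final class LocalRepoPath {

    // Dots in the groupId become directories; the JAR is named artifactId-version.jar.
    static Path jarPath(Path repoRoot, String groupId, String artifactId, String version) {
        return repoRoot
                .resolve(groupId.replace('.', '/'))
                .resolve(artifactId)
                .resolve(version)
                .resolve(artifactId + "-" + version + ".jar");
    }

    public static void main(String[] args) {
        // Assumption: the repository lives in the default place, <user home>/.m2/repository
        Path repo = Paths.get(System.getProperty("user.home"), ".m2", "repository");
        System.out.println(jarPath(repo, "org.springframework", "spring-core", "3.2.0.RELEASE"));
    }
}
```

If a dependency fails to resolve in Eclipse, checking whether the JAR exists at the printed path is a quick first diagnostic.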

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

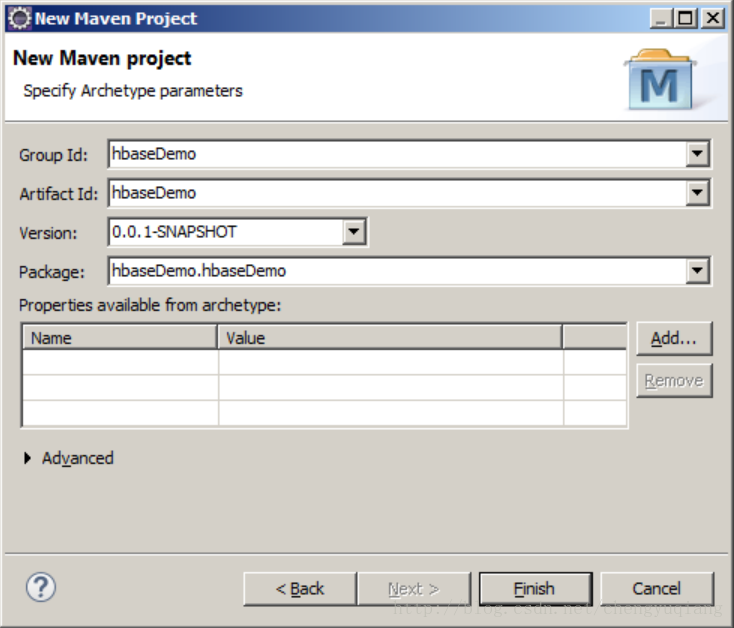

<groupId>hbaseDemo</groupId>

<artifactId>hbaseDemo</artifactId>

<version>0.0.1-SNAPSHOT</version>

<packaging>jar</packaging>

<name>hbaseDemo</name>

<url>http://maven.apache.org</url>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

</properties>

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>3.8.1</version>

<scope>test</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-hdfs -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.7.3</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hbase/hbase-client -->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>1.2.4</version>

</dependency>

<dependency>

<groupId>jdk.tools</groupId>

<artifactId>jdk.tools</artifactId>

<version>1.8</version>

<scope>system</scope>

<systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>

</dependency>

</dependencies>

</project>题外话,如果是WEB项目,还需要在pom.xml中导入 javaee-api.jar

<dependency>

<groupId>javax</groupId>

<artifactId>javaee-api</artifactId>

<version>7.0</version>

</dependency>2.5 编写应用程序

package hbaseDemo.dao;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.util.Bytes;

public class HbaseDao {

    private static Configuration conf = HBaseConfiguration.create();

    static {
        conf.set("hbase.rootdir", "hdfs://cc/hbase");
        // Configure ZooKeeper directly by IP address (the three nodes listed in section 1)
        conf.set("hbase.zookeeper.quorum", "192.168.1.160,192.168.1.161,192.168.1.162");
    }

    // Create a table with one column family
    public static void createTable(String tablename, String columnFamily) throws Exception {
        // try-with-resources closes admin and connection even when an exception is thrown
        try (Connection connection = ConnectionFactory.createConnection(conf);
                Admin admin = connection.getAdmin()) {
            TableName tableNameObj = TableName.valueOf(tablename);
            if (admin.tableExists(tableNameObj)) {
                System.out.println("Table exists!");
                System.exit(0);
            } else {
                HTableDescriptor tableDesc = new HTableDescriptor(tableNameObj);
                tableDesc.addFamily(new HColumnDescriptor(columnFamily));
                admin.createTable(tableDesc);
                System.out.println("create table success!");
            }
        }
    }

    // Delete a table (it has to be disabled first)
    public static void deleteTable(String tableName) {
        try (Connection connection = ConnectionFactory.createConnection(conf);
                Admin admin = connection.getAdmin()) {
            TableName table = TableName.valueOf(tableName);
            admin.disableTable(table);
            admin.deleteTable(table);
            System.out.println("delete table " + tableName + " ok.");
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Insert one cell into the given row
    public static void addRecord(String tableName, String rowKey, String family, String qualifier, String value) {
        try (Connection connection = ConnectionFactory.createConnection(conf);
                Table table = connection.getTable(TableName.valueOf(tableName))) {
            Put put = new Put(Bytes.toBytes(rowKey));
            put.addColumn(Bytes.toBytes(family), Bytes.toBytes(qualifier), Bytes.toBytes(value));
            table.put(put);
            System.out.println("insert record " + rowKey + " to table " + tableName + " ok.");
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public static void main(String[] args) throws Exception {
        HbaseDao.createTable("testTb", "info");
        HbaseDao.addRecord("testTb", "001", "info", "name", "zhangsan");
        HbaseDao.addRecord("testTb", "001", "info", "age", "20");
        //HbaseDao.deleteTable("testTb");
    }
}

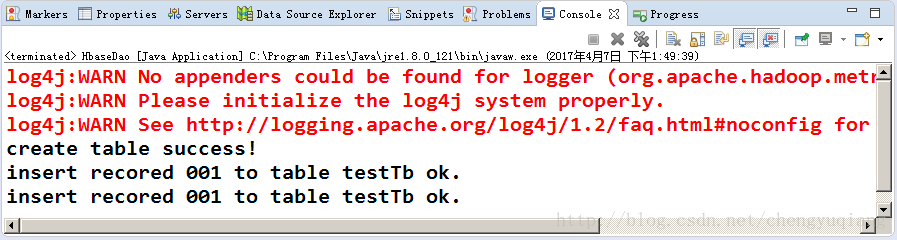

Output

[root@node0 ~]# hbase shell

2017-04-07 01:51:31,268 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/lib/hbase/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/lib/hadoop/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 1.2.4, r67592f3d062743907f8c5ae00dbbe1ae4f69e5af, Tue Oct 25 18:10:20 CDT 2016

hbase(main):001:0> list

TABLE

googlebook

testTb

2 row(s) in 0.2760 seconds

=> ["googlebook", "testTb"]

hbase(main):002:0> scan 'testTb'

ROW COLUMN+CELL

001 column=info:age, timestamp=1491544218337, value=20

001 column=info:name, timestamp=1491544218154, value=zhangsan

1 row(s) in 0.1980 seconds
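The scan shows value=20 in readable form because addRecord passes every value through Bytes.toBytes(String), so the age is stored as the two-character string "20" rather than as a binary integer. The sketch below illustrates the difference using only the JDK. It assumes that HBase's Bytes.toBytes(String) encodes as UTF-8 and that Bytes.toBytes(int) yields 4 big-endian bytes; the class and method names are illustrative and not part of HBase.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Illustrative only: JDK stand-ins for the two ways a number could be stored in a cell.
final class CellEncoding {

    // What addRecord effectively stores: the UTF-8 bytes of the text
    static byte[] asString(String value) {
        return value.getBytes(StandardCharsets.UTF_8);
    }

    // A fixed-width alternative: 4 bytes, big-endian (ByteBuffer's default order)
    static byte[] asInt(int value) {
        return ByteBuffer.allocate(4).putInt(value).array();
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(asString("20"))); // the characters '2' and '0'
        System.out.println(Arrays.toString(asInt(20)));      // three zero bytes, then 20
    }
}
```

Whichever encoding is chosen, the reader of the cell has to decode it the same way; string values are convenient in the shell, while fixed-width numbers sort and compare numerically.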

2.6 Bulk-import data

Generate the bulk data

[root@node0 data]# vi gen.sh

[root@node0 data]# cat gen.sh

#!/bin/sh

for i in {1..100000};do

echo -e $i'\t'$RANDOM'\t'$RANDOM'\t'$RANDOM

done;

[root@node0 data]# sh gen.sh > mydata.txt
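On a machine without a Unix shell, such as the Windows development box, roughly the same file could be produced from Java. This is a minimal sketch rather than a replacement for gen.sh: the class name GenData and the hard-coded file name are illustrative, and the 0..32767 range is chosen to mirror bash's $RANDOM.

```java
import java.io.BufferedWriter;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

// Illustrative Java counterpart of gen.sh
final class GenData {

    // Builds `rows` tab-separated lines: the row number, then three values in 0..32767
    static List<String> generate(int rows, Random rng) {
        List<String> lines = new ArrayList<>(rows);
        for (int i = 1; i <= rows; i++) {
            lines.add(i + "\t" + rng.nextInt(32768) + "\t" + rng.nextInt(32768) + "\t" + rng.nextInt(32768));
        }
        return lines;
    }

    public static void main(String[] args) throws IOException {
        // Write Unix line endings explicitly so the file matches what the shell script emits
        try (BufferedWriter out = Files.newBufferedWriter(Paths.get("mydata.txt"), StandardCharsets.UTF_8)) {
            for (String line : generate(100000, new Random())) {
                out.write(line);
                out.write('\n');
            }
        }
    }
}
```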

[root@node0 data]# tail -10 mydata.txt

99991 5421 23010 14796

99992 8131 27221 11846

99993 20723 8007 14215

99994 20876 29543 5465

99995 14753 19926 20000

99996 26226 7228 25424

99997 18393 15515 13721

99998 1855 23042 27666

99999 16761 16120 24486

100000 14619 17100 556

Upload to HDFS

[root@node0 data]# hdfs dfs -put mydata.txt input

[root@node0 data]# hdfs dfs -ls input

Found 1 items

-rw-r--r-- 3 root hbase 1698432 2017-07-19 20:38 input/mydata.txt

You have mail in /var/spool/mail/root

[root@node0 data]#

Create the HBase table

hbase(main):021:0> create 'mydata','info'

pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
  xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>hbaseDemo</groupId>
  <artifactId>hbaseDemo</artifactId>
  <version>0.0.1-SNAPSHOT</version>
  <packaging>jar</packaging>
  <name>hbaseDemo</name>
  <url>http://maven.apache.org</url>
  <properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <hadoop.version>2.7.1</hadoop.version>
  </properties>
  <dependencies>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>3.8.1</version>
      <scope>test</scope>
    </dependency>
    <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-common -->
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-common</artifactId>
      <version>${hadoop.version}</version>
    </dependency>
    <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-hdfs -->
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-hdfs</artifactId>
      <version>${hadoop.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-client</artifactId>
      <version>${hadoop.version}</version>
    </dependency>
    <!-- https://mvnrepository.com/artifact/org.apache.hbase/hbase-client -->
    <dependency>
      <groupId>org.apache.hbase</groupId>
      <artifactId>hbase-client</artifactId>
      <version>1.1.2</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hbase</groupId>
      <artifactId>hbase-common</artifactId>
      <version>1.1.2</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hbase</groupId>
      <artifactId>hbase-server</artifactId>
      <version>1.1.2</version>
    </dependency>
    <!-- https://mvnrepository.com/artifact/org.apache.hbase/hbase-protocol -->
    <dependency>
      <groupId>org.apache.hbase</groupId>
      <artifactId>hbase-protocol</artifactId>
      <version>1.1.2</version>
    </dependency>
    <dependency>
      <groupId>jdk.tools</groupId>
      <artifactId>jdk.tools</artifactId>
      <version>1.8</version>
      <scope>system</scope>
      <systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>
    </dependency>
  </dependencies>
</project>

Write the MapReduce program

package hbaseDemo.dao;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.mapreduce.TableOutputFormat;
import org.apache.hadoop.hbase.mapreduce.TableReducer;
import org.apache.hadoop.hbase.util.Bytes;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

public class BatchImport {

    public static class BatchImportMapper extends Mapper<LongWritable, Text, LongWritable, Text> {
        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // super.setup( context );
            //System.out.println(key + ":" + value);
            // Pass each line through unchanged; the key is the line's byte offset in the input file
            context.write(key, value);
        }
    }

    static class BatchImportReducer extends TableReducer<LongWritable, Text, NullWritable> {
        @Override
        protected void reduce(LongWritable key, Iterable<Text> values, Context context)
                throws IOException, InterruptedException {
            for (Text text : values) {
                final String[] splited = text.toString().split("\t");
                final Put put = new Put(Bytes.toBytes(splited[0])); // the first column is the row key
                put.addColumn(Bytes.toBytes("info"), Bytes.toBytes("data1"), Bytes.toBytes(splited[1]));
                put.addColumn(Bytes.toBytes("info"), Bytes.toBytes("data2"), Bytes.toBytes(splited[2]));
                put.addColumn(Bytes.toBytes("info"), Bytes.toBytes("data3"), Bytes.toBytes(splited[3]));
                context.write(NullWritable.get(), put);
            }
        }
    }

    /**
     * Earlier runs kept failing with "failed on connection exception" (connection refused: nb0:8020),
     * because the NameNode does not run on 192.168.1.160 but on 192.168.1.161 and 192.168.1.162.
     * @param args
     * @throws Exception
     */
    public static void main(String[] args) throws Exception {
        final Configuration conf = new Configuration();
        conf.set("hbase.rootdir", "hdfs://cetc32/hbase");
        // Configure ZooKeeper directly by IP address
        conf.set("hbase.zookeeper.quorum", "192.168.1.160,192.168.1.161,192.168.1.162");
        // Name of the target HBase table (create it in the shell first: create 'mydata','info')
        conf.set(TableOutputFormat.OUTPUT_TABLE, "mydata");
        // Raise this value so that HBase does not exit on a timeout
        conf.set("dfs.socket.timeout", "180000");
        //System.setProperty("HADOOP_USER_NAME", "root");
        // Set fs.defaultFS
        conf.set("fs.defaultFS", "hdfs://192.168.1.161:8020");
        // Set the yarn.resourcemanager node
        conf.set("yarn.resourcemanager.hostname", "nb1");

        Job job = Job.getInstance(conf);
        job.setJobName("HBaseBatchImport");
        job.setMapperClass(BatchImportMapper.class);
        job.setReducerClass(BatchImportReducer.class);
        // Set the map output types; the reduce output types are left unset
        job.setMapOutputKeyClass(LongWritable.class);
        job.setMapOutputValueClass(Text.class);
        job.setInputFormatClass(TextInputFormat.class);
        // No output path is set; the output format class is set instead
        job.setOutputFormatClass(TableOutputFormat.class);
        FileInputFormat.setInputPaths(job, "hdfs://192.168.1.161:8020/user/root/input/mydata.txt");

        boolean flag = job.waitForCompletion(true);
        System.out.println(flag);
    }
}
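The reducer above indexes splited[1] through splited[3] directly, so a line with fewer than four fields would fail with an ArrayIndexOutOfBoundsException. The sketch below shows the same line-to-columns mapping in plain Java with an explicit field-count check, so it can be tried without a cluster. RecordParser and its methods are hypothetical names introduced here; only the column family info and the qualifiers data1 to data3 come from the program above.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper that mirrors the reducer's parsing, with validation added.
final class RecordParser {

    private static final String[] QUALIFIERS = {"data1", "data2", "data3"};

    // Splits on tabs and insists on exactly four fields (row key plus three values)
    static String[] splitFields(String line) {
        String[] fields = line.split("\t", -1);
        if (fields.length != 4) {
            throw new IllegalArgumentException(
                    "expected 4 tab-separated fields, got " + fields.length);
        }
        return fields;
    }

    // The first field is the row key
    static String rowKey(String line) {
        return splitFields(line)[0];
    }

    // The remaining fields become info:data1, info:data2 and info:data3
    static Map<String, String> columns(String line) {
        String[] fields = splitFields(line);
        Map<String, String> cols = new LinkedHashMap<>();
        for (int i = 0; i < QUALIFIERS.length; i++) {
            cols.put("info:" + QUALIFIERS[i], fields[i + 1]);
        }
        return cols;
    }

    public static void main(String[] args) {
        String line = "99991\t5421\t23010\t14796";
        System.out.println(rowKey(line) + " -> " + columns(line));
    }
}
```

In a real job, a malformed line could be skipped and counted instead of aborting the task; which policy fits depends on how clean the input is expected to be.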

Run in Eclipse

log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

true

Check HBase

hbase(main):021:0> count 'mydata'

Current count: 1000, row: 10897

Current count: 2000, row: 11797

Current count: 3000, row: 12697

Current count: 4000, row: 13597

Current count: 5000, row: 14497

Current count: 6000, row: 15397

Current count: 7000, row: 16297

Current count: 8000, row: 17197

Current count: 9000, row: 18097

Current count: 10000, row: 18998

Current count: 11000, row: 19898

Current count: 12000, row: 20797

Current count: 13000, row: 21697

Current count: 14000, row: 22597

Current count: 15000, row: 23497

Current count: 16000, row: 24397

Current count: 17000, row: 25297

Current count: 18000, row: 26197

Current count: 19000, row: 27097

Current count: 20000, row: 27998

Current count: 21000, row: 28898

Current count: 22000, row: 29798

Current count: 23000, row: 30697

Current count: 24000, row: 31597

Current count: 25000, row: 32497

Current count: 26000, row: 33397

Current count: 27000, row: 34297

Current count: 28000, row: 35197

Current count: 29000, row: 36097

Current count: 30000, row: 36998

Current count: 31000, row: 37898

Current count: 32000, row: 38798

Current count: 33000, row: 39698

Current count: 34000, row: 40597

Current count: 35000, row: 41497

Current count: 36000, row: 42397

Current count: 37000, row: 43297

Current count: 38000, row: 44197

Current count: 39000, row: 45097

Current count: 40000, row: 45998

Current count: 41000, row: 46898

Current count: 42000, row: 47798

Current count: 43000, row: 48698

Current count: 44000, row: 49598

Current count: 45000, row: 50497

Current count: 46000, row: 51397

Current count: 47000, row: 52297

Current count: 48000, row: 53197

Current count: 49000, row: 54097

Current count: 50000, row: 54998

Current count: 51000, row: 55898

Current count: 52000, row: 56798

Current count: 53000, row: 57698

Current count: 54000, row: 58598

Current count: 55000, row: 59498

Current count: 56000, row: 60397

Current count: 57000, row: 61297

Current count: 58000, row: 62197

Current count: 59000, row: 63097

Current count: 60000, row: 63998

Current count: 61000, row: 64898

Current count: 62000, row: 65798

Current count: 63000, row: 66698

Current count: 64000, row: 67598

Current count: 65000, row: 68498

Current count: 66000, row: 69398

Current count: 67000, row: 70297

Current count: 68000, row: 71197

Current count: 69000, row: 72097

Current count: 70000, row: 72998

Current count: 71000, row: 73898

Current count: 72000, row: 74798

Current count: 73000, row: 75698

Current count: 74000, row: 76598

Current count: 75000, row: 77498

Current count: 76000, row: 78398

Current count: 77000, row: 79298

Current count: 78000, row: 80197

Current count: 79000, row: 81097

Current count: 80000, row: 81998

Current count: 81000, row: 82898

Current count: 82000, row: 83798

Current count: 83000, row: 84698

Current count: 84000, row: 85598

Current count: 85000, row: 86498

Current count: 86000, row: 87398

Current count: 87000, row: 88298

Current count: 88000, row: 89198

Current count: 89000, row: 90097

Current count: 90000, row: 90998

Current count: 91000, row: 91898

Current count: 92000, row: 92798

Current count: 93000, row: 93698

Current count: 94000, row: 94598

Current count: 95000, row: 95498

Current count: 96000, row: 96398

Current count: 97000, row: 97298

Current count: 98000, row: 98198

Current count: 99000, row: 99098

Current count: 100000, row: 99999

100000 row(s) in 7.0460 seconds

=> 100000

hbase(main):022:0>
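The row values reported by count (10897 at 1000, 11797 at 2000, and 99999 last) look odd at first. HBase keeps rows sorted by the raw bytes of the row key, and the keys here are decimal strings, so "10" sorts before "2". The following JDK-only sketch reproduces that ordering with ordinary string sorting, which matches byte order for ASCII digit keys. The padded helper shows one common way to get numeric ordering; it is a suggestion rather than something the import above does.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Locale;

// Illustrative: how string row keys 1..n are ordered when compared byte by byte.
final class RowKeyOrder {

    static List<String> sortedKeys(int n) {
        List<String> keys = new ArrayList<>(n);
        for (int i = 1; i <= n; i++) {
            keys.add(Integer.toString(i));
        }
        Collections.sort(keys); // lexicographic, like HBase's byte-wise row order for these keys
        return keys;
    }

    // Zero-padding to a fixed width makes lexicographic order agree with numeric order
    static String padded(int value, int width) {
        return String.format(Locale.ROOT, "%0" + width + "d", value);
    }

    public static void main(String[] args) {
        List<String> keys = sortedKeys(100000);
        System.out.println(keys.get(999));       // prints 10897, the 1000th key
        System.out.println(keys.get(99999));     // prints 99999, the last key
        System.out.println(padded(42, 6));       // prints 000042
    }
}
```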
