## Cluster Plan

| IP | Port | Role |
|---|---|---|
| 10.1.200.118 | 27017 | mongos |
| 10.1.200.120 | 27017 | config server |
| 10.1.200.125 | 27017 | shard |
| 10.1.200.143 | 27017 | shard (primary) |
| 10.1.200.146 | 27017 | shard |

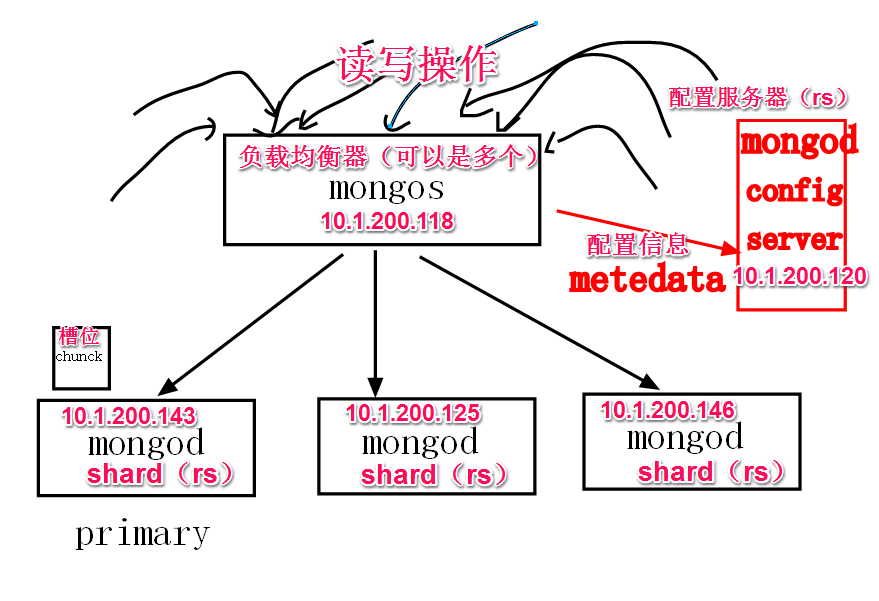

sharding集群具体流程如下图:

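Analysis:

1. A sharding cluster has three kinds of roles: mongos, config server and shard.
   - mongos: the query router. Clients connect to it, and it forwards each operation to the shard(s) that hold the relevant data, much like a load balancer. Multiple mongos instances can be deployed.
   - config server: stores the cluster metadata that mongos relies on for routing, such as the list of shards and which chunk ranges live on which shard. It can be deployed as a replica set.
   - shard: stores a subset of the sharded data. Each shard can itself be deployed as a replica set.

2. In a replica set, every member holds a complete copy of the data and all writes go through the primary, so the traffic of the whole cluster is ultimately bounded by what a single server can handle. A sharding cluster instead partitions the data horizontally and spreads the traffic across the shards, which lowers the load on each server and raises the overall throughput.

3. There are three ways to distribute data across shards: range, hash and tag.
   1) range: contiguous ranges of shard-key values form chunks; this is the method used in the rest of this article.
   2) hash: the shard key is hashed first and the data is distributed by hash value, similar in spirit to Redis Cluster's hash slots.
   3) tag: ranges of shard-key values are manually pinned to specific shards.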
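To make the difference between range and hash distribution concrete, here is a minimal JavaScript sketch (runnable in Node.js) of how a router could map a shard-key value to a shard. The chunk boundaries, the shard names and the 32-bit FNV-1a hash are illustrative assumptions; MongoDB's hashed sharding uses its own hash function and splits the hashed values into chunk ranges rather than taking a modulo.

```javascript
// Illustrative sketch only: chunk boundaries, shard names and the hash
// function (32-bit FNV-1a) are assumptions, not MongoDB internals.

// Range sharding: each chunk owns the half-open interval [min, max).
const rangeChunks = [
  { min: -Infinity, max: 100, shard: "shard0000" },
  { min: 100, max: 200, shard: "shard0001" },
  { min: 200, max: Infinity, shard: "shard0002" },
];

function routeByRange(key, chunks) {
  const chunk = chunks.find((c) => key >= c.min && key < c.max);
  return chunk.shard;
}

// 32-bit FNV-1a hash of a string (stand-in for a real shard-key hash).
function fnv1a32(str) {
  let h = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    h ^= str.charCodeAt(i);
    h = Math.imul(h, 0x01000193) >>> 0; // keep it an unsigned 32-bit value
  }
  return h;
}

// Hash sharding: hash the key, then pick a bucket. A plain modulo is used
// here for brevity; real systems map hash values onto chunk/slot ranges.
function routeByHash(key, shards) {
  return shards[fnv1a32(String(key)) % shards.length];
}

const shards = ["shard0000", "shard0001", "shard0002"];
for (const k of [1, 2, 3, 150, 250]) {
  console.log(k, routeByRange(k, rangeChunks), routeByHash(k, shards));
}
```

With range routing, consecutive keys such as 1, 2 and 3 land on the same shard, which keeps range queries local but sends every new key to the last chunk when keys only ever increase; hashing spreads such keys out at the cost of turning range queries into scatter-gather queries.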

## Installation and Configuration

Follow the same steps as for the single-instance installation.

## Per-Node Configuration File Changes

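1. Node 10.1.200.118:

```
[root@centos4 logs]# vim /etc/mongod.conf
```

```
# Location of the database files
dbpath=/usr/local/mongodb/db
# Location of the log file
logpath=/usr/local/mongodb/logs/mongodb.log
# Append to the log file instead of overwriting it
logappend=true
# IP to bind to; binding 127.0.0.1 allows local access only; if not set, all local IPs are used
bind_ip=10.1.200.118
# The default port is 27017
port=27017
```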

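2. Node 10.1.200.120:

```
[root@centos5 db]# vim /etc/mongod.conf
```

```
# Location of the database files
dbpath=/usr/local/mongodb/db
# Location of the log file
logpath=/usr/local/mongodb/logs/mongodb.log
# Append to the log file instead of overwriting it
logappend=true
# IP to bind to; binding 127.0.0.1 allows local access only; if not set, all local IPs are used
bind_ip=10.1.200.120
# The default port is 27017
port=27017
# In a sharding cluster, marks this instance as a config server
configsvr=true
```

With configsvr=true the default port would be 27019; the explicit port=27017 overrides it.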

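3. Nodes 10.1.200.125, 10.1.200.143 and 10.1.200.146 (shown for 10.1.200.125; on the other two nodes set bind_ip to that node's own IP):

```
[root@centos6 db]# vim /etc/mongod.conf
```

```
# Location of the database files
dbpath=/usr/local/mongodb/db
# Location of the log file
logpath=/usr/local/mongodb/logs/mongodb.log
# Append to the log file instead of overwriting it
logappend=true
# IP to bind to; binding 127.0.0.1 allows local access only; if not set, all local IPs are used
bind_ip=10.1.200.125
# The default port is 27017
port=27017
```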
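The shard nodes share one template and differ only in bind_ip, which is easy to forget when copying the file around. As a sketch, a small generator can render one file per node from the cluster plan; `renderMongodConf` is a hypothetical Node.js helper, not part of MongoDB, and it emits only the options already used in this article.

```javascript
// Hypothetical helper: render the key=value mongod.conf used in this article
// for one node. Paths and the default port follow the article's values.
function renderMongodConf({ ip, port = 27017, configsvr = false }) {
  const lines = [
    "dbpath=/usr/local/mongodb/db",
    "logpath=/usr/local/mongodb/logs/mongodb.log",
    "logappend=true",
    `bind_ip=${ip}`, // each node must bind its own address
    `port=${port}`,
  ];
  if (configsvr) lines.push("configsvr=true"); // only on the config server
  return lines.join("\n") + "\n";
}

// The mongod nodes from the cluster plan (10.1.200.118 runs mongos instead).
const plan = [
  { ip: "10.1.200.120", configsvr: true },
  { ip: "10.1.200.125" },
  { ip: "10.1.200.143" },
  { ip: "10.1.200.146" },
];

for (const node of plan) {
  console.log(`# /etc/mongod.conf on ${node.ip}`);
  console.log(renderMongodConf(node));
}
```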

## Start the mongod Service on 10.1.200.120

```
[root@centos5 db]# service mongodb start
db path is: /usr/local/mongodb/db
/usr/local/mongodb/bin/mongod
Starting mongod: /usr/local/mongodb/bin/mongod --config /etc/mongod.conf
[root@centos5 db]#
```

## Start the mongos Service on 10.1.200.118

```
[root@centos4 logs]# mongos --configdb 10.1.200.120:27017
2016-08-13T15:29:41.652+0800 W SHARDING [main] Running a sharded cluster with fewer than 3 config servers should only be done for testing purposes and is not recommended for production.
2016-08-13T15:29:41.655+0800 I CONTROL [main] ** WARNING: You are running this process as the root user, which is not recommended.
2016-08-13T15:29:41.655+0800 I CONTROL [main]
2016-08-13T15:29:41.655+0800 I SHARDING [mongosMain] MongoS version 3.2.8 starting: pid=5090 port=27017 64-bit host=centos4 (--help for usage)
2016-08-13T15:29:41.655+0800 I CONTROL [mongosMain] db version v3.2.8
2016-08-13T15:29:41.655+0800 I CONTROL [mongosMain] git version: ed70e33130c977bda0024c125b56d159573dbaf0
2016-08-13T15:29:41.655+0800 I CONTROL [mongosMain] allocator: tcmalloc
2016-08-13T15:29:41.655+0800 I CONTROL [mongosMain] modules: none
2016-08-13T15:29:41.655+0800 I CONTROL [mongosMain] build environment:
2016-08-13T15:29:41.655+0800 I CONTROL [mongosMain] distarch: x86_64
2016-08-13T15:29:41.655+0800 I CONTROL [mongosMain] target_arch: x86_64
2016-08-13T15:29:41.655+0800 I CONTROL [mongosMain] options: { sharding: { configDB: "10.1.200.120:27017" } }
2016-08-13T15:29:41.655+0800 I SHARDING [mongosMain] Updating config server connection string to: 10.1.200.120:27017
2016-08-13T15:29:41.659+0800 I SHARDING [LockPinger] creating distributed lock ping thread for 10.1.200.120:27017 and process centos4:27017:1471073381:1047189914 (sleeping for 30000ms)
2016-08-13T15:29:41.662+0800 I SHARDING [LockPinger] cluster 10.1.200.120:27017 pinged successfully at 2016-08-13T15:29:41.660+0800 by distributed lock pinger '10.1.200.120:27017/centos4:27017:1471073381:1047189914', sleeping for 30000ms
2016-08-13T15:29:41.670+0800 I NETWORK [HostnameCanonicalizationWorker] Starting hostname canonicalization worker
2016-08-13T15:29:41.670+0800 I SHARDING [Balancer] about to contact config servers and shards
2016-08-13T15:29:41.671+0800 I SHARDING [Balancer] config servers and shards contacted successfully
2016-08-13T15:29:41.671+0800 I SHARDING [Balancer] balancer id: centos4:27017 started
2016-08-13T15:29:41.689+0800 I NETWORK [mongosMain] waiting for connections on port 27017
2016-08-13T15:29:41.693+0800 I SHARDING [Balancer] distributed lock 'balancer/centos4:27017:1471073381:1047189914' acquired for 'doing balance round', ts : 57aecc6597c942c8b7784c79
2016-08-13T15:29:41.694+0800 I SHARDING [Balancer] distributed lock 'balancer/centos4:27017:1471073381:1047189914' unlocked.
2016-08-13T15:29:41.719+0800 I NETWORK [mongosMain] connection accepted from 10.6.101.46:26223 #1 (1 connection now open)
```

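Note: 10.1.200.118 plays the mongos role in this sharding cluster, so it must run the mongos service rather than mongod. The first warning in the log also points out that a single config server is only meant for testing, not for production.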

## Start the mongod Service on the Other Three Nodes

Run the same command on each of the three shard nodes:

```
[root@centos6 db]# service mongodb start
db path is: /usr/local/mongodb/db
/usr/local/mongodb/bin/mongod
Starting mongod: /usr/local/mongodb/bin/mongod --config /etc/mongod.conf
[root@centos6 db]#
```

## Cluster Configuration

1. Connect a client to the mongos on 10.1.200.118 and add the three nodes 10.1.200.125, 10.1.200.143 and 10.1.200.146 to the cluster as shards:

```
sh.addShard("10.1.200.125:27017")
sh.addShard("10.1.200.143:27017")
sh.addShard("10.1.200.146:27017")
```

```
/* 1 */
{
    "shardAdded" : "shard0000",
    "ok" : 1.0
}
/* 1 */
{
    "shardAdded" : "shard0001",
    "ok" : 1.0
}
/* 1 */
{
    "shardAdded" : "shard0002",
    "ok" : 1.0
}
```
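When this step is scripted, checking each result is safer than eyeballing the output. The sketch below assumes, as the output above suggests, that the shell helper `sh.addShard(host)` returns the command result document with `ok` and `shardAdded` fields. `addShards` is a hypothetical helper name; `sh` is passed in as a parameter so the function can also be exercised in Node.js with a stub.

```javascript
// Hypothetical helper: add several shards and stop at the first failure.
// shHelper is expected to expose addShard(host) returning a result document.
function addShards(shHelper, hosts) {
  const added = [];
  for (const host of hosts) {
    const res = shHelper.addShard(host);
    if (!res || res.ok !== 1) {
      throw new Error(`addShard(${host}) failed: ${JSON.stringify(res)}`);
    }
    added.push(res.shardAdded); // e.g. "shard0000"
  }
  return added;
}
```

In a mongo shell connected to mongos, the call would be `addShards(sh, ["10.1.200.125:27017", "10.1.200.143:27017", "10.1.200.146:27017"])`; given the output above, it should return the shard names shard0000 to shard0002.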

2. Enable sharding on a specific database:

```
sh.enableSharding("flightswitch")
```

```
/* 1 */
{
    "ok" : 1.0
}
```

This enables sharding on the flightswitch database.

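3. Enable sharding on a collection in that database, using the `_id` field as the shard key:

```
sh.shardCollection("flightswitch.flightsegment", {"_id": 1})
```

```
/* 1 */
{
    "collectionsharded" : "flightswitch.flightsegment",
    "ok" : 1.0
}
```

Note: what actually gets sharded is the data of a specific collection; enabling sharding on the database only makes that possible.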
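The `sh.*` helpers are thin wrappers around admin commands. As a sketch, the command documents behind the two steps above look like the ones built here; `enableShardingCmd` and `shardCollectionCmd` are hypothetical helper names, while the `enableSharding`, `shardCollection` and `key` fields are the commands' own. Each document could be run on mongos with `db.adminCommand(cmd)`.

```javascript
// Hypothetical builders for the admin commands wrapped by
// sh.enableSharding() and sh.shardCollection().
function enableShardingCmd(dbName) {
  return { enableSharding: dbName };
}

function shardCollectionCmd(ns, key) {
  // The namespace must look like "<database>.<collection>".
  const dot = ns.indexOf(".");
  if (dot <= 0 || dot === ns.length - 1) {
    throw new Error(`invalid namespace: ${ns}`);
  }
  return { shardCollection: ns, key: key };
}

const cmds = [
  enableShardingCmd("flightswitch"),
  // Range shard key on _id, as used in this article.
  shardCollectionCmd("flightswitch.flightsegment", { _id: 1 }),
];
console.log(JSON.stringify(cmds));
```

A hashed shard key would instead be written as `{ _id: "hashed" }`.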

4. Check the status of the sharding cluster:

```
sh.status()
```

```
--- Sharding Status ---
  sharding version: {
      "_id" : 1,
      "minCompatibleVersion" : 5,
      "currentVersion" : 6,
      "clusterId" : ObjectId("57aec8ba87cc3e8611b4713e")
  }
  shards:
      { "_id" : "shard0000", "host" : "10.1.200.125:27017" }
      { "_id" : "shard0001", "host" : "10.1.200.143:27017" }
      { "_id" : "shard0002", "host" : "10.1.200.146:27017" }
  active mongoses:
      "3.2.8" : 1
  balancer:
      Currently enabled: yes
      Currently running: no
      Failed balancer rounds in last 5 attempts: 0
      Migration Results for the last 24 hours:
          No recent migrations
  databases:
      { "_id" : "flightswitch", "primary" : "shard0001", "partitioned" : true }
          flightswitch.flightsegment
              shard key: { "_id" : 1 }
              unique: false
              balancing: true
              chunks:
                  shard0001 1
              { "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard0001 Timestamp(1, 0)
```

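The status shows that the primary shard of the flightswitch database is shard0001, i.e. node 10.1.200.143, and that the collection's only chunk (from $minKey to $maxKey) currently lives there. The sharding cluster is now set up.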
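The `chunks:` section of `sh.status()` is simply a per-shard count of chunk documents. A minimal sketch of that count, assuming input shaped like documents from the 3.2-era `config.chunks` collection (only the `ns` and `shard` fields are used); `chunksPerShard` is a hypothetical helper. In the mongo shell one could pass it `db.getSiblingDB("config").chunks.find().toArray()`.

```javascript
// Hypothetical helper: count chunks per shard for one namespace, mirroring
// the "chunks:" section of sh.status(). Input documents need ns and shard.
function chunksPerShard(chunkDocs, ns) {
  const counts = {};
  for (const c of chunkDocs) {
    if (c.ns !== ns) continue; // ignore chunks of other collections
    counts[c.shard] = (counts[c.shard] || 0) + 1;
  }
  return counts;
}

// Right after shardCollection, as in the status output above:
// one chunk covering $minKey..$maxKey on the primary shard.
const afterShardCollection = [
  { ns: "flightswitch.flightsegment", shard: "shard0001" },
];
console.log(chunksPerShard(afterShardCollection, "flightswitch.flightsegment"));
```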

## Cluster Test

1. Connect a client to 10.1.200.118 and insert documents into the collection on which sharding was enabled:

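```
// Make sure the loop writes to the flightswitch database (same as `use flightswitch`)
db = db.getSiblingDB("flightswitch");
for (var i = 1; i <= 100; i++) {
    db.flightsegment.insert({ "originCity": "SHA" + i });
}
```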

```
Inserted 1 record(s) in 55ms
Inserted 1 record(s) in 10ms
Inserted 1 record(s) in 11ms
Inserted 1 record(s) in 9ms
Inserted 1 record(s) in 9ms
Inserted 1 record(s) in 7ms
Inserted 1 record(s) in 7ms
Inserted 1 record(s) in 8ms
Inserted 1 record(s) in 7ms
Inserted 1 record(s) in 7ms
Inserted 1 record(s) in 9ms
Inserted 1 record(s) in 7ms
Inserted 1 record(s) in 6ms
Inserted 1 record(s) in 7ms
Inserted 1 record(s) in 7ms
Inserted 1 record(s) in 10ms
Inserted 1 record(s) in 7ms
Inserted 1 record(s) in 12ms
……
```

2. Check the cluster status again:

```
sh.status()
```

```
--- Sharding Status ---
  sharding version: {
      "_id" : 1,
      "minCompatibleVersion" : 5,
      "currentVersion" : 6,
      "clusterId" : ObjectId("57aec8ba87cc3e8611b4713e")
  }
  shards:
      { "_id" : "shard0000", "host" : "10.1.200.125:27017" }
      { "_id" : "shard0001", "host" : "10.1.200.143:27017" }
      { "_id" : "shard0002", "host" : "10.1.200.146:27017" }
  active mongoses:
      "3.2.8" : 1
  balancer:
      Currently enabled: yes
      Currently running: no
      Failed balancer rounds in last 5 attempts: 0
      Migration Results for the last 24 hours:
          2 : Success
  databases:
      { "_id" : "flightswitch", "primary" : "shard0001", "partitioned" : true }
          flightswitch.flightsegment
              shard key: { "_id" : 1 }
              unique: false
              balancing: true
              chunks:
                  shard0000 1
                  shard0001 1
                  shard0002 1
              { "_id" : { "$minKey" : 1 } } -->> { "_id" : ObjectId("57aed47d2d291818f5dc78fb") } on : shard0000 Timestamp(2, 0)
              { "_id" : ObjectId("57aed47d2d291818f5dc78fb") } -->> { "_id" : ObjectId("57aed47d2d291818f5dc7911") } on : shard0002 Timestamp(3, 0)
              { "_id" : ObjectId("57aed47d2d291818f5dc7911") } -->> { "_id" : { "$maxKey" : 1 } } on : shard0001 Timestamp(3, 1)
```

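All three shards now hold one chunk each, which shows that the collection's data has been split and distributed across the shards. The "2 : Success" line under Migration Results records the two chunk migrations the balancer performed to get there.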
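To see which shard a given document lives on, a mongos-style lookup over the three chunk ranges printed above is enough. This sketch assumes ObjectIds are compared as their 24-character lowercase hex strings, which orders them the same way as their 12 raw bytes; the empty string and `"\uffff"` stand in for `$minKey` and `$maxKey`. `shardForId` is a hypothetical helper.

```javascript
// Chunk ranges copied from the sh.status() output above.
// "" and "\uffff" are stand-ins for $minKey and $maxKey.
const MIN = "";
const MAX = "\uffff";
const chunks = [
  { min: MIN, max: "57aed47d2d291818f5dc78fb", shard: "shard0000" },
  { min: "57aed47d2d291818f5dc78fb", max: "57aed47d2d291818f5dc7911", shard: "shard0002" },
  { min: "57aed47d2d291818f5dc7911", max: MAX, shard: "shard0001" },
];

// Hypothetical helper: chunk ranges are [min, max), so the lower bound is
// inclusive and the upper bound exclusive.
function shardForId(hexId) {
  const c = chunks.find((ch) => hexId >= ch.min && hexId < ch.max);
  return c.shard;
}

console.log(shardForId("57aed47d2d291818f5dc78fa"));
console.log(shardForId("57aed5000000000000000000")); // a later ObjectId
```

Because an ObjectId starts with a timestamp, any document inserted later gets a larger `_id` and falls into the last chunk (on shard0001 here). With a monotonically increasing shard key like this one, new writes therefore concentrate on whichever shard owns the top chunk.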
