1. Preparation

a. Download Hadoop: Hadoop download page

b. Java must be installed first; see: Java installation

c. The SSH service must be installed first. To install it:

$ sudo apt-get install ssh

$ sudo apt-get install rsync

2. Extract the installation package (the later steps assume the result is /opt/hadoop-2.7.3)

tar -zxvf hadoop-2.7.3.tar.gz

3. Edit the configuration files

a. vi /opt/hadoop-2.7.3/etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

b. vi /opt/hadoop-2.7.3/etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

c. vi /opt/hadoop-2.7.3/etc/hadoop/hadoop-env.sh

Set JAVA_HOME and HADOOP_CONF_DIR:

# The java implementation to use.

export JAVA_HOME=/opt/jdk1.8.0_101

# The jsvc implementation to use. Jsvc is required to run secure datanodes

# that bind to privileged ports to provide authentication of data transfer

# protocol. Jsvc is not required if SASL is configured for authentication of

# data transfer protocol using non-privileged ports.

#export JSVC_HOME=${JSVC_HOME}

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-"/opt/hadoop-2.7.3/etc/hadoop"}

d. vi /opt/hadoop-2.7.3/etc/hadoop/mapred-site.xml (if the file does not exist, copy it from mapred-site.xml.template in the same directory)

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>localhost:9001</value>

</property>

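</configuration>

4. Set up passwordless SSH

a. Generate a key pair with ssh-keygen (for example, ssh-keygen -t rsa, accepting the default path ~/.ssh/id_rsa and an empty passphrase)

b. Append the public key to the list of authorized keys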

cat $HOME/.ssh/id_rsa.pub >> $HOME/.ssh/authorized_keys

5. Set HADOOP_HOME

vi /etc/profile

export HADOOP_HOME=/opt/hadoop-2.7.3

source /etc/profile

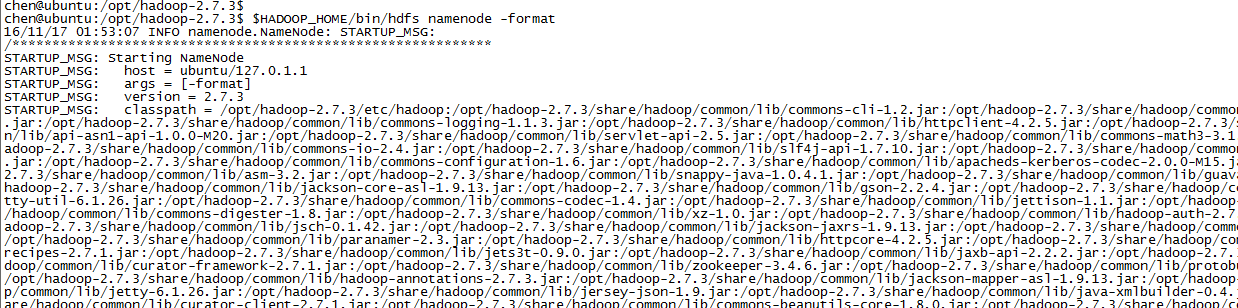

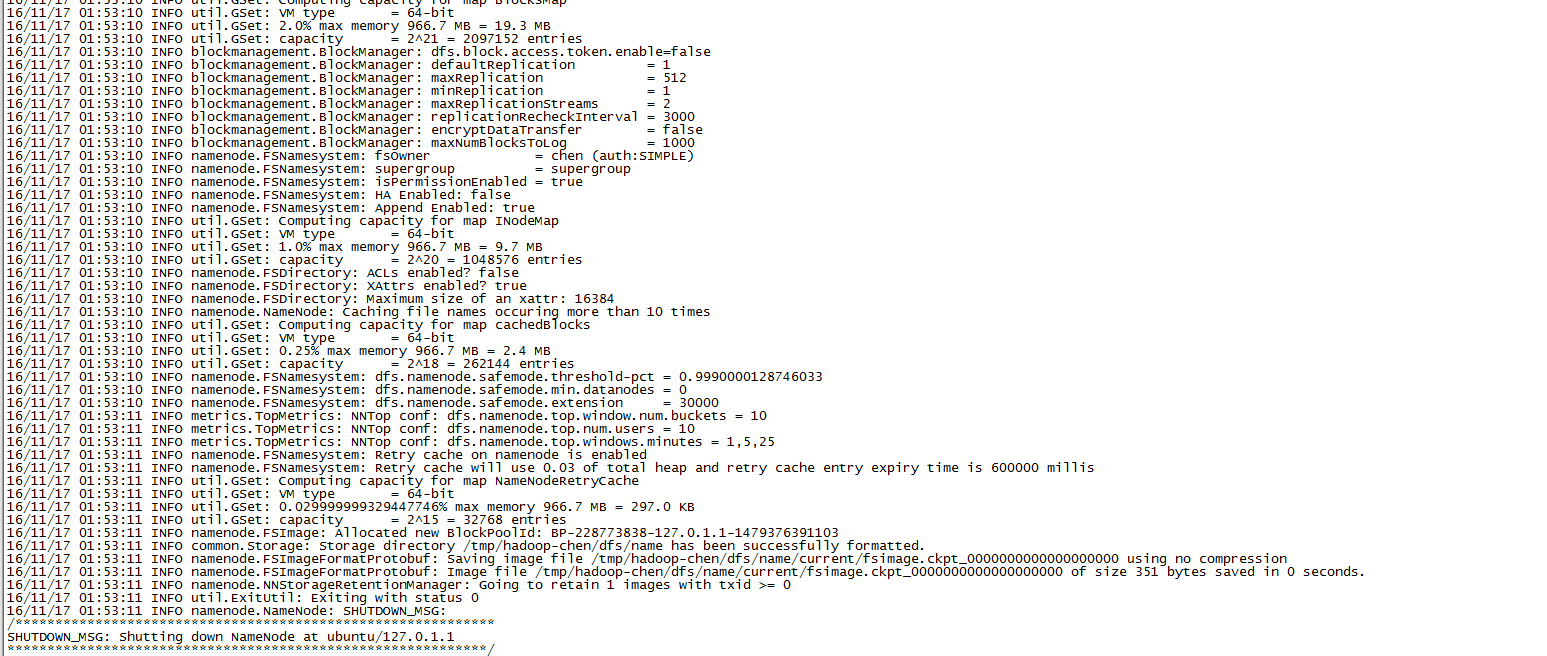
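
Editing /etc/profile by hand works, but the same change can also be scripted. The sketch below is a minimal, hypothetical helper (the name add_hadoop_env is not part of Hadoop) that appends the HADOOP_HOME export to a profile file only if it is not already there. Adding bin and sbin to PATH is an optional extra that the steps above do not require; it is assumed here purely for convenience.

```shell
#!/bin/sh
# Hypothetical helper, not part of Hadoop: append the Hadoop environment
# variables to a profile file unless HADOOP_HOME is already exported there.
# $1: profile file to edit   $2: Hadoop installation directory
add_hadoop_env() {
    profile="$1"
    hadoop_home="$2"
    if grep -q '^export HADOOP_HOME=' "$profile" 2>/dev/null; then
        return 0    # already configured, leave the file untouched
    fi
    printf 'export HADOOP_HOME=%s\n' "$hadoop_home" >> "$profile"
    # Assumption: putting bin and sbin on PATH is a convenience, not a requirement.
    printf 'export PATH="$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin"\n' >> "$profile"
}
```

For example, running add_hadoop_env /etc/profile /opt/hadoop-2.7.3 as root and then source /etc/profile should have the same effect as the manual edit, and running it a second time changes nothing.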

5.format文件系统

$HADOOP_HOME/bin/hdfs namenode -format

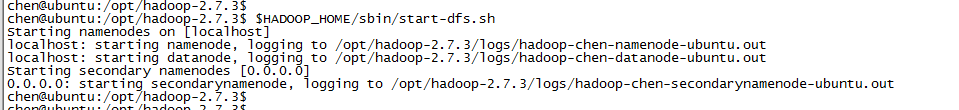

6.启动hadoop

$HADOOP_HOME/sbin/start-dfs.sh

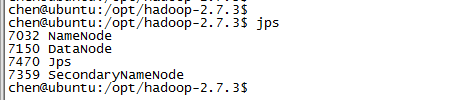

7.查看是否启动成功
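
This step comes without a command. One common way to check, sketched here under stated assumptions, is to list the running Java processes with jps (shipped with the JDK) and look for the three daemons that start-dfs.sh launches in a single-node setup: NameNode, DataNode, and SecondaryNameNode. The function name missing_daemons is hypothetical. The official guide for this release also lists the NameNode web interface at http://localhost:50070/ as a way to confirm the daemon is up.

```shell
#!/bin/sh
# Hypothetical helper: read jps-style output ("<pid> <name>") on stdin and
# print the name of every expected HDFS daemon that does not appear in it.
missing_daemons() {
    out="$(cat)"
    for daemon in NameNode DataNode SecondaryNameNode; do
        # Exact match on the second column, so "NameNode" does not
        # accidentally match "SecondaryNameNode".
        printf '%s\n' "$out" \
            | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' \
            || printf '%s\n' "$daemon"
    done
}

# Only query jps when it is actually installed (it ships with the JDK).
if command -v jps >/dev/null 2>&1; then
    missing="$(jps | missing_daemons)"
    if [ -z "$missing" ]; then
        echo "HDFS daemons are running"
    else
        echo "Not running:" $missing
    fi
fi
```

If a daemon is reported as not running, the log files under $HADOOP_HOME/logs are usually the first place to look.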

Official reference documentation:

http://hadoop.apache.org/docs/r2.7.3/hadoop-project-dist/hadoop-common/SingleCluster.html
