From Three Coins to 1+K Polyhedra

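The article “Modeling the Three-Coin Problem and Solving It with Gibbs Sampling (Python Implementation)” explained in detail how to model the three-coin problem and solve it with Gibbs sampling. What happens if the three coins are replaced by one K-sided polyhedron and K V-sided polyhedra? Or by M K-sided polyhedra and K V-sided polyhedra?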

1+K Polyhedra

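Suppose we have 1+K polyhedra. The first one, denoted A, has K faces labeled 1 to K, and its face-up probabilities are $\vec{p}=\{p_1,p_2,\dots,p_K\}$. The remaining K polyhedra are numbered 1 to K. Each has V faces labeled 1 to V, and the face-up probabilities of a given V-sided polyhedron are $\vec{q}=\{q_1,q_2,\dots,q_V\}$ (each of the K polyhedra has its own such vector). The experiment goes as follows: first throw polyhedron A; if the number $k$ comes up, throw the V-sided polyhedron numbered $k$ and record the number that comes up as the observation. Repeating this independently M times yields the observations $\vec{X}=\{x_1,x_2,\dots,x_M\}$. As before, only the final outcome of each trial can be observed, not the process that produced it (which V-sided polyhedron was thrown). The task is to estimate the parameters $\vec{p}$ and $\vec{q}$.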
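To make the experiment concrete, here is a minimal Python sketch of the generative process; it is an illustration, not code from the original article. The function name `simulate_1k`, the 0-based face labels, and the example values of $\vec{p}$ and of each polyhedron's $\vec{q}$ are assumptions. The hidden $z$ values are returned only so they can be inspected; an estimator would see `x` alone.

```python
import random


def simulate_1k(p, q, M, seed=None):
    """Simulate M trials of the 1+K polyhedra experiment (faces are 0-based).

    p: length-K face probabilities of polyhedron A.
    q: K lists; q[k] holds the length-V face probabilities of V-sided polyhedron k.
    Returns (z, x): the hidden polyhedron used in each trial and the observed face.
    """
    rng = random.Random(seed)
    z, x = [], []
    for _ in range(M):
        k = rng.choices(range(len(p)), weights=p)[0]        # throw A (not observed)
        t = rng.choices(range(len(q[k])), weights=q[k])[0]  # throw polyhedron k (observed)
        z.append(k)
        x.append(t)
    return z, x


# Hypothetical parameters: K = 3, V = 4.
p = [0.2, 0.5, 0.3]
q = [[0.7, 0.1, 0.1, 0.1],
     [0.1, 0.7, 0.1, 0.1],
     [0.1, 0.1, 0.4, 0.4]]
z, x = simulate_1k(p, q, M=1000, seed=0)
print(x[:10])  # an estimator only gets to see x
```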

Analysis and Modeling

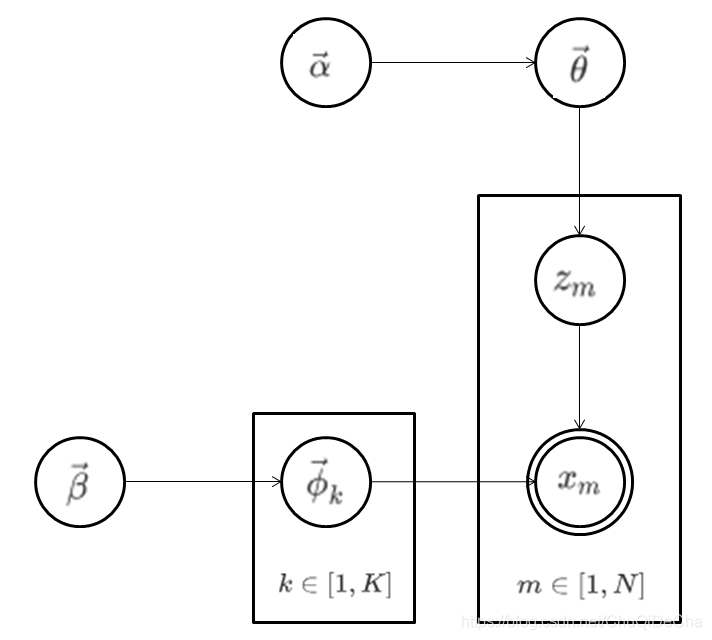

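Apart from having more faces, the 1+K polyhedra problem is no different from the three-coin problem, and its probabilistic graphical model is the same as well. It again consists of the two processes $\vec{\alpha} \to \vec{\theta} \to z_m$ and $\vec{\beta} \to \vec{\phi}_k \to x_m|k=z_m$, except that $\vec{z}$ now follows a multinomial distribution instead of a binomial one: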

$$P(\vec{z}|\vec{\theta})=\prod_{i=1}^{K}\theta_i^{n_i}$$

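where $n_i$ is the number of times face $i$ of A came up. For the binomial distribution, the Beta distribution serves as the prior on its parameter. Since the multinomial distribution is the multidimensional extension of the binomial, it is natural to use the multidimensional extension of the Beta distribution as the prior on the multinomial parameters. That extension is the Dirichlet distribution, defined as: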

$$\mathrm{Dirichlet}(\vec{u}|\vec{\alpha}) = \frac{\Gamma(\alpha_1+\alpha_2+\cdots+\alpha_K)}{\Gamma(\alpha_1)\Gamma(\alpha_2)\cdots\Gamma(\alpha_K)}\prod_{k=1}^{K}u_k^{\alpha_k-1}$$
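As a quick numerical sanity check of the definition, the sketch below evaluates the Dirichlet density in log space with `math.lgamma`; note that the normalizer divides by the product of the $\Gamma(\alpha_k)$. The name `dirichlet_pdf` is hypothetical, and the function assumes every $u_k>0$ so that the logarithm is defined.

```python
import math


def dirichlet_pdf(u, alpha):
    """Dirichlet(u | alpha) density; u must have strictly positive entries summing to 1."""
    # log of Gamma(sum alpha) / prod Gamma(alpha_k): the denominator is a product, not a sum
    log_norm = math.lgamma(sum(alpha)) - sum(math.lgamma(a) for a in alpha)
    log_kernel = sum((a - 1) * math.log(uk) for uk, a in zip(u, alpha))
    return math.exp(log_norm + log_kernel)


# K = 2 reduces to a Beta density: Gamma(4) / (Gamma(2) Gamma(2)) * 0.5 * 0.5 = 6 * 0.25 = 1.5
print(dirichlet_pdf([0.5, 0.5], [2, 2]))

# Midpoint-rule check that a K = 2 density integrates to (approximately) 1.
h = 1e-4
print(sum(dirichlet_pdf([(j + 0.5) * h, 1 - (j + 0.5) * h], [2, 3]) * h for j in range(10000)))
```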

The expectation of the Dirichlet distribution is:

$$E(u_i)=\frac{\alpha_i}{\sum_{k=1}^{K}\alpha_k}$$
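The expectation formula can be checked by simulation. This sketch assumes the standard construction of a Dirichlet sample as normalized independent Gamma$(\alpha_k,1)$ draws (via `random.Random.gammavariate`); `sample_dirichlet` is a hypothetical helper name and the $\vec{\alpha}$ values are arbitrary.

```python
import random


def sample_dirichlet(alpha, rng):
    """One draw from Dirichlet(alpha): normalize independent Gamma(alpha_k, 1) variates."""
    g = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(g)
    return [gk / total for gk in g]


rng = random.Random(42)
alpha = [2.0, 3.0, 5.0]
N = 20000
mean = [0.0] * len(alpha)
for _ in range(N):
    for k, uk in enumerate(sample_dirichlet(alpha, rng)):
        mean[k] += uk / N

expected = [a / sum(alpha) for a in alpha]  # E(u_i) = alpha_i / sum_k alpha_k
print([round(m, 3) for m in mean], expected)
```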

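As in the three-coin problem, the multinomial likelihood and the Dirichlet prior form a conjugate Multinomial-Dirichlet pair, so the posterior is again a Dirichlet distribution: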

$$P(\vec{\theta}|\vec{z}) \sim \mathrm{Dirichlet}(\vec{\theta}|\vec{\alpha}+\vec{n})$$

Taking the posterior mean as the parameter estimate gives:

$$\vec{\theta}=\left(\frac{n_1+\alpha_1}{\sum_{i=1}^{K}(n_i+\alpha_i)},\frac{n_2+\alpha_2}{\sum_{i=1}^{K}(n_i+\alpha_i)},\cdots,\frac{n_K+\alpha_K}{\sum_{i=1}^{K}(n_i+\alpha_i)}\right)$$
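In code, the posterior-mean estimate is just smoothed counting. A minimal sketch, with a hypothetical `posterior_mean` helper and made-up counts:

```python
def posterior_mean(counts, alpha):
    """Posterior mean of Dirichlet(alpha + n): (n_k + alpha_k) / sum_j (n_j + alpha_j)."""
    total = sum(counts) + sum(alpha)
    return [(n + a) / total for n, a in zip(counts, alpha)]


# Hypothetical counts: faces of A seen 5, 3 and 0 times, symmetric prior alpha_k = 1.
theta = posterior_mean([5, 3, 0], [1.0, 1.0, 1.0])
print(theta)  # the values 6/11, 4/11 and 1/11
```

The prior acts like $\alpha_k$ pseudo-counts added to each face, which is why a face that never came up still receives a non-zero estimate.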

For the process $\vec{\alpha} \to \vec{\theta} \to z_m$, integrating out $\vec{\theta}$ gives the conditional distribution of $\vec{z}$ given $\vec{\alpha}$:

$$
\begin{aligned}
P(\vec{z}|\vec{\alpha}) &= \int P(\vec{z}|\vec{\theta})P(\vec{\theta}|\vec{\alpha})\,d\vec{\theta} \\
&= \int \prod_{k=1}^{K}\theta_k^{n_k}\,\mathrm{Dirichlet}(\vec{\theta}|\vec{\alpha})\,d\vec{\theta} \\
&= \int \prod_{k=1}^{K}\theta_k^{n_k}\,\frac{1}{\Delta(\vec{\alpha})}\prod_{k=1}^{K}\theta_k^{\alpha_k-1}\,d\vec{\theta} \\
&= \frac{1}{\Delta(\vec{\alpha})}\int \prod_{k=1}^{K}\theta_k^{n_k+\alpha_k-1}\,d\vec{\theta} \\
&= \frac{\Delta(\vec{\alpha}+\vec{n})}{\Delta(\vec{\alpha})}
\end{aligned}
$$

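where $\Delta(\vec{\alpha})=\frac{\Gamma(\alpha_1)\Gamma(\alpha_2)\cdots\Gamma(\alpha_K)}{\Gamma(\alpha_1+\alpha_2+\cdots+\alpha_K)}$ is the normalization constant of the Dirichlet distribution, so that $\mathrm{Dirichlet}(\vec{\theta}|\vec{\alpha})=\frac{1}{\Delta(\vec{\alpha})}\prod_{k=1}^{K}\theta_k^{\alpha_k-1}$. The last step holds because $\int\prod_{k=1}^{K}\theta_k^{n_k+\alpha_k-1}\,d\vec{\theta}$ is exactly $\Delta(\vec{\alpha}+\vec{n})$.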
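Because $\Gamma$ overflows quickly, $\Delta(\cdot)$ is best computed in log space. The sketch below (hypothetical helper names `log_delta` and `log_marginal`) evaluates $P(\vec{z}|\vec{\alpha})=\Delta(\vec{\alpha}+\vec{n})/\Delta(\vec{\alpha})$ and checks it on a two-faced example that can be worked out by hand with the sequential (Pólya-urn) predictive rule.

```python
import math


def log_delta(alpha):
    """log Delta(alpha) = sum_k log Gamma(alpha_k) - log Gamma(sum_k alpha_k)."""
    return sum(math.lgamma(a) for a in alpha) - math.lgamma(sum(alpha))


def log_marginal(counts, alpha):
    """log P(z | alpha) = log Delta(alpha + n) - log Delta(alpha), with theta integrated out."""
    return log_delta([a + n for a, n in zip(alpha, counts)]) - log_delta(alpha)


# Uniform prior on a two-faced polyhedron: one throw showing face 1 has probability 1/2,
# and two throws both showing face 1 have probability 1/2 * 2/3 = 1/3.
print(math.exp(log_marginal([1, 0], [1.0, 1.0])))
print(math.exp(log_marginal([2, 0], [1.0, 1.0])))
```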

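If we know which polyhedron each observation came from, any two observations are exchangeable, so we can group together the observations that come from the same polyhedron: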

$$\vec{x}'=(\vec{x}_1,\vec{x}_2,\dots,\vec{x}_K)$$

$$\vec{z}'=(\vec{z}_1,\vec{z}_2,\dots,\vec{z}_K)$$

For the observations from polyhedron $k$, the same reasoning applies: $P(\vec{x}_k|\vec{\phi}_k) \sim \mathrm{Multi}(\vec{n}_k,\vec{\phi}_k)$, and its parameter has the prior $\vec{\phi}_k \sim \mathrm{Dirichlet}(\vec{\phi}_k|\vec{\beta}_k)$, so together they form a Multinomial-Dirichlet conjugate pair.

Because the posterior is $P(\vec{\phi}_k|\vec{x}_k) \sim \mathrm{Dirichlet}(\vec{\phi}_k|\vec{\beta}_k+\vec{n}_k)$, the parameter estimate (the posterior mean) is:

$$\vec{\phi}_k=\left(\frac{n_{k,1}+\beta_{k,1}}{\sum_{i=1}^{V}(n_{k,i}+\beta_{k,i})},\frac{n_{k,2}+\beta_{k,2}}{\sum_{i=1}^{V}(n_{k,i}+\beta_{k,i})},\cdots,\frac{n_{k,V}+\beta_{k,V}}{\sum_{i=1}^{V}(n_{k,i}+\beta_{k,i})}\right)$$

where $n_{k,i}$ is the number of observations in which face $i$ of polyhedron $k$ came up.
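Assuming, as in the text, that the polyhedron behind each observation is known, grouping the observations and computing $\vec{\phi}_k$ amounts to building a $K\times V$ count table. A minimal sketch with a hypothetical `phi_estimates` helper and toy data (0-based labels):

```python
def phi_estimates(z, x, K, V, beta):
    """Group observations by polyhedron and return (counts, posterior-mean phi_k).

    z[m]: the (assumed known) polyhedron of observation m; x[m]: its face (both 0-based).
    beta[k]: the length-V Dirichlet prior of polyhedron k.
    """
    n = [[0] * V for _ in range(K)]  # n[k][v]: times face v came up on polyhedron k
    for k, v in zip(z, x):
        n[k][v] += 1
    phi = []
    for k in range(K):
        total = sum(n[k]) + sum(beta[k])
        phi.append([(n[k][v] + beta[k][v]) / total for v in range(V)])
    return n, phi


z = [0, 0, 1, 1, 1]
x = [2, 2, 0, 1, 0]
n, phi = phi_estimates(z, x, K=2, V=3, beta=[[1.0] * 3, [1.0] * 3])
print(n)    # [[0, 0, 2], [2, 1, 0]]
print(phi)
```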

For the process $\vec{\beta} \to \vec{\phi}_k \to x_m|k=z_m$, integrating out $\vec{\phi}_k$ in the same way gives the conditional distribution:

$$P(\vec{x}_k|\vec{z}_k,\vec{\beta}_k)=\frac{\Delta(\vec{\beta}_k+\vec{n}_k)}{\Delta(\vec{\beta}_k)}$$

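Here $\vec{n}_k$ is a V-dimensional vector holding the number of times each value appeared among the observations from polyhedron $k$. Multiplying over the K polyhedra gives: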

$$
\begin{aligned}
P(\vec{x}|\vec{z},\vec{\beta}) &= P(\vec{x}'|\vec{z}',\vec{\beta}') \\
&= \prod_{k=1}^{K}P(\vec{x}_k|\vec{z}_k,\vec{\beta}_k) \\
&= \prod_{k=1}^{K}\frac{\Delta(\vec{\beta}_k+\vec{n}_k)}{\Delta(\vec{\beta}_k)}
\end{aligned}
$$

Combining the formulas above gives the joint distribution:

$$p(\vec{x},\vec{z}|\vec{\alpha},\vec{\beta}) = p(\vec{z}|\vec{\alpha})\,p(\vec{x}|\vec{z},\vec{\beta}) = \frac{\Delta(\vec{\alpha}+\vec{n})}{\Delta(\vec{\alpha})}\prod_{k=1}^{K}\frac{\Delta(\vec{\beta}_k+\vec{n}_k)}{\Delta(\vec{\beta}_k)}$$

The joint distribution therefore consists of 1+K Multinomial-Dirichlet conjugate structures.
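The closed-form joint can be cross-checked against the chain rule, in which each draw is scored by the posterior predictive of the draws before it; the two must agree because of conjugacy. This is an illustrative sketch with hypothetical helper names and toy values of $\vec{\alpha}$, $\vec{\beta}$, $\vec{z}$ and $\vec{x}$ (0-based labels).

```python
import math


def log_delta(alpha):
    return sum(math.lgamma(a) for a in alpha) - math.lgamma(sum(alpha))


def log_joint(z, x, alpha, beta):
    """Closed-form log p(x, z | alpha, beta) for the 1+K model."""
    K, V = len(alpha), len(beta[0])
    n = [0] * K                       # n[k]: how often A showed face k
    nk = [[0] * V for _ in range(K)]  # nk[k][v]: how often polyhedron k showed face v
    for k, v in zip(z, x):
        n[k] += 1
        nk[k][v] += 1
    lp = log_delta([a + c for a, c in zip(alpha, n)]) - log_delta(alpha)
    for k in range(K):
        lp += log_delta([b + c for b, c in zip(beta[k], nk[k])]) - log_delta(beta[k])
    return lp


def log_joint_sequential(z, x, alpha, beta):
    """Same quantity via the chain rule, one observation at a time."""
    K, V = len(alpha), len(beta[0])
    n, nk, lp = [0] * K, [[0] * V for _ in range(K)], 0.0
    for k, v in zip(z, x):
        lp += math.log((n[k] + alpha[k]) / (sum(n) + sum(alpha)))
        lp += math.log((nk[k][v] + beta[k][v]) / (sum(nk[k]) + sum(beta[k])))
        n[k] += 1
        nk[k][v] += 1
    return lp


z, x = [0, 1, 1, 0, 2], [3, 0, 0, 1, 2]
alpha = [0.5, 1.0, 2.0]
beta = [[1.0, 0.5, 0.5, 2.0] for _ in range(3)]
print(log_joint(z, x, alpha, beta), log_joint_sequential(z, x, alpha, beta))
```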

Solving with Gibbs Sampling

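The Gibbs sampling procedure is the same as for the three coins; the only change is replacing the Beta distribution with the Dirichlet distribution. The derivation is therefore only sketched here. If anything is unclear, see the earlier article “Modeling the Three-Coin Problem and Solving It with Gibbs Sampling (Python Implementation)”.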

$$
\begin{aligned}
p(z_i=k|\vec{z}_{\neg i},\vec{x}) &= p(z_i=k|x_i=t,\vec{z}_{\neg i},\vec{x}_{\neg i}) \\
&= \frac{p(z_i=k,x_i=t|\vec{z}_{\neg i},\vec{x}_{\neg i})}{p(x_i=t|\vec{z}_{\neg i},\vec{x}_{\neg i})} \\
&\propto p(z_i=k,x_i=t|\vec{z}_{\neg i},\vec{x}_{\neg i})
\end{aligned}
$$

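Here $t$ is the observed value of $x_i$, and the denominator does not depend on $k$. Removing the $i$-th observation leaves the 1+K Multinomial-Dirichlet structures intact; it only decrements the counts associated with that observation: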

$$P(\vec{\theta}|\vec{z}_{\neg i},\vec{x}_{\neg i}) = \mathrm{Dirichlet}(\vec{\theta}|\vec{\alpha}+\vec{n}_{\neg i})$$

$$P(\vec{\phi}_k|\vec{z}_{k,\neg i},\vec{x}_{k,\neg i}) = \mathrm{Dirichlet}(\vec{\phi}_k|\vec{\beta}_k+\vec{n}_{k,\neg i})$$

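The remaining $K-1$ Multinomial-Dirichlet structures (those of the V-sided polyhedra other than $k$) are independent of this term, so the conditional distribution is: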

$$
\begin{aligned}
P(z_i=k|\vec{z}_{\neg i},\vec{x}) &\propto P(z_i=k,x_i=t|\vec{z}_{\neg i},\vec{x}_{\neg i}) \\
&= \int P(z_i=k,x_i=t,\vec{\theta},\vec{\phi}_k|\vec{z}_{\neg i},\vec{x}_{\neg i})\,d\vec{\theta}\,d\vec{\phi}_k \\
&= \int P(z_i=k,\vec{\theta}|\vec{z}_{\neg i},\vec{x}_{\neg i})\,P(x_i=t,\vec{\phi}_k|\vec{z}_{\neg i},\vec{x}_{\neg i})\,d\vec{\theta}\,d\vec{\phi}_k \\
&= \int P(z_i=k,\vec{\theta}|\vec{z}_{\neg i},\vec{x}_{\neg i})\,d\vec{\theta}\int P(x_i=t,\vec{\phi}_k|\vec{z}_{k,\neg i},\vec{x}_{k,\neg i})\,d\vec{\phi}_k \\
&= \int P(z_i=k|\vec{\theta})P(\vec{\theta}|\vec{z}_{\neg i},\vec{x}_{\neg i})\,d\vec{\theta}\int P(x_i=t|\vec{\phi}_k)P(\vec{\phi}_k|\vec{z}_{k,\neg i},\vec{x}_{k,\neg i})\,d\vec{\phi}_k \\
&= \int \theta_k\,\mathrm{Dirichlet}(\vec{\theta}|\vec{\alpha}+\vec{n}_{\neg i})\,d\vec{\theta}\int \phi_{k,t}\,\mathrm{Dirichlet}(\vec{\phi}_k|\vec{\beta}_k+\vec{n}_{k,\neg i})\,d\vec{\phi}_k \\
&= E(\theta_k)\,E(\phi_{k,t}) \\
&= \hat{\theta}_k\,\hat{\phi}_{k,t}
\end{aligned}
$$

Since the two posterior means are:

$$\hat{\theta}_k = \frac{n_{k,\neg i}+\alpha_k}{\sum_{j=1}^{K}(n_{j,\neg i}+\alpha_j)}$$

$$\hat{\phi}_{k,t} = \frac{n_{k,\neg i}^{t}+\beta_{k,t}}{\sum_{v=1}^{V}(n_{k,\neg i}^{v}+\beta_{k,v})}$$

it follows that the Gibbs sampling formula for the final model is:

$$P(z_i=k|\vec{z}_{\neg i},\vec{x}) \propto \frac{n_{k,\neg i}+\alpha_k}{\sum_{j=1}^{K}(n_{j,\neg i}+\alpha_j)}\cdot\frac{n_{k,\neg i}^{t}+\beta_{k,t}}{\sum_{v=1}^{V}(n_{k,\neg i}^{v}+\beta_{k,v})}$$
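The update above translates into a collapsed Gibbs sampler that only keeps counts. The sketch below is one possible implementation under stated assumptions: 0-based labels, a random initial assignment, a fixed number of sweeps `iters`, and estimates read off the final sample instead of averaged over several samples. The names `gibbs_1k`, `p_true` and `q_true` and the synthetic data are hypothetical.

```python
import random


def gibbs_1k(x, K, V, alpha, beta, iters=200, seed=0):
    """Collapsed Gibbs sampler for the 1+K polyhedra model (0-based labels).

    x: observed faces; alpha: length-K prior of A; beta: K lists of length V.
    Returns posterior-mean (theta, phi) computed from the last sample of z.
    """
    rng = random.Random(seed)
    z = [rng.randrange(K) for _ in x]   # random initial assignment
    n = [0] * K                         # n[k]: observations assigned to polyhedron k
    nk = [[0] * V for _ in range(K)]    # nk[k][t]: times face t is assigned to polyhedron k
    for k, t in zip(z, x):
        n[k] += 1
        nk[k][t] += 1
    beta_sum = [sum(b) for b in beta]
    for _ in range(iters):
        for i, t in enumerate(x):
            k = z[i]
            n[k] -= 1                   # remove observation i: the "not i" counts
            nk[k][t] -= 1
            # theta_hat_k * phi_hat_{k,t}; theta's denominator is the same for all k, so it is dropped
            w = [(n[j] + alpha[j]) * (nk[j][t] + beta[j][t]) / (n[j] + beta_sum[j])
                 for j in range(K)]
            k = rng.choices(range(K), weights=w)[0]
            z[i] = k                    # add observation i back under its new assignment
            n[k] += 1
            nk[k][t] += 1
    total = len(x) + sum(alpha)
    theta = [(n[k] + alpha[k]) / total for k in range(K)]
    phi = [[(nk[k][t] + beta[k][t]) / (n[k] + beta_sum[k]) for t in range(V)]
           for k in range(K)]
    return theta, phi


# Hypothetical synthetic data: K = 2, V = 3.
rng = random.Random(1)
p_true = [0.3, 0.7]
q_true = [[0.8, 0.1, 0.1], [0.1, 0.1, 0.8]]
x = []
for _ in range(500):
    k = rng.choices(range(2), weights=p_true)[0]
    x.append(rng.choices(range(3), weights=q_true[k])[0])

theta, phi = gibbs_1k(x, K=2, V=3, alpha=[1.0, 1.0], beta=[[1.0] * 3, [1.0] * 3])
print(theta)
print(phi)
```

One caveat when reading the output: in the 1+K setting each observation is a single draw from the mixture, so the data only constrain the marginal $\sum_k \theta_k\phi_{k,t}$. Individual $\hat{\theta}$ and $\hat{\phi}_k$ can differ from the generating values (and the polyhedra can swap labels) while fitting the data equally well.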

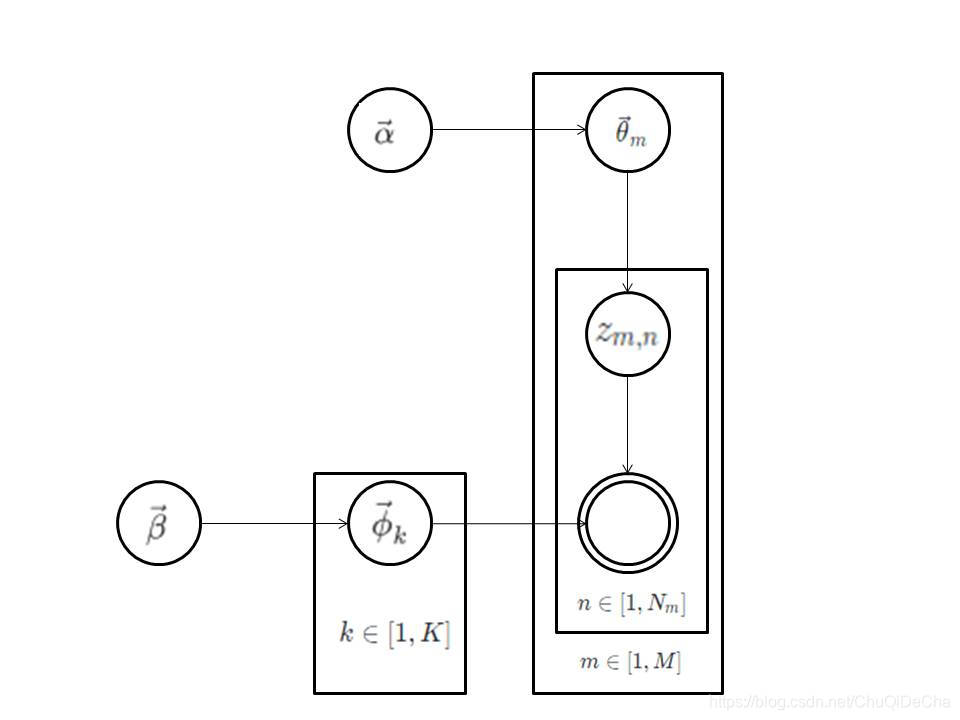

M+K Polyhedra

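Now change the rules once more: suppose there are M K-sided polyhedra and K V-sided polyhedra, and we perform M rounds of observation. In each round, we first draw one of the M K-sided polyhedra at random without replacement (every polyhedron is equally likely to be drawn), and then generate observations according to the 1+K polyhedra rules; the number of observations $N_m$ made with each K-sided polyhedron is not fixed. The final observations are: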

$$\{\{x_{1,1},x_{1,2},\dots,x_{1,N_1}\},\{x_{2,1},x_{2,2},\dots,x_{2,N_2}\},\dots,\{x_{M,1},x_{M,2},\dots,x_{M,N_M}\}\}$$

The goal is to estimate the face-up probabilities of every polyhedron.
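A sketch of the M+K generative process with fixed parameters, assuming hypothetical values for the $\vec{\theta}_m$, the $\vec{\phi}_k$ and the round lengths $N_m$ (0-based labels; `simulate_mk` is a hypothetical name). Since each K-sided polyhedron is drawn exactly once, the drawing order does not change the grouped observations, so the sketch simply loops over $m$.

```python
import random


def simulate_mk(thetas, phis, lengths, seed=None):
    """Simulate the M+K experiment with fixed parameters.

    thetas[m]: face probabilities of the m-th K-sided polyhedron;
    phis[k]: face probabilities of the k-th V-sided polyhedron;
    lengths[m]: N_m, the number of observations made with polyhedron m.
    Returns (z, x) as lists of M lists (hidden polyhedra and observed faces).
    """
    rng = random.Random(seed)
    K = len(phis)
    z, x = [], []
    for theta_m, n_m in zip(thetas, lengths):
        z_m = rng.choices(range(K), weights=theta_m, k=n_m)  # hidden V-sided polyhedron per throw
        x_m = [rng.choices(range(len(phis[k])), weights=phis[k])[0] for k in z_m]
        z.append(z_m)
        x.append(x_m)
    return z, x


thetas = [[0.9, 0.1], [0.5, 0.5], [0.1, 0.9]]  # M = 3 hypothetical K-sided polyhedra, K = 2
phis = [[0.6, 0.3, 0.1], [0.1, 0.3, 0.6]]      # K = 2 hypothetical V-sided polyhedra, V = 3
z, x = simulate_mk(thetas, phis, lengths=[5, 8, 3], seed=0)
for m, x_m in enumerate(x):
    print(m, x_m)
```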

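The M+K polyhedra problem can be viewed as M instances of the 1+K polyhedra problem that share the same K V-sided polyhedra, so there are M+K Multinomial-Dirichlet conjugate structures in total. Following the 1+K case, we obtain the following results: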

$$\vec{\theta}_m=\left(\frac{n_{m,1}+\alpha_{m,1}}{\sum_{i=1}^{K}(n_{m,i}+\alpha_{m,i})},\frac{n_{m,2}+\alpha_{m,2}}{\sum_{i=1}^{K}(n_{m,i}+\alpha_{m,i})},\cdots,\frac{n_{m,K}+\alpha_{m,K}}{\sum_{i=1}^{K}(n_{m,i}+\alpha_{m,i})}\right)$$

$$
\begin{aligned}
P(\vec{z}|\vec{\alpha}) &= \prod_{m=1}^{M}P(\vec{z}_m|\vec{\alpha}_m) \\
&= \prod_{m=1}^{M}\int P(\vec{z}_m|\vec{\theta}_m)P(\vec{\theta}_m|\vec{\alpha}_m)\,d\vec{\theta}_m \\
&= \prod_{m=1}^{M}\frac{\Delta(\vec{\alpha}_m+\vec{n}_m)}{\Delta(\vec{\alpha}_m)}
\end{aligned}
$$

where $\vec{n}_m$ is a K-dimensional vector whose $i$-th entry is the number of times the $i$-th V-sided polyhedron was used in round $m$.

$$\vec{\phi}_k=\left(\frac{n_{k,1}+\beta_{k,1}}{\sum_{i=1}^{V}(n_{k,i}+\beta_{k,i})},\frac{n_{k,2}+\beta_{k,2}}{\sum_{i=1}^{V}(n_{k,i}+\beta_{k,i})},\cdots,\frac{n_{k,V}+\beta_{k,V}}{\sum_{i=1}^{V}(n_{k,i}+\beta_{k,i})}\right)$$

$$P(\vec{x}|\vec{z},\vec{\beta}) = \prod_{k=1}^{K}P(\vec{x}_k|\vec{z}_k,\vec{\beta}_k) = \prod_{k=1}^{K}\frac{\Delta(\vec{\beta}_k+\vec{n}_k)}{\Delta(\vec{\beta}_k)}$$

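where $\vec{n}_k$ is a V-dimensional vector counting how many times each face of the $k$-th V-sided polyhedron came up, pooled over all M rounds. The joint distribution is then: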

$$p(\vec{x},\vec{z}|\vec{\alpha},\vec{\beta}) = p(\vec{z}|\vec{\alpha})\,p(\vec{x}|\vec{z},\vec{\beta}) = \prod_{m=1}^{M}\frac{\Delta(\vec{\alpha}_m+\vec{n}_m)}{\Delta(\vec{\alpha}_m)}\prod_{k=1}^{K}\frac{\Delta(\vec{\beta}_k+\vec{n}_k)}{\Delta(\vec{\beta}_k)}$$
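The joint depends on the data only through the counts $\vec{n}_m$ and $\vec{n}_k$, which is what makes the observations inside a round exchangeable. The following sketch (hypothetical `log_joint_mk`, toy 0-based data and priors) computes $\log p(\vec{x},\vec{z}|\vec{\alpha},\vec{\beta})$ and shows that shuffling the throws within each round leaves it unchanged.

```python
import math
import random


def log_delta(alpha):
    return sum(math.lgamma(a) for a in alpha) - math.lgamma(sum(alpha))


def log_joint_mk(z, x, alpha, beta, V):
    """log p(x, z | alpha, beta) for the M+K model.

    z[m][j], x[m][j]: hidden polyhedron and observed face of throw j in round m (0-based);
    alpha[m]: length-K prior of K-sided polyhedron m; beta[k]: length-V prior of polyhedron k.
    """
    K = len(beta)
    nk = [[0] * V for _ in range(K)]  # n_k: face counts of each V-sided polyhedron, all rounds
    lp = 0.0
    for z_m, x_m, a_m in zip(z, x, alpha):
        n_m = [0] * K                 # n_m: how often round m used each V-sided polyhedron
        for k, t in zip(z_m, x_m):
            n_m[k] += 1
            nk[k][t] += 1
        lp += log_delta([a + c for a, c in zip(a_m, n_m)]) - log_delta(a_m)
    for k in range(K):
        lp += log_delta([b + c for b, c in zip(beta[k], nk[k])]) - log_delta(beta[k])
    return lp


z = [[0, 1, 1], [1, 0]]
x = [[2, 0, 1], [0, 2]]
alpha = [[1.0, 1.0], [0.5, 2.0]]
beta = [[1.0, 1.0, 1.0], [0.5, 0.5, 0.5]]
before = log_joint_mk(z, x, alpha, beta, V=3)

# Shuffle the (z, x) pairs inside each round; the counts, and hence the joint, do not change.
rng = random.Random(0)
pairs = [list(zip(z_m, x_m)) for z_m, x_m in zip(z, x)]
for p in pairs:
    rng.shuffle(p)
z2 = [[k for k, _ in p] for p in pairs]
x2 = [[t for _, t in p] for p in pairs]
print(before, log_joint_mk(z2, x2, alpha, beta, V=3))
```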

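By the same reasoning, for an observation $x_i=t$ made in round $m$, the conditional probability used in Gibbs sampling is: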

$$
\begin{aligned}
P(z_i=k|\vec{z}_{\neg i},\vec{x}) &\propto P(z_i=k,x_i=t|\vec{z}_{\neg i},\vec{x}_{\neg i}) \\
&= \int P(z_i=k,x_i=t,\vec{\theta}_m,\vec{\phi}_k|\vec{z}_{\neg i},\vec{x}_{\neg i})\,d\vec{\theta}_m\,d\vec{\phi}_k \\
&= \int P(z_i=k,\vec{\theta}_m|\vec{z}_{\neg i},\vec{x}_{\neg i})\,P(x_i=t,\vec{\phi}_k|\vec{z}_{\neg i},\vec{x}_{\neg i})\,d\vec{\theta}_m\,d\vec{\phi}_k \\
&= \int P(z_i=k,\vec{\theta}_m|\vec{z}_{\neg i},\vec{x}_{\neg i})\,d\vec{\theta}_m\int P(x_i=t,\vec{\phi}_k|\vec{z}_{k,\neg i},\vec{x}_{k,\neg i})\,d\vec{\phi}_k \\
&= \int P(z_i=k|\vec{\theta}_m)P(\vec{\theta}_m|\vec{z}_{\neg i},\vec{x}_{\neg i})\,d\vec{\theta}_m\int P(x_i=t|\vec{\phi}_k)P(\vec{\phi}_k|\vec{z}_{k,\neg i},\vec{x}_{k,\neg i})\,d\vec{\phi}_k \\
&= \int \theta_{m,k}\,\mathrm{Dirichlet}(\vec{\theta}_m|\vec{\alpha}_m+\vec{n}_{m,\neg i})\,d\vec{\theta}_m\int \phi_{k,t}\,\mathrm{Dirichlet}(\vec{\phi}_k|\vec{\beta}_k+\vec{n}_{k,\neg i})\,d\vec{\phi}_k \\
&= E(\theta_{m,k})\,E(\phi_{k,t}) \\
&= \hat{\theta}_{m,k}\,\hat{\phi}_{k,t}
\end{aligned}
$$

Therefore, the Gibbs sampling formula for the final model is:

$$P(z_i=k|\vec{z}_{\neg i},\vec{x}) \propto \frac{n_{k,\neg i}^{m}+\alpha_{k}^{m}}{\sum_{j=1}^{K}(n_{j,\neg i}^{m}+\alpha_{j}^{m})}\cdot\frac{n_{k,\neg i}^{t}+\beta_{k,t}}{\sum_{v=1}^{V}(n_{k,\neg i}^{v}+\beta_{k,v})}$$

where $n_{k,\neg i}^{m}$ is the number of observations in round $m$, excluding observation $i$, assigned to the $k$-th V-sided polyhedron, and $\alpha_k^m$ is the $k$-th component of $\vec{\alpha}_m$.
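Compared with the 1+K sampler, the only change is that the first factor uses the counts of round $m$. The sketch below assumes symmetric scalar priors `alpha` and `beta` (the text allows a full vector per $\vec{\alpha}_m$ and $\vec{\beta}_k$), 0-based labels, random initialization and a fixed number of sweeps; `gibbs_mk` and the toy rounds are hypothetical. Read as a topic model, `docs` would be documents and faces would be word ids.

```python
import random


def gibbs_mk(docs, K, V, alpha, beta, iters=200, seed=0):
    """Collapsed Gibbs sampler for the M+K polyhedra model (equivalently LDA).

    docs[m]: observed faces of round m (0-based, < V); alpha, beta: symmetric scalar priors.
    Returns posterior-mean theta (M x K) and phi (K x V) from the last sample of z.
    """
    rng = random.Random(seed)
    n_mk = [[0] * K for _ in docs]      # n_mk[m][k]: throws of round m assigned to polyhedron k
    n_kt = [[0] * V for _ in range(K)]  # n_kt[k][t]: face t assigned to polyhedron k, all rounds
    n_k = [0] * K                       # n_k[k]: all throws assigned to polyhedron k
    z = [[rng.randrange(K) for _ in doc] for doc in docs]
    for m, doc in enumerate(docs):
        for k, t in zip(z[m], doc):
            n_mk[m][k] += 1
            n_kt[k][t] += 1
            n_k[k] += 1
    for _ in range(iters):
        for m, doc in enumerate(docs):
            for i, t in enumerate(doc):
                k = z[m][i]
                n_mk[m][k] -= 1         # exclude throw i of round m
                n_kt[k][t] -= 1
                n_k[k] -= 1
                # theta_hat_{m,k} * phi_hat_{k,t}; theta's denominator does not depend on k
                w = [(n_mk[m][j] + alpha) * (n_kt[j][t] + beta) / (n_k[j] + V * beta)
                     for j in range(K)]
                k = rng.choices(range(K), weights=w)[0]
                z[m][i] = k
                n_mk[m][k] += 1
                n_kt[k][t] += 1
                n_k[k] += 1
    theta = [[(n_mk[m][k] + alpha) / (len(doc) + K * alpha) for k in range(K)]
             for m, doc in enumerate(docs)]
    phi = [[(n_kt[k][t] + beta) / (n_k[k] + V * beta) for t in range(V)] for k in range(K)]
    return theta, phi


# Hypothetical rounds: faces 0-2 and faces 3-5 tend to come from different V-sided polyhedra.
docs = [[0, 1, 2, 0, 1, 2, 0, 1],
        [3, 4, 5, 3, 4, 5, 3, 4],
        [0, 1, 3, 4, 2, 5, 0, 3]]
theta, phi = gibbs_mk(docs, K=2, V=6, alpha=0.5, beta=0.1)
for m, row in enumerate(theta):
    print(m, [round(v, 2) for v in row])
```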

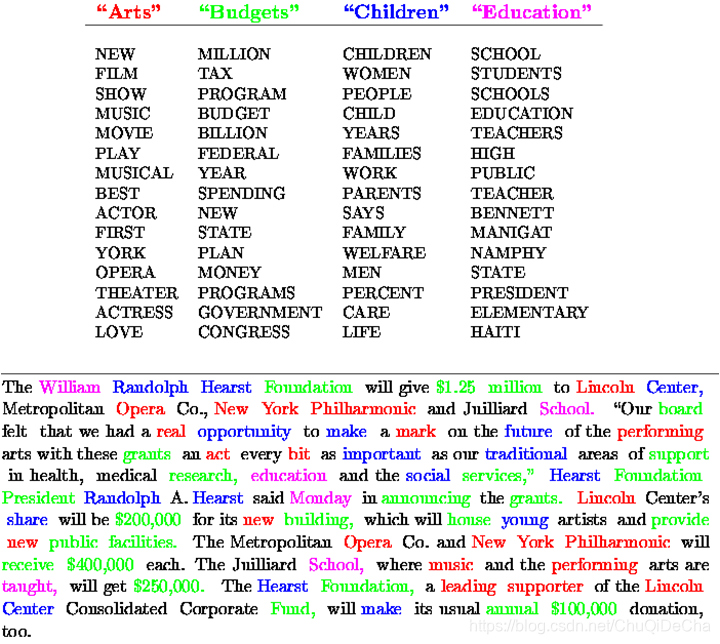

Topic Models (LDA, Latent Dirichlet Allocation)

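By now, attentive readers will have noticed that the M+K polyhedra problem is exactly a topic model: the M rounds of observations correspond to M documents, $\vec{\theta}_m$ is the topic distribution of the $m$-th document, and $\vec{\phi}_k$ is the word distribution of the $k$-th topic.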

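LDA is a generative model of documents. It assumes that each document covers several topics and that each topic corresponds to its own distribution over words. The corpus is generated as follows: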

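- There are M documents, which together involve K topics.

- Each document (of length $N_m$) has its own topic distribution.

- The topic distribution is a multinomial distribution whose parameters follow a Dirichlet distribution with parameter $\alpha$.

- Each topic has its own word distribution, which is a multinomial distribution whose parameters follow a Dirichlet distribution with parameter $\beta$.

- For the $n$-th word of a document, first sample a topic from that document's topic distribution, then sample a word from the word distribution of that topic. Repeat this random generation until all M documents are complete.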
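The generative steps listed above can be sketched directly, this time drawing $\vec{\theta}_m$ and $\vec{\phi}_k$ from their Dirichlet priors rather than fixing them. Symmetric scalar `alpha` and `beta`, 0-based word ids, the Gamma-normalization Dirichlet sampler, and the names `generate_corpus` and `sample_dirichlet` are assumptions of this sketch.

```python
import random


def sample_dirichlet(alpha, rng):
    """One Dirichlet(alpha) draw via normalized Gamma(alpha_k, 1) variates."""
    g = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(g)
    return [gk / total for gk in g]


def generate_corpus(M, K, V, doc_lengths, alpha, beta, seed=None):
    """Toy LDA corpus: symmetric priors alpha (topics) and beta (words), 0-based word ids."""
    rng = random.Random(seed)
    phi = [sample_dirichlet([beta] * V, rng) for _ in range(K)]        # word distribution per topic
    corpus, topics = [], []
    for m in range(M):
        theta_m = sample_dirichlet([alpha] * K, rng)                    # topic distribution of doc m
        z_m = rng.choices(range(K), weights=theta_m, k=doc_lengths[m])  # a topic for each word slot
        w_m = [rng.choices(range(V), weights=phi[k])[0] for k in z_m]   # a word from that topic
        corpus.append(w_m)
        topics.append(z_m)
    return corpus, topics, phi


corpus, topics, phi = generate_corpus(M=4, K=3, V=10, doc_lengths=[6, 9, 4, 7],
                                      alpha=0.5, beta=0.5, seed=0)
for m, doc in enumerate(corpus):
    print(m, doc)
```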

LDA is a bag-of-words model: it treats documents as exchangeable with one another, and likewise the words within each document.

References

- Blei, David M., et al. “Latent Dirichlet Allocation.” Journal of Machine Learning Research, vol. 3, 2003, pp. 993–1022.

- Jin, Zhihui (靳志辉). “LDA数学八卦” (“LDA Math Gossip”).
