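Tech stack: JDK 1.7 + SDK 28 + Android Studio 3.4.2 + a phone running Android 4.4 or later

Runtime environment: Java + Android Camera API

Overview

AliCamera is a new camera app redeveloped from the Camera2 app in the Android 4.4 source tree. It supports four modes: photo, video recording, QR code scanning, and panorama. The camera also supports beautification and filters.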

Details

1. Running result

2. Implementation

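① Capturing video

Image data captured by the camera is saved as video. The class listed here, CaptureAnimation, drives the brief darkening animation played on the view passed to its constructor when a capture is triggered.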

//CaptureAnimation.java

package com.yunos.camera.animation;

import android.graphics.Color;

import android.view.View;

import android.view.animation.Animation;

import android.view.animation.Transformation;

import android.view.animation.Animation.AnimationListener;

public class CaptureAnimation extends Animation implements AnimationListener {

private View mView;

public CaptureAnimation(View view) {

mView = view;

setDuration(400);

setAnimationListener(this);

}

@Override

protected void applyTransformation(float interpolatedTime, Transformation t) {

float alpha;

if (interpolatedTime < 0.125) {

alpha = interpolatedTime / 0.125f;

} else if (interpolatedTime >= 0.125 && interpolatedTime < 0.5) {

alpha = 1.0f;

} else {

alpha = 0.8f * (1 - interpolatedTime) / 0.5f;

}

mView.setBackgroundColor(Color.argb((int) (alpha * 255 * 0.8), 0, 0, 0));

}

@Override

public void onAnimationStart(Animation animation) {

mView.setBackgroundColor(Color.TRANSPARENT);

}

@Override

public void onAnimationRepeat(Animation animation) {

}

@Override

public void onAnimationEnd(Animation animation) {

}

}
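The opacity curve in `applyTransformation` can be checked without an Android device. The following is a minimal sketch that copies only the piecewise formula into plain Java. The class name `CaptureAlphaCurve` and the methods `alphaAt` and `argbAlpha` are hypothetical names used for illustration and are not part of AliCamera.

```java
// Illustrative sketch: CaptureAlphaCurve, alphaAt and argbAlpha are hypothetical
// names, not part of the AliCamera sources. Only the formula comes from the listing.
final class CaptureAlphaCurve {

    /** Returns the overlay opacity factor for an animation progress t in [0, 1]. */
    static float alphaAt(float t) {
        if (t < 0.125f) {
            return t / 0.125f;             // fade in during the first eighth
        } else if (t < 0.5f) {
            return 1.0f;                   // hold at full strength
        } else {
            return 0.8f * (1 - t) / 0.5f;  // fade out, starting from 0.8
        }
    }

    /** Alpha byte as passed to Color.argb in the listing: alpha * 255 * 0.8. */
    static int argbAlpha(float t) {
        return (int) (alphaAt(t) * 255 * 0.8);
    }

    public static void main(String[] args) {
        for (float t = 0f; t <= 1.0f; t += 0.125f) {
            System.out.println(t + " -> " + argbAlpha(t));
        }
    }
}
```

Running it shows that the factor drops from 1.0 to 0.8 at the midpoint before fading to zero, so the overlay has a small visible step there.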

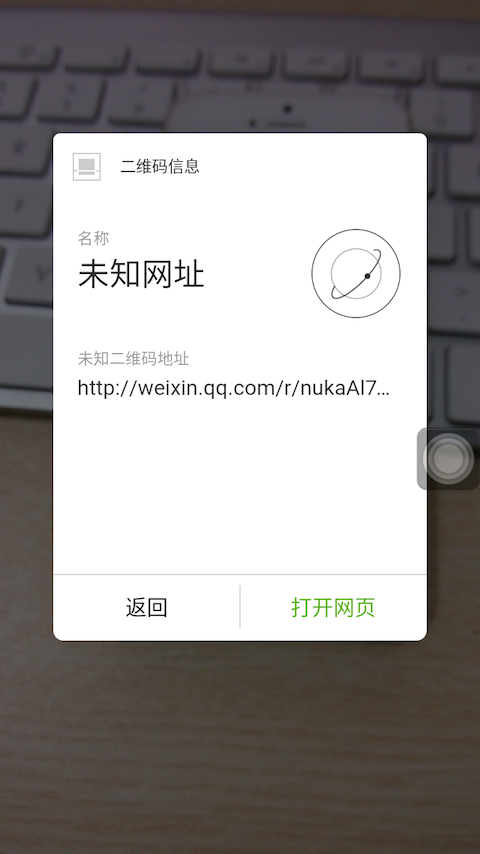

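② Recognizing QR codes

The image to decode normally comes from the camera. A bitmap loaded from a local picture, by contrast, is stored as ARGB, so it must first be converted to YUV data before that YUV data can be decoded.

The steps are:

(1) Take the bitmap loaded from the local picture and compute its YUV data.

(2) Decode the YUV data.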
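Step (1) can be sketched as follows. The article does not show its conversion routine, so this is an assumption: it uses the common full-range weights 0.299, 0.587 and 0.114 in an integer approximation, and it produces only the Y plane, since the luminance source listed later reads only the leading Y channel. `ArgbToLuma` and `toLuminance` are hypothetical names.

```java
// Illustrative sketch: ArgbToLuma and toLuminance are hypothetical names.
// The weights are an assumption; the article does not show its own algorithm.
final class ArgbToLuma {

    /**
     * Converts packed ARGB pixels (the layout returned by Bitmap.getPixels)
     * into a Y (luminance) plane with one byte per pixel.
     */
    static byte[] toLuminance(int[] argb, int width, int height) {
        byte[] y = new byte[width * height];
        for (int i = 0; i < y.length; i++) {
            int p = argb[i];
            int r = (p >> 16) & 0xFF;
            int g = (p >> 8) & 0xFF;
            int b = p & 0xFF;
            // Integer form of 0.299 R + 0.587 G + 0.114 B; the weights sum to 256.
            y[i] = (byte) ((77 * r + 150 * g + 29 * b) >> 8);
        }
        return y;
    }

    public static void main(String[] args) {
        int[] pixels = {0xFFFFFFFF, 0xFF000000, 0xFFFF0000}; // white, black, red
        for (byte v : toLuminance(pixels, 3, 1)) {
            System.out.println(v & 0xFF);
        }
    }
}
```

The resulting byte array could then be handed to a luminance source in the same way as a camera preview frame.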

//DecodeHandler.java

package com.yunos.camera.qrcode;

import java.util.EnumMap;

import java.util.EnumSet;

import java.util.Map;

import java.util.Set;

import android.os.Handler;

import android.os.Looper;

import android.os.Message;

import android.util.Log;

import com.google.zxing.BarcodeFormat;

import com.google.zxing.BinaryBitmap;

import com.google.zxing.DecodeHintType;

import com.google.zxing.MultiFormatReader;

import com.google.zxing.ReaderException;

import com.google.zxing.Result;

import com.google.zxing.common.HybridBinarizer;

import com.way.camera.R;

import com.yunos.camera.ScanModule;

//import com.zijunlin.Zxing.Demo.camera.CameraManager;

//import com.zijunlin.Zxing.Demo.CaptureActivity;

//import com.zijunlin.Zxing.Demo.R;

public class DecodeHandler extends Handler {

private static final String TAG = DecodeHandler.class.getSimpleName();

public static final int RESULT_NONE = -1;

public static final int RESULT_QR = 0;

public static final int RESULT_BAR = 1;

private final ScanModule scanModule;

private final MultiFormatReader multiFormatReader;

static final Set<BarcodeFormat> PRODUCT_FORMATS;

static final Set<BarcodeFormat> INDUSTRIAL_FORMATS;

private static final Set<BarcodeFormat> ONE_D_FORMATS;

static final Set<BarcodeFormat> QR_CODE_FORMATS = EnumSet.of(BarcodeFormat.QR_CODE);

static final Set<BarcodeFormat> DATA_MATRIX_FORMATS = EnumSet.of(BarcodeFormat.DATA_MATRIX);

static final Set<BarcodeFormat> AZTEC_FORMATS = EnumSet.of(BarcodeFormat.AZTEC);

static final Set<BarcodeFormat> PDF417_FORMATS = EnumSet.of(BarcodeFormat.PDF_417);

static {

PRODUCT_FORMATS = EnumSet.of(BarcodeFormat.UPC_A,

BarcodeFormat.UPC_E,

BarcodeFormat.EAN_13,

BarcodeFormat.EAN_8,

BarcodeFormat.RSS_14,

BarcodeFormat.RSS_EXPANDED);

INDUSTRIAL_FORMATS = EnumSet.of(BarcodeFormat.CODE_39,

BarcodeFormat.CODE_93,

BarcodeFormat.CODE_128,

BarcodeFormat.ITF,

BarcodeFormat.CODABAR);

ONE_D_FORMATS = EnumSet.copyOf(PRODUCT_FORMATS);

ONE_D_FORMATS.addAll(INDUSTRIAL_FORMATS);

}

private static boolean isRotate = true;

DecodeHandler(ScanModule scanModule) {

Map<DecodeHintType,Object> hints = new EnumMap<DecodeHintType, Object>(DecodeHintType.class);

Set<BarcodeFormat> decodeFormats = EnumSet.noneOf(BarcodeFormat.class);

decodeFormats.addAll(ONE_D_FORMATS);

decodeFormats.addAll(QR_CODE_FORMATS);

String characterSet = "utf-8";

hints.put(DecodeHintType.CHARACTER_SET, characterSet);

hints.put(DecodeHintType.POSSIBLE_FORMATS, decodeFormats);

multiFormatReader = new MultiFormatReader();

multiFormatReader.setHints(hints);

this.scanModule = scanModule;

}

@Override

public void handleMessage(Message message) {

switch (message.what) {

case R.id.decode:

// Log.d(TAG, "Got decode message");

synchronized (this) {

decode((byte[]) message.obj, message.arg1, message.arg2);

}

break;

case R.id.quit:

Looper.myLooper().quit();

break;

}

}

/**

* Decode the data within the viewfinder rectangle, and time how long it

* took. For efficiency, reuse the same reader objects from one decode to

* the next.

*

* @param data The YUV preview frame.

* @param width The width of the preview frame.

* @param height The height of the preview frame.

*/

private void decode(byte[] data, int width, int height) {

long start = System.currentTimeMillis();

//Log.d("dyb_scan", "" + data.length + ", width " + width + " height " + height);

int rectleft = (width-height)/2;

isRotate = !isRotate; // alternate between the transposed copy and the straight copy on successive frames

byte tmp[] = new byte[height*height];

if (isRotate) {

for (int row = 0; row < height; row++) {

for (int col = 0; col < height; col++) {

tmp[col*height+row] = data[row*width+col+rectleft];

}

}

} else {

for (int row = 0; row < height; row++) {

for (int col = 0; col < height; col++) {

tmp[row*height+col] = data[row*width+col+rectleft];

}

}

}

PlanarYUVLuminanceSource source = new PlanarYUVLuminanceSource(tmp, height, height, 0, 0,

height, height, false);

tmp = null;

Result rawResult = null;

if (source != null) {

BinaryBitmap bitmap = new BinaryBitmap(new HybridBinarizer(source));

try {

rawResult = multiFormatReader.decodeWithState(bitmap);

} catch (ReaderException re) {

// continue

} finally {

multiFormatReader.reset();

}

}

long cost = System.currentTimeMillis()-start;

Log.d("dyb_scan", "decode time is " + cost);

//long start = System.currentTimeMillis();

// QRCodeResult rawResult = new QRCodeResult();

//rawResult.resString = decode(data, rawResult.location, width, height);

//long cost = System.currentTimeMillis()-start;

//Log.d("dyb_scan", "decode time is " + cost);

if (rawResult != null) {

//Log.d("dyb_scan", "decode result is " + rawResult.resString);

Message message = Message.obtain(scanModule.getHandler(), R.id.decode_succeeded,

rawResult);

message.sendToTarget();

} else {

Message message = Message.obtain(scanModule.getHandler(), R.id.decode_failed,

rawResult);

message.sendToTarget();

}

}

public static native String decode(byte data[], int points[], int width, int height);

static {

System.loadLibrary("qrcode_jni");

}

}
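The cropping loop inside `decode` is easier to follow in isolation. The sketch below reproduces the same index arithmetic on a plain byte array: it cuts the centered `height × height` square out of the Y plane and optionally transposes it. It assumes a landscape frame (`width >= height`); `SquareCrop` and `cropCenterSquare` are hypothetical names.

```java
// Illustrative sketch: SquareCrop and cropCenterSquare are hypothetical names.
// The index arithmetic mirrors the two loops in DecodeHandler.decode.
final class SquareCrop {

    /**
     * Copies the horizontally centered height x height square out of a
     * width x height luminance plane. When transpose is true, rows and
     * columns are swapped while copying.
     */
    static byte[] cropCenterSquare(byte[] data, int width, int height, boolean transpose) {
        int left = (width - height) / 2; // first column of the square
        byte[] out = new byte[height * height];
        for (int row = 0; row < height; row++) {
            for (int col = 0; col < height; col++) {
                byte v = data[row * width + col + left];
                if (transpose) {
                    out[col * height + row] = v;
                } else {
                    out[row * height + col] = v;
                }
            }
        }
        return out;
    }

    public static void main(String[] args) {
        byte[] frame = {0, 1, 2, 3, 4, 5, 6, 7}; // 4 x 2 frame
        System.out.println(java.util.Arrays.toString(cropCenterSquare(frame, 4, 2, false)));
        System.out.println(java.util.Arrays.toString(cropCenterSquare(frame, 4, 2, true)));
    }
}
```

Alternating the two orientations from frame to frame, as the handler does, gives the reader a chance to decode 1D barcodes held either horizontally or vertically.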

//PlanarYUVLuminanceSource.java

/*

* Copyright 2009 ZXing authors

*

* Licensed under the Apache License, Version 2.0 (the "License");

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package com.yunos.camera.qrcode;

import com.google.zxing.LuminanceSource;

/**

* This object extends LuminanceSource around an array of YUV data returned from the camera driver,

* with the option to crop to a rectangle within the full data. This can be used to exclude

* superfluous pixels around the perimeter and speed up decoding.

*

* It works for any pixel format where the Y channel is planar and appears first, including

* YCbCr_420_SP and YCbCr_422_SP.

*

* @author dswitkin@google.com (Daniel Switkin)

*/

public final class PlanarYUVLuminanceSource extends LuminanceSource {

private static final int THUMBNAIL_SCALE_FACTOR = 2;

private final byte[] yuvData;

private final int dataWidth;

private final int dataHeight;

private final int left;

private final int top;

public PlanarYUVLuminanceSource(byte[] yuvData,

int dataWidth,

int dataHeight,

int left,

int top,

int width,

int height,

boolean reverseHorizontal) {

super(width, height);

if (left + width > dataWidth || top + height > dataHeight) {

throw new IllegalArgumentException("Crop rectangle does not fit within image data.");

}

this.yuvData = yuvData;

this.dataWidth = dataWidth;

this.dataHeight = dataHeight;

this.left = left;

this.top = top;

if (reverseHorizontal) {

reverseHorizontal(width, height);

}

}

@Override

public byte[] getRow(int y, byte[] row) {

if (y < 0 || y >= getHeight()) {

throw new IllegalArgumentException("Requested row is outside the image: " + y);

}

int width = getWidth();

if (row == null || row.length < width) {

row = new byte[width];

}

int offset = (y + top) * dataWidth + left;

System.arraycopy(yuvData, offset, row, 0, width);

return row;

}

@Override

public byte[] getMatrix() {

int width = getWidth();

int height = getHeight();

// If the caller asks for the entire underlying image, save the copy and give them the

// original data. The docs specifically warn that result.length must be ignored.

if (width == dataWidth && height == dataHeight) {

return yuvData;

}

int area = width * height;

byte[] matrix = new byte[area];

int inputOffset = top * dataWidth + left;

// If the width matches the full width of the underlying data, perform a single copy.

if (width == dataWidth) {

System.arraycopy(yuvData, inputOffset, matrix, 0, area);

return matrix;

}

// Otherwise copy one cropped row at a time.

byte[] yuv = yuvData;

for (int y = 0; y < height; y++) {

int outputOffset = y * width;

System.arraycopy(yuv, inputOffset, matrix, outputOffset, width);

inputOffset += dataWidth;

}

return matrix;

}

@Override

public boolean isCropSupported() {

return true;

}

@Override

public LuminanceSource crop(int left, int top, int width, int height) {

return new PlanarYUVLuminanceSource(yuvData,

dataWidth,

dataHeight,

this.left + left,

this.top + top,

width,

height,

false);

}

public int[] renderThumbnail() {

int width = getWidth() / THUMBNAIL_SCALE_FACTOR;

int height = getHeight() / THUMBNAIL_SCALE_FACTOR;

int[] pixels = new int[width * height];

byte[] yuv = yuvData;

int inputOffset = top * dataWidth + left;

for (int y = 0; y < height; y++) {

int outputOffset = y * width;

for (int x = 0; x < width; x++) {

int grey = yuv[inputOffset + x * THUMBNAIL_SCALE_FACTOR] & 0xff;

pixels[outputOffset + x] = 0xFF000000 | (grey * 0x00010101);

}

inputOffset += dataWidth * THUMBNAIL_SCALE_FACTOR;

}

return pixels;

}

/**

* @return width of image from {@link #renderThumbnail()}

*/

public int getThumbnailWidth() {

return getWidth() / THUMBNAIL_SCALE_FACTOR;

}

/**

* @return height of image from {@link #renderThumbnail()}

*/

public int getThumbnailHeight() {

return getHeight() / THUMBNAIL_SCALE_FACTOR;

}

private void reverseHorizontal(int width, int height) {

byte[] yuvData = this.yuvData;

for (int y = 0, rowStart = top * dataWidth + left; y < height; y++, rowStart += dataWidth) {

int middle = rowStart + width / 2;

for (int x1 = rowStart, x2 = rowStart + width - 1; x1 < middle; x1++, x2--) {

byte temp = yuvData[x1];

yuvData[x1] = yuvData[x2];

yuvData[x2] = temp;

}

}

}

}
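Two small pieces of arithmetic in this class carry most of the work: the offset of a cropped row inside the full frame, and the expansion of one luminance byte into an opaque grey ARGB pixel for the thumbnail. A minimal sketch of both, with hypothetical names `LumaMath`, `rowOffset` and `greyToArgb`:

```java
// Illustrative sketch: LumaMath, rowOffset and greyToArgb are hypothetical names.
// The expressions are the ones used in getRow and renderThumbnail.
final class LumaMath {

    /** Index of the first byte of cropped row y inside the full Y plane. */
    static int rowOffset(int y, int top, int left, int dataWidth) {
        return (y + top) * dataWidth + left;
    }

    /** Expands one luminance byte into an opaque grey ARGB pixel. */
    static int greyToArgb(byte luma) {
        int grey = luma & 0xFF;                   // treat the byte as unsigned
        return 0xFF000000 | (grey * 0x00010101);  // copy grey into R, G and B
    }

    public static void main(String[] args) {
        System.out.println(rowOffset(2, 1, 3, 10));
        System.out.println(Integer.toHexString(greyToArgb((byte) 0x80)));
    }
}
```

The `& 0xff` mask matters because Java bytes are signed; without it, bright pixels would turn into negative values before the multiplication.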

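③ Filter processing

Filter processing converts the image captured by the camera into a Bitmap and then applies the corresponding processing to it.

Color processing filter: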

//ColorMappingProcessor.java

package com.yunos.camera.filters;

import android.graphics.Bitmap;

import android.graphics.BitmapFactory;

import android.opengl.GLES20;

import android.util.Log;

import com.yunos.camera.ImageProcessNativeInterface;

import com.yunos.gallery3d.glrenderer.BitmapTexture;

import com.yunos.gallery3d.glrenderer.GLCanvas;

import com.yunos.gallery3d.glrenderer.ShaderParameter;

import com.yunos.gallery3d.glrenderer.UniformShaderParameter;

import java.util.HashMap;

import java.util.List;

/*

* back up texture translate algorithm

*

* for (int i = 0; i < 256; i++) {

* ((unsigned char *)(outImage->imageData))[3*i+2] = ((unsigned char *)(inImage->imageData)+inImage->widthStep*2)[3*i];

* ((unsigned char *)(outImage->imageData))[3*i+1] = ((unsigned char *)(inImage->imageData+inImage->widthStep))[3*i+1];

* ((unsigned char *)(outImage->imageData))[3*i] = ((unsigned char *)(inImage->imageData))[3*i+2];

* }

*

*

* */

public class ColorMappingProcessor extends BaseProcessor{

private BitmapTexture colorTabelTexture = null;

private String bitmapPath;

public ColorMappingProcessor() {

NAME = "ColorMapping";

mMethodString = ""

+ "uniform sampler2D " + COLOR_TABLE + ";\n"

+ "mediump vec3 colorTable(vec4 texel, sampler2D table) {\n"

+ " vec2 lookup; \n"

+ " lookup.y = .5;\n"

+ " lookup.x = texel.r;\n"

+ " texel.r = texture2D(table, lookup).r;\n"

+ " lookup.x = texel.g;\n"

+ " texel.g = texture2D(table, lookup).g;\n"

+ " lookup.x = texel.b;\n"

+ " texel.b = texture2D(table, lookup).b;\n"

+ " return texel.rgb;\n"

+ " }\n";

mProcessString = " texel.rgb = colorTable(texel," + COLOR_TABLE + ");\n";

paramMap = new HashMap<String, Integer>();

mTextureCount = 1;

}

@Override

public void loadProcess(String path, String mProcessString) {

bitmapPath = path+"/"+mProcessString;

Bitmap bitmap = BitmapFactory.decodeFile(bitmapPath);

colorTabelTexture = new BitmapTexture(bitmap);

}

@Override

public void appendParams(List<ShaderParameter> paramList) {

// TODO Auto-generated method stub

int length = paramList.size();

paramList.add(new UniformShaderParameter(COLOR_TABLE));

paramMap.put(COLOR_TABLE, length);

}

@Override

public void prepareParams(ShaderParameter[] params, GLCanvas canvas) {

// TODO Auto-generated method stub

bindTexture(canvas, colorTabelTexture);

GLES20.glUniform1i(params[paramMap.get(COLOR_TABLE)].handle, textureIndex);

FiltersUtil.checkError();

}

@Override

public void jpegProcess() {

// TODO Auto-generated method stub

Log.d("dyb_filter", NAME + " jpegProcess");

Bitmap bitmap = BitmapFactory.decodeFile(bitmapPath);

ImageProcessNativeInterface.filterColorMapping(bitmap);

bitmap.recycle();

}

}
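The `colorTable` shader function maps each channel through a one-row lookup texture. A CPU analogue, written here as a sketch under the assumption that the table is 256 entries wide and sampled without interpolation, looks like this. `ColorTableLookup`, `apply` and `invertTable` are hypothetical names.

```java
// Illustrative sketch: ColorTableLookup, apply and invertTable are hypothetical
// names. It assumes a 256-entry table and nearest-neighbour sampling, whereas
// the GPU may interpolate between neighbouring table entries.
final class ColorTableLookup {

    /** Maps each channel of an ARGB pixel through the matching channel of the table. */
    static int apply(int argb, int[] table) {
        int r = (argb >> 16) & 0xFF;
        int g = (argb >> 8) & 0xFF;
        int b = argb & 0xFF;
        int outR = (table[r] >> 16) & 0xFF; // red output comes from the table's red channel
        int outG = (table[g] >> 8) & 0xFF;  // green from its green channel
        int outB = table[b] & 0xFF;         // blue from its blue channel
        return (argb & 0xFF000000) | (outR << 16) | (outG << 8) | outB;
    }

    /** Builds a sample table that inverts every channel. */
    static int[] invertTable() {
        int[] table = new int[256];
        for (int i = 0; i < 256; i++) {
            int v = 255 - i;
            table[i] = (v << 16) | (v << 8) | v;
        }
        return table;
    }

    public static void main(String[] args) {
        System.out.println(Integer.toHexString(apply(0xFF102030, invertTable())));
    }
}
```

Because the three channels are looked up independently, one small image can encode a separate tone curve for red, green and blue.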

Texture processing filter:

//ShaderProcess.java

package com.yunos.camera.filters;

import android.opengl.GLES20;

import android.util.Log;

public class ShaderProcess {

private static final String ALPHA_UNIFORM = "uAlpha";

private static final String TEXTURE_SAMPLER_UNIFORM = "uTextureSampler";

private static final String POSITION_ATTRIBUTE = "aPosition";

private static final String MATRIX_UNIFORM = "uMatrix";

private static final String TEXTURE_MATRIX_UNIFORM = "uTextureMatrix";

ShaderParameter[] mFilterParameters = {

new AttributeShaderParameter(POSITION_ATTRIBUTE), // INDEX_POSITION

new UniformShaderParameter(MATRIX_UNIFORM), // INDEX_MATRIX

new UniformShaderParameter(TEXTURE_MATRIX_UNIFORM), // INDEX_TEXTURE_MATRIX

new UniformShaderParameter(TEXTURE_SAMPLER_UNIFORM), // INDEX_TEXTURE_SAMPLER

new UniformShaderParameter(ALPHA_UNIFORM), // INDEX_ALPHA

// new UniformShaderParameter("delta"), //INDEX_DELTA

};

private static final String FILTER_FRAGMENT_SHADER = ""

+ "#extension GL_OES_EGL_image_external : require\n"

+ "precision mediump float;\n"

+ "varying vec2 vTextureCoord;\n"

+ "uniform float " + ALPHA_UNIFORM + ";\n"

+ "uniform samplerExternalOES " + TEXTURE_SAMPLER_UNIFORM + ";\n"

// + "uniform uFilterMatrix;\n"

+ "void main() {\n"

+ " vec3 gMonoMult1 = vec3(0.299, 0.587, 0.114);\n"

+ " gl_FragColor = texture2D(" + TEXTURE_SAMPLER_UNIFORM + ", vTextureCoord);\n"

+ " gl_FragColor *= " + ALPHA_UNIFORM + ";\n"

+ " gl_FragColor.r = dot(gMonoMult1, gl_FragColor.rgb);\n"

// + " gl_FragColor.r = gl_FragColor.r * 0.299 + gl_FragColor.g * 0.587 + gl_FragColor.b * 0.114;\n"

+ " gl_FragColor.g = gl_FragColor.r;\n"

+ " gl_FragColor.b = gl_FragColor.r;\n"

+ "}\n";

private static final String TAG = "ShaderProcess"; // a non-null tag so GL errors are identifiable in logcat

private abstract static class ShaderParameter {

public int handle;

protected final String mName;

public ShaderParameter(String name) {

mName = name;

}

public abstract void loadHandle(int program);

}

private static class UniformShaderParameter extends ShaderParameter {

public UniformShaderParameter(String name) {

super(name);

}

@Override

public void loadHandle(int program) {

handle = GLES20.glGetUniformLocation(program, mName);

checkError();

}

}

private static class AttributeShaderParameter extends ShaderParameter {

public AttributeShaderParameter(String name) {

super(name);

}

@Override

public void loadHandle(int program) {

handle = GLES20.glGetAttribLocation(program, mName);

checkError();

}

}

public static void checkError() {

int error = GLES20.glGetError();

if (error != 0) {

Throwable t = new Throwable();

Log.e(TAG, "GL error: " + error, t);

}

}

}
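The fragment shader in this class scales the sampled color by the alpha uniform and then replaces red, green and blue with their weighted sum. The same arithmetic on the CPU, as a sketch with hypothetical names `MonoFilter` and `shade` and with components expressed as floats in [0, 1]:

```java
// Illustrative sketch: MonoFilter and shade are hypothetical names.
// It repeats the arithmetic of FILTER_FRAGMENT_SHADER for a single pixel.
final class MonoFilter {

    /** rgba holds four components in [0, 1]; alpha plays the role of uAlpha. */
    static float[] shade(float[] rgba, float alpha) {
        float r = rgba[0] * alpha;
        float g = rgba[1] * alpha;
        float b = rgba[2] * alpha;
        float a = rgba[3] * alpha; // the shader scales all four components
        // dot(vec3(0.299, 0.587, 0.114), rgb)
        float grey = 0.299f * r + 0.587f * g + 0.114f * b;
        return new float[] {grey, grey, grey, a};
    }

    public static void main(String[] args) {
        float[] out = shade(new float[] {0f, 1f, 0f, 1f}, 1f);
        System.out.println(out[0] + " " + out[1] + " " + out[2] + " " + out[3]);
    }
}
```

Green contributes the most to the result and blue the least, which matches how bright each primary appears to the eye.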

Image overlay filter:

//OverlayProcessor.java

package com.yunos.camera.filters;

import android.graphics.Bitmap;

import android.graphics.BitmapFactory;

import android.opengl.GLES20;

import android.util.Log;

import com.yunos.camera.ImageProcessNativeInterface;

import com.yunos.gallery3d.glrenderer.BitmapTexture;

import com.yunos.gallery3d.glrenderer.GLCanvas;

import com.yunos.gallery3d.glrenderer.ShaderParameter;

import com.yunos.gallery3d.glrenderer.UniformShaderParameter;

import java.util.HashMap;

import java.util.List;

public class OverlayProcessor extends BaseProcessor{

private BitmapTexture blowoutTexture = null;

private BitmapTexture overlayTexture = null;

private String blowoutBitmapPath;

private String overlayBitmapPath;

public OverlayProcessor() {

NAME = "Overlay";

mMethodString = ""

+ "uniform sampler2D " + BLOWOUT_TABLE + ";\n"

+ "uniform sampler2D " + OVERLAY_TABLE + ";\n"

+ "mediump vec3 overlayTable(vec4 texel, vec2 textureCoordinate, sampler2D blowout, sampler2D overlay) {\n"

+ " vec3 bbTexel = texture2D(blowout, textureCoordinate).rgb; \n"

+ " texel.r = texture2D(overlay, vec2(bbTexel.r, texel.r)).r; \n"

+ " texel.g = texture2D(overlay, vec2(bbTexel.g, texel.g)).g; \n"

+ " texel.b = texture2D(overlay, vec2(bbTexel.b, texel.b)).b; \n"

+ " return texel.rgb;\n"

+ " }\n";

mProcessString = " texel.rgb = overlayTable(texel, vTextureCoord, " + BLOWOUT_TABLE + ", " + OVERLAY_TABLE + ");\n";

paramMap = new HashMap<String, Integer>();

mTextureCount = 2;

}

@Override

public void loadProcess(String path, String mProcessString) {

// TODO Auto-generated method stub

String sub[] = mProcessString.split(",");

blowoutBitmapPath = path+"/"+sub[0];

overlayBitmapPath = path+"/"+sub[1];

Bitmap bitmapBlow = BitmapFactory.decodeFile(blowoutBitmapPath);

blowoutTexture = new BitmapTexture(bitmapBlow);

Bitmap bitmapOverlay = BitmapFactory.decodeFile(overlayBitmapPath);

overlayTexture = new BitmapTexture(bitmapOverlay);

}

@Override

public void appendParams(List<ShaderParameter> paramList) {

// TODO Auto-generated method stub

int length = paramList.size();

paramList.add(new UniformShaderParameter(BLOWOUT_TABLE));

paramMap.put(BLOWOUT_TABLE, length);

length = paramList.size();

paramList.add(new UniformShaderParameter(OVERLAY_TABLE));

paramMap.put(OVERLAY_TABLE, length);

}

@Override

public void prepareParams(ShaderParameter[] params, GLCanvas canvas) {

// TODO Auto-generated method stub

bindTexture(canvas, blowoutTexture);

GLES20.glUniform1i(params[paramMap.get(BLOWOUT_TABLE)].handle, textureIndex);

FiltersUtil.checkError();

textureIndex++;

bindTexture(canvas, overlayTexture);

GLES20.glUniform1i(params[paramMap.get(OVERLAY_TABLE)].handle, textureIndex);

textureIndex--;

FiltersUtil.checkError();

}

@Override

public void jpegProcess() {

// TODO Auto-generated method stub

Log.d("dyb_filter", NAME + " jpegProcess");

Bitmap blowoutBitmap = BitmapFactory.decodeFile(blowoutBitmapPath);

Bitmap overlayBitmap = BitmapFactory.decodeFile(overlayBitmapPath);

ImageProcessNativeInterface.filterOverlay(blowoutBitmap, overlayBitmap);

blowoutBitmap.recycle();

overlayBitmap.recycle();

}

}
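In `overlayTable`, the overlay texture acts as a two-dimensional lookup: the blowout texel selects the column and the original texel selects the row. The sketch below shows the same idea on packed ARGB integers. It assumes a 256 × 256 table indexed as `overlay[row][column]` with nearest-neighbour sampling, and the names `OverlayLookup`, `apply` and `multiplyTable` are hypothetical.

```java
// Illustrative sketch: OverlayLookup, apply and multiplyTable are hypothetical
// names. The table layout (overlay[row][column], 256 x 256, packed RGB) and the
// row orientation are assumptions made for this example.
final class OverlayLookup {

    /** Blends one pixel: column = blowout channel value, row = base channel value. */
    static int apply(int baseArgb, int blowoutArgb, int[][] overlay) {
        int r = (overlay[(baseArgb >> 16) & 0xFF][(blowoutArgb >> 16) & 0xFF] >> 16) & 0xFF;
        int g = (overlay[(baseArgb >> 8) & 0xFF][(blowoutArgb >> 8) & 0xFF] >> 8) & 0xFF;
        int b = overlay[baseArgb & 0xFF][blowoutArgb & 0xFF] & 0xFF;
        return (baseArgb & 0xFF000000) | (r << 16) | (g << 8) | b;
    }

    /** Sample table implementing a multiply blend: value = column * row / 255. */
    static int[][] multiplyTable() {
        int[][] table = new int[256][256];
        for (int row = 0; row < 256; row++) {
            for (int col = 0; col < 256; col++) {
                int v = col * row / 255;
                table[row][col] = (v << 16) | (v << 8) | v;
            }
        }
        return table;
    }

    public static void main(String[] args) {
        int out = apply(0xFFFF8000, 0xFF808080, multiplyTable());
        System.out.println(Integer.toHexString(out));
    }
}
```

Storing the blend function as an image means a designer can change the blend mode by swapping a file, without touching shader code.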

Pixel matrix processing filter:

//MatrixTuningProcessor.java

package com.yunos.camera.filters;


import android.opengl.GLES20;

import android.util.Log;

import com.yunos.camera.ImageProcessNativeInterface;

import com.yunos.gallery3d.glrenderer.GLCanvas;

import com.yunos.gallery3d.glrenderer.ShaderParameter;

import com.yunos.gallery3d.glrenderer.UniformShaderParameter;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

public class MatrixTuningProcessor extends BaseProcessor{

private float mMatrix[];

public MatrixTuningProcessor() {

NAME = MATRIX_TUNING_PROCESSOR;

mMethodString = "uniform mat3 " + TRANSFORM_MATRIX + ";\n"

+ "mediump vec4 linearTransform(vec4 src, mat3 transform) {\n "

+ " src.rgb *= transform;\n"

+ " return src;\n"

+ "}\n";

mProcessString = " texel = linearTransform( texel, " + TRANSFORM_MATRIX + "); \n";

paramMap = new HashMap<String, Integer>();

mTextureCount = 0;

}

@Override

public void loadProcess(String path, String mProcessString) {

mMatrix = FiltersUtil.convertTextToFloatArrayt(mProcessString);

}

@Override

public void appendParams(List<ShaderParameter> paramList) {

// TODO Auto-generated method stub

int length = paramList.size();

paramList.add(new UniformShaderParameter(TRANSFORM_MATRIX));

paramMap.put(TRANSFORM_MATRIX, length);

}

@Override

public void prepareParams(ShaderParameter[] params, GLCanvas canvas) {

GLES20.glUniformMatrix3fv(params[paramMap.get(TRANSFORM_MATRIX)].handle, 1, false, mMatrix, 0);

}

@Override

public void jpegProcess() {

// TODO Auto-generated method stub

Log.d("dyb_filter", NAME + " jpegProcess");

ImageProcessNativeInterface.filterMatrixTuning(mMatrix);

}

}
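The statement `src.rgb *= transform` multiplies a row vector by the matrix, so each output channel is the dot product of the input color with one column of the `mat3`. Since the array is uploaded with `transpose` set to `false`, consecutive groups of three floats in `mMatrix` form those columns. A sketch of the equivalent CPU computation, with hypothetical names `MatrixTuning` and `transform`:

```java
// Illustrative sketch: MatrixTuning and transform are hypothetical names.
// m is the 9-element array in the order it is passed to glUniformMatrix3fv
// (column-major, transpose = false).
final class MatrixTuning {

    /** Computes rgb * M, where column j of M is m[3j], m[3j+1], m[3j+2]. */
    static float[] transform(float[] rgb, float[] m) {
        float[] out = new float[3];
        for (int j = 0; j < 3; j++) {
            out[j] = rgb[0] * m[3 * j] + rgb[1] * m[3 * j + 1] + rgb[2] * m[3 * j + 2];
        }
        return out;
    }

    public static void main(String[] args) {
        float[] swap = {0, 1, 0, 0, 0, 1, 1, 0, 0}; // rotates the channels
        float[] out = transform(new float[] {1f, 2f, 3f}, swap);
        System.out.println(out[0] + " " + out[1] + " " + out[2]);
    }
}
```

In practice this means every three consecutive values in the filter description give the weights of red, green and blue for one output channel.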

Image gradient filter:

//GradientProcessor.java

package com.yunos.camera.filters;

import android.opengl.GLES20;

import android.util.Log;

import com.yunos.camera.ImageProcessNativeInterface;

import com.yunos.gallery3d.glrenderer.GLCanvas;

import com.yunos.gallery3d.glrenderer.ShaderParameter;

import com.yunos.gallery3d.glrenderer.UniformShaderParameter;

import java.util.HashMap;

import java.util.List;

public class GradientProcessor extends BaseProcessor{

private float gradientPixSize[] = new float[2];

public GradientProcessor() {

mMethodString = ""

+ "uniform vec2 " + GRADIENT_PIX_SIZE +";\n"

+ "mediump vec4 calculateGradient(samplerExternalOES sampler2d, vec2 _uv, vec2 pixSize) { \n"

+ " mediump vec4 color = vec4(0.0, 0.0, 0.0, 0.0); \n"

+ " highp vec4 up;\n"

+ " highp vec4 down; \n"

+ " highp vec4 left; \n"

+ " highp vec4 right; \n"

+ " highp vec4 temp; \n"

+ " highp float r, g, b; \n"

+ " mediump float amount = 0.0; \n"

+ " mediump float ind = 0.0; \n"

+ " mediump vec2 offset; \n"

+ " mediump float pixNum = 1.0; \n"

+ " highp float gray1 = 0.0; \n"

+ " highp float gray2 = 0.0; \n"

+ " highp float gray3 = 0.0; \n"

+ " highp float maxG = 0.0; \n"

+ " mediump float x,y; \n"

+ " left = vec4( 0.0, 0.0, 0.0, 0.0 ); \n"

+ " right = vec4( 0.0, 0.0, 0.0, 0.0 ); \n"

+ " for( ind = 0.0; ind < pixNum; ind++ ) {\n"

+ " x = max(0.0, min(1.0, _uv.s-ind*pixSize.s)); \n"

+ " y = _uv.t; \n"

+ " left += texture2D(sampler2d,vec2(x,y)); \n"

+ " x = max(0.0, min(1.0, _uv.s+(ind+2.0)*pixSize.s)); \n"

+ " y = _uv.t; \n"

+ " right += texture2D(sampler2d,vec2(x,y)); \n"

+ " } \n"

+ " temp = left - right; \n"

+ " if( temp.r < 0.0 ) {\n"

+ " gray1 += temp.r * temp.r; \n"

+ " } \n"

+ " if( temp.g < 0.0 ) {\n"

+ " gray2 +=temp.g * temp.g; \n"

+ " } \n"

+ " if( temp.b < 0.0 ) {\n"

+ " gray3 +=temp.b * temp.b; \n"

+ " } \n"

+ " left = vec4( 0.0, 0.0, 0.0, 0.0 ); \n"

+ " right = vec4( 0.0, 0.0, 0.0, 0.0 ); \n"

+ " for( ind = 0.0; ind < pixNum; ind++ ) {\n"

+ " x = max(0.0, min(1.0, _uv.s-(ind*2.0)*pixSize.s)); \n"

+ " y = _uv.t; \n"

+ " left += texture2D(sampler2d,vec2(x, y)); \n"

+ " x = max(0.0, min(1.0, _uv.s+ind*pixSize.s)); \n"

+ " y = _uv.t; \n"

+ " right += texture2D(sampler2d,vec2(x, y)); \n"

+ " } \n"

+ " temp = left - right; \n"

+ " if( temp.r > 0.0 ) {\n"

+ " gray1 += temp.r * temp.r ; \n"

+ " } \n"

+ " if( temp.g > 0.0 ) {\n"

+ " gray2 += temp.g * temp.g ; \n"

+ " } \n"

+ " if( temp.b > 0.0 ) {\n"

+ " gray3 += temp.b * temp.b ; \n"

+ " } \n"

+ " up = vec4( 0.0, 0.0, 0.0, 0.0 ); \n"

+ " down = vec4( 0.0, 0.0, 0.0, 0.0 ); \n"

+ " for( ind = 0.0; ind < pixNum; ind++) {\n"

+ " x = _uv.s; \n"

+ " y = max(0.0, min(1.0, _uv.t-ind*pixSize.t)); \n"

+ " up += texture2D(sampler2d, vec2(x, y)); \n"

+ " y = max(0.0, min(1.0, _uv.t+(ind+2.0)*pixSize.t)); \n"

+ " down += texture2D(sampler2d, vec2(x, y)); \n"

+ " } \n"

+ " temp = up - down; \n"

+ " if( temp.r < 0.0 ) {\n"

+ " gray1 += temp.r * temp.r; \n"

+ " } \n"

+ " if( temp.g < 0.0 ) {\n"

+ " gray2 += temp.g * temp.g; \n"

+ " } \n"

+ " if( temp.b < 0.0 ) {\n"

+ " gray3 += temp.b * temp.b; \n"

+ " } \n"

+ " up = vec4( 0.0, 0.0, 0.0, 0.0 ); \n"

+ " down = vec4( 0.0, 0.0, 0.0, 0.0 ); \n"

+ " for( ind = 0.0; ind < pixNum; ind++ ) {\n"

+ " x = _uv.s; \n"

+ " y = max(0.0, min(1.0, _uv.t - (ind+2.0)*pixSize.t)); \n"

+ " up += texture2D(sampler2d, vec2(x, y)); \n"

+ " y = max(0.0, min(1.0, _uv.t + ind*pixSize.t)); \n"

+ " down += texture2D(sampler2d, vec2(x, y)); \n"

+ " } \n"

+ " temp = up - down; \n"

+ " if( temp.r > 0.0 ) {\n"

+ " gray1 += temp.r * temp.r; \n"

+ " } \n"

+ " if( temp.g > 0.0 ) {\n"

+ " gray2 += temp.g *temp.g ; \n"

+ " } \n"

+ " if( temp.b > 0.0 ) {\n"

+ " gray3 += temp.b * temp.b; \n"

+ " } \n"

+ " r = texture2D(sampler2d, _uv).r; \n"

+ " g = texture2D(sampler2d, _uv).g; \n"

+ " b = texture2D(sampler2d, _uv).b; \n"

+ " maxG = pixNum; \n"

+ " if( ( -0.000001 < gray1 / maxG ) && ( gray1 / maxG < 0.000001)) {\n"

+ " gray1 = pow( 0.000001, r); \n"

+ " } else { \n"

+ " gray1 = pow( gray1 / maxG, r ); \n"

+ " } \n"

+ " if( ( -0.000001 < ( gray2 / maxG) ) && ( ( gray2 / maxG) < 0.000001)) {\n"

+ " gray2 = pow( 0.000001, g); \n"

+ " } else { \n"

+ " gray2 = pow( gray2 / maxG, g ); \n"

+ " } \n"

+ " if( ( -0.000001 < gray3 / maxG ) && ( gray3 / maxG < 0.000001)) {\n"

+ " gray3 = pow( 0.000001, b); \n"

+ " } else {\n"

+ " gray3 = pow( gray3 / maxG, b ); \n"

+ " } \n"

+ " gray1 = 1.0 - gray1; \n"

+ " gray2 = 1.0 - gray2; \n"

+ " gray3 = 1.0 - gray3; \n"

+ " color.r = gray1; \n"

+ " color.g = gray2; \n"

+ " color.b = gray3; \n"

+ " return color;\n"

+ " }\n";

mProcessString = " texel = calculateGradient(" + TEXTURE_SAMPLER_UNIFORM + ", vTextureCoord," + GRADIENT_PIX_SIZE + ");\n";

paramMap = new HashMap<String, Integer>();

mTextureCount = 0;

}

@Override

public void loadProcess(String path, String mProcessString) {

// TODO Auto-generated method stub

String sub[] = mProcessString.split(",");

gradientPixSize[0] = Float.valueOf(sub[0]);

gradientPixSize[1] = Float.valueOf(sub[1]);

}

@Override

public void appendParams(List<ShaderParameter> paramList) {

// TODO Auto-generated method stub

int length = paramList.size();

paramList.add(new UniformShaderParameter(GRADIENT_PIX_SIZE));

paramMap.put(GRADIENT_PIX_SIZE, length);

}

@Override

public void prepareParams(ShaderParameter[] params, GLCanvas canvas) {

// TODO Auto-generated method stub

GLES20.glUniform2f(params[paramMap.get(GRADIENT_PIX_SIZE)].handle, gradientPixSize[0], gradientPixSize[1]);

FiltersUtil.checkError();

}

@Override

public void jpegProcess() {

// TODO Auto-generated method stub

Log.d("dyb_filter", NAME + " jpegProcess");

ImageProcessNativeInterface.filterGradient(gradientPixSize);

}

}
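The gradient shader is long, but its core idea is compact: accumulate squared differences between a pixel and neighbours two steps away, raise the sum to the power of the pixel's own value, and invert. The following is a simplified single-channel analogue and not a line-by-line port of the shader. It uses one symmetric rule for both axes, and the names `GradientSketch`, `at` and `shade` are hypothetical.

```java
// Illustrative sketch: GradientSketch, at and shade are hypothetical names.
// This is a simplified, single-channel analogue of calculateGradient with
// pixNum fixed at 1; it is not a line-by-line port of the shader.
final class GradientSketch {

    /** Reads a pixel with coordinates clamped to the image bounds. */
    private static float at(float[][] img, int x, int y) {
        int cy = Math.max(0, Math.min(img.length - 1, y));
        int cx = Math.max(0, Math.min(img[0].length - 1, x));
        return img[cy][cx];
    }

    /** img[y][x] holds values in [0, 1]; step is the neighbour distance in pixels. */
    static float shade(float[][] img, int x, int y, int step) {
        float c = at(img, x, y);
        float sum = 0f;
        float d = c - at(img, x + step, y);  // centre minus pixel to the right
        if (d < 0) sum += d * d;
        d = at(img, x - step, y) - c;        // pixel to the left minus centre
        if (d > 0) sum += d * d;
        d = c - at(img, x, y + step);        // centre minus pixel below
        if (d < 0) sum += d * d;
        d = at(img, x, y - step) - c;        // pixel above minus centre
        if (d > 0) sum += d * d;
        // The shader substitutes 0.000001 for near-zero sums before calling pow.
        double base = Math.max(sum, 0.000001);
        return (float) (1.0 - Math.pow(base, c));
    }

    public static void main(String[] args) {
        float[][] edge = {{0.5f, 0.5f, 1f, 1f}};
        System.out.println(shade(edge, 1, 0, 2)); // near an edge
        System.out.println(shade(edge, 3, 0, 2)); // flat region
    }
}
```

Flat regions come out close to 1 and pixels next to a brighter neighbour come out darker, which produces a sketch-like rendering of edges.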

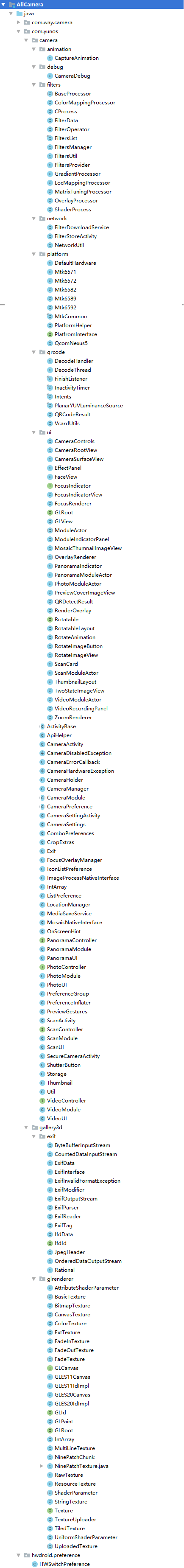

3. Project structure diagram

4. Additional notes

The app's UI uses the native Holo style.
