Reference links

sws_getContext() in libswscale

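- The FFmpeg library libswscale is used for image processing (scaling and YUV/RGB format conversion).

- libswscale is a library that mainly processes raw picture pixel data.

- It can convert between pixel formats and resize (stretch) images.

- For an introduction to using libswscale, see the article 最简单的基于FFmpeg的libswscale的示例(YUV转RGB) ("The simplest FFmpeg-based libswscale example (YUV to RGB)") on 雷霄骅 (Lei Xiaohua)'s CSDN blog.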

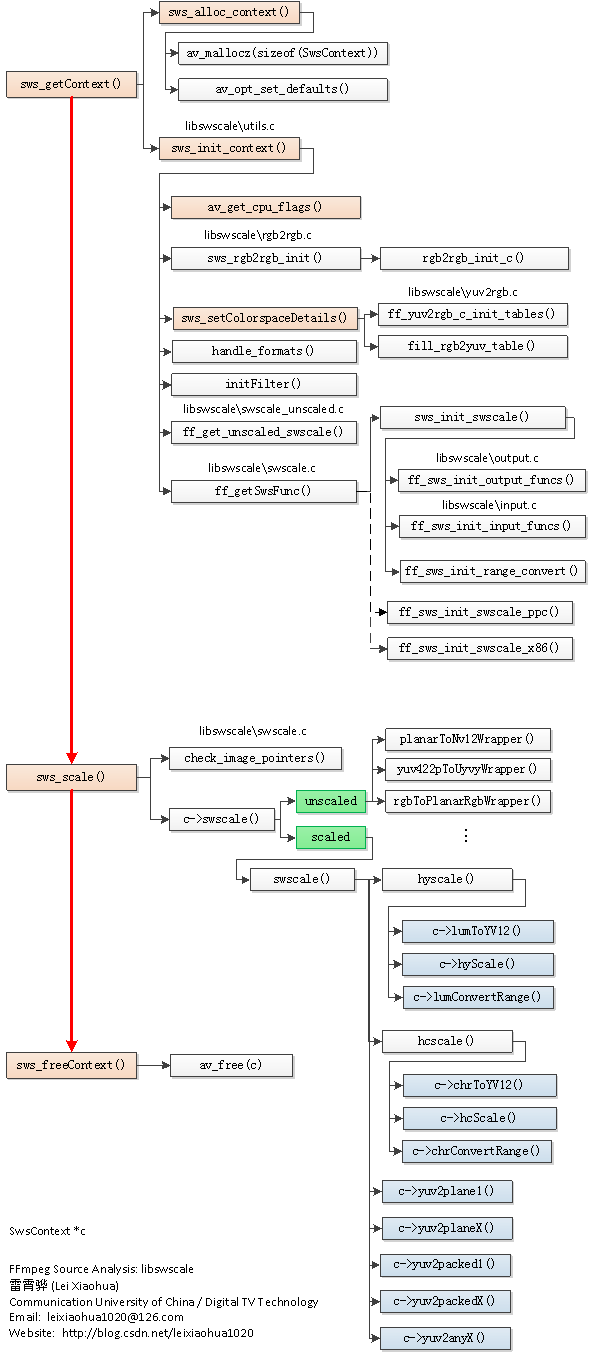

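- libswscale has only a few commonly used functions; in most cases three are enough:

- sws_getContext(): allocates and initializes an SwsContext.

- sws_scale(): processes the image data.

- sws_freeContext(): frees an SwsContext.

- sws_getCachedContext() can be used in place of sws_getContext().

- sws_getContext() is the function that initializes an SwsContext.

- The declaration of sws_getContext() is in libswscale\swscale.h, as shown below.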

/**

* Allocate and return an SwsContext. You need it to perform

* scaling/conversion operations using sws_scale().

*

* @param srcW the width of the source image

* @param srcH the height of the source image

* @param srcFormat the source image format

* @param dstW the width of the destination image

* @param dstH the height of the destination image

* @param dstFormat the destination image format

* @param flags specify which algorithm and options to use for rescaling

* @param param extra parameters to tune the used scaler

* For SWS_BICUBIC param[0] and [1] tune the shape of the basis

* function, param[0] tunes f(1) and param[1] f´(1)

* For SWS_GAUSS param[0] tunes the exponent and thus cutoff

* frequency

* For SWS_LANCZOS param[0] tunes the width of the window function

* @return a pointer to an allocated context, or NULL in case of error

* @note this function is to be removed after a saner alternative is

* written

*/

struct SwsContext *sws_getContext(int srcW, int srcH, enum AVPixelFormat srcFormat,

int dstW, int dstH, enum AVPixelFormat dstFormat,

int flags, SwsFilter *srcFilter,

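SwsFilter *dstFilter, const double *param);

- The function takes the following parameters:

- srcW: width of the source image

- srcH: height of the source image

- srcFormat: pixel format of the source image

- dstW: width of the destination image

- dstH: height of the destination image

- dstFormat: pixel format of the destination image

- flags: selects the algorithm used for scaling

- srcFilter, dstFilter: optional filters for the source and destination; when NULL is passed, sws_init_context() substitutes an empty filter

- param: extra parameters that tune the chosen scaler (see the comment above)

- On success it returns the newly created SwsContext; otherwise it returns NULL.

- The definition of sws_getContext() is in libswscale\utils.c, as shown below.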

SwsContext *sws_getContext(int srcW, int srcH, enum AVPixelFormat srcFormat,

int dstW, int dstH, enum AVPixelFormat dstFormat,

int flags, SwsFilter *srcFilter,

SwsFilter *dstFilter, const double *param)

{

SwsContext *c;

c = sws_alloc_set_opts(srcW, srcH, srcFormat,

dstW, dstH, dstFormat,

flags, param);

if (!c)

return NULL;

if (sws_init_context(c, srcFilter, dstFilter) < 0) {

sws_freeContext(c);

return NULL;

}

return c;

}

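- The definition of sws_getContext() shows that it first calls sws_alloc_set_opts(). That function wraps the sws_alloc_context() call that sws_getContext() previously made directly; the purpose is unchanged: allocate memory for an SwsContext.

- sws_alloc_set_opts() then assigns the source and destination width, height, and pixel format, together with the flags, to the corresponding fields of that SwsContext. Finally, sws_getContext() calls sws_init_context() to complete the initialization.

- The following sections look at sws_alloc_set_opts(), sws_alloc_context(), and sws_init_context() in turn.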
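The allocate, fill, initialize sequence described above (with cleanup when initialization fails) can be sketched in plain C without FFmpeg. Everything here, including DemoContext and the demo_* functions, is a hypothetical stand-in for illustration; it mirrors only the control flow of sws_getContext(), not its real fields or checks.

```c
#include <stdlib.h>

/* Hypothetical miniature of a scaler context; not the real SwsContext. */
typedef struct DemoContext {
    int srcW, srcH;
    int dstW, dstH;
    int flags;
    int initialized;
} DemoContext;

/* Phase 1: allocate a zeroed context and copy the caller's options into it. */
static DemoContext *demo_alloc_set_opts(int srcW, int srcH,
                                        int dstW, int dstH, int flags)
{
    DemoContext *c = calloc(1, sizeof(*c));
    if (!c)
        return NULL;
    c->srcW  = srcW;
    c->srcH  = srcH;
    c->dstW  = dstW;
    c->dstH  = dstH;
    c->flags = flags;
    return c;
}

/* Phase 2: validate the options; returns 0 on success, negative on error. */
static int demo_init_context(DemoContext *c)
{
    if (c->srcW < 1 || c->srcH < 1 || c->dstW < 1 || c->dstH < 1)
        return -1;
    c->initialized = 1;
    return 0;
}

static void demo_free_context(DemoContext *c)
{
    free(c);
}

/* Convenience wrapper: both phases, freeing the context if phase 2 fails. */
static DemoContext *demo_get_context(int srcW, int srcH,
                                     int dstW, int dstH, int flags)
{
    DemoContext *c = demo_alloc_set_opts(srcW, srcH, dstW, dstH, flags);
    if (!c)
        return NULL;
    if (demo_init_context(c) < 0) {
        demo_free_context(c);
        return NULL;
    }
    return c;
}
```

Splitting the work into two phases lets a caller change additional options between allocation and initialization, which is the reason the comment on sws_alloc_set_opts() gives for its existence.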

sws_alloc_set_opts

/**

* Allocate and return an SwsContext.

* This is like sws_getContext() but does not perform the init step, allowing

* the user to set additional AVOptions.

*

* @see sws_getContext()

*/

struct SwsContext *sws_alloc_set_opts(int srcW, int srcH, enum AVPixelFormat srcFormat,

int dstW, int dstH, enum AVPixelFormat dstFormat,

int flags, const double *param);

SwsContext *sws_alloc_set_opts(int srcW, int srcH, enum AVPixelFormat srcFormat,

int dstW, int dstH, enum AVPixelFormat dstFormat,

int flags, const double *param)

{

SwsContext *c;

if (!(c = sws_alloc_context()))

return NULL;

c->flags = flags;

c->srcW = srcW;

c->srcH = srcH;

c->dstW = dstW;

c->dstH = dstH;

c->srcFormat = srcFormat;

c->dstFormat = dstFormat;

if (param) {

c->param[0] = param[0];

c->param[1] = param[1];

}

return c;

}

sws_alloc_context()

- sws_alloc_context() is an FFmpeg API that allocates memory for an SwsContext. Its declaration is shown below.

/**

* Allocate an empty SwsContext. This must be filled and passed to

* sws_init_context(). For filling see AVOptions, options.c and

* sws_setColorspaceDetails().

*/

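struct SwsContext *sws_alloc_context(void);

- The definition of sws_alloc_context() is in libswscale\utils.c, as shown below.

- The code shows that sws_alloc_context() first calls av_mallocz() to allocate a zeroed block of memory for the SwsContext structure;

- it then sets the structure's AVClass and fills the structure's fields with default values through av_opt_set_defaults().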

SwsContext *sws_alloc_context(void)

{

SwsContext *c = av_mallocz(sizeof(SwsContext));

av_assert0(offsetof(SwsContext, redDither) + DITHER32_INT == offsetof(SwsContext, dither32));

if (c) {

c->av_class = &ff_sws_context_class;

av_opt_set_defaults(c);

atomic_init(&c->stride_unaligned_warned, 0);

atomic_init(&c->data_unaligned_warned, 0);

}

return c;

}

sws_init_context

- sws_init_context() is an FFmpeg API that initializes an SwsContext.

/**

* Initialize the swscaler context sws_context.

*

* @return zero or positive value on success, a negative value on

* error

*/

av_warn_unused_result

int sws_init_context(struct SwsContext *sws_context, SwsFilter *srcFilter, SwsFilter *dstFilter);

- The definition of sws_init_context() is very long. It is in libswscale\utils.c, as shown below.

av_cold int sws_init_context(SwsContext *c, SwsFilter *srcFilter,

SwsFilter *dstFilter)

{

int i;

int usesVFilter, usesHFilter;

int unscaled;

SwsFilter dummyFilter = { NULL, NULL, NULL, NULL };

int srcW = c->srcW;

int srcH = c->srcH;

int dstW = c->dstW;

int dstH = c->dstH;

int dst_stride = FFALIGN(dstW * sizeof(int16_t) + 66, 16);

int flags, cpu_flags;

enum AVPixelFormat srcFormat = c->srcFormat;

enum AVPixelFormat dstFormat = c->dstFormat;

const AVPixFmtDescriptor *desc_src;

const AVPixFmtDescriptor *desc_dst;

int ret = 0;

enum AVPixelFormat tmpFmt;

static const float float_mult = 1.0f / 255.0f;

static AVOnce rgb2rgb_once = AV_ONCE_INIT;

if (c->nb_threads != 1) {

ret = context_init_threaded(c, srcFilter, dstFilter);

if (ret < 0 || c->nb_threads > 1)

return ret;

// threading disabled in this build, init as single-threaded

}

cpu_flags = av_get_cpu_flags();

flags = c->flags;

emms_c();

if (ff_thread_once(&rgb2rgb_once, ff_sws_rgb2rgb_init) != 0)

return AVERROR_UNKNOWN;

unscaled = (srcW == dstW && srcH == dstH);

c->srcRange |= handle_jpeg(&c->srcFormat);

c->dstRange |= handle_jpeg(&c->dstFormat);

if(srcFormat!=c->srcFormat || dstFormat!=c->dstFormat)

av_log(c, AV_LOG_WARNING, "deprecated pixel format used, make sure you did set range correctly\n");

if (!c->contrast && !c->saturation && !c->dstFormatBpp)

sws_setColorspaceDetails(c, ff_yuv2rgb_coeffs[SWS_CS_DEFAULT], c->srcRange,

ff_yuv2rgb_coeffs[SWS_CS_DEFAULT],

c->dstRange, 0, 1 << 16, 1 << 16);

handle_formats(c);

srcFormat = c->srcFormat;

dstFormat = c->dstFormat;

desc_src = av_pix_fmt_desc_get(srcFormat);

desc_dst = av_pix_fmt_desc_get(dstFormat);

// If the source has no alpha then disable alpha blendaway

if (c->src0Alpha)

c->alphablend = SWS_ALPHA_BLEND_NONE;

if (!(unscaled && sws_isSupportedEndiannessConversion(srcFormat) &&

av_pix_fmt_swap_endianness(srcFormat) == dstFormat)) {

if (!sws_isSupportedInput(srcFormat)) {

av_log(c, AV_LOG_ERROR, "%s is not supported as input pixel format\n",

av_get_pix_fmt_name(srcFormat));

return AVERROR(EINVAL);

}

if (!sws_isSupportedOutput(dstFormat)) {

av_log(c, AV_LOG_ERROR, "%s is not supported as output pixel format\n",

av_get_pix_fmt_name(dstFormat));

return AVERROR(EINVAL);

}

}

av_assert2(desc_src && desc_dst);

i = flags & (SWS_POINT |

SWS_AREA |

SWS_BILINEAR |

SWS_FAST_BILINEAR |

SWS_BICUBIC |

SWS_X |

SWS_GAUSS |

SWS_LANCZOS |

SWS_SINC |

SWS_SPLINE |

SWS_BICUBLIN);

/* provide a default scaler if not set by caller */

if (!i) {

if (dstW < srcW && dstH < srcH)

flags |= SWS_BICUBIC;

else if (dstW > srcW && dstH > srcH)

flags |= SWS_BICUBIC;

else

flags |= SWS_BICUBIC;

c->flags = flags;

} else if (i & (i - 1)) {

av_log(c, AV_LOG_ERROR,

"Exactly one scaler algorithm must be chosen, got %X\n", i);

return AVERROR(EINVAL);

}

/* sanity check */

if (srcW < 1 || srcH < 1 || dstW < 1 || dstH < 1) {

/* FIXME check if these are enough and try to lower them after

* fixing the relevant parts of the code */

av_log(c, AV_LOG_ERROR, "%dx%d -> %dx%d is invalid scaling dimension\n",

srcW, srcH, dstW, dstH);

return AVERROR(EINVAL);

}

if (flags & SWS_FAST_BILINEAR) {

if (srcW < 8 || dstW < 8) {

flags ^= SWS_FAST_BILINEAR | SWS_BILINEAR;

c->flags = flags;

}

}

if (!dstFilter)

dstFilter = &dummyFilter;

if (!srcFilter)

srcFilter = &dummyFilter;

c->lumXInc = (((int64_t)srcW << 16) + (dstW >> 1)) / dstW;

c->lumYInc = (((int64_t)srcH << 16) + (dstH >> 1)) / dstH;

c->dstFormatBpp = av_get_bits_per_pixel(desc_dst);

c->srcFormatBpp = av_get_bits_per_pixel(desc_src);

c->vRounder = 4 * 0x0001000100010001ULL;

usesVFilter = (srcFilter->lumV && srcFilter->lumV->length > 1) ||

(srcFilter->chrV && srcFilter->chrV->length > 1) ||

(dstFilter->lumV && dstFilter->lumV->length > 1) ||

(dstFilter->chrV && dstFilter->chrV->length > 1);

usesHFilter = (srcFilter->lumH && srcFilter->lumH->length > 1) ||

(srcFilter->chrH && srcFilter->chrH->length > 1) ||

(dstFilter->lumH && dstFilter->lumH->length > 1) ||

(dstFilter->chrH && dstFilter->chrH->length > 1);

av_pix_fmt_get_chroma_sub_sample(srcFormat, &c->chrSrcHSubSample, &c->chrSrcVSubSample);

av_pix_fmt_get_chroma_sub_sample(dstFormat, &c->chrDstHSubSample, &c->chrDstVSubSample);

c->dst_slice_align = 1 << c->chrDstVSubSample;

if (isAnyRGB(dstFormat) && !(flags&SWS_FULL_CHR_H_INT)) {

if (dstW&1) {

av_log(c, AV_LOG_DEBUG, "Forcing full internal H chroma due to odd output size\n");

flags |= SWS_FULL_CHR_H_INT;

c->flags = flags;

}

if ( c->chrSrcHSubSample == 0

&& c->chrSrcVSubSample == 0

&& c->dither != SWS_DITHER_BAYER //SWS_FULL_CHR_H_INT is currently not supported with SWS_DITHER_BAYER

&& !(c->flags & SWS_FAST_BILINEAR)

) {

av_log(c, AV_LOG_DEBUG, "Forcing full internal H chroma due to input having non subsampled chroma\n");

flags |= SWS_FULL_CHR_H_INT;

c->flags = flags;

}

}

if (c->dither == SWS_DITHER_AUTO) {

if (flags & SWS_ERROR_DIFFUSION)

c->dither = SWS_DITHER_ED;

}

if(dstFormat == AV_PIX_FMT_BGR4_BYTE ||

dstFormat == AV_PIX_FMT_RGB4_BYTE ||

dstFormat == AV_PIX_FMT_BGR8 ||

dstFormat == AV_PIX_FMT_RGB8) {

if (c->dither == SWS_DITHER_AUTO)

c->dither = (flags & SWS_FULL_CHR_H_INT) ? SWS_DITHER_ED : SWS_DITHER_BAYER;

if (!(flags & SWS_FULL_CHR_H_INT)) {

if (c->dither == SWS_DITHER_ED || c->dither == SWS_DITHER_A_DITHER || c->dither == SWS_DITHER_X_DITHER || c->dither == SWS_DITHER_NONE) {

av_log(c, AV_LOG_DEBUG,

"Desired dithering only supported in full chroma interpolation for destination format '%s'\n",

av_get_pix_fmt_name(dstFormat));

flags |= SWS_FULL_CHR_H_INT;

c->flags = flags;

}

}

if (flags & SWS_FULL_CHR_H_INT) {

if (c->dither == SWS_DITHER_BAYER) {

av_log(c, AV_LOG_DEBUG,

"Ordered dither is not supported in full chroma interpolation for destination format '%s'\n",

av_get_pix_fmt_name(dstFormat));

c->dither = SWS_DITHER_ED;

}

}

}

if (isPlanarRGB(dstFormat)) {

if (!(flags & SWS_FULL_CHR_H_INT)) {

av_log(c, AV_LOG_DEBUG,

"%s output is not supported with half chroma resolution, switching to full\n",

av_get_pix_fmt_name(dstFormat));

flags |= SWS_FULL_CHR_H_INT;

c->flags = flags;

}

}

/* reuse chroma for 2 pixels RGB/BGR unless user wants full

* chroma interpolation */

if (flags & SWS_FULL_CHR_H_INT &&

isAnyRGB(dstFormat) &&

!isPlanarRGB(dstFormat) &&

dstFormat != AV_PIX_FMT_RGBA64LE &&

dstFormat != AV_PIX_FMT_RGBA64BE &&

dstFormat != AV_PIX_FMT_BGRA64LE &&

dstFormat != AV_PIX_FMT_BGRA64BE &&

dstFormat != AV_PIX_FMT_RGB48LE &&

dstFormat != AV_PIX_FMT_RGB48BE &&

dstFormat != AV_PIX_FMT_BGR48LE &&

dstFormat != AV_PIX_FMT_BGR48BE &&

dstFormat != AV_PIX_FMT_RGBA &&

dstFormat != AV_PIX_FMT_ARGB &&

dstFormat != AV_PIX_FMT_BGRA &&

dstFormat != AV_PIX_FMT_ABGR &&

dstFormat != AV_PIX_FMT_RGB24 &&

dstFormat != AV_PIX_FMT_BGR24 &&

dstFormat != AV_PIX_FMT_BGR4_BYTE &&

dstFormat != AV_PIX_FMT_RGB4_BYTE &&

dstFormat != AV_PIX_FMT_BGR8 &&

dstFormat != AV_PIX_FMT_RGB8

) {

av_log(c, AV_LOG_WARNING,

"full chroma interpolation for destination format '%s' not yet implemented\n",

av_get_pix_fmt_name(dstFormat));

flags &= ~SWS_FULL_CHR_H_INT;

c->flags = flags;

}

if (isAnyRGB(dstFormat) && !(flags & SWS_FULL_CHR_H_INT))

c->chrDstHSubSample = 1;

// drop some chroma lines if the user wants it

c->vChrDrop = (flags & SWS_SRC_V_CHR_DROP_MASK) >>

SWS_SRC_V_CHR_DROP_SHIFT;

c->chrSrcVSubSample += c->vChrDrop;

/* drop every other pixel for chroma calculation unless user

* wants full chroma */

if (isAnyRGB(srcFormat) && !(flags & SWS_FULL_CHR_H_INP) &&

srcFormat != AV_PIX_FMT_RGB8 && srcFormat != AV_PIX_FMT_BGR8 &&

srcFormat != AV_PIX_FMT_RGB4 && srcFormat != AV_PIX_FMT_BGR4 &&

srcFormat != AV_PIX_FMT_RGB4_BYTE && srcFormat != AV_PIX_FMT_BGR4_BYTE &&

srcFormat != AV_PIX_FMT_GBRP9BE && srcFormat != AV_PIX_FMT_GBRP9LE &&

srcFormat != AV_PIX_FMT_GBRP10BE && srcFormat != AV_PIX_FMT_GBRP10LE &&

srcFormat != AV_PIX_FMT_GBRAP10BE && srcFormat != AV_PIX_FMT_GBRAP10LE &&

srcFormat != AV_PIX_FMT_GBRP12BE && srcFormat != AV_PIX_FMT_GBRP12LE &&

srcFormat != AV_PIX_FMT_GBRAP12BE && srcFormat != AV_PIX_FMT_GBRAP12LE &&

srcFormat != AV_PIX_FMT_GBRP14BE && srcFormat != AV_PIX_FMT_GBRP14LE &&

srcFormat != AV_PIX_FMT_GBRP16BE && srcFormat != AV_PIX_FMT_GBRP16LE &&

srcFormat != AV_PIX_FMT_GBRAP16BE && srcFormat != AV_PIX_FMT_GBRAP16LE &&

srcFormat != AV_PIX_FMT_GBRPF32BE && srcFormat != AV_PIX_FMT_GBRPF32LE &&

srcFormat != AV_PIX_FMT_GBRAPF32BE && srcFormat != AV_PIX_FMT_GBRAPF32LE &&

((dstW >> c->chrDstHSubSample) <= (srcW >> 1) ||

(flags & SWS_FAST_BILINEAR)))

c->chrSrcHSubSample = 1;

// Note the AV_CEIL_RSHIFT is so that we always round toward +inf.

c->chrSrcW = AV_CEIL_RSHIFT(srcW, c->chrSrcHSubSample);

c->chrSrcH = AV_CEIL_RSHIFT(srcH, c->chrSrcVSubSample);

c->chrDstW = AV_CEIL_RSHIFT(dstW, c->chrDstHSubSample);

c->chrDstH = AV_CEIL_RSHIFT(dstH, c->chrDstVSubSample);

if (!FF_ALLOCZ_TYPED_ARRAY(c->formatConvBuffer, FFALIGN(srcW * 2 + 78, 16) * 2))

goto nomem;

c->frame_src = av_frame_alloc();

c->frame_dst = av_frame_alloc();

if (!c->frame_src || !c->frame_dst)

goto nomem;

c->srcBpc = desc_src->comp[0].depth;

if (c->srcBpc < 8)

c->srcBpc = 8;

c->dstBpc = desc_dst->comp[0].depth;

if (c->dstBpc < 8)

c->dstBpc = 8;

if (isAnyRGB(srcFormat) || srcFormat == AV_PIX_FMT_PAL8)

c->srcBpc = 16;

if (c->dstBpc == 16)

dst_stride <<= 1;

if (INLINE_MMXEXT(cpu_flags) && c->srcBpc == 8 && c->dstBpc <= 14) {

c->canMMXEXTBeUsed = dstW >= srcW && (dstW & 31) == 0 &&

c->chrDstW >= c->chrSrcW &&

(srcW & 15) == 0;

if (!c->canMMXEXTBeUsed && dstW >= srcW && c->chrDstW >= c->chrSrcW && (srcW & 15) == 0

&& (flags & SWS_FAST_BILINEAR)) {

if (flags & SWS_PRINT_INFO)

av_log(c, AV_LOG_INFO,

"output width is not a multiple of 32 -> no MMXEXT scaler\n");

}

if (usesHFilter || isNBPS(c->srcFormat) || is16BPS(c->srcFormat) || isAnyRGB(c->srcFormat))

c->canMMXEXTBeUsed = 0;

} else

c->canMMXEXTBeUsed = 0;

c->chrXInc = (((int64_t)c->chrSrcW << 16) + (c->chrDstW >> 1)) / c->chrDstW;

c->chrYInc = (((int64_t)c->chrSrcH << 16) + (c->chrDstH >> 1)) / c->chrDstH;

/* Match pixel 0 of the src to pixel 0 of dst and match pixel n-2 of src

* to pixel n-2 of dst, but only for the FAST_BILINEAR mode otherwise do

* correct scaling.

* n-2 is the last chrominance sample available.

* This is not perfect, but no one should notice the difference, the more

* correct variant would be like the vertical one, but that would require

* some special code for the first and last pixel */

if (flags & SWS_FAST_BILINEAR) {

if (c->canMMXEXTBeUsed) {

c->lumXInc += 20;

c->chrXInc += 20;

}

// we don't use the x86 asm scaler if MMX is available

else if (INLINE_MMX(cpu_flags) && c->dstBpc <= 14) {

c->lumXInc = ((int64_t)(srcW - 2) << 16) / (dstW - 2) - 20;

c->chrXInc = ((int64_t)(c->chrSrcW - 2) << 16) / (c->chrDstW - 2) - 20;

}

}

// hardcoded for now

c->gamma_value = 2.2;

tmpFmt = AV_PIX_FMT_RGBA64LE;

if (!unscaled && c->gamma_flag && (srcFormat != tmpFmt || dstFormat != tmpFmt)) {

SwsContext *c2;

c->cascaded_context[0] = NULL;

ret = av_image_alloc(c->cascaded_tmp, c->cascaded_tmpStride,

srcW, srcH, tmpFmt, 64);

if (ret < 0)

return ret;

c->cascaded_context[0] = sws_getContext(srcW, srcH, srcFormat,

srcW, srcH, tmpFmt,

flags, NULL, NULL, c->param);

if (!c->cascaded_context[0]) {

return AVERROR(ENOMEM);

}

c->cascaded_context[1] = sws_getContext(srcW, srcH, tmpFmt,

dstW, dstH, tmpFmt,

flags, srcFilter, dstFilter, c->param);

if (!c->cascaded_context[1])

return AVERROR(ENOMEM);

c2 = c->cascaded_context[1];

c2->is_internal_gamma = 1;

c2->gamma = alloc_gamma_tbl( c->gamma_value);

c2->inv_gamma = alloc_gamma_tbl(1.f/c->gamma_value);

if (!c2->gamma || !c2->inv_gamma)

return AVERROR(ENOMEM);

// is_internal_flag is set after creating the context

// to properly create the gamma convert FilterDescriptor

// we have to re-initialize it

ff_free_filters(c2);

if ((ret = ff_init_filters(c2)) < 0) {

sws_freeContext(c2);

c->cascaded_context[1] = NULL;

return ret;

}

c->cascaded_context[2] = NULL;

if (dstFormat != tmpFmt) {

ret = av_image_alloc(c->cascaded1_tmp, c->cascaded1_tmpStride,

dstW, dstH, tmpFmt, 64);

if (ret < 0)

return ret;

c->cascaded_context[2] = sws_getContext(dstW, dstH, tmpFmt,

dstW, dstH, dstFormat,

flags, NULL, NULL, c->param);

if (!c->cascaded_context[2])

return AVERROR(ENOMEM);

}

return 0;

}

if (isBayer(srcFormat)) {

if (!unscaled ||

(dstFormat != AV_PIX_FMT_RGB24 && dstFormat != AV_PIX_FMT_YUV420P &&

dstFormat != AV_PIX_FMT_RGB48)) {

enum AVPixelFormat tmpFormat = isBayer16BPS(srcFormat) ? AV_PIX_FMT_RGB48 : AV_PIX_FMT_RGB24;

ret = av_image_alloc(c->cascaded_tmp, c->cascaded_tmpStride,

srcW, srcH, tmpFormat, 64);

if (ret < 0)

return ret;

c->cascaded_context[0] = sws_getContext(srcW, srcH, srcFormat,

srcW, srcH, tmpFormat,

flags, srcFilter, NULL, c->param);

if (!c->cascaded_context[0])

return AVERROR(ENOMEM);

c->cascaded_context[1] = sws_getContext(srcW, srcH, tmpFormat,

dstW, dstH, dstFormat,

flags, NULL, dstFilter, c->param);

if (!c->cascaded_context[1])

return AVERROR(ENOMEM);

return 0;

}

}

if (unscaled && c->srcBpc == 8 && dstFormat == AV_PIX_FMT_GRAYF32){

for (i = 0; i < 256; ++i){

c->uint2float_lut[i] = (float)i * float_mult;

}

}

// float will be converted to uint16_t

if ((srcFormat == AV_PIX_FMT_GRAYF32BE || srcFormat == AV_PIX_FMT_GRAYF32LE) &&

(!unscaled || unscaled && dstFormat != srcFormat && (srcFormat != AV_PIX_FMT_GRAYF32 ||

dstFormat != AV_PIX_FMT_GRAY8))){

c->srcBpc = 16;

}

if (CONFIG_SWSCALE_ALPHA && isALPHA(srcFormat) && !isALPHA(dstFormat)) {

enum AVPixelFormat tmpFormat = alphaless_fmt(srcFormat);

if (tmpFormat != AV_PIX_FMT_NONE && c->alphablend != SWS_ALPHA_BLEND_NONE) {

if (!unscaled ||

dstFormat != tmpFormat ||

usesHFilter || usesVFilter ||

c->srcRange != c->dstRange

) {

c->cascaded_mainindex = 1;

ret = av_image_alloc(c->cascaded_tmp, c->cascaded_tmpStride,

srcW, srcH, tmpFormat, 64);

if (ret < 0)

return ret;

c->cascaded_context[0] = sws_alloc_set_opts(srcW, srcH, srcFormat,

srcW, srcH, tmpFormat,

flags, c->param);

if (!c->cascaded_context[0])

return AVERROR(EINVAL);

c->cascaded_context[0]->alphablend = c->alphablend;

ret = sws_init_context(c->cascaded_context[0], NULL , NULL);

if (ret < 0)

return ret;

c->cascaded_context[1] = sws_alloc_set_opts(srcW, srcH, tmpFormat,

dstW, dstH, dstFormat,

flags, c->param);

if (!c->cascaded_context[1])

return AVERROR(EINVAL);

c->cascaded_context[1]->srcRange = c->srcRange;

c->cascaded_context[1]->dstRange = c->dstRange;

ret = sws_init_context(c->cascaded_context[1], srcFilter , dstFilter);

if (ret < 0)

return ret;

return 0;

}

}

}

#if HAVE_MMAP && HAVE_MPROTECT && defined(MAP_ANONYMOUS)

#define USE_MMAP 1

#else

#define USE_MMAP 0

#endif

/* precalculate horizontal scaler filter coefficients */

{

#if HAVE_MMXEXT_INLINE

// can't downscale !!!

if (c->canMMXEXTBeUsed && (flags & SWS_FAST_BILINEAR)) {

c->lumMmxextFilterCodeSize = ff_init_hscaler_mmxext(dstW, c->lumXInc, NULL,

NULL, NULL, 8);

c->chrMmxextFilterCodeSize = ff_init_hscaler_mmxext(c->chrDstW, c->chrXInc,

NULL, NULL, NULL, 4);

#if USE_MMAP

c->lumMmxextFilterCode = mmap(NULL, c->lumMmxextFilterCodeSize,

PROT_READ | PROT_WRITE,

MAP_PRIVATE | MAP_ANONYMOUS,

-1, 0);

c->chrMmxextFilterCode = mmap(NULL, c->chrMmxextFilterCodeSize,

PROT_READ | PROT_WRITE,

MAP_PRIVATE | MAP_ANONYMOUS,

-1, 0);

#elif HAVE_VIRTUALALLOC

c->lumMmxextFilterCode = VirtualAlloc(NULL,

c->lumMmxextFilterCodeSize,

MEM_COMMIT,

PAGE_EXECUTE_READWRITE);

c->chrMmxextFilterCode = VirtualAlloc(NULL,

c->chrMmxextFilterCodeSize,

MEM_COMMIT,

PAGE_EXECUTE_READWRITE);

#else

c->lumMmxextFilterCode = av_malloc(c->lumMmxextFilterCodeSize);

c->chrMmxextFilterCode = av_malloc(c->chrMmxextFilterCodeSize);

#endif

#ifdef MAP_ANONYMOUS

if (c->lumMmxextFilterCode == MAP_FAILED || c->chrMmxextFilterCode == MAP_FAILED)

#else

if (!c->lumMmxextFilterCode || !c->chrMmxextFilterCode)

#endif

{

av_log(c, AV_LOG_ERROR, "Failed to allocate MMX2FilterCode\n");

return AVERROR(ENOMEM);

}

if (!FF_ALLOCZ_TYPED_ARRAY(c->hLumFilter, dstW / 8 + 8) ||

!FF_ALLOCZ_TYPED_ARRAY(c->hChrFilter, c->chrDstW / 4 + 8) ||

!FF_ALLOCZ_TYPED_ARRAY(c->hLumFilterPos, dstW / 2 / 8 + 8) ||

!FF_ALLOCZ_TYPED_ARRAY(c->hChrFilterPos, c->chrDstW / 2 / 4 + 8))

goto nomem;

ff_init_hscaler_mmxext( dstW, c->lumXInc, c->lumMmxextFilterCode,

c->hLumFilter, (uint32_t*)c->hLumFilterPos, 8);

ff_init_hscaler_mmxext(c->chrDstW, c->chrXInc, c->chrMmxextFilterCode,

c->hChrFilter, (uint32_t*)c->hChrFilterPos, 4);

#if USE_MMAP

if ( mprotect(c->lumMmxextFilterCode, c->lumMmxextFilterCodeSize, PROT_EXEC | PROT_READ) == -1

|| mprotect(c->chrMmxextFilterCode, c->chrMmxextFilterCodeSize, PROT_EXEC | PROT_READ) == -1) {

av_log(c, AV_LOG_ERROR, "mprotect failed, cannot use fast bilinear scaler\n");

ret = AVERROR(EINVAL);

goto fail;

}

#endif

} else

#endif /* HAVE_MMXEXT_INLINE */

{

const int filterAlign = X86_MMX(cpu_flags) ? 4 :

PPC_ALTIVEC(cpu_flags) ? 8 :

have_neon(cpu_flags) ? 4 : 1;

if ((ret = initFilter(&c->hLumFilter, &c->hLumFilterPos,

&c->hLumFilterSize, c->lumXInc,

srcW, dstW, filterAlign, 1 << 14,

(flags & SWS_BICUBLIN) ? (flags | SWS_BICUBIC) : flags,

cpu_flags, srcFilter->lumH, dstFilter->lumH,

c->param,

get_local_pos(c, 0, 0, 0),

get_local_pos(c, 0, 0, 0))) < 0)

goto fail;

if (ff_shuffle_filter_coefficients(c, c->hLumFilterPos, c->hLumFilterSize, c->hLumFilter, dstW) < 0)

goto nomem;

if ((ret = initFilter(&c->hChrFilter, &c->hChrFilterPos,

&c->hChrFilterSize, c->chrXInc,

c->chrSrcW, c->chrDstW, filterAlign, 1 << 14,

(flags & SWS_BICUBLIN) ? (flags | SWS_BILINEAR) : flags,

cpu_flags, srcFilter->chrH, dstFilter->chrH,

c->param,

get_local_pos(c, c->chrSrcHSubSample, c->src_h_chr_pos, 0),

get_local_pos(c, c->chrDstHSubSample, c->dst_h_chr_pos, 0))) < 0)

goto fail;

if (ff_shuffle_filter_coefficients(c, c->hChrFilterPos, c->hChrFilterSize, c->hChrFilter, c->chrDstW) < 0)

goto nomem;

}

} // initialize horizontal stuff

/* precalculate vertical scaler filter coefficients */

{

const int filterAlign = X86_MMX(cpu_flags) ? 2 :

PPC_ALTIVEC(cpu_flags) ? 8 :

have_neon(cpu_flags) ? 2 : 1;

if ((ret = initFilter(&c->vLumFilter, &c->vLumFilterPos, &c->vLumFilterSize,

c->lumYInc, srcH, dstH, filterAlign, (1 << 12),

(flags & SWS_BICUBLIN) ? (flags | SWS_BICUBIC) : flags,

cpu_flags, srcFilter->lumV, dstFilter->lumV,

c->param,

get_local_pos(c, 0, 0, 1),

get_local_pos(c, 0, 0, 1))) < 0)

goto fail;

if ((ret = initFilter(&c->vChrFilter, &c->vChrFilterPos, &c->vChrFilterSize,

c->chrYInc, c->chrSrcH, c->chrDstH,

filterAlign, (1 << 12),

(flags & SWS_BICUBLIN) ? (flags | SWS_BILINEAR) : flags,

cpu_flags, srcFilter->chrV, dstFilter->chrV,

c->param,

get_local_pos(c, c->chrSrcVSubSample, c->src_v_chr_pos, 1),

get_local_pos(c, c->chrDstVSubSample, c->dst_v_chr_pos, 1))) < 0)

goto fail;

#if HAVE_ALTIVEC

if (!FF_ALLOC_TYPED_ARRAY(c->vYCoeffsBank, c->vLumFilterSize * c->dstH) ||

!FF_ALLOC_TYPED_ARRAY(c->vCCoeffsBank, c->vChrFilterSize * c->chrDstH))

goto nomem;

for (i = 0; i < c->vLumFilterSize * c->dstH; i++) {

int j;

short *p = (short *)&c->vYCoeffsBank[i];

for (j = 0; j < 8; j++)

p[j] = c->vLumFilter[i];

}

for (i = 0; i < c->vChrFilterSize * c->chrDstH; i++) {

int j;

short *p = (short *)&c->vCCoeffsBank[i];

for (j = 0; j < 8; j++)

p[j] = c->vChrFilter[i];

}

#endif

}

for (i = 0; i < 4; i++)

if (!FF_ALLOCZ_TYPED_ARRAY(c->dither_error[i], c->dstW + 2))

goto nomem;

c->needAlpha = (CONFIG_SWSCALE_ALPHA && isALPHA(c->srcFormat) && isALPHA(c->dstFormat)) ? 1 : 0;

// 64 / c->scalingBpp is the same as 16 / sizeof(scaling_intermediate)

c->uv_off = (dst_stride>>1) + 64 / (c->dstBpc &~ 7);

c->uv_offx2 = dst_stride + 16;

av_assert0(c->chrDstH <= dstH);

if (flags & SWS_PRINT_INFO) {

const char *scaler = NULL, *cpucaps;

for (i = 0; i < FF_ARRAY_ELEMS(scale_algorithms); i++) {

if (flags & scale_algorithms[i].flag) {

scaler = scale_algorithms[i].description;

break;

}

}

if (!scaler)

scaler = "ehh flags invalid?!";

av_log(c, AV_LOG_INFO, "%s scaler, from %s to %s%s ",

scaler,

av_get_pix_fmt_name(srcFormat),

#ifdef DITHER1XBPP

dstFormat == AV_PIX_FMT_BGR555 || dstFormat == AV_PIX_FMT_BGR565 ||

dstFormat == AV_PIX_FMT_RGB444BE || dstFormat == AV_PIX_FMT_RGB444LE ||

dstFormat == AV_PIX_FMT_BGR444BE || dstFormat == AV_PIX_FMT_BGR444LE ?

"dithered " : "",

#else

"",

#endif

av_get_pix_fmt_name(dstFormat));

if (INLINE_MMXEXT(cpu_flags))

cpucaps = "MMXEXT";

else if (INLINE_AMD3DNOW(cpu_flags))

cpucaps = "3DNOW";

else if (INLINE_MMX(cpu_flags))

cpucaps = "MMX";

else if (PPC_ALTIVEC(cpu_flags))

cpucaps = "AltiVec";

else

cpucaps = "C";

av_log(c, AV_LOG_INFO, "using %s\n", cpucaps);

av_log(c, AV_LOG_VERBOSE, "%dx%d -> %dx%d\n", srcW, srcH, dstW, dstH);

av_log(c, AV_LOG_DEBUG,

"lum srcW=%d srcH=%d dstW=%d dstH=%d xInc=%d yInc=%d\n",

c->srcW, c->srcH, c->dstW, c->dstH, c->lumXInc, c->lumYInc);

av_log(c, AV_LOG_DEBUG,

"chr srcW=%d srcH=%d dstW=%d dstH=%d xInc=%d yInc=%d\n",

c->chrSrcW, c->chrSrcH, c->chrDstW, c->chrDstH,

c->chrXInc, c->chrYInc);

}

/* alpha blend special case, note this has been split via cascaded contexts if its scaled */

if (unscaled && !usesHFilter && !usesVFilter &&

c->alphablend != SWS_ALPHA_BLEND_NONE &&

isALPHA(srcFormat) &&

(c->srcRange == c->dstRange || isAnyRGB(dstFormat)) &&

alphaless_fmt(srcFormat) == dstFormat

) {

c->convert_unscaled = ff_sws_alphablendaway;

if (flags & SWS_PRINT_INFO)

av_log(c, AV_LOG_INFO,

"using alpha blendaway %s -> %s special converter\n",

av_get_pix_fmt_name(srcFormat), av_get_pix_fmt_name(dstFormat));

return 0;

}

/* unscaled special cases */

if (unscaled && !usesHFilter && !usesVFilter &&

(c->srcRange == c->dstRange || isAnyRGB(dstFormat) ||

isFloat(srcFormat) || isFloat(dstFormat))){

ff_get_unscaled_swscale(c);

if (c->convert_unscaled) {

if (flags & SWS_PRINT_INFO)

av_log(c, AV_LOG_INFO,

"using unscaled %s -> %s special converter\n",

av_get_pix_fmt_name(srcFormat), av_get_pix_fmt_name(dstFormat));

return 0;

}

}

ff_sws_init_scale(c);

return ff_init_filters(c);

nomem:

ret = AVERROR(ENOMEM);

fail: // FIXME replace things by appropriate error codes

if (ret == RETCODE_USE_CASCADE) {

int tmpW = sqrt(srcW * (int64_t)dstW);

int tmpH = sqrt(srcH * (int64_t)dstH);

enum AVPixelFormat tmpFormat = AV_PIX_FMT_YUV420P;

if (isALPHA(srcFormat))

tmpFormat = AV_PIX_FMT_YUVA420P;

if (srcW*(int64_t)srcH <= 4LL*dstW*dstH)

return AVERROR(EINVAL);

ret = av_image_alloc(c->cascaded_tmp, c->cascaded_tmpStride,

tmpW, tmpH, tmpFormat, 64);

if (ret < 0)

return ret;

c->cascaded_context[0] = sws_getContext(srcW, srcH, srcFormat,

tmpW, tmpH, tmpFormat,

flags, srcFilter, NULL, c->param);

if (!c->cascaded_context[0])

return AVERROR(ENOMEM);

c->cascaded_context[1] = sws_getContext(tmpW, tmpH, tmpFormat,

dstW, dstH, dstFormat,

flags, NULL, dstFilter, c->param);

if (!c->cascaded_context[1])

return AVERROR(ENOMEM);

return 0;

}

return ret;

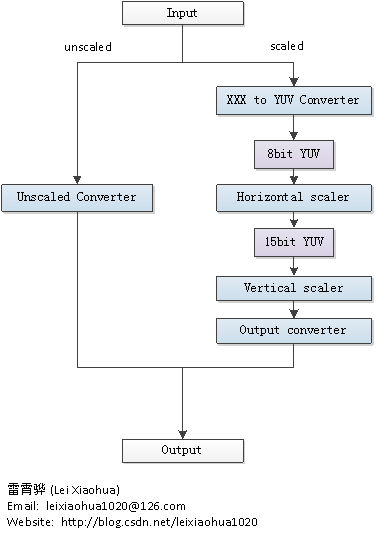

}- sws_init_context()除了对SwsContext中的各种变量进行赋值之外,主要按照顺序完成了以下一些工作:

- 1. 通过sws_rgb2rgb_init()初始化RGB转RGB(或者YUV转YUV)的函数(注意不包含RGB与YUV相互转换的函数)。sws_rgb2rgb_init函数被ff_sws_rgb2rgb_init取代,但是二者内部的函数实现是一样的

- 2. 通过判断输入输出图像的宽高来判断图像是否需要拉伸。如果图像需要拉伸,那么unscaled变量会被标记为1。

- 3. 通过sws_setColorspaceDetails()初始化颜色空间。

- 4. 一些输入参数的检测。例如:如果没有设置图像拉伸方法的话,默认设置为SWS_BICUBIC;如果输入和输出图像的宽高小于等于0的话,也会返回错误信息。

- 5. 初始化Filter。这一步根据拉伸方法的不同,初始化不同的Filter。

- 6. 如果flags中设置了“打印信息”选项SWS_PRINT_INFO,则输出信息。

- 7. 如果不需要拉伸的话,调用ff_get_unscaled_swscale()将特定的像素转换函数的指针赋值给SwsContext中的swscale指针。

- 8. 如果需要拉伸的话,调用ff_getSwsFunc()将通用的swscale()赋值给SwsContext中的swscale指针(这个地方有点绕,但是确实是这样的)。没有找到对应的代码进行论证 ff_getSwsFunc函数已被弃用
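Steps 2 and 4 above rest on two small tests: an equality check on the dimensions, and the `i & (i - 1)` trick that detects whether more than one algorithm bit is set. The sketch below reproduces just that logic in standalone C. The DEMO_* constants and demo_* functions are hypothetical stand-ins, not the real SWS_* values from swscale.h.

```c
/* Hypothetical stand-ins for the SWS_* algorithm bits. */
enum {
    DEMO_FAST_BILINEAR = 1 << 0,
    DEMO_BILINEAR      = 1 << 1,
    DEMO_BICUBIC       = 1 << 2,
    DEMO_ALGO_MASK     = DEMO_FAST_BILINEAR | DEMO_BILINEAR | DEMO_BICUBIC,
    DEMO_PRINT_INFO    = 1 << 12  /* an option bit that is not an algorithm */
};

/* Returns 1 when source and destination sizes match, i.e. no scaling. */
static int demo_is_unscaled(int srcW, int srcH, int dstW, int dstH)
{
    return srcW == dstW && srcH == dstH;
}

/*
 * Returns the flags with exactly one algorithm selected, or -1 when the
 * caller set more than one algorithm bit.
 */
static int demo_resolve_scaler(int flags)
{
    int algo = flags & DEMO_ALGO_MASK;

    if (!algo)
        return flags | DEMO_BICUBIC;  /* nothing chosen: fall back to bicubic */
    if (algo & (algo - 1))            /* non-zero only if 2+ bits are set */
        return -1;
    return flags;
}
```

Subtracting 1 clears the lowest set bit and sets every bit below it, so the AND is zero exactly when the value had a single bit set. That is why the listing can report "Exactly one scaler algorithm must be chosen" with one expression.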

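The implementation of these steps is described below.

1. Initializing the RGB-to-RGB (and YUV-to-YUV) conversion functions. Note that these do not include the functions that convert between RGB and YUV.

ff_sws_rgb2rgb_init()

- The definition of ff_sws_rgb2rgb_init() is in libswscale\rgb2rgb.c, as shown below.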

/*

* RGB15->RGB16 original by Strepto/Astral

* ported to gcc & bugfixed : A'rpi

* MMXEXT, 3DNOW optimization by Nick Kurshev

* 32-bit C version, and and&add trick by Michael Niedermayer

*/

av_cold void ff_sws_rgb2rgb_init(void)

{

rgb2rgb_init_c();

if (ARCH_AARCH64)

rgb2rgb_init_aarch64();

if (ARCH_X86)

rgb2rgb_init_x86();

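}

- The code of ff_sws_rgb2rgb_init() shows the initialization functions it calls:

- rgb2rgb_init_c() initializes the C versions of the RGB-to-RGB (and YUV-to-YUV) conversion functions.

- rgb2rgb_init_x86() initializes the x86 assembly versions of the RGB conversion functions. rgb2rgb_init_aarch64() is called when ARCH_AARCH64 is set and is not covered here.

- PS: one thing to note in libswscale is that many function names contain a suffix such as "_c", which means the function is written in C. Other implementations carry corresponding markers, such as "_mmx" and "sse2".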
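The pattern behind these init functions is a set of global function pointers. The C initializer always installs a portable version, and an architecture-specific initializer may then overwrite some pointers with faster versions. Below is a minimal sketch of that dispatch idea. The names (demo_swap_rb, demo_init, the cpu_has_fast_path argument) are hypothetical, and the "fast" variant is ordinary C standing in for an assembly routine.

```c
#include <stdint.h>

/* Portable version: swaps bytes 0 and 2 of every 3-byte pixel. */
static void demo_swap_rb_c(const uint8_t *src, uint8_t *dst, int src_size)
{
    for (int i = 0; i + 2 < src_size; i += 3) {
        uint8_t first = src[i];  /* saved so that src == dst also works */
        dst[i]     = src[i + 2];
        dst[i + 1] = src[i + 1];
        dst[i + 2] = first;
    }
}

/* Stand-in for an optimized version: same result, pointer-walking loop. */
static void demo_swap_rb_fast(const uint8_t *src, uint8_t *dst, int src_size)
{
    const uint8_t *end = src + src_size - src_size % 3;

    while (src < end) {
        uint8_t first = src[0];
        dst[0] = src[2];
        dst[1] = src[1];
        dst[2] = first;
        src += 3;
        dst += 3;
    }
}

/* Callers only ever use this pointer, never a concrete implementation. */
static void (*demo_swap_rb)(const uint8_t *src, uint8_t *dst, int src_size);

/* Install the C version first, then let a faster one override it. */
static void demo_init(int cpu_has_fast_path)
{
    demo_swap_rb = demo_swap_rb_c;
    if (cpu_has_fast_path)
        demo_swap_rb = demo_swap_rb_fast;
}
```

Because the C version is always installed first, every pointer is valid even on a CPU for which no optimized routine exists.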

rgb2rgb_init_c()

- First, look at rgb2rgb_init_c(), which initializes the C versions of the RGB conversion functions.

- Its definition is in libswscale\rgb2rgb_template.c, as shown below.

static av_cold void rgb2rgb_init_c(void)

{

rgb15to16 = rgb15to16_c;

rgb15tobgr24 = rgb15tobgr24_c;

rgb15to32 = rgb15to32_c;

rgb16tobgr24 = rgb16tobgr24_c;

rgb16to32 = rgb16to32_c;

rgb16to15 = rgb16to15_c;

rgb24tobgr16 = rgb24tobgr16_c;

rgb24tobgr15 = rgb24tobgr15_c;

rgb24tobgr32 = rgb24tobgr32_c;

rgb32to16 = rgb32to16_c;

rgb32to15 = rgb32to15_c;

rgb32tobgr24 = rgb32tobgr24_c;

rgb24to15 = rgb24to15_c;

rgb24to16 = rgb24to16_c;

rgb24tobgr24 = rgb24tobgr24_c;

#if HAVE_BIGENDIAN

shuffle_bytes_0321 = shuffle_bytes_2103_c;

shuffle_bytes_2103 = shuffle_bytes_0321_c;

#else

shuffle_bytes_0321 = shuffle_bytes_0321_c;

shuffle_bytes_2103 = shuffle_bytes_2103_c;

#endif

shuffle_bytes_1230 = shuffle_bytes_1230_c;

shuffle_bytes_3012 = shuffle_bytes_3012_c;

shuffle_bytes_3210 = shuffle_bytes_3210_c;

rgb32tobgr16 = rgb32tobgr16_c;

rgb32tobgr15 = rgb32tobgr15_c;

yv12toyuy2 = yv12toyuy2_c;

yv12touyvy = yv12touyvy_c;

yuv422ptoyuy2 = yuv422ptoyuy2_c;

yuv422ptouyvy = yuv422ptouyvy_c;

yuy2toyv12 = yuy2toyv12_c;

planar2x = planar2x_c;

ff_rgb24toyv12 = ff_rgb24toyv12_c;

interleaveBytes = interleaveBytes_c;

deinterleaveBytes = deinterleaveBytes_c;

vu9_to_vu12 = vu9_to_vu12_c;

yvu9_to_yuy2 = yvu9_to_yuy2_c;

uyvytoyuv420 = uyvytoyuv420_c;

uyvytoyuv422 = uyvytoyuv422_c;

yuyvtoyuv420 = yuyvtoyuv420_c;

yuyvtoyuv422 = yuyvtoyuv422_c;

}

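- As the code shows, running rgb2rgb_init_c() assigns the C versions of the image format conversion functions to the library's global function pointers.

- The definitions of a few of these conversion functions are examined below.

rgb24tobgr24_c()

- rgb24tobgr24_c() converts RGB24 to BGR24. Its definition is shown below. The code swaps the positions of the "R" and "B" bytes in every pixel, which is all that is needed to convert between the two formats.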

static inline void rgb24tobgr24_c(const uint8_t *src, uint8_t *dst, int src_size)

{

unsigned i;

for (i = 0; i < src_size; i += 3) {

register uint8_t x = src[i + 2];

dst[i + 1] = src[i + 1];

dst[i + 2] = src[i + 0];

dst[i + 0] = x;

}

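}

rgb24to16_c()

- rgb24to16_c() converts the RGB24 pixel format to RGB16. Each 3-byte pixel is packed into one 16-bit value that keeps 5, 6, and 5 bits of the three channels.

- The definition of the function is shown below.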

static inline void rgb24to16_c(const uint8_t *src, uint8_t *dst, int src_size)

{

uint16_t *d = (uint16_t *)dst;

const uint8_t *s = src;

const uint8_t *end = s + src_size;

while (s < end) {

const int r = *s++;

const int g = *s++;

const int b = *s++;

*d++ = (b >> 3) | ((g & 0xFC) << 3) | ((r & 0xF8) << 8);

}

}
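To make the bit packing in rgb24to16_c() concrete, the sketch below applies the same shifts and masks to a single pixel and adds the inverse operation. The function names are hypothetical, and the unpacking step (which fills the missing low bits by bit replication) is an assumption added for illustration; it is not taken from the listing.

```c
#include <stdint.h>

/* Pack 8-bit channels into 5-6-5 using the same expression as the listing. */
static uint16_t demo_pack_rgb565(uint8_t r, uint8_t g, uint8_t b)
{
    return (uint16_t)((b >> 3) |            /* top 5 bits -> bits 0..4   */
                      ((g & 0xFC) << 3) |   /* top 6 bits -> bits 5..10  */
                      ((r & 0xF8) << 8));   /* top 5 bits -> bits 11..15 */
}

/* Expand 5-6-5 back to 8 bits per channel (illustrative inverse). */
static void demo_unpack_rgb565(uint16_t p, uint8_t *r, uint8_t *g, uint8_t *b)
{
    uint8_t r5 = (p >> 11) & 0x1F;
    uint8_t g6 = (p >> 5) & 0x3F;
    uint8_t b5 = p & 0x1F;

    /* Replicate the high bits into the low bits so that full scale maps
     * back to 255 instead of 248 or 252. */
    *r = (uint8_t)((r5 << 3) | (r5 >> 2));
    *g = (uint8_t)((g6 << 2) | (g6 >> 4));
    *b = (uint8_t)((b5 << 3) | (b5 >> 2));
}
```

For example, pure red (255, 0, 0) packs to 0xF800, pure green to 0x07E0, and pure blue to 0x001F. The conversion is lossy: the low 3 bits of red and blue and the low 2 bits of green are discarded.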

yuyvtoyuv422_c()

- yuyvtoyuv422_c() converts packed YUYV to planar YUV422. Its definition is shown below.

static void yuyvtoyuv422_c(uint8_t *ydst, uint8_t *udst, uint8_t *vdst,

const uint8_t *src, int width, int height,

int lumStride, int chromStride, int srcStride)

{

int y;

const int chromWidth = AV_CEIL_RSHIFT(width, 1);

for (y = 0; y < height; y++) {

extract_even_c(src, ydst, width);

extract_odd2_c(src, udst, vdst, chromWidth);

src += srcStride;

ydst += lumStride;

udst += chromStride;

vdst += chromStride;

}

}

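- This function splits packed YUYV pixel data into three separate planes: Y, U and V.

- extract_even_c() copies the bytes at even offsets within a row, which are the "Y" samples of the YUYV format.

- extract_odd2_c() takes the bytes at odd offsets and distributes them alternately into two arrays.

- These are the "U" and "V" samples of the YUYV format.

- extract_even_c() is defined as follows.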

static void extract_even_c(const uint8_t *src, uint8_t *dst, int count)

{

dst += count;

src += count * 2;

count = -count;

while (count < 0) {

dst[count] = src[2 * count];

count++;

}

}
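- The FFmpeg helpers advance their pointers to the end of the row and then run a negative index up to zero, which can be hard to read at first. The sketch below does the same de-interleaving with plain forward indices. split_yuyv_row() is a hypothetical name used only in this note, and it assumes an even width (the real code rounds the chroma width up with AV_CEIL_RSHIFT).

```c
#include <assert.h>
#include <stdint.h>

/* Split one packed YUYV row (Y0 U0 Y1 V0 Y2 U1 Y3 V1 ...) into three planes.
 * width is the number of pixels and is assumed to be even in this sketch. */
static void split_yuyv_row(const uint8_t *src, uint8_t *y, uint8_t *u,
                           uint8_t *v, int width)
{
    assert(width >= 0 && width % 2 == 0);
    for (int i = 0; i < width; i++)        /* even byte offsets: luma */
        y[i] = src[2 * i];
    for (int i = 0; i < width / 2; i++) {  /* odd byte offsets: U, V, U, V ... */
        u[i] = src[4 * i + 1];
        v[i] = src[4 * i + 3];
    }
}
```

- The first loop corresponds to extract_even_c(); the second corresponds to extract_odd2_c(), which is listed next.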

- extract_odd2_c() is defined as follows.

static void extract_odd2_c(const uint8_t *src, uint8_t *dst0, uint8_t *dst1,

int count)

{

dst0 += count;

dst1 += count;

src += count * 4;

count = -count;

src++;

while (count < 0) {

dst0[count] = src[4 * count + 0];

dst1[count] = src[4 * count + 2];

count++;

}

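}

rgb2rgb_init_x86()

- rgb2rgb_init_x86() installs the x86 assembly versions of the RGB conversion routines. I am not very familiar with assembly, so I do not analyze it in detail; the code is listed only for comparison with rgb2rgb_init_c().

- Its code is located in libswscale\x86\rgb2rgb.c, as shown below.

- PS: all x86 assembly-related code lives in the x86 subdirectory of the libswscale directory.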

av_cold void rgb2rgb_init_x86(void)

{

int cpu_flags = av_get_cpu_flags();

#if HAVE_INLINE_ASM

if (INLINE_MMX(cpu_flags))

rgb2rgb_init_mmx();

if (INLINE_AMD3DNOW(cpu_flags))

rgb2rgb_init_3dnow();

if (INLINE_MMXEXT(cpu_flags))

rgb2rgb_init_mmxext();

if (INLINE_SSE2(cpu_flags))

rgb2rgb_init_sse2();

if (INLINE_AVX(cpu_flags))

rgb2rgb_init_avx();

#endif /* HAVE_INLINE_ASM */

if (EXTERNAL_MMXEXT(cpu_flags)) {

shuffle_bytes_2103 = ff_shuffle_bytes_2103_mmxext;

}

if (EXTERNAL_SSE2(cpu_flags)) {

#if ARCH_X86_64

uyvytoyuv422 = ff_uyvytoyuv422_sse2;

#endif

}

if (EXTERNAL_SSSE3(cpu_flags)) {

shuffle_bytes_0321 = ff_shuffle_bytes_0321_ssse3;

shuffle_bytes_2103 = ff_shuffle_bytes_2103_ssse3;

shuffle_bytes_1230 = ff_shuffle_bytes_1230_ssse3;

shuffle_bytes_3012 = ff_shuffle_bytes_3012_ssse3;

shuffle_bytes_3210 = ff_shuffle_bytes_3210_ssse3;

}

#if ARCH_X86_64

if (EXTERNAL_AVX2_FAST(cpu_flags)) {

shuffle_bytes_0321 = ff_shuffle_bytes_0321_avx2;

shuffle_bytes_2103 = ff_shuffle_bytes_2103_avx2;

shuffle_bytes_1230 = ff_shuffle_bytes_1230_avx2;

shuffle_bytes_3012 = ff_shuffle_bytes_3012_avx2;

shuffle_bytes_3210 = ff_shuffle_bytes_3210_avx2;

}

if (EXTERNAL_AVX(cpu_flags)) {

uyvytoyuv422 = ff_uyvytoyuv422_avx;

}

#endif

}

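- As the code shows, rgb2rgb_init_x86() first calls av_get_cpu_flags() to query the features the CPU supports and then, depending on those features, calls rgb2rgb_init_mmx(), rgb2rgb_init_3dnow(), rgb2rgb_init_mmxext(), rgb2rgb_init_sse2(), rgb2rgb_init_avx() and so on. After that it overrides individual function pointers such as shuffle_bytes_2103 and uyvytoyuv422 with the external MMXEXT, SSE2, SSSE3, AVX and AVX2 versions where the CPU supports them.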
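- The pattern behind rgb2rgb_init_c() and rgb2rgb_init_x86() is "install a portable default, then let better versions overwrite it". The sketch below is a minimal illustration under stated assumptions: the DEMO_CPU_* bits, demo_init() and the *_ssse3 / *_avx2 stand-ins are hypothetical (the stand-ins just forward to the C code), and the byte order used for shuffle_2103 (output bytes taken from input positions 2, 1, 0, 3) is my reading of the function name.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical CPU feature bits; FFmpeg's real ones are AV_CPU_FLAG_* values. */
enum { DEMO_CPU_SSSE3 = 1 << 0, DEMO_CPU_AVX2 = 1 << 1 };

typedef void (*shuffle_fn)(const uint8_t *src, uint8_t *dst, int src_size);

/* Portable reference version: swap bytes 0 and 2 of every 4-byte pixel. */
static void shuffle_2103_c(const uint8_t *src, uint8_t *dst, int src_size)
{
    assert(src_size % 4 == 0);
    for (int i = 0; i < src_size; i += 4) {
        uint8_t b0 = src[i], b2 = src[i + 2]; /* read first: src may equal dst */
        dst[i]     = b2;
        dst[i + 1] = src[i + 1];
        dst[i + 2] = b0;
        dst[i + 3] = src[i + 3];
    }
}

/* Stand-ins for optimized versions; here they simply forward to the C code. */
static void shuffle_2103_ssse3(const uint8_t *s, uint8_t *d, int n) { shuffle_2103_c(s, d, n); }
static void shuffle_2103_avx2(const uint8_t *s, uint8_t *d, int n)  { shuffle_2103_c(s, d, n); }

/* Global pointer that all callers go through. */
static shuffle_fn shuffle_2103;

static void demo_init(int cpu_flags)
{
    shuffle_2103 = shuffle_2103_c;        /* step 1: always-valid C fallback */
    if (cpu_flags & DEMO_CPU_SSSE3)       /* step 2: later checks override earlier ones */
        shuffle_2103 = shuffle_2103_ssse3;
    if (cpu_flags & DEMO_CPU_AVX2)
        shuffle_2103 = shuffle_2103_avx2;
}
```

- Because the checks run from the oldest instruction set to the newest, the last matching assignment wins, which is the same ordering the listing above uses for SSSE3 and AVX2.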

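2. Determine whether the image needs to be scaled

- This step mainly compares the width and height of the input and output images.

- The library uses a variable named unscaled to record the result; it is nonzero when the source and destination sizes are identical, that is, when no scaling is needed.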

- unscaled = (srcW == dstW && srcH == dstH);

3. Initialize the color space

- The color space is initialized by sws_setColorspaceDetails().

- sws_setColorspaceDetails() is a public FFmpeg API function. Its declaration is shown below:

/**

* @param dstRange flag indicating the while-black range of the output (1=jpeg / 0=mpeg)

* @param srcRange flag indicating the while-black range of the input (1=jpeg / 0=mpeg)

* @param table the yuv2rgb coefficients describing the output yuv space, normally ff_yuv2rgb_coeffs[x]

* @param inv_table the yuv2rgb coefficients describing the input yuv space, normally ff_yuv2rgb_coeffs[x]

* @param brightness 16.16 fixed point brightness correction

* @param contrast 16.16 fixed point contrast correction

* @param saturation 16.16 fixed point saturation correction

#if LIBSWSCALE_VERSION_MAJOR > 6

* @return negative error code on error, non negative otherwise

#else

* @return -1 if not supported

#endif

*/

int sws_setColorspaceDetails(struct SwsContext *c, const int inv_table[4],

int srcRange, const int table[4], int dstRange,

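int brightness, int contrast, int saturation);

- A brief explanation of the parameters:

- c: the SwsContext to configure.

- inv_table: the coefficient table describing the input YUV color space.

- srcRange: the value range of the input image ("1" means the JPEG convention, full range 0-255; "0" means the MPEG convention, limited range, 16-235 for luma).

- table: the coefficient table describing the output YUV color space.

- dstRange: the value range of the output image, encoded the same way as srcRange.

- brightness: not studied here; the header comment above describes it as a 16.16 fixed-point brightness correction.

- contrast: not studied here; a 16.16 fixed-point contrast correction according to the header comment.

- saturation: not studied here; a 16.16 fixed-point saturation correction according to the header comment.

- A return value of -1 means the setting failed (when LIBSWSCALE_VERSION_MAJOR > 6, the header comment documents a negative error code instead).

- The coefficient tables that describe a color space can be obtained with sws_getCoefficients().

- That function is covered in more detail later.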
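- Two of these parameter conventions are easy to show in isolation. The sketch below is not libswscale's internal arithmetic; it is the textbook luma mapping from limited range (16-235) to full range (0-255), plus a helper that produces 16.16 fixed-point values. Both function names are hypothetical. In 16.16 format the value 1.0 is 1 << 16, which matches the neutral "0, 1 << 16, 1 << 16" arguments that appear in the function body listed below.

```c
#include <assert.h>
#include <stdint.h>

/* Map limited-range ("MPEG", 16..235) luma to full range ("JPEG", 0..255). */
static uint8_t luma_limited_to_full(uint8_t y)
{
    int v = ((y - 16) * 255 + 109) / 219;  /* +109 rounds to nearest when v >= 0 */
    if (v < 0)   v = 0;                    /* values below 16 clip to black */
    if (v > 255) v = 255;                  /* values above 235 clip to white */
    return (uint8_t)v;
}

/* Convert a real-valued factor to 16.16 fixed point (1.0 becomes 1 << 16). */
static int to_fixed_16_16(double x)
{
    return (int)(x * 65536.0 + (x >= 0 ? 0.5 : -0.5));
}
```

- With srcRange = 0 and dstRange = 1 a conversion has to perform a stretch of this kind; with equal ranges no stretch is needed.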

- sws_setColorspaceDetails() is defined in libswscale\utils.c, as shown below.

int sws_setColorspaceDetails(struct SwsContext *c, const int inv_table[4],

int srcRange, const int table[4], int dstRange,

int brightness, int contrast, int saturation)

{

const AVPixFmtDescriptor *desc_dst;

const AVPixFmtDescriptor *desc_src;

int need_reinit = 0;

if (c->nb_slice_ctx) {

int parent_ret = 0;

for (int i = 0; i < c->nb_slice_ctx; i++) {

int ret = sws_setColorspaceDetails(c->slice_ctx[i], inv_table,

srcRange, table, dstRange,

brightness, contrast, saturation);

if (ret < 0)

parent_ret = ret;

}

return parent_ret;

}

handle_formats(c);

desc_dst = av_pix_fmt_desc_get(c->dstFormat);

desc_src = av_pix_fmt_desc_get(c->srcFormat);

if(range_override_needed(c->dstFormat))

dstRange = 0;

if(range_override_needed(c->srcFormat))

srcRange = 0;

if (c->srcRange != srcRange ||

c->dstRange != dstRange ||

c->brightness != brightness ||

c->contrast != contrast ||

c->saturation != saturation ||

memcmp(c->srcColorspaceTable, inv_table, sizeof(int) * 4) ||

memcmp(c->dstColorspaceTable, table, sizeof(int) * 4)

)

need_reinit = 1;

memmove(c->srcColorspaceTable, inv_table, sizeof(int) * 4);

memmove(c->dstColorspaceTable, table, sizeof(int) * 4);

c->brightness = brightness;

c->contrast = contrast;

c->saturation = saturation;

c->srcRange = srcRange;

c->dstRange = dstRange;

//The srcBpc check is possibly wrong but we seem to lack a definitive reference to test this

//and what we have in ticket 2939 looks better with this check

if (need_reinit && (c->srcBpc == 8 || !isYUV(c->srcFormat)))

ff_sws_init_range_convert(c);

c->dstFormatBpp = av_get_bits_per_pixel(desc_dst);

c->srcFormatBpp = av_get_bits_per_pixel(desc_src);

if (c->cascaded_context[c->cascaded_mainindex])

return sws_setColorspaceDetails(c->cascaded_context[c->cascaded_mainindex],inv_table, srcRange,table, dstRange, brightness, contrast, saturation);

if (!need_reinit)

return 0;

if ((isYUV(c->dstFormat) || isGray(c->dstFormat)) && (isYUV(c->srcFormat) || isGray(c->srcFormat))) {

if (!c->cascaded_context[0] &&

memcmp(c->dstColorspaceTable, c->srcColorspaceTable, sizeof(int) * 4) &&

c->srcW && c->srcH && c->dstW && c->dstH) {

enum AVPixelFormat tmp_format;

int tmp_width, tmp_height;

int srcW = c->srcW;

int srcH = c->srcH;

int dstW = c->dstW;

int dstH = c->dstH;

int ret;

av_log(c, AV_LOG_VERBOSE, "YUV color matrix differs for YUV->YUV, using intermediate RGB to convert\n");

if (isNBPS(c->dstFormat) || is16BPS(c->dstFormat)) {

if (isALPHA(c->srcFormat) && isALPHA(c->dstFormat)) {

tmp_format = AV_PIX_FMT_BGRA64;

} else {

tmp_format = AV_PIX_FMT_BGR48;

}

} else {

if (isALPHA(c->srcFormat) && isALPHA(c->dstFormat)) {

tmp_format = AV_PIX_FMT_BGRA;

} else {

tmp_format = AV_PIX_FMT_BGR24;

}

}

if (srcW*srcH > dstW*dstH) {

tmp_width = dstW;

tmp_height = dstH;

} else {

tmp_width = srcW;

tmp_height = srcH;

}

ret = av_image_alloc(c->cascaded_tmp, c->cascaded_tmpStride,

tmp_width, tmp_height, tmp_format, 64);

if (ret < 0)

return ret;

c->cascaded_context[0] = sws_alloc_set_opts(srcW, srcH, c->srcFormat,

tmp_width, tmp_height, tmp_format,

c->flags, c->param);

if (!c->cascaded_context[0])

return -1;

c->cascaded_context[0]->alphablend = c->alphablend;

ret = sws_init_context(c->cascaded_context[0], NULL , NULL);

if (ret < 0)

return ret;

//we set both src and dst depending on that the RGB side will be ignored

sws_setColorspaceDetails(c->cascaded_context[0], inv_table,

srcRange, table, dstRange,

brightness, contrast, saturation);

c->cascaded_context[1] = sws_alloc_set_opts(tmp_width, tmp_height, tmp_format,

dstW, dstH, c->dstFormat,

c->flags, c->param);

if (!c->cascaded_context[1])

return -1;

c->cascaded_context[1]->srcRange = srcRange;

c->cascaded_context[1]->dstRange = dstRange;

ret = sws_init_context(c->cascaded_context[1], NULL , NULL);

if (ret < 0)

return ret;

sws_setColorspaceDetails(c->cascaded_context[1], inv_table,

srcRange, table, dstRange,

0, 1 << 16, 1 << 16);

return 0;

}

//We do not support this combination currently, we need to cascade more contexts to compensate

if (c->cascaded_context[0] && memcmp(c->dstColorspaceTable, c->srcColorspaceTable, sizeof(int) * 4))

return -1; //AVERROR_PATCHWELCOME;

return 0;

}

if (!isYUV(c->dstFormat) && !isGray(c->dstFormat)) {

ff_yuv2rgb_c_init_tables(c, inv_table, srcRange, brightness,

contrast, saturation);

// FIXME factorize

if (ARCH_PPC)

ff_yuv2rgb_init_tables_ppc(c, inv_table, brightness,

contrast, saturation);

}

fill_rgb2yuv_table(c, table, dstRange);

return 0;

}

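- The definition shows that sws_setColorspaceDetails() stores the incoming parameters in the corresponding fields of the context and, at the end, calls fill_rgb2yuv_table(). When both formats are YUV or gray and the two coefficient tables differ, it instead sets up two cascaded contexts and converts through an intermediate RGB format, as the log message in the listing says.

- I have not yet worked out fill_rgb2yuv_table(), so it is not covered here.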

sws_getCoefficients()

- sws_getCoefficients() returns the coefficient table that describes a color space.

- Its declaration is as follows.

/**

* Return a pointer to yuv<->rgb coefficients for the given colorspace

* suitable for sws_setColorspaceDetails().

*

* @param colorspace One of the SWS_CS_* macros. If invalid,

* SWS_CS_DEFAULT is used.

*/

const int *sws_getCoefficients(int colorspace);

- colorspace can take the values listed below.

- The default, SWS_CS_DEFAULT, is the same as SWS_CS_ITU601 and SWS_CS_SMPTE170M (all three are defined as 5).

#define SWS_CS_ITU709 1

#define SWS_CS_FCC 4

#define SWS_CS_ITU601 5

#define SWS_CS_ITU624 5

#define SWS_CS_SMPTE170M 5

#define SWS_CS_SMPTE240M 7

#define SWS_CS_DEFAULT 5

#define SWS_CS_BT2020 9
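- Each of these constants selects one row of four integer coefficients. The comment above the ff_yuv2rgb_coeffs table, reproduced later in this section, gives the formulas for those numbers. As a sanity check, the sketch below evaluates the formulas for given luma weights; derive_yuv2rgb_coeffs() is a hypothetical helper, and the luma weights used in the check (0.299 / 0.114 for BT.601, 0.2126 / 0.0722 for BT.709) are the standard values, not something taken from the FFmpeg source.

```c
#include <assert.h>

/* Derive the four table entries {crv, cbu, cgu, cgv} from the luma weights
 * cr and cb (cg = 1 - cr - cb), following the formulas in the FFmpeg comment.
 * Results are rounded to the nearest integer. */
static void derive_yuv2rgb_coeffs(double cr, double cb, int out[4])
{
    const double cg = 1.0 - cr - cb;
    const double k  = (255.0 / 224.0) * 65536.0;
    assert(cg > 0.0);
    out[0] = (int)(k * (1.0 - cr) / 0.5 + 0.5);              /* crv */
    out[1] = (int)(k * (1.0 - cb) / 0.5 + 0.5);              /* cbu */
    out[2] = (int)(k * (cb / cg) * (1.0 - cb) / 0.5 + 0.5);  /* cgu */
    out[3] = (int)(k * (cr / cg) * (1.0 - cr) / 0.5 + 0.5);  /* cgv */
}
```

- For the BT.601 weights this yields 104597, 132201, 25675 and 53279, and for the BT.709 weights 117489, 138438, 13975 and 34925, which agree with the SMPTE 170M and ITU-R Rec. 709 rows of the table.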

- Next, the definition of sws_getCoefficients(), located in libswscale\yuv2rgb.c, is shown below.

const int *sws_getCoefficients(int colorspace)

{

if (colorspace > 10 || colorspace < 0 || colorspace == 8)

colorspace = SWS_CS_DEFAULT;

return ff_yuv2rgb_coeffs[colorspace];

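}

- The function returns one row of an array named ff_yuv2rgb_coeffs; out-of-range values, and the value 8 (YCgCo, whose row is all zeros), fall back to SWS_CS_DEFAULT. The array is defined as follows.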

/* Color space conversion coefficients for YCbCr -> RGB mapping.

*

* Entries are {crv, cbu, cgu, cgv}

*

* crv = (255 / 224) * 65536 * (1 - cr) / 0.5

* cbu = (255 / 224) * 65536 * (1 - cb) / 0.5

* cgu = (255 / 224) * 65536 * (cb / cg) * (1 - cb) / 0.5

* cgv = (255 / 224) * 65536 * (cr / cg) * (1 - cr) / 0.5

*

* where Y = cr * R + cg * G + cb * B and cr + cg + cb = 1.

*/

const int32_t ff_yuv2rgb_coeffs[11][4] = {

{ 117489, 138438, 13975, 34925 }, /* no sequence_display_extension */

{ 117489, 138438, 13975, 34925 }, /* ITU-R Rec. 709 (1990) */

{ 104597, 132201, 25675, 53279 }, /* unspecified */

{ 104597, 132201, 25675, 53279 }, /* reserved */

{ 104448, 132798, 24759, 53109 }, /* FCC */

{ 104597, 132201, 25675, 53279 }, /* ITU-R Rec. 624-4 System B, G */

{ 104597, 132201, 25675, 53279 }, /* SMPTE 170M */

{ 117579, 136230, 16907, 35559 }, /* SMPTE 240M (1987) */

{ 0 }, /* YCgCo */

{ 110013, 140363, 12277, 42626 }, /* Bt-2020-NCL */

{ 110013, 140363, 12277, 42626 }, /* Bt-2020-CL */

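};

4. Check some of the input parameters

- For example: if no scaling algorithm is set in flags, SWS_BICUBIC is used by default. If more than one algorithm flag is set, the test i & (i - 1) is nonzero and an error is returned.

- If the width or height of the input or output image is less than 1, an error is returned as well.

- There is a fair amount of code of this kind; a short excerpt follows.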

i = flags & (SWS_POINT |

SWS_AREA |

SWS_BILINEAR |

SWS_FAST_BILINEAR |

SWS_BICUBIC |

SWS_X |

SWS_GAUSS |

SWS_LANCZOS |

SWS_SINC |

SWS_SPLINE |

SWS_BICUBLIN);

/* provide a default scaler if not set by caller */

if (!i) {

if (dstW < srcW && dstH < srcH)

flags |= SWS_BICUBIC;

else if (dstW > srcW && dstH > srcH)

flags |= SWS_BICUBIC;

else

flags |= SWS_BICUBIC;

c->flags = flags;

} else if (i & (i - 1)) {

av_log(c, AV_LOG_ERROR,

"Exactly one scaler algorithm must be chosen, got %X\n", i);

return AVERROR(EINVAL);

}

/* sanity check */

if (srcW < 1 || srcH < 1 || dstW < 1 || dstH < 1) {

/* FIXME check if these are enough and try to lower them after

* fixing the relevant parts of the code */

av_log(c, AV_LOG_ERROR, "%dx%d -> %dx%d is invalid scaling dimension\n",

srcW, srcH, dstW, dstH);

return AVERROR(EINVAL);

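}

5. Initialize the filters. This step initializes different filters depending on the scaling algorithm.

- This work is done in initFilter(), which is not analyzed in detail for now.

6. If the "print info" option SWS_PRINT_INFO is set in flags, print information.

- When an SwsContext is initialized, the SWS_PRINT_INFO flag can be set in flags.

- Some configuration information is then printed once the SwsContext has been initialized.

- The printing code is shown below.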

if (flags & SWS_PRINT_INFO) {

const char *scaler = NULL, *cpucaps;

for (i = 0; i < FF_ARRAY_ELEMS(scale_algorithms); i++) {

if (flags & scale_algorithms[i].flag) {

scaler = scale_algorithms[i].description;

break;

}

}

if (!scaler)

scaler = "ehh flags invalid?!";

av_log(c, AV_LOG_INFO, "%s scaler, from %s to %s%s ",

scaler,

av_get_pix_fmt_name(srcFormat),

#ifdef DITHER1XBPP

dstFormat == AV_PIX_FMT_BGR555 || dstFormat == AV_PIX_FMT_BGR565 ||

dstFormat == AV_PIX_FMT_RGB444BE || dstFormat == AV_PIX_FMT_RGB444LE ||

dstFormat == AV_PIX_FMT_BGR444BE || dstFormat == AV_PIX_FMT_BGR444LE ?

"dithered " : "",

#else

"",

#endif

av_get_pix_fmt_name(dstFormat));

if (INLINE_MMXEXT(cpu_flags))

cpucaps = "MMXEXT";

else if (INLINE_AMD3DNOW(cpu_flags))

cpucaps = "3DNOW";

else if (INLINE_MMX(cpu_flags))

cpucaps = "MMX";

else if (PPC_ALTIVEC(cpu_flags))

cpucaps = "AltiVec";

else

cpucaps = "C";

av_log(c, AV_LOG_INFO, "using %s\n", cpucaps);

av_log(c, AV_LOG_VERBOSE, "%dx%d -> %dx%d\n", srcW, srcH, dstW, dstH);

av_log(c, AV_LOG_DEBUG,

"lum srcW=%d srcH=%d dstW=%d dstH=%d xInc=%d yInc=%d\n",

c->srcW, c->srcH, c->dstW, c->dstH, c->lumXInc, c->lumYInc);

av_log(c, AV_LOG_DEBUG,

"chr srcW=%d srcH=%d dstW=%d dstH=%d xInc=%d yInc=%d\n",

c->chrSrcW, c->chrSrcH, c->chrDstW, c->chrDstH,

c->chrXInc, c->chrYInc);

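}

7. If no scaling is needed, ff_get_unscaled_swscale() is called to store a pointer to a specialized pixel-conversion function in the SwsContext (in the version listed here the member is named convert_unscaled).

ff_get_unscaled_swscale()

- ff_get_unscaled_swscale() is defined as shown below.

- It selects a pixel-format conversion function based on the input and output pixel formats.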

void ff_get_unscaled_swscale(SwsContext *c)

{

const enum AVPixelFormat srcFormat = c->srcFormat;

const enum AVPixelFormat dstFormat = c->dstFormat;

const int flags = c->flags;

const int dstH = c->dstH;

const int dstW = c->dstW;

int needsDither;

needsDither = isAnyRGB(dstFormat) &&

c->dstFormatBpp < 24 &&

(c->dstFormatBpp < c->srcFormatBpp || (!isAnyRGB(srcFormat)));

/* yv12_to_nv12 */

if ((srcFormat == AV_PIX_FMT_YUV420P || srcFormat == AV_PIX_FMT_YUVA420P) &&

(dstFormat == AV_PIX_FMT_NV12 || dstFormat == AV_PIX_FMT_NV21)) {

c->convert_unscaled = planarToNv12Wrapper;

}

/* yv24_to_nv24 */

if ((srcFormat == AV_PIX_FMT_YUV444P || srcFormat == AV_PIX_FMT_YUVA444P) &&

(dstFormat == AV_PIX_FMT_NV24 || dstFormat == AV_PIX_FMT_NV42)) {

c->convert_unscaled = planarToNv24Wrapper;

}

/* nv12_to_yv12 */

if (dstFormat == AV_PIX_FMT_YUV420P &&

(srcFormat == AV_PIX_FMT_NV12 || srcFormat == AV_PIX_FMT_NV21)) {

c->convert_unscaled = nv12ToPlanarWrapper;

}

/* nv24_to_yv24 */

if (dstFormat == AV_PIX_FMT_YUV444P &&

(srcFormat == AV_PIX_FMT_NV24 || srcFormat == AV_PIX_FMT_NV42)) {

c->convert_unscaled = nv24ToPlanarWrapper;

}

/* yuv2bgr */

if ((srcFormat == AV_PIX_FMT_YUV420P || srcFormat == AV_PIX_FMT_YUV422P ||

srcFormat == AV_PIX_FMT_YUVA420P) && isAnyRGB(dstFormat) &&

!(flags & SWS_ACCURATE_RND) && (c->dither == SWS_DITHER_BAYER || c->dither == SWS_DITHER_AUTO) && !(dstH & 1)) {

c->convert_unscaled = ff_yuv2rgb_get_func_ptr(c);

c->dst_slice_align = 2;

}

/* yuv420p1x_to_p01x */

if ((srcFormat == AV_PIX_FMT_YUV420P10 || srcFormat == AV_PIX_FMT_YUVA420P10 ||

srcFormat == AV_PIX_FMT_YUV420P12 ||

srcFormat == AV_PIX_FMT_YUV420P14 ||

srcFormat == AV_PIX_FMT_YUV420P16 || srcFormat == AV_PIX_FMT_YUVA420P16) &&

(dstFormat == AV_PIX_FMT_P010 || dstFormat == AV_PIX_FMT_P016)) {

c->convert_unscaled = planarToP01xWrapper;

}

/* yuv420p_to_p01xle */

if ((srcFormat == AV_PIX_FMT_YUV420P || srcFormat == AV_PIX_FMT_YUVA420P) &&

(dstFormat == AV_PIX_FMT_P010LE || dstFormat == AV_PIX_FMT_P016LE)) {

c->convert_unscaled = planar8ToP01xleWrapper;

}

if (srcFormat == AV_PIX_FMT_YUV410P && !(dstH & 3) &&

(dstFormat == AV_PIX_FMT_YUV420P || dstFormat == AV_PIX_FMT_YUVA420P) &&

!(flags & SWS_BITEXACT)) {

c->convert_unscaled = yvu9ToYv12Wrapper;

c->dst_slice_align = 4;

}

/* bgr24toYV12 */

if (srcFormat == AV_PIX_FMT_BGR24 &&

(dstFormat == AV_PIX_FMT_YUV420P || dstFormat == AV_PIX_FMT_YUVA420P) &&

!(flags & SWS_ACCURATE_RND) && !(dstW&1))

c->convert_unscaled = bgr24ToYv12Wrapper;

/* RGB/BGR -> RGB/BGR (no dither needed forms) */

if (isAnyRGB(srcFormat) && isAnyRGB(dstFormat) && findRgbConvFn(c)

&& (!needsDither || (c->flags&(SWS_FAST_BILINEAR|SWS_POINT))))

c->convert_unscaled = rgbToRgbWrapper;

/* RGB to planar RGB */

if ((srcFormat == AV_PIX_FMT_GBRP && dstFormat == AV_PIX_FMT_GBRAP) ||

(srcFormat == AV_PIX_FMT_GBRAP && dstFormat == AV_PIX_FMT_GBRP))

c->convert_unscaled = planarRgbToplanarRgbWrapper;

#define isByteRGB(f) ( \

f == AV_PIX_FMT_RGB32 || \

f == AV_PIX_FMT_RGB32_1 || \

f == AV_PIX_FMT_RGB24 || \

f == AV_PIX_FMT_BGR32 || \

f == AV_PIX_FMT_BGR32_1 || \

f == AV_PIX_FMT_BGR24)

if (srcFormat == AV_PIX_FMT_GBRP && isPlanar(srcFormat) && isByteRGB(dstFormat))

c->convert_unscaled = planarRgbToRgbWrapper;

if (srcFormat == AV_PIX_FMT_GBRAP && isByteRGB(dstFormat))

c->convert_unscaled = planarRgbaToRgbWrapper;

if ((srcFormat == AV_PIX_FMT_RGB48LE || srcFormat == AV_PIX_FMT_RGB48BE ||

srcFormat == AV_PIX_FMT_BGR48LE || srcFormat == AV_PIX_FMT_BGR48BE ||

srcFormat == AV_PIX_FMT_RGBA64LE || srcFormat == AV_PIX_FMT_RGBA64BE ||

srcFormat == AV_PIX_FMT_BGRA64LE || srcFormat == AV_PIX_FMT_BGRA64BE) &&

(dstFormat == AV_PIX_FMT_GBRP9LE || dstFormat == AV_PIX_FMT_GBRP9BE ||

dstFormat == AV_PIX_FMT_GBRP10LE || dstFormat == AV_PIX_FMT_GBRP10BE ||

dstFormat == AV_PIX_FMT_GBRP12LE || dstFormat == AV_PIX_FMT_GBRP12BE ||

dstFormat == AV_PIX_FMT_GBRP14LE || dstFormat == AV_PIX_FMT_GBRP14BE ||

dstFormat == AV_PIX_FMT_GBRP16LE || dstFormat == AV_PIX_FMT_GBRP16BE ||

dstFormat == AV_PIX_FMT_GBRAP10LE || dstFormat == AV_PIX_FMT_GBRAP10BE ||

dstFormat == AV_PIX_FMT_GBRAP12LE || dstFormat == AV_PIX_FMT_GBRAP12BE ||

dstFormat == AV_PIX_FMT_GBRAP16LE || dstFormat == AV_PIX_FMT_GBRAP16BE ))

c->convert_unscaled = Rgb16ToPlanarRgb16Wrapper;

if ((srcFormat == AV_PIX_FMT_GBRP9LE || srcFormat == AV_PIX_FMT_GBRP9BE ||

srcFormat == AV_PIX_FMT_GBRP16LE || srcFormat == AV_PIX_FMT_GBRP16BE ||

srcFormat == AV_PIX_FMT_GBRP10LE || srcFormat == AV_PIX_FMT_GBRP10BE ||

srcFormat == AV_PIX_FMT_GBRP12LE || srcFormat == AV_PIX_FMT_GBRP12BE ||

srcFormat == AV_PIX_FMT_GBRP14LE || srcFormat == AV_PIX_FMT_GBRP14BE ||

srcFormat == AV_PIX_FMT_GBRAP10LE || srcFormat == AV_PIX_FMT_GBRAP10BE ||

srcFormat == AV_PIX_FMT_GBRAP12LE || srcFormat == AV_PIX_FMT_GBRAP12BE ||

srcFormat == AV_PIX_FMT_GBRAP16LE || srcFormat == AV_PIX_FMT_GBRAP16BE) &&

(dstFormat == AV_PIX_FMT_RGB48LE || dstFormat == AV_PIX_FMT_RGB48BE ||

dstFormat == AV_PIX_FMT_BGR48LE || dstFormat == AV_PIX_FMT_BGR48BE ||

dstFormat == AV_PIX_FMT_RGBA64LE || dstFormat == AV_PIX_FMT_RGBA64BE ||

dstFormat == AV_PIX_FMT_BGRA64LE || dstFormat == AV_PIX_FMT_BGRA64BE))

c->convert_unscaled = planarRgb16ToRgb16Wrapper;

if (av_pix_fmt_desc_get(srcFormat)->comp[0].depth == 8 &&

isPackedRGB(srcFormat) && dstFormat == AV_PIX_FMT_GBRP)

c->convert_unscaled = rgbToPlanarRgbWrapper;

if (isBayer(srcFormat)) {

if (dstFormat == AV_PIX_FMT_RGB24)

c->convert_unscaled = bayer_to_rgb24_wrapper;

else if (dstFormat == AV_PIX_FMT_RGB48)

c->convert_unscaled = bayer_to_rgb48_wrapper;

else if (dstFormat == AV_PIX_FMT_YUV420P)

c->convert_unscaled = bayer_to_yv12_wrapper;

else if (!isBayer(dstFormat)) {

av_log(c, AV_LOG_ERROR, "unsupported bayer conversion\n");

av_assert0(0);

}

}

/* bswap 16 bits per pixel/component packed formats */

if (IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_BAYER_BGGR16) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_BAYER_RGGB16) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_BAYER_GBRG16) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_BAYER_GRBG16) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_BGR444) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_BGR48) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_BGR555) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_BGR565) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_BGRA64) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_GRAY9) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_GRAY10) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_GRAY12) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_GRAY14) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_GRAY16) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YA16) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_AYUV64) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_GBRP9) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_GBRP10) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_GBRP12) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_GBRP14) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_GBRP16) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_GBRAP10) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_GBRAP12) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_GBRAP16) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_RGB444) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_RGB48) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_RGB555) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_RGB565) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_RGBA64) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_XYZ12) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV420P9) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV420P10) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV420P12) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV420P14) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV420P16) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV422P9) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV422P10) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV422P12) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV422P14) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV422P16) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV440P10) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV440P12) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV444P9) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV444P10) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV444P12) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV444P14) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_YUV444P16))

c->convert_unscaled = bswap_16bpc;

/* bswap 32 bits per pixel/component formats */

if (IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_GBRPF32) ||

IS_DIFFERENT_ENDIANESS(srcFormat, dstFormat, AV_PIX_FMT_GBRAPF32))

c->convert_unscaled = bswap_32bpc;

if (usePal(srcFormat) && isByteRGB(dstFormat))

c->convert_unscaled = palToRgbWrapper;

if (srcFormat == AV_PIX_FMT_YUV422P) {

if (dstFormat == AV_PIX_FMT_YUYV422)

c->convert_unscaled = yuv422pToYuy2Wrapper;

else if (dstFormat == AV_PIX_FMT_UYVY422)

c->convert_unscaled = yuv422pToUyvyWrapper;

}

/* uint Y to float Y */

if (srcFormat == AV_PIX_FMT_GRAY8 && dstFormat == AV_PIX_FMT_GRAYF32){

c->convert_unscaled = uint_y_to_float_y_wrapper;

}

/* float Y to uint Y */

if (srcFormat == AV_PIX_FMT_GRAYF32 && dstFormat == AV_PIX_FMT_GRAY8){

c->convert_unscaled = float_y_to_uint_y_wrapper;

}

/* LQ converters if -sws 0 or -sws 4*/

if (c->flags&(SWS_FAST_BILINEAR|SWS_POINT)) {

/* yv12_to_yuy2 */

if (srcFormat == AV_PIX_FMT_YUV420P || srcFormat == AV_PIX_FMT_YUVA420P) {

if (dstFormat == AV_PIX_FMT_YUYV422)

c->convert_unscaled = planarToYuy2Wrapper;

else if (dstFormat == AV_PIX_FMT_UYVY422)

c->convert_unscaled = planarToUyvyWrapper;

}

}

if (srcFormat == AV_PIX_FMT_YUYV422 &&

(dstFormat == AV_PIX_FMT_YUV420P || dstFormat == AV_PIX_FMT_YUVA420P))

c->convert_unscaled = yuyvToYuv420Wrapper;

if (srcFormat == AV_PIX_FMT_UYVY422 &&

(dstFormat == AV_PIX_FMT_YUV420P || dstFormat == AV_PIX_FMT_YUVA420P))

c->convert_unscaled = uyvyToYuv420Wrapper;

if (srcFormat == AV_PIX_FMT_YUYV422 && dstFormat == AV_PIX_FMT_YUV422P)

c->convert_unscaled = yuyvToYuv422Wrapper;

if (srcFormat == AV_PIX_FMT_UYVY422 && dstFormat == AV_PIX_FMT_YUV422P)

c->convert_unscaled = uyvyToYuv422Wrapper;

#define isPlanarGray(x) (isGray(x) && (x) != AV_PIX_FMT_YA8 && (x) != AV_PIX_FMT_YA16LE && (x) != AV_PIX_FMT_YA16BE)

/* simple copy */

if ( srcFormat == dstFormat ||

(srcFormat == AV_PIX_FMT_YUVA420P && dstFormat == AV_PIX_FMT_YUV420P) ||

(srcFormat == AV_PIX_FMT_YUV420P && dstFormat == AV_PIX_FMT_YUVA420P) ||

(isFloat(srcFormat) == isFloat(dstFormat)) && ((isPlanarYUV(srcFormat) && isPlanarGray(dstFormat)) ||

(isPlanarYUV(dstFormat) && isPlanarGray(srcFormat)) ||

(isPlanarGray(dstFormat) && isPlanarGray(srcFormat)) ||

(isPlanarYUV(srcFormat) && isPlanarYUV(dstFormat) &&

c->chrDstHSubSample == c->chrSrcHSubSample &&

c->chrDstVSubSample == c->chrSrcVSubSample &&

!isSemiPlanarYUV(srcFormat) && !isSemiPlanarYUV(dstFormat))))

{

if (isPacked(c->srcFormat))

c->convert_unscaled = packedCopyWrapper;

else /* Planar YUV or gray */

c->convert_unscaled = planarCopyWrapper;

}

if (ARCH_PPC)

ff_get_unscaled_swscale_ppc(c);

if (ARCH_ARM)

ff_get_unscaled_swscale_arm(c);

if (ARCH_AARCH64)

ff_get_unscaled_swscale_aarch64(c);

}

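- The source of ff_get_unscaled_swscale() shows that most of the functions assigned to the context's conversion pointer are named XXXWrapper(). These functions wrap basic pixel-format conversion routines.

- For example, yuyvToYuv422Wrapper() is defined as follows.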

static int yuyvToYuv422Wrapper(SwsContext *c, const uint8_t *src[],

int srcStride[], int srcSliceY, int srcSliceH,

uint8_t *dstParam[], int dstStride[])

{

uint8_t *ydst = dstParam[0] + dstStride[0] * srcSliceY;

uint8_t *udst = dstParam[1] + dstStride[1] * srcSliceY;

uint8_t *vdst = dstParam[2] + dstStride[2] * srcSliceY;

yuyvtoyuv422(ydst, udst, vdst, src[0], c->srcW, srcSliceH, dstStride[0],

dstStride[1], srcStride[0]);

return srcSliceH;

}

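- As its definition shows, yuyvToYuv422Wrapper() calls yuyvtoyuv422().

- yuyvtoyuv422() is a function pointer from rgb2rgb.c that converts YUYV to YUV422; rgb2rgb_init_c() points it at yuyvtoyuv422_c(), which was covered earlier.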
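- The wrapper's job is to adapt a slice-based call (process rows srcSliceY .. srcSliceY + srcSliceH - 1) to a worker that only knows about plane pointers and strides. The sketch below shows that adaptation with a deliberately simplified, hypothetical signature and a luma-only worker; it is not the FFmpeg code. As in the listing above, the source pointer is used as passed in (it already points at the slice), while the destination pointer is advanced by slice_y rows.

```c
#include <assert.h>
#include <stdint.h>

/* Plane-oriented worker in the spirit of yuyvtoyuv422(): copies the even
 * bytes of each packed row into a luma plane (chroma omitted for brevity). */
static void packed_to_luma(uint8_t *ydst, const uint8_t *src, int width,
                           int height, int dst_stride, int src_stride)
{
    for (int row = 0; row < height; row++) {
        for (int x = 0; x < width; x++)
            ydst[x] = src[2 * x];
        ydst += dst_stride;
        src  += src_stride;
    }
}

/* Slice-oriented wrapper: dst_plane points at the top of the whole output
 * image, src_slice at the first row of the current slice. */
static int luma_slice_wrapper(int src_w, const uint8_t *src_slice, int src_stride,
                              int slice_y, int slice_h,
                              uint8_t *dst_plane, int dst_stride)
{
    assert(slice_y >= 0 && slice_h >= 0);
    /* Skip the output rows that earlier slices already produced. */
    uint8_t *ydst = dst_plane + dst_stride * slice_y;
    packed_to_luma(ydst, src_slice, src_w, slice_h, dst_stride, src_stride);
    return slice_h; /* rows written, the same value the FFmpeg wrapper returns */
}
```

- Calling the wrapper once per slice with increasing slice_y fills the output image top to bottom, so an image can be converted in pieces without the worker knowing about slices.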

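8. If scaling is needed, ff_getSwsFunc() is called to assign the generic swscale() to the swscale pointer in the SwsContext, and the function then returns.

- The previous step (no scaling needed) is actually the less common case; more often it is this step that runs.

- In that case ff_getSwsFunc() is called to obtain the scaling function.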
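- Steps 7 and 8 together amount to "use a specialized converter when the sizes match and one exists for this format pair, otherwise use the generic scaler". The sketch below models only that decision. Everything in it is hypothetical (the demo_fmt enum, the converter stubs, choose_converter()), and the real initialization checks more conditions than size equality.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, tiny stand-ins for AVPixelFormat values. */
enum demo_fmt { FMT_YUYV422, FMT_YUV422P, FMT_RGB24, FMT_BGR24 };

typedef const char *(*convert_fn)(void);

/* Stub converters that only report which path was taken. */
static const char *yuyv_to_yuv422p(void) { return "yuyv->yuv422p"; }
static const char *rgb24_to_bgr24(void)  { return "rgb24->bgr24"; }
static const char *generic_scale(void)   { return "generic swscale"; }

/* Return a specialized converter for a format pair, or NULL if none exists. */
static convert_fn find_unscaled(enum demo_fmt src, enum demo_fmt dst)
{
    if (src == FMT_YUYV422 && dst == FMT_YUV422P) return yuyv_to_yuv422p;
    if (src == FMT_RGB24   && dst == FMT_BGR24)   return rgb24_to_bgr24;
    return NULL;
}

/* Try the fast path only when source and destination sizes match. */
static convert_fn choose_converter(int src_w, int src_h, enum demo_fmt src,
                                   int dst_w, int dst_h, enum demo_fmt dst)
{
    const int unscaled = (src_w == dst_w && src_h == dst_h);
    convert_fn fn = unscaled ? find_unscaled(src, dst) : NULL;
    return fn ? fn : generic_scale;
}
```

- A same-size YUYV422 to YUV422P request takes the specialized path; the same formats with a different output size, or a same-size pair without a dedicated converter, fall through to the generic scaler.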

ff_getSwsFunc()

- ff_getSwsFunc() returns the generic swscale() function.

- ff_getSwsFunc() is deprecated; its listing is kept here for reference.

SwsFunc ff_getSwsFunc(SwsContext *c)

{

sws_init_swscale(c);

if (ARCH_PPC)

ff_sws_init_swscale_ppc(c);

if (ARCH_X86)

ff_sws_init_swscale_x86(c);

return swscale;

}

- ff_sws_init_scale() follows much the same logic as ff_getSwsFunc() internally, and additionally calls the AArch64 and ARM initializers:

void ff_sws_init_scale(SwsContext *c)

{

sws_init_swscale(c);

if (ARCH_PPC)

ff_sws_init_swscale_ppc(c);

if (ARCH_X86)

ff_sws_init_swscale_x86(c);

if (ARCH_AARCH64)

ff_sws_init_swscale_aarch64(c);

if (ARCH_ARM)

ff_sws_init_swscale_arm(c);

}

- The source shows that ff_getSwsFunc() calls sws_init_swscale().

- If the build targets x86 (ARCH_X86 is nonzero), it additionally calls ff_sws_init_swscale_x86().
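- Note that ARCH_X86, ARCH_PPC and the like are tested with an ordinary if rather than #if. FFmpeg's build configuration defines each of them as 0 or 1, so the condition is a compile-time constant. The sketch below imitates this with a hypothetical DEMO_ARCH_X86 macro derived from common compiler-defined macros (an assumption of this note, not how FFmpeg's configure script works) and two hypothetical init functions.

```c
#include <assert.h>

/* Hypothetical 0/1 architecture macro, derived here from compiler macros. */
#if defined(__x86_64__) || defined(__i386__) || defined(_M_X64) || defined(_M_IX86)
#define DEMO_ARCH_X86 1
#else
#define DEMO_ARCH_X86 0
#endif

struct demo_ctx { int generic_ready; int x86_ready; };

static void demo_init_generic(struct demo_ctx *c) { c->generic_ready = 1; }
static void demo_init_x86(struct demo_ctx *c)     { c->x86_ready = 1; }

static void demo_init_scale(struct demo_ctx *c)
{
    assert(c);
    demo_init_generic(c);   /* portable C setup always runs first */
    if (DEMO_ARCH_X86)      /* constant condition: the branch can be compiled out */
        demo_init_x86(c);   /* may replace pointers set by the generic step */
}
```

- Compared with #if, the plain if keeps every branch visible to the compiler for syntax and type checking while still letting it drop the branches whose condition is 0.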

sws_init_swscale()

- sws_init_swscale() is defined in libswscale\swscale.c, as shown below.

static av_cold void sws_init_swscale(SwsContext *c)

{

enum AVPixelFormat srcFormat = c->srcFormat;

ff_sws_init_output_funcs(c, &c->yuv2plane1, &c->yuv2planeX,

&c->yuv2nv12cX, &c->yuv2packed1,

&c->yuv2packed2, &c->yuv2packedX, &c->yuv2anyX);

ff_sws_init_input_funcs(c);

if (c->srcBpc == 8) {

if (c->dstBpc <= 14) {

c->hyScale = c->hcScale = hScale8To15_c;

if (c->flags & SWS_FAST_BILINEAR) {

c->hyscale_fast = ff_hyscale_fast_c;

c->hcscale_fast = ff_hcscale_fast_c;

}

} else {

c->hyScale = c->hcScale = hScale8To19_c;

}

} else {

c->hyScale = c->hcScale = c->dstBpc > 14 ? hScale16To19_c

: hScale16To15_c;

}

ff_sws_init_range_convert(c);

if (!(isGray(srcFormat) || isGray(c->dstFormat) ||

srcFormat == AV_PIX_FMT_MONOBLACK || srcFormat == AV_PIX_FMT_MONOWHITE))

c->needs_hcscale = 1;

}

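- The function shows that sws_init_swscale() mainly calls three functions: ff_sws_init_output_funcs(), ff_sws_init_input_funcs() and ff_sws_init_range_convert(). In between, it picks the horizontal scaling functions (hScale8To15_c, hScale8To19_c, hScale16To15_c or hScale16To19_c) according to the source and destination bit depths.

- ff_sws_init_output_funcs() sets up the output functions, ff_sws_init_input_funcs() sets up the input functions, and ff_sws_init_range_convert() sets up the functions that convert between pixel value ranges.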

ff_sws_init_output_funcs()

- ff_sws_init_output_funcs() initializes the "output functions". In libswscale, an output function writes out one processed line of pixel data.

- ff_sws_init_output_funcs() is defined in libswscale\output.c, as shown below.

av_cold void ff_sws_init_output_funcs(SwsContext *c,

yuv2planar1_fn *yuv2plane1,

yuv2planarX_fn *yuv2planeX,

yuv2interleavedX_fn *yuv2nv12cX,

yuv2packed1_fn *yuv2packed1,

yuv2packed2_fn *yuv2packed2,

yuv2packedX_fn *yuv2packedX,

yuv2anyX_fn *yuv2anyX)

{

enum AVPixelFormat dstFormat = c->dstFormat;

const AVPixFmtDescriptor *desc = av_pix_fmt_desc_get(dstFormat);

if (isSemiPlanarYUV(dstFormat) && isDataInHighBits(dstFormat)) {

av_assert0(desc->comp[0].depth == 10);

*yuv2plane1 = isBE(dstFormat) ? yuv2p010l1_BE_c : yuv2p010l1_LE_c;

*yuv2planeX = isBE(dstFormat) ? yuv2p010lX_BE_c : yuv2p010lX_LE_c;

*yuv2nv12cX = isBE(dstFormat) ? yuv2p010cX_BE_c : yuv2p010cX_LE_c;

} else if (is16BPS(dstFormat)) {

*yuv2planeX = isBE(dstFormat) ? yuv2planeX_16BE_c : yuv2planeX_16LE_c;

*yuv2plane1 = isBE(dstFormat) ? yuv2plane1_16BE_c : yuv2plane1_16LE_c;

if (isSemiPlanarYUV(dstFormat)) {

*yuv2nv12cX = isBE(dstFormat) ? yuv2nv12cX_16BE_c : yuv2nv12cX_16LE_c;

}

} else if (isNBPS(dstFormat)) {

if (desc->comp[0].depth == 9) {

*yuv2planeX = isBE(dstFormat) ? yuv2planeX_9BE_c : yuv2planeX_9LE_c;

*yuv2plane1 = isBE(dstFormat) ? yuv2plane1_9BE_c : yuv2plane1_9LE_c;

} else if (desc->comp[0].depth == 10) {

*yuv2planeX = isBE(dstFormat) ? yuv2planeX_10BE_c : yuv2planeX_10LE_c;

*yuv2plane1 = isBE(dstFormat) ? yuv2plane1_10BE_c : yuv2plane1_10LE_c;

} else if (desc->comp[0].depth == 12) {

*yuv2planeX = isBE(dstFormat) ? yuv2planeX_12BE_c : yuv2planeX_12LE_c;

*yuv2plane1 = isBE(dstFormat) ? yuv2plane1_12BE_c : yuv2plane1_12LE_c;

} else if (desc->comp[0].depth == 14) {

*yuv2planeX = isBE(dstFormat) ? yuv2planeX_14BE_c : yuv2planeX_14LE_c;

*yuv2plane1 = isBE(dstFormat) ? yuv2plane1_14BE_c : yuv2plane1_14LE_c;

} else

av_assert0(0);

} else if (dstFormat == AV_PIX_FMT_GRAYF32BE) {

*yuv2planeX = yuv2planeX_floatBE_c;

*yuv2plane1 = yuv2plane1_floatBE_c;

} else if (dstFormat == AV_PIX_FMT_GRAYF32LE) {

*yuv2planeX = yuv2planeX_floatLE_c;

*yuv2plane1 = yuv2plane1_floatLE_c;

} else {

*yuv2plane1 = yuv2plane1_8_c;

*yuv2planeX = yuv2planeX_8_c;

if (isSemiPlanarYUV(dstFormat))

*yuv2nv12cX = yuv2nv12cX_c;

}

if(c->flags & SWS_FULL_CHR_H_INT) {

switch (dstFormat) {

case AV_PIX_FMT_RGBA:

#if CONFIG_SMALL

*yuv2packedX = yuv2rgba32_full_X_c;

*yuv2packed2 = yuv2rgba32_full_2_c;

*yuv2packed1 = yuv2rgba32_full_1_c;

#else

#if CONFIG_SWSCALE_ALPHA

if (c->needAlpha) {

*yuv2packedX = yuv2rgba32_full_X_c;

*yuv2packed2 = yuv2rgba32_full_2_c;

*yuv2packed1 = yuv2rgba32_full_1_c;

} else

#endif /* CONFIG_SWSCALE_ALPHA */

{

*yuv2packedX = yuv2rgbx32_full_X_c;

*yuv2packed2 = yuv2rgbx32_full_2_c;

*yuv2packed1 = yuv2rgbx32_full_1_c;

}

#endif /* !CONFIG_SMALL */

break;

case AV_PIX_FMT_ARGB:

#if CONFIG_SMALL

*yuv2packedX = yuv2argb32_full_X_c;

*yuv2packed2 = yuv2argb32_full_2_c;

*yuv2packed1 = yuv2argb32_full_1_c;

#else

#if CONFIG_SWSCALE_ALPHA

if (c->needAlpha) {

*yuv2packedX = yuv2argb32_full_X_c;

*yuv2packed2 = yuv2argb32_full_2_c;

*yuv2packed1 = yuv2argb32_full_1_c;

} else

#endif /* CONFIG_SWSCALE_ALPHA */

{

*yuv2packedX = yuv2xrgb32_full_X_c;

*yuv2packed2 = yuv2xrgb32_full_2_c;

*yuv2packed1 = yuv2xrgb32_full_1_c;

}

#endif /* !CONFIG_SMALL */

break;

case AV_PIX_FMT_BGRA:

#if CONFIG_SMALL

*yuv2packedX = yuv2bgra32_full_X_c;

*yuv2packed2 = yuv2bgra32_full_2_c;

*yuv2packed1 = yuv2bgra32_full_1_c;

#else

#if CONFIG_SWSCALE_ALPHA

if (c->needAlpha) {

*yuv2packedX = yuv2bgra32_full_X_c;

*yuv2packed2 = yuv2bgra32_full_2_c;

*yuv2packed1 = yuv2bgra32_full_1_c;

} else

#endif /* CONFIG_SWSCALE_ALPHA */

{

*yuv2packedX = yuv2bgrx32_full_X_c;

*yuv2packed2 = yuv2bgrx32_full_2_c;

*yuv2packed1 = yuv2bgrx32_full_1_c;

}

#endif /* !CONFIG_SMALL */

break;

case AV_PIX_FMT_ABGR:

#if CONFIG_SMALL

*yuv2packedX = yuv2abgr32_full_X_c;

*yuv2packed2 = yuv2abgr32_full_2_c;

*yuv2packed1 = yuv2abgr32_full_1_c;

#else

#if CONFIG_SWSCALE_ALPHA

if (c->needAlpha) {

*yuv2packedX = yuv2abgr32_full_X_c;

*yuv2packed2 = yuv2abgr32_full_2_c;

*yuv2packed1 = yuv2abgr32_full_1_c;

} else

#endif /* CONFIG_SWSCALE_ALPHA */

{

*yuv2packedX = yuv2xbgr32_full_X_c;

*yuv2packed2 = yuv2xbgr32_full_2_c;

*yuv2packed1 = yuv2xbgr32_full_1_c;

}

#endif /* !CONFIG_SMALL */

break;

case AV_PIX_FMT_RGBA64LE:

#if CONFIG_SWSCALE_ALPHA

if (c->needAlpha) {

*yuv2packedX = yuv2rgba64le_full_X_c;

*yuv2packed2 = yuv2rgba64le_full_2_c;

*yuv2packed1 = yuv2rgba64le_full_1_c;

} else

#endif /* CONFIG_SWSCALE_ALPHA */

{

*yuv2packedX = yuv2rgbx64le_full_X_c;

*yuv2packed2 = yuv2rgbx64le_full_2_c;

*yuv2packed1 = yuv2rgbx64le_full_1_c;

}

break;

case AV_PIX_FMT_RGBA64BE:

#if CONFIG_SWSCALE_ALPHA

if (c->needAlpha) {

*yuv2packedX = yuv2rgba64be_full_X_c;

*yuv2packed2 = yuv2rgba64be_full_2_c;

*yuv2packed1 = yuv2rgba64be_full_1_c;

} else

#endif /* CONFIG_SWSCALE_ALPHA */

{

*yuv2packedX = yuv2rgbx64be_full_X_c;

*yuv2packed2 = yuv2rgbx64be_full_2_c;

*yuv2packed1 = yuv2rgbx64be_full_1_c;

}

break;

case AV_PIX_FMT_BGRA64LE:

#if CONFIG_SWSCALE_ALPHA

if (c->needAlpha) {

*yuv2packedX = yuv2bgra64le_full_X_c;

*yuv2packed2 = yuv2bgra64le_full_2_c;

*yuv2packed1 = yuv2bgra64le_full_1_c;

} else

#endif /* CONFIG_SWSCALE_ALPHA */

{

*yuv2packedX = yuv2bgrx64le_full_X_c;

*yuv2packed2 = yuv2bgrx64le_full_2_c;

*yuv2packed1 = yuv2bgrx64le_full_1_c;

}

break;

case AV_PIX_FMT_BGRA64BE:

#if CONFIG_SWSCALE_ALPHA

if (c->needAlpha) {

*yuv2packedX = yuv2bgra64be_full_X_c;

*yuv2packed2 = yuv2bgra64be_full_2_c;

*yuv2packed1 = yuv2bgra64be_full_1_c;

} else

#endif /* CONFIG_SWSCALE_ALPHA */

{

*yuv2packedX = yuv2bgrx64be_full_X_c;

*yuv2packed2 = yuv2bgrx64be_full_2_c;

*yuv2packed1 = yuv2bgrx64be_full_1_c;

}

break;

case AV_PIX_FMT_RGB24:

*yuv2packedX = yuv2rgb24_full_X_c;

*yuv2packed2 = yuv2rgb24_full_2_c;

*yuv2packed1 = yuv2rgb24_full_1_c;

break;

case AV_PIX_FMT_BGR24:

*yuv2packedX = yuv2bgr24_full_X_c;

*yuv2packed2 = yuv2bgr24_full_2_c;

*yuv2packed1 = yuv2bgr24_full_1_c;

break;

case AV_PIX_FMT_RGB48LE:

*yuv2packedX = yuv2rgb48le_full_X_c;

*yuv2packed2 = yuv2rgb48le_full_2_c;

*yuv2packed1 = yuv2rgb48le_full_1_c;

break;

case AV_PIX_FMT_BGR48LE:

*yuv2packedX = yuv2bgr48le_full_X_c;

*yuv2packed2 = yuv2bgr48le_full_2_c;

*yuv2packed1 = yuv2bgr48le_full_1_c;

break;

case AV_PIX_FMT_RGB48BE:

*yuv2packedX = yuv2rgb48be_full_X_c;

*yuv2packed2 = yuv2rgb48be_full_2_c;

*yuv2packed1 = yuv2rgb48be_full_1_c;

break;

case AV_PIX_FMT_BGR48BE:

*yuv2packedX = yuv2bgr48be_full_X_c;

*yuv2packed2 = yuv2bgr48be_full_2_c;

*yuv2packed1 = yuv2bgr48be_full_1_c;

break;

case AV_PIX_FMT_BGR4_BYTE:

*yuv2packedX = yuv2bgr4_byte_full_X_c;

*yuv2packed2 = yuv2bgr4_byte_full_2_c;

*yuv2packed1 = yuv2bgr4_byte_full_1_c;

break;

case AV_PIX_FMT_RGB4_BYTE:

*yuv2packedX = yuv2rgb4_byte_full_X_c;

*yuv2packed2 = yuv2rgb4_byte_full_2_c;

*yuv2packed1 = yuv2rgb4_byte_full_1_c;

break;

case AV_PIX_FMT_BGR8:

*yuv2packedX = yuv2bgr8_full_X_c;

*yuv2packed2 = yuv2bgr8_full_2_c;

*yuv2packed1 = yuv2bgr8_full_1_c;

break;

case AV_PIX_FMT_RGB8:

*yuv2packedX = yuv2rgb8_full_X_c;

*yuv2packed2 = yuv2rgb8_full_2_c;

*yuv2packed1 = yuv2rgb8_full_1_c;

break;

/* Planar GBR(A) destinations set yuv2anyX instead of the three yuv2packed pointers. */

case AV_PIX_FMT_GBRP:

case AV_PIX_FMT_GBRP9BE:

case AV_PIX_FMT_GBRP9LE:

case AV_PIX_FMT_GBRP10BE:

case AV_PIX_FMT_GBRP10LE:

case AV_PIX_FMT_GBRP12BE:

case AV_PIX_FMT_GBRP12LE:

case AV_PIX_FMT_GBRP14BE:

case AV_PIX_FMT_GBRP14LE:

case AV_PIX_FMT_GBRAP:

case AV_PIX_FMT_GBRAP10BE:

case AV_PIX_FMT_GBRAP10LE:

case AV_PIX_FMT_GBRAP12BE:

case AV_PIX_FMT_GBRAP12LE:

*yuv2anyX = yuv2gbrp_full_X_c;

break;

case AV_PIX_FMT_GBRP16BE:

case AV_PIX_FMT_GBRP16LE:

case AV_PIX_FMT_GBRAP16BE:

case AV_PIX_FMT_GBRAP16LE:

*yuv2anyX = yuv2gbrp16_full_X_c;

break;

case AV_PIX_FMT_GBRPF32BE:

case AV_PIX_FMT_GBRPF32LE:

case AV_PIX_FMT_GBRAPF32BE:

case AV_PIX_FMT_GBRAPF32LE:

*yuv2anyX = yuv2gbrpf32_full_X_c;

break;

}

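/* No _full_ writer was assigned for dstFormat: fall back to the regular packed writers at YUV_PACKED. */

if (!*yuv2packedX && !*yuv2anyX)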

goto YUV_PACKED;

} else {

YUV_PACKED:

switch (dstFormat) {

case AV_PIX_FMT_RGBA64LE:

#if CONFIG_SWSCALE_ALPHA

if (c->needAlpha) {

*yuv2packed1 = yuv2rgba64le_1_c;

*yuv2packed2 = yuv2rgba64le_2_c;

*yuv2packedX = yuv2rgba64le_X_c;

} else

#endif /* CONFIG_SWSCALE_ALPHA */

{

*yuv2packed1 = yuv2rgbx64le_1_c;

*yuv2packed2 = yuv2rgbx64le_2_c;

*yuv2packedX = yuv2rgbx64le_X_c;

}

break;

case AV_PIX_FMT_RGBA64BE:

#if CONFIG_SWSCALE_ALPHA

if (c->needAlpha) {

*yuv2packed1 = yuv2rgba64be_1_c;

*yuv2packed2 = yuv2rgba64be_2_c;

*yuv2packedX = yuv2rgba64be_X_c;

} else

#endif /* CONFIG_SWSCALE_ALPHA */

{

*yuv2packed1 = yuv2rgbx64be_1_c;

*yuv2packed2 = yuv2rgbx64be_2_c;

*yuv2packedX = yuv2rgbx64be_X_c;

}

break;

case AV_PIX_FMT_BGRA64LE:

#if CONFIG_SWSCALE_ALPHA

if (c->needAlpha) {

*yuv2packed1 = yuv2bgra64le_1_c;

*yuv2packed2 = yuv2bgra64le_2_c;

*yuv2packedX = yuv2bgra64le_X_c;

} else

#endif /* CONFIG_SWSCALE_ALPHA */

{

*yuv2packed1 = yuv2bgrx64le_1_c;

*yuv2packed2 = yuv2bgrx64le_2_c;

*yuv2packedX = yuv2bgrx64le_X_c;

}

break;

case AV_PIX_FMT_BGRA64BE:

#if CONFIG_SWSCALE_ALPHA

if (c->needAlpha) {

*yuv2packed1 = yuv2bgra64be_1_c;

*yuv2packed2 = yuv2bgra64be_2_c;

*yuv2packedX = yuv2bgra64be_X_c;

} else

#endif /* CONFIG_SWSCALE_ALPHA */

{

*yuv2packed1 = yuv2bgrx64be_1_c;

*yuv2packed2 = yuv2bgrx64be_2_c;

*yuv2packedX = yuv2bgrx64be_X_c;

}

break;

case AV_PIX_FMT_RGB48LE:

*yuv2packed1 = yuv2rgb48le_1_c;

*yuv2packed2 = yuv2rgb48le_2_c;

*yuv2packedX = yuv2rgb48le_X_c;

break;

case AV_PIX_FMT_RGB48BE:

*yuv2packed1 = yuv2rgb48be_1_c;

*yuv2packed2 = yuv2rgb48be_2_c;

*yuv2packedX = yuv2rgb48be_X_c;

break;