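Prerequisites (Filebeat and Elasticsearch are already installed)

For details, see the official documentation, or

Deploying Filebeat from the RPM package on CentOS 7 (CSDN blog)

Filebeat configuration files

Standard input to console output

(1) Write a test configuration file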

mkdir /etc/filebeat/config

[root@elk101 /tmp]$vim /etc/filebeat/config/01-stdin-to-console.yml

# Input section
filebeat.inputs:
# Input type "stdin": read from standard input
- type: stdin

# Output section
output.console:
  # Pretty-print the events
  pretty: true

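This sets the input type to standard input (text typed on the command line) and the output type to console. Once started, Filebeat keeps the terminal session open. You type input and see the resulting events in the same terminal.

Run a Filebeat instance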

[root@elk101 filebeat]# filebeat -e -c /etc/filebeat/config/01-stdin-to-console.yml

2024-03-07T14:59:26.140+0800 INFO instance/beat.go:698 Home path: [/usr/share/filebeat] Config path: [/etc/filebeat] Data path: [/var/lib/filebeat] Logs path: [/var/log/filebeat] Hostfs Path: [/]

2024-03-07T14:59:26.141+0800 INFO instance/beat.go:706 Beat ID: a05f015d-df07-44f7-b96a-32322b036928

2024-03-07T14:59:26.143+0800 INFO [seccomp] seccomp/seccomp.go:124 Syscall filter successfully installed

2024-03-07T14:59:26.143+0800 INFO [beat] instance/beat.go:1052 Beat info {"system_info": {"beat": {"path": {"config": "/etc/filebeat", "data": "/var/lib/filebeat", "home": "/usr/share/filebeat", "logs": "/var/log/filebeat"}, "type": "filebeat", "uuid": "a05f015d-df07-44f7-b96a-32322b036928"}}}

2024-03-07T14:59:26.143+0800 INFO [beat] instance/beat.go:1061 Build info {"system_info": {"build": {"commit": "e1892997ca7ff26dbb5e9060f76eb6aed4531d7d", "libbeat": "7.17.18", "time": "2024-02-01T22:59:34.000Z", "version": "7.17.18"}}}

2024-03-07T14:59:26.143+0800 INFO [beat] instance/beat.go:1064 Go runtime info {"system_info": {"go": {"os":"linux","arch":"amd64","max_procs":2,"version":"go1.20.12"}}}

2024-03-07T14:59:26.144+0800 INFO [beat] instance/beat.go:1070 Host info {"system_info": {"host": {"architecture":"x86_64","boot_time":"2024-03-06T19:11:41+08:00","containerized":false,"name":"elk101","ip":["127.0.0.1","::1","192.168.75.101","fe80::20c:29ff:fef2:edef","172.17.0.1"],"kernel_version":"3.10.0-1160.71.1.el7.x86_64","mac":["00:0c:29:f2:ed:ef","02:42:59:a8:96:7a"],"os":{"type":"linux","family":"redhat","platform":"centos","name":"CentOS Linux","version":"7 (Core)","major":7,"minor":9,"patch":2009,"codename":"Core"},"timezone":"CST","timezone_offset_sec":28800,"id":"c97822d4b11d4ddd822e8f52aec0d1b9"}}}

2024-03-07T14:59:26.144+0800 INFO [beat] instance/beat.go:1099 Process info {"system_info": {"process": {"capabilities": {"inheritable":null,"permitted":["chown","dac_override","dac_read_search","fowner","fsetid","kill","setgid","setuid","setpcap","linux_immutable","net_bind_service","net_broadcast","net_admin","net_raw","ipc_lock","ipc_owner","sys_module","sys_rawio","sys_chroot","sys_ptrace","sys_pacct","sys_admin","sys_boot","sys_nice","sys_resource","sys_time","sys_tty_config","mknod","lease","audit_write","audit_control","setfcap","mac_override","mac_admin","syslog","wake_alarm","block_suspend"],"effective":["chown","dac_override","dac_read_search","fowner","fsetid","kill","setgid","setuid","setpcap","linux_immutable","net_bind_service","net_broadcast","net_admin","net_raw","ipc_lock","ipc_owner","sys_module","sys_rawio","sys_chroot","sys_ptrace","sys_pacct","sys_admin","sys_boot","sys_nice","sys_resource","sys_time","sys_tty_config","mknod","lease","audit_write","audit_control","setfcap","mac_override","mac_admin","syslog","wake_alarm","block_suspend"],"bounding":["chown","dac_override","dac_read_search","fowner","fsetid","kill","setgid","setuid","setpcap","linux_immutable","net_bind_service","net_broadcast","net_admin","net_raw","ipc_lock","ipc_owner","sys_module","sys_rawio","sys_chroot","sys_ptrace","sys_pacct","sys_admin","sys_boot","sys_nice","sys_resource","sys_time","sys_tty_config","mknod","lease","audit_write","audit_control","setfcap","mac_override","mac_admin","syslog","wake_alarm","block_suspend"],"ambient":null}, "cwd": "/var/lib/filebeat/registry/filebeat", "exe": "/usr/share/filebeat/bin/filebeat", "name": "filebeat", "pid": 6837, "ppid": 1873, "seccomp": {"mode":"filter","no_new_privs":true}, "start_time": "2024-03-07T14:59:25.380+0800"}}}

2024-03-07T14:59:26.144+0800 INFO instance/beat.go:292 Setup Beat: filebeat; Version: 7.17.18

2024-03-07T14:59:26.144+0800 INFO [publisher] pipeline/module.go:113 Beat name: elk101

2024-03-07T14:59:26.145+0800 WARN beater/filebeat.go:202 Filebeat is unable to load the ingest pipelines for the configured modules because the Elasticsearch output is not configured/enabled. If you have already loaded the ingest pipelines or are using Logstash pipelines, you can ignore this warning.

2024-03-07T14:59:26.145+0800 INFO instance/beat.go:457 filebeat start running.

2024-03-07T14:59:26.145+0800 INFO [monitoring] log/log.go:142 Starting metrics logging every 30s

2024-03-07T14:59:26.145+0800 INFO memlog/store.go:119 Loading data file of '/var/lib/filebeat/registry/filebeat' succeeded. Active transaction id=0

2024-03-07T14:59:26.146+0800 INFO memlog/store.go:124 Finished loading transaction log file for '/var/lib/filebeat/registry/filebeat'. Active transaction id=6

2024-03-07T14:59:26.147+0800 WARN beater/filebeat.go:411 Filebeat is unable to load the ingest pipelines for the configured modules because the Elasticsearch output is not configured/enabled. If you have already loaded the ingest pipelines or are using Logstash pipelines, you can ignore this warning.

2024-03-07T14:59:26.148+0800 INFO [registrar] registrar/registrar.go:109 States Loaded from registrar: 1

2024-03-07T14:59:26.148+0800 INFO [crawler] beater/crawler.go:71 Loading Inputs: 1

2024-03-07T14:59:26.148+0800 INFO [crawler] beater/crawler.go:117 starting input, keys present on the config: [filebeat.inputs.0.type]

2024-03-07T14:59:26.148+0800 INFO [crawler] beater/crawler.go:148 Starting input (ID: 16876905907669988323)

2024-03-07T14:59:26.148+0800 INFO [crawler] beater/crawler.go:106 Loading and starting Inputs completed. Enabled inputs: 1

2024-03-07T14:59:26.149+0800 INFO [stdin.harvester] log/harvester.go:310 Harvester started for paths: [] {"harvester_id": "f1bd16ab-7561-4f01-92a6-01ba815c829e"}

If the output contains no error messages, Filebeat has started successfully. Type something on the command line to see the result.

# The first line below is the typed input. Everything after it is the event printed by the console output, including the fields Filebeat adds.

yaochizaocan
{
  "@timestamp": "2024-03-07T07:00:32.840Z",
  "@metadata": {
    "beat": "filebeat",
    "type": "_doc",
    "version": "7.17.18"
  },
  "host": {
    "name": "elk101"
  },
  "agent": {
    "id": "a05f015d-df07-44f7-b96a-32322b036928",
    "name": "elk101",
    "type": "filebeat",
    "version": "7.17.18",
    "hostname": "elk101",
    "ephemeral_id": "0d01b347-318b-49a2-84d6-f260ca4ad419"
  },
  "log": {
    "file": {
      "path": ""
    },
    "offset": 0
  },
  "message": "yaochizaocan",   # the text you typed, printed to the terminal as JSON
  "input": {
    "type": "stdin"
  },
  "ecs": {
    "version": "1.12.0"
  }
}

Log input to console output

Configuration file

[root@elk101 /etc/filebeat/config]$cat 02-log-to-console.yml

# Input section
filebeat.inputs:
# Input type "log": read lines from files
- type: log
  paths:
    - /tmp/test.log
    - /tmp/*.txt

# Output section
output.console:
  # Pretty-print the events
  pretty: true
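The paths entries are glob patterns. To preview which files a list of patterns would pick up, the Python sketch below expands them with the standard glob module. It only approximates Filebeat's matching. Filebeat is written in Go, and its glob rules differ in small details: for example, Python's * skips names that start with a dot. The function name matched_files is hypothetical.

```python
import glob
import os
import tempfile


def matched_files(patterns):
    """Expand glob patterns and return the sorted set of regular files they match."""
    files = set()
    for pattern in patterns:
        for path in glob.glob(pattern):
            if os.path.isfile(path):  # skip directories that happen to match
                files.add(path)
    return sorted(files)


if __name__ == "__main__":
    # Use a throwaway directory instead of the real /tmp.
    root = tempfile.mkdtemp()
    for name in ("test.log", "a.txt", "b.txt", "other.log"):
        open(os.path.join(root, name), "w").close()
    patterns = [os.path.join(root, "test.log"), os.path.join(root, "*.txt")]
    for path in matched_files(patterns):
        print(os.path.basename(path))  # a.txt, b.txt, test.log (one per line)
```

other.log is not listed, because neither pattern covers it.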

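# Note: filebeat.inputs is a list, so several inputs (each with its own type) can be configured. The paths field can also list several files, and it supports glob wildcards.

Start Filebeat with the log-input configuration file.

[root@elk101 filebeat]# filebeat -e -c /etc/filebeat/config/02-log-to-console.yml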

2024-03-07T15:03:07.467+0800 INFO instance/beat.go:698 Home path: [/usr/share/filebeat] Config path: [/etc/filebeat] Data path: [/var/lib/filebeat] Logs path: [/var/log/filebeat] Hostfs Path: [/]

2024-03-07T15:03:07.467+0800 INFO instance/beat.go:706 Beat ID: a05f015d-df07-44f7-b96a-32322b036928

2024-03-07T15:03:07.467+0800 INFO [seccomp] seccomp/seccomp.go:124 Syscall filter successfully installed

2024-03-07T15:03:07.467+0800 INFO [beat] instance/beat.go:1052 Beat info {"system_info": {"beat": {"path": {"config": "/etc/filebeat", "data": "/var/lib/filebeat", "home": "/usr/share/filebeat", "logs": "/var/log/filebeat"}, "type": "filebeat", "uuid": "a05f015d-df07-44f7-b96a-32322b036928"}}}

2024-03-07T15:03:07.467+0800 INFO [beat] instance/beat.go:1061 Build info {"system_info": {"build": {"commit": "e1892997ca7ff26dbb5e9060f76eb6aed4531d7d", "libbeat": "7.17.18", "time": "2024-02-01T22:59:34.000Z", "version": "7.17.18"}}}

2024-03-07T15:03:07.467+0800 INFO [beat] instance/beat.go:1064 Go runtime info {"system_info": {"go": {"os":"linux","arch":"amd64","max_procs":2,"version":"go1.20.12"}}}

2024-03-07T15:03:07.468+0800 INFO [beat] instance/beat.go:1070 Host info {"system_info": {"host": {"architecture":"x86_64","boot_time":"2024-03-06T19:11:41+08:00","containerized":false,"name":"elk101","ip":["127.0.0.1","::1","192.168.75.101","fe80::20c:29ff:fef2:edef","172.17.0.1"],"kernel_version":"3.10.0-1160.71.1.el7.x86_64","mac":["00:0c:29:f2:ed:ef","02:42:59:a8:96:7a"],"os":{"type":"linux","family":"redhat","platform":"centos","name":"CentOS Linux","version":"7 (Core)","major":7,"minor":9,"patch":2009,"codename":"Core"},"timezone":"CST","timezone_offset_sec":28800,"id":"c97822d4b11d4ddd822e8f52aec0d1b9"}}}

2024-03-07T15:03:07.468+0800 INFO [beat] instance/beat.go:1099 Process info {"system_info": {"process": {"capabilities": {"inheritable":null,"permitted":["chown","dac_override","dac_read_search","fowner","fsetid","kill","setgid","setuid","setpcap","linux_immutable","net_bind_service","net_broadcast","net_admin","net_raw","ipc_lock","ipc_owner","sys_module","sys_rawio","sys_chroot","sys_ptrace","sys_pacct","sys_admin","sys_boot","sys_nice","sys_resource","sys_time","sys_tty_config","mknod","lease","audit_write","audit_control","setfcap","mac_override","mac_admin","syslog","wake_alarm","block_suspend"],"effective":["chown","dac_override","dac_read_search","fowner","fsetid","kill","setgid","setuid","setpcap","linux_immutable","net_bind_service","net_broadcast","net_admin","net_raw","ipc_lock","ipc_owner","sys_module","sys_rawio","sys_chroot","sys_ptrace","sys_pacct","sys_admin","sys_boot","sys_nice","sys_resource","sys_time","sys_tty_config","mknod","lease","audit_write","audit_control","setfcap","mac_override","mac_admin","syslog","wake_alarm","block_suspend"],"bounding":["chown","dac_override","dac_read_search","fowner","fsetid","kill","setgid","setuid","setpcap","linux_immutable","net_bind_service","net_broadcast","net_admin","net_raw","ipc_lock","ipc_owner","sys_module","sys_rawio","sys_chroot","sys_ptrace","sys_pacct","sys_admin","sys_boot","sys_nice","sys_resource","sys_time","sys_tty_config","mknod","lease","audit_write","audit_control","setfcap","mac_override","mac_admin","syslog","wake_alarm","block_suspend"],"ambient":null}, "cwd": "/var/lib/filebeat/registry/filebeat", "exe": "/usr/share/filebeat/bin/filebeat", "name": "filebeat", "pid": 6871, "ppid": 1873, "seccomp": {"mode":"filter","no_new_privs":true}, "start_time": "2024-03-07T15:03:06.700+0800"}}}

2024-03-07T15:03:07.468+0800 INFO instance/beat.go:292 Setup Beat: filebeat; Version: 7.17.18

2024-03-07T15:03:07.469+0800 INFO [publisher] pipeline/module.go:113 Beat name: elk101

2024-03-07T15:03:07.469+0800 WARN beater/filebeat.go:202 Filebeat is unable to load the ingest pipelines for the configured modules because the Elasticsearch output is not configured/enabled. If you have already loaded the ingest pipelines or are using Logstash pipelines, you can ignore this warning.

2024-03-07T15:03:07.470+0800 INFO [monitoring] log/log.go:142 Starting metrics logging every 30s

2024-03-07T15:03:07.470+0800 INFO instance/beat.go:457 filebeat start running.

2024-03-07T15:03:07.470+0800 INFO memlog/store.go:119 Loading data file of '/var/lib/filebeat/registry/filebeat' succeeded. Active transaction id=0

2024-03-07T15:03:07.472+0800 INFO memlog/store.go:124 Finished loading transaction log file for '/var/lib/filebeat/registry/filebeat'. Active transaction id=12

2024-03-07T15:03:07.472+0800 WARN beater/filebeat.go:411 Filebeat is unable to load the ingest pipelines for the configured modules because the Elasticsearch output is not configured/enabled. If you have already loaded the ingest pipelines or are using Logstash pipelines, you can ignore this warning.

2024-03-07T15:03:07.473+0800 INFO [registrar] registrar/registrar.go:109 States Loaded from registrar: 2

2024-03-07T15:03:07.473+0800 INFO [crawler] beater/crawler.go:71 Loading Inputs: 1

2024-03-07T15:03:07.473+0800 INFO [crawler] beater/crawler.go:117 starting input, keys present on the config: [filebeat.inputs.0.paths.0 filebeat.inputs.0.paths.1 filebeat.inputs.0.type]

2024-03-07T15:03:07.473+0800 WARN [cfgwarn] log/input.go:89 DEPRECATED: Log input. Use Filestream input instead.

2024-03-07T15:03:07.474+0800 INFO [input] log/input.go:171 Configured paths: [/tmp/test.log /tmp/*.txt] {"input_id": "bbb2e99c-8df2-4541-abc3-86baf6f9363c"}

2024-03-07T15:03:07.474+0800 INFO [crawler] beater/crawler.go:148 Starting input (ID: 12953166147809564661)

2024-03-07T15:03:07.474+0800 INFO [crawler] beater/crawler.go:106 Loading and starting Inputs completed. Enabled inputs: 1

When new lines are appended to a watched file, they are printed to the terminal:

{
  "@timestamp": "2024-03-07T07:09:44.158Z",
  "@metadata": {
    "beat": "filebeat",
    "type": "_doc",
    "version": "7.17.18"
  },
  "host": {
    "name": "elk101"
  },
  "agent": {
    "version": "7.17.18",
    "hostname": "elk101",
    "ephemeral_id": "883c54d9-0172-4cc9-898d-af4f96fc6de2",
    "id": "a05f015d-df07-44f7-b96a-32322b036928",
    "name": "elk101",
    "type": "filebeat"
  },
  "log": {
    "file": {
      "path": "/tmp/test.log"
    },
    "offset": 0
  },
  "message": "11111",
  "input": {
    "type": "log"
  },
  "ecs": {
    "version": "1.12.0"
  }
}

Common input options

The configuration below contains several "- type" entries. Each defines a separate input and labels its events with its own tags and fields.

# Edit the configuration file

[root@elk101 /tmp]$vim /etc/filebeat/config/03-log-corrency-to-console.yml

filebeat.inputs:
- type: log
  # Whether this input is enabled (default: true)
  enabled: true
  # Paths to read
  paths:
    - /tmp/test.log
    - /tmp/*.txt
  # Tags attached to every event from this input
  tags: ["快要被卷死","容器运维","DBA运维","SRE运维⼯程师"]
  # Custom fields
  fields:
    school: "为了部落"
    class: "有吼吼吼"
- type: log
  enabled: true
  paths:
    - /tmp/test/*/*.log
  tags: ["你是来拉屎的吗?","云原⽣开发"]
  fields:
    name: "光头大魔王"
    hobby: "大佬牛逼"
  # Put the custom key-value pairs at the top level of the event.
  # The default is false, which nests them under a field named "fields".
  fields_under_root: true

output.console:
  pretty: true

Output

The output includes the source file, the offset, and the configured tags and custom fields.

2024-03-07T16:00:33.165+0800 INFO [input.harvester] log/harvester.go:310 Harvester started for paths: [/tmp/test.log /tmp/*.txt] {"input_id": "c1c96a1c-45a3-4094-9457-95254681ab8b", "source": "/tmp/test.log", "state_id": "native::68646985-64768", "finished": false, "os_id": "68646985-64768", "old_source": "/tmp/test.log", "old_finished": true, "old_os_id": "68646985-64768", "harvester_id": "2ee3562f-233f-48fb-b792-59bc20c0069c"}

{
  "@timestamp": "2024-03-07T08:00:33.165Z",
  "@metadata": {
    "beat": "filebeat",
    "type": "_doc",
    "version": "7.17.18"
  },
  "message": "为了部落",
  "tags": [
    "快要被卷死",
    "容器运维",
    "DBA运维",
    "SRE运维⼯程师"
  ],
  "input": {
    "type": "log"
  },
  "fields": {
    "class": "有吼吼吼",
    "school": "为了部落"
  },
  "ecs": {
    "version": "1.12.0"
  },
  "host": {
    "name": "elk101"
  },
  "agent": {
    "hostname": "elk101",
    "ephemeral_id": "59ee22ec-4c0c-44ca-be7b-8e0830f0277c",
    "id": "a05f015d-df07-44f7-b96a-32322b036928",
    "name": "elk101",
    "type": "filebeat",
    "version": "7.17.18"
  },
  "log": {
    "file": {
      "path": "/tmp/test.log"
    },
    "offset": 11
  }
}
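In the output above, the first input's custom fields sit under "fields" because only the second input sets fields_under_root: true. The Python sketch below applies that placement rule to a plain dict. It mirrors the documented behaviour: custom fields are nested under fields by default, and with fields_under_root: true they are copied to the top level, overriding any clashing keys. It is an illustration, not Filebeat code, and apply_input_options is a hypothetical name.

```python
import copy
import json


def apply_input_options(event, tags=None, fields=None, fields_under_root=False):
    """Return a copy of event with per-input tags and custom fields added."""
    out = copy.deepcopy(event)
    if tags:
        out.setdefault("tags", []).extend(tags)
    if fields:
        if fields_under_root:
            out.update(fields)  # top-level keys; clashing keys are overwritten
        else:
            out.setdefault("fields", {}).update(fields)  # nested under "fields"
    return out


if __name__ == "__main__":
    base = {"message": "为了部落", "log": {"offset": 11}}
    first = apply_input_options(base, tags=["DBA运维"],
                                fields={"school": "为了部落", "class": "有吼吼吼"})
    second = apply_input_options(base, tags=["云原⽣开发"],
                                 fields={"name": "光头大魔王"}, fields_under_root=True)
    print(json.dumps(first, ensure_ascii=False, indent=2))
    print(json.dumps(second, ensure_ascii=False, indent=2))
```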

Log filtering

Configuration file

[root@elk101 /etc/filebeat/config]$vim 04-log-include-to-console.yml

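filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /tmp/*.log
  # Note: both the allow list and the deny list take regular expressions.
  # Using both at once is not recommended in production.
  # Allow list: only lines matching one of these patterns are collected (case-sensitive!)
  include_lines: ['^ERR', '^WARN','warnning']
  # Deny list: lines matching any of these patterns are dropped
  exclude_lines: ['^DBG',"linux","info"]

output.console:
  pretty: true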
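The allow and deny lists are regular expressions applied to each line. When both are set, Filebeat applies include_lines first and exclude_lines second. Below is a minimal Python sketch of that order, using the patterns from the configuration above. Python's re module stands in for Filebeat's Go regular expressions (they agree on simple patterns like these), and filter_lines is a hypothetical name.

```python
import re


def filter_lines(lines, include_lines=None, exclude_lines=None):
    """Keep lines matching any include pattern, then drop lines matching any exclude pattern."""
    include = [re.compile(p) for p in (include_lines or [])]
    exclude = [re.compile(p) for p in (exclude_lines or [])]
    kept = []
    for line in lines:
        # With no include list, every line passes the first stage.
        if include and not any(r.search(line) for r in include):
            continue
        if any(r.search(line) for r in exclude):
            continue
        kept.append(line)
    return kept


if __name__ == "__main__":
    sample = [
        "ERR disk full",
        "WARN cpu high",
        "WARN low memory on linux",  # included, then excluded by "linux"
        "err lowercase",             # patterns are case-sensitive
        "DBG trace",
        "INFO ok",
    ]
    print(filter_lines(sample,
                       include_lines=["^ERR", "^WARN", "warnning"],
                       exclude_lines=["^DBG", "linux", "info"]))
    # ['ERR disk full', 'WARN cpu high']
```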

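# Note: the input above sets two options. include_lines selects which lines are collected, and exclude_lines selects which lines are dropped. Both act on lines, not on files. When both are set, include_lines is applied first, then exclude_lines.

Writing data to Elasticsearch

Configuration file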

[root@elk101 /etc/filebeat/config]$vim 05-log-to-elasticsearch.yaml

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /tmp/test.log
  tags: ["一","二","DBA运维","SRE运维⼯程师"]
  fields:
    school: "牛逼大学"
    class: "三年二班"
- type: log
  enabled: true
  paths:
    - /tmp/*.log
  tags: ["大佬牛逼","云"]
  fields:
    name: "光头大魔王"
    hobby: "吃喝"
  fields_under_root: true

output.elasticsearch:
  hosts: ["http://192.168.75.101:9200","http://192.168.75.102:9200","http://192.168.75.103:9200"]

Write some log lines

[root@elk101 /tmp]$pwd

/tmp

[root@elk101 /tmp]$for i in {1..9} ;do echo "$i"为了部落 >> test.log ;done

[root@elk101 /tmp]$cat test.log

11111

2222

为了部落

33333

1为了部落

2为了部落

3为了部落

4为了部落

5为了部落

6为了部落

7为了部落

8为了部落

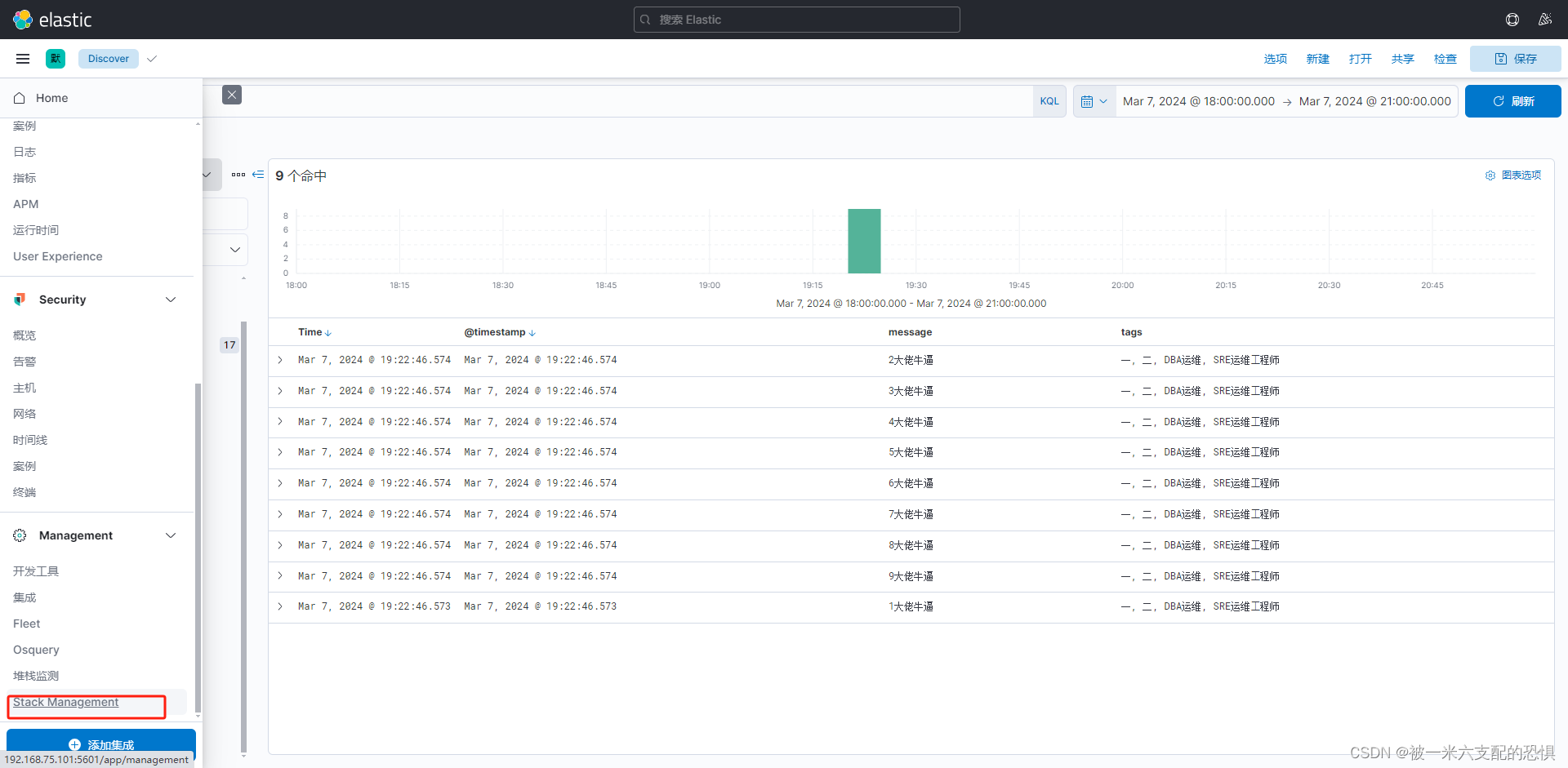

9为了部落使用kibana查看输入信息(这里有重复的日志,因为是采集了两次.)
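Why the duplicates appear: /tmp/test.log is named explicitly by the first input and also matches the second input's /tmp/*.log. As far as I understand Filebeat's behaviour, each input then harvests the file separately, and the Filebeat docs advise against listing the same file in more than one input. The Python check below reports which inputs match a given path. It uses pathlib's component-wise matching as an approximation of Filebeat's glob rules. inputs_matching and the input labels are hypothetical.

```python
from pathlib import PurePosixPath

# paths of the two inputs in 05-log-to-elasticsearch.yaml
INPUTS = {
    "input 1": ["/tmp/test.log"],
    "input 2": ["/tmp/*.log"],
}


def inputs_matching(path, inputs=INPUTS):
    """Names of the inputs whose path patterns match the given file."""
    p = PurePosixPath(path)
    return [name for name, patterns in inputs.items()
            if any(p.match(pattern) for pattern in patterns)]


if __name__ == "__main__":
    print(inputs_matching("/tmp/test.log"))  # ['input 1', 'input 2']
    print(inputs_matching("/tmp/app.log"))   # ['input 2']
```

Any file reported by more than one input is a candidate for duplicate events.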

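Viewing indices and creating an index pattern:

Open Stack Management.

Click Index Management. It lists the current indices (some created manually, some created automatically), together with their details and status.

Create an index pattern. It is used later to browse the data in the matching indices.

Enter the index pattern name (append * so that it matches the corresponding indices) and select the timestamp field.

The index pattern is created.

View the data in the index

Select the index pattern you want to view

Select the fields you want to display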


Custom index name

Configuration file

[root@elk101 /etc/filebeat/config]$vim 06-log-to-elasticsearch-index.yml

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /tmp/test.log
  tags: ["一","二","DBA运维","SRE运维⼯程师"]
  fields:
    school: "牛逼大学"
    class: "三年二班"
- type: log
  enabled: true
  paths:
    - /tmp/test/*/*.log
  tags: ["大佬牛逼","云"]
  fields:
    name: "光头大魔王"
    hobby: "吃喝"
  fields_under_root: true

output.elasticsearch:
  enabled: true
  hosts: ["http://192.168.75.101:9200","http://192.168.75.102:9200","http://192.168.75.103:9200"]
  index: "dalaoniubi-linux-elk-%{+yyyy.MM.dd}"

# Disable index lifecycle management (ILM)
setup.ilm.enabled: false
# Name of the index template
setup.template.name: "dalaoniubi-linux"
# Index pattern the template applies to
setup.template.pattern: "dalaoniubi-linux*"
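The %{+yyyy.MM.dd} suffix is filled in from each event's @timestamp. As far as I know, Beats keep that timestamp in UTC; the console output earlier shows it with a trailing Z. On a UTC+8 host like this one (the Filebeat logs show +0800), a new daily index would therefore start at 08:00 local time, not at midnight. Below is a minimal Python sketch of that date arithmetic. index_name is a hypothetical helper, not Filebeat code.

```python
from datetime import datetime, timedelta, timezone

CST = timezone(timedelta(hours=8))  # the +0800 offset seen in the Filebeat logs


def index_name(prefix, ts):
    """Render '<prefix>-yyyy.MM.dd' from an aware timestamp, using its UTC date."""
    return f"{prefix}-{ts.astimezone(timezone.utc):%Y.%m.%d}"


if __name__ == "__main__":
    for local in (datetime(2024, 3, 7, 15, 9, tzinfo=CST),
                  datetime(2024, 3, 8, 7, 59, tzinfo=CST),
                  datetime(2024, 3, 8, 8, 0, tzinfo=CST)):
        print(local.isoformat(), "->", index_name("dalaoniubi-linux-elk", local))
```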

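Check the result

Setting the number of shards

# Add tags, use the log input type, route events to several indices, and set the shard and replica counts.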

[root@elk101 /tmp]$cat /etc/filebeat/config/08-log-more-repl.yml

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /tmp/test.log
  tags: ["yieryi","二","DBA运维","SRE运维工程师"]
  fields:
    school: "牛逼大学"
    class: "三年二班"
- type: log
  enabled: true
  paths:
    - /tmp/test/*/*.log
  tags: ["liubai","云"]
  fields:
    name: "光头大魔王"
    hobby: "吃喝"
  fields_under_root: true

output.elasticsearch:
  enabled: true
  hosts: ["http://192.168.75.101:9200","http://192.168.75.102:9200","http://192.168.75.103:9200"]
  indices:
    - index: "guangtou-linux-elk-%{+yyyy.MM.dd}"
      when.contains:
        tags: "yieryi"
    - index: "yiyimiliu-linux-elk-%{+yyyy.MM.dd}"
      when.contains:
        tags: "liubai"

# Disable index lifecycle management (ILM)
setup.ilm.enabled: false
# Name of the index template
setup.template.name: "guangtou-linux"
# Index pattern the template applies to
setup.template.pattern: "guangtou-linux*"
# Whether to overwrite an existing index template
setup.template.overwrite: false
# Index template settings
setup.template.settings:
  # Number of primary shards
  index.number_of_shards: 3
  # Number of replicas; must be less than the number of nodes in the cluster
  index.number_of_replicas: 2
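Why the replica count must stay below the node count: Elasticsearch does not place two copies of the same shard on one node. A 3-node cluster can therefore host each primary plus at most 2 replicas. Any extra replicas stay unassigned, and the index turns yellow. The back-of-the-envelope Python sketch below applies that single rule and ignores disk watermarks, allocation filtering and other allocation deciders. allocation_summary is a hypothetical name.

```python
def allocation_summary(nodes, shards, replicas):
    """Return (total shard copies, unassigned copies, expected health colour)."""
    if nodes < 1 or shards < 1 or replicas < 0:
        raise ValueError("invalid node, shard or replica count")
    # Each shard can have at most one copy per node: 1 primary + (nodes - 1) replicas.
    assignable_replicas = min(replicas, nodes - 1)
    total = shards * (1 + replicas)
    unassigned = shards * (replicas - assignable_replicas)
    return total, unassigned, "green" if unassigned == 0 else "yellow"


if __name__ == "__main__":
    print(allocation_summary(nodes=3, shards=3, replicas=2))  # (9, 0, 'green')
    print(allocation_summary(nodes=3, shards=3, replicas=3))  # (12, 3, 'yellow')
```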

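A note on tags: when logs come from several sources, you can configure several indices and tie each one to a tag, so that every event goes to the index matching its tag. The configuration above defines two indices, each linked to its own tag. If the watched paths contain no data, no events are sent and the index is not created. Also note that setup.template.pattern is "guangtou-linux*". Only the guangtou-linux-elk-* indices match that template and get its shard and replica settings.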
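As I understand the Elasticsearch output docs, the indices list is evaluated for each event, and the first rule whose condition matches decides the index. An event that matches no rule falls back to the index setting. For when.contains on an array field such as tags, the condition holds if an element contains the given string. Below is a rough Python model of that selection, using the two rules from 08-log-more-repl.yml. pick_index and the "fallback" name are hypothetical, and only the contains condition is modelled.

```python
RULES = [
    # (index, field, substring) taken from 08-log-more-repl.yml
    ("guangtou-linux-elk-%{+yyyy.MM.dd}", "tags", "yieryi"),
    ("yiyimiliu-linux-elk-%{+yyyy.MM.dd}", "tags", "liubai"),
]


def pick_index(event, rules=RULES, default=None):
    """Return the index of the first rule whose 'contains' condition matches."""
    for index, field, needle in rules:
        value = event.get(field)
        values = value if isinstance(value, list) else [value]
        if any(isinstance(v, str) and needle in v for v in values):
            return index
    return default


if __name__ == "__main__":
    print(pick_index({"tags": ["yieryi", "二", "DBA运维"]}))
    print(pick_index({"tags": ["liubai", "云"]}))
    print(pick_index({"tags": ["other"]}, default="fallback"))
```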

Collecting nginx logs

The configuration file:

[root@elk101 /etc/filebeat/config]$cat 09-log-nginx.yml

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log*
  tags: ["access"]
  fields_under_root: true

output.elasticsearch:
  enabled: true
  hosts: ["http://192.168.75.101:9200","http://192.168.75.102:9200","http://192.168.75.103:9200"]
  index: "nginx-linux-elk-%{+yyyy.MM.dd}"

# Disable index lifecycle management (ILM)
setup.ilm.enabled: false
# Name of the index template
setup.template.name: "nginx-linux"
# Index pattern the template applies to
setup.template.pattern: "nginx-linux*"
# Whether to overwrite an existing index template
setup.template.overwrite: false
# Index template settings
setup.template.settings:
  # Number of primary shards
  index.number_of_shards: 3
  # Number of replicas; must be less than the number of nodes in the cluster
  index.number_of_replicas: 2

Converting nginx access.log entries to JSON. The goal is to be able to pick individual JSON fields flexibly for analysis.

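Steps for switching to JSON:

1. Stop Filebeat.

2. Empty the existing access.log. Otherwise it would contain two formats, plain-text messages and JSON messages, and Filebeat could not parse the log consistently.

3. Delete the index created earlier (in Kibana). It is created again automatically once Filebeat starts shipping events.

4. Change the log format in nginx.conf to JSON.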

[root@elk101 /etc/filebeat/config]$cat /etc/nginx/nginx.conf

user nginx;
worker_processes auto;

error_log /var/log/nginx/error.log notice;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    # Define the nginx log format
    log_format main '{"@timestamp":"$time_iso8601",'
                    '"host":"$server_addr",'
                    '"clientip":"$remote_addr",'
                    '"SendBytes":$body_bytes_sent,'
                    '"responsetime":$request_time,'
                    '"upstreamtime":"$upstream_response_time",'
                    '"upstreamhost":"$upstream_addr",'
                    '"http_host":"$host",'
                    '"uri":"$uri",'
                    '"domain":"$host",'
                    '"xff":"$http_x_forwarded_for",'
                    '"referer":"$http_referer",'
                    '"tcp_xff":"$proxy_protocol_addr",'
                    '"http_user_agent":"$http_user_agent",'
                    '"status":"$status"}';

    # Log path and the log format to use
    access_log /var/log/nginx/access.log main;

    sendfile on;
    #tcp_nopush on;

    keepalive_timeout 65;

    #gzip on;

    include /etc/nginx/conf.d/*.conf;
}
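Each access.log line produced by this log_format is meant to be one JSON object. One caveat is worth checking. With nginx's default escaping, a double quote inside a value (for example in a User-Agent) is written as \x22, which is not a valid JSON escape, so that line fails to decode. nginx 1.11.8 and later accept log_format main escape=json '...'; which escapes the values for JSON instead. The Python sketch below only tests whether a line decodes. The sample lines are made up for illustration.

```python
import json


def parse_access_line(line):
    """Return the decoded JSON object, or None if the line is not valid JSON."""
    try:
        return json.loads(line)
    except json.JSONDecodeError:
        return None


if __name__ == "__main__":
    # Made-up sample lines in the shape of the log_format above.
    good = ('{"@timestamp":"2024-03-07T16:00:00+08:00","clientip":"192.168.75.1",'
            '"SendBytes":612,"responsetime":0.000,"status":"200"}')
    # Default nginx escaping turns a " inside a value into \x22.
    bad = '{"clientip":"192.168.75.1","http_user_agent":"curl \\x22x\\x22","status":"200"}'
    print(parse_access_line(good)["SendBytes"])  # 612
    print(parse_access_line(bad))                # None
```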

Edit the Filebeat configuration file

# Collection works the same way as ordinary file collection. The only addition is the json.keys_under_root parameter.

[root@elk101 /etc/filebeat/config]$cat 10-log-nginx-json.yml

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log*
  tags: ["access"]
  json.keys_under_root: true   # the extra parameter

output.elasticsearch:
  enabled: true
  hosts: ["http://192.168.75.101:9200","http://192.168.75.102:9200","http://192.168.75.103:9200"]
  index: "nginx-linux-elk-%{+yyyy.MM.dd}"

# Disable index lifecycle management (ILM)
setup.ilm.enabled: false
# Name of the index template
setup.template.name: "nginx-linux"
# Index pattern the template applies to
setup.template.pattern: "nginx-linux*"
# Whether to overwrite an existing index template
setup.template.overwrite: false
# Index template settings
setup.template.settings:
  # Number of primary shards
  index.number_of_shards: 3
  # Number of replicas; must be less than the number of nodes in the cluster
  index.number_of_replicas: 2
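What json.keys_under_root changes: by default the log input puts the decoded object under a json key, and with keys_under_root: true the keys are copied to the top level. As I understand it, keys Filebeat already sets are not overwritten unless json.overwrite_keys: true is also set. That matters here, because the nginx format emits @timestamp and host as well. Below is a simplified Python model of that placement. decode_line is hypothetical and ignores details such as timestamp parsing.

```python
import json


def decode_line(line, base_event, keys_under_root=False, overwrite_keys=False):
    """Place a decoded JSON log line into an event, roughly like the log input's json.* options."""
    decoded = json.loads(line)
    event = dict(base_event)  # fields Filebeat adds itself, e.g. @timestamp, host
    if not keys_under_root:
        event["json"] = decoded  # default: everything nested under "json"
        return event
    for key, value in decoded.items():
        # Without overwrite_keys, keys Filebeat already set are left alone.
        if overwrite_keys or key not in event:
            event[key] = value
    return event


if __name__ == "__main__":
    base = {"@timestamp": "2024-03-07T08:00:00.000Z", "host": {"name": "elk101"}}
    line = '{"@timestamp":"2024-03-07T16:00:00+08:00","host":"192.168.75.101","status":"200"}'
    print(decode_line(line, base))
    print(decode_line(line, base, keys_under_root=True))
```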

Start Filebeat.

[root@elk101 ~]$filebeat -e -c /etc/filebeat/config/10-log-nginx-json.yml

As noted above, if the collected log file has no data, the index declared in the Filebeat configuration does not show up in Kibana.

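--> Next, create an index pattern for the new index and browse the data (steps omitted).

View the data written to Elasticsearch in Kibana.

The original message field is now split into multiple key/value fields and displayed as JSON. Each field can be used as a filter, which makes later browsing and analysis easier.

Collecting nginx access logs with a module

Continuing from above:

First, locate the directory holding Filebeat's module configuration files, here /etc/filebeat/modules.d/

Then edit the configuration file Filebeat is started with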

[root@elk101 /etc/filebeat/config]$vim 11-model-nginx.yml

# Collect logs through modules
filebeat.config.modules:
  # Location of the module configuration files
  path: /etc/filebeat/modules.d/*.yml
  # Enable live reloading
  reload.enabled: true

output.elasticsearch:
  enabled: true
  hosts: ["http://192.168.75.101:9200","http://192.168.75.102:9200","http://192.168.75.103:9200"]
  index: "nginx-linux-elk-%{+yyyy.MM.dd}"

# Disable index lifecycle management (ILM)
setup.ilm.enabled: false
# Name of the index template
setup.template.name: "nginx-linux"
# Index pattern the template applies to
setup.template.pattern: "nginx-linux*"
# Whether to overwrite an existing index template
setup.template.overwrite: false
# Index template settings
setup.template.settings:
  # Number of primary shards
  index.number_of_shards: 3
  # Number of replicas; must be less than the number of nodes in the cluster
  index.number_of_replicas: 2

List the enabled modules

No modules are enabled yet. Enable the ones you need by hand, or rename the module's file so that it ends in .yml.

[root@elk101 /etc/filebeat/config]$filebeat -c /etc/filebeat/config/11-model-nginx.yml modules list

Enabled:

Disabled:

activemq

apache

....

traefik

zeek

zookeeper

zoom

zscaler

Enabling specific modules

# Enable the nginx and tomcat modules

[root@elk101 /etc/filebeat/modules.d]$filebeat modules enable nginx tomcat

Enabled nginx

Enabled tomcat

# Check the module status

[root@elk101 /etc/filebeat/modules.d]$filebeat -c /etc/filebeat/config/11-model-nginx.yml modules list

Enabled:

nginx

tomcat

Disabled:

activemq

apache

auditd

aws

awsfargate

Enabling a module, method 2: rename its file

[root@elk101 /etc/filebeat/modules.d]$ls |grep mysql

mysqlenterprise.yml.disabled

mysql.yml.disabled

[root@elk101 /etc/filebeat/modules.d]$mv mysql.yml.disabled mysql.yml

# Check the enabled modules

[root@elk101 /etc/filebeat/modules.d]$filebeat -c /etc/filebeat/config/11-model-nginx.yml modules list

Enabled:

mysql

nginx

tomcat

Disabled:

activemq

apache

auditd

aws

awsfargate

azure

barracuda
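The modules list output appears to follow the file names in modules.d. A file ending in .yml is enabled and one ending in .yml.disabled is not, which is why renaming mysql.yml.disabled to mysql.yml has the same effect as filebeat modules enable. The Python sketch below applies that naming convention to a throwaway directory. list_modules and enable_module are hypothetical helpers, not part of Filebeat.

```python
import os
import tempfile

SUFFIX_ON = ".yml"
SUFFIX_OFF = ".yml.disabled"


def list_modules(modules_dir):
    """Return (enabled, disabled) module names based on file suffixes."""
    enabled, disabled = [], []
    for name in sorted(os.listdir(modules_dir)):
        if name.endswith(SUFFIX_OFF):
            disabled.append(name[: -len(SUFFIX_OFF)])
        elif name.endswith(SUFFIX_ON):
            enabled.append(name[: -len(SUFFIX_ON)])
    return enabled, disabled


def enable_module(modules_dir, module):
    """Same effect as `mv <module>.yml.disabled <module>.yml`."""
    src = os.path.join(modules_dir, module + SUFFIX_OFF)
    os.rename(src, os.path.join(modules_dir, module + SUFFIX_ON))


if __name__ == "__main__":
    d = tempfile.mkdtemp()  # stand-in for /etc/filebeat/modules.d
    for name in ("mysql.yml.disabled", "mysqlenterprise.yml.disabled", "nginx.yml", "tomcat.yml"):
        open(os.path.join(d, name), "w").close()
    print(list_modules(d))  # (['nginx', 'tomcat'], ['mysql', 'mysqlenterprise'])
    enable_module(d, "mysql")
    print(list_modules(d))  # (['mysql', 'nginx', 'tomcat'], ['mysqlenterprise'])
```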

The mysql module is now enabled.

Edit the module configuration file. Note that var.paths takes a list of paths.

[root@elk101 /etc/filebeat/modules.d]$cat nginx.yml

# Module: nginx
# Docs: https://www.elastic.co/guide/en/beats/filebeat/7.17/filebeat-module-nginx.html

- module: nginx
  # Access logs
  access:
    enabled: true
    var.paths: ["/var/log/nginx/access.log*"]
    # Set custom paths for the log files. If left empty,
    # Filebeat will choose the paths depending on your OS.
    #var.paths:

  # Error logs
  error:
    enabled: true
    var.paths: ["/var/log/nginx/error.log"]
    # Set custom paths for the log files. If left empty,
    # Filebeat will choose the paths depending on your OS.
    #var.paths:

  # Ingress-nginx controller logs. This is disabled by default. It could be used in Kubernetes environments to parse ingress-nginx logs
  ingress_controller:
    enabled: false
    # Set custom paths for the log files. If left empty,
    # Filebeat will choose the paths depending on your OS.
    #var.paths:

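Delete the index, empty the log, start the service (same procedure as above), and verify the result.

Collecting Tomcat logs with Filebeat

(Set up Tomcat yourself.)

First, the Filebeat configuration file:

# Use the tomcat module configuration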

[root@elk101 /etc/filebeat/config]$cat 12-module-tomcat.yml

filebeat.config.modules:
  path: /etc/filebeat/modules.d/tomcat.yml
  tags: ["tomcat"]
  reload.enabled: true

output.elasticsearch:
  enabled: true
  hosts: ["http://192.168.75.101:9200","http://192.168.75.102:9200","http://192.168.75.103:9200"]
  index: "tomcat-linux-elk-%{+yyyy.MM.dd}"

# Disable index lifecycle management (ILM)
setup.ilm.enabled: false
# Name of the index template
setup.template.name: "tomcat-linux"
# Index pattern the template applies to
setup.template.pattern: "tomcat-linux*"
# Whether to overwrite an existing index template
setup.template.overwrite: false
# Index template settings
setup.template.settings:
  # Number of primary shards
  index.number_of_shards: 3
  # Number of replicas; must be less than the number of nodes in the cluster
  index.number_of_replicas: 2

Module configuration

# Set the location of the Tomcat logs.

[root@elk101 /etc/filebeat/modules.d]$cat tomcat.yml

# Module: tomcat

# Docs: https://www.elastic.co/guide/en/beats/filebeat/7.17/filebeat-module-tomcat.html

- module: tomcat

log:

enabled: true

var.input: file

var.paths:

- /zpf/tomcat/apache-tomcat-10.1.19/logs/localhost_access_log*.txt

# Set which input to use between udp (default), tcp or file.

# var.input: udp

# var.syslog_host: localhost

# var.syslog_port: 9501

# Set paths for the log files when file input is used.

# var.paths:

# - /var/log/tomcat/*.log

# Toggle output of non-ECS fields (default true).

# var.rsa_fields: true

# Set custom timezone offset.

# "local" (default) for system timezone.

# "+02:00" for GMT+02:00

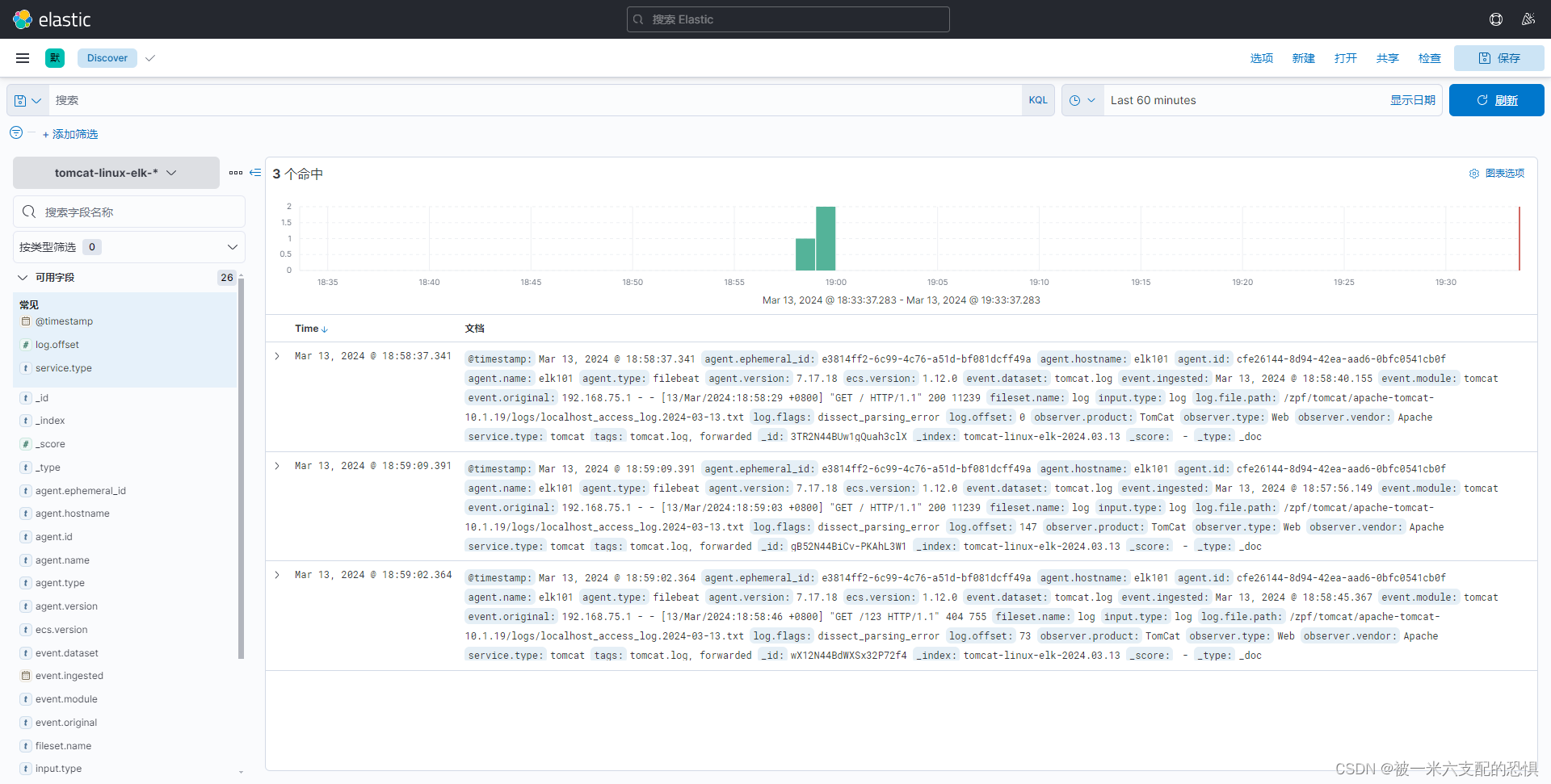

# var.tz_offset: local启动filebeat收集tomcat日志

[root@elk101 /var/log/nginx]$filebeat -e -c /etc/filebeat/config/12-module-tomcat.yml查看kibana中收集到es中的日志.

Switching the Tomcat log format to JSON

Edit the Tomcat configuration

# Back up the original configuration file first

cp {server.xml,server.xml-`date +%F`}

# Check the backup: server.xml has been copied to server.xml-2024-03-13.

[root@elk101 /zpf/tomcat/apache-tomcat-10.1.19/conf]$ls

Catalina catalina.properties jaspic-providers.xml logging.properties server.xml-2024-03-13 tomcat-users.xsd

catalina.policy context.xml jaspic-providers.xsd server.xml tomcat-users.xml web.xml

# Edit server.xml

[root@elk101 /zpf/tomcat/apache-tomcat-10.1.19/conf]$vim server.xml

# Go to the end of the file and change the Tomcat access log format.

...

<!-- Access log processes all example.

Documentation at: /docs/config/valve.html

Note: The pattern used is equivalent to using pattern="common" -->

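<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"
       prefix="localhost_access_log" suffix=".txt"
       pattern="{&quot;requestTime&quot;:&quot;%t&quot;,&quot;clientIP&quot;:&quot;%h&quot;,&quot;threadID&quot;:&quot;%I&quot;,&quot;protocol&quot;:&quot;%H&quot;,&quot;requestMethod&quot;:&quot;%r&quot;,&quot;requestStatus&quot;:&quot;%s&quot;,&quot;sendBytes&quot;:&quot;%b&quot;,&quot;queryString&quot;:&quot;%q&quot;,&quot;responseTime&quot;:&quot;%Dms&quot;,&quot;partner&quot;:&quot;%{Referer}i&quot;,&quot;agentVersion&quot;:&quot;%{User-Agent}i&quot;}" />

Edit the Filebeat configuration file.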

# Enable JSON decoding here

[root@elk101 /etc/filebeat/config]$cat 12-module-tomcat.yml

filebeat.config.modules:
  path: /etc/filebeat/modules.d/tomcat.yml
  json.keys_under_root: true

output.elasticsearch:
  enabled: true
  hosts: ["http://192.168.75.101:9200","http://192.168.75.102:9200","http://192.168.75.103:9200"]
  index: "tomcat-linux-elk-%{+yyyy.MM.dd}"

# Disable index lifecycle management (ILM)
setup.ilm.enabled: false
# Name of the index template
setup.template.name: "tomcat-linux"
# Index pattern the template applies to
setup.template.pattern: "tomcat-linux*"
# Whether to overwrite an existing index template
setup.template.overwrite: false
# Index template settings
setup.template.settings:
  # Number of primary shards
  index.number_of_shards: 3
  # Number of replicas; must be less than the number of nodes in the cluster
  index.number_of_replicas: 2
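About the server.xml access log pattern: it sits inside a double-quoted XML attribute. The JSON's own double quotes must therefore be written as &quot;, because a raw " ends the attribute early and server.xml is no longer well-formed. Below is a small Python check with xml.etree.ElementTree. The shortened Valve strings and valve_pattern are made up for illustration.

```python
import json
import xml.etree.ElementTree as ET

# Shortened Valve elements, made up for illustration.
RAW = '<Valve pattern="{"clientIP":"%h","requestStatus":"%s"}" />'
ESCAPED = ('<Valve pattern="{&quot;clientIP&quot;:&quot;%h&quot;,'
           '&quot;requestStatus&quot;:&quot;%s&quot;}" />')


def valve_pattern(xml_text):
    """Return the decoded pattern attribute, or None if the XML is not well-formed."""
    try:
        return ET.fromstring(xml_text).get("pattern")
    except ET.ParseError:
        return None


if __name__ == "__main__":
    print(valve_pattern(RAW))      # None: the raw quote ends the attribute early
    pattern = valve_pattern(ESCAPED)
    print(pattern)                 # {"clientIP":"%h","requestStatus":"%s"}
    # Filling in sample values gives a line that decodes as JSON.
    print(json.loads(pattern.replace("%h", "192.168.75.1").replace("%s", "200")))
```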

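# Empty the Tomcat log --> delete the index in Elasticsearch through Kibana --> delete the index pattern --> start Filebeat again and check the result in Kibana.

Multiline matching: collecting Elasticsearch error logs

# Here the elasticsearch module collects Elasticsearch's own logs.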

[root@elk101 /etc/filebeat/config]$cat 13-elasticsearch-module-to-elastic.yml

filebeat.config.modules:
  path: /etc/filebeat/modules.d/elasticsearch.yml
  tags: ["elasticsearch"]
  reload.enabled: true

output.elasticsearch:
  enabled: true
  hosts: ["http://192.168.75.101:9200","http://192.168.75.102:9200","http://192.168.75.103:9200"]
  index: "elasticsearch-linux-elk-%{+yyyy.MM.dd}"

# Disable index lifecycle management (ILM)
setup.ilm.enabled: false
# Name of the index template
setup.template.name: "elasticsearch-linux"
# Index pattern the template applies to
setup.template.pattern: "elasticsearch-linux*"
# Whether to overwrite an existing index template
setup.template.overwrite: false
# Index template settings
setup.template.settings:
  # Number of primary shards
  index.number_of_shards: 3
  # Number of replicas; must be less than the number of nodes in the cluster
  index.number_of_replicas: 2
